Paper - DVCon India


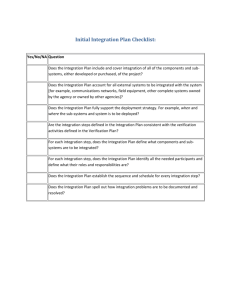

Has the performance of a sub-system been beaten to death? UVM Framework does it ALL!!

Subhash Joshi, Qualcomm India Private Limited (scjoshi@qti.qulacomm.com)
Vaddineni Manohar, Qualcomm India Private Limited (manoharv@qti.qualcomm.com)
Sangaiyah Pandithurai, Qualcomm India Private Limited (psangaiy@qti.qualcomm.com)

Abstract—This paper discusses the challenges faced, and the solutions deployed, in the RTL performance verification of complex systems. It proposes a strategic approach that delivers best-in-class, highly accurate and lightning-fast performance results on evolving RTL, and it explains the RTL performance verification challenges for such systems together with practical solutions.

The paper demonstrates a flow that covers four areas:
• strategies that use synthetic traces to find the best and worst performance limits of a design;
• analysis of traces defined by architecture teams;
• strategies to analyze and validate platform traces that exceed simulation capacity;
• validation, through offline and static methods, of wave dumps taken from different sub-system or SoC use-cases.

The flow also aims to replace ad hoc approaches with a performance-driven verification methodology and infrastructure. The implementation is efficient, highly flexible and scalable, and uses UVM as its reference methodology. The paper details an automated and highly scalable UVM regression framework, a sophisticated back-end infrastructure for extensive analysis, and an offline waveform analysis utility. It also covers static checks on platform TRACEs and the mechanisms for replaying and correlating UVM TRACE sequences. Throughout, it focuses on the verification components that make robust, record-time performance verification closure possible.
The paper details the different stages of the performance verification cycle. It also describes the requirements for performance coverage metrics, which ensure quality closure and therefore a performance-stable system. A detailed case study of a complex DDR system shows one way to break down and simplify performance analysis.

Keywords—RTL performance; verification challenges; use-case traces; regression framework

I. INTRODUCTION

Today's SoCs are massive in size and enormously complex. Their system performance is defined mainly by components such as interconnects and memory, and these components must serve different QoS requirements:
• the CPU is latency sensitive;
• GPUs generate massive workloads;
• real-time clients such as display need yet another QoS scheme.

As SoC performance requirements grow, new architectures keep evolving. The architectural phase settles the performance trade-offs and produces the system topology and performance parameters. Continuously changing topologies and aggressive project timelines therefore demand fast, high-quality, performance-based design exploration and verification.

With brand-new architectures and changing system topologies, performance validation becomes extremely cumbersome and time consuming. Smart strategies are needed during the execution phase to confirm that performance parameters are met and that every single clock edge is validated. This paper explains the strategies and methods adopted to uncover architectural bugs, using a memory sub-system as its example. A typical memory system has multiple masters accessing DDR, each with different performance and QoS requirements. Supporting the growing needs of gaming and multimedia applications requires many design optimizations, and these introduce enormous complexity.
On the other side of the interface, DDR memory has its own timing constraints. The protocol engines must organize, optimize and handle them. Two further demands add complexity to the memory system architecture:
• enhancing the protocol engine's performance (optimizing memory accesses);
• making the engine configurable and programmable across memory technologies.

II. PERFORMANCE COMPLEXITY OF THE SYSTEM

System performance is governed largely by system components such as the interconnect and memory. Continuously evolving architectures dictate changes in interconnect topologies and in memory requirements. Many interconnect and memory combinations are possible. Performance verification must therefore expose, and get fixed before RTL freeze, the performance limitations of both the integrated system and the standalone modules.

The performance requirements between all masters and clients must be analyzed and the performance metrics projected. Multi-master, multi-slave and concurrent cases must be fine-tuned so that even the worst-case numbers stay within the performance budget. Even when the budgets are met, the analysis should still reveal any enhancements that could further improve system performance. The standard benchmarks of the different masters must also be analyzed, along with user-experience cases, measuring throughput for different data patterns.

A single sub-system is evaluated against hundreds of micro-benchmarks and synthetic cases. All of this must fit into an extremely tight schedule with no room for error. Meeting that goal requires defined quality metrics for performance verification and dedicated performance verification components (PVCs), which ensure robust RTL performance verification closure.

III. PERFORMANCE VERIFICATION TECHNIQUES: A PRODUCT PERSPECTIVE

Performance verification starts with the definition of a product and proceeds through these stages:
• Sub-system performance models are developed, and performance use-case analysis is completed on virtual platforms and TLM models. These models are not cycle accurate, which limits the approach, and complex or third-party IPs are sometimes difficult to model.
• Each core runs use-cases on standalone simulation and co-simulation platforms and signs off its best and worst possible performance numbers.
• The SoC team runs multiple concurrent use-cases, followed by SoC sign-off.
• The product undergoes performance validation.

At every level from project definition to SoC sign-off, the design goes through analysis and optimization: RTL changes, software proposals, buffer tuning and many other hardware techniques. Once silicon is available, its performance metrics are correlated against the simulation numbers and signed off with acceptable deviations.

Each stage has timeline dependencies, and these increase the pressure on sub-system RTL performance verification. The dependencies may run from stage to stage, or from functional stability of the design to the kick-off of performance analysis. Regression-based performance verification at the core level reduces the engineering-cycle penalty of finding a bug at a later stage. This paper focuses on RTL performance verification at the sub-system level. It presents strategies and solutions that mitigate risk and give maximum confidence at the pre-silicon stage.

IV. PERFORMANCE VERIFICATION SCOPE

RTL performance verification of a sub-system begins once the design reaches acceptable functional quality. The goal is to verify that the sub-system's performance metrics fit its system-level socket, exposing every possible limitation and proposing enhancements.
The major areas of verification include:
• Synthetic traces: architecture exploration, and analysis and modeling of corner-case scenarios that produce performance bottlenecks.
  o Sign-off of best-case and worst-case performance numbers, based on analysis of MIN-MAX-AVG latency histograms across single-master and multi-master client interfaces.
  o Interconnect: latency, bandwidth, outstanding depth, utilization, arbitration and QoS settings for concurrent cases.
  o Memory: read/write latency, round trips, queue depth, organization and re-ordering policies.
  o Stall and starve situations across logical blocks: how they are identified, what impact they have, how quickly the system recovers from stalls, and whether any stalls are unnecessary. Examples of such causes inside the design are power recovery, clock-switching modes and housekeeping updates from memory.
  o Randomization of several data formats and identification of the throughput for each.
  o Removal of unintended bubbles on logical interfaces, and proposed enhancements to avoid them.
  o Evaluation of arbitration-scheme efficiency and buffer depths, and the highest possible memory utilization through the best possible command scheduling.
• TRACES: analysis of traces (realistic traffic) defined by architecture teams or globally distributed teams; generation of micro-traces (techniques that reduce and reform large TRACEs within an allowable deviation); analysis of regions of interest; trace replay and identification of hardware or software workarounds.
• MISC: projection of performance-effective configuration settings, and consistency of those settings across multiple sub-systems during development.

V. RTL PERFORMANCE VERIFICATION CHALLENGES

The growing complexity of today's systems has created a vast space for performance exploration. Verification must nevertheless finish under aggressive timelines and leave no hidden bugs.
Performance analysis must approach the design from several engineering angles:
• exposing enhancements and limitations;
• identifying the best-case performance settings;
• confirming best-case and worst-case limits within a practically achievable verification space and realistic scenarios.

RTL performance verification posed multiple challenges, from bring-up through to the development of closure directives.

A. RTL performance DV kick-off
Performance DV can start only once the design has reached a certain level of functional maturity. If functional and performance verification start in parallel, the verification and debug space becomes huge. Every issue seen in a performance simulation must be classified as either a functional or a performance issue, and that classification can lead to complex, time-consuming debug.

B. Beating the timeline pressure
• How do we deliver a readily available, bug-free performance verification framework that scales across multiple product derivatives at the same time?
• How do we keep that framework reusable across different architectures while micro-architecture changes continue in parallel?
• How do we keep development times low despite these changes, so that effort goes into studying and exploring the architecture?
• How do we build a performance bridge that gives quick-turnaround performance analysis across distributed teams using different platforms?
• How do we reduce debug and analysis time?

C. Every clock is important
How do we ensure that the design operates within its best possible limits in every scenario, with every logical interface perfectly tuned and no unintended idle cycles? The analysis becomes extremely cumbersome when traffic passes from data-sensitive interfaces, such as the AMBA bus protocols, to timing-sensitive interfaces, such as memory protocols. Finding connection bottlenecks on such interfaces is tedious.

D. Micro-traces
How do we expose the memory sub-system to realistic scenarios early? And how do we make it usable by most of the other sub-systems for regression-based performance verification?

E. Performance data space explosion
Even with embedded collection probes or other performance data-collection mechanisms, a huge volume of performance data remains to be analyzed. Can automated data mining distill refined data from this exploding performance data space?

F. Looking beyond the sub-system
Can we profile a master's traffic as it reaches a memory slave? Can master traffic-generation schemes be improved so that use-cases meet their performance targets? For example, when accesses arrive at the memory sub-system from different masters, can the master system be influenced to schedule transactions with memory access penalties in mind?

G. Closure directives
How do we define a methodology that guarantees complete closure and clear sign-off criteria for performance verification across all cores? How far should we go beyond the product use-case requirements, and when can performance verification be declared complete?

Despite all these challenges, RTL-based performance checking is still required for final validation. It has longer turnaround times and limits how many design explorations are practical.

VI. DV STRATEGIES FOR RTL PERFORMANCE VERIFICATION

RTL performance DV adopted several strategies to tackle performance verification early, beat the timeline pressure and provide quality assurance. This required:
• a robust verification infrastructure that scales and is modular;
• multiple performance verification components (PVCs);
• sophisticated filtering methods to root-cause problems;
• graphical displays that simplify performance analysis.

The following sections describe these DV strategies.

A. Automated UVM FRAMEWORK: “JUST BEAT IT!! UVM FRAMEWORK HELPS!!”

UVM is flexible because a UVM verification environment is built from reusable, scalable and modular components, and because all major EDA vendors support it. The performance TB was built on these strengths. UVM made it possible to develop project-independent sequence libraries and reusable, scalable performance VIPs.

Performance verification must start with synthetic cases, whose performance targets come from theoretical analysis; Section VI.A.1 covers them. The next big question is how to collect and report performance numbers automatically; Section VI.A.2 explains the automated flow. Is that enough, or do we need to beat the design harder? We do: we must also analyze the real scenarios of performance-hungry cores such as multimedia and GPU. The obvious questions follow:
• How do we obtain these scenarios, and in which format?
• How do we replay them, and can any given waveform be replayed?
• How do we compare performance numbers?
• Are the projected numbers correct for the use-case?

Section VI.B.1 answers them.

VI.A.1 PV (performance verification) with synthetic cases

To use constrained-random stimulus fully, it is not enough to randomize each transaction appropriately. Coordinated sequences of activity must also occur, both on individual ports and across multiple ports of the DUT. In addition, the data-object randomization supplied by verification-component developers may not suit a specific verification task. Users will likely need to add scenario-specific random constraints case by case. After the scenarios were identified through theoretical analysis, a sequence library was created for the DDR system, for example to generate DDR page-hit, page-miss and page-conflict cases and to control throttling on the bus.
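Whether an access is a DDR page hit, page miss or page conflict depends on how addresses map onto banks and rows. A sequence library therefore has to constrain addresses with that mapping in mind. The Python sketch below illustrates the idea only; it is not the authors' implementation. It rests on three assumptions:
• a hypothetical address mapping, with the column bits lowest, then the bank bits, then the row bits;
• an open-page policy with no refresh and no page timeout;
• a "miss" means an access to an idle bank.

The hypothetical helper `directed_pair` produces the kind of address pair that the constraints would need to generate for each case.

```python
from dataclasses import dataclass, field

# Hypothetical address mapping (an assumption, not the paper's): | row | bank | column |
COL_BITS = 10
BANK_BITS = 3
ROW_BITS = 15


def decode(addr: int) -> tuple[int, int]:
    """Return (bank, row) for an address under the assumed mapping."""
    bank = (addr >> COL_BITS) & ((1 << BANK_BITS) - 1)
    row = (addr >> (COL_BITS + BANK_BITS)) & ((1 << ROW_BITS) - 1)
    return bank, row


def encode(row: int, bank: int, col: int = 0) -> int:
    """Inverse of decode(): build an address on a given row, bank and column."""
    return (row << (COL_BITS + BANK_BITS)) | (bank << COL_BITS) | col


@dataclass
class OpenPageModel:
    """Open-page policy model: tracks the open row per bank (no refresh, no page timeout)."""
    open_rows: dict[int, int] = field(default_factory=dict)

    def access(self, addr: int) -> str:
        bank, row = decode(addr)
        current = self.open_rows.get(bank)
        self.open_rows[bank] = row  # the accessed row stays open afterwards
        if current is None:
            return "miss"      # idle bank: activate, then column access
        if current == row:
            return "hit"       # row already open: column access only
        return "conflict"      # another row open: precharge, activate, column access


def directed_pair(kind: str, bank: int = 0, row: int = 1) -> list[int]:
    """Two back-to-back addresses whose second access is `kind` on a fresh model."""
    first = encode(row, bank)
    if kind == "hit":
        return [first, encode(row, bank, col=8)]
    if kind == "conflict":
        return [first, encode(row + 1, bank)]
    if kind == "miss":
        return [first, encode(row, (bank + 1) % (1 << BANK_BITS))]
    raise ValueError(f"unknown access kind: {kind}")


if __name__ == "__main__":
    for kind in ("hit", "miss", "conflict"):
        model = OpenPageModel()  # all banks idle at the start of each directed case
        print(kind, [model.access(a) for a in directed_pair(kind)])
```

For example, `directed_pair("conflict")` yields `["miss", "conflict"]` on a fresh model. The first access opens row 1 of bank 0, and the second targets row 2 of the same bank. In a UVM sequence library the same idea would be written as address constraints rather than as a Python helper.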
The page-hit, page-miss, page-conflict and throttling cases are produced by controlling the randomization of each transaction, case by case. The sequence library does not depend on the other verification components. Any UVM TB with the same interface protocol can use its sequences (standard bus protocols are used), which makes the library highly reusable and scalable. Figure 1 shows how the verification environment uses the sequence library.

Sequences from the libraries are combined in two ways:
• into a hierarchy of stimuli;
• into virtual sequences, which drive stimuli in parallel on multiple interfaces of the DUT.

Virtual sequences run on virtual sequencers and contain sub-sequences that coordinate the flow of stimuli. With this implementation, different masters can run different sequences, or the same sequence, in parallel. Sequence selection and the randomization of transaction attributes are controlled either by run-time switches or randomly.

Figure 1. Automated PERF TB setup with sequence library and performance report automation

VI.A.2 PLR (Performance Logger and Reporter)

The PLR faced three main challenges:
(1) building modular, reusable and scalable performance VIPs;
(2) collecting performance numbers that are spread across a regression;
(3) comparing those performance numbers.

A performance monitor (a UVM component) was developed that can be instantiated on each master and slave interface and on each logical and memory interface. The monitors communicate with the other verification components over TLM ports. Because they use a layered architecture, they scale with the sub-system definition. Each monitor reports performance numbers computed from the transactions driven to, and received from, the DUT. At the end of the simulation these numbers are compared with theoretically calculated values within a user-acceptable margin (margin %, settable through a run-time argument).
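The Python sketch below illustrates the end-of-test check. It is not the PLR itself; the `Txn` record, the function names and the symmetric percentage-margin rule are all hypothetical. The sketch makes two modeling assumptions:
• The theoretical DDR peak is the transfer rate multiplied by the bus width in bytes. For example, 1600 MT/s on a 32-bit bus gives 6.4 GB/s.
• Achieved bandwidth is measured from the first request to the last data beat.

The MIN-MAX-AVG latency summary mirrors the latency histograms listed in the verification scope.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Txn:
    """A completed transaction as a performance monitor might record it (hypothetical fields)."""
    start_ns: float  # request accepted by the DUT
    end_ns: float    # last data beat observed
    nbytes: int


def theoretical_peak_gbps(data_rate_mtps: float, bus_width_bits: int) -> float:
    """Peak DDR bandwidth in GB/s: transfers per second times bytes per transfer."""
    return data_rate_mtps * 1e6 * (bus_width_bits / 8) / 1e9


def measured_gbps(txns: list[Txn]) -> float:
    """Achieved bandwidth from the first request to the last data beat (bytes/ns == GB/s)."""
    window_ns = max(t.end_ns for t in txns) - min(t.start_ns for t in txns)
    return sum(t.nbytes for t in txns) / window_ns


def latency_summary(txns: list[Txn]) -> tuple[float, float, float]:
    """MIN, MAX and AVG request-to-last-beat latency in ns."""
    lat = [t.end_ns - t.start_ns for t in txns]
    return min(lat), max(lat), sum(lat) / len(lat)


def within_margin(measured: float, expected: float, margin_pct: float) -> bool:
    """Assumed pass rule: measured may deviate from expected by at most margin_pct percent."""
    return abs(measured - expected) <= expected * margin_pct / 100.0


if __name__ == "__main__":
    txns = [Txn(0, 20, 64), Txn(5, 25, 64), Txn(10, 30, 64), Txn(15, 40, 64)]
    expected = theoretical_peak_gbps(1600, 32)  # 1600 MT/s x 4 bytes = 6.4 GB/s
    achieved = measured_gbps(txns)              # 256 bytes over 40 ns = 6.4 GB/s
    margin_pct = 5.0                            # would come from a run-time argument
    status = "PASS" if within_margin(achieved, expected, margin_pct) else "FAIL"
    print(f"BW {achieved:.2f}/{expected:.2f} GB/s, "
          f"latency min/max/avg {latency_summary(txns)} -> {status}")
```

In practice the expected figure would be the theoretically derived number for that specific case, including protocol efficiency. The raw peak is used here only to keep the example short.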
The test's pass or fail status is then displayed according to the comparison result. After each regression, a script does the following automatically:
• collects the performance metrics from every test log;
• consolidates them into a performance dashboard;
• emails the dashboard to the performance email group.

The entire flow, from collecting performance information to sending the email, is automated in the TB.

B. Automated UVM sequence for TRACE infrastructure

An algorithmic approach shortens traces and replays them within allowable deviations. This made it possible to analyze platform traces and flush out bottlenecks at an early stage. Replaying the scenarios also made it possible to identify and recommend the best design performance settings. A graphical simulation and analysis environment let DV refine, run and analyze hundreds of scenarios to find the best design configuration.

VI.B.1 Replaying scenarios

Synthetic cases find some issues, but performance verification is complete only when the DUT has also been verified with real scenarios from performance-hungry cores such as GPU or multimedia. The UVM TRACE infrastructure (UTI) was developed for this purpose.

The UTI takes as input a file in TRACES format, which lists addresses, lengths and IDs. The teams that supply the TRACES also supply the expected bandwidth and latency numbers. The UTI is project independent, fully reusable and configurable, so any master can replay a trace. The replay works as follows:
• When a test runs, the UTI invokes a Perl script that converts the input TRACES file into a packet format that SystemVerilog can read.
• A UVM TRACES sequence reads the generated packets.
• Randomization of the driver packet is turned off for the attributes that each packet carries, and the packet is driven to the drivers according to those attributes.

The UTI can also dump a TRACES file from the output waveform. It is validated by comparing the input and output TRACES files.
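The paper does not specify the TRACES syntax beyond addresses, lengths and IDs. The Python sketch below therefore assumes a hypothetical format of one transaction per line, `<R|W> <hex address> <length> <id>`, with `#` comments. It shows two pieces of the flow:
• parsing TRACES into packets, which is the role of the Perl conversion step;
• the input-versus-output validation.

The comparison checks ordering only within each (direction, ID) stream. This assumes an AXI-style interconnect, which may legally reorder transactions that have different IDs.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class TracePacket:
    """One TRACES entry. Hypothetical line format: <R|W> <hex address> <length> <id>."""
    is_write: bool
    addr: int
    length: int
    txn_id: int


def parse_traces(text: str) -> list[TracePacket]:
    """Convert TRACES text into packets (the role the paper gives to its Perl step)."""
    packets = []
    for lineno, raw in enumerate(text.splitlines(), start=1):
        line = raw.split("#", 1)[0].strip()  # drop comments and blank lines
        if not line:
            continue
        fields = line.split()
        if len(fields) != 4 or fields[0] not in ("R", "W"):
            raise ValueError(f"line {lineno}: malformed TRACES entry {raw!r}")
        kind, addr, length, txn_id = fields
        packets.append(TracePacket(kind == "W", int(addr, 16), int(length), int(txn_id)))
    return packets


def compare_traces(inp: list[TracePacket], out: list[TracePacket]) -> list[tuple[bool, int]]:
    """Return the (is_write, id) streams whose ordered contents differ.

    Order is checked only within a stream, on the assumption that transactions with
    different IDs, or on different read/write channels, may complete out of order.
    """
    def streams(pkts):
        groups = defaultdict(list)
        for p in pkts:
            groups[(p.is_write, p.txn_id)].append(p)
        return groups

    a, b = streams(inp), streams(out)
    return sorted(k for k in a.keys() | b.keys() if a.get(k, []) != b.get(k, []))


if __name__ == "__main__":
    traces = parse_traces("""
    # dir addr   len id
    R 0x1000 4 1
    W 0x2000 8 2
    R 0x1040 4 1
    """)
    print(compare_traces(traces, [traces[1], traces[0], traces[2]]))  # [] (cross-ID reorder only)
    print(compare_traces(traces, [traces[2], traces[0], traces[1]]))  # [(False, 1)]
```

A dumped output trace that loses or reorders transactions within one ID stream is reported. Legal interleaving across IDs is accepted.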
If a team supplies waveforms instead of TRACES files, an offline analyzer script generates the TRACES format from the waveform for the UTI to use. The offline tool can also derive expected performance numbers from a waveform, although the responsible team usually supplies these numbers for comparison. The expected numbers determine the PASS or FAIL status of the test within an error margin, or point to scope for further exploration. The offline waveform analyzer is also used to cross-check that the expected numbers really agree with the supplied waveform. Figure 2 shows the complete UTI flow.

Figure 2. Automated UVM sequence and regression framework for TRACE infrastructure

C. Platform-independent static analysis infrastructure

This strategy offloads work from the simulators and gives quick analysis turnaround. Reporting is automated, and a graphical interface makes the data easy to analyze. The offline utility cuts performance analysis time from days to hours, so users can concentrate on actual design exploration.

The utility provides the following:
• It analyzes input waveforms or TRACES supplied by different teams, sub-systems or even the SoC, from different performance validation platforms.
• It simplifies debug by quickly finding configuration or bus-attribute issues that limit system performance.
• It makes issues seen by other sub-systems easy to reproduce, either by generating TRACES from waveforms or by converting input TRACES into sequences and running them on the UVM infrastructure.
• Combined with the plug-and-play UVM infrastructure, it speeds up root-cause analysis and workaround identification.
• Its automated reporting interface generates a performance data dashboard and intelligent charts for profiling and studying use-cases.
• It supports protocol snooping on standard or user-defined interfaces, and the snooped data can be manipulated and refined into meaningful data for performance verification.
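Generating TRACES from a waveform comes down to snooping handshakes. The Python sketch below assumes the dump has already been parsed into per-cycle samples of an AXI-style read address channel and read data channel; `Sample` and its fields are hypothetical, and the tool's actual interface is not described in the paper. Each address handshake (valid and ready both high) becomes one line in a simple hypothetical `R <address> <beats> <id>` format. Each data handshake counts as one beat when computing achieved bandwidth in bytes per cycle.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Sample:
    """One clock cycle of an AXI-style read interface, already extracted from a dump (hypothetical)."""
    arvalid: bool
    arready: bool
    araddr: int
    arlen: int   # AXI encoding: number of beats minus one
    arid: int
    rvalid: bool
    rready: bool


def snoop_reads(samples: list[Sample], bytes_per_beat: int) -> tuple[list[str], float]:
    """Return (TRACES lines, achieved read bandwidth in bytes per cycle)."""
    traces: list[str] = []
    beats = 0
    first_req = last_beat = None
    for cycle, s in enumerate(samples):
        if s.arvalid and s.arready:          # address handshake: one request
            traces.append(f"R {s.araddr:#x} {s.arlen + 1} {s.arid}")
            if first_req is None:
                first_req = cycle
        if s.rvalid and s.rready:            # data handshake: one beat
            beats += 1
            last_beat = cycle
    if first_req is None or last_beat is None or last_beat < first_req:
        return traces, 0.0
    window = last_beat - first_req + 1       # cycles from first request to last beat
    return traces, beats * bytes_per_beat / window


if __name__ == "__main__":
    idle = Sample(False, False, 0, 0, 0, False, False)
    wave = [
        Sample(True, True, 0x1000, 1, 3, False, False),  # 2-beat read request, ID 3
        idle,
        Sample(False, False, 0, 0, 0, True, True),        # data beat 1
        Sample(False, False, 0, 0, 0, True, True),        # data beat 2
    ]
    print(snoop_reads(wave, bytes_per_beat=16))  # (['R 0x1000 2 3'], 8.0)
```

The same snooped records could also feed the expected-number cross-check: a bandwidth figure derived from the waveform is compared against the number the supplying team quoted.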
Changing the TRACES and replaying them makes it possible to analyze use-case traffic efficiency and to iterate toward the best scheduling of incoming traffic. The process can also run unattended toward a desired goal, using multiple approximation-based iterations in the regression framework.

Figure 3. Offline tool, simulation offloader

D. SIGN-OFF CRITERIA

A performance coverage model (PCM) was developed to ensure quality and successful closure of performance verification across all sub-system cores. The PCM shows the quality and depth of the generated traffic and the interesting situations a design may encounter. It works with the regression framework for both synthetic and realistic use-cases, and may contain coverage bins for:
• Use-case performance numbers modeled per the test plan, enabling scenario-based performance coverage.
• A DDRx functional coverage model that compares theoretical protocol limits against simulated values. It indicates whether a gap reflects stimulus quality, a design limitation or a possible enhancement.
• DDR command-sequence coverage for worst-case penalties.
• A buffer occupancy model that tracks buffer occupancy across use-cases and analyzes the trends. It shows whether synthetic simulations covered back-pressure scenarios and buffer depths. With more intelligence built in, different stall and starve situations can be added for coverage.
• Bus coverage models, reused from the functional environments, which confirm that outstanding and out-of-order trends are covered.

E. SMARTER PLUGINS

The DV infrastructure supports the analysis and study of performance scenarios in two modes:
• online, using the performance-monitor approach;
• offline, using wave dumps and TRACE analysis.
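As an example of an online plugin, the buffer occupancy model from the sign-off criteria could sample a buffer's fill level every cycle and place it in a coverage bin. The Python sketch below is hypothetical: the bin names, the 25% and 75% thresholds and the class name are illustrative choices, not values from the paper. A hole in the `full` bin, for instance, suggests the stimulus never produced enough back-pressure to fill the buffer.

```python
from collections import Counter

# Illustrative bins (assumption): thresholds are not taken from the paper.
BINS = ("empty", "low", "mid", "high", "full")


def occupancy_bin(level: int, depth: int) -> str:
    """Map a buffer fill level to a coverage bin."""
    if not 0 <= level <= depth:
        raise ValueError(f"level {level} outside 0..{depth}")
    if level == 0:
        return "empty"
    if level == depth:
        return "full"          # buffer saturated: back-pressure scenario reached
    pct = 100 * level / depth
    if pct <= 25:
        return "low"
    if pct <= 75:
        return "mid"
    return "high"


class OccupancyCoverage:
    """Per-buffer coverage collector, sampled once per clock by an online monitor."""

    def __init__(self, depth: int):
        self.depth = depth
        self.hits = Counter()  # bin name -> number of sampled cycles

    def sample(self, level: int) -> None:
        self.hits[occupancy_bin(level, self.depth)] += 1

    def holes(self) -> list[str]:
        """Bins never hit so far, in BINS order."""
        return [b for b in BINS if self.hits[b] == 0]

    def coverage_pct(self) -> float:
        return 100.0 * (len(BINS) - len(self.holes())) / len(BINS)


if __name__ == "__main__":
    cov = OccupancyCoverage(depth=8)
    for level in (0, 1, 2, 4, 6):   # hypothetical fill levels observed in one test
        cov.sample(level)
    print(cov.holes(), cov.coverage_pct())  # ['high', 'full'] 60.0
```

Merging the `hits` counters across a regression would give the trend view the buffer occupancy model describes.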
Platform traces too long to simulate are divided into multiple micro-traces with a similar performance intent, using iterative successive-approximation algorithms. The regenerated traces are fed to the regression framework and analyzed against acceptable deviations. The performance infrastructure also supports dynamic power analysis: it measures peak and idle power by finding the maximum-bandwidth regions and their associated power.

VII. RETURN ON INVESTMENT

This paper examined the attributes and challenges of signing off performance verification for a sub-system. It showed how smart DV strategies challenged the design:
• rigorous back-end analysis and reporting;
• an offline waveform analysis infrastructure;
• techniques to reduce and reform large TRACEs within an allowable deviation;
• a static analysis infrastructure to study use-case scenarios, and replay of those scenarios to justify workarounds and find the best settings.

Some of the key takeaways are listed below:
• UVM framework: an automated, fully loaded performance verification infrastructure that is reusable and migrates seamlessly across vertical and horizontal streams, and across platforms, projects and teams. It cuts framework porting and upgrade times from weeks to days.
• Regression infrastructure: end-to-end automation of the regression infrastructure produces quick, refined performance reports, which make it easy to analyze and debug the system's performance behavior. Use-case regressions give an overall view of realistic scenarios, which can help influence architecture decisions and find workarounds. Because the infrastructure is fully performance aware, reports expose any limitation in the traffic patterns.
  o Examples: in-order traffic on out-of-order (OOO) masters, uneven distribution of traffic across channels or ranks, not maximizing ALEN and OT, or not aligning with the DDR burst length (BL).
• Platform-independent analysis tool: control knobs tune the performance infrastructure for each analysis, and offline static checks shrink the debug-analysis-replay loop from a full day to a few hours. Refined data collection and smart reporting simplify analysis of waveform dumps. The tool also analyzes and explores TRACES from globally distributed teams working on different platforms, converting these inputs into predefined formats. The result is quick iterations, consistent results and multiple performance metrics, with the scenarios and timestamps of interest highlighted.
• Tracer, issue isolator, attribute comparator and configuration differentiator: offline analysis shortens debug by highlighting the region or module of interest. These tools keep the design configuration consistent across cross-functional teams and simplify debug by identifying bus-attribute issues. They trace bottlenecks through the stall and starve mechanisms within sub-modules and present the issues in graphical interfaces such as MS-Excel and web links.
• TRACE static analysis: the algorithmic trace-reduction and replay approach described in Section VI.B. It flushes out bottlenecks in platform traces early and identifies the best design configuration across hundreds of replayed scenarios.
• Every single clock edge is important: a methodology that makes sure every unintended transaction bubble is either flushed out or validated.
• Sign-off criteria: the need for a performance coverage model.

Smart strategies reduce exposure to late performance bugs through early engagement. They also provide a deep view of RTL explorations for future products from a performance perspective.
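The "every single clock edge is important" takeaway, like challenge V.C, amounts to finding cycles where an interface could have moved data but did not. The Python sketch below assumes per-cycle samples of a valid/ready interface plus a hypothetical `pending` flag. The flag means the producer holds accepted work that it has not yet delivered. Each cycle is classified as one of:
• transfer;
• stall (back-pressure);
• bubble (work pending but nothing offered);
• idle.

Runs of bubble cycles are reported for review. Some bubbles may be legitimate, for example those caused by DDR timing constraints, so each run still needs validating rather than being assumed to be a bug.

```python
from collections import Counter
from dataclasses import dataclass


@dataclass(frozen=True)
class Cycle:
    """One clock of a valid/ready interface (hypothetical per-cycle view from a monitor or dump)."""
    pending: bool  # producer holds accepted work that it has not yet delivered
    valid: bool
    ready: bool


def classify(c: Cycle) -> str:
    if c.valid and c.ready:
        return "transfer"
    if c.valid:
        return "stall"    # consumer back-pressure
    if c.pending:
        return "bubble"   # work outstanding but nothing offered: flush out or justify
    return "idle"


def bubble_runs(cycles: list[Cycle]) -> list[tuple[int, int]]:
    """Return (start_cycle, length) for every run of consecutive bubble cycles."""
    runs: list[tuple[int, int]] = []
    start = None
    for i, c in enumerate(cycles):
        if classify(c) == "bubble":
            if start is None:
                start = i
        elif start is not None:
            runs.append((start, i - start))
            start = None
    if start is not None:             # run extends to the end of the window
        runs.append((start, len(cycles) - start))
    return runs


if __name__ == "__main__":
    T = Cycle(True, True, True)     # transfer
    B = Cycle(True, False, False)   # bubble
    S = Cycle(True, True, False)    # stall
    I = Cycle(False, False, False)  # idle
    trace = [T, T, B, B, T, S, B, I]
    print(Counter(classify(c) for c in trace))
    print(bubble_runs(trace))       # [(2, 2), (6, 1)]
```

Reporting runs, not single cycles, makes it easier to map each bubble back to a timestamp of interest in the waveform.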