CAVE6D - Electronic Visualization Laboratory

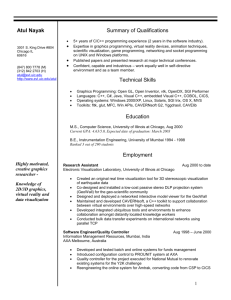

CAVE6D: A Tool for Collaborative Immersive Visualization of Environmental Data

Glen H. Wheless, Cathy M. Lascara
wheless@ccpo.odu.edu, lascara@ccpo.odu.edu
Center for Coastal Physical Oceanography
Old Dominion University

Jason Leigh
jleigh@eecs.uic.edu
National Center for Supercomputing Applications
University of Illinois at Urbana-Champaign
and
Electronic Visualization Laboratory
University of Illinois at Chicago

Abhinav Kapoor, Andrew E. Johnson, Thomas A. DeFanti
akapoor@eecs.uic.edu, ajohnson@eecs.uic.edu, tom@eecs.uic.edu
Electronic Visualization Laboratory
University of Illinois at Chicago

Abstract

This “Late Breaking Hot Topic Paper” introduces and tracks the progress of OceanDIVER, a project to develop a tele-immersive collaboratory that integrates archived oceanographic data with simulation and real-time data gathered from autonomous underwater vehicles. Specifically, this paper describes the work in building CAVE6D, a tool for collaboratively visualizing environmental data in CAVEs, ImmersaDesks and desktop workstations.

Keywords: Virtual Reality, Environmental Hydrology, VR, Collaborative, Distributive, CAVE, CAVERN

1 Introduction

In recent years there has been a rapid increase in the capability of environmental observation systems to provide high resolution spatial and temporal oceanographic data from estuarine and coastal regions. Similar advances in computational performance, development of distributed applications and the use of data sharing via national high performance networks enable scientists to now attack previously intractable large scale environmental problems through modeling and simulation.
Research in these high performance computing technologies for computational and data intensive problems is driven by the need for increased understanding of dynamical processes on fine scales, improved ecosystem monitoring capabilities and the management of and response to environmental crises such as pollution containment, storm preparation and biohazard remediation.

It is not enough, however, to be able to collect, generate or distribute large amounts of data. Scientists, educators, students and managers must have the ability to collaboratively view, analyze and interact with the data in a way that is understandable, repeatable and intuitive. We believe that the most efficient method to do this is through the use of a tele-immersive environment (TIE) that allows the raw data to become usable information and then cognitively useful knowledge.

We define the term Tele-Immersion as the integration of audio and video conferencing with persistent collaborative virtual reality (VR) in the context of data-mining and significant computation. When participants are tele-immersed, they are able to see and interact with each other in a shared environment. This environment persists even when all the participants have left. The environment may control supercomputing computations, query databases autonomously and gather the results for visualization when the participants return. Participants may even leave messages for their colleagues, who can then replay them as a full audio, video and gestural stream. Clearly, the near real-time feedback provided by TIEs has the potential to change the way data or model results are viewed and interpreted, the resulting information disseminated and the ensuing decisions or policies enacted.

In this paper we outline our ongoing efforts to develop, enhance and deploy OceanDIVER (Oceanographic Distributed Virtual Environment Research), a prototypical collaboratory for real-time oceanographic research using a TIE incorporating multiple data streams from archived data stores, model results and advanced instrumentation. The goal is to integrate archived data from several sources (models, moored stations, towed devices) as well as real-time data streams from remote instrumentation (autonomous underwater vehicles [AUVs] and systems of AUVs; see Figure 1). We are utilizing and augmenting the emerging National Technology Grid [6] being constructed from high performance computational assets (such as supercomputers at the National Center for Supercomputing Applications), advanced networking (such as the vBNS), remote instrumentation and collaborative software environments. Results from building and studying the prototypical system will be useful for other disciplines requiring an information architecture and overarching software framework with which to pursue real-time collaborative knowledge discovery from complex multivariate datasets.

Figure 1: Diagram illustrating the expected connectivity in the OceanDIVER project. (Diagram labels: Desktop Workstation, CAVE, ImmersaDesk, vBNS, High Performance Computing Asset, Simulation Results, CAVERNsoft, Real-time telemetry, Instrumentation, Coastal Basin, Autonomous Underwater Vehicle.)

The OceanDIVER collaboratory will enable users to view and interact with an oceanographic simulation or remote observational exercise with near real-time visualization of the results on local graphics workstations or virtual reality hardware such as CAVEs and ImmersaDesks, within a TIE.
Deployment of OceanDIVER will enhance other real-time observing networks (e.g., the LEO-15 site in coastal New Jersey waters and the REINAS project in Monterey Bay) by enabling scientists to capture, edit, synopsize, replay and store data and analysis products in a collaborative shared virtual space. Our initial developmental efforts have resulted in the merging of an application for visualizing environmental data, CAVE5D, with the CAVERNsoft Tele-Immersion system [5, 4, 3].

2 CAVE5D

CAVE5D [8], co-developed by Wheless and Lascara from Old Dominion University and Dr. Bill Hibbard from the University of Wisconsin-Madison, is a configurable virtual reality (VR) application framework. CAVE5D is supported by Vis5D [2], a very powerful graphics library that provides visualization techniques to display multi-dimensional numerical data from atmospheric, oceanographic, and other similar models, including isosurfaces, contour slices, volume visualization, wind/trajectory vectors, and various image projection formats. Figure 2 shows CAVE5D being used to view results from a numerical model of circulation in the Chesapeake Bay. The CAVE5D framework integrates the CAVE [1] VR software libraries (see http://www.vrco.com) with the Vis5D library in order to visualize numerical data in the Vis5D file format on the ImmersaDesk or CAVE, and enables user interaction with the data. The Vis5D file format consists of a three-dimensional spatial grid of dependent multivariate data that evolves over time. CAVE5D has been demonstrated at both recent Internet2 members meetings and at the Next Generation Internet (NGI) meeting held in Washington, DC, in March 1998.

Figure 2: CAVE5D being used to view results from a numerical model of circulation in the Chesapeake Bay.

3 CAVE6D

CAVE6D emerges as an integration of CAVE5D with CAVERNsoft to produce a TIE that allows multiple users of CAVE5D to share a common data-set and interact with each other simultaneously.
The CAVERNsoft programming environment is a systematic solution to the challenges of building tele-immersive environments. The existing functionality of CAVERNsoft is too extensive to describe here (see http://www.evl.uic.edu/spiff/ti for details). Briefly, this framework employs an Information Request Broker (IRB) as the nucleus of all CAVERN-based client and server applications. An IRB is an autonomous repository of persistent data managed by a database, and accessible by a variety of networking interfaces. This hybrid system combines the distributed shared memory model with active database technology and real-time networking technology under a unified interface to allow the construction of arbitrary topologies of interconnected, tele-immersed participants.

In CAVE6D, tele-immersed participants see the virtual environment from their own perspective, and can navigate around the space freely. Each participant is depicted by a virtual persona known as an avatar. Each avatar consists of a head, body and a single hand with which the participant may convey gestures such as nodding of the head and pointing with their hand. Participants may also observe the environment from each other’s vantage point, allowing one participant to be a teacher or tour guide while the other is a student or tourist. The purpose of collaboration, however, is not simply to provide a sense of virtual co-presence of the other users, but more importantly to allow them to jointly discuss and interact with the data-set in the environment. For example, avatars possess long pointing-rays that can be used to point at features of interest in the data-set. In addition, participants may speak to each other through a telephonic conference call.

Visualization parameters that can be visualized by CAVE5D, such as Salinity, Circulation Vectors, Temperature and Wind Velocity Slices, and larval fish distributions, have been extended in CAVE6D so that participants can operate them collectively (see Figures 3, 4 and 5). Participants may turn any of these parameters on or off, globally (affecting everyone’s view) or locally (affecting only one’s own view). This affords each participant the ability to individually customize and/or reduce the “cluttered-ness” of the visualization; one participant may be viewing the relationship between one subset of the total dimensions of the data while another participant may be simultaneously correlating a different subset. Time, on the other hand, is globally shared, and hence participants view all time-varying data synchronously. Any participant may travel forward and backward in time as well as stop time in order to discuss what they see with their collaborators.

Figure 3: The first participant sets up its environment and activates a set of parameters, such as Topography, Contour Salinity and Slice Salinity, to be globally visible to all users.

Figure 4: The second participant joins the already existing user in the environment. The state of the parameters is automatically inherited from the existing user.

CAVE6D was implemented as follows. A shared memory arena was allocated and shared between CAVE5D and a CAVERNsoft IRB. The IRB was responsible for establishing a connection with the remote participants. The IRB allowed participants to connect to one another or to a central IRB-based server. A central server is generally used as a known persistent entry point into the tele-immersive environment. This is not absolutely necessary, as any CAVE6D client can assume the role of a server. Once connected, each CAVE6D client relays information about the avatars and the state of the data-set to the IRB, which then distributes it to all the collaborating participants. To minimize the need to send large amounts of data over the network, the actual data-set (which could easily exceed several hundred megabytes in size) was replicated at each site prior to launching the application.

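The sharing scheme described above can be illustrated with a small Python sketch. This is not CAVE6D or CAVERNsoft code: the `Broker` class is a hypothetical in-process stand-in for an IRB, the `Client` class and the key names (`param/...`, `avatar/...`, `time`) are invented for this example, and the rule that a local setting takes precedence over the global one is an assumption. The sketch shows only the small pieces of state that are relayed (parameter switches, avatar positions, the current time step), while the data-set itself is assumed to be replicated at each site.

```python
from typing import Callable, Dict, List, Set, Tuple

Position = Tuple[float, float, float]


class Broker:
    """Hypothetical stand-in for an IRB: keeps keyed state and relays updates."""

    def __init__(self) -> None:
        self.store: Dict[str, object] = {}
        self.subscribers: List[Callable[[str, object], None]] = []

    def subscribe(self, callback: Callable[[str, object], None]) -> None:
        # A late joiner first receives the state that already exists.
        for key, value in self.store.items():
            callback(key, value)
        self.subscribers.append(callback)

    def publish(self, key: str, value: object) -> None:
        # Store the update, then distribute it to every connected client.
        self.store[key] = value
        for callback in self.subscribers:
            callback(key, value)


class Client:
    """Hypothetical participant holding only lightweight shared state."""

    def __init__(self, name: str, broker: Broker) -> None:
        self.name = name
        self.broker = broker
        self.global_on: Set[str] = set()           # parameters switched on for everyone
        self.local_override: Dict[str, bool] = {}  # this participant's own choices
        self.avatars: Dict[str, Position] = {}     # last known avatar positions
        self.time_step = 0                         # time is shared by all participants
        broker.subscribe(self._on_update)

    def _on_update(self, key: str, value: object) -> None:
        kind, _, name = key.partition("/")
        if kind == "time":
            self.time_step = value
        elif kind == "param":
            if value:
                self.global_on.add(name)
            else:
                self.global_on.discard(name)
        elif kind == "avatar":
            self.avatars[name] = value

    def set_global(self, param: str, on: bool) -> None:
        self.broker.publish(f"param/{param}", on)

    def set_local(self, param: str, on: bool) -> None:
        # Assumption: a local choice never leaves this client.
        self.local_override[param] = on

    def set_time(self, step: int) -> None:
        self.broker.publish("time", step)

    def move(self, position: Position) -> None:
        self.broker.publish(f"avatar/{self.name}", position)

    def visible(self) -> Set[str]:
        # Assumption: local overrides win over the global setting.
        shown = set(self.global_on)
        for param, on in self.local_override.items():
            if on:
                shown.add(param)
            else:
                shown.discard(param)
        return shown


# Usage: the first participant sets up the scene, a second one joins later.
irb = Broker()
alice = Client("alice", irb)
alice.set_global("topography", True)
alice.set_global("salinity_contour", True)
alice.move((0.0, 1.5, 2.0))

bob = Client("bob", irb)                  # inherits the existing state on joining
bob.set_local("salinity_contour", False)  # declutters only bob's own view
bob.set_time(12)                          # moves time for every participant

print(sorted(alice.visible()), sorted(bob.visible()), alice.time_step)
```

In this sketch any client could host the `Broker`, which mirrors the remark that a central server is convenient but not required. A real system would replace the in-process callbacks with network connections.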
Figure 5: Another instant in the collaborative visualization session, as more participants join in.

4 Closing Remarks

CAVE6D represents the beginnings of our overall OceanDIVER project. CAVE6D integrates CAVERNsoft with CAVE5D to enhance it with collaborative capabilities. CAVERNsoft is the underlying data distribution architecture for OceanDIVER. CAVE5D and CAVE6D are currently distributed and supported at Old Dominion University’s Virtual Environments Lab (http://www.ccpo.odu.edu/~cave5d). The CAVE library, which allows CAVE5D and CAVE6D to be run on CAVEs, ImmersaDesks as well as non-VR desktop workstations, may be obtained from http://www.vrco.com. CAVERNsoft may be obtained from http://www.evl.uic.edu/spiff/ti.

Future work will extend CAVE6D to dynamically download large data-sets from central or distributed servers, gradually achieving the long-term goals of OceanDIVER. In addition, CAVE6D will be integrated with the Virtual Director [7], a tool for making recordings of virtual experiences. This tool will allow participants to document their discoveries directly from within the virtual environment.

Acknowledgement

Major funding is provided by the National Science Foundation (CDA-9303433). The virtual reality research, collaborations, and outreach programs at EVL are made possible through major funding from the National Science Foundation, the Defense Advanced Research Projects Agency, and the US Department of Energy; specifically NSF awards CDA-9303433, CDA-9512272, NCR9712283, CDA-9720351, and the NSF ASC Partnerships for Advanced Computational Infrastructure program. The CAVE and ImmersaDesk are trademarks of the Board of Trustees of the University of Illinois.

References

[1] Carolina Cruz-Neira, Daniel J. Sandin, and Thomas A. DeFanti. Surround-screen projection-based virtual reality: The design and implementation of the CAVE. In James T. Kajiya, editor, Computer Graphics (SIGGRAPH ’93 Proceedings), volume 27, pages 135–142, August 1993.

[2] Bill Hibbard and Brian Paul. Vis5D. http://www.ssec.wisc.edu//~billh/vis5d.html, 1998.

[3] Andrew E. Johnson, Jason Leigh, Thomas A. DeFanti, and Daniel J. Sandin. CAVERN: the cave research network. In Proceedings of 1st International Symposium on Multimedia Virtual Laboratories, pages 15–27, Tokyo, Japan, March 1998.

[4] Jason Leigh, Andrew E. Johnson, and Thomas A. DeFanti. CAVERN: a distributed architecture for supporting scalable persistence and interoperability in collaborative virtual environments. Journal of Virtual Reality Research, Development and Applications, 2(2):217–237, 1997.

[5] Jason Leigh, Andrew E. Johnson, and Thomas A. DeFanti. Issues in the design of a flexible distributed architecture for supporting persistence and interoperability in collaborative virtual environments. In Proceedings of Supercomputing’97, San Jose, California, Nov 1997. IEEE/ACM.

[6] Rick Stevens, Paul Woodward, Tom DeFanti, and Charlie Catlett. From the i-way to the national technology grid. Communications of the ACM, 40(11):51–60, Nov 1997.

[7] Marcus Thiebaux. The Virtual Director. Master’s thesis, Electronic Visualization Laboratory, University of Illinois at Chicago, 1997.

[8] Glen H. Wheless, Cathy M. Lascara, A. Valle-Levinson, D. P. Brutzman, W. Sherman, W. L. Hibbard, and B. Paul. Virtual chesapeake bay: Interacting with a coupled physical/biological model. IEEE Computer Graphics and Applications, 16(4):52–57, July 1996.