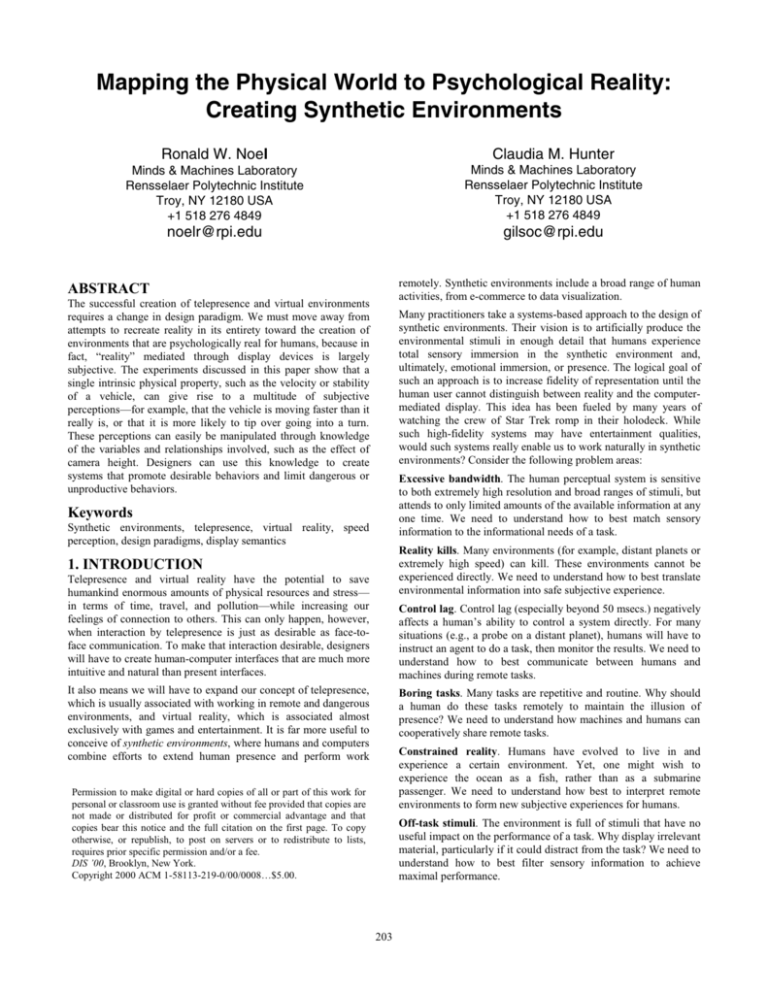

Mapping the Physical World to Psychological Reality: Creating Synthetic Environments

Ronald W. Noel, Claudia M. Hunter
Minds & Machines Laboratory
Rensselaer Polytechnic Institute
Troy, NY 12180 USA
+1 518 276 4849
noelr@rpi.edu, gilsoc@rpi.edu

ABSTRACT
The successful creation of telepresence and virtual environments requires a change in design paradigm. We must move away from attempts to recreate reality in its entirety toward the creation of environments that are psychologically real for humans, because in fact, “reality” mediated through display devices is largely subjective. The experiments discussed in this paper show that a single intrinsic physical property, such as the velocity or stability of a vehicle, can give rise to a multitude of subjective perceptions: for example, that the vehicle is moving faster than it really is, or that it is more likely to tip over going into a turn. These perceptions can easily be manipulated through knowledge of the variables and relationships involved, such as the effect of camera height. Designers can use this knowledge to create systems that promote desirable behaviors and limit dangerous or unproductive behaviors.

Keywords
Synthetic environments, telepresence, virtual reality, speed perception, design paradigms, display semantics

Permission to make digital or hard copies of all or part of this work for personal or classroom use is granted without fee provided that copies are not made or distributed for profit or commercial advantage and that copies bear this notice and the full citation on the first page. To copy otherwise, or republish, to post on servers or to redistribute to lists, requires prior specific permission and/or a fee. DIS ’00, Brooklyn, New York. Copyright 2000 ACM 1-58113-219-0/00/0008…$5.00.

1. INTRODUCTION
Telepresence and virtual reality have the potential to save humankind enormous amounts of physical resources and stress (in terms of time, travel, and pollution) while increasing our feelings of connection to others. This can only happen, however, when interaction by telepresence is just as desirable as face-to-face communication. To make that interaction desirable, designers will have to create human-computer interfaces that are much more intuitive and natural than present interfaces.

It also means we will have to expand our concept of telepresence, which is usually associated with working in remote and dangerous environments, and virtual reality, which is associated almost exclusively with games and entertainment. It is far more useful to conceive of synthetic environments, where humans and computers combine efforts to extend human presence and perform work remotely. Synthetic environments include a broad range of human activities, from e-commerce to data visualization.

Many practitioners take a systems-based approach to the design of synthetic environments. Their vision is to artificially produce the environmental stimuli in enough detail that humans experience total sensory immersion in the synthetic environment and, ultimately, emotional immersion, or presence. The logical goal of such an approach is to increase fidelity of representation until the human user cannot distinguish between reality and the computer-mediated display. This idea has been fueled by many years of watching the crew of Star Trek romp in their holodeck.

While such high-fidelity systems may have entertainment qualities, would such systems really enable us to work naturally in synthetic environments? Consider the following problem areas:

Excessive bandwidth. The human perceptual system is sensitive to both extremely high resolution and broad ranges of stimuli, but attends to only limited amounts of the available information at any one time. We need to understand how to best match sensory information to the informational needs of a task.

Reality kills. Many environments (for example, distant planets or extremely high speed) can kill. These environments cannot be experienced directly. We need to understand how to best translate environmental information into safe subjective experience.

Control lag. Control lag (especially beyond 50 ms) negatively affects a human’s ability to control a system directly. For many situations (e.g., a probe on a distant planet), humans will have to instruct an agent to do a task, then monitor the results. We need to understand how to best communicate between humans and machines during remote tasks.

Boring tasks. Many tasks are repetitive and routine. Why should a human do these tasks remotely to maintain the illusion of presence? We need to understand how machines and humans can cooperatively share remote tasks.

Constrained reality. Humans have evolved to live in and experience a certain environment. Yet, one might wish to experience the ocean as a fish, rather than as a submarine passenger. We need to understand how best to interpret remote environments to form new subjective experiences for humans.

Off-task stimuli. The environment is full of stimuli that have no useful impact on the performance of a task. Why display irrelevant material, particularly if it could distract from the task? We need to understand how to best filter sensory information to achieve maximal performance.

Individual differences. Humans vary greatly in physical and mental capabilities and in styles of interaction with their environment. However, economy demands a universal approach to the design of devices for synthetic environments. We need to understand how to make synthetic environments both universal and adaptive to the individual.

More importantly, we should aim to design a single interface that makes it possible for a human to control many different kinds of devices without requiring them to change their deeply embedded behaviors and responses.
We should not have to retrain, for example, to drive a microscopic vehicle when our driving responses are attuned to automobiles.

Any design scheme will have to address these problem areas. But we believe that total sensory immersion as a design requirement is not desirable. What we want instead is a way to create experiences that are psychologically, not virtually, real for humans. Our goal is not reproduction of reality, but the production of a medium in which machines communicate task-specific information in ways that create an intended subjective experience. This kind of experience would enable humans’ vast perceptual capabilities to act in holistic, parallel, and intuitive manners.

2. NEW METAPHORS FOR DESIGN
One possible new design metaphor for synthetic environments is display semantics. Display semantics is our term for how humans understand the meaning of elements in any kind of display device that connects them to a computer. As computing becomes more ubiquitous, display devices will take many forms, from interactive multimedia kiosks to devices that we wear on our bodies. The traditional paradigm for human-computer display, however, is mired at the stage of “informational” displays, like digital or analog gauges. These displays require humans to calculate and interpret information in order to perform a task, adding to the cognitive load already imposed by interacting with the computer. We can, alternatively, create displays that offer “environmental” information by recreating the stimuli that we attend to in the natural world and leaving it to human expertise in recognizing and predicting patterns, and in picking out salient cues, to extract meaning instinctively.

We expect that synthetic environments will undergo a surge of design activity in the next few years. Before this activity begins, designers should know the constraints and psychological principles that will produce realistic subjective experience. Without direction, design decisions are likely to be made that will be far more expensive than they have to be, and what’s more, may limit what we can accomplish with synthetic environments until technology improvements can provide sufficient bandwidth.

As a case in point, we should not count too much on our intuitions about what makes a scene seem psychologically real. Convincing research on synthetic environments requires us to examine how humans really perceive such psychological variables as speed of self-motion.

2.1 Display semantics effects on perception of speed
At this university’s applied cognitive science program, one of our concentrations in synthetic environments research is perception of speed. Speed in this sense is a psychological measure of rate of self-motion, rather than the physical measure of velocity, or distance traveled over time. Perceived speed affects all our responses in controlling remote devices, from the smallest nanobot to the largest mining vehicle. It is a factor in judging braking distance, rate of turning, and the time available for making decisions. Realistically perceiving speed will also make gaming more natural and thrilling.

In exploring how display semantics affects human speed perception, our research shows a progression from measuring perception to actually capturing decisions and behavior.

2.1.1 Effects of view height
Changing the scale between normal driving height and a remote vehicle changes the geometric relationships in the field of view, which may affect the driver’s perceived speed. For example, in our research we use a small (1/10-scale) radio-controlled car we call a “telebot,” connected to a computer workstation and equipped with a small television camera. The reduced scale of the telebot lowers the view height by lowering the camera. This change in height impacts the vertical angles in the view, but not the horizontal angles. For example, the rocks in the road underneath the driver are closer and thus subtend greater visual angles, while the trunks of trees at the side of the road subtend the same viewing angles.

Figure 1. Research “telebot”

The exact impact of camera view height on judging the telebot’s speed depends on how humans perceive speed in the context of viewing movement in a real-world environment through a display device. Current theories of visual perception hold that humans use two major sources of visual information in perceiving self-motion: discontinuity (or edge) rate, and global optical flow rate [1], [3], [8]. Discontinuity rate is the rate at which edges pass a fixed reference point in the field of view. Global optical flow rate is the velocity of forward motion scaled in altitude units, or eyeheights [10]. These visual cues are direct perceptual cues that pertain only to the optical array.

Global optical flow rate explains perceived speed as a function of optic flow in the visual field [4], [5], [6]. In estimating speed, people would note how the angular speed of textural patterns changes as a result of the lower view height of the telebot. The most important optic flow for driving is the locomotor flow line, or the flow of the textural field underneath the observer. The angular speed of this flow line is inversely proportional to view height, so lowering the view height raises the angular speed by the same factor. This means that to maintain similar optic flow, and therefore perceived speed, we must reduce speed as we reduce view height. In fact, the proportional relationship is equal to the scale of the change in view height. For example, if we lower the view height by half, then the vehicle only needs to go half as fast to create the same flow pattern, and thus the same perceived speed, for the observer.

However, because our conception of display semantics in synthetic environments predicts that people will instinctively attempt to extract the maximum information from an environmental display, we might equally suppose that people use all available cues to judge self-motion, including perceived distance cues such as the known relationships between physical size of known objects, angles, etc. Even the use of the discontinuity rate is based on perceived distance cues. People would have to recognize the sources of the edges that move through their field of view, such as cars, trees, hills, or highway markings, and know their sizes, in order to judge the distances between them.

It should also be noted that eyeheight as a determinant in global optical flow rate is not a useful concept in synthetic environments. The eyeheight of the observer sitting in front of the display device is fixed; instead, the height of the sensor at the time of recording the synthetic environment varies. It is worth examining whether “virtual” eyeheight serves the same function in determining global optical flow rate. We might ask, then, which kinds of cues predominate in synthetic environments. This knowledge would enable designers to predict people’s perceived speed relatively accurately. More importantly, we can predict whether perceived speed scales at the same rate as the virtual eyeheight.

Discontinuity rate does not depend on altitude or view height, but does depend on actual velocity and textural density [3]. If people use discontinuity rate as a primary cue, we can predict that they would not perceive a difference in speed as a result of changing virtual eyeheight. However, view height does affect global optical flow rate. Thus the use of global optical flow rate as a primary cue predicts that the velocity needed to produce a perceived speed should scale to the virtual eyeheight. In particular, any reduction in virtual eyeheight should be matched by a similar reduction in velocity to maintain a constant perceived speed.

2.2 An Experiment in Perception
One of the goals of our first project was to explore which kind of cues, global optical flow rate or discontinuity rate, better predict people’s perception of speed. We wanted to confirm that perceived speed scales at the same rate as virtual eyeheight. We asked people to estimate the speed they experienced while watching video clips of forward motion taken from two different virtual eyeheights.

2.2.1 Procedure
Seven video clips of forward motion, three from the telebot and four from a Volkswagen Fox, were presented to fifteen graduate and undergraduate students from the university. The telebot’s camera was mounted about 16 inches (in.) from road level, while the virtual eyeheight from the Volkswagen was approximately 42 in. from road level. The telebot velocities were approximately 3, 6, and 9 miles per hour (mph). The Volkswagen velocities were 10, 20, 40, and 60 mph.

Participants were instructed to assign any number they chose (not necessarily a speed in mph) to the first video clip, then make all subsequent comparisons based on that first number. They could use any numbers they thought appropriate, a method known as modulus-free magnitude estimation [2].

2.2.2 Results
Each of the fifteen participants responded three times to seven different video clips, for a total of 21 responses per participant. Because variability was an issue with the absence of a standard modulus, we performed Engen’s [2] logarithmic transformations on the data to minimize inter- and intra-subject variability.

We first fitted a regression line to the data where the x-values, or velocities, were unscaled for both telebot and car. The resulting model was significant (F(1, 5) = 11.88, p = .02, adjusted R² = .64). However, it was not a particularly good fit. Based on these results, we assumed that the unscaled data were not very useful for predicting magnitude estimates of telebot speed.

We then multiplied the velocities for the telebot by the scale factor of 2.625, corresponding to the 2.625:1 ratio between the virtual eyeheights of the car and telebot. The adjustment served to collapse the difference of the perceived locomotor flow line between the two virtual eyeheights. This time, the regression model was a much better fit; it was significant (F(1, 5) = 115.53, p = .0001) and the corresponding adjusted R² was .95. Figure 2 shows the logarithmic magnitude estimates for the realigned data plotted against logarithmic velocity, and the regression line.

Figure 2. Speed estimates for scaled data. [Log-log plot titled “Speed Estimates for Realigned Data Plotted in Log-Log Scales”: mean magnitude estimate versus velocity (mph), with series for telebot, car, and predicted values.]

Through further data analysis (fitting to Stevens’ [9] power function for describing relationships between objective and subjective quantities), we found that the following equation described the relationship between perceived speed and velocity very well:

$$\text{Perceived speed} = 1.13\,(\text{velocity} \times \text{scale factor})^{0.86}$$

where perceived speed is the subjective quantity, 1.13 is a constant, velocity is the objective measure, scale factor is 2.625 for telebot velocities and 1.0 for car velocities, and .86 is the slope of the line obtained when magnitude estimates of perceived speed and velocities are plotted together on log-log axes. In this case, the constant is very close to 1, which verifies the assumption that people’s estimates for perceived speed at zero mph actually are zero.

In this experiment, perceived speed is related to velocity by an exponent of .86. This equation accounts for about 95% of the variance in the speed estimates we collected (adjusted R² = .95). This is a useful fact that designers can employ to align the apparent velocity of the display to the velocity of a remote vehicle by the scale factor of their virtual eyeheights, at least for this configuration of automobile and telebot scales.

Thirty undergraduate students from the university participated in the experiment.
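The power function reported in Section 2.2.2 can be sketched in a few lines of Python. This is only an illustration under stated assumptions, not the authors' analysis code: the function names (`scale_factor`, `perceived_speed`, `velocity_for_perceived_speed`) are hypothetical, the constants 1.13 and 0.86 are the fitted values quoted in the text, and the result is in the arbitrary units of the participants' magnitude estimates. The inverse function is an addition for illustration: it is simply the algebraic inversion of the reported equation, showing how a designer might pick a vehicle velocity for a target perceived speed.

```python
# Fitted values quoted in Section 2.2.2; valid only for the car/telebot
# configuration described there.
CONSTANT = 1.13
EXPONENT = 0.86


def scale_factor(reference_eyeheight_in: float, camera_eyeheight_in: float) -> float:
    """Ratio of the reference (car) eyeheight to the camera's virtual eyeheight."""
    if reference_eyeheight_in <= 0 or camera_eyeheight_in <= 0:
        raise ValueError("eyeheights must be positive")
    return reference_eyeheight_in / camera_eyeheight_in


def perceived_speed(velocity_mph: float, scale: float = 1.0) -> float:
    """Perceived speed = 1.13 * (velocity * scale factor) ** 0.86."""
    if velocity_mph < 0 or scale <= 0:
        raise ValueError("velocity must be non-negative and scale positive")
    return CONSTANT * (velocity_mph * scale) ** EXPONENT


def velocity_for_perceived_speed(target: float, scale: float = 1.0) -> float:
    """Algebraic inverse of perceived_speed (hypothetical design helper)."""
    if target < 0 or scale <= 0:
        raise ValueError("target must be non-negative and scale positive")
    return (target / CONSTANT) ** (1.0 / EXPONENT) / scale


if __name__ == "__main__":
    telebot_scale = scale_factor(42.0, 16.0)  # 42 in. car vs. 16 in. telebot
    print("telebot scale factor:", telebot_scale)
    for v in (3.0, 6.0, 9.0):  # telebot velocities used in the experiment
        print(f"telebot at {v} mph -> estimate {perceived_speed(v, telebot_scale):.1f}")
    for v in (10.0, 20.0, 40.0, 60.0):  # car velocities used in the experiment
        print(f"car at {v} mph -> estimate {perceived_speed(v):.1f}")
```

With the eyeheights from Section 2.2.1, `scale_factor(42.0, 16.0)` reproduces the 2.625 used in the paper. Under this model, a telebot at 8 mph and a car at 21 mph map to the same estimate, because both have the same scaled velocity.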
2.3 An Experiment in Decisions and Behavior
Does this knowledge help designers predict how people actually behave while driving? Based on the previous experiment, we would have said yes. However, we continued to research how the ratio of virtual to actual eyeheight works when applied to a situation that stresses people’s perception of speed.

We designed a simulation where people could not drive a remote vehicle safely unless their perception was correctly mapped to an appropriate speed. We wanted to know if people’s perception of the stability of a vehicle going into a turn would also scale with virtual eyeheight. Based on driving experience, we intuitively felt that the higher the virtual eyeheight, the more likely people would feel that the vehicle would tip over in a turn. But from our previous results, we could infer that the perception of higher speed at lower virtual eyeheight would work against that feeling.

2.3.1 Procedure
This experiment used another kind of simulation device: an LGB-scale toy train set. The train provides a constant, well-regulated speed along a defined path. A tiny television camera was mounted at the front of the train, focused on a first-surface mirror held at about a 15° angle to the floor surface. This apparatus was used to provide three different virtual eyeheights of approximately 1”, 1.25”, and 1.5”.

Figure 3. Train equipped with television camera

The train moved along an oval track. We filmed the train traveling around the track both left and right.

Figure 4. View from train at 1”, 1.25”, and 1.5”

Six video clips of forward motion from the train, taken at the same speed setting, were presented to the participants in random order. The participants were asked to rate how likely the vehicle was to tip over on a scale from 1 to 6, where 1 = not at all likely to tip over and 6 = certain to tip over.

2.3.2 Results
Each participant responded once to each clip, for a total of 30 responses per clip. We found we were able to collapse the data for right- and left-handed movement, as these results were not significantly different (χ² = 5.375, df = 5, p = 0.3720).

The train was actually traveling at a speed of 3.1 miles per hour, but taking into account the virtual eyeheight of the camera, the train appeared to be traveling much faster. According to the equation from the previous experiment, at 1” the perceived speed of the train should have been 120 mph; at 1.25” it should have been 99 mph, and at 1.5” it should have been 83 mph.

Figure 5 shows the frequency of the participants’ ratings of how likely the train was to tip over at each of the three virtual eyeheights. The difference in ratings was highly significant (χ² = 50.222, df = 5, p < 0.0001).

Figure 5. Ratings of likelihood of tipping. [Bar chart titled “Likelihood that Vehicle Will Tip Over”: number of participants (0 to 30) by likelihood rating (1 to 6), with one series for each virtual eyeheight: 1”, 1.25”, and 1.5”.]

Participants thought the train was much more likely to tip over at the lowest virtual eyeheight (1”) than at the highest virtual eyeheight (1.5”). This means that they thought the train was less stable at lower height, which contradicts our intuition that vehicles should feel less stable the higher they are. But it is at lower height that people think vehicles are moving faster. This experiment bears out our previous findings, yet modifies them in an important way. Apparently, the display semantics of synthetic environments make directly perceived speed the more important visual cue in behavioral situations, over the indirect perception of vehicle stability as a function of height.

Interestingly, at least a few of the participants thought the train was likely to tip over at each of the virtual eyeheights. In this experiment, we did not find an eyeheight that all participants judged to be safe. Apparently perceived speeds of 83 to 120 mph are simply too fast to permit safe turns.
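Because the train's velocity was the same in every clip, the power function from the first experiment implies that the ratio of predicted perceived speeds at two camera heights depends only on the ratio of the virtual eyeheights, raised to the exponent .86. The sketch below illustrates that relationship. It assumes the scale factor is inversely proportional to virtual eyeheight, as in the car and telebot comparison; `perceived_speed_ratio` is a hypothetical helper name, not something from the study.

```python
EXPONENT = 0.86  # slope reported for the first experiment


def perceived_speed_ratio(eyeheight_a_in: float, eyeheight_b_in: float,
                          exponent: float = EXPONENT) -> float:
    """Predicted perceived speed at eyeheight A divided by that at eyeheight B.

    Assumes the same physical velocity at both heights and a scale factor
    inversely proportional to virtual eyeheight, so the velocity and the
    reference eyeheight cancel out of the ratio.
    """
    if eyeheight_a_in <= 0 or eyeheight_b_in <= 0:
        raise ValueError("eyeheights must be positive")
    return (eyeheight_b_in / eyeheight_a_in) ** exponent


if __name__ == "__main__":
    # The three virtual eyeheights used with the train, in inches.
    for low, high in ((1.0, 1.25), (1.0, 1.5), (1.25, 1.5)):
        ratio = perceived_speed_ratio(low, high)
        print(f"{low} in. vs. {high} in.: predicted ratio {ratio:.3f}")
```

These ratios can be set beside the ratios of the reported predictions (120, 99, and 83 mph) as a rough consistency check on how perceived speed falls off as the camera is raised.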
3. LESSONS FOR DESIGNERS
The results of these two experiments illustrate three important points that designers can use:

Vastly different physical realities can give rise to similar psychological realities or experiences. In the first experiment, the participants made judgments of their experience of speed for video captured from both a normal-sized car and a small, remote-controlled toy car. When we examine the graph of results from this experiment, we can see that participants found some of their speed experiences to be similar in both vehicles. That is, their judgments overlapped, although the physical realities were quite different and did not overlap at all.

Similar physical realities can give rise to vastly different subjective realities. In the second experiment, all the video clips were captured from one device, a toy train, traveling at the same velocity in all instances. The graph for the second experiment shows that the participants judged their risk of tipping over to be quite different. By simply manipulating camera height, we can convert participants’ judgments from “quite likely to tip” to “not at all likely to tip.”

View, or telecentric perspective, is a powerful variable that designers can use to create the subjective reality or impression of choice. The design impact of modeling and understanding the relationship of the physical to the psychological is that the designer can choose which subjective reality to instill in the observer (for example, that the vehicle is top-heavy) and accomplish that feat. In other words, one can design subjective experiences separately from the constraints of physical reality. The notion of designing subjective experience is at the heart of synthetic environments. The correct criterion to use in designing a synthetic experience is whether it gives rise to the correct or intended semantic interpretation, not some arbitrary mapping between reality and the display.

Changing the design criterion from replication of physical reality to a designed reality gives us new tools with which to attack the problem areas that still plague the development of synthetic environments. It is easy to think of ways that one might use these tools to make a system that is more tuned to an individual’s needs. We might also consider how an experience could be made to seem less boring (perhaps by increasing perceived speed), or how to make operators perform more safely by increasing their perceived risk. Even the need for video bandwidth and input control quickness should be related to perceived speed. An operator may be more tolerant of poor video quality, dropouts, and control lags if perceived speed is slower.

4. ACKNOWLEDGEMENTS
We gratefully acknowledge the contributions of the following students, who carried out significant portions of the studies described: Brian M. Casey, Gregory M. Phoenix, Eric Stein, Gabrielle Mahar, Jennifer Janezic, and Corey Caughey.

5. REFERENCES
[1] Dyre, B. P., “Perception of Accelerating Self-Motion: Global Optical Flow Rate Dominates Discontinuity Rate,” Proceedings of the Human Factors and Ergonomics Society 41st Annual Meeting (pp. 1333-1337), Human Factors and Ergonomics Society, Santa Monica, CA (1997).

[2] Engen, T., “Scaling Methods,” in J. W. Kling and L. A. Riggs (eds.), Woodworth & Schlosberg’s Experimental Psychology, 3rd ed., Holt, Rinehart & Winston, New York (1971).

[3] Larish, J. F. and J. M. Flach, “Sources of Optical Information Useful for Perception of Speed of Rectilinear Self-Motion,” Journal of Experimental Psychology: Human Perception and Performance, 16(2), 295-302 (1990).

[4] Lee, D. N., “Visual Information During Locomotion,” in R. B. MacLeod and H. L. Pick, Jr. (eds.), Perception: Essays in Honor of J. J. Gibson (pp. 250-267), Cornell University Press, Ithaca, NY (1974).

[5] Lee, D. N., “A Theory of Visual Control of Braking Based on Information about Time to Collision,” Perception, 5, 437-459 (1976).

[6] Lee, D. N., “The Optic Flow Field: The Foundation of Vision,” Transactions of the Royal Society, 290B, 169-179 (1980).

[7] Noel, R. W., C. M. Hunter, B. M. Casey, and G. M. Phoenix, “Telepresence Effects on the Relationship of Velocity to Perceived Speed,” Proceedings of the Human Factors and Ergonomics Society 43rd Annual Meeting (pp. 1247-1250), Human Factors and Ergonomics Society, Santa Monica, CA (1999).

[8] Owen, D. H., R. Warren, R. S. Jensen, S. J. Mangold, and L. J. Hettinger, “Optical Information for Detecting Loss in One’s Own Forward Speed,” Acta Psychologica, 48, 203-213 (1981).

[9] Stevens, S. S., “On the Psychophysical Law,” Psychological Review, 64, 153-181 (1957).

[10] Warren, R., Optical Transformations during Movement: Review of the Optical Concomitants of Egospeed (Final technical report for Grant No. AFOSR-81-0108), Ohio State University, Department of Psychology, Aviation Psychology Laboratory, Columbus, OH (1982).