Statistical Power of Psychological Research


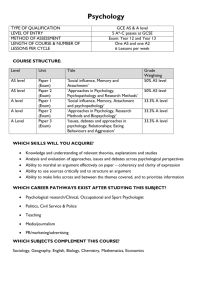

Journal of Consulting and Clinical Psychology
1990, Vol. 58, No. 5, 646-656
Copyright 1990 by the American Psychological Association, Inc. 0022-006X/90/$00.75

METHODOLOGICAL CONTRIBUTION TO CLINICAL RESEARCH

Statistical Power of Psychological Research: What Have We Gained in 20 Years?

Joseph S. Rossi
Cancer Prevention Research Center
University of Rhode Island

This document is copyrighted by the American Psychological Association or one of its allied publishers. This article is intended solely for the personal use of the individual user and is not to be disseminated broadly.

Power was calculated for 6,155 statistical tests in 221 journal articles published in the 1982 volumes of the Journal of Abnormal Psychology, Journal of Consulting and Clinical Psychology, and Journal of Personality and Social Psychology. Power to detect small, medium, and large effects was .17, .57, and .83, respectively. Twenty years after Cohen (1962) conducted the first power survey, the power of psychological research is still low. The implications of these results concerning the proliferation of Type I errors in the published literature, the failure of replication studies, and the interpretation of null (negative) results are emphasized. An example is given of the use of power analysis to help interpret null results by setting probable upper bounds on the magnitudes of effects. Limitations of statistical power analyses, suggestions for future research, sources of computational information, and recommendations for improving power are discussed.

Portions of this article are based on a doctoral dissertation submitted at the University of Rhode Island. I thank the members of my committee, Charles E. Collyer, Janet Kulberg, and Peter F. Merenda, for their support and guidance. I also thank Colleen A. Redding and Susan R. Rossi for helpful discussions and critical readings of the manuscript.
Portions of the data were presented at the annual conventions of the Eastern Psychological Association, Baltimore, Maryland, April 1984; the American Association for the Advancement of Science, New York, New York, May 1984; the Psychometric Society, Toronto, Ontario, Canada, June 1986; and the American Psychological Association, Atlanta, Georgia, August 1988.
This research was supported, in part, by Grants CA27821, CA50087, and CA51690 from the National Cancer Institute. Computing resources were provided by the Academic Computer Center of the University of Rhode Island and by a Biomedical Research Support Grant to Joseph S. Rossi.
Correspondence concerning this article should be addressed to Joseph S. Rossi, Cancer Prevention Research Center, Department of Psychology, University of Rhode Island, Kingston, Rhode Island 02881-0808.

The power of a statistical test is the probability that the test will correctly reject the null hypothesis. Because the aim of behavioral research is to discover important relations between variables, a consideration of power might be regarded as a natural and important part of the planning and interpretation of research. Cohen (1977) has expressed the point aptly: "Since statistical significance is so earnestly sought and devoutly wished for by behavioral scientists, one would think that the a priori probability of its accomplishment would be routinely determined and well understood" (p. 1). Unfortunately, this seems not to be the case, and it is probably not an exaggeration to assert that most researchers know little more about statistical power than its definition, even though a routine consideration of power has several beneficial consequences.

1. Knowledge of the power of a statistical test indicates the likelihood of obtaining a statistically significant result. Presumably, most researchers would not want to conduct an investigation of low statistical power. The time, effort, and resources required to conduct research are usually sufficiently great that a reasonable chance of obtaining a successful outcome is at least implicitly desirable. Thus, if a priori power estimates are low, the researcher might elect either to increase power or to abandon the proposed research altogether if the costs of increasing power are too high, or if the costs of conducting research of low power cannot be justified.

2. Knowledge of the power of a statistical test facilitates interpretation of null results. It is often stressed in textbooks, although seldom adhered to in practice, that failure to reject the null hypothesis does not mean that the null hypothesis is true, only that there is insufficient evidence to reject the null. In fact, the proper interpretation of a null result is conditional on the power of the test. If power was low, then it is reasonable to suggest that, a priori, there was not a fair chance of rejecting the null hypothesis and that the failure to reject the null should not weigh so heavily against the alternative hypothesis (the "alibi" use of power). However, if power was high, then failure to reject the null can, within limits, be considered as an affirmation of the null hypothesis, because the probability of a Type II error must be low. Thus, in the same way that a statistically significant test result permits the rejection of the null hypothesis with only a small probability of error (alpha, the Type I error rate), high power permits the rejection of the alternative hypothesis with a relatively small probability of error (beta, the Type II error rate). The qualification on accepting the null hypothesis involves the concept of probable upper bounds on effect sizes and will be addressed in greater detail in the Discussion section.

3.
Statistical power provides insight concerning entire research domains. When the average statistical power of an entire research literature is low, the veracity of even statistically significant results may be questioned, because the probability of rejecting a true null hypothesis may then be only slightly smaller than the probability of rejecting the null hypothesis when the alternative is true (Bakan, 1966). Thus, a substantial proportion of published significant results may be Type I errors. When power is marginal (e.g., approximately .50), an inconsistent pattern of results may be obtained in which some studies yield significant results while others do not (Kazdin & Bass, 1989; Rossi, 1982, 1986).

This brief survey of the value of power analysis raises the question of why researchers ignore an issue of such clear and obvious merit. Indeed, the benefits of a routine consideration of statistical power may strike some readers as almost too good to be true. Certainly researchers have been admonished periodically to pay more attention to statistical power and less to statistical significance (Cohen, 1965; Greenwald, 1975; Rosnow & Rosenthal, 1989). Yet historically, behavioral researchers have been concerned primarily with Type I errors and the associated concept of statistical "significance" and have largely ignored the Type II error and its concomitant, statistical power (Chase & Tucker, 1976; Cowles & Davis, 1982; Taylor, 1959).

Cohen's Power Survey

What effect has the lack of attention to statistical power had on the power of research? Cohen (1962) was the first to conduct a systematic survey of statistical power, and his paper is frequently credited with introducing power into the literature of the social sciences (but for an earlier account see Mosteller & Bush, 1954). Cohen (1962) surveyed all of the articles published in the Journal of Abnormal and Social Psychology (JASP) for the year 1960. Eliminated from consideration were articles in which no statistical tests were conducted (e.g., case reports, factor-analytic studies). Also eliminated from the survey were statistical tests considered to be peripheral, that is, tests that did not address major hypotheses.

The determination of power was not without difficulty. Power is determined by three separate factors: sample size, alpha level, and effect size; and all three factors must be known to estimate the power of a test. Sample sizes were (usually) determined easily from the research report, and alpha was assumed to be .05 and nondirectional. However, the estimation of effect size proved to be a major difficulty, because authors never reported expected population effect sizes. Therefore, Cohen (1962) elected to estimate the power of each statistical test for a range of effect sizes: small, medium, and large. This procedure resulted in three power estimates for each of 2,088 statistical tests. Power for each journal article was determined by averaging across all of the statistical tests reported in an article, and the power of abnormal-social psychological research as a whole was estimated by averaging the results of the 70 journal articles included in the survey. The average statistical power for the JASP articles was .18 to detect small effects, .48 for medium effects, and .83 for large effects.

Results of Other Power Surveys

Numerous power surveys have been conducted since Cohen (1962), no doubt encouraged by the publication of Cohen's (1969, 1977) convenient power tables. These surveys represent a wide variety of disciplines (see Table 1) and have closely followed the procedures described by Cohen (1962). The principal difference in method between Cohen's (1962) survey and those conducted by later investigators concerns the definitions of effect size. Changes in these definitions were suggested by Cohen (1969) himself so that the definitions of small, medium, and large effects would be more consistent across different types of statistical tests. The differences between the earlier and later definitions of effect size are relatively minor, but they deserve note. For example, for the t test the definitions were changed from 0.25, 0.50, and 1.00 standard deviation units to 0.20, 0.50, and 0.80 standard deviation units for small, medium, and large effects, respectively. For the Pearson correlation coefficient, the definitions were changed from correlations of .20, .40, and .60 to correlations of .10, .30, and .50 for small, medium, and large effects, respectively.

Because these changes resulted in smaller effect size definitions, a decrease in power might have been expected for subsequent power surveys. Instead, results were generally similar to those obtained by Cohen (1962; see Table 1). With few exceptions, most surveys produced remarkably consistent results, especially given the diverse nature of the research fields covered. The average statistical power for all 25 power surveys (including Cohen's) was .26 for small effects, .64 for medium effects, and .85 for large effects and was based on 40,000 statistical tests published in over 1,500 journal articles.

Power of Psychological Research

The low statistical power indicated by the results of these surveys has created some concern among methodologists about the health of psychological research, especially with respect to the possible inflation of Type I error rates and the interpretation of null results (e.g., Bakan, 1966; Cohen, 1965; Greenwald, 1975; Overall, 1969; Tversky & Kahneman, 1971). The irony of these results is that the low statistical power often attributed to psychological research is based primarily on power surveys of research in other disciplines (see Table 1) and on Cohen's results, which were gathered over 25 years ago. Since the publication of Cohen's survey, only two other power surveys of psychological research have been conducted. Sedlmeier and Gigerenzer (1989) surveyed the power of research reported in the Journal of Abnormal Psychology. Using Cohen's (1962) original effect size definitions, they found mean power values of .21, .50, and .84, respectively, for small, medium, and large effect sizes, almost identical to those reported by Cohen. These results are disappointing, because they suggest that there has been no increase in statistical power since Cohen's survey.

The survey conducted by Chase and Chase (1976) for the Journal of Applied Psychology is somewhat more encouraging. Using Cohen's (1969) smaller effect size definitions, Chase and Chase (1976) nevertheless found substantially greater power for medium-sized effects (.67) than either Cohen (1962) or Sedlmeier and Gigerenzer (1989). Unfortunately, the results obtained by Chase and Chase (1976) may not be representative of research in other areas of psychology. In particular, the sample sizes characteristic of studies in the Journal of Applied Psychology are substantially larger than the sample sizes typically used
in most psychological research. Average sample size in the Journal of Applied Psychology is approximately 350 (Muchinsky, 1979) but is only about 50 or 60 in most other psychological journals (Holmes, 1979).

[Table 1: summary of previously conducted power surveys; table contents not recoverable from the extracted text]

The purpose of the research reported here was to provide a more current and representative assessment of the power of psychological research. Three journals covering a wide range of psychological research were selected for the survey: the Journal of Abnormal Psychology, the Journal of Consulting and Clinical Psychology, and the Journal of Personality and Social Psychology. The Journal of Abnormal Psychology and the Journal of Personality and Social Psychology are the direct journal "descendents" of the Journal of Abnormal and Social Psychology, the journal examined by Cohen (1962). Thus, a second purpose of this study was to provide a direct comparison to Cohen's results, to discover what changes have occurred over the past 20 years in the power of psychological research.

Method

Selection Procedures: Journal Articles

All of the articles published in the Journal of Abnormal Psychology, 1982, Volume 91; the Journal of Consulting and Clinical Psychology, 1982, Volume 50; and the Journal of Personality and Social Psychology, 1982, Volume 42, were examined, and articles not reporting any statistical tests were eliminated from the study. In addition, some articles were excluded because they contained statistical methods for which power could not be determined.

Selection Procedures: Statistical Tests

As in previous surveys, a distinction was made between major and peripheral statistical tests. Major tests were those that bore directly on the research hypotheses of the study, whereas peripheral tests did not. Peripheral tests, which were excluded from the survey, included all of the correlation coefficients of a factor analysis, unhypothesized higher order analysis of variance interactions, manipulation checks, interrater reliability coefficients, reliabilities of psychometric tests (internal consistency, test-retest), post hoc analysis of variance procedures (means comparisons tests, simple effects), and tests of statistical assumptions.

For major statistical tests, power was determined for the following: t test, Pearson r, z test for the difference between two independent correlation coefficients, sign test, z test for the difference between two independent proportions, chi-square test, F test in the analysis of variance and covariance, and multiple regression F test. Primarily excluded from the survey were most nonparametric techniques (e.g., Mann-Whitney U, rank order correlation tests), multivariate methods (e.g., canonical correlation, multivariate analysis of variance), and various other methods for which the concept of power is not directly relevant (e.g., factor analysis, cluster analysis, and multidimensional scaling).

At the time of Cohen's (1962) study, nonparametric techniques were much more popular than they are now, whereas the use of multivariate techniques has increased. Because the power characteristics of many nonparametric statistics were unavailable, Cohen (1962) substituted power estimates for the analogous parametric tests (e.g., t test for the Mann-Whitney U test). This procedure seems problematical in that it overestimates power in many situations. Because the frequency of use of nonparametric methods is now quite low, Cohen's procedure was not continued in the present survey. Multivariate statistics were also excluded, because neither convenient computer algorithms nor suitable power tables for most multivariate techniques were available at the time the survey was conducted. It is unlikely that the inclusion of either nonparametric or multivariate statistical methods would have affected the results of the power survey.

Determination of Statistical Power

Cohen's (1977) tables were used to determine power for sign tests and chi-square tests. Tables and formulas in Rossi (1985b) were used to determine power for z tests for the difference between independent correlation coefficients and for z tests for the difference between independent proportions. The tabled values in these sources are accurate to about one digit in the second decimal place when compared with exact values.

Computer programs were written by the author to expedite the determination of power for the remaining tests (t, r, and F). This was done because of the frequency of occurrence of these statistics and to avoid the interpolation errors inevitably encountered with the use of tables or charts. All programs were written in double precision IBM BASIC (version 3.10) to run on an IBM PC/AT microcomputer. The computer program to determine the power of the t test was based on the normal approximation to the noncentral t distribution given by Cohen (1977). Because this formula assumes that sample sizes are equal, it was modified slightly to permit unequal n power calculations. The determination of power for the Pearson correlation coefficient was based on the normal score approximation for r provided by the hyperbolic arctangent transformation, plus a correction factor for small sample sizes (Cohen, 1977). The cube root normal approximation of the noncentral F distribution was used for the analysis of variance power program (Laubscher, 1960).
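As a rough illustration of the kind of calculation described above for the t test and the Pearson r, the following Python sketch computes approximate power from a normal approximation. It is not the author's IBM BASIC program. It uses the simple large-sample normal approximation rather than Cohen's (1977) formula, omits the small-sample correction for r, handles unequal group sizes through the harmonic mean (an assumption; the article does not say how its formula was modified), and relies on Python's standard-library normal distribution rather than the Abramowitz and Stegun algorithm. All function names are hypothetical.

```python
from math import atanh, sqrt
from statistics import NormalDist

_STD_NORMAL = NormalDist()  # standard normal: mean 0, SD 1


def power_from_shift(delta, alpha=0.05):
    """Two-tailed power when the test statistic is roughly N(delta, 1)."""
    z_crit = _STD_NORMAL.inv_cdf(1 - alpha / 2)
    # Probability of landing in either rejection region.
    return _STD_NORMAL.cdf(delta - z_crit) + _STD_NORMAL.cdf(-delta - z_crit)


def power_t_test(d, n1, n2, alpha=0.05):
    """Approximate power of an independent-groups t test for effect size d."""
    # Assumption: unequal group sizes are handled with the harmonic mean.
    n_harmonic = 2 * n1 * n2 / (n1 + n2)
    return power_from_shift(d * sqrt(n_harmonic / 2), alpha)


def power_pearson_r(r, n, alpha=0.05):
    """Approximate power for testing r = 0 via the arctanh transformation."""
    # No small-sample correction is applied in this sketch.
    return power_from_shift(atanh(r) * sqrt(n - 3), alpha)


if __name__ == "__main__":
    # Cohen's later small, medium, and large definitions; sample sizes are
    # hypothetical and chosen only for illustration.
    for d in (0.20, 0.50, 0.80):
        print(f"t test, d = {d:.2f}, n = 30 per group: {power_t_test(d, 30, 30):.2f}")
    for r in (0.10, 0.30, 0.50):
        print(f"Pearson r = {r:.2f}, n = 60: {power_pearson_r(r, 60):.2f}")
```

With an effect size of zero the function returns alpha, which matches the point made later in the article that a test of a true null hypothesis rejects at the nominal rate.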
The accuracy of this cube root approximation has been found to be quite good, with errors appearing only in the 3rd or 4th decimal places for α = .05 (Cohen & Nee, 1987). Although more accurate approximations exist, the small gain in precision did not justify the additional computational complexity. For all computer programs, normal score approximations were converted to probability (power) values using formula 26.2.19 in Abramowitz and Stegun (1965, p. 932), who give the accuracy of the algorithm as ±1.5 × 10⁻⁷. The resulting power values agreed to two decimal places with those obtained from Cohen's (1977) power tables and a recently available computer program (Borenstein & Cohen, 1988).

Results

A total of 312 articles were examined, 58 in the Journal of Abnormal Psychology (JAP), 146 in the Journal of Consulting and Clinical Psychology (JCCP), and 108 in the Journal of Personality and Social Psychology (JPSP). Of the total, 91 articles were excluded: 9 in JAP, 68 in JCCP, and 14 in JPSP. The majority of excluded articles reported no statistical tests at all (n = 62). Only 29 articles contained statistical tests for which power was not or could not be determined (primarily data reduction techniques, such as cluster analysis and factor analysis). The large number of excluded articles for JCCP was due to the publication of a special issue of the journal containing only review articles. Power was determined for statistical tests reported in the remaining 221 articles: 49 in JAP, 78 in JCCP, and 94 in JPSP.

The total number of tests for which power was calculated was 6,155: 1,289 in JAP, 2,231 in JCCP, and 2,635 in JPSP. The frequency of occurrence for each test is given in Table 2. The sample is dominated by the traditional statistical tests: Pearson r, analysis of variance, and the t test, which constituted 90% of the reported techniques. Because this tabulation excludes tests for which power was not determined, the listed frequencies are not necessarily representative of the use of statistics in psychological research (see Moore, 1981).

Table 2
Frequency Distribution for Statistical Tests in Power Survey

| Statistical test | JAP Freq. | JAP % | JCCP Freq. | JCCP % | JPSP Freq. | JPSP % | Total Freq. | Total % |
|---|---|---|---|---|---|---|---|---|
| F test: ANOVA and ANCOVA | 456 | 35.4 | 1,083 | 48.5 | 1,099 | 41.7 | 2,638 | 42.9 |
| Pearson r | 430 | 33.4 | 708 | 31.7 | 990 | 37.6 | 2,128 | 34.6 |
| t test | 194 | 15.1 | 142 | 6.4 | 416 | 15.8 | 752 | 12.2 |
| Chi-square test | 133 | 10.3 | 227 | 10.2 | 40 | 1.5 | 400 | 6.5 |
| F test: multiple regression | 75 | 5.8 | 27 | 1.2 | 52 | 2.0 | 154 | 2.5 |
| z test: difference between rs | 1 | 0.1 | 41 | 1.8 | 20 | 0.8 | 62 | 1.0 |
| z test: difference between ps | 0 | 0 | 3 | 0.1 | 14 | 0.5 | 17 | 0.3 |
| Sign test | 0 | 0 | 0 | 0 | 4 | 0.2 | 4 | 0.1 |
| Total | 1,289 | | 2,231 | | 2,635 | | 6,155 | |

Note. JAP = Journal of Abnormal Psychology; JCCP = Journal of Consulting and Clinical Psychology; JPSP = Journal of Personality and Social Psychology. Freq. = frequency of occurrence. ANOVA = analysis of variance; ANCOVA = analysis of covariance.

Because the number of statistical tests appearing in each journal article varied greatly (from 1 to 256), the article was used as the unit of analysis.¹ Power was determined by averaging across the statistical tests reported in the article so that each article contributed equally to the overall power assessment of the journal literature (following Cohen, 1962). Six separate power estimates were made for each statistical test. Three estimates were based on Cohen's (1962) original definitions of small, medium, and large effects. A second set of estimates was based on Cohen's (1969, 1977) "modern" definitions. In most practical respects, power did not depend greatly on which definitions were used. Therefore, except where noted below, only the results that were based on Cohen's more recent and frequently used definitions will be reported. An alpha level of .05 (two-tailed) was assumed for all power calculations.

The mean power to detect small, medium, and large effects for all 221 journal articles is given in Table 3. These results are collapsed across journals, inasmuch as a comparison of the results for the three journals revealed very similar levels of statistical power (see Table 4). The average statistical power combined across journals was .17 to detect small effects, .57 to detect medium effects, and .83 to detect large effects. Compared with the results of other surveys (see Table 1), these results suggest somewhat lower statistical power for JAP, JCCP, and JPSP.² For small effects, t(220) = 9.39, p < .001, ω² = .283; for medium effects, t(220) = 4.33, p < .001, ω² = .074; and for large effects, t(220) = 2.24, p < .05, ω² = .018. In general, the magnitude of the discrepancy decreases with increasing effect size.

Power for the three journals was also computed using Cohen's (1962) earlier definitions of effect size, to ensure comparability with the results reported by Cohen (1962). Using these somewhat larger definitions, the mean power to detect small, medium, and large effects was .25, .60, and .90, respectively. Cohen (1962) reported results of .18, .48, and .83 for small, medium, and large effects. For all levels of effect size, the increases in power were statistically significant:³ For small effects, t(289) = 3.03, p < .01, ω² = .027; for medium effects, t(289) = 3.71, p < .001, ω² = .042; and for large effects, t(289) = 3.80, p < .001, ω² = .044.

Table 3
Power of 221 Studies Published in the Journal of Abnormal Psychology, the Journal of Consulting and Clinical Psychology, and the Journal of Personality and Social Psychology

| Power | Small effects: Frequency | Small effects: Cumulative % | Medium effects: Frequency | Medium effects: Cumulative % | Large effects: Frequency | Large effects: Cumulative % |
|---|---|---|---|---|---|---|
| .99 | 2 | 100 | 14 | 100 | 45 | 100 |
| .95-.98 | - | 99 | 9 | 94 | 44 | 80 |
| .90-.94 | - | 99 | 6 | 90 | 19 | 60 |
| .80-.89 | 1 | 99 | 19 | 87 | 36 | 51 |
| .70-.79 | 2 | 99 | 29 | 78 | 24 | 35 |
| .60-.69 | 1 | 98 | 18 | 65 | 23 | 24 |
| .50-.59 | 3 | 97 | 24 | 57 | 16 | 14 |
| .40-.49 | 5 | 96 | 32 | 46 | 7 | 6 |
| .30-.39 | 8 | 94 | 34 | 32 | 7 | 3 |
| .20-.29 | 29 | 90 | 26 | 16 | - | - |
| .10-.19 | 100 | 77 | 10 | 5 | - | - |
| .05-.09 | 70 | 32 | - | - | - | - |

| Statistic | Small effects | Medium effects | Large effects |
|---|---|---|---|
| N | 221 | 221 | 221 |
| M | .17 | .57 | .83 |
| Mdn | .12 | .53 | .89 |
| SD | .14 | .25 | .18 |
| Q1 | .09 | .36 | .71 |
| Q3 | .18 | .77 | .98 |
| 95% confidence interval on M | .148-.186 | .533-.599 | .801-.849 |

Note. Q1 and Q3 are the first and third quartiles of each frequency distribution.

Table 4
Comparison of the Power of Studies Published in the Journal of Abnormal Psychology, the Journal of Consulting and Clinical Psychology, and the Journal of Personality and Social Psychology

| Journal | N | Small effects: M | Small effects: SD | Medium effects: M | Medium effects: SD | Large effects: M | Large effects: SD |
|---|---|---|---|---|---|---|---|
| JAP | 49 | .16 | .15 | .56 | .24 | .84 | .17 |
| JCCP | 78 | .18 | .17 | .58 | .27 | .83 | .19 |
| JPSP | 94 | .16 | .11 | .55 | .24 | .81 | .18 |

Note. JAP = Journal of Abnormal Psychology; JCCP = Journal of Consulting and Clinical Psychology; JPSP = Journal of Personality and Social Psychology.

¹ Use of either statistical tests or separate studies reported within articles as the unit of analysis gave results essentially identical to those found using articles as the unit of analysis.
² These are one-sample t tests using the mean power values of the 25 previously conducted power surveys reported in Table 1 as normative (population) estimates and compared with the mean power values reported in Table 3 for the 221 studies used in the present survey.
³ Two-sample (independent groups) t tests were used to compare the results of the 70 studies analyzed by Cohen (1962) with the 221 studies analyzed in the present survey. Standard deviations of the mean power values reported here are .08, .20, and .16 for small, medium, and large effect sizes, respectively, for Cohen (1962) and .19, .25, and .13 for small, medium, and large effect sizes, respectively, for the present survey.

Discussion

Power of Current Psychological Research Compared With Cohen's (1962) Survey

The results of this study seem to suggest that statistical power has increased slightly since Cohen (1962) conducted his survey. However, these increases are no cause for joy. In fact, the general character of the statistical power of psychological research remains the same today as it was then: Power to detect small effects continues to be poor, power to detect medium effects continues to be marginal, and power to detect large effects continues to be adequate. Furthermore, any increases in power are probably effectively eliminated by the increased use of alpha-adjusted procedures since Cohen's (1962) survey was conducted (Sedlmeier & Gigerenzer, 1989). Thus, the increases in power reported here do not appear to be practically meaningful.

Nevertheless, from a purely technical standpoint, these results are not entirely consistent with those recently reported by Sedlmeier and Gigerenzer (1989), who found no increase in statistical power compared with Cohen (1962). Unlike the present survey, which analyzed studies appearing in the 1982 volumes of three journals, Sedlmeier and Gigerenzer (1989) examined studies published in the 1984 volume of one journal, the Journal of Abnormal Psychology. However, in the current study all three journals yielded virtually identical results. Examining only the Journal of Abnormal Psychology results for the present survey and using Cohen's (1962) original effect size definitions, power to detect small, medium, and large effects was .24, .60, and .91, respectively, compared with .21, .50, and .84, respectively, obtained by Sedlmeier and Gigerenzer (1989). Power is somewhat greater for medium and large effects in the present survey. This discrepancy is somewhat puzzling, inasmuch as these results are based on the same journal published only two years apart. It is possible that there may be significant year-to-year variations in statistical power, although it is not clear why this should be so.

Power of Current Psychological Research Compared With the Power of Other Surveys

Power estimates that are based on Cohen's (1977) more recent and smaller effect size definitions are naturally somewhat lower than power estimates that are based on Cohen's (1962) earlier definitions. These results (Table 3) suggest that the power of current psychological research is somewhat lower than the power of research in other social and behavioral science disciplines (Table 1). However, it is likely that these differences are not very important. The disparity in power is most apparent for small effects, diminishing rapidly with increasing effect size. In general, the power of current psychological research is quite consistent with the range of results obtained by most of the power surveys shown in Table 1. Thus, the common tendency to generalize to psychological research the low power obtained by studies in other areas of social and behavioral research seems to have been justified.

Methodologists have typically recommended that researchers design their studies so that there is an 80% chance of detecting the effect under investigation (e.g., Cohen, 1965).
In this respect, the results of these power surveys are not encouraging. The average statistical power of research found in our survey exceeded .80 only for large effects, and more than a third (35%) of all studies were unable to attain this level of power even for large effects. More than 75% of all studies in the survey failed to achieve power of .80 for medium-sized effects, and almost half of the studies did not have even a 50% chance of detecting effects of this size. The prospects of detecting small effects are dismal: More than 90% of the surveyed studies had less than one chance in three of detecting a small effect.

Implications of Survey Results

Whether power is based on Cohen's earlier or later effect size definitions, the results of these power surveys are not encouraging. Although power may be adequate if a large effect is postulated, there is often no way to judge whether or not such an assumption is justified, because most researchers do not report obtained effect sizes. Surveys of the magnitude of effects in psychological research suggest that typical effect sizes may be no larger than Cohen's (1977) definition of a medium-sized effect (Cooper & Findley, 1982; Haase, Waechter, & Solomon, 1982). Even small effects can no longer be ignored in psychological research, thanks to the illuminating analyses provided by Abelson (1985) and Rosenthal and Rubin (1982) demonstrating the potential impact of such effects. The levels of statistical power found in the present survey have several serious consequences depending on how large we suppose population effect sizes are in psychological research.

What if effect sizes are small? Power for small effects was very low (.17) in our survey. Of course, it might be argued that the power of psychological research could not be this low, inasmuch as a large proportion of all published studies report statistically significant results (Greenwald, 1975; Sterling, 1959).
Unfortunately, not only does low power suggest that there may be a large number of Type II errors, but low power also suggests the possibility of a proliferation of Type I errors in the research literature. Consider a researcher who conducts an investigation for which the null hypothesis happens to be true. With effect size equal to zero, the probability of obtaining a statistically significant result will be equal to alpha, because there is no true alternative to detect. Should our unfortunate researcher (or more likely, other researchers) persist in conducting research in this area, in the long run significant results will occur at a rate of 5%, if alpha is .05. Assuming an editorial publication bias favoring statistically significant results, disproportionately more of the significant results are likely to be published, while the nonsignificant results will for the most part remain in "file drawers" (Rosenthal, 1979). Because the population effect size in this case is zero, 100% of all published significant results would be Type I errors, despite a Type I error rate of 5%! Although this situation is admittedly contrived, the situation with power greater than alpha but still low is not much better. When the power of research is low, the probability of rejecting a true null hypothesis may be only slightly smaller than the probability of rejecting the null hypothesis when the alternative is true. That is, the ratio of Type I errors to power may be uncomfortably large, indicating that a substantial proportion of all significant results may be due to false rejections of valid null hypotheses. On the basis of the results of the present survey, this ratio is .05:.17, suggesting one Type I error for approximately every three valid rejections of the null hypothesis, for a "true" Type I error rate of about .23.
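The arithmetic behind the .05:.17 ratio can be sketched as follows. This is an illustration, not the author's computation: the function name `false_positive_share` and the `prior_true_effect` parameter are hypothetical, and the default of .5 (equal numbers of true nulls and true effects among tested hypotheses) is an assumption that makes the result reduce to alpha / (alpha + power).

```python
def false_positive_share(alpha, power, prior_true_effect=0.5):
    """Share of statistically significant results that are Type I errors.

    prior_true_effect is the assumed proportion of tested hypotheses for
    which a real effect exists; 0.5 is an assumption of this sketch.
    """
    false_positives = alpha * (1 - prior_true_effect)  # true nulls rejected
    true_positives = power * prior_true_effect         # real effects detected
    return false_positives / (false_positives + true_positives)


if __name__ == "__main__":
    # Survey power for small, medium, and large effects at alpha = .05.
    for label, power in (("small", 0.17), ("medium", 0.57), ("large", 0.83)):
        print(label, round(false_positive_share(0.05, power), 3))
    # The contrived case in the text: every tested null hypothesis is true.
    print(false_positive_share(0.05, 0.05, prior_true_effect=0.0))
```

With power of .17 the share comes to roughly .23, matching the figure in the text; when no tested effect is real, every significant result is a Type I error regardless of alpha.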
Nor is this situation unknown to methodologists, many of whom have commented on various aspects of the problem (e.g., Bakan, 1966; Cohen, 1965; Overall, 1969; Selvin & Stuart, 1966; Sterling, 1959; Tversky & Kahneman, 1971), some going so far as to suggest that all published significant results may be Type I errors. It is in this way that low power undermines the confidence that can be placed even in statistically significant results. This may well be the legacy of low statistical power for small effects.

What if effect sizes are medium? Power to detect medium effects was .57 in our survey. The problem of increased Type I errors in the published literature is less serious here than if effect sizes are small. Yet, a problem of a different sort arises when power is marginal, that is, in the general vicinity of .50. The problem is that an inconsistent pattern of results may be obtained in which some studies yield significant results while others do not. Such a pattern of results is especially troublesome for research that is directed at a specific issue or problem area and frequently results in the failure to replicate an experimental finding. An example may be found in the literature on the spontaneous recovery of verbal associations. During the period when it was still a hot topic (1948-1969), spontaneous recovery became quite controversial, there being roughly equal numbers of significant and nonsignificant findings. The controversy was resolved by applying meta-analysis and power analysis to the entire body of spontaneous recovery studies (Rossi, 1982, 1986). The meta-analysis revealed a rather small effect size of .032 (proportion of variance accounted for). Rather than conduct a power survey of the spontaneous recovery literature using Cohen's range of small to large effect sizes, power for each study was computed using the actually obtained effect size.
The average power of all 48 studies was .375, suggesting that 37.5% of spontaneous recovery studies should have found statistically significant results, in good agreement with the observed rate of significance of 41.7% (20 of 48 significant results). This analysis suggested that the sample sizes of spontaneous recovery studies were inadequate to ensure detection of the effect in most studies but were sufficient to guarantee some statistically significant results. It is easy to see how the controversy over the existence of the effect was generated under these circumstances. Current texts regard spontaneous recovery as ephemeral, and the issue was never resolved so much as it was abandoned. The entire episode is reminiscent of Meehl's (1978) characterization of the fate of theories in "soft psychology" as never dying, just slowly fading away as researchers lose interest to pursue new theories. This may be the legacy of marginal levels of statistical power for medium effects.

What if effect sizes are large? If effect sizes in psychological research are large, then the results of the present survey suggest that power will be somewhat greater than .80. But it is doubtful that anyone seriously believes average effect sizes in psychology are large, especially for research conducted outside the laboratory, and there is certainly no evidence to support such a belief. The few surveys that have been conducted suggest average effect sizes approximating Cohen's (1977) definition of medium effects (Cooper & Findley, 1982; Haase et al., 1982). Informal observations of the effect sizes reported in published meta-analyses are consistent with this view.

Limitations of Power Surveys

Power surveys have some important limitations that should be kept in mind when one is evaluating the results reported in this article. Several have already been mentioned, including limited time sampling (usually only one year) and a small number of journals (usually only one). More comprehensive surveys are certainly needed. Unfortunately, other problems may not be so easily addressed. One critical issue concerns the specific definitions assumed to represent small, medium, and large effects. Lacking comprehensive survey data on effect sizes in behavioral research, Cohen (1962, 1969, 1977) chose admittedly arbitrary definitions for small, medium, and large effects. If Cohen's range of effect sizes is not representative of psychological research, then the results of power surveys will be similarly not representative. Fortunately, as it has already been pointed out, surveys of effect sizes have supported Cohen's estimates. However, such surveys have been few, have covered a very limited range of disciplines, and have for the most part been based on relatively small sample sizes. There is a real need to conduct more comprehensive effect size surveys over a wide range of psychological journals. Because reporting of obtained effect sizes is still not a common publication practice, a really comprehensive survey will be both difficult and tedious to complete. A survey of the effect sizes reported in published meta-analyses might be a good place to start.

Another limitation of power surveys is that they have concentrated on very general research domains, primarily by analyzing all of the studies appearing in one or more specific journals. The research published in any journal necessarily covers a heterogeneous range of topics. Thus, even if the range of effect sizes on which power analyses are based is generally accurate, the results of power surveys are nevertheless strictly applicable only to the broad domain of research covered in the particular journal that is surveyed. Of course, the knowledge gained through such surveys has considerable value, as I have tried to point out throughout this article. Yet it may be argued that the impact of such knowledge is diffused across the many specific research areas represented in the journal, leading to the speculation that the accumulated impact of low statistical power on research may be similarly diffuse. It may be for this reason that surveys of statistical power have had so little influence on the behavior of practicing researchers.

One solution to this problem would be to conduct power surveys on specific research literatures instead of entire journals. By specific research literatures I mean a set of studies for which a specific research hypothesis has been clearly defined and operationalized, such as gender differences in mathematical ability, psychotherapy outcome studies, or the existence of spontaneous recovery. A few such studies exist, all of which have reported relatively low statistical power (Beaumont & Breslow, 1981; Bones, 1972; Crane, 1976; Freiman, Chalmers, Smith, & Kuebler, 1978; Katzell & Dyer, 1977; King, 1985; Rothpearl, Mohs, & Davis, 1981). These studies are limited, however, in the same way that traditional power surveys have been limited: All have estimated statistical power using either Cohen's range of effect size estimates or other similarly arbitrary effect sizes. Instead it would be much more interesting to use meta-analysis to determine the actual obtained effect size of the specific research literature and to use this estimate of effect size as the population parameter against which to compute power. This has been done, as previously described, for the research on spontaneous recovery (Rossi, 1982) and for several other research areas as well (Rossi, 1986), with generally similar results. The only other such study was conducted by Kazdin and Bass (1989), who analyzed the power of comparative psychotherapy outcome studies. They found adequate statistical power for treatment versus no-treatment studies, but relatively marginal power for treatment versus treatment and treatment versus active control comparisons. Thus, the frequently obtained result of no-treatment differences in psychotherapy outcome research may well be due to inadequate statistical power, similar to the situation described for spontaneous recovery. The importance of this result should not be underestimated in view of the enormous amount of effort that has gone into the detection of differential treatment effects over the past 25 years.

Power surveys of specific research areas, such as those described here, may bring into focus more clearly the impact of low statistical power on research.

Use of Statistical Power Analysis in the Interpretation of Negative Results

Perhaps the most frequent use of statistical power analysis is in determining sample sizes for planned investigations. Somewhat overlooked is the fact that power analysis may also be useful after a study has been completed, especially when significant results have not been obtained. Therefore, it seems worth noting in some detail how statistical power analysis may be useful in helping to interpret negative results. As an example, it will be convenient to reconsider the discrepancy in results between those obtained by Sedlmeier and Gigerenzer (1989) and those obtained in the present survey.

One possible explanation for the discrepancy is the relatively small number of articles on which the Journal of Abnormal Psychology results are based, 54 for Sedlmeier and Gigerenzer (1989) and 49 in our study, thus leading to relatively wide confidence intervals around the mean power values. For example, for medium effects, the 95% confidence interval for Sedlmeier and Gigerenzer (1989) is .426-.574 and for the present survey is .533-.671. These results suggest that the inability of Sedlmeier and Gigerenzer (1989) to detect a change in statistical power is itself a problem in statistical power. The best estimate of the population effect size for the increase in statistical power since Cohen (1962) is ω² = .038, the average of the three ω²s for the increases in power for small, medium, and large effects found in our survey. For sample sizes of n₁ = 70 (Cohen, 1962) and n₂ = 54 (Sedlmeier & Gigerenzer, 1989), an independent groups t test with alpha (two-tailed) of .05 has power of only .585 to detect an effect accounting for 3.8% of total variance. To achieve power of .80, Sedlmeier and Gigerenzer (1989) would have had to include 178 studies in their survey. In contrast, the power of our study was considerably higher, .822, but could be obtained only by pooling results across the three journals included in the survey. If only the Journal of Abnormal Psychology had been included in the present study, power would have been only .561.

Power analysis can also help put Sedlmeier and Gigerenzer's (1989) null results into some perspective. In particular, it would be valuable to know, given the power of their study, how large an increase in statistical power might have escaped detection. Table 5 gives the power and the probability of a Type II error of their study against a range of effect sizes. For example, for a population effect size accounting for 8% of total variance, the Type II error rate is approximately 10%. Using tables and formulas given in Cohen (1977), it can be shown that an effect of this magnitude would have been obtained by Sedlmeier and Gigerenzer (1989) if the power to detect medium effects reported in the Journal of Abnormal Psychology had increased to .62 (or decreased to .33). Thus it may be concluded that the probability that power has increased to .62 and that Sedlmeier and Gigerenzer (1989) failed to detect the increase is .10. If you are willing to live with this probability of error, then this value of .62 may be regarded as a probable upper bound on the increase in statistical power since Cohen (1962). Table 5 lists additional upper bounds for a wide range of Type II error rates for the Sedlmeier and Gigerenzer (1989) survey. In general, information similar to that presented in Table 5 can be invaluable for interpreting negative results. It does not, strictly speaking, permit the acceptance of the null hypothesis, because the null hypothesis is essentially always false (Bakan, 1966; Meehl, 1978). Rather, this information permits the researcher to put an approximate upper limit on the magnitude of the effect that escaped detection. Such a procedure is commonplace in the more developed sciences (Rossi, 1985a).

Table 5
Probable Upper Bounds on the Increase in Statistical Power to Detect Medium Effects for the Sedlmeier & Gigerenzer (1989) Survey

Effect size    Power    Type II error rate    Upper bound on increase in power
   .01          .19           .81                        .53
   .02          .35           .65                        .55
   .03          .49           .51                        .56
   .04          .61           .39                        .58
   .05          .71           .29                        .59
   .06          .79           .21                        .60
   .07          .85           .15                        .61
   .08          .90           .10                        .62
   .09          .93           .07                        .63
   .10          .95           .05                        .64

Note. Effect size is reported in terms of proportion of variance accounted for. Upper bounds are for increases in power to detect medium effects since Cohen (1962).

Conclusions and Recommendations

The results of this study suggest that, more than 20 years after Cohen (1962) conducted his study, the power of psychological research is still low. These results have serious implications not only for individual researchers conducting their own studies but for the entire discipline, especially with respect to the proliferation of Type I errors in the published literature and the frequent failure of replication studies.

It is clear that the power of psychological research needs to be improved, but it is less clear how best to accomplish this goal. Reviewers, editors, and researchers themselves are unlikely to be comfortable with increasing alpha levels beyond the currently accepted standard of .05. Of course the easiest recommendation is to yet again admonish researchers to increase the size of their samples, but such increases are not always practical, and they are almost always expensive. This would seem to indicate that increasing the magnitude of effects may be the only practical alternative to expensive increases in sample size as a means for increasing the statistical power of psychological research.

We tend to think of effect size (when we think of it at all) as a fixed and immutable quantity that we attempt to detect. It may be more useful to think of effect size as a manipulable parameter that can, in a sense, be made larger through greater measurement accuracy. This can be done through the use of more effective measurement models, more sensitive research designs, and more powerful statistical techniques. Examples might include more reliable psychometric tests; better control of extraneous sources of variance through the use of blocking, covariates, factorial designs, and repeated measurement designs; and, in general, through the use of any procedures that effectively reduce the "noise" in the system. Inasmuch as behavior has multiple causes, the increased use of multivariate statistical methods needs to be encouraged. Toward this end, additional work must be done to make the computation of multivariate power more accessible to researchers, along the lines started by Cohen (1988) and Stevens (1980, 1986).

Sources of additional information on statistical power for nontechnical readers, including computational details and worked examples, have become much more widely available in the past few years. Notable among these is the newly revised edition of Cohen's (1988) seminal power handbook and the monographs by Kraemer and Thiemann (1987) and Lipsey (1990). Unfortunately, most current general statistics textbooks still do not contain much information on the calculation of statistical power. Those that do are usually couched in terms of noncentrality parameters or are limited to the consideration of simple cases, such as t tests and one-way analysis of variance F tests. Numerous computer programs are also now available to help microcomputer users determine their power and sample size requirements. Thirteen such programs covering a wide range of techniques are comprehensively reviewed by Goldstein (1989). To date, the only mainframe computer package that provides statistical power as standard output is the multivariate analysis of variance (MANOVA) routine in SPSS-X (1988). Users are cautioned, however, that this program provides only retrospective power, that is, power based on the effect size actually obtained in the analysis.

Finally, because power analysis requires a knowledge of effect size, researchers are strongly encouraged to use and report indices of effect size in their research reports or to provide enough data to permit others to do so. If negative results are found, then a probable upper bound on effect size should be determined. Future researchers will then have an adequate data base on which to estimate the power of their own research, for example, through the use of meta-analytic procedures.
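As an illustration of how power against a stated effect size, and hence a probable upper bound, might be computed, here is a minimal sketch for the two-group comparison of surveys discussed in the section on negative results. It is not the article's procedure, which relied on the tables and formulas in Cohen (1977). The assumptions are loud ones: proportion of variance is converted to a standardized difference through f² = PV / (1 − PV) and d = 2f, the noncentral t distribution is replaced by a normal approximation, the critical t value comes from a Cornish-Fisher series, and the function names are hypothetical.

```python
from math import sqrt
from statistics import NormalDist

_STD_NORMAL = NormalDist()


def t_critical(df, alpha=0.05):
    """Approximate two-tailed critical t (Cornish-Fisher series in 1/df)."""
    z = _STD_NORMAL.inv_cdf(1 - alpha / 2)
    g1 = (z**3 + z) / 4
    g2 = (5 * z**5 + 16 * z**3 + 3 * z) / 96
    return z + g1 / df + g2 / df**2


def power_two_groups(var_explained, n1, n2, alpha=0.05):
    """Approximate power of a two-tailed independent-groups t test.

    var_explained is the proportion of variance accounted for. The
    conversion to d and the normal approximation are assumptions of this
    sketch; the negligible lower rejection tail is ignored.
    """
    f = sqrt(var_explained / (1 - var_explained))  # Cohen's f
    d = 2 * f                                      # standardized mean difference
    delta = d * sqrt(n1 * n2 / (n1 + n2))          # noncentrality parameter
    return _STD_NORMAL.cdf(delta - t_critical(n1 + n2 - 2, alpha))


if __name__ == "__main__":
    # Power to detect an effect of .038 with 70 studies versus 54, 221, or 49.
    for n2 in (54, 221, 49):
        print(n2, round(power_two_groups(0.038, 70, n2), 3))
    # Power and Type II error rate across the effect sizes listed in Table 5.
    for hundredths in range(1, 11):
        pv = hundredths / 100
        p = power_two_groups(pv, 70, 54)
        print(f"{pv:.2f}  power={p:.2f}  type_II={1 - p:.2f}")
```

Under these assumptions the results should land close to the power values quoted in the text (.585, .822, and .561) and to the power column of Table 5. The probable upper bound is then the effect size at which the Type II error rate falls to a level the researcher is willing to tolerate.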
If a researcher has no past research or other knowledge to serve as a guide in the selection of an appropriate effect size, then estimating power over a broad range of effect sizes is the least that should be done.

References

Abelson, R. P. (1985). A variance explanation paradox: When a little is a lot. Psychological Bulletin, 97, 129-133.
Abramowitz, M., & Stegun, I. A. (1965). Handbook of mathematical functions with formulas, graphs, and mathematical tables. New York: Dover.
Bakan, D. (1966). The test of significance in psychological research. Psychological Bulletin, 66, 423-437.
Beaumont, J. J., & Breslow, N. E. (1981). Power considerations in epidemiologic studies of vinyl chloride workers. American Journal of Epidemiology, 114, 725-734.
Bones, J. (1972). Statistical power analysis and geography. Professional Geographer, 24, 229-232.
Borenstein, M., & Cohen, J. (1988). Statistical power analysis: A computer program. Hillsdale, NJ: Erlbaum.
Brewer, J. K. (1972). On the power of statistical tests in the American Educational Research Journal. American Educational Research Journal, 9, 391-401.
Brewer, J. K., & Owen, P. W. (1973). A note on the power of statistical tests in the Journal of Educational Measurement. Journal of Educational Measurement, 10, 71-74.
Chase, L. J., & Baran, S. J. (1976). An assessment of quantitative research in mass communication. Journalism Quarterly, 53, 308-311.
Chase, L. J., Chase, L. R., & Tucker, R. K. (1978). Statistical power in physical anthropology: A technical report. American Journal of Physical Anthropology, 49, 133-138.
Chase, L. J., & Chase, R. B. (1976). A statistical power analysis of applied psychological research. Journal of Applied Psychology, 61, 234-237.
Chase, L. J., & Tucker, R. K. (1975).
A power-analytic examination of contemporary communication research. Speech Monographs, 42, 29-41.
Chase, L. J., & Tucker, R. K. (1976). Statistical power: Derivation, development, and data-analytic implications. Psychological Record, 26, 473-486.
Christensen, J. E., & Christensen, C. E. (1977). Statistical power analysis of health, physical education, and recreation research. Research Quarterly, 48, 204-208.
Cohen, J. (1962). The statistical power of abnormal-social psychological research: A review. Journal of Abnormal and Social Psychology, 65, 145-153.
Cohen, J. (1965). Some statistical issues in psychological research. In B. B. Wolman (Ed.), Handbook of clinical psychology (pp. 95-121). New York: McGraw-Hill.
Cohen, J. (1969). Statistical power analysis for the behavioral sciences. New York: Academic Press.
Cohen, J. (1977). Statistical power analysis for the behavioral sciences (rev. ed.). New York: Academic Press.
Cohen, J. (1988). Statistical power analysis for the behavioral sciences (2nd ed.). Hillsdale, NJ: Erlbaum.
Cohen, J., & Nee, J. C. M. (1987). A comparison of two noncentral F approximations with applications to power analysis in set correlation. Multivariate Behavioral Research, 22, 483-490.
Cooper, H., & Findley, M. (1982). Expected effect sizes: Estimates for statistical power analysis in social psychology. Personality and Social Psychology Bulletin, 8, 168-173.
Cowles, M. P., & Davis, C. (1982). On the origins of the .05 level of statistical significance. American Psychologist, 37, 553-558.
Crane, J. A. (1976). The power of social intervention experiments to discriminate differences between experimental and control groups. Social Service Review, 50, 224-242.
Daly, J. A., & Hexamer, A. (1983). Statistical power in research in English education. Research in the Teaching of English, 17, 157-164.
Freiman, J. A., Chalmers, T. C., Smith, H., & Kuebler, R. R. (1978).
The importance of beta, the type II error and sample size in the design and interpretation of the randomized control trial: Survey of 71 "negative" trials. New England Journal of Medicine, 299, 690-694.
Goldstein, R. (1989). Power and sample size via MS/PC-DOS computers. American Statistician, 43, 253-260.
Greenwald, A. G. (1975). Consequences of prejudice against the null hypothesis. Psychological Bulletin, 82, 1-20.
Haase, R. F. (1974). Power analysis of research in counselor education. Counselor Education and Supervision, 14, 124-132.
Haase, R. F., Waechter, D. M., & Solomon, G. S. (1982). How significant is a significant difference? Average effect size of research in counseling psychology. Journal of Counseling Psychology, 29, 58-65.
Holmes, C. B. (1979). Sample size in psychological research. Perceptual and Motor Skills, 49, 283-288.
Jones, B. J., & Brewer, J. K. (1972). An analysis of the power of statistical tests reported in the Research Quarterly. Research Quarterly, 43, 23-30.
Katzell, R. A., & Dyer, F. J. (1977). Differential validity revived. Journal of Applied Psychology, 62, 137-145.
Katzer, J., & Sodt, J. (1973). An analysis of the use of statistical testing in communication research. Journal of Communication, 23, 251-265.
Kazdin, A. E., & Bass, D. (1989). Power to detect differences between alternative treatments in comparative psychotherapy outcome research. Journal of Consulting and Clinical Psychology, 57, 138-147.
King, D. S. (1985). Statistical power of the controlled research on wheat gluten and schizophrenia. Biological Psychiatry, 20, 785-787.
Kraemer, H. C., & Thiemann, S. (1987). How many subjects? Statistical power analysis in research. Newbury Park, CA: Sage.
Kroll, R. M., & Chase, L. J. (1975). Communication disorders: A power analytic assessment of recent research. Journal of Communication Disorders, 8, 237-247.
Laubscher, N. F. (1960). Normalizing the noncentral t and F distributions. Annals of Mathematical Statistics, 31, 1105-1112.
Levenson, R. L.
(1980). Statistical power analysis: Implications for researchers, planners, and practitioners in gerontology. Gerontologist, 20, 494-498.
Lipsey, M. W. (1990). Design sensitivity: Statistical power for experimental research. Newbury Park, CA: Sage.
Mazen, A. M., Graf, L. A., Kellogg, C. E., & Hemmasi, M. (1987). Statistical power in contemporary management research. Academy of Management Journal, 30, 369-380.
Mazen, A. M. M., Hemmasi, M., & Lewis, M. F. (1987). Assessment of statistical power in contemporary strategy research. Strategic Management Journal, 8, 403-410.
Meehl, P. E. (1978). Theoretical risks and tabular asterisks: Sir Karl, Sir Ronald, and the slow progress of soft psychology. Journal of Consulting and Clinical Psychology, 46, 806-834.
Moore, M. (1981). Empirical statistics: 3. Changes in use of statistics in psychology. Psychological Reports, 48, 751-753.
Mosteller, F., & Bush, R. R. (1954). Selected quantitative techniques. In G. Lindzey (Ed.), Handbook of social psychology: Theory and method (Vol. 1, pp. 289-334). Reading, MA: Addison-Wesley.
Muchinsky, P. M. (1979). Some changes in the characteristics of articles published in the Journal of Applied Psychology over the past 20 years. Journal of Applied Psychology, 64, 455-459.
Orme, J. G., & Combs-Orme, T. (1986). Statistical power and type II errors in social work research. Social Work Research & Abstracts, 22, 3-10.
Orme, J. G., & Tolman, R. M. (1986). The statistical power of a decade of social work education research. Social Service Review, 60, 619-632.
Ottenbacher, K. (1982). Statistical power and research in occupational therapy. Occupational Therapy Journal of Research, 2, 13-25.
Overall, J. E. (1969).
Classical statistical hypothesis testing within the context of Bayesian theory. Psychological Bulletin, 71, 285-292.
Pennick, J. E., & Brewer, J. K. (1972). The power of statistical tests in science teaching research. Journal of Research in Science Teaching, 9, 377-381.
Rosenthal, R. (1979). The "file drawer problem" and tolerance for null results. Psychological Bulletin, 86, 638-641.
Rosenthal, R., & Rubin, D. B. (1982). A simple, general purpose display of magnitude of experimental effect. Journal of Educational Psychology, 74, 166-169.
Rosnow, R. L., & Rosenthal, R. (1989). Statistical procedures and the justification of knowledge in psychological science. American Psychologist, 44, 1276-1284.
Rossi, J. S. (1982, April). Meta-analysis, power analysis and artifactual controversy: The case of spontaneous recovery of verbal associations. Paper presented at the 53rd Annual Meeting of the Eastern Psychological Association, Baltimore, MD.
Rossi, J. S. (1985a, March). Comparison of physical and behavioral science: The roles of theory, measurement, and effect size. Paper presented at the 56th Annual Meeting of the Eastern Psychological Association, Boston, MA.
Rossi, J. S. (1985b). Tables of effect size for z score tests of differences between proportions and between correlation coefficients. Educational and Psychological Measurement, 45, 737-745.
Rossi, J. S. (1986, June). Effects of inadequate statistical power on specific research literatures. Paper presented at the annual meeting of the Psychometric Society, Toronto, Ontario, Canada.
Rothpearl, A. B., Mohs, R. C., & Davis, K. L. (1981). Statistical power in biological psychiatry. Psychiatry Research, 5, 257-266.
Sawyer, A. G., & Ball, A. D. (1981). Statistical power and effect size in marketing research. Journal of Marketing Research, 18, 275-290.
Sedlmeier, P., & Gigerenzer, G. (1989). Do studies of statistical power have an effect on the power of studies? Psychological Bulletin, 105, 309-316.
Selvin, H.
C., & Stuart, A. (1966). Data-dredging procedures in survey research. American Statistician, 20(3), 20-23.
Spreitzer, E., & Chase, L. J. (1974). Statistical power in sociological research: An examination of data-analytic strategies. Unpublished manuscript, Bowling Green State University, Department of Sociology, Bowling Green, OH.
SPSS-X user's guide (3rd ed.). (1988). Chicago: SPSS, Inc.
Sterling, T. D. (1959). Publication decisions and their possible effects on inferences drawn from tests of significance—or vice versa. Journal of the American Statistical Association, 54, 30-34.
Stevens, J. P. (1980). Power of the multivariate analysis of variance tests. Psychological Bulletin, 88, 728-737.
Stevens, J. P. (1986). Applied multivariate statistics for the social sciences. Hillsdale, NJ: Erlbaum.
Taylor, E B. (1959). Development of the testing of statistical hypotheses. Unpublished doctoral dissertation, Columbia University, New York.
Tversky, A., & Kahneman, D. (1971). Belief in the law of small numbers. Psychological Bulletin, 76, 105-110.
Woolley, T. W. (1983). A comprehensive power-analytic investigation of research in medical education. Journal of Medical Education, 58, 710-715.
Woolley, T. W., & Dawson, G. O. (1983). A follow-up power analysis of the statistical tests used in the Journal of Research in Science Teaching. Journal of Research in Science Teaching, 20, 673-681.

Received December 8, 1989
Revision received March 29, 1990
Accepted May 14, 1990