by Richard G. Lipsey - Canadian Economics Association

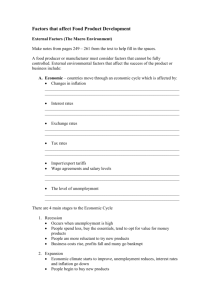
