# Part-of-Speech Tagging

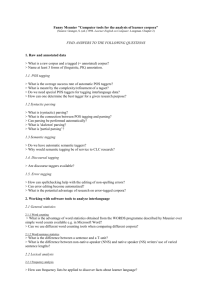


Marco Baroni, Text Processing

## Outline

- Preamble: Probability Theory in less than 10 slides
- Introduction
- Linguistic issues in POS tagging
- Inducing POS taggers from data
  - Hidden Markov Model tagging
  - Maximum Entropy Markov Model tagging
- Current themes in POS tagging
  - Unsupervised and partially supervised POS tagging
  - A solved problem?
- Lemmatization
- Practical issues in POS tagging
  - Picking a tagger
  - Training a tagger

## Preamble: Probability Theory in less than 10 slides

### Probability of A

- The probability that event A takes place is a number between 0 and 1: $0 \le P(A) \le 1$
- You can think of $P(A)$ as the proportion of times that A takes place in the relevant "universe" of events, or as a quantified assessment of how plausible A is
- For complementary events such as A and not-A, $P(A \cup \neg A) = 1$: either A or not-A must happen
- This generalizes to A as a variable that must take one of $n$ mutually exclusive values: $\sum_{i=1}^{n} P(A_i) = 1$

### Conditional and joint probability

- Conditional: $P(A|B)$, the probability of A given B
- Joint: $P(A, B)$, the probability of A and B (also written $P(A \cap B)$, $P(AB)$, ...)
- From joint to conditional: $P(A|B) = \frac{P(A, B)}{P(B)}$
- From conditional to joint (the chain rule): $P(A, B) = P(B)P(A|B) = P(A)P(B|A)$
- When A is a variable taking $n$ values: $\sum_{i=1}^{n} P(A_i|B) = 1$

### Independence

- A and B are independent if $P(A|B) = P(A)$ and $P(B|A) = P(B)$
- From the chain rule, the joint probability then becomes $P(A, B) = P(A)P(B)$

### Bayes' Law

- From the chain rule: $P(A, B) = P(B)P(A|B) = P(A)P(B|A)$
- Hence: $P(A|B) = \frac{P(A)P(B|A)}{P(B)}$
- Applications of Bayes' Law are sometimes referred to as *Bayesian inversion*, since we invert the order of conditioning

### Bayes' Law: the philosophical angle

- O is some observed data, H is a "hypothesis" (i.e., an unobserved/unobservable state of events)
- Straightforward application of Bayes' Law: $P(H|O) = \frac{P(H)P(O|H)}{P(O)}$
- We compute the posterior probability of the hypothesis after seeing some data, given the prior probability of the hypothesis (e.g., our current scientific knowledge) and the likelihood of the observed data given the hypothesis
- If we want to pick the most likely of various hypotheses, we can ignore $P(O)$ in the denominator, since it is constant across Hs

### Estimation

- In empirical work, the main problem is that we do not know the various probabilities, and coming up with reasonable guesses, especially when we are dealing with joint probabilities, soon gets very complicated
- Clever math and simplifying assumptions are needed to reduce the problem of probability estimation to estimating a number of simple terms we know how to calculate

### Estimation: relative frequency estimates

- The most common (and intuitive) way to estimate probabilities from count data is by relative frequency:
  $$\hat{P}(A) = \frac{\text{Count}(A)}{\text{Count}(\text{Everything})} \qquad \hat{P}(A|B) = \frac{\text{Count}(A, B)}{\text{Count}(B)}$$
- It can be shown that these relative frequency estimates are maximum likelihood estimates, i.e., they are the probability values that make the observed data most likely

### Estimation: smoothing

- Smoothing techniques are important especially in the presence of sparse data (very common in NLP), where we are not confident that our observations cover the whole space of possible outcomes
- The simple "add 1" smoothing approach adds 1 to all relevant event counts (adjusting the denominator accordingly)

### Estimation: add 1 smoothing

- If our universe has only A and not-A events:
  $$\hat{P}(A) = \frac{\text{Count}(A) + 1}{\text{Count}(\text{Everything}) + 2}$$
- In this way, we would, for example, not rule out A completely even if we did not observe it in the available data
- Interestingly, in the Bayesian approach add 1 smoothing and similar techniques can be derived from theoretical considerations
- Very intuitively, add 1 smoothing is equivalent to assuming that in our prior experience we observed both A and not-A once

## Introduction

### Main source

- (Especially for the model induction parts) Chapters 5 and 6 of: Daniel Jurafsky and James Martin, *Speech and Language Processing* (2nd Edition)

### What?

- The Part-Of-Speech tagging task:
  - I like books
  - I/PRO like/VER books/NOUN
- Why is it difficult?

### Why?

- A typical example of a "small-scale", intermediate task that turns out to be useful in all sorts of applications:
  - As an intermediate step for parsing
  - To extract lexical information, terminology, collocations...
  - To improve information extraction, relation extraction, distributional semantic models...
- Many other tasks (named entity recognition, word sense disambiguation) can be seen as instances of "generalized" POS tagging:
  - Assign a label to each word in a stream based on context and word properties, moving from left to right

### Machine learning approach

- From the late seventies (at least), emphasis on extracting statistical generalizations from pre-annotated data rather than using hand-crafted rules
- The general setting:
  - Create a "training corpus" by POS-tagging a certain amount of text by hand
  - "Train" a POS-tagging program to extract generalizations from the annotated corpus
  - Use the trained POS tagger to annotate new texts
- You should separate out some manually annotated data for testing, or use cross-validation:
  - Split the manually annotated corpus into $k$ parts, and use each of the parts in turn for testing and the remaining $k - 1$ for training
  - Testing on the training set leads to overfitting and poor generalization

### Cues

- Context: words (and properties of words) to the left and right
- Morphology: edges and other properties of the target words
- Probably because of the impoverished morphology of English, traditional taggers tend to put emphasis on contextual cues

## Linguistic issues in POS tagging

### POS tagging as a linguistic problem

- POS tagging assumes a manually annotated training corpus, learns a statistical model, and automatically annotates new text
- Lots of emphasis is put on the learning part; the linguistic issues related to what and how to annotate tend to be overlooked

### Which tags?

- John says that Mary will meet a man
- John saw the man that Mary will meet
- Is that the same *that*?
### POS tagging as an ill-posed linguistic task

- We attach single labels to single words, but we want to capture a hierarchical structure where:
  - the same word is dominated by multiple nodes
  - some labels are only meaningful when applied to word sequences
- The League of Nations, Joan of Arc
- He brought up the issue
- military act vs. war act
- The ugly zombies, the zombies were ugly
- The slain zombies, the zombies were slain
- Il continuo lamentare la mancanza di... (Italian: "the continual complaining about the lack of...")

### How granular?

- Kids eat junk food
- Do we encode number, tense and person information in the tags?
- More granular: more informative, less ambiguous, but less training data!
- (A more serious issue in languages with richer morphology: gender, number, many persons, many tenses, etc.)

### Linguistic issues in POS tagging: practical aspects

- Since POS tagging projects rarely start from scratch, the tagset and tagging policies of pre-existing resources (annotated corpus, pre-trained tagger) often play a dominant role in the linguistic choices to be made within a project
- Users of POS taggers and annotated corpora are for the most part not theoretical linguists: tagsets that are entirely sound from the point of view of theoretical linguistics, but lack "naive linguistics" appeal, are of little practical use
- (We will come back briefly to the "linguistic issues" at the end of the POS lectures)

## Inducing POS taggers from data

### Statistical POS tagging: general questions

- How do we formulate the POS tagging problem in probabilistic terms? (the theoretical modeling issue)
- How do we use the training data to estimate the probabilities that our model needs? (estimation/training)
- Given new text, how do we use the model to assign tags to it? (the decoding problem)
- We will illustrate with Hidden Markov Model tagging

### Hidden Markov Model (HMM) tagging

- HMM tagging is one of the oldest (Church 1988) and most intuitive approaches, with performance still at the state of the art, at least when tuned properly
- Some relatively recent implementations: TnT (Brants 2000), ACOPOST tt (Schroeder 2002), FreeLing (Carreras, Chao, Padró & Padró 2004), the Apache UIMA Tagger
- Theoretical formulation: a sentence is generated by a sequence of POS tags, each POS tag "emitting" a word
- Given the word sequence (the observed data), we need to guess the most likely POS sequence (the hidden elements) that generated it

### Bayesian inversion

- Given the word sequence $w_1, \ldots, w_n$, we want to find the most probable tag sequence $t_1, \ldots, t_n$:
  $$\hat{t}_1, \ldots, \hat{t}_n = \operatorname*{argmax}_{t_1, \ldots, t_n} P(t_1, \ldots, t_n | w_1, \ldots, w_n)$$
- Using Bayes' Law, this becomes:
  $$\hat{t}_1, \ldots, \hat{t}_n = \operatorname*{argmax}_{t_1, \ldots, t_n} \frac{P(w_1, \ldots, w_n | t_1, \ldots, t_n)\, P(t_1, \ldots, t_n)}{P(w_1, \ldots, w_n)}$$
- The denominator is the same for all potential tag combinations (because the word sequence we want to tag is the same), so we can ignore the probability of the word sequence:
  $$\hat{t}_1, \ldots, \hat{t}_n = \operatorname*{argmax}_{t_1, \ldots, t_n} P(w_1, \ldots, w_n | t_1, \ldots, t_n)\, P(t_1, \ldots, t_n)$$

### An aside for probability theorists

- The new formulation of the POS tagging task naturally lends itself to a generative interpretation
- We model the POS tagging task in terms of $P(w_1, \ldots, w_n | t_1, \ldots, t_n)\, P(t_1, \ldots, t_n)$
- I.e., by the chain rule, we model the joint tag and word distribution:
  $$P(w_1, \ldots, w_n | t_1, \ldots, t_n)\, P(t_1, \ldots, t_n) = P(w_1, \ldots, w_n, t_1, \ldots, t_n)$$

### Simplifying assumptions

- The probability of a word only depends on its own tag, not on the other words in the sentence nor on their tags:
  $$P(w_1, \ldots, w_n | t_1, \ldots, t_n) \approx P(w_1|t_1)\, P(w_2|t_2) \cdots P(w_n|t_n)$$
- The (first-order) Markov assumption ($t_0$ marks the start of the sentence):
  $$P(t_1, \ldots, t_n) \approx P(t_1|t_0)\, P(t_2|t_1) \cdots P(t_n|t_{n-1})$$
- A second-order Markov assumption would correspond to a "trigram model", i.e., the (more common) model that looks at a window of 2 tags to the left
- Third-order, fourth-order models, etc., will suffer from serious data sparseness problems

### The simplified formula

- From:
  $$\hat{t}_1, \ldots, \hat{t}_n = \operatorname*{argmax}_{t_1, \ldots, t_n} P(w_1, \ldots, w_n | t_1, \ldots, t_n)\, P(t_1, \ldots, t_n)$$
- To:
  $$\hat{t}_1, \ldots, \hat{t}_n = \operatorname*{argmax}_{t_1, \ldots, t_n} \prod_{i=1}^{n} P(w_i|t_i)\, P(t_i|t_{i-1})$$

### Interpretation of the terms

- The first terms, $P(w_i|t_i)$, represent the probability of seeing the current word given the current tag (emission probability): e.g., if the current tag is VERB, how likely is it that the current word is *book*? In Bayesian terms, this is the likelihood of the data given the hypothesized tag
- The second terms, $P(t_i|t_{i-1})$, represent the probability of seeing the current tag given the tag we just saw (transition probability): e.g., how likely is it that the current tag is VERB if the previous tag was AUX? In Bayesian terms, this is the prior probability of the current tag

### Training/estimation

- Training a basic HMM tagger is trivial:
  - Just collect tag-tag and tag-word co-occurrence frequencies (a "language model") from the training corpus
  - Convert them to probabilities by dividing tag-tag counts by the count of the first tag, and tag-word counts by the tag count
- The devil is in the details...
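The training recipe above (collect counts, then divide) can be sketched in a few lines of Python. This is a minimal illustration, not the code of any of the taggers cited; it assumes sentences come as lists of (word, tag) pairs, uses `PUN` as the start tag $t_0$ (the convention adopted in the worked example below), and offers an optional add 1 smoothing of the transition counts as described in the preamble. The function name `train_hmm` is hypothetical.

```python
from collections import Counter, defaultdict


def train_hmm(tagged_sents, start="PUN", add_one=False):
    """Estimate first-order HMM probabilities by relative frequency.

    tagged_sents: list of sentences, each a list of (word, tag) pairs.
    Returns (trans, emit), where trans[prev][tag] estimates P(tag | prev)
    and emit[tag][word] estimates P(word | tag).
    """
    bigram = Counter()      # C(t_{i-1}, t_i)
    prev_count = Counter()  # C(t_{i-1}), counted as the first tag of a bigram
    tag_word = Counter()    # C(t_i, w_i)
    tag_count = Counter()   # C(t_i)
    for sent in tagged_sents:
        prev = start  # sentence-initial words are conditioned on the start tag
        for word, tag in sent:
            bigram[(prev, tag)] += 1
            prev_count[prev] += 1
            tag_word[(tag, word)] += 1
            tag_count[tag] += 1
            prev = tag

    tags = sorted(tag_count)
    trans = defaultdict(dict)
    for prev in prev_count:
        for tag in tags:
            c = bigram[(prev, tag)]
            if add_one:
                # add 1 to every (prev, tag) count; the denominator grows by len(tags)
                trans[prev][tag] = (c + 1) / (prev_count[prev] + len(tags))
            elif c:
                trans[prev][tag] = c / prev_count[prev]

    emit = defaultdict(dict)
    for (tag, word), c in tag_word.items():
        emit[tag][word] = c / tag_count[tag]
    return trans, emit


corpus = [
    [("book", "VVB"), ("it", "PNP")],
    [("the", "AT0"), ("book", "NN1")],
]
trans, emit = train_hmm(corpus)
print(trans["PUN"]["VVB"])  # 0.5
print(emit["NN1"]["book"])  # 1.0
```

Emission probabilities are deliberately left unsmoothed in this sketch: for words missing from the training corpus, the slides turn to dedicated unknown-word methods such as suffix analysis rather than add 1.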
### Estimation

- The simplified formula:
  $$\hat{t}_1, \ldots, \hat{t}_n = \operatorname*{argmax}_{t_1, \ldots, t_n} \prod_{i=1}^{n} P(w_i|t_i)\, P(t_i|t_{i-1})$$
- Estimating the factors of the first term (where $C(x)$ counts the occurrences of $x$ in the training corpus):
  $$\hat{P}(w_i|t_i) = \frac{C(t_i, w_i)}{C(t_i)}$$
- Estimating the factors of the second term:
  $$\hat{P}(t_i|t_{i-1}) = \frac{C(t_{i-1}, t_i)}{C(t_{i-1})}$$

### Decoding

- The decoding task: given a word sequence and the estimated probabilities, find the tag sequence that maximizes
  $$\prod_{i=1}^{n} P(w_i|t_i)\, P(t_i|t_{i-1})$$
- In principle, there are $k^n$ sequences to evaluate, where $k$ is the number of tags in the tagset and $n$ is the number of words in the sequence

### The Viterbi decoding algorithm

- A dynamic programming approach, based on breaking up a complex task into simpler sub-steps
- Similar methods are employed in speech recognition and minimum edit distance computations
- In POS tagging, Viterbi decoding is used not only with HMMs, but with other probabilistic taggers as well

### The Viterbi decoding algorithm: a few preliminaries

- Given a fixed word sequence $W$, if $t_1, \ldots, t_m$ is a sequence ending in $t_m$, the (unnormalized) probability of a sequence $t_1, \ldots, t_m, t_{m+1}$ is given by:
  $$P(t_1, \ldots, t_m | W)\, P(w_m|t_m)\, P(t_{m+1}|t_m)$$
- I will refer to $P(t_1, \ldots, t_m | W)$ as the probability of the path to $t_m$, and to $P(w_m|t_m)\, P(t_{m+1}|t_m)$ as the probability of the path from $t_m$ to $t_{m+1}$
- Assume that, in the last step of processing a sentence, the probability from $t_m$ to $t_{m+1}$ also includes the $P(w_{m+1}|t_{m+1})$ term (or some other way to deal with the edges, or more generally with emission probabilities): this does not add much to the search space, and I ignore the issue here

### The Viterbi decoding algorithm: basic intuition

- Recall that the probability of a sequence $t_1, \ldots, t_m, t_{m+1}$ is given by $P(t_1, \ldots, t_m | W)\, P(w_m|t_m)\, P(t_{m+1}|t_m)$
- If $t_1, \ldots, t_m$ is the most likely tag sequence ending in $t_m$, then $t_1, \ldots, t_m, t_{m+1}$ is the most likely tag sequence ending in $t_m, t_{m+1}$:
  - the probability of a path ending in $t_m, t_{m+1}$ only depends on the probability of the path to $t_m$, $P(t_1, \ldots, t_m | W)$, and on the probability of the path from $t_m$ to $t_{m+1}$, $P(w_m|t_m)\, P(t_{m+1}|t_m)$; these are independent, and the second term is constant (because we are considering fixed $t_m$, $w_m$ and $t_{m+1}$)
- When looking at sequences ending in $t_m, t_{m+1}$, we don't need to consider any path ending in $t_m$ other than the most likely one!

### The Viterbi decoding algorithm: basic method

- With $k$ tags, there are $k^2$ possible $t_1, t_2$ paths
- Once we have computed the probabilities of those and found the most likely path to each $t_2$, we only need to compute the $t_2, t_3$ probabilities for all $k^2$ possible $t_2, t_3$ combinations
- There is no need to look at the $k^3$ possible $t_1, t_2, t_3$ paths, since we already know the best paths to the $t_2$s!
- We can proceed in this way until the end of an $n$-word sentence, finding the best path by exploring $nk^2$ (sub-)paths instead of $k^n$!

### The Viterbi decoding algorithm: computing sub-paths incrementally

- Compute the probability of all possible $t_1, t_2$ paths, keep track of the most likely path $b(t)_1, t_2$ to each $t_2$, and store their probabilities (we explore $k^2$ paths)
- Next, compute the probability of all possible $t_2, t_3$ paths, keep track of the most likely path $b(t)_2, t_3$ to each $t_3$, and store the product of the probability of this path by the probability of $b(t)_1, b(t)_2$, i.e., the probability of $b(t)_1, b(t)_2, t_3$ (we explore $k^2$ paths)
- Keep going step by step until the end of the sequence
- At the end, pick the final tag $t_n$ that results in the highest probability for $b(t)_1, \ldots, b(t)_{n-1}, t_n$, and backtrace the concatenation of paths that brought you there
- You found the most likely path by exploring $nk^2$ paths (instead of $k^n$)

[Figure sequence: decoding with 3 candidate tags per word. Without Viterbi: $3^4$ complete paths. With Viterbi: $3^2$ sub-paths at the first step, then $3^2$ more at each step, for running totals of $2 \times 3^2$, $3 \times 3^2$ and $4 \times 3^2$ sub-paths; a final panel shows backtracking to find the best path.]

### Working through a training and tagging example

- Word sequence: *book it*
- Candidate tag sequences:
  - VVB PNP
  - NN1 PNP
- No Viterbi search: we look at two paths only!
- From the BNC tagset:
  - VVB: the finite base form of lexical verbs (e.g., forget, send, live, return), including the imperative and present subjunctive
  - PNP: personal pronoun (e.g., I, you, them, ours)
  - NN1: singular common noun (e.g., pencil, goose, time, revelation)
- We will use the BNC as our "training corpus" (with "adjustments" to make the example work ;-)
- NB: for the sentence-initial item, we take PUN (the "end-of-sentence" marker) as $t_{i-1}$

### The "training" data

| Item | Frequency |
|---|---|
| PUN | 11,092,814 |
| VVB | 1,197,077 |
| NN1 | 14,281,232 |
| PNP | 4,977,521 |
| PUN VVB | 162,714 |
| PUN NN1 | 383,445 |
| VVB PNP | 184,179 |
| NN1 PNP | 7,790 |
| book + VVB | 77 |
| book + NN1 | 20,894 |
| it + PNP | 820,719 |

### Working through an example

- What is the crucial factor that determines the best path?
- Note the right-to-left effect despite the left-to-right formulation of the model
- Given how small probabilities become, it is more practical to work with logarithms

### How to tag unseen things?

- Which probability do we assign to tag sequences that do not occur in the training corpus?
- Which tags (and with which probability) do we assign to words that do not occur in the training corpus?
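The Viterbi procedure described above, run with log-probabilities as suggested, can be sketched as follows. This is an illustrative sketch under stated assumptions: the function name `viterbi` and its dictionary-based interface are hypothetical, missing transition or emission entries are treated as probability 0, and the counts are read from the "training" data above, assuming the frequencies are listed in the same order as the items. With only two candidate paths, the worked example does not need Viterbi search, but running it on this toy input reproduces the comparison.

```python
import math


def viterbi(words, tags, log_trans, log_emit, start="PUN"):
    """First-order Viterbi decoding in log space.

    log_trans[(prev, tag)] = log P(tag | prev); log_emit[(tag, word)] = log P(word | tag).
    Missing entries are treated as probability 0 (log = -inf).
    Returns (best tag sequence, its log-probability).
    """
    neg_inf = float("-inf")
    # best[i][t]: log-probability of the best path covering words[0..i] and ending in t
    best = [{t: log_trans.get((start, t), neg_inf) + log_emit.get((t, words[0]), neg_inf)
             for t in tags}]
    back = [{t: None for t in tags}]
    for i in range(1, len(words)):
        best.append({})
        back.append({})
        for t in tags:
            # best predecessor p for the current tag t (k * k work per word)
            prev, score = max(
                ((p, best[i - 1][p] + log_trans.get((p, t), neg_inf)) for p in tags),
                key=lambda pair: pair[1],
            )
            best[i][t] = score + log_emit.get((t, words[i]), neg_inf)
            back[i][t] = prev
    # pick the best final tag, then follow the back-pointers
    last = max(tags, key=lambda t: best[-1][t])
    path = [last]
    for i in range(len(words) - 1, 0, -1):
        path.append(back[i][path[-1]])
    return path[::-1], best[-1][last]


# Counts from the "training" data (BNC, as adjusted for the example)
tag_freq = {"PUN": 11_092_814, "VVB": 1_197_077, "NN1": 14_281_232, "PNP": 4_977_521}
bigram_freq = {("PUN", "VVB"): 162_714, ("PUN", "NN1"): 383_445,
               ("VVB", "PNP"): 184_179, ("NN1", "PNP"): 7_790}
emit_freq = {("VVB", "book"): 77, ("NN1", "book"): 20_894, ("PNP", "it"): 820_719}

# Relative-frequency estimates, stored as log-probabilities
log_trans = {(p, t): math.log(c / tag_freq[p]) for (p, t), c in bigram_freq.items()}
log_emit = {(t, w): math.log(c / tag_freq[t]) for (t, w), c in emit_freq.items()}

path, score = viterbi(["book", "it"], ["VVB", "NN1", "PNP"], log_trans, log_emit)
print(path)  # ['VVB', 'PNP']
```

With these counts, the VVB PNP reading comes out roughly 5.3 times more probable than NN1 PNP, even though *book* is far more frequent as NN1 and $\hat{P}(\text{NN1}|\text{PUN}) > \hat{P}(\text{VVB}|\text{PUN})$: the decisive factor is $\hat{P}(\text{PNP}|\text{VVB}) \approx 0.154$ against $\hat{P}(\text{PNP}|\text{NN1}) \approx 0.00055$, i.e., the following *it* drives the choice of tag for *book*.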
- Both problems (unseen tag sequences and unseen words) are examined here within the framework of HMM tagging, but they must be tackled, in one way or another, by all approaches to tagging

### Smoothing

- As with any other probability estimation problem, we can use a smoothing technique to make sure that all the probabilities we need to estimate are non-zero
- The simplest approach is add 1 (or Laplace) smoothing
- In our case, we increment all counts by one, so that if in the data $C(t_{i-1}, t_i) = 0$, the smoothed count will be $C(t_{i-1}, t_i) = 1$
- Care has to be taken to keep counts consistent: e.g., if we increment the $C(t_{i-1}, t_i)$ counts, we should also increase $C(t_{i-1})$ and $C(t_i)$ accordingly, so that the smoothed estimates still sum to 1 (with $k$ tags, adding 1 to every $C(t_{i-1}, t_i)$ adds $k$ to $C(t_{i-1})$)

### Unseen tag sequences

- Tag distributions are Zipfian (a few very common tag sequences, an endless number of rare tag sequences)
- More (training) data is better data
- Unseen tag sequences will be especially common in trigram-based and higher-order models
- The trivial solution (0 probability) is usually undesirable, since it implies that any path going through the unseen sequence will have 0 probability

### Linear interpolation

- With n-grams, we can do something smarter than simple smoothing, i.e., approximate the probability of a longer sequence ending in $t_i$ by a weighted combination of shorter sequences ending in $t_i$:
  $$P(t_i|t_{i-2}, t_{i-1}) \approx \lambda_1 \hat{P}(t_i|t_{i-2}, t_{i-1}) + \lambda_2 \hat{P}(t_i|t_{i-1}) + \lambda_3 \hat{P}(t_i)$$
- E.g.:
  $$P(\text{VVP}|\text{PNP}, \text{AUX}) \approx \lambda_1 \hat{P}(\text{VVP}|\text{PNP}, \text{AUX}) + \lambda_2 \hat{P}(\text{VVP}|\text{AUX}) + \lambda_3 \hat{P}(\text{VVP})$$

### Linear interpolation: Brants' TnT approach

- How do we estimate the $\lambda$s?
- General idea: use held-out trigrams from the training corpus to see which of the 3 terms would be best at predicting $t_i$, and reward it by increasing its $\lambda$

### Unknown words

- Estimating $P(w_i|t_i)$ requires the corpus frequency of specific words with at least some tags, but no training corpus will contain all words (Zipf's law; and think of technical terms, loanwords, neologisms, proper nouns, brand names...)
- Suffix analysis method: $P(t|\textit{strapparavizing})$ is estimated with a combination of $\hat{P}(t|\ldots\textit{vizing})$, $\hat{P}(t|\ldots\textit{izing})$, ..., $\hat{P}(t|\ldots\textit{g})$
- $P(\textit{strapparavizing}|t)$ can be derived from $P(t|\textit{strapparavizing})$ with Bayes' law ($P(\textit{strapparavizing})$ will be constant across tags and decodings):
  $$P(\textit{strapparavizing}|t) = \frac{P(t|\textit{strapparavizing})\, P(\textit{strapparavizing})}{P(t)}$$

### Advantages of HMM tagging

- Nice and clean probabilistic model
- Easy to integrate with other probabilistic models, e.g., probabilistic semantic models (Topic Models)
- Training and tagging are simple and efficient
- With appropriate tuning, still at the state of the art
- Mathematically well-understood (but empirically disappointing) approach to unsupervised or semi-supervised learning (Expectation Maximization algorithm)

### Problems with HMM tagging

- Difficult to integrate many "incidental" cues and occasional long-distance dependencies in the model
- Tagging *A. Smith & Co.* should be easy
- Some rules we might want to assign a high probability to:
  - If the word after next is *Co.* and the current word is capitalized, the current word is a proper noun
  - If the current word is capitalized and ends in a period, the current word is a proper noun

### Problems with HMM tagging: capitalized and ending in a period

- To capture properties of words such as "being capitalized", we need to decompose $P(w_i|t_i)$ into $P(f_{i1}, f_{i2}, \ldots, f_{ik}|t_i)$, where the $f$s are $k$ features characterizing a word
- We then need a full-fledged model of these features, and data to estimate them, although in most cases the only thing we will care about is the word's identity (a feature like: word is *dog*, word is *cat*...)
- To capture the rule, we should break down $P(f_{i1}, f_{i2}, \ldots, f_{ik}|t_i)$ into a form that takes dependencies between features into account (in this case, the capitalization feature and the "last character of the word" feature)
- This is very complicated, and it leads to models that are very difficult to estimate from the data
- Again: we are interested in the particular case of words that are capitalized and end in a period; we do not need/want a full-fledged model of the interaction between capitalization and characters in various positions of the word

### Problems with HMM tagging: the word after next is *Co.*

- The only way to capture the relation with the word after next is to move up the Markov scale to a trigram model, replacing every $P(t_i|t_{i-1})$ with $P(t_i|t_{i-2}, t_{i-1})$

### From generative to discriminative models

- (First-order) HMM taggers model the probability of a tag in a certain context indirectly, by modeling the generation of tags from previous tags and of words from tags:
  $$P(t_i|t_1, \ldots, t_{i-1}, w_1, \ldots, w_n) \approx P(w_i|t_i)\, P(t_i|t_{i-1})$$
- Discriminative models focus on modeling the probability of a tag in a certain context directly
- In particular, Maximum Entropy Markov Model (MEMM) taggers (Ratnaparkhi, 1996) estimate terms like the following directly from the data:
  $$P(t_i|t_1, \ldots, t_{i-1}, w_1, \ldots, w_n) \approx P(t_i|t_{i-1}, w_1, \ldots, w_n)$$

### HMM vs. MEMM

[Figure: HMM vs. MEMM]

### Representing context in MEMM tagging

- In a (first-order) MEMM tagger, we estimate the probability of $t_i$ given $t_1, \ldots, t_{i-1}, w_1, \ldots, w_n$ in terms of the preceding tag and all the words in the sentence:
  $$P(t_i|t_1, \ldots, t_{i-1}, w_1, \ldots, w_n) \approx P(t_i|t_{i-1}, w_1, \ldots, w_n)$$
- The conditioning context $(t_{i-1}, w_1, \ldots, w_n)$ is described by checking whether a set of statements (such as "previous tag is V and next word is a period") apply to it
- If we use $c_i$ to represent the context $t_{i-1}, w_1, \ldots, w_n$, have a set of $k$ statements $s_1, s_2, \ldots, s_k$, and assume a $\mathit{true}(c, s)$ function returning 1 if $s$ is true of $c$ and 0 otherwise, our model becomes:
  $$P(t|c_i) = P(t|\mathit{true}(c_i, s_1), \mathit{true}(c_i, s_2), \ldots, \mathit{true}(c_i, s_k))$$
- We call the binary $\mathit{true}(c, s)$ function applied to $c_i$ and $s_j$ a feature, $f_j(c_i)$:
  $$P(t|c_i) = P(t|\mathit{true}(c_i, s_1), \ldots, \mathit{true}(c_i, s_k)) = P(t|f_1(c_i), f_2(c_i), \ldots, f_k(c_i))$$
- In the language of regression, the 0/1-valued $f$s are indicator variables

### Computing $P(t|c_i)$

- Given $P(t|c_i) = P(t|f_1(c_i), f_2(c_i), \ldots, f_k(c_i))$, instead of inverting and trying to compute $P(f_1(c_i), f_2(c_i), \ldots, f_k(c_i)|t)$, we compute $P(t|f_1(c_i), \ldots, f_k(c_i))$ directly as a function of a linear combination of the $k$ features:
  $$P(t|c_i) = \frac{1}{Z_{c_i}} \exp\left(\sum_{j=1}^{k} \lambda_j f_j(c_i)\right)$$
- If the relevant statement does not apply to $c_i$, then $f_j(c_i) = 0$, and thus the corresponding $\lambda$ weight will not contribute to the computation of $P(t|c_i)$
- The normalizing constant $Z_{c_i}$ ensures that $P(t|c_i)$ is part of a well-formed probability distribution across all possible tags: $\sum_j P(t_j|c_i) = 1$
- A different $Z_{c_i}$ is needed for each set of contexts that lead to the same setting of the $k$ values $f_j(c)$ (we need as many $Z$s as there are possible combinations of $f$ values)
- The presence of the exponential is motivated by statistical modeling reasons (making sure that we have a well-formed probability distribution, and that we are able to estimate the weights)
- Note that we are not modeling the context-derived features probabilistically: we determine the distribution of the $t$s given a certain setting of the values of the binary features

### Feature examples

- Tag to the left is PUN:
  $$f_{31}(c_i) = \begin{cases} 1 & \text{if } t_{i-1} = \text{PUN} \\ 0 & \text{otherwise} \end{cases}$$
- Current word is capitalized and the word after next is *Co.*:
  $$f_{100}(c_i) = \begin{cases} 1 & \text{if } \mathit{upper}(\mathit{word}_i) \;\&\; \mathit{word}_{i+2} = \text{Co.} \\ 0 & \text{otherwise} \end{cases}$$
- Current word is capitalized and ends in a period:
  $$f_{104}(c_i) = \begin{cases} 1 & \text{if } \mathit{upper}(\mathit{word}_i) \;\&\; \mathit{lastchar}(\mathit{word}_i) = \text{.} \\ 0 & \text{otherwise} \end{cases}$$
- Previous tag is NN1 and the current suffix is *-izes*:
  $$f_{231}(c_i) = \begin{cases} 1 & \text{if } t_{i-1} = \text{NN1} \;\&\; \mathit{suff}(\mathit{word}_i) = \text{izes} \\ 0 & \text{otherwise} \end{cases}$$

### Features

- Typically, lots of features are thrown at the model; automatically tuned weights play an important role in determining the importance of features
- With many features, it is also important that the weight tuning algorithm is not prone to overfitting
- In Maximum Entropy and related models, composite features (those with & in the feature examples) are determined by hand
- In Support Vector Machines and related models, feature conjunctions are automatically explored by the model

### Estimating a MEMM model

- Finding good values for the $\lambda$s is obviously more complicated (and a lot less efficient) than HMM parameter estimation from training data
- From a statistical perspective, MEMM taggers are a special case of multinomial logistic regression (the "Markov" part pertains to the assumptions about the relevant context; the $P(t|c)$ model itself is vanilla multinomial logistic regression)
- Thus, we can use standard methods for the estimation of generalized linear models, based on maximizing the likelihood of the corpus
- There is no analytical solution to this problem; numerical methods must be employed

### Decoding

- Given the MEMM formulation, Viterbi decoding is possible for these taggers as well
- In practice, the MEMM taggers I tried tend to be slower and more brittle than HMM taggers, not only in training but also in tagging (issues with the normalization terms?)
- A feature extraction component is needed for training and tagging
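The MEMM probability $P(t|c_i)$ above can be sketched as a small multinomial logistic regression scorer. The slide formula leaves the dependence on the tag implicit; in this sketch, as in standard multinomial logistic regression, each weight is attached to a (feature, tag) pair, written $\lambda_{j,t}$, so that $Z_{c_i} = \sum_{t'} \exp(\sum_j \lambda_{j,t'} f_j(c_i))$. The feature functions mirror $f_{31}$, $f_{100}$, $f_{104}$ and $f_{231}$ from the feature examples, but the weights, the tag list, and the names `memm_distribution` and `FEATURES` are hypothetical; real weights would come from numerical likelihood maximization.

```python
import math


def is_capitalized(word):
    return word[:1].isupper()


# Indicator features f_j(c) over a context c = {"words": [...], "i": position, "prev_tag": tag},
# mirroring f31, f100, f104 and f231 from the feature examples.
FEATURES = {
    "f31": lambda c: int(c["prev_tag"] == "PUN"),
    "f100": lambda c: int(is_capitalized(c["words"][c["i"]])
                          and c["i"] + 2 < len(c["words"])
                          and c["words"][c["i"] + 2] == "Co."),
    "f104": lambda c: int(is_capitalized(c["words"][c["i"]])
                          and c["words"][c["i"]].endswith(".")),
    "f231": lambda c: int(c["prev_tag"] == "NN1"
                          and c["words"][c["i"]].endswith("izes")),
}


def memm_distribution(context, tags, features, weights):
    """Return {t: P(t | c)} with P(t | c) = exp(sum_j lambda_{j,t} * f_j(c)) / Z_c.

    weights maps (feature name, tag) to lambda; missing pairs count as 0.
    """
    active = [name for name, f in features.items() if f(context) == 1]
    # features with f_j(c) = 0 contribute nothing, so only active ones are summed
    scores = {t: sum(weights.get((name, t), 0.0) for name in active) for t in tags}
    top = max(scores.values())  # shift by the max score to avoid overflow in exp
    exps = {t: math.exp(s - top) for t, s in scores.items()}
    z = sum(exps.values())  # equals Z_c * exp(-top); the shift cancels in the ratio
    return {t: e / z for t, e in exps.items()}


TAGS = ["NP0", "NN1", "VVZ"]  # BNC tags: proper noun, singular noun, -s verb form
WEIGHTS = {  # hypothetical values; real ones come from likelihood maximization
    ("f100", "NP0"): 2.0, ("f104", "NP0"): 2.0,
    ("f31", "NN1"): 0.5, ("f231", "VVZ"): 1.5,
}
words = ["A.", "Smith", "&", "Co."]
dist = memm_distribution({"words": words, "i": 1, "prev_tag": "NP0"}, TAGS, FEATURES, WEIGHTS)
print(max(dist, key=dist.get))  # NP0
```

For the first word *A.* (previous tag PUN), the active features are f31 and f104, and with these hypothetical weights NP0 again gets the highest probability; a context where no feature fires gets a uniform distribution, since all scores are 0.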
## Current themes in POS tagging

### Making use of unlabeled data: unsupervised

- Manual tagging is slow and requires skilled labour
- There is a lot of unannotated language data out there
- Can we use them to obtain a reasonably good tagger without manually annotated data (unsupervised learning)?
- The current answer is: not really
- (There is also an evaluation problem: if you really do not have manually labeled training data, you do not have manually labeled test data, either!)
- A recent state-of-the-art evaluation: C. Christodoulopoulos, S. Goldwater and M. Steedman. Two decades of unsupervised POS induction: How far have we come? EMNLP 2010

### Making use of unlabeled data: semi-supervised

- There is a lot of unannotated language data out there
- Can we combine them with annotated data to improve tagger performance (semi-supervised or partially supervised learning)?
- The current answer is: not really...
- ...despite the fact that systems combining annotated and raw data were successful in other fields of computational linguistics, e.g., Word Sense Disambiguation (see Abney's 2008 book *Semisupervised Learning for Computational Linguistics*)
- A recent attempt (with pointers to earlier work): A. Søgaard. Simple semi-supervised training of part-of-speech taggers. ACL 2010

### Self training

- Semi-supervised learning in its simplest form:
  - Start with a regular POS tagger trained on (small amounts of) labeled data
  - Annotate more text
  - Retrain the tagger using both the original manually labeled data and the newly tagged text
- Why should it work?
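The self-training recipe above can be sketched in a few lines. To keep the example self-contained, the "regular POS tagger" is replaced by a deliberately trivial, hypothetical most-frequent-tag tagger (`train_most_frequent_tag`); in practice one would plug in a real trainer, such as an HMM tagger. The sketch shows the mechanics only and makes no claim about whether retraining actually helps.

```python
from collections import Counter, defaultdict


def train_most_frequent_tag(tagged_sents):
    """Hypothetical stand-in tagger: known words get their most frequent training tag,
    unknown words get the most frequent tag overall."""
    by_word = defaultdict(Counter)
    overall = Counter()
    for sent in tagged_sents:
        for word, tag in sent:
            by_word[word][tag] += 1
            overall[tag] += 1
    lexicon = {w: counts.most_common(1)[0][0] for w, counts in by_word.items()}
    default = overall.most_common(1)[0][0]
    return lambda words: [(w, lexicon.get(w, default)) for w in words]


def self_train(labeled, unlabeled, train=train_most_frequent_tag, rounds=1):
    """Self-training: train on labeled data, tag raw text, retrain on both."""
    tagger = train(labeled)
    for _ in range(rounds):
        auto_tagged = [tagger(sent) for sent in unlabeled]  # the tagger's own output
        tagger = train(labeled + auto_tagged)               # manual + automatic data
    return tagger


labeled = [
    [("the", "AT0"), ("dog", "NN1"), ("barks", "VVZ")],
    [("the", "AT0"), ("cat", "NN1")],
    [("food", "NN1")],
]
unlabeled = [["the", "bird", "sings"]]
tagger = self_train(labeled, unlabeled)
print(tagger(["the", "bird", "sings"]))  # [('the', 'AT0'), ('bird', 'NN1'), ('sings', 'NN1')]
```

In this toy run, the unknown word *sings* gets the default tag NN1 in the first pass, and retraining then adds that error to the lexicon, which shows why "Why should it work?" is a fair question.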
Lemmatization Practical issues in POS tagging A solved problem? I Modern POS taggers reach accuracies just above 97% for English, close to that for other major European languages I Vanilla POS tagging is probably not an area you should invest in if you want to become rich and famous Some (related) topics that are still worth pursuing: I I I I Unsupervised, semi-supervised Fast language, domain adaptation Handling rare words, rare constructions Manning’s error analysis A solved problem? I Chris Manning. Part-of-Speech Tagging from 97% to 100%: Is it time for some linguistics? Proceedings of CICLing 2011 I 97.3% word-level accuracy corresponds to approximately 56% sentence accuracy I That is: almost half of the sentences tagged with the best POS tagger for English contain a tagging mistake! I Analysis of 100 errors of state-of-the-art Stanford tagger trained and tested on widely used WSJ-based corpus (Penn Treebank) Manning’s error analysis Lexicon gap word occurs only with different tag in training set Unknown word word never occurs in training data Could get right no clear reason for error Lexicon gap 4.5% Difficult linguistics broader contextual knowledge than what the tagger can access (e.g., set as present or past) Unknown word 4.5% Underspecified/unclear it is not clear what the right tag should be (e.g., is discontinued adjective or past participle in against discontinued operations?) Difficult linguistics 19.5% Inconsistent training data training set has different tags for same word in same context (e.g., ’30s tagged as plural noun or as cardinal number) Errors in training data gold standard tag is wrong! 
(e.g., newsweekly tagged as adverb) Could get right 16.0% Underspecified/unclear 12.0% Inconsistent training data 28.0% Errors in training data 15.5% Outline Lemmatization Preamble: Probability Theory in less than 10 slides Introduction I The task of lemmatization, i.e., assigning a “dictionary form” to each input word, is typically associated with POS tagging I Once a word receives a POS, if the word is in dictionary, lemmatization is simply a matter of dictionary lookup I Attracted much less attention than POS tagging (because of English relatively poor inflectional morphology?) Linguistic issues in POS tagging Inducing POS taggers from data Current themes in POS tagging Lemmatization Practical issues in POS tagging The lemmatization task The lemmatization task The/ART led/NOUN is/AUX blinking/VERB:ing This/PRON led/VER to/PREP distress/NOUN I I The/ART/the led/NOUN/led is/AUX/are blinking/VERB:ing/blink This/PRON/this led/VER/lead to/PREP/to distress/NOUN/distress Typically, not a full morphological analysis, and no morphological segmentation (e.g., no blinking → blink+ing) Useful especially in languages with richer inflectional morphology (e.g., try extracting verb+noun collocations in Italian without lemmatization!) Dictionary and guessing rules Lemma guessing Our method I Dictionary-based lemmatization (as implemented, e.g., in the TreeTagger) will only take you so far I Out-of-dictionary words are not a random set, and they are often the tokens of most interest: technical terms, derived morphological forms, neologism, proper nouns... I I Run TreeTagger on corpus I Extract distinct word POS lemma tuples (types, not tokens), e.g.: departments NOUN department containment NOUN containment Extract suffix-based word-to-stem mapping rules such as: artments NOUN artment rtments NOUN rtment ... ... ... 
  - s NOUN 0 (0 stands for the empty string: just strip the final s)
- Collect the frequency of each rule in the list created in this way
- Apply the rules to unknown words, selecting the longest applicable suffix, and the most frequent rule in case of ties

It is a good idea to supplement dictionary-based lemmatization with a lemma guesser module.

Unsupervised/semi-supervised morphological analysis
- By now, there is a relatively large literature
- See, e.g., the MorphoChallenge competition: http://research.ics.aalto.fi/events/morphochallenge

POS tagging in practice
- If you are moderately lucky, you can use a pre-trained tagger out of the box
- If you are not, you might need to adapt or train a tagger
- You almost certainly do not need to write a tagger from scratch!

Picking a tagger
- If you are lucky, a Google search will return one or more freely available pre-trained taggers for the language you are interested in
- For some languages, such as Chinese and Japanese, you are more likely to find tools called "morphological analyzers" that also do tokenization and tagging
- If there is a choice, which tagger should you use? Some criteria:
  - Performance (less important than you might think)
  - Robustness
  - Tagset
  - Does the tagger do tokenization, lemmatization?
  - Is the tagger available for other languages as well?

Performance in English
- Modern English taggers reach accuracies between 96% and slightly above 97% on the WSJ corpus with its 45-tag tagset (Jurafsky and Martin)
- There is no huge difference among models; one that is sometimes reported as the best for English (97.24% accuracy) is a Maximum Entropy-like model that pays particular attention to unknown-word handling:
  - K. Toutanova, D. Klein, Ch. Manning and Y. Singer (2003). Feature-rich part-of-speech tagging with a cyclic dependency network. Proceedings of HLT-NAACL 2003, 252-259.
- This model (or some close relative) should be available as the Stanford LogLinear tagger: http://nlp.stanford.edu/software/tagger.shtml
- Performance for other European languages is at similar levels
  - See the EVALITA 2007 and 2009 POS tagging tasks for Italian

Robustness
- In my experience, the Stanford LogLinear tagger has a strong penchant for dying on any form of anomalous text
- On the other hand, the good old TreeTagger has never died on me, and on standard machines it can tag billions of tokens in half a day
- Whether the tagger is actually going to tag your text from beginning to end, and in decent time, is more important than its reported performance!

Use the TreeTagger if you can!
- http://www.ims.uni-stuttgart.de/projekte/corplex/TreeTagger/
- HMM model that uses decision trees to merge transition probabilities with similar histories, to avoid data sparseness
- Robust, fast
- Performance still at the state of the art (see EVALITA results)
- Works out of the box on Linux, Mac, Windows, Sun
- Freely available parameter files for English, German, Italian, Dutch, Swahili, Spanish, Bulgarian, Russian, Greek, Chinese, Portuguese, Galician, Estonian, French and Old French
- Easy to train
- It also does tokenization and lemmatization

(Pre-trained) tagging and tokenization
- If the tagger does not perform its own tokenization, make sure that you produce the sort of normalization that it expects
  - If the tagger expects do n’t and you produce don’ t, there will be trouble!
- This is one of the reasons why, IMHO, tokenization should follow the most trivial principles
  - I should not have to worry about whether you think that out of is one or two words

Training a tagger
Why train a tagger?
- Because there is no pre-trained tagger for the language you work on
- Because the tagset used by available taggers is too different from what you need (consider writing a mapping program instead)
- Because the texts you want to work on are very different from the ones existing taggers were trained on

Choosing a tagset
- A huge tagset will require a huge training set: if you need detailed morphological information, consider a two-stage procedure in which you assign coarse tags with the tagger, and more granular morphological information with a morphological analyzer
- Do not follow tradition, look at theoretical linguistics:
  - The "standard" Italian EAGLES set fails to recognize the difference between full and clitic pronouns, which have totally distinct distributions
  - At the same time, it distinguishes between a "pronoun" che and a "conjunction" che, whereas syntacticians have treated them as the same thing (a complementizer) for decades now
- On the other hand, you should also keep other users in mind: it is a pity if nobody else can use your tagger because they do not understand your tags

Choosing source texts
- On the one hand, the more your source text looks like your target text, the better the tagging performance will be
- On the other, the more varied the texts you tag, the more "general-purpose" the tagger will be
- The times they are a-changin’: we are in dire need of modern manually tagged texts: web pages, blogs, e-mails...
- Consider the issue of redistribution: if possible, tag texts distributed under a Creative Commons or similar license

How much text?
- The more the better
- EVALITA results and my own experience with the la Repubblica corpus suggest that you can get state-of-the-art performance with about 100K tokens
- Can you get away with less?

Correcting is better than annotating from scratch
- Can you start from the output of an existing tagger? Perhaps from tags assigned by a lexicon? Use a tagger from a cognate language??
- Iterative annotation procedure (assuming you can start with a certain amount of annotated data):
  - Train the tagger on all manually annotated tokens available at this point
  - Run it to tag another X tokens
  - Correct the annotation of the newly processed tokens
  - Repeat
- Active learning methods try to automatically identify the most useful training data to tag next

Training, testing, tagging
- Training existing taggers will typically be trivial
- Keep separate test data (or use cross-validation) to get a realistic estimate of performance
- However, at the very end, remember to use all annotated data to train the tagger

Developing a lexicon

A morphological/lemma lexicon typically has the format:

| inflected form | POS | lemma |
|---|---|---|
| dogs | NOUN | dog |
| dogs | VER:fin | dog |
| ... | ... | ... |
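To make the lexicon format concrete, here is a minimal Python sketch of dictionary-based lemmatization over such a lexicon, backed by a suffix-rule lemma guesser for out-of-lexicon words along the lines of the lemma-guessing method described in the lemmatization part of this section. It is an illustration under stated assumptions, not the TreeTagger's or the author's implementation: the function names are hypothetical, lexicon lines are assumed to be whitespace-separated `form POS lemma` triples, the guessing rules are derived from the lexicon entries themselves (rather than from TreeTagger output on a corpus), and the range of suffix lengths per word is my own choice.

```python
from collections import Counter, defaultdict


def load_lexicon(lines):
    """Parse 'form POS lemma' lines into a {(form, pos): lemma} dict.

    Assumes one whitespace-separated entry per line. A form may have several
    analyses with different POS tags (dogs NOUN / dogs VER:fin); if the same
    (form, POS) pair appears twice, the last lemma wins.
    """
    lexicon = {}
    for line in lines:
        fields = line.split()
        if len(fields) != 3:
            continue  # skip blank or malformed lines
        form, pos, lemma = fields
        lexicon[(form, pos)] = lemma
    return lexicon


def common_prefix_len(a, b):
    """Length of the longest common prefix of strings a and b."""
    n = 0
    while n < min(len(a), len(b)) and a[n] == b[n]:
        n += 1
    return n


def extract_rules(entries):
    """Count suffix rules (word suffix, POS) -> lemma suffix over distinct types.

    departments/NOUN/department yields s -> '', ts -> t, ..., artments -> artment,
    and so on; the empty string plays the role of the slide's 0.
    """
    rules = defaultdict(Counter)
    for word, pos, lemma in dict.fromkeys(entries):  # types, not tokens; keeps order
        shared = common_prefix_len(word, lemma)
        # Assumption: suffix lengths run from the shortest one that covers the
        # word/lemma difference up to len(word) - 1 (at least one stem char kept).
        for k in range(max(1, len(word) - shared), len(word)):
            cut = len(word) - k
            rules[(word[cut:], pos)][lemma[cut:]] += 1
    return rules


def guess_lemma(word, pos, rules):
    """Apply the longest applicable suffix rule; the most frequent one wins ties."""
    for k in range(len(word) - 1, 0, -1):  # longest suffix first
        candidates = rules.get((word[-k:], pos))
        if candidates:
            replacement, _count = candidates.most_common(1)[0]
            return word[:-k] + replacement
    return word  # no rule applies: fall back to the word form itself


def lemmatize(word, pos, lexicon, rules):
    """Dictionary lookup first; lemma guesser only for out-of-lexicon words."""
    if (word, pos) in lexicon:
        return lexicon[(word, pos)]
    return guess_lemma(word, pos, rules)


# Usage with a toy lexicon (hypothetical entries in the format above).
lexicon = load_lexicon([
    "departments NOUN department",
    "apartments NOUN apartment",
    "containment NOUN containment",
    "cats NOUN cat",
    "glasses NOUN glass",
    "dogs NOUN dog",
    "dogs VER:fin dog",
])
rules = extract_rules((form, pos, lemma) for (form, pos), lemma in lexicon.items())
print(lemmatize("dogs", "VER:fin", lexicon, rules))       # dog (lexicon lookup)
print(lemmatize("compartments", "NOUN", lexicon, rules))  # compartment (guessed)
print(lemmatize("classes", "NOUN", lexicon, rules))       # class (guessed)
```

Keying rules on (suffix, POS) means the guesser only proposes lemmas consistent with the tag already assigned, which is why lemmatization is usually run after tagging.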
- The larger the lexicon, the better the performance of the tagger (fewer unknown words)
- The lexicon is easiest to bootstrap by running an existing tagger/lemmatizer (e.g., the TreeTagger) on unannotated data
- Some forms can be generated by rule (e.g., verbal and adjectival morphology)
- Sometimes it is better to remove uncommon analyses of common words (e.g., having dogs as a verb in the lexicon might actually harm performance, causing mistaggings of the much more frequent nominal analysis)
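The last point can be sketched as a simple frequency filter. This is a hypothetical illustration: the slide does not say how "uncommon" should be measured, so the function name `prune_rare_analyses`, the use of (form, POS) token counts from a tagged corpus, and both thresholds are assumptions chosen only to show the idea.

```python
from collections import Counter


def prune_rare_analyses(lexicon, tag_counts, min_form_freq=1000, min_share=0.01):
    """Drop rare POS analyses of frequent word forms from a lexicon.

    lexicon:    {(form, pos): lemma}
    tag_counts: Counter of (form, pos) token counts, e.g. from a tagged corpus
    An analysis is removed only if the form is frequent (at least
    min_form_freq tokens) and the analysis accounts for less than min_share
    of those tokens. Both thresholds are illustrative, not tuned values.
    """
    form_freq = Counter()
    for (form, _pos), n in tag_counts.items():
        form_freq[form] += n

    pruned = {}
    for (form, pos), lemma in lexicon.items():
        total = form_freq[form]
        share = tag_counts[(form, pos)] / total if total else 0.0
        if total >= min_form_freq and share < min_share:
            continue  # uncommon analysis of a common word: leave it out
        pruned[(form, pos)] = lemma
    return pruned


lexicon = {
    ("dogs", "NOUN"): "dog",
    ("dogs", "VER:fin"): "dog",
    ("barks", "NOUN"): "bark",
    ("barks", "VER:fin"): "bark",
}
# Hypothetical corpus counts: dogs is frequent and almost always a noun.
tag_counts = Counter({
    ("dogs", "NOUN"): 1990,
    ("dogs", "VER:fin"): 10,
    ("barks", "NOUN"): 3,
    ("barks", "VER:fin"): 5,
})
pruned = prune_rare_analyses(lexicon, tag_counts)
print(sorted(pruned))
# [('barks', 'NOUN'), ('barks', 'VER:fin'), ('dogs', 'NOUN')]
```

Requiring the form itself to be frequent keeps rare words like barks untouched: with only a handful of occurrences, a low share says little about whether an analysis is genuinely uncommon.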