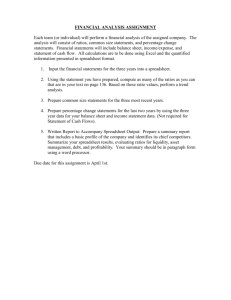

Spreadsheet Auditing
