Reading 6.3

Causes of Conflict

Source: Module 28 (pages 355-368) of Myers, D. G. (2012).

Exploring social psychology (6th ed.). New York: McGraw-Hill.

Social Psychology

Professor Scott Plous

Wesleyan University

Note: This reading material is being provided free of charge to Coursera

students through the generosity of David G. Myers and McGraw-Hill, all

rights reserved. Unauthorized duplication, sales, or distribution of this

work is strictly forbidden and makes it less likely that publishers and

authors will freely share materials in the future, so please respect the

spirit of open education by limiting your use to this course.

MODULE 28

Causes of Conflict

There is a speech that has been spoken in many languages by the

leaders of many countries. It goes like this: “The intentions of our

country are entirely peaceful. Yet, we are also aware that other

nations, with their new weapons, threaten us. Thus we must defend

ourselves against attack. By so doing, we shall protect our way of life

and preserve the peace” (Richardson, 1960). Almost every nation claims

concern only for peace but, mistrusting other nations, arms itself in self-defense. The result is a world that has been spending $2 billion per day

on arms and armies while hundreds of millions die of malnutrition and

untreated disease.

The elements of such conflict (a perceived incompatibility of actions

or goals) are similar at many levels: conflict between nations in an arms

race, between religious factions disputing points of doctrine, between

corporate executives and workers disputing salaries, and between bickering spouses. Let’s consider these conflict elements.

SOCIAL DILEMMAS

Several of the problems that most threaten our human future—nuclear

arms, climate change, overpopulation, natural-resource depletion—arise

as various parties pursue their self-interests, ironically, to their collective

detriment. One individual may think, “It would cost me a lot to buy

expensive greenhouse emission controls. Besides, the greenhouse gases

I personally generate are trivial.” Many others reason similarly, and the

result is a warming climate, rising seas, and more extreme weather.


PART FOUR SOCIAL RELATIONS

Thus, choices that are individually rewarding become collectively

punishing. We therefore have a dilemma: How can we reconcile individual self-interest with communal well-being?

To isolate and study that dilemma, social psychologists have used

laboratory games that expose the heart of many real social conflicts.

“Social psychologists who study conflict are in much the same position

as the astronomers,” noted conflict researcher Morton Deutsch (1999).

“We cannot conduct true experiments with large-scale social events. But

we can identify the conceptual similarities between the large scale and

the small, as the astronomers have between the planets and Newton’s

apple. That is why the games people play as subjects in our laboratory

may advance our understanding of war, peace, and social justice.”

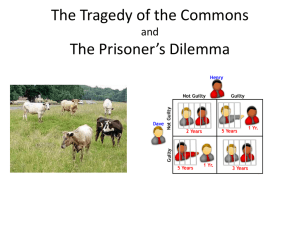

Let’s consider two laboratory games that are each an example of a

social trap: the Prisoner’s Dilemma and the Tragedy of the Commons.

The Prisoner’s Dilemma

This dilemma derives from an anecdote concerning two suspects being

questioned separately by a district attorney (DA) (Rapoport, 1960). The

DA knows they are jointly guilty but has only enough evidence to convict them of a lesser offense. So the DA creates an incentive for each one

to confess privately:


• If Prisoner A confesses and Prisoner B doesn’t, the DA will

grant immunity to A, and will use A’s confession to convict B

of a maximum offense (and vice versa if B confesses and A

doesn’t).

• If both confess, each will receive a moderate sentence.

• If neither prisoner confesses, each will be convicted of a lesser

crime and receive a light sentence.


The matrix of Figure 28-1 summarizes the choices. If you were a prisoner

faced with such a dilemma, with no chance to talk to the other prisoner,

would you confess?

Many people say they would confess to be granted immunity, even

though mutual nonconfession elicits lighter sentences than mutual confession. Perhaps this is because (as shown in the Figure 28-1 matrix) no

matter what the other prisoner decides, each is better off confessing than staying silent. If the other also confesses, the sentence is

moderate rather than severe. If the other does not confess, one goes free.
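The dominance reasoning above can be checked mechanically. The short Python sketch below encodes the sentences from Figure 28-1, with A's years listed first. For each possible choice by B, it asks which choice minimizes A's sentence. The dictionary layout and the function names are illustrative assumptions, not part of the original studies.

```python
# Years in prison from Figure 28-1, keyed by (A's choice, B's choice) -> (A's years, B's years).
SENTENCES = {
    ("confess", "confess"): (5, 5),
    ("confess", "stay silent"): (0, 10),
    ("stay silent", "confess"): (10, 0),
    ("stay silent", "stay silent"): (1, 1),
}
CHOICES = ("confess", "stay silent")

def years_for_a(a_choice: str, b_choice: str) -> int:
    """A's sentence for a given pair of choices."""
    return SENTENCES[(a_choice, b_choice)][0]

def best_reply_for_a(b_choice: str) -> str:
    """A's sentence-minimizing choice, taking B's choice as fixed."""
    return min(CHOICES, key=lambda a_choice: years_for_a(a_choice, b_choice))

for b_choice in CHOICES:
    print(f"If B chooses to {b_choice}, A does best to {best_reply_for_a(b_choice)}.")

# Combined years served by the pair under each symmetric outcome.
both_confess = sum(SENTENCES[("confess", "confess")])
both_silent = sum(SENTENCES[("stay silent", "stay silent")])
print(f"Both confess: {both_confess} years in total; both stay silent: {both_silent} years in total.")
```

The matrix is symmetric, so the same check applies to B. Confessing is each prisoner's best reply whatever the other does. Yet mutual confession costs the pair 10 years, against 2 years for mutual silence.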

In some 2,000 studies (Dawes, 1991), university students have faced

variations of the Prisoner’s Dilemma with the choices being to defect or

to cooperate, and the outcomes not being prison terms but chips, money,

or course points. On any given decision, a person is better off defecting (because such behavior exploits the other’s cooperation or protects against the other’s exploitation). However—and here’s the rub—by not cooperating, both parties end up far worse off than if they had trusted each other and thus had gained a joint profit. This dilemma often traps each one in a maddening predicament in which both realize they could mutually profit. But unable to communicate and mistrusting each other, they often become “locked in” to not cooperating.

| | Prisoner A confesses | Prisoner A doesn’t confess |
|---|---|---|
| Prisoner B confesses | A: 5 years; B: 5 years | A: 10 years; B: 0 years |
| Prisoner B doesn’t confess | A: 0 years; B: 10 years | A: 1 year; B: 1 year |

FIGURE 28-1 The classic Prisoner’s Dilemma. In each box, prisoner A’s outcome is listed first. Thus, if both prisoners confess, both get five years. If neither confesses, each gets a year. If one confesses, that prisoner is set free in exchange for evidence used to convict the other of a crime bringing a 10-year sentence. If you were one of the prisoners, unable to communicate with your fellow prisoner, would you confess?

Punishing another’s lack of cooperation might seem like a smart

strategy, but in the laboratory it can have counterproductive effects

(Dreber & others, 2008). Punishment typically triggers retaliation, which

means that those who punish tend to escalate conflict, worsening their

outcomes, while nice guys finish first. What punishers see as a defensive

reaction, recipients see as an aggressive escalation (Anderson & others,

2008). When hitting back, they may hit harder while seeing themselves

as merely returning tit for tat. In one experiment, London volunteers

used a mechanical device to press back on another’s finger after receiving pressure on their own. While seeking to reciprocate with the same


degree of pressure, they typically responded with 40 percent more force.

Thus, touches soon escalated to hard presses, much like a child saying

“I just touched him, and then he hit me!” (Shergill & others, 2003).
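The finger-press finding implies compounding. If each reply overshoots the pressure just received by about 40 percent, force grows geometrically. The sketch below assumes, for illustration only, that the same 40 percent overshoot holds on every turn. The opening force of 1.0, the number of exchanges, and the function name `escalate` are arbitrary choices.

```python
# Hypothetical model: every reply is a fixed fraction harder than the press just received.
OVERSHOOT = 1.40  # about 40 percent more force per reply, as reported in the text

def escalate(opening_force: float, exchanges: int, overshoot: float = OVERSHOOT) -> list[float]:
    """Return the opening press followed by the force of each successive reply."""
    forces = [opening_force]
    for _ in range(exchanges):
        forces.append(forces[-1] * overshoot)
    return forces

forces = escalate(opening_force=1.0, exchanges=8)  # units are arbitrary
for turn, force in enumerate(forces):
    print(f"press {turn}: {force:.2f} times the opening touch")
```

Under this simplification, eight exchanges turn a light touch into a press roughly 15 times as hard, even though each participant believes they are only matching what they received.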

The Tragedy of the Commons

Many social dilemmas involve more than two parties. Global warming

stems from deforestation and from the carbon dioxide emitted by cars,

furnaces, and coal-fired power plants. Each gas-guzzling SUV contributes infinitesimally to the problem, and the harm each does is diffused

over many people. To model such social predicaments, researchers have

developed laboratory dilemmas that involve multiple people.

A metaphor for the insidious nature of social dilemmas is what ecologist Garrett Hardin (1968) called the Tragedy of the Commons. He

derived the name from the centrally located grassy pasture in old English towns.

In today’s world the “commons” can be air, water, fish, cookies, or

any shared and limited resource. If all use the resource in moderation,

it may replenish itself as rapidly as it’s harvested. The grass will grow,

the fish will reproduce, and the cookie jar will be restocked. If not, there

occurs a tragedy of the commons. Imagine 100 farmers surrounding a

commons capable of sustaining 100 cows. When each grazes one cow,

the common feeding ground is optimally used. But then a farmer reasons, “If I put a second cow in the pasture, I’ll double my output, minus

the mere 1 percent overgrazing” and adds a second cow. So does each of

the other farmers. The inevitable result? The Tragedy of the Commons—

a mud field.
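The farmer's arithmetic can be made concrete with a toy model. The sketch assumes that each cow beyond the pasture's capacity of 100 lowers every cow's yield by 1 percent. Under that rule, one extra cow costs exactly the "mere 1 percent" the farmer mentions, and 100 extra cows exhaust the pasture. The linear yield rule and the function names are hypothetical illustrations, not Hardin's own model.

```python
CAPACITY = 100  # cows the commons can sustain (from the text)
FARMERS = 100   # farmers surrounding the commons (from the text)

def yield_per_cow(herd: int, capacity: int = CAPACITY) -> float:
    """Hypothetical linear rule: every cow beyond capacity cuts each cow's yield by 1/capacity."""
    overgrazing = max(0, herd - capacity) / capacity
    return max(0.0, 1.0 - overgrazing)

def farmer_output(my_cows: int, herd: int) -> float:
    """One farmer's output: their cows times the per-cow yield of the whole herd."""
    return my_cows * yield_per_cow(herd)

baseline = farmer_output(1, FARMERS)        # everyone grazes one cow: 100 cows
defector = farmer_output(2, FARMERS + 1)    # one farmer adds a second cow: 101 cows
everyone = farmer_output(2, 2 * FARMERS)    # every farmer adds a second cow: 200 cows

print(f"One cow each:        {baseline:.2f} per farmer")
print(f"Lone second cow:     {defector:.2f} for the defector")
print(f"Everyone adds a cow: {everyone:.2f} per farmer")
```

In this model, the lone defector's output nearly doubles (1.98 instead of 1.00), while each of the other 99 farmers loses only 1 percent. When every farmer makes the same individually sensible choice, each ends up with nothing.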

Likewise, environmental pollution is the sum of many minor pollutions, each of which benefits the individual polluters much more than

they could benefit themselves (and the environment) if they stopped

polluting. We litter public places—dorm lounges, parks, zoos—while

keeping our personal spaces clean. We deplete our natural resources

because the immediate personal benefits of, say, taking a long, hot

shower outweigh the seemingly inconsequential costs. Whalers knew

others would exploit the whales if they didn’t and that taking a few

whales would hardly diminish the species. Therein lies the tragedy.

Everybody’s business (conservation) becomes nobody’s business.

Is such individualism uniquely American? Kaori Sato (1987) gave

students in a more collective culture, Japan, opportunities to harvest—

for actual money—trees from a simulated forest. The students shared

equally the costs of planting the forest, and the results resembled those in

Western cultures. More than half the trees were harvested before they

had grown to the most profitable size.

Sato’s forest reminds me of our home’s cookie jar, which was

restocked once a week. What we should have done was conserve cookies


so that each day we could each enjoy two or three. But lacking regulation and fearing that other family members would soon deplete the

resource, what we actually did was maximize our individual cookie

consumption by downing one after the other. The result: Within

24 hours the cookie glut would end, the jar sitting empty for the rest

of the week.

The Prisoner’s Dilemma and the Tragedy of the Commons games have

several similar features. First, both games tempt people to explain their own

behavior situationally (“I had to protect myself against exploitation by my

opponent”) and to explain their partners’ behavior dispositionally (“she

was greedy,” “he was untrustworthy”). Most never realize that their counterparts are viewing them with the same fundamental attribution error

(Gifford & Hine, 1997; Hine & Gifford, 1996). People with self-inflating,

self-focused narcissistic tendencies are especially unlikely to empathize

with others’ perspectives (Campbell & others, 2005).

Second, motives often change. At first, people are eager to make some

easy money, then to minimize their losses, and finally to save face and

avoid defeat (Brockner & others, 1982; Teger, 1980). These shifting

motives are strikingly similar to the shifting motives during the buildup

of the 1960s Vietnam War. At first, President Johnson’s speeches expressed

concern for democracy, freedom, and justice. As the conflict escalated,

his concern became protecting America’s honor and avoiding the national

humiliation of losing a war. A similar shift occurred during the war in

Iraq, which was initially proposed as a response to supposed weapons

of mass destruction.

Third, most real-life conflicts, like the Prisoner’s Dilemma and the

Tragedy of the Commons, are non-zero-sum games. The two sides’ profits and losses need not add up to zero. Both can win; both can lose. Each

game pits the immediate interests of individuals against the well-being

of the group. Each is a diabolical social trap that shows how, even when

each individual behaves “rationally,” harm can result. No malicious person planned for the earth’s atmosphere to be warmed by a blanket of

carbon dioxide.

Not all self-serving behavior leads to collective doom. In a plentiful

commons—as in the world of the eighteenth-century capitalist economist

Adam Smith (1776, p. 18)—individuals who seek to maximize their own

profit may also give the community what it needs: “It is not from the

benevolence of the butcher, the brewer, or the baker, that we expect our

dinner,” he observed, “but from their regard to their own interest.”

Resolving Social Dilemmas

Faced with social traps, how can we induce people to cooperate for their

mutual betterment? Research with the laboratory dilemmas reveals several ways (Gifford & Hine, 1997).


Regulation

If taxes were entirely voluntary, how many would pay their full share?

Modern societies do not depend on charity to pay for schools, parks, and

social and military security. We also develop rules to safeguard our common good. Fishing and hunting have long been regulated by local

seasons and limits; at the global level, the International Whaling Commission sets an agreed-upon “harvest” that enables whales to regenerate.

Likewise, where fishing industries, such as the Alaskan halibut fishery,

have implemented “catch shares”—guaranteeing each fisher a percentage of each year’s allowable catch—competition and overfishing have

been greatly reduced (Costello & others, 2008).

Small Is Beautiful

There is another way to resolve social dilemmas: Make the group small.

In a small commons, each person feels more responsible and effective

(Kerr, 1989). As a group grows larger, people become more likely to

think, “I couldn’t have made a difference anyway”—a common excuse

for noncooperation (Kerr & Kaufman-Gilliland, 1997).

In small groups, people also feel more identified with a group’s success. Anything else that enhances group identity will also increase cooperation. Even just a few minutes of discussion or just believing that one

shares similarities with others in the group can increase “we feeling” and

cooperation (Brewer, 1987; Orbell & others, 1988). Residential stability

also strengthens communal identity and procommunity behavior, including even baseball game attendance independent of a team’s record (Oishi

& others, 2007).

On the Pacific Northwest island where I grew up, our small neighborhood shared a communal water supply. On hot summer days when

the reservoir ran low, a light came on, signaling our 15 families to conserve. Recognizing our responsibility to one another, and feeling that our

conservation really mattered, each of us conserved. Never did the reservoir run dry. In a much larger commons—say, a city—voluntary conservation is less successful.

Communication

To resolve a social dilemma, people must communicate. In the laboratory

as in real life, group communication sometimes degenerates into threats

and name-calling (Deutsch & Krauss, 1960). More often, communication

enables people to cooperate (Bornstein & others, 1988, 1989). Discussing

the dilemma forges a group identity, which enhances concern for everyone’s welfare. It devises group norms and consensus expectations and

puts pressure on members to follow them. Especially when people are

face-to-face, it enables them to commit themselves to cooperation (Bouas

& Komorita, 1996; Drolet & Morris, 2000; Kerr & others, 1994, 1997;

Pruitt, 1998).


Without communication, those who expect others not to cooperate

will usually refuse to cooperate themselves (Messé & Sivacek, 1979;

Pruitt & Kimmel, 1977). One who mistrusts is almost sure to be uncooperative (to protect against exploitation). Noncooperation, in turn, feeds

further mistrust (“What else could I do? It’s a dog-eat-dog world”). In

experiments, communication reduces mistrust, enabling people to reach

agreements that lead to their common betterment.

Changing the Payoffs

Laboratory cooperation rises when experimenters change the payoff

matrix to reward cooperation and punish exploitation (Komorita &

Barth, 1985; Pruitt & Rubin, 1986). Changing payoffs also helps resolve

actual dilemmas. In some cities, freeways clog and skies smog because

people prefer the convenience of driving themselves directly to work.

Each knows that one more car does not add noticeably to the congestion

and pollution. To alter the personal cost-benefit calculations, many cities

now give carpoolers incentives, such as designated freeway lanes or

reduced tolls.
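The logic of changing payoffs can be sketched with a generic two-person dilemma scored in points. The point values, the size of the cooperation bonus, and the size of the defection penalty are all hypothetical. The sketch only shows that reshaping the matrix can flip which choice is individually best.

```python
CHOICES = ("cooperate", "defect")

# Hypothetical points for one player, keyed by (my choice, the other's choice).
BASE_PAYOFFS = {
    ("cooperate", "cooperate"): 3,
    ("cooperate", "defect"): 0,
    ("defect", "cooperate"): 5,
    ("defect", "defect"): 1,
}

def reshape_payoffs(payoffs, cooperation_bonus, defection_penalty):
    """Add a bonus to every cooperative outcome and subtract a penalty from every defection."""
    return {
        (mine, theirs): points + (cooperation_bonus if mine == "cooperate" else -defection_penalty)
        for (mine, theirs), points in payoffs.items()
    }

def dominant_choice(payoffs):
    """Return the choice that pays strictly more whatever the other player does, or None."""
    for mine in CHOICES:
        rivals = [c for c in CHOICES if c != mine]
        if all(payoffs[(mine, theirs)] > payoffs[(alt, theirs)]
               for theirs in CHOICES for alt in rivals):
            return mine
    return None

print(dominant_choice(BASE_PAYOFFS))  # defect
reshaped = reshape_payoffs(BASE_PAYOFFS, cooperation_bonus=1, defection_penalty=2)
print(dominant_choice(reshaped))  # cooperate
```

Incentives such as carpool lanes or reduced tolls work in the same direction. They narrow or reverse the gap between what each person gains by defecting and what each gains by cooperating.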

Appeals to Altruistic Norms

When cooperation obviously serves the public good, one can usefully

appeal to the social-responsibility norm (Lynn & Oldenquist, 1986).

For example, if people believe public transportation saves time, they

will be more likely to use it if they also believe it reduces pollution

(Van Vugt & others, 1996). In the 1960s struggle for civil rights, many

marchers willingly agreed, for the sake of the larger group, to suffer

harassment, beatings, and jail. In wartime, people make great personal sacrifices for the good of their group. As Winston Churchill

said of the Battle of Britain, the actions of the Royal Air Force pilots

were genuinely altruistic: A great many people owed a great deal to

those who flew into battle knowing there was a high probability—70

percent for those on a standard tour of duty—that they would not

return (Levinson, 1950).

To summarize, we can minimize destructive entrapment in social

dilemmas by establishing rules that regulate self-serving behavior, by

keeping groups small, by enabling people to communicate, by changing

payoffs to make cooperation more rewarding, and by invoking compelling altruistic norms.

COMPETITION

In an earlier module, we noted that racial hostilities often

arise when groups compete for scarce jobs, housing, or resources. When

interests clash, conflict erupts.


But does competition by itself provoke hostile conflict? Real-life

situations are so complex that it is hard to be sure. If competition is

indeed responsible, then it should be possible to provoke it in an experiment. We could randomly divide people into two groups, have the

groups compete for a scarce resource, and note what happens. That is

precisely what Muzafer Sherif (1966) and his colleagues did in a dramatic

series of experiments with typical 11- and 12-year-old boys. The inspiration for those experiments dated back to Sherif’s witnessing, as a teenager, Greek troops invading his Turkish province in 1919.

They started killing people right and left. [That] made a great impression

on me. There and then I became interested in understanding why these

things were happening among human beings. . . . I wanted to learn whatever science or specialization was needed to understand this intergroup

savagery. (Quoted by Aron & Aron, 1989, p. 131.)

After studying the social roots of savagery, Sherif introduced the

seeming essentials into several three-week summer camping experiences. In one such study, he divided 22 unacquainted Oklahoma City

boys into two groups, took them to a Boy Scout camp in separate buses,

and settled them in bunkhouses about a half-mile apart at Oklahoma’s

Robbers Cave State Park. For most of the first week, each group was

unaware of the other’s existence. By cooperating in various activities—

preparing meals, camping out, fixing up a swimming hole, building a

rope bridge—each group soon became close-knit. They gave themselves

names: “Rattlers” and “Eagles.” Typifying the good feeling, a sign

appeared in one cabin: “Home Sweet Home.”

Group identity thus established, the stage was set for the conflict.

Near the first week’s end, the Rattlers discovered the Eagles “on ‘our’

baseball field.” When the camp staff then proposed a tournament of

competitive activities between the two groups (baseball games, tugs-of-war, cabin inspections, treasure hunts, and so forth), both groups

responded enthusiastically. This was win-lose competition. The spoils

(medals, knives) would all go to the tournament victor.

The result? The camp gradually degenerated into open warfare. It was

like a scene from William Golding’s novel Lord of the Flies, which depicts

the social disintegration of boys marooned on an island. In Sherif’s study,

the conflict began with each side calling the other names during the competitive activities. Soon it escalated to dining hall “garbage wars,” flag

burnings, cabin ransackings, even fistfights. Asked to describe the other

group, the boys said they were “sneaky,” “smart alecks,” “stinkers,” but described their own group as “brave,” “tough,” “friendly.”

The win-lose competition had produced intense conflict, negative

images of the outgroup, and strong ingroup cohesiveness and pride. Group

polarization no doubt exacerbated the conflict. In competition-fostering


situations, groups behave more competitively than do individuals (Wildschut & others, 2003, 2007). Men, especially, get caught up in intergroup

competition (Van Vugt & others, 2007).

All of this occurred without any cultural, physical, or economic

differences between the two groups and with boys who were their

communities’ “cream of the crop.” Sherif noted that, had we visited the

camp at that point, we would have concluded these “were wicked,

disturbed, and vicious bunches of youngsters” (1966, p. 85). Actually,

their evil behavior was triggered by an evil situation. Fortunately, as

we will see in Module 29, Sherif not only made strangers into enemies;

he then also made the enemies into friends.

PERCEIVED INJUSTICE

“That’s unfair!” “What a ripoff!” “We deserve better!” Such comments

typify conflicts bred by perceived injustice. But what is “justice”? According to some social-psychological theorists, people perceive justice as

equity—the distribution of rewards in proportion to individuals’ contributions (Walster & others, 1978). If you and I have a relationship

(employer-employee, teacher-student, husband-wife, colleague-colleague),

it is equitable if

$$\frac{\text{My outcomes}}{\text{My inputs}} = \frac{\text{Your outcomes}}{\text{Your inputs}}$$

If you contribute more and benefit less than I do, you will feel exploited

and irritated; I may feel exploitative and guilty. Chances are, though, that

you will be more sensitive to the inequity than I will (Greenberg, 1986;

Messick & Sentis, 1979).
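The equity formula compares two ratios of outcomes to inputs. A minimal Python sketch follows; it uses exact fractions to avoid rounding error. The function name and the pay-and-hours figures in the example are hypothetical.

```python
from fractions import Fraction

def underbenefited_party(my_outcomes, my_inputs, your_outcomes, your_inputs):
    """Compare outcome/input ratios; return who gets less per unit contributed, or 'neither' if equitable."""
    mine = Fraction(my_outcomes) / Fraction(my_inputs)
    yours = Fraction(your_outcomes) / Fraction(your_inputs)
    if mine == yours:
        return "neither"
    return "me" if mine < yours else "you"

# Hypothetical colleagues: equal pay, but you work twice the hours.
print(underbenefited_party(my_outcomes=100, my_inputs=20, your_outcomes=100, your_inputs=40))  # you
# Pay proportional to hours: the ratios match, so the relationship is equitable.
print(underbenefited_party(my_outcomes=100, my_inputs=20, your_outcomes=200, your_inputs=40))  # neither
```

The sketch takes the inputs as given. Deciding which inputs count, such as seniority or current productivity, is exactly where partners who accept the equity principle can still disagree.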

We may agree with the equity principle’s definition of justice yet

disagree on whether our relationship is equitable. If two people are colleagues, what will each consider a relevant input? The one who is older

may favor basing pay on seniority, the other on current productivity.

Given such a disagreement, whose definition is likely to prevail? More

often than not, those with social power convince themselves and others

that they deserve what they’re getting (Mikula, 1984). This has been

called a “golden” rule: Whoever has the gold makes the rules.

And how do those who are exploited react? Elaine Hatfield, William

Walster, and Ellen Berscheid (1978) detected three possibilities. They can

accept and justify their inferior position (“We’re poor but we’re happy”).

They can demand compensation, perhaps by harassing, embarrassing, even

cheating their exploiter. If all else fails, they may try to restore equity by

retaliating.


MISPERCEPTION

Recall that conflict is a perceived incompatibility of actions or goals.

Many conflicts contain but a small core of truly incompatible goals; the

bigger problem is the misperceptions of the other’s motives and goals.

The Eagles and the Rattlers did indeed have some genuinely incompatible aims. But their perceptions subjectively magnified their differences

(Figure 28-2).

In earlier modules we considered the seeds of such misperception.

The self-serving bias leads individuals and groups to accept credit for

their good deeds and shirk responsibility for bad deeds, without

according others the same benefit of the doubt. A tendency to self-justify inclines people to deny the wrong of their evil acts (“You call

that hitting? I hardly touched him!”). Thanks to the fundamental attribution error, each side sees the other’s hostility as reflecting an evil

disposition. One then filters the information and interprets it to fit

one’s preconceptions. Groups frequently polarize these self-serving, self-justifying, biasing tendencies. One symptom of groupthink is the tendency to perceive one’s own group as moral and strong, the opposition as evil and weak. Acts of terrorism that in most people’s eyes are

despicable brutality are seen by others as “holy war.” Indeed, the

mere fact of being in a group triggers an ingroup bias. And negative

stereotypes of the outgroup, once formed, are often resistant to contradictory evidence.

So it should not surprise us, though it should sober us, to discover

that people in conflict form distorted images of one another. Even the

types of misperception are intriguingly predictable.

FIGURE 28-2 Many conflicts contain a core of truly incompatible goals surrounded by a larger exterior of misperceptions. [Diagram: an inner core labeled “True incompatibility” inside a larger area labeled “Misperceptions.”]


Mirror-Image Perceptions

To a striking degree, the misperceptions of those in conflict are mutual.

People in conflict attribute similar virtues to themselves and vices to the

other. When the American psychologist Urie Bronfenbrenner (1961) visited

the Soviet Union in 1960 and conversed with many ordinary citizens in

Russian, he was astonished to hear them saying the same things about

America that Americans were saying about Russia. The Russians said that

the U.S. government was militarily aggressive; that it exploited and

deluded the American people; that in diplomacy it was not to be trusted.

“Slowly and painfully, it forced itself upon one that the Russians’ distorted

picture of us was curiously similar to our view of them—a mirror image.”

When two sides have clashing perceptions, at least one of the two is

misperceiving the other. And when such misperceptions exist, noted

Bronfenbrenner, “It is a psychological phenomenon without parallel in

the gravity of its consequences . . . for it is characteristic of such images that

they are self-confirming.” If A expects B to be hostile, A may treat B in such

a way that B fulfills A’s expectations, thus beginning a vicious circle

(Kennedy & Pronin, 2008). Morton Deutsch (1986) explained:

You hear the false rumor that a friend is saying nasty things about you;

you snub him; he then badmouths you, confirming your expectation.

Similarly, if the policymakers of East and West believe that war is likely

and either attempts to increase its military security vis-à-vis the other, the

other’s response will justify the initial move.

Negative mirror-image perceptions have been an obstacle to peace in

many places:

• Both sides of the Arab-Israeli conflict insisted that “we” are

motivated by our need to protect our security and our territory,

whereas “they” want to obliterate us and gobble up our land.

“We” are the indigenous people here, “they” are the invaders.

“We” are the victims; “they” are the aggressors (Bar-Tal, 2004;

Heradstveit, 1979; Kelman, 2007). Given such intense mistrust,

negotiation is difficult.

• At Northern Ireland’s University of Ulster, J. A. Hunter and his

colleagues (1991) showed Catholic and Protestant students videos of a Protestant attack at a Catholic funeral and a Catholic

attack at a Protestant funeral. Most students attributed the other

side’s attack to “bloodthirsty” motives but its own side’s attack

to retaliation or self-defense.

• Terrorism is in the eye of the beholder. In the Middle East, a

public opinion survey found 98 percent of Palestinians agreeing

that the killing of 29 Palestinians by an assault-rifle-bearing

Israeli at a mosque constituted terrorism, and 82 percent disagreeing that the killing of 21 Israeli youths by a Palestinian suicide bombing constituted terrorism (Kruglanski & Fishman, 2006).

Israelis likewise have responded to violence with intensified

perceptions of Palestinian evil intent (Bar-Tal, 2004).

Such conflicts, notes Philip Zimbardo (2004a), engage “a two-category

world—of good people, like US, and of bad people, like THEM.” “In

fact,” note Daniel Kahneman and Jonathan Renshon (2007), all the biases

uncovered in 40 years of psychological research are conducive to war.

They “incline national leaders to exaggerate the evil intentions of adversaries, to misjudge how adversaries perceive them, to be overly sanguine

when hostilities start, and overly reluctant to make necessary concessions in negotiations.”

Opposing sides in a conflict tend to exaggerate their differences. On

issues such as immigration and affirmative action, proponents aren’t as

liberal and opponents aren’t as conservative as their adversaries suppose

(Sherman & others, 2003). Opposing sides also tend to have a “bias blind

spot,” notes Cynthia McPherson Frantz (2006). They see their own

understandings as not influenced by their liking or disliking for others,

while seeing those who disagree with them as unfair and biased. Moreover, partisans tend to perceive a rival as especially disagreeing with

their own core values (Chambers & Melnyk, 2006).

John Chambers, Robert Baron, and Mary Inman (2006) confirmed

misperceptions on issues related to abortion and politics. Partisans perceived exaggerated differences from their adversaries, who actually

agreed with them more often than they supposed. From such exaggerated perceptions of the other’s position arise culture wars. Ralph White

(1996, 1998) reports that the Serbs started the war in Bosnia partly out

of an exaggerated fear of the relatively secularized Bosnian Muslims,

whose beliefs they wrongly associated with Middle Eastern Islamic fundamentalism and fanatical terrorism. Resolving conflict involves abandoning such exaggerated perceptions and coming to understand the

other’s mind. But that isn’t easy, notes Robert Wright (2003): “Putting

yourself in the shoes of people who do things you find abhorrent may

be the hardest moral exercise there is.”

Destructive mirror-image perceptions also operate in conflicts

between small groups and between individuals. As we saw in the

dilemma games, both parties may say, “We want to cooperate. But their

refusal to cooperate forces us to react defensively.” In a study of executives, Kenneth Thomas and Louis Pondy (1977) uncovered such attributions. Asked to describe a significant recent conflict, only 12 percent felt

the other party was cooperative; 74 percent perceived themselves as

cooperative. The typical executive explained that he or she had “suggested,” “informed,” and “recommended,” whereas the antagonist had

“demanded,” “disagreed with everything I said,” and “refused.”


Group conflicts are often fueled by an illusion that the enemy’s top

leaders are evil but their people, though controlled and manipulated, are

pro-us. This evil-leader–good people perception characterized Americans’

and Russians’ views of each other during the Cold War. The United

States entered the Vietnam War believing that in areas dominated by the

Communist Vietcong “terrorists,” many of the people were allies-in-waiting. As suppressed information later revealed, those beliefs were

mere wishful thinking. In 2003 the United States began the Iraq War

presuming the existence of “a vast underground network that would rise

in support of coalition forces to assist security and law enforcement”

(Phillips, 2003). Alas, the network didn’t materialize, and the resulting

postwar security vacuum enabled looting, sabotage, persistent attacks on

American forces, and increasing attacks from an insurgency determined

to drive Western interests from the country.

Shifting Perceptions

If misperceptions accompany conflict, then they should appear and disappear as conflicts wax and wane. And they do, with startling regularity.

The same processes that create the enemy’s image can reverse that image

when the enemy becomes an ally. Thus, the “bloodthirsty, cruel, treacherous, buck-toothed little Japs” of World War II soon became—in North

American minds (Gallup, 1972) and in the media—our “intelligent, hardworking, self-disciplined, resourceful allies.”

The Germans, who after two world wars were hated, then admired,

and then again hated, were once again admired—apparently no longer

plagued by what earlier was presumed to be cruelty in their national

character. So long as Iraq was attacking unpopular Iran, even while

using chemical weapons to massacre its own Kurds, many nations supported it. Our enemy’s enemy is our friend. When Iraq ended its war

with Iran and invaded oil-rich Kuwait, Iraq’s behavior suddenly became

“barbaric.” Images of our enemies change with amazing ease.

The extent of misperceptions during conflict provides a chilling reminder

that people need not be insane or abnormally malicious to form these distorted images of their antagonists. When we experience conflict with another

nation, another group, or simply a roommate or a parent, we readily misperceive our own motives and actions as good and the other’s as evil. And just

as readily, our antagonists form a mirror-image perception of us.

So, with the antagonists trapped in a social dilemma, competing for

scarce resources, or perceiving injustice, the conflict continues until

something enables both parties to peel away their misperceptions and

work at reconciling their actual differences. Good advice, then, is this:

When in conflict, do not assume that the other fails to share your values

and morality. Rather, compare perceptions, assuming that the other is

likely perceiving the situation differently.


CONCEPTS TO REMEMBER

conflict: A perceived incompatibility of actions or goals.

social trap: A situation in which the conflicting parties, by each rationally pursuing its self-interest, become caught in mutually destructive behavior. Examples include the Prisoner’s Dilemma and the Tragedy of the Commons.

non-zero-sum games: Games in which outcomes need not sum to zero. With cooperation, both can win; with competition, both can lose. (Also called mixed-motive situations.)

mirror-image perceptions: Reciprocal views of each other often held by parties in conflict; for example, each may view itself as moral and peace-loving and the other as evil and aggressive.