342) Performance Features v8.4 with i2 Servers


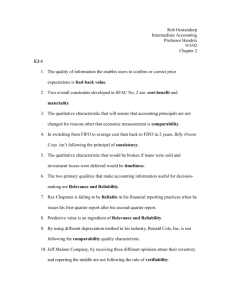

Performance of OpenVMS v8.4 with i2 servers
Rafiq Ahamed K, OpenVMS Engineering
9/19/2011
©2011 Hewlett-Packard Development Company, L.P. ©2010 The information contained herein is subject to change without notice

Agenda
• Introduction
  – What's new in i2 Servers?
  – New i2 server: a performance differentiator
• Performance Enhancements
  – Future Focus
• Performance Results
  – Platform
  – Application
  – Alpha to i2 server migration differences
• Summary
• Q&A

“The performance results shared in this session are from an engineering test environment; they do not represent any specific customer workload. Your mileage may vary.”

What is new in i2 servers, post i2 blades?
i2 server family on OpenVMS V8.4: introduced the rx2800 i2 rack-mounted server
• Integrity Server Blades: world’s first scale-up blades built on the industry’s #1 blade infrastructure
• New! Integrity 2-socket rack server: 8-core scalability with 3x improved density without sacrificing RAS

Introducing rx2800; a performance differentiator

rx2800 i2 Server Architecture
• CPU-CPU: 19.2 GB/s
• Memory: 28.8 GB/s peak per processor module
• QPI, IOH to processors: 38.4 GB/s

Intel 9300 Processor: a performance differentiator

Performance Features of i2 Servers
[Diagram labels: two Itanium® 9300 (Tukwila-MC) processors, QPI, Intel® 7500 IOH (Boxboro-MC), Intel® ICH10, memory buffers (MB) on SMI with DDR3 DIMMs, PCIe Gen2 and Gen1 devices. Callout: IO NUMA aware!]
• Memory: dual Integrated Memory Controllers (IMC) with 4 SMI channels, peak memory bandwidth up to 34 GB/s (6x)
• Intel® Scalable Memory Interconnect (Intel® SMI) connects to the Intel® 7500 Scalable Memory Buffer (SMB) to support larger physical memory with DDR3 RDIMMs
• DDR3: higher throughput (800 MT/s), lower power, faster response time, increased capacity per DIMM (16 GB)
• Directory-based cache coherency: reduces snoop traffic and contention
• 1 MB directory cache per IMC
• Capability to support up to 1 TB memory per IMC
• SMB supports different sizes and types of DIMM
• 6 Gen 2 PCIe slots, supporting 5 GT/s
• p410i SAS 6G RAID controller
• 8 10K RPM disks
• Enhanced Thread-Level Parallelism (TLP) [8T/P]
• Instruction-Level Parallelism (ILP): minimizes threads stalling the pipeline
• Intel® Turbo Boost Technology: performance on demand
• Intel® VT-i2 is introduced
• Data TLB support for 8K and 16K pages
• New Intel® QuickPath Interconnect Technology replaces the Front Side Bus with a point-to-point interconnect
• 4 full-width Intel QuickPath Interconnect links and 2 half-width links per processor
• Peak processor-to-processor and processor-to-I/O communications up to 96 GB/s (9x)
• Glueless system designs up to eight sockets, removing the FSB limitations

Key Characteristics (Source: Intel Corporation)

| Key characteristic | Intel® Itanium® processor 9100 | Intel® Itanium® processor 9300 |
|---|---|---|
| Cores | 2 | 4 |
| Total on-die cache | 27.5 MB | 30 MB |
| Software threads per core | 2 | 2 (with enhanced thread management) |
| System interconnect (bandwidth per processor for a 2-socket system) | Front Side Bus; peak bandwidth per processor: 5 GB/s | Intel® QuickPath Interconnect Technology; peak bandwidth: 48 GB/s (up to 9x improvement); enhanced RAS; enables common IOHs with next-generation Intel® Xeon® processors |
| Memory interconnect (bandwidth per processor for a 2-socket system) | Front Side Bus; peak bandwidth per processor: 5 GB/s | Dual Integrated Memory Controllers; peak bandwidth: 34 GB/s (up to 6x improvement) |
| Memory capacity (4-socket system) | 128-384 GB | 1 TB (using 16 GB RDIMMs), up to 8x improvement |
| Partitioning and virtualization | Intel® VT-i | Intel® VT-i2 |
| Energy efficiency | Demand Based Switching (DBS) | Enhanced DBS (voltage modulation in addition to frequency); Intel® Turbo Boost Technology; advanced CPU and memory thermal management |
| SMP scalability | 64-bit virtual addressability; 50-bit physical addressability; home snoop coherency | 64-bit virtual addressability; 50-bit physical addressability; directory coherency for better performance in large SMP configurations; up to 8-socket glueless systems (higher scalability with OEM chipsets) |

OpenVMS V8.4 Performance Enhancements (includes post V8.4)

V8.4 Major Performance Features
• Resource Affinity Domain (RAD) support for IA64
• Packet Processing Engine (PPE) support for TCP/IP
• Automatic Dynamic Processor Resilience (DPR)
• Compression support for BACKUP
• RMS SET MBC count support for 255 blocks
• XFC support for 4G Global Hint Pages
• Shadowing T4 collectors
• File System Consistency Check control for reducing directory corruption
• Asynchronous Virtual IO (AVIO) support for guest OpenVMS running on HPVM

V8.4 Performance Enhancements
• Core OS improvements
  – Dedicated Lock Manager and Cluster Driver optimizations
  – Exception handling optimizations
  – Reduced spin lock contention (SCHED, MMG, etc.)
  – Optimizations in global section deletion and creation algorithms
  – Introduced Paged Pool Look Aside List (LAL)
  – SYSMAN IO AUTO performance improvements (Fibre only)
  – RTL changes to optimize strcmp() and memcmp()
  – Support for new high speed USB connectivity and enhancements
  – rx2800 console performance fixed!

V8.4 Performance Enhancements (continued)
• Shadow feature improvements to WriteBitMap, MiniCopy, MiniMerge and SPLIT_READ_LBN
• Compiler improvements (BASIC RTL, COBOL, OTS$STRCMP)
• Miscellaneous improvements
  – FLT Tracing has 2 new options for the SUMMARY report (using INDEX/PID)
  – LIBRARIAN, MONITOR, DCL DELETE

OpenVMS 8.4 Performance Results

OpenVMS V8.4 Summary of Improvements
Significant performance gains across various workloads and system configurations

| Workload | % improvement |
|---|---|
| Reduced BACKUP compressed data | 272 |
| Faster shadow READ | 300 |
| Faster bitmap updating in SHADOWING | 400 |
| High speed USB boot | 25 |
| High usage of mem(str)cmp() | 350 |
| Larger AST queuing | 50 |
| Stressful DLM resources | 200 |
| Heavy exception handling | 50 |
| Heavy image activation | 45 |

Future Focus
• Continuous work to optimize performance on new servers with new processors
• Ongoing effort to reduce alignment faults
• Maximize utilization using hyper-threading
• Additional T4 collectors
• Spin lock optimizations
• Many more…

Performance of i2 Servers; Platform and Application Tests

Price/Performance Migration Path
[Chart: price/performance migration paths from previous-generation servers (rx7640, rx6600, rx3600, rx2660, BL870c, BL860c) to the new Integrity servers (BL890c i2, BL870c i2, BL860c i2, rx2800), grouped by socket and core count. Blue is price, light blue is performance.]
New Integrity blades are more scalable than the previous generation
• Double the number of cores
• More memory
• More I/Os

OpenVMS running on i2 servers: Performance Highlights
i2 servers are architected for high performance
  – Architecture provides more and faster cores per socket
  – Superior memory and interconnect technology
  – Memory intensive applications benefit from the low latency and high bandwidth architecture
  – Higher IO bandwidth and throughput resulting from the new IO architecture
  – More headroom for CPU, memory and IO intensive workloads, with improved response time
Up to 3x performance improvement with i2 servers running OpenVMS
  – Our tests have shown up to 2x improvement with Java, some database and web server applications
  – Oracle has shown 3x improvement

CPU Ratings: Integer Tests Per Processor
1.73 GHz / 1.6 GHz 9300 series processors show 2-2.3x performance improvement
[Chart: integer ratings (more is better) for 9300 - BL8x0c-i2 (1.73GHz/6.0MB), 9300 - BL8x0c-i2 (1.60GHz/6.0MB), 9300 - BL8x0c-i2 (1.33GHz/4.0MB), 9000 - BL860c (1.59GHz/9.0MB) and 9100 - rx7640 (1.60GHz/12.0MB)]
  – These numbers are per processor/socket
  – As the frequency increases, we see an increase in rating
Applications with large integer computations, financial analysis and high data processing should benefit

CPU Ratings: Floating Point Computation Tests Per Processor
1.73 GHz / 1.6 GHz 9300 series processors show 2.1-2.3x performance improvement
[Chart: FP ratings (more is better) for 9300 - BL8x0c-i2 (1.73GHz/6.0MB), 9300 - BL8x0c-i2 (1.60GHz/6.0MB), 9300 - BL8x0c-i2 (1.33GHz/4.0MB), 9000 - BL860c (1.59GHz/9.0MB) and 9100 - rx7640 (1.60GHz/12.0MB)]
  – Intel® Itanium® 9300-series processors have a new high precision floating point architecture
Fast response to complex operations; scientific, automation and robotic applications should benefit

Memory Tests
55% improvement in memory bandwidth between rx3600 and BL860c i2
[Chart: Memory Bandwidth, single stream (MB/sec, more is better), for BL860c-i2 (1.73GHz/6.0MB), rx3600 (1.67GHz/9.0MB), rx6600 (1.59GHz/12.0MB), rx7640 (1.60GHz/12.0MB) and SD32A (1.60GHz/9.0MB)]
  – Computed via Memory Test Program, single stream
  – The new BL8x0c i2 server demonstrated very good memory bandwidth
  – Memory bound applications should see a difference with aggregated bandwidth

Core I/O on rx2800
[Charts: "rx2800 i2 - Core SAS Caching" (IOPS vs. load 1-256, with cache and without cache) and "rx2800 i2 - SAS Logical Disk (Striping)" (IOPS vs. load 1-256, for 1, 2 and 4 disks with cache)]
• The rx2800 i2 server comes with the p410i SAS controller as core SAS I/O
  – Small block random tests were run on same-sized disks
  – Logical volumes were spread across multiple disks to show the I/O striping effect
• p410i with cache* significantly boosts performance (up to 2.5x)
• Increasing the number of disks in a RAID group increases performance linearly
* Additional Cache Kit

Rack mounted: Core I/O i2 comparison
[Chart: Integrity Core SAS (IOPS vs. load 1-256) for rx6600 (1.59GHz/12.0MB), rx2800 i2 (1.46GHz/5.0MB) with core cache, and rx2800 i2 (1.46GHz/5.0MB) without cache]
• Core IO SAS controller (rx6600: LSISAS106x, rx2800: p410i)
• Older IA64 SAS performance is similar to the i2 server without cache
• The rx2800 i2 server with cache is a clear winner (up to 2.5x better)

Apache Performance: 2x Improvement
Apache Bench Tests on OpenVMS 8.4

| Metric | BL860c i2 (1.73GHz/6.0MB) | BL860c (1.59GHz/9.0MB) |
|---|---|---|
| Bandwidth: transfer rate (Kbytes/sec, more is better) | 531 | 276.21 |
| Throughput: requests per second (more is better) | 1162 | 597 |
| Time taken (sec, less is better) | 25.818 | 50.277 |

  – Configuration details
    • The tests were run on OpenVMS 8.4, with 1G network end-to-end connectivity
    • Apache 2.1-1 with ECO2, Apache Bench 2.0.40-dev
  – Time taken should be less; req/sec and KB/sec should be more
• BL860c-i2 delivered 2x the performance of BL860c

Java Workload Tests: 2x Improvement
Native Java Tests on OpenVMS 8.4 (more is better)
[Charts: Java workload operation rate vs. threads for BL870c i2 (1.60GHz/5.0MB) and rx6600 (1.59GHz/12.0MB)]
  – Java workloads scale up better on i2 servers
  – Java workloads are highly CPU and memory intensive

Rdb Tests: 2x Improvement
Rdb Performance Load Tests, OpenVMS 8.4
[Charts: Rdb performance in TPS (more is better) and sec/txn (less is better) for HP Integrity rx2800 i2 (1.60GHz/5.0MB) and HP rx3600 (1.59GHz/9.0MB)]
  – Test runs a load with 100 jobs of 100000 txns/job, DB on local disks (single)
  – The rx2800 i2 server shows 2x better throughput than rx3600
    • The Transactions Per Second (TPS) rate is 2x
    • Rdb is a highly memory and I/O oriented application, which seems to take advantage of the p410i 6G SAS controller and the high speed memory architecture
  – Results have shown that Rdb takes advantage of HT in high end i2 servers

Oracle 10gR2 on new i2 Server: 3x Improvement
[Chart: TPM vs. users (16, 32, 48) for rx7640 (1.60GHz/12.0MB) and BL890c i2 (1.60GHz/6.0MB)]
  – Oracle Swing Bench tests were run with the same tuning configuration
    • rx7640 and BL890c i2 are NUMA based systems; MostlyNUMA, RAD enabled and Hyper-Threading disabled; 6 RAID 5 EVA8100 volumes
  – Oracle was run in Shared Server Mode
  – The BL890c i2 server consistently shows 3x improvement for the same number of users

Oracle Tests: Resource Usage
[Chart: CPU and TPM for BL890c i2 (1.60GHz/6.0MB) and rx7640 (1.60GHz/12.0MB), annotated "3x Increase"]
  – BL890c i2 is able to drive 3x improvement for the same CPU usage

ALPHA TO IA64: Speeds and Feeds

Mission-critical scaling: Speeds and Feeds comparison

| | GS1280 M32 | BL890c i2 |
|---|---|---|
| Processor type | 1150 MHz EV7 | 8 Intel® Itanium® processor 9300 series (quad-core and dual-core) |
| Max memory | 256 GB | 1.5 TB |
| Internal storage | Ultra3 SCSI | 6G SAS HW RAID |
| Networking (integrated) | 1 GbE NICs | 10 GbE (Flex-10) NICs |
| IO slots | PCI/PCI-X slots @133 MHz | PCIe Gen2 Mezz slots |
| Form factor | 2x 41U | 4U |

BL890c i2 scales to support mission-critical workloads

Compilation Tests
[Chart: Compilation Tests, charged CPU (less is better): 557110 for Alpha GS1280 EV7 (1150 MHz), 242455 for BL890c 8S Itanium 9300 (1.6GHz)]
• This test compiles around 200 modules using the C++ compiler
• The overhead of running this on BL890c i2 was reduced ~2.3 times compared to GS1280

Java Workload Tests
[Chart: Java Tests, operation rate vs. threads (1-61) for BL890c i2 and GS1280]
  – Java workloads scale much better with load than on Alpha
  – There is no active Java development on Alpha
  – The last JDK available on Alpha is 1.5, as compared to JDK 1.6
  – Java workloads are highly CPU and memory intensive

EV7 vs. Itanium 9300 (Tukwila) Per Core: Floating Point
[Chart: Floating Point Ratings (higher is better): 1882 for BL890c 8S Itanium 9300 (1.6GHz), 699 for Alpha GS1280 EV7 (1150 MHz)]
  – Tukwila processors are ~2.7 times faster than EV7
  – These numbers are per core (within a processor/socket)
  – Tukwila processors have a new high precision floating point architecture
  – Fast response to complex operations; scientific, automation and robotic applications should benefit

EV7 vs. Intel Itanium 9300 (Tukwila) Per Core: Integer
[Chart: Integer Ratings (higher is better): 851.46 for BL890c 8S Itanium 9300 (1.6GHz), 563.38 for Alpha GS1280 EV7 (1150 MHz)]
  – Tukwila processors rate ~51% higher than EV7 (equivalently, EV7 rates ~34% lower)
  – These numbers are per core (within a processor/socket)
  – CPU bound applications should benefit (database queries), specifically integer computation bound applications

Memory Bandwidth Tests
[Chart: Memory Bandwidth in MB/sec (higher is better): 3200 for BL890c 8S Itanium 9300 (1.6GHz), 2100 for Alpha GS1280 EV7 (1150 MHz)]
• This test simulates heavy load directly on the memory modules by bypassing the cache
• The bandwidth on a “single stream” test for BL890c i2 is 52% better

IO Tests: Single Process Tests
IO tests on EVA8100 with 2/4Gb cards, RAID 5 320GB volumes, random workloads
[Charts: "Small Block Random" (IOPS vs. threads; bar values 647.9, 325, 287.2 and 112.7) and "Mixed Load" (operation rate vs. load 1-256) for AlphaServer ES45 Model 2B and BL860c i2]
  – 4K IO tests were run with mixed QIO/FastIO loads; RANDOM workloads are very CPU intensive
  – We see a ~3x increase in throughput for the same load on the i2 server
  – On Alpha platforms, the fastest supported device is a 2G FC HBA, and the bus speeds and feeds strongly influenced performance

Rdb Tests
[Chart: Rdb performance in TPS (more is better): 214 for BL890c 8S Itanium 9300 (1.6GHz), 145 for Alpha GS1280 EV7 (1150 MHz)]
  – Test runs a load with 100 jobs of 100000 txns/job, DB on local disks (single)
  – BL890c 8S performs approx. 1.5x better than GS1280 (Alpha)
    • The Transactions Per Second (TPS) rate is 1.5x

Performance Enhanced with the Integrity Server Blades Based on Blade Scale Architecture
Up to 2x¹ faster performance: Integrity server blades based on Blade Scale Architecture, compared with dual-core 2- and 4-socket Integrity servers, with built-in resiliency and less power consumption
• Integer & floating point tests: per socket performance increases 2.3x
• Application performance: up to 2x
¹ Some applications have shown up to 3x

Summary
• i2 servers replace the Front Side Bus (FSB) with the QuickPath Interconnect (QPI) fabric
• Even the low end i2 server comes with scalable features and supports NUMA
• The performance improvements delivered with i2 servers are a mix of
  – Hardware-level improvements
  – Operating system innovations
• Customers will see up to 2x performance benefits with i2 servers
• The i2 servers support state of the art RAS capabilities
• The TCO paper outlines the cost, power and density benefits
• The speeds and feeds of i2 servers are far higher than those of Alpha!
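The improvement factors quoted on the Alpha comparison slides can be rechecked from the chart values. The following is a minimal sketch, not part of the original deck: the `RESULTS` table and the `speedup` helper are hypothetical names, and the assignment of each value to the BL890c i2 or the GS1280 is an assumption inferred from the claim made on each slide.

```python
# Chart values from the Alpha-to-i2 comparison slides.
# Assumption: in each tuple the first value is the BL890c i2 result and the
# second is the Alpha GS1280 result, inferred from the claims on the slides.
RESULTS = {
    "Compilation, charged CPU": (242455, 557110, "lower"),
    "Floating point rating": (1882, 699, "higher"),
    "Integer rating": (851.46, 563.38, "higher"),
    "Memory bandwidth (MB/sec)": (3200, 2100, "higher"),
    "Rdb throughput (TPS)": (214, 145, "higher"),
}


def speedup(i2, alpha, better):
    """Return how many times better the i2 result is than the Alpha result.

    `better` says whether a higher or a lower raw value is the better one.
    """
    if better == "higher":
        return i2 / alpha
    return alpha / i2


if __name__ == "__main__":
    for name, (i2, alpha, better) in RESULTS.items():
        print(f"{name}: {speedup(i2, alpha, better):.2f}x")
```

Expressing every result as a single "times better" factor puts the less-is-better compilation test on the same scale as the more-is-better ratings, which makes the slides' rounded claims easier to compare.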
References
• Please mail your OpenVMS performance feedback or concerns to t4@hp.com
• Please refer to the 2 white papers below on OpenVMS performance and scalability
  – Performance Benefits on BL8x0c i2 Server Blades
  – Total Cost of Upgrade
• i2 Server Technical Reads
  – Take a tour around the new HP Integrity rx2800 server (Video, 2:44)
  – New HP Integrity rx2800 technical white paper
  – White paper: Why scalable blades: HP Integrity server blades
• Didn’t find what you are looking for?
  – Enterprise servers, workstations, and systems hardware technical web site

Questions/Comments
• Business Manager (Rohini Madhavan): rohini.madhavan@hp.com
• Office of Customer Programs: OpenVMS.Programs@hp.com

THANK YOU

Boxboro & ICH10
• Boxboro I/O Hub (E7500 Chipset)
  – Connects to local CPUs via QPI links
  – Provides 36 PCIe Gen 2 lanes
  – Order of magnitude peak IO bandwidth increase over previous generation
  – Hosts major IO functions in BL870c i2
    • p410i RAID/SAS controller
    • Two dual-port 10GbE Flex-10 NICs
    • Three x8-provisioned mezzanine slots
    • Gromit XE (iLO3) mgmt controller
• ICH10 I/O Controller Hub (SouthBridge)
  – Uses an x4 PCIe Gen 1 link for partner blade support
  – Supports VGA controller, USB controller
[Diagram: BIOH peak bidirectional link bandwidths (values shown: 1.2, 2.5, 5 and 10 GB/s) to Gromit XE, RAID/SAS controller, two dual 10 GbE NICs, mezzanine slots 1-3, and ICH10 on ICH card]

Intel® Itanium® 9300 Changes: High Performance
Enhanced Thread-Level Parallelism (TLP)
• 4 threads/processor; improves performance and scalability for heavily threaded software
• Improved thread management providing high core utilization; thread switch for medium latency events and spin locks
• Dedicated cache instead of shared cache model; quick response, no contention on cache
Enhanced Instruction-Level Parallelism (ILP)
• Simultaneously processes multiple instructions on each software thread; increases throughput and gives faster response
• Wide and short pipelining with many optimizations. Supports many zero cycle loads and re-steering, with extensive bypassing to avoid stalling the threads.
• 1MB first-level Data Translation Lookaside Buffer (DTLB) per core supports larger pages (8 K and 16 K)
Intel® Turbo Boost Technology: performance on demand
• Automatically allows processor cores to run faster than the base operating frequency
• When the workload demands additional performance, the processor frequency will dynamically increase by 133 MHz at short and regular intervals (6 microseconds); 120 events monitored
Intel® VT-i2 introduced: provides additional performance optimizations
• HW assistance helps reduce emulation code and OS-VMM transitions

Changes/Improvements: Scalability and Headroom
Enhanced communication channels (memory, IO): highly scalable
• New Intel® QuickPath Interconnect Technology replaces the Front Side Bus with a point-to-point interconnect
• 4 full-width Intel QuickPath Interconnect links and 2 half-width links
• Peak processor-to-processor and processor-to-I/O communications up to 96 GB/s (9x)
• Supports PCI Express Gen2 (5.0 GT/s) as well as PCI Express Gen1 (2.5 GT/s)
Two Integrated Memory Controllers and an Intel® Scalable Memory Interconnect
• 2 integrated memory controllers, peak memory bandwidth up to 34 GB/s (6x)
• Capability to support up to 1TB memory per IMC
• Intel® Scalable Memory Interconnect (Intel® SMI) connects to the Intel® 7500 Scalable Memory Buffer to support larger physical memory with DDR3 RDIMMs (faster)
Directory-based cache coherency: reduces snoop traffic and contention
• Cache coherency ensures that data in memory and cache remain synchronized, so the most current data is used in every transaction
• In combination with QPI, provides excellent scaling
Glueless system designs up to eight sockets: the FSB limitation of max 4 is eliminated.
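The 96 GB/s peak figure above is consistent with the 19.2 GB/s CPU-CPU number from the rx2800 i2 architecture slide. The sketch below shows the arithmetic under two assumptions that the deck does not state: that 19.2 GB/s is the peak of one full-width QPI link, and that a half-width link carries half of that. The function names are hypothetical.

```python
# Assumption: 19.2 GB/s (the CPU-CPU figure on the architecture slide) is the
# peak bandwidth of one full-width QPI link.
FULL_WIDTH_LINK_GBS = 19.2


def peak_qpi_bandwidth(full_links, half_links, full_link_gbs=FULL_WIDTH_LINK_GBS):
    """Sum the peak bandwidth of all QPI links on one processor, in GB/s.

    Assumption: a half-width link carries half the bandwidth of a full-width link.
    """
    return full_links * full_link_gbs + half_links * full_link_gbs / 2


def improvement(new_gbs, old_gbs):
    """Return the ratio of a new peak bandwidth to an old one."""
    return new_gbs / old_gbs


if __name__ == "__main__":
    # 4 full-width and 2 half-width links per processor, as listed above.
    print(f"Peak QPI bandwidth: {peak_qpi_bandwidth(4, 2):.1f} GB/s")
    # Per-processor figures from the Key Characteristics table, compared with
    # the 5 GB/s Front Side Bus of the Itanium 9100.
    print(f"System interconnect: {improvement(48, 5):.1f}x")
    print(f"Memory interconnect: {improvement(34, 5):.1f}x")
```

The ratios against the 5 GB/s Front Side Bus come out at 9.6 and 6.8, which the slides round down to "up to 9x" and "up to 6x".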
Memory tests: Memory Latency on NUMA Systems
[Chart: Memory Latency, single stream (nsec, less is better), for local, remote and interleaved memory on rx7640 (1.60GHz/12.0MB) and BL860c-i2 (1.73GHz/6.0MB)]
• Computed via Memory Test Program, single stream
• Local latency is 10% better, remote 28% better and interleaved 20% better