Performance analysis of 3-dimensional fingerprint scan system


ABSTRACT OF THESIS

PERFORMANCE ANALYSIS OF 3-DIMENSIONAL FINGERPRINT SCAN SYSTEM

Fingerprint recognition has been extensively applied in both forensic law enforcement and security-related personal identification. Traditional fingerprint acquisition is generally done in 2-D. A typical automatic fingerprint identification system (AFIS) consists of four modules: image acquisition, preprocessing, feature extraction, and feature matching.

In this thesis, we present a technology for non-contact 3-D fingerprint capture and processing that serves as the image acquisition and preprocessing modules, for higher-performance fingerprint data acquisition and verification. We also present a new technique for unraveling the 3-D data into a 2-D fingerprint. We use NIST fingerprint software as the feature extraction and feature matching modules. Our scan system relies on a novel real-time and low-cost 3-D sensor using structured light illumination (SLI), which generates both texture and detailed ridge depth information. The high-resolution 3-D scans are then converted into 2-D unraveled equivalent images using our proposed best fit sphere unravel algorithm.

As a result, the unobtrusiveness of the system and the extra depth information relax many limitations of conventional fingerprint capture and processing. Current techniques introduce distortion. Apart from small distortions that may be caused by the camera and projector, our whole process avoids it, and the resolution of the unraveled fingerprint is controlled to 500 dots per inch.

The image quality is evaluated and analyzed using NIST fingerprint image software. The converted 2-D unraveled equivalent fingerprints are compared with their 2-D ink rolled counterparts. NIST matching software is then applied to the 2-D unraveled fingerprints. The results show a strong relationship between matching performance and fingerprint quality. Finally, some incremental future work is proposed to further improve our new 3-D fingerprint scan system.

Index Terms: 3-D fingerprints, fingerprint acquisition, best fit sphere algorithm, rolled-equivalent image, fingerprint verification, fingerprint quality, matching performance.

PERFORMANCE ANALYSIS OF 3-DIMENSIONAL

FINGERPRINT SCAN SYSTEM

By Yongchang Wang

Director of Thesis

Director of Graduate Studies

Date

RULES FOR THE USE OF THESES

Unpublished theses submitted for the Master’s degree and deposited in the University of Kentucky Library are as a rule open for inspection, but are to be used only with due regard to the rights of the authors. Bibliographical references may be noted, but quotations or summaries of parts may be published only with the permission of the author, with the usual scholarly acknowledgments. Extensive copying or publication of the thesis in whole or in part also requires the consent of the Dean of the Graduate School of the University of Kentucky. A library that borrows this thesis for use by its patrons is expected to secure the signature of each user.

Name Date

THESIS

Yongchang Wang

The Graduate School

University of Kentucky

2008

PERFORMANCE ANALYSIS OF 3-DIMENSIONAL

FINGERPRINT SCAN SYSTEM

THESIS

A thesis submitted in partial fulfillment of the requirements for the degree of Master of Science in the College of Engineering at the University of Kentucky

By

Yongchang Wang

Lexington, Kentucky

Director: Dr. Daniel L. Lau, Associate Professor of Electrical Engineering

Lexington, Kentucky

2008

MASTER’S THESIS RELEASE

I authorize the University of Kentucky Libraries to reproduce this thesis in whole or in part for purposes of research.

Signed:________________

Date:__________________

Dedicated to Cui Li, my loving wife

ACKNOWLEDGEMENTS

I would like to thank everyone who helped me with this thesis.

First, my sincere thanks go to my advisor, Dr. Daniel Lau, for giving me the opportunity to work on this project and for leading me into this area. Dr. Lau taught me a great deal, from the overall idea to the technical details. His pursuit of perfection and attention to small details have supplemented my own quest for knowledge. I would also like to thank Dr. L. G. Hassebrook for his constant encouragement and support throughout this work. Whenever I ran into problems, Dr. Lau and Dr. Hassebrook helped me and taught me how to solve them.

I am also grateful to Veer Ganesh Yalla for providing the 3-D fingerprint scans whenever they were required. I thank Abhishika Fatehpuria, who gave me a great deal of help, especially when I first came into this area. I also thank Kai Liu, who led me into programming. Discussions with Abhishika, Kai, and Ganesh about the work were always interesting and intellectually stimulating.

This work would not have been possible without the support and love of my parents and my wife. They always encouraged and motivated me to go ahead. I also thank all my friends for putting a smile on my face during tough times and for supporting me.

TABLE OF CONTENTS

ACKNOWLEDGEMENTS
LIST OF TABLES
LIST OF FIGURES
Chapter 1 Introduction
1.1 Fingerprint Acquisition
1.2 Classification of Fingerprint
1.3 Fingerprint Matching
1.4 Previous Work
Chapter 2 Post Processing of 3D Fingerprint
2.1 Fit a Sphere to the 3D Surface
2.1.1 Calculate the Sphere
2.1.2 Change the North Pole of the Sphere
2.2 Unravel the 3D Fingerprint
2.2.1 Create Grid Data
2.2.2 Unravel the 3D Surface
2.2.3 Apply filters to Unraveled 2D Fingerprint
2.2.4 Down Sample to Standard
2.2.5 Further Distortion Correction
2.3 Apply NIST Software to the Data
2.4 Fingerprints Quality Analysis
2.4.1 2D Ink Rolled Fingerprint Scanning
2.4.2 2D Ink Fingerprint Experimental Results and Analysis
2.4.3 3D Unraveled Fingerprint Experimental Results and Analysis
2.4.4 Compare between 2D Inked and 3D Unraveled Fingerprint
Chapter 3 Fingerprint Image Software
3.1 NIST Minutiae Detection (MINDTCT) System
3.2 NIST Fingerprint Pattern Classification (PCASYS) System
3.3 NIST Fingerprint Image Quality (NFIQ) System
3.4 NIST Fingerprint Matcher (BOZORTH3) System
3.4.1 Construct Intra-Fingerprint Minutia Comparison Tables
3.4.2 Construct an Inter-Fingerprint Compatibility Table
3.4.3 Traverse the Inter-Fingerprint Compatibility Table
Chapter 4 Experiments and Results
4.1 Matching Result of 3D Unraveled Fingerprints
4.2 Relationship between Fingerprint Quality and Matching Score
Chapter 5 Conclusions and Future Works
5.1 Conclusions
5.2 Future Works
Appendix A 3D Unravelled fingerprint images from subject 0 to subject 14
Bibliography
Vita

List of Tables

1.1 Basic and composite ridge characteristics (minutiae)
2.1 Results of running PCASYS, MINDTCT and NFIQ on the 2D images of Subjects 0 through 14
2.2 Results of running PCASYS, MINDTCT and NFIQ on the 3D unraveled fingerprint images of Subjects 0 through 14
3.1 Feature Vector Description [NIST]

List of Figures

1.1 Various types of fingerprint impressions: (a) rolled inked fingerprint (from NIST 4 database); (b) latent fingerprint; (c) fingerprint obtained using an optical sensor; (d) fingerprint obtained using a solid state sensor
1.2 Fingerprint sensors
1.3 Classification of fingerprints
1.4 A sample fingerprint image showing different ridge patterns and minutiae types
1.5 Matching process between two fingerprints
1.6 Experimental setup used for 3D fingerprint scanner
2.1 Three different views of original input 3D fingerprint
2.2 3D views of fingerprint data and sphere. For a clear view, the data is greatly downsampled, from 1392×1040 to 65×50. Blue points '.' represent points from the fingerprint data. Red points '*' represent points from the 'best fit' sphere. From left to right are four different views of the 3D data
2.3 Comparison before and after moving the north pole. The black point clouds are points downsampled from the surface of the 3D fingerprint, and the light line is the axis. Fig. (a) shows that before the north pole is moved, the unravel center is not on the fingerprint's surface. Fig. (b) shows that after the rotation and translation, the unravel center is moved to the fingerprint's center
2.4 Value distributions of theta and phi. Figure (a) is theta, and figure (b) is phi. Theta and phi, whose values are equally distributed, together form a mesh, which is projected onto the 3D fingerprint. The density of this created mesh is 3 times higher than that of the 3D fingerprint data
2.5 Grid data created on the 3D fingerprint. Each point in the figure is a mesh point. For a clear view, the data is greatly downsampled from 1392 by 1040 to 130 by 100. From left to right are four different views of the 3D data
2.6 Unraveled 2D fingerprint. The density of the unraveled 2D fingerprint is 3 times higher than the original density of the 3D fingerprint
2.7 2D fingerprint after high pass filter. The Gaussian low pass filter is a 20×20 size filter with hsize equal to 3×3 and sigma equal to 40. Fig. (a) is the filtered-out data, and fig. (b) is the data after subtracting the low frequency component from the unraveled 2D fingerprint
2.8 Fingerprint after the post filter process. To resemble an inked fingerprint, the color is inverted. Image size is [870, 1180], with three times higher point density than the original 3D fingerprint data
2.9 Plot of distance along theta direction
2.10 Plot of distance along theta direction after FFT
2.11 Distance along theta direction after scaling to 500 dpi
2.12 (a) Distance plot along x = 300; (b) Distance plot along y = 300
2.13 (a) Distance plot along x = 450; (b) Distance plot along y = 150
2.14 (a) New theta map; (b) New phi map
2.15 (a) New theta map with size [600, 600]; (b) New phi map with size [600, 600]
2.16 (a) Distance plot along x = 300; (b) Distance plot along y = 300
2.17 (a) Distance plot along x = 450; (b) Distance plot along y = 150
2.18 Variance comparison before and after distortion correction. (a) Variances along theta and phi direction before correction. (b) Variances along theta and phi direction after correction
2.19 2D unraveled fingerprint from scanned 3D data
2.20 Binarized fingerprint of the 2D unraveled fingerprint from 3D
2.21 Quality image of the 2D unraveled fingerprint. White represents 4, the highest quality, and darker colors represent poorer quality. 0 is the lowest quality, meaning there is no meaningful data. The average quality of this sample is 3.1090
2.22 Schematic flow chart of the Best Fit Sphere algorithm
2.23 An example fingerprint card
2.24 Variation of the number of foreground blocks in quality zones 1-4 with respect to the overall quality number for 2D rolled inked fingerprints. The number of blocks in quality zone 4 decreases, while that in quality zone 2 increases, as overall quality decreases from best to unusable
2.25 Variation of the number of minutiae with quality greater than 0.5, 0.6, 0.75, 0.8, 0.9, 0.95, 0.97 with respect to the overall quality number for 2D rolled inked fingerprints. The number of minutiae with quality greater than 0.5, 0.6, 0.75 decreases as overall quality decreases from best to unusable
2.26 Scatter plot between the number of blocks in quality zone 4 and the number of minutiae with quality greater than 0.75 for 2D rolled inked fingerprints. The plot shows a strong correlation between the two parameters
2.27 Plot of classification confidence number generated by the PCASYS system with respect to the overall quality number for 2D rolled inked fingerprints
2.28 Variation of the number of foreground blocks in quality zones 1-4 with respect to the overall quality number for 2D unraveled fingerprints obtained from 3D scans. The number of blocks in quality zone 4 decreases, while that in quality zone 2 increases, as overall quality decreases from best to unusable. The distribution is much the same as for 2D inked fingerprints
2.29 Variation of the number of minutiae with quality greater than 0.5, 0.6, 0.75, 0.8, 0.9, 0.95 and 0.97 with respect to the overall quality number for 2D unraveled fingerprints obtained from 3D scans. The number of minutiae decreases over the first four quality numbers and increases at the last quality number, which is similar to 2D inked fingerprints
2.30 Scatter plot between the number of blocks in quality zone 4 and the number of minutiae with quality greater than 0.75 for 2D unraveled fingerprints obtained from 3D scans. The plot shows a strong correlation between the two parameters, much the same as for 2D inked fingerprints
2.31 Plot of classification confidence number generated by the PCASYS system with respect to the overall quality number for 2D unraveled fingerprints obtained from 3D scans, which is much the same as for 2D inked fingerprints
2.32 Number of blocks in quality zone 4 with respect to the overall quality number, showing that the 2D unraveled fingerprints have a higher percentage of quality zone 4 than the 2D inked fingerprints
2.33 Number of minutiae with quality greater than 0.75, with respect to the overall quality number and classification confidence number, showing that 2D unraveled fingerprints have more minutiae with quality greater than 0.75
2.34 Overall quality number for 2-D rolled inked fingerprints and 2-D unraveled fingerprints obtained from 3-D scans
2.35 Distributions of minutiae with quality greater than 0.8, for 2-D rolled inked fingerprints and 2-D unraveled fingerprints obtained from 3-D scans, showing that the 2D unraveled fingerprints give a better result
3.1 Flow chart of minutiae detection process
3.2 Minutiae detection result. The detection is based on the binary image. The left image is the unraveled 2D binary fingerprint. The right image is the corresponding minutiae detection result with quality larger than 50, where the minutiae are marked by small black squares
3.3 Results of running the NFIQ package on the example fingerprints. Each group shows the generated quality map (right) for the corresponding fingerprint image (left). The average quality of each quality map is shown below the images
4.1 Histogram of match and non-match distributions. All the data is scanned from index fingers
4.2 ROC of overall test data. All the data is scanned from index fingers. When the FAR is 0.01, the TAR is 0.891, and when the FAR is 0.1, the TAR is 0.988
4.3 Distribution of matching scores, when matched fingerprints are from the same finger
4.4 Distribution of matching scores, when matched fingerprints are from different fingers
4.5 ROC, when applying local quality classification
4.6 Distribution of matching scores, when matched fingerprints are from the same finger
4.7 Distribution of matching scores, when matched fingerprints are from different fingers
4.8 ROC, when applying overall quality classification
A 2D unraveled fingerprint images from subject 0 to 14
Chapter 1 Introduction

A fingerprint is an impression of the friction ridges of all or any part of the finger. Fingerprint identification (sometimes referred to as dactyloscopy) [8] is the process of comparing questioned and known friction ridge impressions from fingers, palms, and toes to determine whether the impressions come from the same finger (or palm, toe, etc.) [6, 8, 9]. Among all biometric techniques, fingerprint-based identification is the oldest, and it has been used successfully in numerous applications [10]. Each person's fingerprints are considered unique and immutable [2].

The science of fingerprint identification stands out among the forensic sciences for many reasons, including the following:

• It has served governments worldwide for the past 100 years by providing accurate identification of criminals. No two fingerprints have ever been found alike in many billions of human and automated computer comparisons. Fingerprints are the very foundation of criminal history records at every police agency [10, 11].

• It established the first forensic professional organization, the International Association for Identification (IAI), in 1915 [10].

• It established the first professional certification program for forensic scientists, the IAI's Certified Latent Print Examiner program (in 1977). The program issues certification to those meeting stringent criteria and revokes it for serious errors such as erroneous identifications [11].

• It remains the most commonly used forensic evidence worldwide. In most jurisdictions, fingerprint examination cases match or outnumber all other forensic examination casework combined [12].

• It continues to expand as the premier method for identifying persons, with tens of thousands of persons added to fingerprint repositories daily in America alone, far outdistancing similar databases in growth [7].

• It outperforms DNA and all other human identification systems in identifying murderers, rapists, and other serious offenders. Fingerprints solve ten times more unknown-suspect cases than DNA in most jurisdictions [10].

Other visible human characteristics change; fingerprints do not. Because friction ridge skin is flexible, no two finger or palm prints are ever exactly alike in every detail, not even two impressions recorded immediately one after the other. Fingerprint identification (also referred to as individualization) occurs when an expert, or an expert computer system operating under threshold scoring rules, determines that two friction ridge impressions originated from the same finger or palm (or toe, sole) to the exclusion of all others.

The history of fingerprinting can be traced back to prehistoric times, based on human fingerprints discovered on a large number of archaeological artifacts and historical items [9]. In 1686, Marcello Malpighi, a professor of anatomy at the University of Bologna, described ridges, spirals, and loops in fingerprints in his treatise. He made no mention of their value as a tool for individual identification. A layer of skin, the "Malpighi" layer, which is approximately 1.8 mm thick, was named after him [14].

During the 1870s, Dr. Henry Faulds, the British Surgeon-Superintendent of Tsukiji Hospital in Tokyo, Japan, took up the study of "skin-furrows" after noticing finger marks on specimens of "prehistoric" pottery [9]. A learned and industrious man, Dr. Faulds not only recognized the importance of fingerprints as a means of identification but also devised a method of classification.

In 1880, Faulds sent Charles Darwin an explanation of his classification system, together with a sample of the forms he had designed for recording inked impressions. Darwin, in advanced age and ill health, told Dr. Faulds that he could be of no assistance. He promised, however, to pass the materials on to his cousin, Francis Galton. Also in 1880, Dr. Faulds published an article in the scientific journal Nature. In it, he discussed fingerprints as a means of personal identification and the use of printer's ink to obtain such fingerprints [11, 12, 14]. He is also credited with the first fingerprint identification, made from a greasy fingerprint left on an alcohol bottle. Later, in 1892, Juan Vucetich made the first criminal fingerprint identification [12, 14].

Today, the largest AFIS repository in America is operated by the Department of Homeland Security's US-VISIT Program. It contains the fingerprints of over 63 million persons, mostly as two-finger records, which do not comply with FBI and Interpol standards. A fingerprint identification system is divided into four modules: (i) acquisition, (ii) preprocessing, (iii) feature extraction, and (iv) feature matching. This thesis mainly discusses acquisition and preprocessing.

1.1 Fingerprint Acquisition

There are many different ways of imaging the ridge and valley patterns of finger skin, each with its own strengths, weaknesses, and idiosyncrasies. By acquisition method, they can be classified as offline or online (live-scan) processes. An example of an offline process is inking the finger and then scanning the paper impression. A digital fingerprint image can be characterized by its resolution, area, number of pixels, dynamic range (depth), geometric accuracy, and image quality [15].
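Resolution, area, and number of pixels are related by simple arithmetic. At a resolution of R dots per inch, adjacent pixels are 25.4/R mm apart. An area of W × H mm therefore needs (W/25.4·R) × (H/25.4·R) pixels. The short Python sketch below makes this concrete. The function names are our own illustrative choices, and the 25.4 mm square is an arbitrary example, not a sensor specification.

```python
# Minimal sketch: relate sensing area, resolution (dpi) and pixel count.
# Function names and example numbers are illustrative assumptions.

MM_PER_INCH = 25.4


def pixel_pitch_mm(dpi: float) -> float:
    """Distance between adjacent pixel centres, in millimetres."""
    return MM_PER_INCH / dpi


def pixel_dims(width_mm: float, height_mm: float, dpi: float) -> tuple[int, int]:
    """Number of pixels needed to cover a width x height area at the given dpi."""
    return (round(width_mm / MM_PER_INCH * dpi),
            round(height_mm / MM_PER_INCH * dpi))


def coverage_mm(n_pixels: int, dpi: float) -> float:
    """Physical extent covered by n_pixels sampled at the given dpi."""
    return n_pixels * pixel_pitch_mm(dpi)


if __name__ == "__main__":
    print(f"pitch at 500 dpi: {pixel_pitch_mm(500):.4f} mm")  # pitch at 500 dpi: 0.0508 mm
    print(pixel_dims(25.4, 25.4, 500))                          # (500, 500)
    # Widest strip one 1392-pixel camera row could span at native 500 dpi:
    print(f"{coverage_mm(1392, 500):.2f} mm")                   # 70.71 mm
```

At the 500 dpi standard, the pixel pitch is 0.0508 mm. A row of the 1392-pixel camera mentioned later in this chapter could therefore span at most about 70.7 mm if it sampled the finger directly at 500 dpi.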

Fig. 1.1 Various types of fingerprint impressions: (a) rolled inked fingerprint (from NIST 4 database); (b) latent fingerprint; (c) fingerprint obtained using an optical sensor; (d) fingerprint obtained using a solid state sensor.

Fig. 1.2 Fingerprint sensors

The sensors in Fig. 1.2 can be classified into optical sensors, solid-state sensors, ultrasonic sensors, and others. The techniques currently in use are discussed in detail in [2, 15].

Among optical sensors, frustrated total internal reflection (FTIR) is the oldest and most widely used live-scan technique [16, 17]. Light is directed at a glass-to-air interface at an angle exceeding the critical angle for total internal reflection. Where the ridges touch the glass, the reflection is disrupted (frustrated). The valleys do not touch the glass, so the light there is still reflected. The reflected beam is focused through a lens onto a CCD or CMOS image sensor, which captures the fingerprint impression.

Because these devices image the real 3-D finger, they are very difficult to deceive with a photograph or printed image. However, the captured image is distorted because the fingerprint surface is not parallel to the imaging surface. Hologram-based methods help avoid this problem and provide fingerprints with high spatial fidelity [20, 21, 22]. They cannot be miniaturized, though, because shortening the optical path causes severe distortion at the image edges.

A relatively new and hygienic technology currently in use is direct, or non-contact, reading [3, 13]. It uses high-quality cameras that focus directly on the fingertip. The finger does not touch any surface, and a mechanical support helps the user hold the finger at a suitable distance. This technique overcomes most of the difficulties of optical live scanners, but obtaining well-focused, high-contrast images with it is very difficult.
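The FTIR principle follows from Snell's law. Light travelling from a medium of refractive index n1 into one of index n2 < n1 is totally reflected when its angle of incidence exceeds the critical angle θc = arcsin(n2/n1). The sketch below assumes an index of about 1.5 for the glass platen and, as a rough illustrative value, 1.4 for skin; neither value comes from this thesis. With these numbers, light at an assumed 45° incidence is totally reflected where there is an air gap (valleys). It is not totally reflected where skin touches the glass (ridges), which is why ridges appear dark in an FTIR image.

```python
import math


def critical_angle_deg(n_dense: float, n_rare: float) -> float:
    """Critical angle (degrees) for light passing from a denser to a rarer medium."""
    if n_rare >= n_dense:
        raise ValueError("total internal reflection requires n_rare < n_dense")
    return math.degrees(math.asin(n_rare / n_dense))


def is_totally_reflected(incidence_deg: float, n_dense: float, n_rare: float) -> bool:
    """True if light at this incidence angle is totally internally reflected."""
    if n_rare >= n_dense:
        return False  # no critical angle exists, so some light is always transmitted
    return incidence_deg > critical_angle_deg(n_dense, n_rare)


# Assumed refractive indices (typical textbook values, not measured in this thesis).
N_GLASS, N_AIR, N_SKIN = 1.5, 1.0, 1.4
INCIDENCE = 45.0  # assumed illumination angle inside the prism, in degrees

print(f"glass/air critical angle:  {critical_angle_deg(N_GLASS, N_AIR):.1f} deg")
print(f"glass/skin critical angle: {critical_angle_deg(N_GLASS, N_SKIN):.1f} deg")
print("valley (air gap) reflects totally:", is_totally_reflected(INCIDENCE, N_GLASS, N_AIR))
print("ridge (skin contact) reflects totally:", is_totally_reflected(INCIDENCE, N_GLASS, N_SKIN))
```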

Solid-state scanners became commercially available in the mid-1990s [25]. These sensors consist of an array of pixels, each of which is a tiny sensor itself. The user touches the silicon surface directly, which eliminates the need for optical components and a CCD or CMOS sensor. However, these scanners are costly.

A capacitive sensor [28, 29, 30, 31, 32, 33, 34] consists of a 2-D array of micro-capacitor plates embedded in a chip, with the finger acting as the other plate of each capacitor. When the finger is placed on the chip, small electrical charges form between the finger surface and the silicon chip. Their magnitude depends on the distance between the two. Ridges and valleys therefore produce different capacitance patterns across the plates, which can be mapped into a digital fingerprint image.

Thermal sensors are made of pyro-electric material that generates a current based on temperature differentials [23, 24]. The ridges, which touch the sensor surface, produce a different temperature differential than the valleys, which are away from the surface.
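The dependence of capacitance on distance can be illustrated with an idealized parallel-plate model, C = ε0·A/(t/εr + g). Here A is the plate area, t and εr are the thickness and relative permittivity of the protective coating, and g is the air gap between skin and coating (zero under a ridge). The pixel size, coating, and valley depth below are illustrative assumptions, not parameters of any particular sensor. Fringing fields are ignored.

```python
EPS0 = 8.854e-12  # vacuum permittivity in F/m


def pixel_capacitance(plate_side_m: float, coating_m: float,
                      coating_eps_r: float, air_gap_m: float) -> float:
    """Idealized parallel-plate capacitance of one sensor pixel.

    The dielectric coating and the air gap act as two capacitors in series,
    so their effective thicknesses (thickness / relative permittivity) add.
    """
    area = plate_side_m ** 2
    effective_gap = coating_m / coating_eps_r + air_gap_m  # air has eps_r = 1
    return EPS0 * area / effective_gap


# Illustrative assumptions only: 50 um pixel (roughly 500 dpi), 1 um oxide
# coating with relative permittivity 3.9, and a 50 um air gap under a valley.
PIXEL, COAT, EPS_COAT, VALLEY_GAP = 50e-6, 1e-6, 3.9, 50e-6

c_ridge = pixel_capacitance(PIXEL, COAT, EPS_COAT, air_gap_m=0.0)         # skin on coating
c_valley = pixel_capacitance(PIXEL, COAT, EPS_COAT, air_gap_m=VALLEY_GAP)  # air gap

print(f"ridge pixel:  {c_ridge * 1e15:.1f} fF")
print(f"valley pixel: {c_valley * 1e15:.3f} fF")
print(f"ridge/valley ratio: {c_ridge / c_valley:.0f}")
```

Even with these rough numbers, the ridge pixels have a capacitance two orders of magnitude larger than the valley pixels. That contrast is what the sensor maps into a grey-level image.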

Ultrasonic sensors send acoustic signals toward the fingertip and capture the echo, which is used to compute the ridge structure of the finger. The sensor has a transmitter that generates ultrasonic pulses and a receiver that detects the sound reflected from the finger surface [26, 27]. These scanners are resilient to dirt and oil on the fingerprint surface and therefore produce good-quality images. However, they are large and expensive and take a few seconds to acquire an image. The technology is also still in its nascent stage and needs further research and development.
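One simplified way to see why the echo reveals ridges is the acoustic reflection coefficient. At normal incidence on an interface between media of acoustic impedance Z1 and Z2, the reflected fraction of intensity is R = ((Z2 − Z1)/(Z2 + Z1))². The impedances below are rough textbook values in MRayl, assumed for illustration. Where a valley leaves air against the platen, almost all of the energy is reflected. Where a ridge touches the platen, a substantial part of the pulse passes into the finger, so the echo is weaker. Practical sensors may also use time-of-flight information, which this sketch does not model.

```python
def reflection_coefficient(z1: float, z2: float) -> float:
    """Fraction of incident acoustic intensity reflected at a flat interface
    between media of impedance z1 and z2 (normal incidence)."""
    return ((z2 - z1) / (z2 + z1)) ** 2


# Approximate acoustic impedances in MRayl (assumed, illustrative values).
Z_PLATEN_GLASS = 13.0
Z_SKIN = 1.6
Z_AIR = 0.0004

r_valley = reflection_coefficient(Z_PLATEN_GLASS, Z_AIR)  # platen / air gap
r_ridge = reflection_coefficient(Z_PLATEN_GLASS, Z_SKIN)  # platen / skin contact

print(f"valley echo strength: {r_valley:.3f}")
print(f"ridge echo strength:  {r_ridge:.3f}")
```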

Precise fingerprint image acquisition has some peculiar and challenging problems [35]. The imaging system introduces the following distortions and noise into the acquired images:

• The pressure and contact of the finger on the sensor surface determine how the three-dimensional shape of the finger is mapped onto the two-dimensional image. This mapping cannot be controlled, so repeated captures of the same finger yield inconsistently mapped images.

• To capture the ridge structure completely, the ridges must be in full contact with the sensor surface. Dry skin, skin disease, sweat, dirt, and humidity in the air can all prevent this.

• Accidents or injuries may cut or bruise the finger, changing the ridge structure permanently or semi-permanently. This can introduce spurious features or modify existing ones, which may produce false matches.

• Live-scan techniques usually acquire fingerprints with the dab method, in which the finger is pressed onto the surface without rolling. Important information is lost as a result.

• The inked fingerprint technique, which rolls the finger from nail to nail [36], is very cumbersome, slow, and messy.

Almost all of the aforementioned sensors suffer from these limitations [6, 8, 37]. Most of the limitations arise from contact between the finger and the sensor surface, so a direct-reading, non-contact 2D or 3D scanner can overcome most of them [6]. For these reasons, a new generation of non-contact fingerprint scanners has been developed.

Our fingerprint scan system is a 3D scan system based on the Phase Measuring Profilometry (PMP) technique. We have been developing it [1, 2, 94] to acquire 3-D scans of all five fingers and the palm at a resolution high enough to record ridge-level detail. It consists mainly of a ViewSonic PJ250 projector (1280 by 1024) and a Pulnix TM1400CL 8-bit camera (1392 by 1040) [2]. The prototype will be designed to sit on a table or desk, with an adjustable base for precise height adjustment. Our 3D fingerprint scan system has the following properties:

• To avoid distorting the fingerprint, our 3D fingerprint scan system is non-contact.

• Scanning time should be kept short. Currently, scanning one finger takes around 10 seconds. Our palm scan system, which is very similar to the 3D fingerprint scan system, scans 10 fingers in around 10 seconds, much faster than traditional fingerprint acquisition.

• After the 3D fingerprint data are obtained, a post-processing step unravels the 3D fingerprint so that it closely resembles a traditional rolled inked fingerprint. Because the scan system does not introduce distortion, the whole process of obtaining the 2D unraveled fingerprint can be distortion-free. Our newly proposed unraveling algorithm introduces no distortion, so we can set the dots per inch (dpi) of the whole fingerprint to the desired standard value. The existing unraveling algorithm is the spring algorithm proposed by Abhishika [2]. Compared with it, the new algorithm proposed in this thesis requires much less computation and also controls the dpi of the whole fingerprint. This post-processing algorithm is discussed in detail in Chapter 2.

• To acquire the 3D fingerprint, we implement a Phase Measuring Profilometry (PMP) 3D scan system, which is discussed in detail in [1, 3, 4, 5].
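For readers unfamiliar with PMP, the core computation is the standard N-step phase-shifting formula. Assume each pixel observes N sinusoidal patterns of the form I_n = A + B cos(φ − 2πn/N), n = 0, 1, ..., N−1. The wrapped phase is then φ = atan2(Σ I_n sin(2πn/N), Σ I_n cos(2πn/N)). The sketch below implements only this per-pixel step. The pattern frequencies, phase unwrapping, and phase-to-depth calibration used by our system are described in [1, 3, 4, 5] and are not reproduced here. The function names are our own.

```python
import math


def pmp_phase(intensities):
    """Wrapped phase, offset and modulation from N phase-shifted captures of one pixel.

    Assumes the n-th pattern (n = 0..N-1) produces I_n = A + B*cos(phi - 2*pi*n/N)
    at the camera, with N >= 3. Returns (phi, A, B) with phi in (-pi, pi].
    """
    n_steps = len(intensities)
    if n_steps < 3:
        raise ValueError("need at least three phase shifts")
    s = sum(i * math.sin(2 * math.pi * n / n_steps) for n, i in enumerate(intensities))
    c = sum(i * math.cos(2 * math.pi * n / n_steps) for n, i in enumerate(intensities))
    phi = math.atan2(s, c)                          # s = (N/2)B sin(phi), c = (N/2)B cos(phi)
    offset = sum(intensities) / n_steps             # A: ambient light + mean pattern level
    modulation = 2.0 / n_steps * math.hypot(s, c)   # B: fringe contrast
    return phi, offset, modulation


def synthesize(phi, offset, modulation, n_steps):
    """Simulate the N intensities a pixel with true phase phi would record."""
    return [offset + modulation * math.cos(phi - 2 * math.pi * n / n_steps)
            for n in range(n_steps)]


if __name__ == "__main__":
    captures = synthesize(1.2, 100.0, 50.0, 4)
    phi, a, b = pmp_phase(captures)
    print(f"phase={phi:.4f} rad, A={a:.1f}, B={b:.1f}")  # phase=1.2000 rad, A=100.0, B=50.0
```

Using atan2 rather than atan keeps the correct quadrant, so the recovered phase is only ambiguous by multiples of 2π. Phase unwrapping removes that ambiguity before the phase is converted to depth. The modulation B is also a convenient per-pixel reliability measure, since low fringe contrast indicates shadowed or poorly lit regions.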

In this thesis, we use our new 3D scan system to acquire the 3D fingerprint. We then unravel it into 2D with the best fit sphere algorithm proposed in this thesis, so that quality testing and matching systems can be applied to the result. Details of the 3D scan system are given in [1].

Most current techniques introduce distortion when acquiring or post-processing fingerprint data. Our system introduces none during 3D acquisition, because the scan is non-contact. The best fit sphere algorithm also introduces no distortion when unraveling the 3D data into a 2D fingerprint. In addition, it fully controls the dpi of the 2D fingerprint, so that every area is sampled at the 500 dpi standard. We applied the NIST quality and matching software to our unraveled 2D fingerprints. From the results, we conclude that higher-quality 2D fingerprints indicate higher matching performance.
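A sphere makes arc length easy to control. Along a great circle of radius r, an arc of length s subtends an angle s/r. Sampling at 500 dpi (0.0508 mm per pixel) therefore corresponds to an angular step of 0.0508/r radians, with r in mm. Our best fit sphere algorithm is described in Chapter 2. The Python sketch below is not that implementation; it uses a common algebraic least-squares sphere fit only to illustrate the idea. Writing the sphere as x² + y² + z² = 2ax + 2by + 2cz + d, with centre (a, b, c) and d = r² − a² − b² − c², makes the problem linear in (a, b, c, d). The function names and the synthetic test cap are our own assumptions.

```python
import math


def fit_sphere(points):
    """Algebraic least-squares sphere fit to a sequence of (x, y, z) points.

    Uses x^2 + y^2 + z^2 = 2ax + 2by + 2cz + d, which is linear in (a, b, c, d),
    and solves the 4x4 normal equations by Gaussian elimination.
    Returns ((a, b, c), r).
    """
    ata = [[0.0] * 4 for _ in range(4)]
    atb = [0.0] * 4
    for x, y, z in points:
        row = (2.0 * x, 2.0 * y, 2.0 * z, 1.0)
        rhs = x * x + y * y + z * z
        for i in range(4):
            atb[i] += row[i] * rhs
            for j in range(4):
                ata[i][j] += row[i] * row[j]

    # Forward elimination with partial pivoting on [A^T A | A^T b].
    m = [ata[i] + [atb[i]] for i in range(4)]
    for col in range(4):
        pivot = max(range(col, 4), key=lambda rr: abs(m[rr][col]))
        if abs(m[pivot][col]) < 1e-12:
            raise ValueError("degenerate point set (e.g. all points coplanar)")
        m[col], m[pivot] = m[pivot], m[col]
        for rr in range(col + 1, 4):
            factor = m[rr][col] / m[col][col]
            for k in range(col, 5):
                m[rr][k] -= factor * m[col][k]

    # Back substitution.
    sol = [0.0] * 4
    for i in range(3, -1, -1):
        acc = sum(m[i][k] * sol[k] for k in range(i + 1, 4))
        sol[i] = (m[i][4] - acc) / m[i][i]

    a, b, c, d = sol
    return (a, b, c), math.sqrt(d + a * a + b * b + c * c)


def angular_step_for_dpi(radius_mm, dpi=500.0):
    """Angle (radians) spanned by one pixel along a great circle at the given dpi."""
    return (25.4 / dpi) / radius_mm


if __name__ == "__main__":
    # Synthetic fingertip-like cap on a sphere of radius 10 mm centred at (1, 2, 3).
    pts = []
    for i in range(10):
        for j in range(10):
            polar, azim = 0.2 + 0.1 * i, 0.15 * j
            pts.append((1 + 10 * math.sin(polar) * math.cos(azim),
                        2 + 10 * math.sin(polar) * math.sin(azim),
                        3 + 10 * math.cos(polar)))
    centre, radius = fit_sphere(pts)
    print("centre:", [round(v, 6) for v in centre], "radius:", round(radius, 6))
    print(f"angular step at 500 dpi: {angular_step_for_dpi(radius):.5f} rad")
```

The constant angular step holds along great circles. Along circles of latitude, the arc length is r·sin(θ)·Δφ, so a grid that is uniform in both angles is not uniformly spaced everywhere on the sphere. Any practical unraveling has to account for this.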

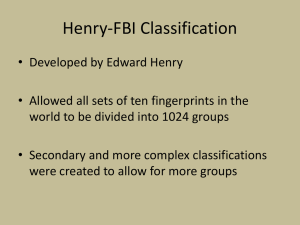

1.2 Classification of Fingerprint

Before computerization replaced manual filing systems in large fingerprint operations, manual classification systems were used to categorize fingerprints by general ridge formation, such as the presence or absence of circular patterns on various fingers. This allowed paper records in large collections to be filed and retrieved by friction ridge pattern. Retrieval was thus independent of name, birth date, and other biographic data that persons may misrepresent. The most popular ten-print classification systems include the Roscher system, the Vucetich system, and the Henry system [38]. The Roscher system was developed in Germany and implemented in both Germany and Japan. The Vucetich system was developed in Argentina and implemented throughout South America. The Henry system was developed in India and implemented in most English-speaking countries.

In the Henry system of classification, there are three basic fingerprint patterns: arch, loop, and whorl. More complex classification systems break these patterns down further, for example arches into plain arches and tented arches. Loops may be radial or ulnar, depending on the side of the hand toward which the tail points. Whorls also have sub-group classifications, including plain whorls, accidental whorls, double loop whorls, and central pocket loop whorls [2].

Fig 1.3 Classification of fingerprints. Panels: plain arch, tented arch, ulnar loop, radial loop, plain whorl, central pocket loop, double loop whorl, accidental whorl.

The five classes commonly used by today's classification techniques are (i) arch, (ii) tented arch, (iii) left loop, (iv) right loop, and (v) whorl (Figure 1.3). The distribution of these classes in nature is not uniform: the probabilities of the arch, left loop, right loop, tented arch, and whorl classes are approximately 0.037, 0.338, 0.317, 0.029, and 0.279, respectively [39]. To classify fingerprint images, some features have to be extracted.

In particular, almost all methods are based on one or more of the following features: the directional image, singular points, ridge flow, and structural features. A directional image effectively summarizes the information contained in a fingerprint pattern and can be reliably computed from noisy fingerprints. In addition, the local directions in damaged areas can be restored by a regularization process; hence, directional images are the most widely used feature for fingerprint classification. The ridge lines often produce local singularities, called the core and the delta, where they deviate from their otherwise parallel flow. The core is defined as the point at the top of the innermost curving ridge, and the delta as the point where two ridges running side by side diverge closest to the core. These singular points are very useful for aligning fingerprints with respect to a fixed point and for classification. Ridge flow is an important discriminating characteristic; it is typically extracted from the directional image, or by binarizing and thinning the image so that each ridge is represented by a single-pixel line. Ridge-flow features are more robust than singular points for classification purposes. Structural features record the relationships between low-level elements such as minutiae, local ridge orientation, or local ridge patterns, and can also be useful for fingerprint classification.
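The directional image mentioned above is often computed with a gradient-based least-squares estimate. The sketch below is a minimal NumPy version of that generic technique, assuming a grayscale image stored as a 2D array and non-overlapping 16×16 blocks (the block size is an illustrative choice); it is not the method of any particular classifier cited here.

```python
import numpy as np

def block_orientation(img, block=16):
    """Dominant ridge orientation of each non-overlapping block, in radians
    in [0, pi), measured from the column (x) axis."""
    img = np.asarray(img, dtype=float)
    gy, gx = np.gradient(img)  # derivatives along rows (y) and columns (x)
    n_rows, n_cols = img.shape[0] // block, img.shape[1] // block
    theta = np.zeros((n_rows, n_cols))
    for i in range(n_rows):
        for j in range(n_cols):
            rs = slice(i * block, (i + 1) * block)
            cs = slice(j * block, (j + 1) * block)
            bx, by = gx[rs, cs], gy[rs, cs]
            # Doubled-angle averaging so opposite gradients reinforce
            vx = np.sum(2.0 * bx * by)
            vy = np.sum(bx ** 2 - by ** 2)
            grad_dir = 0.5 * np.arctan2(vx, vy)
            # Ridges run perpendicular to the dominant gradient direction
            theta[i, j] = (grad_dir + np.pi / 2) % np.pi
    return theta
```

Averaging doubled angles is the usual design choice here, because a gradient and its opposite describe the same ridge direction and would otherwise cancel.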

To sum up, human fingerprints are unique to each person and can be regarded as a kind of signature certifying the person's identity. The best-known application is in criminology. Nowadays, however, automatic fingerprint matching is becoming increasingly popular in systems that control access to physical locations, computer and network resources, or bank accounts, and that register employee attendance in enterprises. To improve accuracy, a more reliable way of acquiring and preprocessing fingerprint data is necessary. For more details, see [2].

1.3 Fingerprint Matching


Fig. 1.4 A sample fingerprint image showing different ridge patterns and minutiae types.

The uniqueness of a fingerprint is determined by the topographic relief of its ridge structure,

which exhibits anomalies in local regions of the fingertip, known as minutiae. The position

and orientation of these minutiae are used to represent and match fingerprints [40]. A

sample fingerprint image with the various ridge patterns and the common minutiae types

marked is shown in Fig. 1.4. Minutiae are the discontinuities of the ridges:

• Endings: the points at which a ridge stops
• Bifurcations: the points at which one ridge divides into two
• Dots: very small ridges
• Islands: ridges slightly longer than dots, occupying the middle space between two temporarily divergent ridges
• Ponds or lakes: empty spaces between two temporarily divergent ridges
• Spurs: notches protruding from a ridge
• Bridges: small ridges joining two longer adjacent ridges
• Crossovers: two ridges that cross each other

The core is the inner point, normally in the middle of the print, around which swirls,

loops, or arches center. It is frequently characterized by a ridge ending and several acutely

curved ridges.

Deltas are the points, normally at the lower left and lower right of the fingerprint, around which a triangular series of ridges centers.

There are many kinds of minutiae; the basic and composite ones are listed in Table 1.1.

Table 1.1 Basic and composite ridge characteristics (minutiae)
• Ridge ending
• Bifurcation
• Dot
• Island (short ridge)
• Pond
• Spur
• Bridge
• Crossover
• Double bifurcation
• Trifurcation
• Opposed bifurcation
• Ridge ending/opposed bifurcation

The ridge patterns, together with the core and delta, define the global configuration of a fingerprint, while the minutiae points define its local structure. Typically, the global configuration is used to determine the class of the fingerprint, while the distribution of minutiae points is used to match two fingerprints and establish their similarity. Fingerprint matching techniques fall into two categories: minutiae-based and correlation-based. Minutiae-based techniques first find minutiae points and then map their relative placement on the finger. This approach has some difficulties: it is hard to extract minutiae points accurately when the fingerprint is of low quality, and the method does not take into account the global pattern of ridges and furrows [38, 39, 40]. The correlation-based method can overcome some of these difficulties, but it has shortcomings of its own: correlation-based techniques require the precise location of a registration point and are affected by image translation and rotation.

Fig 1.5 Matching process between two fingerprints.

Fingerprint matching based on minutiae has problems in matching minutiae patterns of different sizes (unregistered patterns), and local ridge structures cannot be completely characterized by minutiae [41]. An alternative representation of fingerprints captures more local information and yields a fixed-length code for each fingerprint; matching then becomes the relatively simple task of calculating the Euclidean distance between the two codes [42].

Generally, as shown in Fig 1.5, an ordinary fingerprint contains about 50 minutiae. The location and direction of each minutia are extracted, and matching is based on this information. However, location and direction alone are not enough for fingerprint identification, because fingerprint skin is flexible. For this reason, additional information called a "relation" can be added: the number of ridges between minutiae. Combined with the minutia information, this relation information significantly improves matching accuracy. In this thesis, we use BOZORTH3, developed by NIST, as the matching system to test our results. In this application, the degree of similarity is given by a similarity score, which we discuss in detail in Chapter 3.
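To make the "relation" idea concrete, the sketch below describes a pair of minutiae by their distance, each minutia's direction relative to the line joining them, and the ridge count between them; none of these change when the print is translated or rotated. It is a hypothetical illustration only, not the algorithm used by BOZORTH3; the names `Minutia`, `pair_descriptor`, and `pairs_compatible` and the tolerance values are assumptions.

```python
import math
from dataclasses import dataclass

@dataclass
class Minutia:
    x: float      # column position in pixels
    y: float      # row position in pixels
    angle: float  # minutia direction in radians

def pair_descriptor(m1, m2, ridge_count):
    """Translation/rotation-invariant description of a minutia pair.
    ridge_count (the "relation") is supplied by the caller."""
    dx, dy = m2.x - m1.x, m2.y - m1.y
    dist = math.hypot(dx, dy)
    line = math.atan2(dy, dx)
    # Directions of both minutiae relative to the connecting line
    a1 = (m1.angle - line) % (2 * math.pi)
    a2 = (m2.angle - line) % (2 * math.pi)
    return dist, a1, a2, ridge_count

def _angle_diff(a, b):
    d = abs(a - b) % (2 * math.pi)
    return min(d, 2 * math.pi - d)

def pairs_compatible(p, q, dist_tol=8.0, angle_tol=math.radians(15)):
    """Hypothetical rule: distances and relative angles agree within
    tolerance and the ridge counts are equal."""
    return (abs(p[0] - q[0]) <= dist_tol
            and _angle_diff(p[1], q[1]) <= angle_tol
            and _angle_diff(p[2], q[2]) <= angle_tol
            and p[3] == q[3])
```

Requiring equal ridge counts is a strict choice; a tolerance of one ridge would be a reasonable relaxation for noisy prints.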

1.4 Previous Work

Ganesh set up the first fingerprint scanner [3, 4]: a 3D scan system based on the multi-frequency Phase Measuring Profilometry (PMP) technique. As part of this effort, we have been developing a non-contact scanning system (Fig. 1.6) that uses multiple high-resolution commodity digital cameras and employs Structured Light Illumination (SLI) [1, 4] to acquire 3D scans of all five fingers and the palm at a resolution high enough to record ridge-level detail. The system will operate with both Autonomous Entry and Operator Controlled Entry interfaces. The prototype is designed to sit on top of a table or desk, with an adjustable base for precise height adjustment. For more details about the setup of the 3D fingerprint scan system, see [3].

Fig 1.6 Experimental setup used for the 3D fingerprint scanner.

After the 3D fingerprint is acquired, Abhishika [2] uses a spring algorithm to unravel it into a 2D fingerprint. She also describes quantitative measures that help evaluate 2D unraveled fingerprints; specifically, she uses image software components developed by the National Institute of Standards and Technology (NIST) to derive the performance metrics. She compares 2D fingerprint images obtained by traditional means with 2D images obtained by unrolling the 3D scans, and quantifies the quality of the acquired scans with these metrics. The results show that the 2D inked and the 3D unraveled fingerprints have similar quality distributions and trends. However, with the spring algorithm and the experimental 3D fingerprint scanner, the 2D inked fingerprints show higher performance than the 3D fingerprints. In this thesis, by employing the new 3D fingerprint scanner and the best fit sphere unraveling algorithm, we not only reduce the post-processing computation but also obtain 3D fingerprints that perform better than the 2D inked fingerprints. In addition, we introduce the matching software and show that the quality of the 3D unraveled fingerprints is strongly related to matching performance: a higher-quality fingerprint is more likely to achieve better matching performance.

Chapter 2 Post Processing of 3D Fingerprint

To assess our 3D fingerprint system, we need a way to evaluate the 3D fingerprint data and compare it with 2D inked fingerprints. Since almost all fingerprints in use today are 2D, the most commonly used evaluation systems are also based on 2D data. We therefore first unravel the 3D fingerprints into 2D and extract all the ridge information before assessing the new method. A 2D equivalent rolled image of the 3D scanned fingerprint is necessary to (i) use NIST software to extract minutiae, analyze quality, and match fingerprints, and (ii) compare our results with others. To obtain a 2D equivalent rolled image from the extracted finger surface, Abhishika used a spring algorithm [2]. However, the spring algorithm is computationally expensive. To make the computation more efficient and to improve the quality of the unraveled results, we introduce the best fit sphere algorithm. At the end of this chapter, NIST fingerprint quality software is applied to the fingerprints unraveled by the best fit sphere algorithm, and the results are compared with those for 2D inked fingerprints and for fingerprints unraveled by the spring algorithm.

2.1 Fit a Sphere to the 3D Surface

2.1.1 Calculate the Sphere

The first step in unraveling the fingerprint is to fit a sphere to the 3D data. A sphere is defined by its center point (xc, yc, zc) and its radius r [43, 44]. The goal here is therefore a program that computes the center point and the radius by least squares, i.e., the sphere that minimizes the sum of the squared distances from the points on the fingerprint to the sphere surface.

Fig. 2.1 Three different views of the original input 3D fingerprint.

Because our purpose is to minimize the sum of the squared distances, we need the distance from each point to the center of the sphere. Its square is given by

$$d_0 = (x_f - x_c)^2 + (y_f - y_c)^2 + (z_f - z_c)^2 \tag{2.1}$$

where (xf, yf, zf) is a point of the 3D fingerprint and (xc, yc, zc) is the center of the sphere. From d0, the distance from each point on the fingerprint surface to the surface of the sphere is

$$d = \sqrt{(x_f - x_c)^2 + (y_f - y_c)^2 + (z_f - z_c)^2} - r \tag{2.2}$$

That is, the distance from the sphere surface to a fingerprint point equals the distance from the center of the sphere to that point minus the radius [45, 46]. By Eq. (2.2), this distance is negative if the fingerprint point is inside the sphere and positive if it is outside [47]. The sign does not matter for the fit, because the distance is squared, but it is useful in later computations. Suppose we have n input points (xf1, yf1, zf1), ..., (xfn, yfn, zfn). A point lies on the sphere when the right-hand side of Eq. (2.1) equals r². Expanding this condition and setting a = −2xc, b = −2yc, c = −2zc, and d0 = r² − (xc² + yc² + zc²) (d0 is reused here for this constant term), we obtain for each point

$$a x_f + b y_f + c z_f - d_0 + (x_f^2 + y_f^2 + z_f^2) = 0 \tag{2.3}$$

When n > 4, the n equations can be written as the overdetermined homogeneous system

$$\begin{bmatrix} x_{f1} & y_{f1} & z_{f1} & -1 & x_{f1}^2 + y_{f1}^2 + z_{f1}^2 \\ \vdots & \vdots & \vdots & \vdots & \vdots \\ x_{fn} & y_{fn} & z_{fn} & -1 & x_{fn}^2 + y_{fn}^2 + z_{fn}^2 \end{bmatrix} \begin{bmatrix} a \\ b \\ c \\ d_0 \\ s \end{bmatrix} = 0 \tag{2.4}$$

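The parameter s is a scale value. Writing the coefficient matrix of the system above as A, we solve it by the singular value decomposition A = UDV^T: the last column of V (the right singular vector with the smallest singular value) is the solution. Dividing by s gives a = a/s, b = b/s, c = c/s, and d0 = d0/s. Completing the squares in the per-point equation gives (x + a/2)² + (y + b/2)² + (z + c/2)² = (a² + b² + c²)/4 + d0, so the center point is (xc, yc, zc) = (−a/2, −b/2, −c/2) and the radius is $r = \sqrt{(a^2 + b^2 + c^2)/4 + d_0}$.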
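The least-squares sphere fit above can be sketched in a few lines of NumPy. This sketch builds one row [x, y, z, −1, x² + y² + z²] per point, takes the right singular vector belonging to the smallest singular value, and recovers the center and radius as described. Note that it minimizes the algebraic residual of each row rather than the geometric distance of Eq. (2.2); for points lying close to a sphere the two give similar results. The function names are illustrative.

```python
import numpy as np

def fit_sphere_svd(points):
    """Algebraic least-squares sphere fit via SVD.

    points: (n, 3) array of fingerprint surface points with n > 4.
    Returns (center, radius).
    """
    p = np.asarray(points, dtype=float)
    if p.ndim != 2 or p.shape[1] != 3 or p.shape[0] < 5:
        raise ValueError("need an (n, 3) array with n > 4")
    x, y, z = p[:, 0], p[:, 1], p[:, 2]
    # One row per point: [x, y, z, -1, x^2 + y^2 + z^2]; unknowns [a, b, c, d0, s]
    A = np.column_stack([x, y, z, -np.ones_like(x), x**2 + y**2 + z**2])
    # Solution = right singular vector of the smallest singular value,
    # i.e. the last row of Vt (the last column of V)
    _, _, vt = np.linalg.svd(A, full_matrices=False)
    a, b, c, d0, s = vt[-1]
    a, b, c, d0 = a / s, b / s, c / s, d0 / s
    center = -0.5 * np.array([a, b, c])
    radius = np.sqrt((a**2 + b**2 + c**2) / 4.0 + d0)
    return center, radius

def signed_distance(points, center, radius):
    """Eq. (2.2): negative inside the sphere, positive outside."""
    return np.linalg.norm(np.asarray(points, dtype=float) - center, axis=1) - radius
```

Using `full_matrices=False` keeps U at n×5, which matters when n is on the order of a million scan points.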

Fig. 2.2 3D views of the fingerprint data and the sphere. For a clear view, the data are greatly downsampled, from 1392×1040 to 65×50. Blue points '.' are points from the fingerprint data; red points '*' are points on the best fit sphere. From left to right are four different views of the 3D data.

After obtaining r and the center of the sphere, the last step in finding the best fit sphere is to move the north pole of the sphere to the center of the fingerprint. This step is necessary because after unraveling we want the 2D fingerprint to be located at the center of the image, and it also helps considerably when we downsample the whole image to 500 dpi, as discussed later in this chapter. We do this by keeping the sphere unchanged and rotating the whole 3D fingerprint so that the center of the fingerprint is rotated to the north pole of the sphere.

2.1.2 Change the North Pole of the Sphere

Computing only the center and radius of the sphere tells us little about where the north pole of the sphere is; the unravel center may not even lie on the 3D fingerprint surface. To ensure that the unravel center is also the center of the fingerprint, we first move the origin to the center of the sphere and then rotate the whole fingerprint so that the north pole points through the center of the fingerprint.

As shown in Fig. 2.3, where the light lines represent the axes, after the translation and rotation the unravel center, which lies on the z axis, is moved onto the surface of the fingerprint, and the z axis passes through the center of the fingerprint point cloud. This step is important for reducing the distortion introduced by the sphere-fit unraveling program.
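The translation and rotation can be sketched as follows. The thesis does not specify how the fingerprint center is chosen or how the rotation is computed; this sketch assumes the center is the direction of the point-cloud centroid as seen from the sphere center, and builds the rotation with Rodrigues' formula. The function names are hypothetical.

```python
import numpy as np

def rotation_to_z(v):
    """Rotation matrix R with R @ (v / |v|) = [0, 0, 1] (Rodrigues' formula)."""
    a = np.asarray(v, dtype=float) / np.linalg.norm(v)
    z = np.array([0.0, 0.0, 1.0])
    axis = np.cross(a, z)
    s = np.linalg.norm(axis)       # sine of the rotation angle
    cth = float(np.dot(a, z))      # cosine of the rotation angle
    if s < 1e-12:                  # already aligned, or exactly opposite
        return np.eye(3) if cth > 0 else np.diag([1.0, -1.0, -1.0])
    k = axis / s
    K = np.array([[0.0, -k[2], k[1]],
                  [k[2], 0.0, -k[0]],
                  [-k[1], k[0], 0.0]])
    return np.eye(3) + s * K + (1.0 - cth) * (K @ K)

def move_north_pole(points, center):
    """Translate so the sphere center is the origin, then rotate so the
    centroid of the fingerprint points lies on the +z axis (north pole)."""
    q = np.asarray(points, dtype=float) - np.asarray(center, dtype=float)
    R = rotation_to_z(q.mean(axis=0))
    return q @ R.T
```

Because the sphere stays fixed and the points only undergo a rigid motion, every point keeps its distance to the sphere center, so the fit does not need to be recomputed.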

Fig. 2.3 Comparison before and after moving the north pole. The black point clouds are points downsampled from the surface of the 3D fingerprint, and the light lines are the axes. (a) Before the north pole is moved, the unravel center is not on the fingerprint's surface. (b) After the rotation and translation, the unravel center is moved to the fingerprint's center.

2.2 Unravel the 3D Fingerprint

2.2.1 Create Grid Data

Generally, the part of the finger near the camera has a higher point density than the part far from the camera, so the points on the fingerprint are not evenly distributed. Before unraveling the fingerprint, we therefore create corresponding grid data so that the points are evenly distributed over the surface. To achieve this, the whole data set is converted from Cartesian (x, y, z) coordinates to spherical (theta, phi, rho) coordinates, and a uniform mesh in theta and phi is created. If the fingerprint fitted the sphere perfectly, this mesh would be projected evenly onto the fingerprint; the value at each mesh point is computed by linear interpolation of the fingerprint data. To preserve more information from the original 3D fingerprint, the mesh is generally created with 3 or 4 times the density of the original 3D fingerprint data. The mesh is shown in Fig. 2.4.
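The coordinate conversion and the uniform mesh can be sketched in NumPy as below. The thesis does not state its angle convention; because the north pole now points at the fingerprint, this sketch assumes a convention in which +z maps to theta = phi = 0, which keeps the mesh regular around the fingerprint center (a standard polar angle would be singular there). Interpolating rho onto the mesh, which the text does linearly, could be done with a scattered-data routine such as `scipy.interpolate.griddata(points, values, xi, method='linear')`; it is omitted here to keep the sketch dependency-free.

```python
import numpy as np

def to_spherical(points):
    """(x, y, z) -> (theta, phi, rho) around a sphere center at the origin.

    Assumed convention: +z (north pole) maps to theta = phi = 0;
    theta is the angle in the x-z plane, phi the elevation toward +y.
    """
    x, y, z = np.asarray(points, dtype=float).T
    rho = np.sqrt(x**2 + y**2 + z**2)
    theta = np.arctan2(x, z)
    phi = np.arctan2(y, np.hypot(x, z))
    return theta, phi, rho

def from_spherical(theta, phi, rho):
    """Inverse of to_spherical; returns an (n, 3) array."""
    x = rho * np.cos(phi) * np.sin(theta)
    y = rho * np.sin(phi)
    z = rho * np.cos(phi) * np.cos(theta)
    return np.column_stack([x, y, z])

def uniform_mesh(theta, phi, n_theta, n_phi, oversample=3):
    """Evenly spaced (theta, phi) mesh covering the data, `oversample`
    times denser than an n_theta x n_phi grid (the text uses 3 to 4)."""
    t = np.linspace(theta.min(), theta.max(), n_theta * oversample)
    p = np.linspace(phi.min(), phi.max(), n_phi * oversample)
    return np.meshgrid(t, p)  # each of shape (n_phi*oversample, n_theta*oversample)
```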

Fig. 2.4 Value distributions of theta and phi: (a) theta; (b) phi. Both panels span roughly 900 × 1200 mesh points. Theta and phi, whose values are evenly distributed, together form a mesh that is projected onto the 3D fingerprint. The density of this mesh is 3 times that of the 3D fingerprint data.

Fig. 2.5 Grid data created on the 3D fingerprint. Each point is a mesh point. For a clear view, the data are greatly downsampled from 1392×1040 to 130×100. From left to right are four different views of the 3D data.

Fig. 2.5 shows that, besides the higher density of the part of the finger near the camera, the point density varies because the 3D fingerprint does not fit the best fit sphere perfectly: the density becomes higher where the two surfaces are closer. The mesh itself, however, is evenly spaced, as Fig. 2.4 shows. Further unraveling is based on this grid data: once the theta and phi maps are obtained, the rho values are interpolated onto them to form a new rho map, on which the unraveling is performed. In fact, no sphere can fit a 3D fingerprint perfectly, and since the theta and phi maps are linear (Fig. 2.4), this misfit results in unevenly spaced points in 3D. Thus, when we unravel the 3D fingerprint into a 2D fingerprint, the best fit sphere algorithm itself introduces distortion, and further steps to reduce it are needed; these are discussed later in this chapter.

2.2.2 Unravel the 3D Surface

Once the rho value has been computed for each mesh point, the fingerprint is unraveled by unraveling the mesh itself: the computed rho values are simply arranged according to the theta and phi maps of Fig. 2.4.

Fig. 2.6 Unraveled 2D fingerprint. Its density is 3 times the original density of the 3D fingerprint.

The unraveled result is given in Fig. 2.6. As expected, a better-fitting sphere results in a better unraveled 2D fingerprint. This figure is created from the theta and phi maps shown in Fig. 2.4: the rho values of the fingerprint are interpolated onto the theta and phi maps to create a new rho map. This new rho map has two main uses. First, it is used to create the unraveled fingerprint: a band-pass filter is applied to it so that we obtain how the rho values vary along the surface of the finger. Second, it is used to correct the distortion of the fingerprint caused by the best fit sphere algorithm.

2.2.3 Apply Filters to the Unraveled 2D Fingerprint

After obtaining the new rho map shown in Fig. 2.6, we first use it to obtain the unraveled 2D fingerprint, which is the variation of rho along the surface of the fingerprint. To achieve this, a band-pass filter is applied to extract the finger ridges. We implement the band-pass filter in two steps: we first apply a high-pass filter, by subtracting a Gaussian low-pass version of the map, and then remove the high-frequency noise with a low-pass filter.
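A minimal sketch of this two-step band-pass filter follows, assuming Gaussian filters for both steps; the sigma values are parameters to be tuned, not values taken from the thesis. It uses only NumPy, with reflected borders so that the image edges are less affected (the text crops the edge data anyway).

```python
import numpy as np

def gaussian_kernel(sigma):
    """Normalized 1D Gaussian kernel truncated at 3 sigma."""
    radius = max(1, int(3 * sigma))
    x = np.arange(-radius, radius + 1, dtype=float)
    k = np.exp(-x**2 / (2.0 * sigma**2))
    return k / k.sum()

def gaussian_blur(img, sigma):
    """Separable Gaussian low-pass filter with reflected borders."""
    k = gaussian_kernel(sigma)
    r = len(k) // 2
    out = np.pad(np.asarray(img, dtype=float), r, mode="reflect")
    out = np.apply_along_axis(lambda v: np.convolve(v, k, mode="valid"), 0, out)
    out = np.apply_along_axis(lambda v: np.convolve(v, k, mode="valid"), 1, out)
    return out

def band_pass(rho_map, sigma_low, sigma_high):
    """High-pass (subtract a wide Gaussian blur), then low-pass (narrow blur)."""
    high = np.asarray(rho_map, dtype=float) - gaussian_blur(rho_map, sigma_low)
    return gaussian_blur(high, sigma_high)
```

The wide blur (`sigma_low`) should be large compared with the ridge period so that it captures only the finger's overall shape, while the narrow blur (`sigma_high`) should be small compared with it so that ridges survive.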

Fig. 2.7 2D fingerprint after the high-pass filter. The Gaussian low-pass filter is a 20×20 filter with hsize equal to 3×3 and sigma equal to 40. (a) The low-frequency component removed by the filter; (b) the unraveled 2D fingerprint after subtracting this low-frequency component.

As Fig. 2.7(b) shows, after the high-pass filter the unraveled 2D fingerprint is nearly complete. However, there is a lot of high-frequency noise from the 3D acquisition process. The frequency of this noise is generally much higher than the frequency of the ridges on the finger, so an appropriate low-pass filter is needed to reduce it. Taken together, the high-pass and low-pass filters form a band-pass filter applied to the unraveled 2D fingerprint. After filtering, the meaningless edge data created by the filter are cropped out, and histogram equalization is applied to the data.
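Histogram equalization maps each intensity through the normalized cumulative histogram. A minimal NumPy sketch follows, assuming the filtered map is first rescaled to 256 gray levels; the color inversion used for Fig. 2.8 would then be `255 - out`. The function name is illustrative.

```python
import numpy as np

def hist_equalize(img):
    """Histogram-equalize a real-valued image into 256 gray levels (uint8)."""
    img = np.asarray(img, dtype=float)
    lo, hi = img.min(), img.max()
    if hi == lo:                                  # flat image: nothing to spread
        return np.zeros(img.shape, dtype=np.uint8)
    q = np.round((img - lo) / (hi - lo) * 255).astype(int)  # quantize to 0..255
    hist = np.bincount(q.ravel(), minlength=256)
    cdf = np.cumsum(hist).astype(float)
    cdf_min = cdf[cdf > 0][0]
    # Lookup table: normalized cumulative histogram stretched to 0..255
    lut = np.round((cdf - cdf_min) / (cdf[-1] - cdf_min) * 255)
    return lut[q].astype(np.uint8)
```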

Fig. 2.8 2D fingerprint after the post-filtering process. To resemble an inked fingerprint, the intensities are inverted. The image size is [870, 1180], three times the point density of the original 3D fingerprint data.

2.2.4 Down Sample to Standard

The standard resolution is 500 dpi, i.e., 500 dots per inch. To preserve as much information from the original scanned 3D fingerprint data as possible, the unraveling is performed on data 3 times denser than the original, which for this sample is 4 times denser than the standard. The downsampling algorithm is straightforward: we compute how many points per inch the unraveled data contain, and then downsample the data to reach the 500 dpi standard.
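The "points per inch" computation is simple arithmetic on the spacing between neighboring samples. As a rough check with the spacings of about 0.013 mm seen in Fig 2.9, 25.4 / 0.013 ≈ 1950 dpi, roughly four times the 500 dpi standard. The sketch below assumes the mesh points along one line are available as 3D coordinates in millimeters; the function names are illustrative.

```python
import numpy as np

MM_PER_INCH = 25.4
TARGET_DPI = 500

def line_spacing(line_xyz):
    """Euclidean distance (mm) between consecutive 3D points on one mesh line."""
    p = np.asarray(line_xyz, dtype=float)
    return np.linalg.norm(np.diff(p, axis=0), axis=1)

def dpi_from_spacing(spacing_mm):
    """Resolution implied by the mean spacing between neighboring samples."""
    return MM_PER_INCH / float(np.mean(spacing_mm))

def downsample_factor(spacing_mm, target_dpi=TARGET_DPI):
    """How many times denser than the target the current sampling is."""
    return dpi_from_spacing(spacing_mm) / target_dpi
```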

The following plot shows the distances between neighboring points along the theta direction; the phi direction is treated in the same way.

Fig 2.9 Plot of distance along theta direction

Since these waveforms contain noise, scaling directly to 500 dpi would also take into account the distance variations caused by noise and ridges, which is not wanted. After computing these distances, we therefore first smooth the waveforms with a fast Fourier transform (FFT) based low-pass filter.
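A minimal sketch of an FFT-based low-pass smoother follows, assuming a hard cut-off that keeps only the lowest `keep` frequency bins; the cut-off is a tuning parameter, not a value from the thesis. Because the FFT treats the signal as periodic, a real spacing profile whose two ends differ may show some ringing near the ends; tapering or padding the signal first would reduce this.

```python
import numpy as np

def fft_lowpass(signal, keep):
    """Zero every rFFT bin from index `keep` upward (DC is bin 0) and
    transform back: a hard cut-off low-pass filter."""
    x = np.asarray(signal, dtype=float)
    spec = np.fft.rfft(x)
    spec[keep:] = 0.0
    return np.fft.irfft(spec, n=len(x))
```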

Fig 2.10 Plot of distance along theta direction after FFT

The unit used here is the millimeter. For 500 dpi, the distance between neighboring points must be

$$D = \frac{25.4\ \text{mm}}{500} = 0.0508\ \text{mm}$$

Resampling at this spacing gives the distances shown in Fig 2.11.

Fig 2.11 Distance along theta direction after scaling to 500 dpi

As Fig 2.11 shows, the distances between neighboring points are now around 0.0508 mm, which corresponds to 500 dpi. Thus, the dpi values along the two center lines are changed to 500, and on this basis we take the whole fingerprint as downsampled to 500 dpi; Section 2.2.5 shows that this does not yet hold near the edges.
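Given the smoothed spacing profile, resampling to D = 0.0508 mm can be done by accumulating arc length and interpolating at multiples of D. This sketch assumes one value per point along a line and linear interpolation between points; the names are illustrative.

```python
import numpy as np

D_MM = 25.4 / 500  # 0.0508 mm between samples at 500 dpi

def resample_uniform(values, spacing_mm, step=D_MM):
    """Resample `values` (one per point on a line) so that consecutive output
    samples are `step` mm apart along the surface.

    spacing_mm[i] is the (smoothed) distance between points i and i+1,
    so len(values) == len(spacing_mm) + 1.
    """
    values = np.asarray(values, dtype=float)
    s = np.concatenate([[0.0], np.cumsum(spacing_mm)])  # arc length at each point
    targets = np.arange(0.0, s[-1] + 1e-12, step)       # 0, D, 2D, ...
    return targets, np.interp(targets, s, values)
```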

2.2.5 Further Distortion Correction

Note that because the distance variations caused by noise are not taken into account, the noise is still present after scaling, and so are the ridges: what we scale is only the size of the image, not the distances between ridges or noise. This scaling also creates a problem. Because we scale only along the center theta and center phi lines, the error grows toward the edges of the image, as the following figures show.

As stated above, 500 dpi ideally means that the distance between every two neighboring points is 0.0508 mm. Here the image size we scale to is [600, 600], so the vertical center line is x = 300 and the horizontal center line is y = 300. After scaling, the distances between neighboring points along these two lines are as follows:

Fig. 2.12 (a) Distance plot along x = 300; (b) Distance plot along y = 300.

These figures show that the distances are all around 0.0508 mm, i.e., the resolution is 500 dpi. Near the edges of the image, however, the distortion becomes large, as the following two plots show.

Fig. 2.13 (a) Distance plot along x = 450; (b) Distance plot along y = 150.

These two plots show that the dpi values in these areas are not 500: the plotted distances fall roughly between 0.050 and 0.054 mm along x = 450 and between 0.0455 and 0.049 mm along y = 150. The main reason is that we create linear theta and phi maps, as shown in Fig. 2.4, and scale the image based on them, whereas the distance between points on the finger does not vary linearly. This is the distortion caused by the best fit sphere algorithm; in other words, no sphere can perfectly fit the shape of a finger, so unraveling the fingerprint based on a sphere creates distortion. To solve this problem, we create nonlinear theta and phi maps, distorted so that the distance between every two neighboring points along both the vertical and horizontal directions is 0.0508 mm. The two resulting maps are shown in Fig. 2.14.

Fig. 2.14 (a) New theta map; (b) New phi map.

Unlike the theta and phi maps used before, these two new maps are nonlinear. As shown in Fig. 2.7, the size of the input image is [870, 1180]. The theta map is created line by line along the horizontal direction, so the values along each horizontal line are no longer linear. For every horizontal line of the input 3D fingerprint, we calculate the distance between every two neighboring points and the total length of the line; the number of points on each horizontal line of the theta map is therefore the number of points after scaling. For the phi map, we scan the vertical lines in the same way: for every vertical line of the 3D fingerprint, we calculate the distance between every two neighboring points and apply an FFT-based filter to remove the variations caused by ridges and noise, which gives a smooth plot, much as for Fig. 2.10. We can then scale all the distances along the line to 0.0508 mm, i.e., 500 dpi, and accumulating all the vertical lines gives the new phi map. In other words, the theta map defines the horizontal length of the fingerprint, and the phi map defines the vertical length.

However, these maps cannot yet be used directly to scale the fingerprint: as the two plots above show, the image sizes are still not [600, 600]. We therefore scale the maps further so that their sizes are [600, 600] while the distances between points remain unchanged. The rule for downsampling the theta and phi maps follows from the fact that the theta map gives the horizontal length of the fingerprint and the phi map the vertical length: we use it to shorten the vertical length of the theta map and the horizontal length of the phi map.
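The row-by-row construction of the nonlinear theta map can be sketched by applying arc-length resampling to every mesh row: for each row, find the theta values at which the surface distance reaches 0, D, 2D, and so on. This is a simplified sketch under stated assumptions: the 3D points on the mesh are given in millimeters, the FFT smoothing step is omitted, and rows of different lengths are padded with NaN rather than rescaled to [600, 600]. The phi map would follow by applying the same function to the columns. The function name is hypothetical.

```python
import numpy as np

D_MM = 25.4 / 500  # 0.0508 mm at 500 dpi

def nonlinear_theta_map(theta_grid, xyz_grid, step=D_MM):
    """For each mesh row, return the theta values at which the surface arc
    length reaches 0, step, 2*step, ...; shorter rows are NaN-padded.

    theta_grid: (rows, cols) uniform theta mesh
    xyz_grid:   (rows, cols, 3) 3D surface points on that mesh, in mm
    """
    rows = []
    for th_row, p_row in zip(theta_grid, xyz_grid):
        seg = np.linalg.norm(np.diff(p_row, axis=0), axis=1)  # mm between neighbors
        s = np.concatenate([[0.0], np.cumsum(seg)])           # arc length along row
        targets = np.arange(0.0, s[-1] + 1e-12, step)
        rows.append(np.interp(targets, s, th_row))            # theta at each target
    width = max(len(r) for r in rows)
    out = np.full((len(rows), width), np.nan)
    for i, r in enumerate(rows):
        out[i, :len(r)] = r
    return out
```

Rows with a shorter surface length yield fewer samples, which matches the text's observation that the number of points on each horizontal line of the theta map is the number of points after scaling.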

Fig. 2.15 (a) New theta map with size [600, 600]; (b) New phi map with size [600, 600].

We thus obtain two new nonlinear maps, distorted according to the distances between points. Next, we wrote a function to arrange these two maps further, so that after downsampling to a [600, 600] image the distances between points are still 0.0508 mm. After these distortion-correction steps, let us look again at the distances between points on the unraveled fingerprint, starting with the distances along the two center lines:

Fig. 2.16 (a) Distance plot along x = 300; (b) Distance plot along y = 300.

Compared with Fig. 2.12, the small low-frequency wave is removed in Fig. 2.16, which means that the distances along these two lines are more stable and small distortions are reduced. For comparison with the previous result, we again take x = 450 and y = 150.

Fig. 2.17 (a) Distance plot along x = 450; (b) Distance plot along y = 150.

Comparing Fig. 2.17 with Fig. 2.13, the distances are corrected to 0.0508 mm, i.e., the dpi values along these two lines are corrected to 500. Examples along other lines likewise show distances of 0.0508 mm, i.e., 500 dpi. These distortion-correction steps therefore not only control the resolution to 500 dpi but also reduce the distortion.

Fig 2.18 Variance comparison before and after distortion correction, along the theta and phi directions. (a) Variances before correction (scale ×10⁻⁴). (b) Variances after correction (scale ×10⁻⁹).

Fig 2.18 plots the variances before and after distortion correction, computed over 120 fingerprints. Before correction, the variances along the theta and phi directions are 9.6941×10⁻⁵ and 3.2257×10⁻⁴; after correction, they are 2.5712×10⁻⁹ and 1.9442×10⁻⁹, several orders of magnitude lower.

If we assume that the 3D data are accurate, i.e., that we can obtain the exact distance between every two points in 3D space, this unraveled result is completely free of unraveling distortion and its resolution is 500 dpi. This improvement removes the effect of the best fit sphere algorithm: wherever the center of the sphere is, the distance from every point to the sphere center, and likewise its theta and phi values, are calculated from the (x, y, z) values of that point, so we still have accurate distance measures between points on the 3D finger. Thus, by downsampling in a nonlinear way, we correct the distortion from the best fit sphere algorithm and control the resolution of the whole unraveled fingerprint to 500 dpi.

After these scaling steps, a 500 dpi unraveled 2D fingerprint is created from the 3D scanned fingerprint. The next step is to apply the NIST software to the 2D unraveled fingerprint and evaluate it.

Fig 2.19 2D unraveled fingerprint from scanned 3D data.

2.3 Applying NIST Software to the Data

The evaluation is performed with the NIST software. Here we apply the minutiae detection system MINDTCT to the 2D unraveled data to obtain the binarized fingerprint and its corresponding local quality map. The image quality system NFIQ provides the overall quality.


Fig 2.20 Binarized fingerprint of the 2D unraveled fingerprint from 3D.

Fig. 2.21 Quality image of the 2D unraveled fingerprint. White represents 4, the highest quality. Darker shades represent poorer quality, down to 0, the lowest quality, which means there is no meaningful data. The average quality of this sample is 3.1090.


Fig. 2.22 Schematic flow chart of the Best Fit Sphere algorithm.

2.4 Fingerprint Quality Analysis

In this section, we develop quantitative measures for evaluating the performance of the presented 3-D fingerprint scanning and processing. We want to demonstrate the validity of using the NIST software [48] and its statistical measures to evaluate the scanner and the unraveling program. These measures are overall quality, number of local quality zone blocks, minutiae reliability, and classification confidence number [2]. For this purpose, we also collected conventional 2-D rolled inked fingerprints along with the 3-D scans from the same subjects. The NIST software components are run over both sets of data, the 2-D rolled inked fingerprints and the 3-D scans unraveled with the best fit sphere algorithm, and the results are compared. The better of the two scanning technologies should generate a higher classification confidence number. It should also have a higher number of reliable minutiae (greater than 20), a higher number of local blocks in quality zone 4, and a higher overall image quality. For details of the NIST quality measurement software, please refer to [2].

A fingerprint database, created for this purpose at the University of Kentucky, was used to evaluate the performance of the scanner system. The database consists of both 2-D rolled inked fingerprint images and 2-D unraveled images of the corresponding 3-D scans. To obtain the 3-D fingerprint scans, each subject was scanned one finger at a time using the SLI prototype scanner described in Chapter 2. The 3-D scans were post-processed and run through the NIST filters along with their 2-D equivalents to obtain the desired statistical values. In this chapter, we first apply the NIST systems to all the 2D inked and 2D unraveled fingerprints, and we show that:

• Our 2D unraveled fingerprints have distributions of overall quality, local quality zones, high-confidence minutiae, and classification confidence similar to those of the 2D inked fingerprints. This was also shown in [2], where the spring algorithm was applied to unravel the 3D fingerprints.

• Our 2D unraveled fingerprints have higher quality, more high-confidence minutiae, higher classification confidence numbers, and better matching performance than the 2D inked fingerprints, whereas the results in [2] showed lower performance than the 2D inked fingerprints.

We begin our assessment with PCASYS, which classifies fingerprint images into five basic categories. The classification depends on the positions of singular points, such as the core and the delta, and on the direction of the ridge flow. Based on these characteristics, fingerprints are divided into right loop, left loop, arch, tented arch, and whorl classes. Along with the fingerprint class, PCASYS also outputs an estimated posterior probability of the hypothesized class. This is a measure of the confidence that may be assigned to the classifier's decision. This confidence number is used as a quantitative metric for assessing scanner performance: as the quality of the scan improves, the confidence number output by the classifier should approach 1.
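The confidence number is read as the posterior probability of the hypothesized class. One simple way to compare two sets of scans is therefore to take, for each image, the most probable class and its probability, and then average those confidences over the set. The sketch below assumes the class posteriors for each image are already available as a dictionary. It does not parse real PCASYS output, and the posterior values are made up for illustration. The function names are hypothetical.

```python
from statistics import mean


def hypothesized_class(posteriors: dict[str, float]) -> tuple[str, float]:
    """Most probable class label and its posterior, i.e. the confidence number."""
    label = max(posteriors, key=posteriors.get)
    return label, posteriors[label]


def mean_confidence(image_posteriors: list[dict[str, float]]) -> float:
    """Average confidence over a set of images; closer to 1 suggests better scans."""
    return mean(hypothesized_class(p)[1] for p in image_posteriors)


# Made-up posteriors for two images (A = arch, L/R = left/right loop,
# T = tented arch, W = whorl).
scans = [
    {"A": 0.00, "L": 0.01, "R": 0.00, "T": 0.00, "W": 0.99},
    {"A": 0.10, "L": 0.50, "R": 0.35, "T": 0.05, "W": 0.00},
]
```

Here the first image is a confident whorl, while the second is an uncertain left loop, and the set averages to a confidence of 0.745.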

As a second approach, the NIST minutiae detection software MINDTCT automatically detects the minutiae in the fingerprint image. It also assesses the quality of the minutiae and generates an image quality map. To analyze the image locally, MINDTCT divides it into a grid of blocks. It assesses the quality of each block by computing a direction flow map, a low contrast map, a low flow map, and a high curvature map. The last three detect unstable regions of the fingerprint where minutiae detection is unreliable. The information in these maps is integrated into one general quality map with 5 levels of quality (4 being the highest and 0 the lowest). The background has a score of 0, while a score of 4 indicates a very good region of the fingerprint. The quality assigned to a specific block is determined by its proximity to blocks flagged in the above-mentioned maps. The total number of blocks with quality 1 or better is regarded as the effective size of the image, or the foreground. The percentages of blocks with quality 1, 2, 3, and 4 are calculated and regarded as quality zones 1, 2, 3, and 4, respectively. Fingerprints with a higher percentage of blocks in quality zone 4 are more desirable.
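The zone statistics described above can be sketched as follows. This assumes the block quality map is available as a 2-D array of integers 0 to 4. It does not read MINDTCT's output file format, and the function name `quality_zones` is hypothetical. The text does not state the denominator for the zone percentages, so this sketch assumes it is the foreground (blocks with quality 1 or better). The sketch also reports the mean foreground quality. Whether the average quality quoted for Fig. 2.21 includes background blocks is not stated, so the two figures need not match.

```python
import numpy as np


def quality_zones(qmap) -> dict:
    """Summarize a block quality map with integer levels 0..4.

    Foreground = blocks with quality >= 1 (background is 0). Zone k is taken
    here as the percentage of foreground blocks with quality exactly k; using
    the foreground as the denominator is an assumption of this sketch.
    """
    q = np.asarray(qmap)
    foreground = q >= 1
    n_fg = int(np.count_nonzero(foreground))
    if n_fg == 0:
        raise ValueError("quality map contains no foreground blocks")
    zones = {k: 100.0 * int(np.count_nonzero(q == k)) / n_fg for k in range(1, 5)}
    return {
        "foreground_blocks": n_fg,
        "zone_percent": zones,
        "mean_foreground_quality": float(q[foreground].mean()),
    }


# Toy 3 x 4 block map (made-up values).
example = [[0, 0, 1, 2],
           [0, 3, 4, 4],
           [0, 4, 4, 3]]
summary = quality_zones(example)
```

For this toy map, 8 of the 12 blocks are foreground and half of them fall in quality zone 4.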

The MINDTCT software also computes a quality, or reliability, measure for each detected minutia based on two factors. The first is the location of the minutia within the quality map. The second is simple pixel intensity statistics (mean and standard deviation) within the neighborhood of the point. Based on these two factors, each minutia is assigned a quality value in the range 0.01 to 0.99. A low value indicates a minutia detected in a low-quality region of the image, whereas a high value indicates a minutia detected in a higher-quality region. Minutiae with a quality value less than 0.5 are considered unreliable, while those with a quality value greater than 0.75 are considered highly reliable [refer to NISTIR 7151].
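The reliability thresholds above translate directly into counts per image. The sketch below assumes the per-minutia quality values have already been extracted into a list. It does not parse MINDTCT's minutiae file, and both the function name and the quality values are made up for illustration.

```python
def minutiae_reliability(qualities, unreliable_below=0.5, reliable_above=0.75):
    """Count minutiae falling below / above the reliability thresholds."""
    qs = list(qualities)
    if any(not 0.0 <= q <= 1.0 for q in qs):
        raise ValueError("minutia quality values must lie in [0, 1]")
    return {
        "total": len(qs),
        # Strictly below 0.5: unreliable.
        "unreliable": sum(q < unreliable_below for q in qs),
        # Strictly above 0.75: highly reliable.
        "highly_reliable": sum(q > reliable_above for q in qs),
    }


counts = minutiae_reliability([0.12, 0.48, 0.50, 0.62, 0.76, 0.90, 0.99])
```

A value of exactly 0.5 counts as neither unreliable nor highly reliable, which follows the strict inequalities in the text.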

Thirdly, the NIST fingerprint image quality software NFIQ assigns an overall quality number to the fingerprint image. It computes a feature vector from the quality map and the minutiae quality statistics generated by the MINDTCT system [49]. This vector is then used as the input to a Multi-Layer Perceptron (MLP) neural network classifier, whose output activation level determines the fingerprint image quality. There are five quality levels, with 1 being the highest quality and 5 the lowest. This quality number is a useful quantitative metric because it can be used to identify low-quality samples and can therefore help improve the scanning technology.
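To compare the overall-quality distributions of two image sets, the NFIQ levels can be tabulated as percentages per level. Note that NFIQ runs in the opposite direction to the MINDTCT quality map: 1 is best and 5 is worst. The sketch below assumes the NFIQ scores have already been collected into lists. The function name and the score lists are made up for illustration.

```python
from collections import Counter


def nfiq_distribution(scores) -> dict[int, float]:
    """Percentage of images at each NFIQ level (1 = best ... 5 = worst)."""
    scores = list(scores)
    if not scores:
        raise ValueError("no NFIQ scores given")
    counts = Counter(scores)
    unexpected = set(counts) - set(range(1, 6))
    if unexpected:
        raise ValueError(f"NFIQ levels must be 1-5, got {sorted(unexpected)}")
    # Counter returns 0 for levels that never occur.
    return {level: 100.0 * counts[level] / len(scores) for level in range(1, 6)}


inked = nfiq_distribution([1, 1, 2, 3, 5])
unraveled = nfiq_distribution([1, 1, 1, 2, 2])
```

A set whose mass sits at levels 1 and 2 would be judged higher quality than one with entries at levels 3 to 5.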

2.4.1 2D Ink Rolled Fingerprint Scanning

To obtain the 2D rolled-equivalent inked images, each subject was escorted to the University of Kentucky Police Department, where their prints were taken manually by a trained police officer. The fingerprint impressions were taken on a fingerprint card using black printer ink. The fingerprint card is an 8” × 8” single-copy white card with a “Face” side and an “Impression” side. The “Face” side provides blocks for administrative information, while the “Impression” side provides blocks for descriptive information and fingerprint impressions. Both the rolled fingerprint impressions and the plain, or dab, finger impressions are taken on the card. An example fingerprint card is shown in Fig 2.23. The fingerprint card obtained for each subject was then scanned manually using an HP flatbed scanner at 500 dots per inch, according to the CJIS specifications. It was then manually segmented in Adobe Photoshop to obtain the images of the individual fingers.


Fig 2.23 An example fingerprint card

2.4.2 2D Fingerprint Experimental Results and Analysis

To validate our hypothesis that the quantitative measures of local image quality zones, minutiae reliability, and classification confidence number can quantify scanner performance, we first analyze the 2D rolled inked fingerprints obtained at the police station. We use the NIST PCASYS, MINDTCT, and NFIQ components [50] for this analysis. To protect the identity of the subjects, each subject was assigned a number instead of being identified by name. The letter “L” denotes that a finger belongs to the left hand, while the letter “R” denotes that it belongs to the right hand. The individual digits on each hand are denoted D2 for the index finger, D3 for the middle finger, D4 for the ring finger, and D5 for the little finger. Thus, a file named subject4 L D2 represents the left index finger of subject 4. Running the software on the 2D inked fingerprint database yields the results in Table 2.1.
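The naming convention described above can be encoded in a pair of helper functions so that results tables can be keyed consistently. This is a sketch. The single-space separators are inferred from the example "subject4 L D2", and the actual file names in the database may differ, for instance by using underscores or extensions. The helper names are hypothetical.

```python
import re

HANDS = {"L": "left", "R": "right"}
DIGITS = {"D2": "index", "D3": "middle", "D4": "ring", "D5": "little"}
# Single spaces as separators are an assumption based on "subject4 L D2".
NAME_PATTERN = re.compile(r"subject(\d+) ([LR]) (D[2-5])")


def make_name(subject: int, hand: str, digit: str) -> str:
    """Build a name such as 'subject4 L D2' from its parts."""
    if hand not in HANDS or digit not in DIGITS:
        raise ValueError(f"unknown hand/digit code: {hand!r}, {digit!r}")
    return f"subject{subject} {hand} {digit}"


def parse_name(name: str) -> tuple[int, str, str]:
    """Return (subject number, hand, finger) for a name such as 'subject4 L D2'."""
    m = NAME_PATTERN.fullmatch(name)
    if m is None:
        raise ValueError(f"not a recognized file name: {name!r}")
    subject, hand, digit = m.groups()
    return int(subject), HANDS[hand], DIGITS[digit]
```

For example, `parse_name("subject4 L D2")` gives subject 4, left hand, index finger, matching the description in the text.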


Table 2.1 Results of running PCASYS, MINDTCT and NFIQ on the 2D images of Subjects 0 through 14.

Subject

Subject 0

Hand

Left

Right

Subject 1

Left

Right

Subject 2

Left

Right

Subject 3

Left

Right

Subject 4

Left

Right

Subject 5

Left

Digit

D2

D3

D4

D5

D2

D3

D4

D5

D2

D3

D4

D5

D2

D3

D4

D5

D2

D3

D4

D5

D2

D3

D4

D5

D2

D3

D4

D5

D2

D3

D4

D5

D2

D3

D4

D5

D2

D3

D4

D5

D2

D3

D4

D5

PCASYS

Class Conf.

No.

W

1.00

R

0.35

L

0.99

L

0.50

W

1.00

R

0.99

R

0.98

R

0.82

L

0.68

L

1.00

W

0.54

T

0.81

R

1.00

W

1.00

W

1.00

R

0.99

R

0.91

L

0.94

L

0.87

L

0.99