Statistical Description of Data (Numerical Recipes in C)

advertisement

Numerical Recipes in C

The Art of Scientific Computing

William H. Press

Harvard-Smithsonian Center for Astrophysics

Brian P. Flannery

EXXON Research and Engineering Company

Saul A. Teukolsky

Department of Physics, Cornell University

William T. Vetterling

Polaroid Corporation

TIl<' riglrl of Ihe

of Cambridge

prill/am/sell

all mOllnef oj books

grullied by

1/ellr), Vlflln 1J)4.

The U,,1\""sll)' "us prillied

Ulli,~rsUy

10

"'lIS

a"d plIbli.thed ,",IIIUm,,"'..!y

sillt.., IJ84.

CAMBRIDGE UNIVERSITY PRESS

Cambridge

New York Port Chester

Melbourne Sydney

Statistical Description

of Data

....,.,..

Introduction

this chapter and the next, the concept of data enters the discussion

prclminerltly than before.

consist of numbers, of course. But these numbers are fed into the

not produced by it. These are numbers to be treated with considerte"pc:ct, never to be tampered with, nor subjected to a numerical process

character you do not completely understand. You are well advised to

a reverence for data that is rather different from the "sporty" attitude

is sometimes allowable, or even commendable, in other numerical tasks.

analysis of data inevitably involves some trafficking with the field of

that gray area which is as surely not a branch of mathematics as it

a branch of science. In the following sections, you will repeatedly

the following paradigm:

• apply some formula to the data to compute "a statistic"

'. compute where the value of that statistic falls in a probability distribution that is computed on the basis of some "null hypothesis"

• if it falls in a very unlikely spot, way out on a tail of the distribution,

conclude that the null hypothesis is false for your data set

a statistic falls in a reasonable part of the distribution, you must

the mistake of concluding that the null hypothesis is "verified"

;pYc,veo." That is the curse of statistics, that it can never prove things,

'di,sprovc them! At best, you can substantiate a hypothesis by ruling out,

a whole long list of competing hypotheses, everyone that has

proposed. After a while your adversaries and competitors will give

to think of alternative hypotheses, or else they will grow old and

your hypothesis will become accepted. Sounds crazy, we know,

how science works!

In this book we make a somewhat arbitrary distinction between data

procedures that are model-independent and those that are modelIn the former category, we include so-called descriptive statistics

.' characterize a data set in general terms: its mean, variance, and so

also include statistical tests which seek to establish the "sameness"

of two or more data sets, or which seek to establish and

471

13.

""',

Statistical

of Data

measure a degree of correlation between two data sets. These

discussed in this chapter.

In the other category, model-dependent statistics, we lump

subject of fitting data to a theory, parameter estimation, lea.st-sqllar,

and so on. Those snbjects are introduced in Chapter 14.

Sections 13.1-13.3 of this chapter deal with so-called measures

tendency, the moments of a distribution, the median and mode. In

learn to test whether different data sets are drawn from dis:tribuUolu'

different values of these measures of central tendency. This leads

§13.5 to the more general question of whether two distributions can

to be (significantly) different.

In §13.6-§13.8, we deal with measures of association for two distrilnj

We want to determine whether two variables are "correlated" or

'I

il

II

",l,mon}

on one another. If they are, we want to characterize the degree of

in some simple ways. The distinction between parametric and nonparam

(rank) methods is emphasized.

Section 13.9 touches briefly on the murky area of data SillLoothlng.'

This chapter draws mathematically on the material on special

that was presented in Chapter 6, especially §6.1-§6.3. You may wish,

point, to review those sections.

'I

II

REFERENCES AND FURTHER READING:

11

Bevington, Philip R. 1969. Data Reduction and Error Analysis

'I

PhysIcal Sciences (New York: McGraw-Hili).

1977, The Advanced

Statistics, 4th ed. (London: Griffin and Co.).

SPSS: Statistical Package for the Social Sciences, 2nd ed., by

H. Nie. et at. (New York: McGraw-Hili).

Kendall. Maurice, and Stuart, Alan.

"'i

'I

il

13.1 Moments of a Distribution: Mean,

Variance, Skewness, and so forth

When a set of values has a sufficiently strong central tendency,

a tendency to cluster around some particular value, then it may be

characterize the set by a few numbers that are related to its m"m,ents,1

sums of integer powers of the values.

Best known is the mean of the values Xl, . .. , X N,

1 N

X=

NLx;

j=l

which estimates the value around which central clustering occurs.

use of an overbar to denote the mean; angle brackets are an equally

of II Distribution:

Skewness

e.g. (X). You should be aware of the fact that the mean is not the

estimator of this quantity, nor is it necessarily the best one.

drawn from a probability distribution with very broad "tails," the

converge poorly, or not at all, as the number of sampled points

Alternative estimators, the median and the mode, are discussed)

and §13.3.

characterized a distribution's central value, one conventionally

ch,,,a.ct,,ri:,es its "width" or "variability" around that value. Here again,

than one measure is available. Most common is the variance,

,val1~'U'"

Var(xl ... XN)

=

1

N

N _1 )Xj _x)2

2

(13.1.2)

j=1

square root, the standard deviation,

(13.1.3)

(13.1.2) estimates the mean squared-deviation of x from its mean

There is a long story about why the denominator of (13.1.2) is N - 1

of N. If you have never heard that story, you may consult any good

text. Here we will be content to note that the N - 1 should be

to N if you are ever in the situation of measuring the variance of

str'ibu.tio'n whose mean x is known a priori rather than being estimated

data. (We might also comment that if the difference between Nand

1 ever matters to you, then you are probably up to no good anyway to substantiate a questionable hypothesis with marginal data.)

the mean depends on the first moment of the data, so do the variance

l.stan,im·d deviation depend on the second moment. It is not uncommon,

life, to be dealing with a distribution whose second moment does not

(Le. is infinite). In this case, the variance or standard deviation is useless

measure of the data's width around its central value: the values obtained

equations (13.1.2) or (13.1.3) will not converge with increased numbers

nor show any consistency from data set to data set drawn from the

distribution. This can occur even when the width of the peak looks,

perfectly finite. A more robust estimator of the width is the average

or mean absolute deviation, defined by

1 N

ADev(Xl ... XN) = N2)Xj-xl

(13.1.4)

j=1

Statisticians have historically sniffed at the use of (13.1.4) instead of

since the absolute value brackets in (13.1.4) are "nonanalytic" and

theorem-proving difficult. In recent years, however, the fashion has

and the subject of robust estimation (meaning, estimation for broad

~:::

II,:,

iii'!

11'"

Chapter 13.

"'''.,

Statistical

of Data

distributions with significant numbers of "outlier" points) has become a

lar and important one. Higher moments, or statistics involving higher

of the input data, are almost always less robust than lower moments

tics that involve only linear sums or (the lowest moment of all) c01llll1;in:g,:

That being the case, the skewness or third moment, and the ",,,'tm,;,

fourth moment should be used with caution or, better yet, not at all.

The skewness characterizes the degree of asymmetry of a di,ltributl

around its mean. While the mean, standard deviation, and average

tion are dimensional quantities, that is, have the same units as the

quantities Xj, the skewness is conventionally defined in such a way as to

it nondimensional. It is a pure number that characterizes only the

the distribution. The usual definition is

"

'1

II

"

where U = U(Xl ... XN) is the distribution's standard deviation \"' .•. 0)_

positive value of skewness signifies a distribution with an a::~~:::~~~tj

extending out towards more positive X; a negative value signifies a

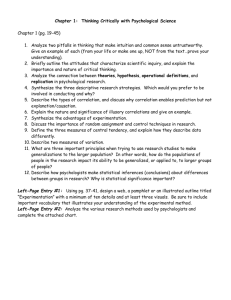

whose tail extends out towards more negative X (see Figure 13.1.1).

Of course, any set of N measured values is likely to give a nonzero

for (13.1.5), even if the underlying distribution is in fact symmetrical

zero skewness). For (13.1.5) to be meaningful, we need to have some

of its standard deviation as an estimator of the skewness of the underly

distribution. Unfortunately, that depends on the shape of the underlying

tribution, and rather critically on its tails! For the idealized case of a

(Gaussian) distribution, the standard deviation of (13.1.5) is appf(}xiJmat"'

Y6/N. In real life it is good practice to believe in skewnesses only when

are several or many times as large as this.

The kurtosis is also a nondimensional quantity. It measures the

peakedness or flatness of a distribution. Relative to what? A normal

bution, what else! A distribution with positive kurtosis is termed leI.toJ,,"rl

the outline of the Matterhorn is an example. A distribution with

kurtosis is termed platykurtic; the outline of a loaf of bread is an

(See Fignre 13.1.1.) And, you will no doubt be pleased to hear, an in-betwE

distribution is termed mesokurtic.

The conventional definition of the kurtosis is

where the -3 term makes the value zero for a normal distribution.

The standard deviation of (1).6) as an estimator of the kurtosis

underlying normal distribution is 24/N. However, the kurtosis depends

13.1 Moments of a Distribution:

Skewness

kurtosis

skewness

,ncgativc_1

I

/

I

negative,

(platykurtic) ,

I

positive

(Icptokurtic)

,--

I

I

I

(,)

(

, ""

,I

I

(b)

13.1.1. Distributions whose third and fourth moments are significantly different

normal (Gaussian) distribution. (a) Skewness or third moment. (b) Kurtosis or

moment.

a high moment that there are many real-life distributions for which the

,taJld,,,d deviation of (13.1.6) as an estimator is effectively infinite.

Calculation of the quantities defined in this section is perfectly straightMany textbooks use the binomial theorem to expand out the definiinto sums of various powers of the data, e.g. the familiar

(13.1.7)

this is generally unjustifiable in terms of computing speed and/or roundoff

O1"ol"a. <math.h>

moment(data,n,ave,adev, sdev, svar,skew,curt)

.*ave.*adev.*sdev.*8var.*skew.*curt;

array data [1 .. n] , this routine returns Its mean ave, average deviation adev, standard

sdev, varlan'ce var, skewness skew, and kurtosis curt.

int j;

float S,P:

void nrerror();

if (n <= 1) nrerror("n must be at least 2 in MOMENT");

s=O.O;

First pass to get the mean.

tor (j=l;j<=n;j++) s += data[j];

*ave=s/n;

*adev=(*svar)=(*skew)=(*curt)=O.O;

Second pass to get the first (absolute), second,

for (j=l;j<=n;j++) {

third, and fourth moments of the deviation

*adev += fabs(s=data[j]-(*ave»;

from the mean.

*svar += (p=s*s);

*skew += (p *= s);

*curt += (p *= s);

}

Put the pieces together according to the conven*adev /= n;

*svar /= (n-l);

tional definitions.

*sdev=sqrt(*svar);

if (*svar) {

*skew /= (n*(*svar)*(*sdev»;

Chapter 13.

Statistical Description of

*curt=(*curt)/(n*(*svar)*(*svar»-3.0;

} else nrerror(UNo skew/kurtosis when variance

=0

(in MOMENT) II);

}

REFERENCES AND FURTHER READING:

Downie, N.M., and Heath, RW. 1965, Basic Statistical Methods.

(New York: Harper and Row), Chapters 4 and 5.

Bevington, Philip R. 1969, Data Reduction and Error AllaIJIS'"

Physical Sciences (New York: McGraw-Hili), Chapter 2.

. "'''""

Kendall, Maurice, and Stuart, Alan. 1977, The Advanced

Statistics, 4th ed. (London: Griffin and Co.), vol. 1,

SPSS: Statistical Package for the Social Sciences, 2nd ed.,

H. Nle, et al. (New York: McGraw-Hili), §14.1

13.2 Efficient Search for the Median

The median of a probability distribution function p( x) is the

for which larger and smaller values of x are equally probable:

I

"m'd

p(x) dx

= -1 =

-00

2

1=

p(x) dx

Xmed

The median of a distribution is estimated from a sample of values

Xi which has equal numbers of values

below it. Of course, this is not possible when N is even. In that

conventional to estimate the median as the mean of the unique two

values. If the values Xj j = 1, ... , N are sorted into ascending (or,

matter, descending) order, then the formula for the median is

x N by finding that value

Xm,d

= X(N+1)/2

N odd

If a distribution has a strong central tendency, so that most of its

under a single peak, then the median is an estimator of the central

is a more robust estimator than the mean is: the median fails as an

only if the area in the tails is large, while the mean fails if the first

of the tails is large; it is easy to construct examples where the first

of the tails is large even though their area is negligible.

To find the median of a set of values, one can proceed by

set and then applying (13.2.2). This is a process of order NlogN.

might think that this is wasteful, since it provides much more

13.2 Efficient Search ·for the Median

just the median (e.g. the upper and lower quartile points, the deciles,

In fact, there are faster methods known for finding the median only,

order N log log N or N 2 /3 log N operations. For details, see Knuth.

pr'actlcal matter, these differences in speed are not usually substantial

to be important. All of the "combinatorial" methods share the same

iisadv:mt:age: they rearrange all the data, either in place or else in additional

memory.

array xCi .. n], returns their median value xmed. The array x is modified and returned

into ascending order.

int n2.n2p;

void sort 0 ;

sort(n,x);

n2p=(n2=n/2)+1;

*xmed=(n ~ 2 ? x[n2p]

This routine Is In §8.2.

O.5*(x[n2]+x[n2p));

There are times, however, when it is quite inconvenient to rearrange all

data just to find the median value. For example, you might have a very

tape of values. While you may be willing to make a number of consecutive

through the tape, sorting the whole thing is a task of considerably

magnitude, requiring an additional external storage device, etc. The

thing about the mean is that it can be computed in one pass through the

Can we do anything like that for the median?

Yes, actually, if you are willing to make on the order of log N passes

throUl,h the data. The method for doing so does not seem to be generally

so we will explain it in some detail: The median (in the case of even

, say) satisfies the equation

(13.2.3)

each term in the sum is ±1, depending on whether Xj is larger or smaller

the median value. Equation (13.2.3) can be rewritten as

(13.2.4)

shows that the median is a kind of weighted average of the points,

wei:ghted by the reciprocal of their distance from the median.

Equation (13.2.4) is an implicit equation for Xm,d' It can be solved

ite:rativ"ly, using the previous guess on the right-hand side to compute the

I'.""

I~;

Chapter 13.

Statistical Description of Data

next guess. With this procedure (supplemented by a couple of tricks

in comments below), convergence is obtained in of order log N passes

the data. The following routine pretends that the data array is in

but can easily be modified to make sequential passes through an external

#include <math.h>

#define BIG 1.0e30

#define AFAe 1.6

#define AMP 1. I)

Here, AMP Is an overconvergence factor: on each iteration, we move the guess by this factor

(13.2.4) would naively Indicate. AFAC Is a factor used to optimize the size of the "smoothing

at each iterat10n.

void mdian2(x,n,xmed)

int n;

float x[],*xmed;

Given an array x[1 .. n], returns their median value xmed. The array x is not modified,

accessed sequentially in each consecutive pass.

{

int np,nm,j;

float xx,xp,xm,Bumx,sum,eps,stemp,dum,ap.am,aa,a;

a-O.5*(x[l]+x[n]);

This can be any first guess for the median.

eps=fabs(x[n]-x[1]);

This can be any first guess for the characteristic spacing of the data points near the median.

am = -(ap=BIG);

ap and am are upper and lower bo~nds on the median.

for(;;){

sum=swnx=O.O;

Here we start one pass through the data.

Number of points above the current guess, and below

np=nm=O;

xm - - (xp-BIG) ;

Value of the point above and closest to the guess, and below and closest.

for (j=1;j<=n;j++) {

Go through the points,

xx::x[j] ;

if (xx ! = a) {

omit a zero denominator In the sums,

if (xx > a) {

update the diagnostics,

++np;

if (xx < xp) xp=xx;

} else if (xx < a) {

++nm;

if (xx > xm) xm=xx;

}

sum += dum=1.0/(eps+fabs(xx-a»;

sumx += xx*dum;

The smoothing constant 15

accumulate the sums.

}

}

stemp=(sumx!sum)-a;

if (np-nm >= 2) {

Guess is too low; make another pass,

am=a;

with a new lower bound,

aa = stemp < 0.0 ? xp : xp+stemp*AMP;

a new best guess

i f (aa > ap) aa=O. 6* (a+ap) ;

(but no larger than the upper bound)

eps=AFAC*fabs(aa-a);

and a new smoothing factor.

a::aa;

} else if (nm-np >= 2) {

Guess Is too high; make another pass,

ap=a;

With a new upper bound,

aa = stemp > 0.0 ? xm

xm+stemp*AMP;

a new best guess

if (aa < am) aa=O. 5* (a+am) ;

(but no smaller than the lower bound)

eps=AFAC*fabs(aa-a);

and a new smoothing factor.

a=aa;

} else {

Got It 1

if (n Yo 2 == 0) {

For even n median Is always an average.

nm ? xp+xm : np > nm ? a+xp : xm+a);

*xmed = O.5*(np

13.3 Estimation of the Mode for Continuous Data

} else {

*xmed

For odd n median Is always one point.

=

np == nm ? a

np > nm 1 xp : xm;

}

return;

}

It is typical for mdian2 to take about 12 ± 2 passes for 10,000 points,

(presumably) about double this number for 100,000,000 points. One nice

is that you can quit after a smaller number of passes if np andnm

enough for your purposes. For example, it will generally take only

6 passes (independent of the number of points) for them to be within

or so of each other, putting the guess a in the 50 ± 0.5'h percentile,

enough for Govermnent work. Note also the possibility of doing the

iM"m,>" by (on one pass) copying to a relatively small array only those

between the bounds ap and am, along with the counts of how many

are above and below these bounds.

FERENCES AND FURTHER READING:

Knuth, Donald E. 1973, Sorting and Searching, voL 3 of The Art of Computer Programming (Reading, Mass.: Addison-Wesley), pp. 216ff.

3 Estimation of the Mode for Continuous

Data

The mode of a probability distribution function p( x) is the value of x

it takes on a maximum value. The mode is useful primarily when there

single, sharp maximum, in which case it estimates the central value. Oca distribution will be bimodal, with two relative maxima; then one

wish to know the two modes individually. Note that, in such cases, the

and median are not very useful, since they may give only a "compro-

value between the two peaks.

Elementary statistics texts seem to have the notion that the only way to

the mode is to bin the data into a histogram or bar-graph, selecting as

est,imat"d value the midpoint of the bin with the largest number of points.

data are intrinsically integer-valued, then the binning is automatic, and

sellse "natural," so this procedure is not unreasonable.

Real-life data are more often not integer-valued, however, but real-valued.

data stored with finite precision can be viewed as integer valued; but

!mIml)er of possible integers is a number so large, e.g. 232 , that you will

have more than one data point per "integer" bin.) It is almost always a

to bin continuous data, since the binning throws away information.

The right way to estimate the mode of a distribution from a set of sampled

goes by the name ((estimating the rate of an inhomogeneous Poisson

by J'h waiting times":

13.

Statistical

of Data

• First, sort all the points Xj j = 1, ... , N into ascending order.

• Second, select an integer J as your "window size,') the reEtoh,ti,

(measured in nmnber of consecutive points) over which the

tribution function will be smeared. Smaller J gives better

olution in x and the chance of finding a high, but very

peak; but smaller J gives poorer accuracy in finding the

maximmn as opposed to a chance fiuctuation in the data.

will discuss this below. J should never be smaller than 3

should be as large as you can tolerate.

• Third, for every i = 1, ... , N - J, estimate p(x) as follows:

l'

Ii

11

I:

iii

• Fourth, take the value !(Xi + Xi+J) of the largest of these es1;irnla1

to be the estimated mode.

The standard deviation of (13.3.1) as an estimator of p(x) is

n

ijl

"

Ij!

1,

'I

In other words, the fractional error is on the order of 1/,f]. So, the

between two possible candidates for the mode is not significant unless

p's differ by several times (13.3.2).

A more subtle problem is how to compare mode candidate values

with different values of J. This problem occurs in practice quite oft<'.n;

data may have a narrow peak containing only a few points, which, for

values of J, "washes out" in favor of a broader peak at some different

of x. We know of no rigorous solution to this problem, but we do

least a high-brow heuristic technique:

Suppose we have some set of mode candidates with different J's.

be the value of the mode for the case J = j, and let Pj be the estimate

at that point. Let t;.Xj denote the i·ange of the window around

for example, it might be t;.X7 = X19 - X,2, if the mode candidate with

length 7 occurred between points 12 and 19.

Let Hj denote the hypothesis "the true mode is at Xj, while all the

mode candidates are chance fluctuations of a distribution function nO

than the true mode." If H j is true, then we need to know how

of the other t;.xn's are, in other words, how likely is it that the

1

L.1~

____L

__ -----,-

______ 1

___

~

____ _'__'__

___ L

_________

~.~

_____ _

Chapter 13.

Statistical Description of Data

• First, sort all the points Xj i = 1, ... ,N into ascending order.

• Second 1 select an integer J as your "window size," the

(measured in number of consecutive points) over which the

tribution function will be smeared. Smaller J gives better',

olution in x and the chance of finding a high, but very

peak; but smaller J gives poorer accuracy in finding the

maximum as opposed to a chance fluctuation in the data.

will discuss this below. J should never be smaller than 3

should be as large as you can tolerate.

• Thfrd, for every i = 1, ... , N - J, estimate p(x) as follows:

I'

J

~:

dHxi+Xi+JJ) '" MIXi+J -Xi'

11

"

II

"

~

iii

~j

• Fourth, take the value 1(Xi + xi+J) of the largest of these estimat

to be the estimated mode.

The standard deviation of (13.3.1) as an estimator of p(x) is

~,l

,1i

,,~

~\

(T

~:l\,

[p (![Xi + Xi+JJ)) '"

.,I

VJ

X i+J -

Xi

'

"-ti

J.'

"

'~J

In other words, the fractional error is on the order of IjVJ. So, the selectio

between two possible candidates for the mode is not significant unless

p's differ by several times (13.3.2).

A more subtle problem is how to compare mode candidate values obtaine!

with different values of J. This problem occurs in practice quite ofkn;

data may have a narrow peak containing only a few points, which, for

values of J, "washes out" in favor of a broader peak at some different

of x. We know of no rigorous solution to this problem, but we do know

least a high-brow heuristic technique:

Suppose we have 'some set of mode candidates with different J's.

be the value of the mode for the case J = j, and let P; be the estimate of

at that point. Let fix; denote the "ange of the window around candidate

for example, it might be fix7 = X,9 - X12, if the mode candidate with windo"

length 7 occurred between points 12 and 19.

Let H; denote the hypothesis "the true mode is at x;, while all the

mode candidates are chance fluctuations of a distribution function no

than the true mode." If H; is true, then we need to know how unlikely

of the other fixn's are, in other words, how likely is it that the range

Xn should be as short or shorter than observed if the true probability

is P; rather than Pn? This is given by the gamma probability distributio(

Do Two Distributions Have the Same Means or Variances?

introduced in §7.3,

"'n

(N )n-l

(Np.) Pix

e-NPj'dx

o

J

(n - 1)!

In

= P(n, Np;Ll.x n )

(13.3.3)

= P(n, np;)

Pn

=P(n,

j~Xn)

uXj

this equation, Pta, x) is the incomplete gamma .function (see equation

and the last two equalities follow from equation (13.3.1).

likelihood of hypothesis H; is now the product of these probabilities

all the other n's, not including j:

·"1

Vi

i

)f:~\

I, I

1lI!:l\

,

~~I~

Likelihood(H;) =

II P(n, j~:n)

n¥=j

(13.3.4)

!I i

select the hypothesis H; which has the the (so-called) maximum likeThis method is straightforward to program, using the incomplete

function routine gammp in §6.2.

EFERENCES AND FURTHER READING:

Parzen, Emanual, 1962, Stochastic Processes (San Francisco:

.'~'".

!"'

IpWJ

J

I~~I

i'(I';'

In

1!ltt1

Ii

:~:.;:,

Holden

Day).

,:;;

it;

!,~Jn

Ii

il~l;

Do Two Distributions Have the Same

Means or Variances?

Not uncommonly we want to know whether two distributions have the

mean. For example, a first set of measured values may have been gathbefore some event, a second set after it. We want to know whether the

a "treatment" or a "change in a control parameter," made a difference.

Our first thought is to ask "how many standard deviations" one sample

is from the other. That number may in fact be a useful thing to know.

relate to the strength or "importance" of a difference of means if that

i8 genuine. However, by itself, it says nothing about whether the

is genuine, that is, statistically significant. A difference of means

be very small compared to the standard deviation, and yet very signifif the number of data points is large. Conversely, a difference may

large but not significant, if the data are sparse. We will be

Chapter 13.

Statistical

of Data

meeting these distinct concepts of strength and significance several

the next few sections.

A quantity that measures the significance of a difference of means is

the number of standard deviations that they are apart, but the number

so-called standard errors that they are apart. The standard error of a

values is their standard deviation divided by the square root of their

the standard error estimates the standard deviation of the sample

an estimator of the population (or "true") mean.

Student's t-test for Significantly Different Means

i_"

~:.

IIw

~:

01'

I'

m:

II'

~I'

,"

I,

Applying the concept of standard error, the conventional statistic

measuring the significance of a difference of means is termed Student's

When the two distributions are thought to have the same variance, but

sibly different means, then Student's t is computed as follows: First,

the standard error of the difference of the means,s D, from the ''pooled

ance" by the formula

I

~Ii

fll

iIi'

8n =

l:one (Xi

- x;;;;:;;-)2 + l:two (Xi - x,;;;;;-)2

Nl +N2 -2

(~l + ~J

Ii

11

"f'

where each sum is over the points in one sample, the first or second, eacn lll(,!<

'I)i

likewise refers to one sample or the other, and Nl and N2 are the numbers

points in the first and second samples respectively. Second, compute t by

~i·

t = ~ - xt;;;;;

3D

Third, evaluate the significance of this value of t for Student's

with Nl + N2 - 2 degrees of freedom, by equations (6.3.7) and (6.3.9),

by the routine betai (incomplete beta function) of §6.3.

The significance is a number between zero and one, and is the probabiIi\

that It I could be this large or larger just by chance, for distributions with

means. Therefore, a small numerical value of the significance (0.05 or

means that the observed difference is "very significant." The function

in equation (6.3.7) is one minus the significance.

As a routine, we have

Two Distributions Have the Same Means or Variances?

<math.h>

ttest(datal,ni.data2,n2,t,prob)

,n2;

datal[].data2[] .*t.*prob;

the arrays datal [1 .. ni] and data2 [1 .. n2J , this routine returns Student's t as t, and

as prab, small values of prob indicating that the arrays have significantly

The data arrays are assumed to be drawn from populations with the same

float vari,var2.svar,df,avel.ave2;

void avevar 0 ;

float betai 0 ;

avevar{datal,nl,&avel.&varl);

avevar(data2,n2,&ave2,&var2);

,df"'nl +n2-2;

svar=«nl-1)*varl+(n2-1)*var2)/df;

*t=(avel-ave2)/sqrt(svar*(1.0/nl+1.0/n2»;

*prob=betai(O.5*df,O.5,df/(df+(*t)*(*t»);

......,,"

Degrees of freedom.

Pooled variance.

See eQuation (6.3.9).

Inl1l~i!

IWI~",I~

lIi';l

I·'\i

Ilrl~

1~[Wjll

l~litjl

makes use of the following routine for computing the mean and variance

a set of numbers,

avevar(data.n. ave ,svar)

data(].*ave,*svar;

array data[1 .. n], returns Its mean as ave and Its variance as var.

I '!~., 1"

,,~

tm!lW~

1~1.~

If"

11"1'1

I"~!'"

·~@;1

i.iI\:!:;:

,tLi

*ave=(*svar)=O,O;

(j=1;j<=n;j++) *ave += data[j];

*ave /= n;

for (j=1;j<=n;j++) {

s=data [j] - (*ave) ;

*Svar += s*s;

*svar

/= (n-1);

The next case to consider is where the two distributions have significantly

variances, but we nevertheless want to know if their means are the

or different. (A treatment for baldness has caused some patients to lose

hair and turned others into werewolves, but we want to know if it

cure baldness on the average!) Be suspicious of the unequal-variance

if two distributions have very different variances, then they may also

different in shape; in that case, the difference of the means

not be a particularly useful thing to knOW.

To find out whether the two data sets have variances that are significantly

you use the F-test, described later on in this section.

"1

1111

1,!

Imr;:

Chapter 13.

Statistical

of Data

The relevant statistic for the unequal variance t- test is

t~-~

- [Var(xone)/N, + Var(xtwo)/N2]'/2

This statistic is distributed approximately as Student's t with a number

degrees of freedom equal to

2

var(Xone) + Var(Xtwo)]

[ N,

N2

[Var(x one )/N,]2 +

2]2

N, 1

N2 1

Cv;;.;y,;;wo)/N

'ii:1

",I

I!!

~Irl

Il!

YJII

Expression (13.4.4) is in general not an integer, but equation (6.3.7)

care.

r'

The routine is

i~,!

~,I

~ti

,'I '

~

".\

II"

'In;!

'Iff'

11ft

"

~i

ijli

#include <math.h>

static float sqrarg:

#define SQR(a) (sqrarg=(a),sqrarg*sqrarg)

void tutest(datai,ni,data2,n2.t.prob)

float datal[].data2[].*t.*prob;

int nl,n2;

Given the arrays data1 [1 .. nil and data2 [1 .. n21 , this routine returns Student's t as t,

significance as prab, small values of prob Indicating that the arrays have significantly

means. The data arrays are allowed to be drawn from populations with uneQual vari

{

float varl.var2.df,avel.ave2;

!(j:1

void avevarO;

float betai 0 ;

avevar{data1,n1,&ave1,&var1);

avevar{data2,n2.&ave2,&var2);

*t={ave1-ave2)/sqrt{var1/n1+var2/n2);

df=SQR{var1/n1+var2/n2)/{SQR{var1/n1)/{n1-1)+SQR{var2/n2)/(n2-1»;

*prob=betai{O.6*df,O.5,df/{df+SQR(*t»);

}

Our final example of a Student's t test is the case of paired samples. J

we imagine that much of the variance in both samples is due to effects

are point-by-point identical in the two samples. For example, we

two job candidates who have each been rated by the same ten

a hiring committee. We want to know if the means of the ten scores

significantly. We first try ttest above, and obtain a value of prob

not especially significant (e.g., > 0.05). But perhaps the significance

washed out by the tendency of some committee members always to

scores, others always to give low scores, which increases the apparent

.~

Do Two Distributions Have the Same Means or Variances?

thus decreases the significance of any difference in the means. We thus

the paired-sample formulas,

1

N

Cov(xon" Xtwo) '= N -1 I)Xond - xone)(Xtwoi - Xtwo)

(13.4.5)

i=l

8D

=

Var(xone) + Var(xtwo) - 2Cov(x on" Xtwo)

N

(13.4.6)

~I~~

~I;"

',jW!t

t:

~

!lI~w.

Xone -

Xtwo

t=----

an

"

IF!

(13.4.7)

IlfK<:$

III'"

IFl

!'1M!

N is the number in each sample (number of pairs). Notice that it is

tmortant that a particular value of i label the corresponding points in each

that is, the ones that are paired. The significance of the t statistic in

is evaluated for N - 1 degrees of freedom.

The routine is

i~~

~t'#

~'''~

~, ~,

~~rlll

~ll;s,1

}II:::;'

<math.h>

tptest(datal.data2.n,t,prob)

datal[].data2[] .*t,*prob;

the paired arrays datal [1 .. nJ and data2 [1 .. n], this routine returns Student's t for

data as t, and its significance as prob, small values of prob Indicating a significant

of means.

avevar(datal,D,&avel,&varl);

'avevar(data2.n,&ave2,&var2);

(j=l:j<=n:j++)

COy += (datal[j]-avel)*(data2[j]-ave2);

/= df=n-l;

'Bd=sqrt «var! +var2-2 .O*cov) In) ;

,il:t=(avel-ave2) !ad;

',:~prob=betai (0. 5*df ,0.5 ,df!(df+(*t) *(*t») ;

~t;:;:

!~'"

'~I

I".

QNcr,

Chapter 13.

Statistical Description of Data

F-Test for Significantly Different Variances

'in

~"

~!

'"

I~

"li

W

I,

I',

,'"

I!

'~i

The F-test tests the hypothesis that two samples have different variance,

by trying to reject the null hypothesis that their variances are actually

.

sistent. The statistic F is the ratio of one variance to the other, so

either :» 1 or « 1 will indicate very significant differences. The distributiOl

of F in the null case is given in equation (6.3.11), which is evaluated using

routine betai. In the most common case, we are willing to disprove the

hypothesis (of equal variances) by either very large or very small values of

so the correct significance is two-tailed, the sum of two incomplete beta

tions. It turns out, by equation (6.3.3), that the two tails are always equal;

need only compute one, and double it. Occasionally, when the null hypothes'

is strongly viable, the identity of the two tails can become confused,

an indicated probability greater than one. Changing the probability to

minus itself correctly exchanges the tails. These considerations and equatio

(6.3.3) give the routine

void ftest(datai,ni,data2.n2,f,prob)

float datal[].data2[].*f.*prob;

int ni,n2;

Given the arrays datai[1. .ni] and data2[1. ,n2], this routine returns the value of f,

its significance as prob. Small values of prob indicate that the two arrays have signlfic,

different variances.

{

float varl.var2,avel.ave2,dfl,df2:

void avevar();

float betai 0

~f1

;

avevar(datal,nl,&avel,&varl);

avevar(data2,n2,&ave2.&var2);

if (var1 > var2) {

Make

*f=var1/var2 ;

df1=n1-1;

df2=n2-1;

I;

'ti

W

4i;

1ft:

F

the

ratio

afthe

larger variance

to the

smaller

one.

} else {

*f=var2/var1 ;

df1=n2-1;

df2=n1-1;

}

*prob = 2.0*betai(0.5*df2.0.6*df1.df2/(df2+df1*(*f»);

if (*prob > 1.0) *prob=2.0-*prob;

}

REFERENCES AND FURTHER READING:

von Mises, Richard. 1964, Mathematical Theory of Probability and

tics (New York: Academic Press), Chapter IX(B).

SPSS: Statistical Package for the Social SCiences, 2nd ed., by

H. Nie, et al. (New York: McGraw-Hili), §17.2.

13.5 Are Two Distributions Different?

13.5 Are Two Distributions Different?

Given two sets of data, we can generalize the questions asked in the previous section and ask the single question: Are the two sets drawn from the

same distribution function, or from different distribution functions? Equivalently, in proper statistical language, "Can we disprove, to a certain required

level of significance, the null hypothesis that two data sets are drawn from

the same population distribution function?" Disproving the null hypothesis

in effect proves that the data sets are from different distributions. Failing to

disprove the null hypothesis, on the other hand, only shows that the data sets

can be consistent with a single distribution function. One can never prove

two data sets come from a single distribution, since (e.g.) no practical

amount of data can distinguish between two distributions which differ only

by one part in 10 '0 .

Proving that two distributions are different, or showing that they are

consistent, is a task that comes up all the time in many areas of research: Are

visible stars distributed unifonnly in the sky? (That is, is the distribution

stars as a function of declination - position in the sky - the same as the

distribution of sky area as a function of declination?) Are educational patterns

.

same in Brooklyn as in the Bronx? (That is, are the distributions of people

a function of last-grade-attended the same?) Do two brands of fluorescent

have the same distribution of burn-out times? Is the incidence of chicken

the same for first-born, second-born, third-born children, etc.?

These four examples illustrate the four combinations arising from two

0different dichotomies: (1) Either the data is continuous or binned. (2) Eiwe wish to compare one data set to a known distribution, or we wish to

'tompare two equally unknown data sets. The data sets on fluorescent lights

on stars are continuous, since we can be given lists of individual burnout

or of stellar positions. The data sets on chicken pox and educational

are binned, since we are given tables of numbers of events in discrete categories: first-born, second-born, etc.; or 6th Grade, 7th Grade, etc. Stars and

)chicken pox, on the other hand, share the property that the null hypothesis is

known distribution (distribution of area in the sky, or incidence of chicken

in the general population). Fluorescent lights and educational level inthe comparison of two equally unknown data sets (the two brands, or

TBrooklyn and the Bronx).

One can always turn continuous data into binned data, by grouping the

into specified ranges of the continuous variable(s): Declinations bea and 10 degrees, 10 and 20, 20 and 30, etc. Binning involves a loss of

jhformation, however. Also, there is often considerable arbitrariness as to how

bins should be chosen. Along with many other investigators, we prefer to

. i unnecessary binning of data.

The accepted test for differences between binned distributions is the chitest. For continuous data as a function of a single variable, the most

(enerally accepted test is the Kolmogorov-Smirnov test. We consider each

' '1

I,I;

~!\'I

I

,

tl~\

I"

:~

I~

".Iii

~,

F!

,tl~

,

I;,J

;,t;,1

~~: \

~r<~

Ill>

Chapter 13.

Statistical Description of Data

Chi-Square Test

Suppose that Ni is the number of events observed in the i,h bin,

that ni is the number expected according to some known distribution.

that the N;'s are integers, while the n;'s may not be. Then the chi-square

statistic is

x2 =

L (Ni -

ni)2

ni

w

-

m

~I

•,

.'

I:

~I

II

It '

"~i

~,

:'~ii

Wi

'ij!:

i

,

'lfI!:,.

i

"r:

:,>

ijii'

II!::!

where the sum is over all bins. A large value of X2 indicates that the

hypothesis (that the N;'s are drawn from the population represented by

n;'s) is rather unlikely.

Any term j in (13.5.1) with 0 = nj = N j should be omitted from

SUill. A term with nj = 0, N j t 0 gives an infinite X2, as it should, since

this case the N;'s cannot possibly be drawn from the ni's!

The chi-square probability function Q(x2Iv) is an incomplete gamma

tion, and was already discussed in §6.2 (see equation 6.2.18). Strictly speakir

Q(x 2Iv) is the probability that the sum of the squares of v random

variables of unit variance will be greater than X2 • The terms in the

(13.5.1) are not individually normal. However, if either the number of

is large (» 1), or the number of events in each bin is large (» 1), then

chi-square probability function is a good approximation to the distributioI\'

(13.5.1) in the case of the null hypothesis. Its use to estimate the signiJican\

of the chi-square test is standard.

The appropriate value of v, the number of degrees of freedom, bears

additional discussion. If the data were collected in such a way that the

we.re all determined in advance, and there were no a priori constraints

any of the N;'s, then v equals the number of bins N B (note that this is

the total number of events!). Much more commonly, the n;'s are normalizl

after the fact so that their sum equals the sum of the N;'s, the total

of events measured. In this case the correct value for v is N B - 1.

model that gives the n;'s had additional free parameters that were

after the fact to agree with the data, then each of these additional

parameters reduces v by one additional unit. The number of these addltlO

fitted parameters (not including the normalization of the n;'s) is comma

called the "number of constraints," so the number of degrees of freedon:

v = N B-1 when there are "zero constraints,"

We have, then, the following program:

void chsone(bins,ebins,nbins,knstrn,df,chsq,prob)

float bins[],ebins[].*df,*chsq,*prob;

int nbins,knstrn;

Given the array bins [1 .. nhina], containing the observed numbers of events, and

ray ebins [1 .. nbins] containing the expected numbers of events, and given the

constraints knstrn (normally zero), this routine returns (trivially) the number of

freedom df, and (nontrivially) the chi-square chsq and the significance prob. A

13.5 Are Two Distributions Different?

indicates a significant difference between the distributions bins and ebins. Note that

and abins are both real arrays, although bins will normally contain integer values.

tnt j;

:float temp;

float gammq 0

:

void nrerror 0

;

~df=nbins-1-knstrn:

*chsq"'O.O;

for (j=1;j<=nhins;j++) {

if (ebins[jl <:: 0.0) nrerror(nBad expected number in CHSONE");

temp=bins[j]-ebins[j] ;

*chsq += temp*temp/ebins[j);

}

*prob=gammq(O.6*(*df),O.5*(*chsq»;

Chi-square probability function. See §6.2.

Next we consider the case of comparing two binned data sets. Let Ri be

number of events in bin i for the first data set, 8 i the number of events

. the same bin i for the second data set. Then the chi-square statistic is

x2 =

L (R; - 8,)2

,.

Ri

+ 8,

(13.5.2)

(13.5.2) to (13.5.1), you should note that the denominator of

is not just the average of R; and 8i (which would be an estimator of

in 13.5.1). Rather, it is twice the average, the sum. The reason is that each

in a chi-square sum is supposed to approximate the square of a normally

,LrlUllLeu quantity with unit variance. The variance of the difference of two

quantities is the sum of their individual variances, not the average.

If the data were collected in such a way that the sum of the R; 's is

cessaI·ily equal to the sum of 8i'S, then the number of degrees of freedom

to one less than the number of bins, NE - 1 (that is, knstrn=O), the

case. If this requirement were absent, then the number of degrees of

would be N E. EXaJllple: A birdwatcher wants to know whether the

;CUUUClUH of sighted birds as a function of species is the SaJlle this year as

Each bin corresponds to one species. If the birdwatcher takes his data to

first 1000 birds that he saw in each year, then the number of degrees of

is N E -1. If he takes his data to be all the birds he saw on a random

of days, the same days in each year, then the number of degrees of

is NE (knstrn= -1). In this latter case, note that he is also testing

the birds were more numerous overall in one year or the other: that is

extra degree of freedom. Of course, any additional constraints on the data

lower the number of degrees of freedom (Le., increase knstrn to positive

in accordance with their number.

The program is

13.

Statistical

of Data

void chstwo(binsi.bins2.nbins.knstrn,df,chsq,prob)

float binsl[] ,bins2[] .*df,*chsq.*prob;

int nbins.knstrn;

Given the arrays binsl [1 .. nbina] and bins2 [1 .. nhina], containing two sets of binned data

and given the number of additional constraints knstrn (normally 0 or -1), this routine return~

the number of degrees of freedom df, the chi-square chsq and the significance prob. A

value of prob indicates a significant difference between the distributions binsl and

Note that binsl and bins2 are both real arrays, although they will normally contain

values.

{

int j;

float temp;

float gammq 0 :

*df=nbins-i-knstrn;

*chsq=O.O;

for (j=l;j<=nhins;j++)

if (binsl[j] == 0.0

*df -= 1.0;

,(I

lr';

"II:

,l.~ :

~~

~i:

~I

.\"11. 1

0.0)

No data means one less degree of freedom.

else {

temp=bins1[j]-bins2[j);

*chsq += temp*temp/(binsl[j]+bins2[j]);

-111 Ii

.'

&& bins2[j]

}

*prob=gammq(0.6*(*df),O.6*(*chsq»;

Chi-square probabHlty functioh. See §6.2.

}

••

I'~

II\il

t'

~~

ijF

Kolmogorov-Smirnov Test

"1,1,

·l,;i

;,>:

C,.

',,:,:1

The Kolmogorov-Smirnov (or K -8) test is applicable to unbinned

butions that are functions of a single independent variable, that is, to

sets where each data point can be associated with a single number (lifetime

each lightbulb when it burns out, or declination of each star). In such

the list of data points can be easily converted to an unbiased estimator S N

of the cumulative distribution function of the probability distribution

which it was drawn: If the N events are located at values Xi, i = 1, ... ,

then SN(X) is the function giving the fraction of data points to the left

given value x. This function is obviously constant between consecutive

sorted into ascending order) xi's, and jumps by the same constant 1/N

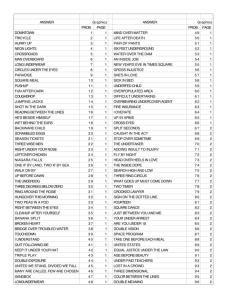

each Xi. (See Figure 13.5.1).

Different distribution functions, or sets of data, give different cumUiatIV!

distribution function estimates by the above procedure. However, all

lative distribution functions agree at the smallest allowable value of X

they are zero), and at the largest allowable value of X (where they are

(The smallest and largest values might of course be ±oo.) So it is the behavl(}

between the largest and smallest values that distinguishes distributions.

One can think of any number of statistics to measure the overall differen'

between two cumulative distribution functions: The absolute value of the

between them, for example. Or their integrated mean square difference.

Kolmogorov-Smirnov D is a particularly simple measure: It is defined as

maximum value of the absolute difference between two cumulative distribu

13.5

Two Distributions Different?

M

x

Kolmogorov-Smirnov statistic D. A measured distribution of values in x

as N dots on the lower abscissa) is to be compared with a theoretical distribution

. probability distribution is plotted as P(x). A step-function cumulative

distribut~on SN(X) is constructed, one which rises an equal amount at each

point. D is the greatest distance between the two cumulative distributions.

Thus, for comparing one data set's SN(X) to a known cumulative

dis1oribmtion function P(x), the K-8 statistic is

D=

max

-oo<X<oo

ISN(x) - P(x)1

max

-oo<x<oo

ISN, (x) - SN, (x)1

(13.5.4)

What makes the K -8 statistic useful is that its distribution in the case

the null hypothesis (data sets drawn from the same distribution) can be

'alcul,.te,d, at least to useful approximation, thus giving the significance of

observed nonzero value of D.

The function which enters into the calculation of the significance can be

as the following sum,

00

QKS(A) = 22)-);-1 e- 2;'>.'

)'=1

fl

0"

,~

r'

1'1

(13.5.3)

for comparing two different cumulative distribution functions SN, (x)

SN,(X), the K-8 statistic is

D=

~

(13.5.5)

,."

Chapter 13.

Statistical Description of Data

which is a monotonic function with the limiting values

QKS(O) = 1

QKS(OO) = 0

In terms of this function, the significance level of an observed value of D (as

a disproof of the null hypothesis that the distributions are the same) is given

approximately by the formulas

Probability (D

~,

~.

> observed)

= QKs(VN D)

for the case (13.5.3) of one distribution, where N is the number of data points,

and

a'

1~H

a.ffl'

Probability (D

> observed)

u'

fl

'II'Iqt,'

u.'

q~J

·r~

II"iii::

,!~ :1

ilt;

= QKS (

NIN2 D)

Nl+N2

for the case (13.5.4) of two distributions, where Nl is the number of

points in the first distribution, N2 the number in the second.

The nature of the approximation involved in (13.5.7) and (13.5.8) is

it becomes asymptotically accurate as the N's become large. In practice,"

N = 20 is large enough, especially if you are being conservative and requirin!

a strong significance level (0.01 or smaller).

So, we have the following routines for the cases of one and two

butions:

'11'1

II:!

·,,'1

41"

'I!:JI

#include <math.h>

static float maxargl.maxarg2;

#define MAX(a.b) (maxargl=(a),maxarg2=(b),(maxargl) > (maxarg2) ?\

(maxargl) : (maxarg2»

void ksone(data,D,func,d,prob)

float data[].*d.*prob;

float (*func)();

1* ANSI: float (*func)(float); *1

int n;

Given an array data [1 .. n], and given a user-supplied function of a single variable func

Is a cumulative distribution function ranging from 0 (for smallest values of Its

to 1 (for largest values of Its argument), this routine returns the K-S statistic d,

significance level prob. Small values of prob show that the cumulative distribution

of data is significantly different from func. The array data is modified by being sorted

ascending order.

{

int j;

float fo=O.O,fn,ff,en,dt;

void sort () ;

float probks 0 ;

sort(n,data);

en=n;

M=O.O;

for (j=1;j<=n;j++) {

fn=j/en;

If the data are already sorted Into ascending order,

this call can be omitted.

Loop over the sorted data points.

Data's c.d.f. after this step.

13.5 Are Two Distributions Different?

ff=(*func) (data[j]);

Compare to the user-supplied function.

dt = MAX(fabs(fo-ff) ,fabs(fn-ff»;

Maximum distance.

if (dt > *d) *d=dt;

£o=fn;

}

*prob=probks(sqrt(en)*(*d»;

Compute significance.

<math.h>

kstwo(datal,ni,data2,n2.d,prob)

datal[1.data2[] .*d,*prob;

array datal [1 .. n1l and an array data2 [1 .. n2], this routine returns the K-S statistic

the signIficance level prob for the null hypothesis that the data sets are drawn from

distribution. Small values of prob show that the cumulative distribution function of

is significantly different from that of data2. The arrays datal and data2 are modified

being sorted into ascending order.

int jl=1.j2=1;

float eni,en2,fnl=O.O,fn2=O.O,dt,di,d2;

void sort 0 ;

float probks 0 :

eni=ni;

en2=n2;

*d=O.O;

sort(ni,datai);

sort (n2 ,data2) ;

while (ji <= ni && j2 <= n2) {

If we are

i f «d1=data1 [j 1]) <= (d2=data2 [j2] » {

fni=(ji++)/en1;

}

if (d2

<= d1) {

fn2=(j2++) /en2;

not done ..

Next step is In datal.

Next step Is In data2.

}

if «dt=fabs(fn2-fni»

> *d) *d=dt;

}

*prob=probks(sqrt(eni*en2/(en1+en2»*(*d»;

Compute significance.

Both of the above routines use the following routine for calculating the

func:tion QKS:

~1nCJ'Ud.e

<math.h>

int j;

float a2,fac=2.0,snm=O.O.term,termbf=O.O;

a2 = -2.0*alam*alam;

for (j=i;j<=100;j++) {

term=fac*exp(a2*j*j);

Chapter 13.

Statistical Description of Data

sum += term;

if (fabs(term) <'" EPS1*termbf I I fabs(term) <= EPS2*sum) return sum;

fae '" -fae;

Alternating signs in sum.

termbf=fabs(term);

}

return 1.0;

Get here only by failing to converge.

}

REFERENCES AND FURTHER READING:

von Mises, Richard. 1964, Mathematical Theory of Probability and Statistics (New York: Academic Press). Chapters IX(C) and IX(E).

~I,~' I

iC'

~ ,~'

ria

"u~

13.6 Contingency Table Analysis of Two

Distributions

~

"t'(1

I.". .

\j,.l

II

!

w<!J

~I!

, '.

,,'

1 '"

~:.

";!.}

In this section, and the next two sections, we deal with measures

association for two distributions. The situation is this: Each data point has

two or more different quantities associated with it, and we want to know

whether knowledge of one quantity gives us any demonstrable advantage

predicting the value of another quantity. In many cases, one variable will be

an "independent" or "control" variable, and another will be a "dependent"

or 'measured" variable. Then, we want to know if the latter variable is

fact dependent on or associated with the former variable. If it is, we want

to have some quantitative measure of the strength of the association.

often hears this loosely stated as the question of whether two variables

correlated or uncorrelated, but we will reserve those terms for a particular

of association (linear, or at least monotonic), as discussed in §13.7 and

Notice that, as in previous sections, the different concepts of sirnificance and strength appear: The association between two distributions

be very significant even if that association is weak - if the quantity of

is large enough.

It is useful to distinguish among some different kinds of variables,

different categories forming a loose hierarchy.

• A variable is called nominal if its values are the members of

unordered set. For example, "state of residence" is a nomin~:'

variable that (in the U.S.) takes on one 'Of 50 values; in

physics, "type of galaxy" is a nominal variable with the

values "spiral," "elliptical," and "irregular."

• A variable is termed ordinal if its values are the members of a

crete, but ordered, set. Examples are: grade in school, planetary

order from the Sun (Mercury = 1, Venus = 2, ... ), number

offspring. There need not be any concept of "equal metric

tance" between the values of an ordinal variable, only that

be intrinsically ordered.

13.6 Contingency Table Analysis of Two Distributions

I. male

2. female

I.

2.

<cd

green

# of

red males

# of

green males

# of

males

N"

N"

N,.

# of

# of

# of

red females

green females

females

N"

N,.

# of red

# of green

total #

N.,

N.,

N

Nll

13.6.1. Example of a contingency table for two nominal variables, here sex and

The row and column marginals (totals) are shown. The variables are "nominal,"

. the order in which their values are listed is arbitrary and does not affect the result of

contingency table analysis. If the ordering of values has some intrinsic meaning, then

variables are "ordinal" or "continuous," and correlation techniques (§13.7 - §13.8) can

utilized.

• We will call a variable continuous if its values are real numbers, as are

times, distances, temperatures, etc. (Social scientists sometimes

distinguish between interval and ratio continuous variables, but

we do not find that distinction very compelling.)

A continuous variable can always be made into an ordinal one by binning

into ranges. If we choose to igoore the ordering of the bins, then we can

it into a nominal variable. Nominal variables constitute the lowest type

hierarchy, and therefore the most general. For example, a set of several

¢OintTInU()Us or ordinal variables can be turned, if crudely, into a single nominal

YaJ·iallle. by coarsely binning each variable and then taking each distinct combiTILatilon of bin assigoments as a single nominal value. When multidimensional

are sparse, this is often the only sensible way to proceed.

The remainder of this section will deal with measures of association benominal variables. For any pair of nominal variables, the data can be

di'Lphtyed as a contingency table, a table whose rows are labeled by the values

one nominal variable, whose columns are labeled by the values of the other

variable, and whose entries are nonnegative integers giving the numof observed events for each combination of row and column (see Figure

. The analysis of association between nominal variables is thus called

conting"n,:y table analysis or crosstabulation analysis.

We will introduce two different approaches. The first approach, based

the chi-square statistic, does a good job of characterizing the significance

association, but is only so-so as a measure of the strength (principally

its numerical values have no very direct interpretations). The second

ar'proa"h, based on the information-theoretic concept of entropy, says nothing

all about the sigoificance of association (use chi-square for that!), but is

of very elegantly characterizing the strength of an association already

to be sigoificant.

""I

Ohapter 13.

Statistical Description of Data

Measures of Association Based on Chi-Square

Some notation first: Let N ij denote the number of events which occur

with the first variable x taking on its ith value, and the second variable y

taking on its ith value. Let N denote the total number of events, the sum of

all the Ni/S. Let Ni. denote the number of events for which the first variable

x takes on its ith value regardless of the value of y; N.j is the number of events

with the ith value of y regardless of x. So we have

Ni · =

LN

N. j = LNij

ij

j

"",,"

N= LNi = LN. j

\11 ~ ;

~:I :1

j

If

111 ~

Iu~Ii'~," 'I

t~~

I

'II"

1 i:> !

u~ I

~q1 I

~" I

~I:r

'

111

'I'

:li"

I

~;'~

I

1rt :

"", '

W'

!I.r.:~

I

N. j and Ni. are sometimes called the row and column totais or marginals,

we will use these terms cautiously since we can never keep straight which

the rows and which are the columns!

The null hypothesis is that the two variables x and y have no associatiolf

In this case, the probability of a particular value of x given a particular

of y should be the same as the probability of that value of x regardless of

Therefore, in the null hypothesis, the expected number for any N ij , which

will denote nij, can be calculated from only the row and column totals,

nij

Ni·

N. j

N

Ni.N.j

which implies

nij=~

Notice that if a column or row total is zero, then the expected number for

the entries in that collllIlll or row is also zerOj in that case, the never-occurri~

bin of x or y should simply be removed from the analysis.

The chi-square statistic is now given by equation (13.5.1) which, in

present case, is summed over all entries in the table,

x2 =

L

i,j

(Nij - nij)"

. nij

The number of degrees of freedom is equal to the number of entries

table (product of its row size and column size) minus the number of constra

that have arisen from our use of the data itself to determine the

row total and column total is a constraint, except that this overcounts

since the total of the column totals and the total of the row totals both

N, the total number of data points. Therefore, if the table is of size I

the number of degrees of freedom is I J - I - J + 1. Equation (13.6.3),

with the chi-square probability function (§6.2) now give the significance oJ

association between the variables x and y.

,

13.6 Contingency Table Analysis of Two Distributions

Suppose there is a significant association. How do we quantify its strength,

that (e.g.) we can compare the strength of one association to another? The

here is to find some reparametrization of X2 which maps it into some COll'N.mi<mt interval, like 0 to 1, where the result is not dependent on the quantity

data that we happen to sample, but rather depends only on the underlypopulation from which the data were drawn. There are several different

of doing this. Two of the more common are called Cramer's V and the

c01,ti",qe>'cy coefficient C.

The formula for Cramer's V is

V=

V

X2

Nmin(I-l,J-l)

(13.6.4)

I and J are again the numbers of rows and columns, N is the total

num1)er of events. Cramer's V has the pleasant property that it lies between

and one inclusive, equals zero when there is no association, and equals

only when the association is perfect: All the events in any row lie in one

,WU~'"' column, and vice versa. (In chess parlance, no two rooks, placed on a

,I1ClUz,ero table entry, can capture each other.)

In the case of I = J = 2, Cramer's V is also referred to as the phi statistic.

The contingency coefficient C is defined as

C-

{x;

+

2

X2

N

(13.6.5)

also lies between zero and one, but (as is apparent from the formula) it can

achieve the upper limit. While it can be used to compare the strength

association of two tables with the same I and J, its upper limit depends on

and J. Therefore it can never be used to compare tables of different sizes.

The trouble with both Cramer's V and with the contingency coefficient

is that, when they take on values in between their extremes, there is no

direct interpretation of what that value means. For example, you are

Las Vegas, and a friend tells you that there is a small, but significant,

'asllOciation between the color of a croupier's eyes and the occurrence of red

black on his roulette wheel. Cramer's V is about 0.028, your friend tells

You know what the usual odds against you are (due to the green zero

double zero on the wheel). Is this association sufficient for you to make

"tn,en,',,? Don't ask us!

<math.h>

TINY 1. Oe-30

,nj;

*chisq.*df.*prob.*cramrv.*ccc;

a two-dimensional contingency table in the form of an Integer array nnU .. ni] [1 .. nj],

routine returns the chi-square chisq, the number of degrees of freedom df, the significance

fi'"

Chapter 13.

Statistical Description of Data

level prob (small values indicating a significant association), and two measures of association,

Cramer's V (cramrv) and the contingency coefficient C (eee).

{

int nnj,nni,j.i,minij:

float sum=O,O,expctd.*sumi.*sumj,temp,gammq().*vector();

void free_vector();

sumi=vector(l,ni);

sumj=vector(l,nj);

nni=ni;

nnj=nj;

Number of rows

and columns.

for (1=1;1<=n1;1++) {

Get the row totals.

sumi[i]=O,O;

for (j=1;j<=nj;j++) {

sum! [1] += nn [1] [j] ;

.,.'"

sum += nn [1] [j] ;

}

if (sumi[i]

0.0) --nni;

, 'I~" ~

1:)"1 ~

Eliminate any zero rows by reducing the number.

}

"'/ j

,j~

4:1:

for (j=1;j<=nj ;j++) {

Get the column totals.

Bumj [jl=O.O;

for (i=l;i<=ni;i++) sumj [j] += nn[i] [jl;

if (sumj [j] == 0.0) --nnj:

Eliminate any zero columns.

ijli~ I

}

lpi

*df=nni*nnj -nni -nnj+l:

Corrected number of degrees of freedom.

*chisq=O.O;

for Ci=l;i<=ni;i++) {

Do the chi-square sum.

for (j=l;j<=nj;j++) {

expctd=sumj [j] *sumi [i] !sum;

temp=nn[i] [j] -expctd;

*chisq += temp*temp! (expctd+TINY) ;

Here TINY guarantees that any eJimlnated row or column will not

}

}

contribute to the sum.

Chi-sQuare probability function.

*prob=gammq(0.5*(*df),O.6*(*chisq»;

minij = nni < nnj 1 nni-l : nnj-l;

*cramrv=sqrt(*chisq!(sum*minij»;

*ccc=sqrt(*chisq!(*chisq+sum»;

free_vector(sumj,l,nj);

free_vector(sumi,l,ni);

"111

grt 1j I

11!1'

~K~

I

l!Iij!

'r:!J,1

!Ie

t,!I{l

'II"

~i" !

·n !!

·:rq

~i;' '

<!j;~

f

}

Measures of Association Based on Entropy

Consider the game of "twenty questions," where by repeated yes/no

tions you try to eliminate all except one correct possibility for an unknow

object. Better yet, consider a generalization of the garne, where you are

lowed to ask multiple choice questions as well as binary (yes/no) ones. '

categories in your multiple choice questions are supposed to be mutually

elusive and exhaustive (as are "yes" and "no").

The value to you of an answer increases with the number of possibiliti•

that it eliminates. More specifically, an answer that eliminates all

a fraction p of the remaining possibilities can be assigned a value positive number, since p < 1). The purpose of the logarithm is to make

value additive, since (e.g.) one question which eliminates all but 1/6 of

possibilities is considered as good as two questions that, in sequence,

the number by factors 1/2 and 1/3.

13.6 Oontingency Table Analysis of Two Distributions

So that is the value of an answer; but what is the value of a question?

If there are I possible answers to the question (i = 1, . .. , I) and the fraction

of possibilities consistent with the i,h answer is Pi (with the sum of the pis

equal to one), then the value of the question is the expectation value of the

value of the answer, denoted H,

I

H = -

I>i In(p;)

(13.6.6)

i=l

In evaluating (13.6.6), note that

,

~!l

(13.6.7)

lim pln(p) = 0

p~o

"1

The value H lies between 0 and InI. It is zero only when one of the pis is

one, all the others zero: in this case, the question is valueless, since its answer

is preordained. H takes on its maximum value when all the Pi'S are equal,

in which case the question is sure to eliminate all but a fraction 1/I of the

remaining possibilities.

The value H is conventionally termed the entropy of the distribution

given by the pis, a terminology borrowed from statistical physics.

So far we have said nothing about the association of two variables; but

suppose we are deciding what question to ask next in the game and have to

choose between two candidates, or possibly want to ask both in one order or

another. Suppose that one question, x, has I possible answers, labeled by i,

that the other question, y, as J possible answers, labeled by j. Then the

possible outcomes of asking both questions form a contingency table whose

entries Nij, when normalized by dividing by the total number of remaining

possibilities N, give all the information about the p's. In particular, we can

make contact with the notation (13.6.1) by identifying

Nij

Pij=-

N

Ni .

Pi'=N

(outcomes of question x alone)

N. j

P'j=N

(outcomes of question y alone)

(13.6.8)

The entropies of the questions x and yare respectively

H(x) = - LPi.lnPi.

H(y) = - LP.jlnp.j

j

(13.6.9)

I"

".

f-l1i

Chapter 13.

Statistical Description of Data

The entropy of the two questions together is

H(x,y) = - LPi,lnpi,

(13.6.10)

i,j

Now what is the entropy of the question y given x (that is, if x is asked

first)? It is the expectation value over the answers to x of the entropy of the

restricted y distribution that lies in a single column of the contingency table

(corresponding to the x answer):

""",'

,)1 11

l:f:jl

Pi' InPi'

InPi'

H(Ylx) = - LPi. "L..- = - "

L-Pij

-

W'

1I!,

o

J

Pi·

Pi·

. .

Pi.

't,1

(13.6.11)

1 11 ~1

I~~j

Correspondingly, the entropy of x given y is

~'Ij:

'ftl

1'.-'

#~I'

i lf~1 !

il1dli

Jt,!1

'll '

Ir!,'

~lli

i

'Hli

I(it:,'!:

'i':: HI

'

p' = - "L..Pi,ln-'L

p"

H(xIY) = - "L..P" "

L..p-'Lln-'L

.

J

. p.,

I

p.,

..

p.,

IJ

(13.6.12)

We can readily prove that the entropy of y given x is never more than

the entropy of y alone, i.e. that asking x first can only reduce the usefulness

of asking y (in which case the two variables are associated!):

"1'

H(Ylx) - H(y) = - LPi, In Pi,lpi.

hi'

p.j

i,j

" n:~11

"

InP·J·Pi.

- L-Pij

-i,j

Pij

~ LPi, (P"~i

i,j

P'tJ

-1)

= LPi.P., - LPi,

i,j

i,j

=1-1=0

where the inequality follows from the fact

Inw~w-1

We now have everything we need to define a measure of the "dependency'>

of y on x, that is to say a measure of association. This measure is sometimes

13.6 Contingency Table Analysis of Two Distributions

called the uncertainty coefficient of y. We will denote it as U(ylx),

U(ylx)

H(y) - H(ylx)

H(y)

(13.6.15)

This measure lies between zero and one, with the value 0 indicating that x and

y have no association, the value 1 indicating that knowledge of x completely

predicts y. For in-between values, U(Ylx) gives the fraction of y's entropy H(y)

that is lost if x is already known (Le., that is redundant with the information

in x). In our game of "twenty questions," U(ylx) is the fractional loss in the

utility of question y if question x is to be asked first.

If we wish to view x as the dependent variable, y as the independent one,

then interchanging x and y we can of course define the dependency of x on y,

U(

I )=

xY -

H(x) - H(xIY)

H(x)

(13.6.16)

If we want to treat x and y symmetrically, then the useful combination

turns out to be

U(

)=2 [H(Y)+H(X)-H(X,y)]

x, y H(x) + H(y)

(13.6.17)

If the two variables are completely independent, then H(x, y) = H(x) +H(y),

so (13.6.17) vanishes. If the two variables are completely dependent, then

H(x) = H(y) = H(x, V), so (13.6.16) equals unity. In fact, you can use the

identities (easily proved from equations 13.6.9-13.6.12)

H(x, y) = H(x)

+ H(ylx) =

H(y)

+ H(xly)

(13.6.18)

to show that

U(

x, y

) = H(x)U(xIY)

H(x)

+ H(Y)u(Ylx)

+ H(y)

(13.6.19)

Le. that the symmetrical measure is just a weighted average of the two asymmetrical measures (13.6.15) and (13.6.16), weighted by the entropy of each

variable separately.

Here is a program for computing all the quantities discussed, H(x), H(y),

Iy), H(Ylx), H(x,y), U(xIY), U(Ylx), and U(x,y):

.,";'1

Chapter 13.

Statistical Description of Data

#include <math.h>

#define TINY 1.0e-30

void cntab2(nn,ni,nj,h,hi,hy,hygx,hxgy,uygx.uxgy,uxy)

int ni,nj.**nn;

float *h.*hx.*hy.*hygx,*hxgy.*uygx.*uxgy.*uxy;

Given a two-dimensional contingency table in the form of an integer array nn [i) [jl, where i

labels the x variable and ranges from 1 to ni, j labels the y variable and ranges from 1 to

nj, this routine returns the entropy h of the whole table, the entropy hx of the x distribution,

the entropy hy of the y distribution, the entropy hygx of y given x, the entropy hxgy of x

given y, the dependency uygx of yon x Ceq. 13.6.15), the dependency uxgy of x on y Ceq.

13.6.16), and the symmetrical dependency uxy Ceq. 13.6.17).

{

int i,j;

float sum=O.O,p,*sumi.*sumj,*vector();

void free_vector();

...,.'

"

,~!

sumi=vector(l.ni);