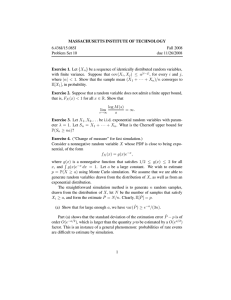



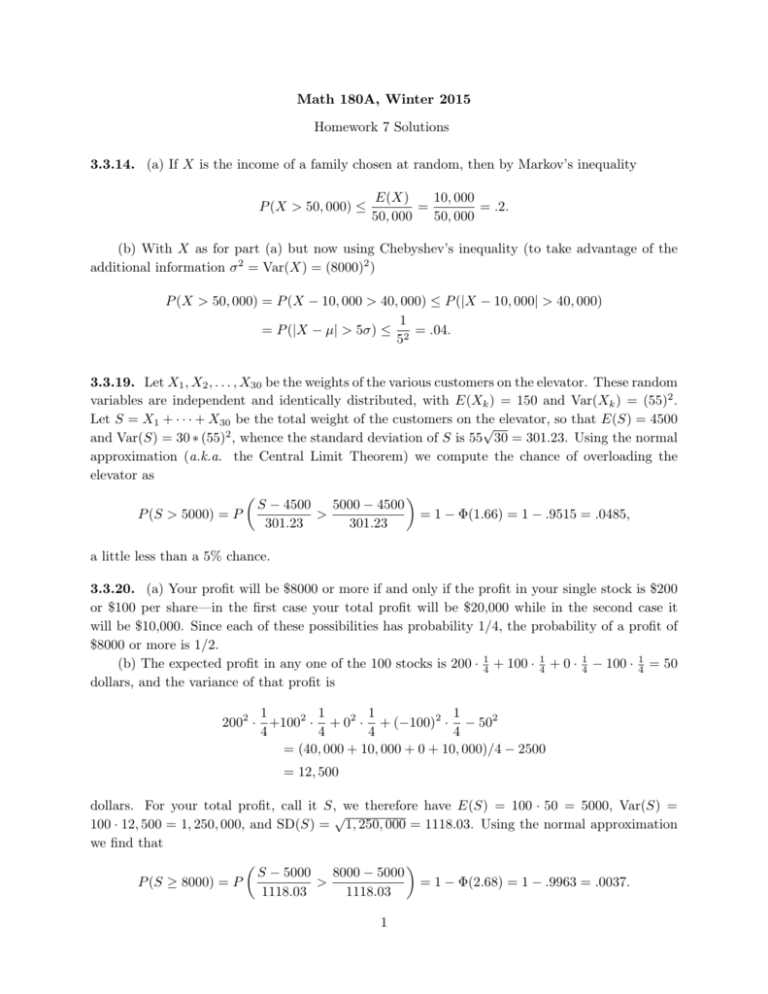

Math 180A, Winter 2015

Homework 7 Solutions

3.3.14. (a) If X is the income of a family chosen at random, then by Markov’s inequality

$$P(X > 50{,}000) \le \frac{E(X)}{50{,}000} = \frac{10{,}000}{50{,}000} = 0.2.$$

(b) With $X$ as in part (a), but now using Chebyshev’s inequality (to take advantage of the additional information $\sigma^2 = \mathrm{Var}(X) = (8000)^2$),

$$P(X > 50{,}000) = P(X - 10{,}000 > 40{,}000) \le P(|X - 10{,}000| > 40{,}000) = P(|X - \mu| > 5\sigma) \le \frac{1}{5^2} = 0.04.$$
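As a quick arithmetic check of both bounds, here is a minimal Python sketch. It uses only the numbers quoted in the solution (mean 10,000, standard deviation 8,000, threshold 50,000); the variable names are ours.

```python
# Numbers quoted in the solution to 3.3.14; the variable names are illustrative.
mean_income = 10_000
sd_income = 8_000
threshold = 50_000

# Markov: P(X > a) <= E(X) / a for a nonnegative random variable X.
markov_bound = mean_income / threshold

# Chebyshev: P(|X - mu| > k * sigma) <= 1 / k**2, with k = (a - mu) / sigma.
k = (threshold - mean_income) / sd_income
chebyshev_bound = 1 / k**2

print(markov_bound, chebyshev_bound)
```

The Chebyshev bound is five times smaller because it uses the variance as well as the mean.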

3.3.19. Let $X_1, X_2, \ldots, X_{30}$ be the weights of the customers on the elevator. These random variables are independent and identically distributed, with $E(X_k) = 150$ and $\mathrm{Var}(X_k) = (55)^2$. Let $S = X_1 + \cdots + X_{30}$ be the total weight of the customers on the elevator, so that $E(S) = 4500$ and $\mathrm{Var}(S) = 30 \cdot (55)^2$, whence the standard deviation of $S$ is $55\sqrt{30} = 301.25$. Using the normal approximation (a.k.a. the Central Limit Theorem) we compute the chance of overloading the elevator as

$$P(S > 5000) = P\left(\frac{S - 4500}{301.25} > \frac{5000 - 4500}{301.25}\right) \approx 1 - \Phi(1.66) = 1 - 0.9515 = 0.0485,$$

a little less than a 5% chance.
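The normal-approximation step can be reproduced without a table. The sketch below is one possible check, assuming the standard normal CDF is evaluated through `math.erf`; the helper `normal_cdf` and the variable names are ours. Because $z$ is not rounded to 1.66 first, the result may differ from the table value in the fourth decimal place.

```python
import math

def normal_cdf(z: float) -> float:
    """Standard normal CDF, written in terms of the error function."""
    return 0.5 * (1.0 + math.erf(z / math.sqrt(2.0)))

# Numbers quoted in the solution to 3.3.19.
n_customers = 30
mean_weight = 150.0
sd_weight = 55.0
capacity = 5000.0

mean_total = n_customers * mean_weight         # E(S)
sd_total = sd_weight * math.sqrt(n_customers)  # SD(S) = 55 * sqrt(30)

z = (capacity - mean_total) / sd_total
p_overload = 1.0 - normal_cdf(z)

print(round(sd_total, 2), round(z, 2), round(p_overload, 4))
```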

3.3.20. (a) Your profit will be $8000 or more if and only if the profit in your single stock is $200

or $100 per share—in the first case your total profit will be $20,000 while in the second case it

will be $10,000. Since each of these possibilities has probability 1/4, the probability of a profit of

$8000 or more is 1/2.

(b) The expected profit in any one of the 100 stocks is $200 \cdot \tfrac14 + 100 \cdot \tfrac14 + 0 \cdot \tfrac14 - 100 \cdot \tfrac14 = 50$ dollars, and the variance of that profit is

$$200^2 \cdot \tfrac14 + 100^2 \cdot \tfrac14 + 0^2 \cdot \tfrac14 + (-100)^2 \cdot \tfrac14 - 50^2 = (40{,}000 + 10{,}000 + 0 + 10{,}000)/4 - 2500 = 12{,}500$$

squared dollars. For your total profit, call it $S$, we therefore have $E(S) = 100 \cdot 50 = 5000$, $\mathrm{Var}(S) = 100 \cdot 12{,}500 = 1{,}250{,}000$, and $\mathrm{SD}(S) = \sqrt{1{,}250{,}000} = 1118.03$. Using the normal approximation we find that

$$P(S \ge 8000) = P\left(\frac{S - 5000}{1118.03} \ge \frac{8000 - 5000}{1118.03}\right) \approx 1 - \Phi(2.68) = 1 - 0.9963 = 0.0037.$$
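The per-stock moments and the normal tail in part (b) can be checked the same way. This is a sketch under the solution's assumptions (four equally likely per-stock profits of 200, 100, 0 and -100 dollars, and 100 independent stocks); `normal_cdf` is our own helper built on `math.erf`.

```python
import math

def normal_cdf(z: float) -> float:
    """Standard normal CDF, written in terms of the error function."""
    return 0.5 * (1.0 + math.erf(z / math.sqrt(2.0)))

# Per-stock profit outcomes from the solution, each with probability 1/4.
outcomes = [200, 100, 0, -100]
prob = 0.25

mean_one = sum(x * prob for x in outcomes)
var_one = sum(x * x * prob for x in outcomes) - mean_one**2

n_stocks = 100
mean_total = n_stocks * mean_one
sd_total = math.sqrt(n_stocks * var_one)

z = (8000 - mean_total) / sd_total
p_big_profit = 1.0 - normal_cdf(z)

print(mean_one, var_one, round(sd_total, 2), round(z, 2), round(p_big_profit, 4))
```

Diversifying cuts the chance of a profit of at least 8000 dollars from 1/2 in part (a) to well under 1%, because the standard deviation of the total grows only like the square root of the number of stocks.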


3.4.4. (a) The chance that no one is “odd one out” is $(1/2)^3 + (1/2)^3 = 1/4$, so the chance that someone is “odd one out” is $3/4$.

(b) The game lasts for exactly $r$ tosses if and only if no one is “odd one out” for the first $r - 1$ tosses, and then someone is “odd one out” on the $r$th toss; this has probability $(1/4)^{r-1}(3/4)$.

(c) The duration of the game is a random variable with the geometric distribution (on $\{1, 2, \ldots\}$) with success probability $p = 3/4$. The expected value of such a random variable is $1/p = 4/3$.
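The value $p = 3/4$ can be confirmed by listing all eight outcomes of three fair coins. The sketch below does this with exact fractions; the function name `prob_game_lasts` is ours.

```python
from fractions import Fraction
from itertools import product

# All 8 equally likely outcomes of three fair coin tosses.
outcomes = list(product("HT", repeat=3))

# Someone is "odd one out" when exactly one coin differs from the other two,
# that is, when the number of heads is 1 or 2.
odd_outcomes = [o for o in outcomes if o.count("H") in (1, 2)]
p_odd = Fraction(len(odd_outcomes), len(outcomes))

def prob_game_lasts(r: int) -> Fraction:
    """P(game lasts exactly r tosses) = (1 - p_odd)**(r - 1) * p_odd."""
    return (1 - p_odd) ** (r - 1) * p_odd

expected_duration = 1 / p_odd

print(p_odd, prob_game_lasts(2), expected_duration)
```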

3.4.6. (a) The probability that the number of failures $W$ before the first success (in independent Bernoulli ($p$) trials) is $k$ is $q^k p$ ($k$ failures followed by a success), where $k = 0, 1, 2, \ldots$.

(b) Using the formula for the sum of a geometric series:

$$P(W > k) = \sum_{j=k+1}^{\infty} q^j p = \frac{q^{k+1} p}{1 - q} = q^{k+1}.$$

(c) Let $T$ be the number of trials until the first success. From class discussion we know that $E(T) = 1/p$. (See also Example 3, starting on page 212.) Notice that $W = T - 1$. Therefore,

$$E(W) = E(T - 1) = E(T) - 1 = (1/p) - 1 = q/p.$$

(d) From class discussion or page 213 of the text, $\mathrm{Var}(T) = q/p^2$. Therefore $E(T^2) = q/p^2 + 1/p^2 = (1 + q)/p^2$. Consequently,

$$E(W^2) = E(T^2 - 2T + 1) = \frac{1 + q}{p^2} - \frac{2}{p} + 1 = \frac{2 - 3p + p^2}{p^2},$$

and so

$$\mathrm{Var}(W) = E(W^2) - [E(W)]^2 = \frac{2 - 3p + p^2}{p^2} - \left(\frac{q}{p}\right)^2 = \frac{q}{p^2}.$$

[Alternatively, $W$ and $T$ have the same variance because they differ by a constant.]
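The formulas for $P(W > k)$, $E(W)$ and $\mathrm{Var}(W)$ can be sanity-checked numerically by truncating the series. In the sketch below the value $p = 0.3$ and the truncation point are arbitrary choices of ours, not part of the exercise.

```python
p = 0.3          # hypothetical success probability, chosen only for illustration
q = 1 - p
n_terms = 2000   # truncation point; the neglected tail is negligible for this p

# P(W = k) = q**k * p for k = 0, 1, 2, ...
pmf = [q**k * p for k in range(n_terms)]

mean_w = sum(k * pk for k, pk in enumerate(pmf))
second_moment_w = sum(k * k * pk for k, pk in enumerate(pmf))
var_w = second_moment_w - mean_w**2

k = 3
tail_k = sum(pmf[k + 1:])   # numerical P(W > k)

print(mean_w, q / p)        # compare with E(W) = q/p
print(var_w, q / p**2)      # compare with Var(W) = q/p^2
print(tail_k, q**(k + 1))   # compare with P(W > k) = q^(k+1)
```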

3.4.11. (a) A wins on toss $k$ if and only if A tosses $k - 1$ tails and then a head and B tosses $k$ tails. This has probability $q_A^{k-1} p_A q_B^k$. Therefore,

$$P(\text{A wins}) = \sum_{k=1}^{\infty} P(\text{A wins on toss } k) = \sum_{k=1}^{\infty} q_A^{k-1} p_A q_B^k = \frac{p_A q_B}{1 - q_A q_B}.$$

(b) Reversing the roles of A and B we find that

$$P(\text{B wins}) = \frac{p_B q_A}{1 - q_A q_B}.$$

(c) In view of (a) and (b),

$$P(\text{draw}) = 1 - \frac{p_A q_B}{1 - q_A q_B} - \frac{p_B q_A}{1 - q_A q_B} = \frac{1 - q_A - q_B + q_A q_B}{1 - q_A q_B}.$$

(d) A and B toss until one or the other (or both) get a head. The distribution of the time until they stop is thus the distribution of the number of tosses until the first success, in which the success probability is $p = p_A + p_B - p_A p_B$ (the probability that at least one of A and B gets a head on any one toss). The distribution of the number $N$ of times A and B must toss is therefore

$$P(N = k) = p \cdot q^{k-1}, \qquad k = 1, 2, 3, \ldots,$$

where $p$ is as above and $q = 1 - p$.
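A numerical check of parts (a) through (d) is sketched below. The head probabilities 0.3 and 0.5 are hypothetical values picked for illustration; the series for "A wins" is truncated at a point where the remaining terms are negligible.

```python
p_a, p_b = 0.3, 0.5          # hypothetical head probabilities for A and B
q_a, q_b = 1 - p_a, 1 - p_b

# (a) Truncated series: A's first head on toss k, B still without a head after k tosses.
series_a = sum(q_a**(k - 1) * p_a * q_b**k for k in range(1, 500))
closed_a = p_a * q_b / (1 - q_a * q_b)

# (b) Same formula with the roles of A and B reversed.
closed_b = p_b * q_a / (1 - q_a * q_b)

# (c) The numerator 1 - q_a - q_b + q_a*q_b factors as (1 - q_a)(1 - q_b) = p_a * p_b.
draw = (1 - q_a - q_b + q_a * q_b) / (1 - q_a * q_b)

# (d) Chance that at least one of the two tosses is a head on a given round.
p_stop = p_a + p_b - p_a * p_b

print(series_a, closed_a)
print(closed_a + closed_b + draw)
print(p_stop, 1 - q_a * q_b)
```

The last line shows that the stopping probability in (d) equals $1 - q_A q_B$, the common denominator in parts (a) through (c).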

3.4.15. (a) Starting with the left side of the asserted identity (and using the results of 3.4.6),

$$P(F - k = m \mid F \ge k) = \frac{P(F = k + m,\ F \ge k)}{P(F \ge k)} = \frac{P(F = k + m)}{P(F \ge k)} = \frac{q^{k+m} p}{q^k} = q^m p = P(F = m),$$

as required.

(b) Conversely, suppose $F$ is a random variable with possible values the set $\{0, 1, 2, \ldots\}$ of non-negative integers, such that

$$P(F = k + m \mid F \ge k) = P(F = m), \qquad m, k = 0, 1, 2, \ldots.$$

The above conditional probability is

$$\frac{P(F = k + m,\ F \ge k)}{P(F \ge k)} = \frac{P(F = k + m)}{P(F \ge k)},$$

so the stated identity holds if and only if

$$P(F = k + m) = P(F = m)\,P(F \ge k), \qquad m, k = 0, 1, 2, \ldots. \tag{$\dagger$}$$

In particular, if we take $k = 1$ in (†) and define $q = P(F \ge 1)$ and $\beta = P(F = 0)$, then

$$P(F = m + 1) = q \cdot P(F = m), \qquad m = 0, 1, 2, \ldots.$$

Therefore

$$P(F = 1) = q \cdot P(F = 0) = q \cdot \beta, \qquad P(F = 2) = q \cdot P(F = 1) = q^2 \cdot \beta,$$

and then by induction

$$P(F = n) = q^n \cdot \beta, \qquad n = 0, 1, 2, \ldots.$$

But

$$1 = \sum_{n=0}^{\infty} P(F = n) = \sum_{n=0}^{\infty} q^n \cdot \beta = \frac{\beta}{1 - q},$$

so $\beta$ must equal $1 - q$ (which we usually call $p$, and then $q = 1 - p$), and therefore

$$P(F = n) = q^n \cdot p, \qquad n = 0, 1, 2, \ldots,$$

the geometric distribution on $\{0, 1, 2, \ldots\}$ of the random variable $W$ in exercise 3.4.6.

(c) The corresponding characterization of the geometric ($p$) distribution on $\{1, 2, \ldots\}$ is

$$P(G = k + m \mid G > k) = P(G = m), \qquad k = 0, 1, 2, \ldots,\ m = 1, 2, \ldots.$$

The verification of this assertion is similar to the discussion above, and so is omitted.
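Both memoryless identities can be checked exactly for a particular $p$ using rational arithmetic. The sketch below assumes $p = 1/3$, a value of ours chosen only for illustration, and tests a small grid of $k$ and $m$; it illustrates the identities and does not replace the argument.

```python
from fractions import Fraction

p = Fraction(1, 3)   # hypothetical success probability
q = 1 - p

def pmf_f(n: int) -> Fraction:
    """P(F = n) for the geometric distribution on {0, 1, 2, ...}."""
    return q**n * p

def pmf_g(n: int) -> Fraction:
    """P(G = n) for the geometric distribution on {1, 2, ...}."""
    return q**(n - 1) * p

# P(F >= k) = q**k and P(G > k) = q**k, as in exercise 3.4.6(b).
identity_f_holds = all(
    pmf_f(k + m) / q**k == pmf_f(m) for k in range(6) for m in range(6)
)
identity_g_holds = all(
    pmf_g(k + m) / q**k == pmf_g(m) for k in range(6) for m in range(1, 7)
)

print(identity_f_holds, identity_g_holds)
```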

3.5.3. Observe that there are, on average, 4 raisins per cookie.

(a) We seek the probability that a bag of one dozen cookies contains at least one cookie with no raisins. Such a bag will generate a complaint. The number of raisins in a given cookie has the Poisson distribution with $\mu = 4$. For such a cookie, the chance that there are no raisins is $e^{-4} 4^0/0! = e^{-4} = 0.0183$. Thus the chance that a given cookie has some raisins is $1 - e^{-4} = 0.9817$. By independence, the chance that all 12 cookies in a given bag contain at least one raisin is $(1 - e^{-4})^{12} = 0.8011$. This is the probability that the bag does not generate a complaint. By complementation, the chance that a bag of a dozen cookies does generate a complaint is $1 - (1 - e^{-4})^{12} = 0.1989$.

(b) Using the reasoning of part (a), we want to find $\mu$ such that $1 - (1 - e^{-\mu})^{12} = 0.05$. That is, $(1 - e^{-\mu})^{12} = 0.95$, or $1 - e^{-\mu} = 0.95^{1/12} = 0.9957$. Solving this for $\mu$ (with $\log$ the natural logarithm, and keeping unrounded values) we obtain

$$\mu = -\log\left(1 - 0.95^{1/12}\right) = -\log(0.004265) = 5.46.$$

So an average of just under 5.5 raisins per cookie will ensure that only 5% of the bags give rise to complaints. This amounts to 43.68 raisins per pound of cookie dough.
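Both parts reduce to a few lines of arithmetic, sketched below with Python's `math` module. The variable names are ours; the inputs (Poisson mean 4, bags of 12 cookies, 5% target) are those in the solution.

```python
import math

cookies_per_bag = 12

# (a) Complaint probability when the mean number of raisins per cookie is 4.
p_no_raisin = math.exp(-4)
p_bag_ok = (1 - p_no_raisin) ** cookies_per_bag
p_complaint = 1 - p_bag_ok

# (b) Mean needed so that only 5% of bags generate a complaint:
# solve (1 - exp(-mu))**12 = 0.95 for mu.
target_ok = 0.95
mu_needed = -math.log(1 - target_ok ** (1 / cookies_per_bag))

print(round(p_complaint, 4), round(mu_needed, 2))
```

Carrying full precision through part (b) matters: rounding $0.95^{1/12}$ to 0.9957 before taking the logarithm would shift the answer in the second decimal place.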

3.5.6. 30 drops per square inch per minute amounts to 5 drops per square inch per 10-second period. Thus, the chance of a particular square inch being missed by the rain in a given 10-second period is $e^{-5} = 0.0067$. I am assuming that the rain is falling in accordance with a Poisson process, and that the rate of rainfall is constant in time.

3.5.10. (a) E(3X + 5) = 3E(X) + 5 = 3λ + 5.

(b) Var(3X + 5) = Var(3X) = 9Var(X) = 9λ.

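(c)

$$\begin{aligned}
E[(1 + X)^{-1}] &= \sum_{k=0}^{\infty} \frac{1}{k+1}\, e^{-\lambda} \frac{\lambda^k}{k!} = \sum_{k=0}^{\infty} e^{-\lambda} \frac{\lambda^k}{(k+1)!} \\
&= \lambda^{-1} \sum_{k=0}^{\infty} e^{-\lambda} \frac{\lambda^{k+1}}{(k+1)!} = \lambda^{-1} \sum_{j=1}^{\infty} e^{-\lambda} \frac{\lambda^j}{j!} \\
&= \lambda^{-1}\,[1 - e^{-\lambda}].
\end{aligned}$$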
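The closed form in part (c) can be compared with a direct partial sum of the defining series. In this sketch the value $\lambda = 2.5$ and the cutoff of 100 terms are arbitrary choices of ours.

```python
import math

lam = 2.5   # hypothetical Poisson mean, chosen only for illustration

# Partial sum of E[1/(1+X)] = sum over k of (1/(k+1)) * e^(-lam) * lam^k / k!
series_value = sum(
    math.exp(-lam) * lam**k / math.factorial(k) / (k + 1) for k in range(100)
)

# Closed form derived above: (1 - e^(-lam)) / lam
closed_form = (1 - math.exp(-lam)) / lam

print(series_value, closed_form)
```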

3.5.11. (a) $X + Y$ has the Poisson distribution with mean 2, so $P(X + Y = 4) = e^{-2} 2^4/4! = (2/3)e^{-2} = 0.09022$.

(b) $E[(X + Y)^2] = \mathrm{Var}(X + Y) + [E(X) + E(Y)]^2 = \mathrm{Var}(X) + \mathrm{Var}(Y) + 2^2 = 1 + 1 + 4 = 6$.

(c) $X + Y + Z$ has the Poisson distribution with mean 3, so $P(X + Y + Z = 4) = e^{-3} 3^4/4! = (27/8)e^{-3} = 0.1680$.
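These three values follow from the Poisson probability formula and can be recomputed directly. The sketch below assumes, as the solution does, that $X$, $Y$, $Z$ are independent Poisson variables with mean 1 each; `poisson_pmf` is our own helper.

```python
import math

def poisson_pmf(k: int, mean: float) -> float:
    """P(N = k) for a Poisson random variable N with the given mean."""
    return math.exp(-mean) * mean**k / math.factorial(k)

# (a) X + Y is Poisson with mean 2.
p_sum_xy_is_4 = poisson_pmf(4, 2.0)

# (b) E[(X + Y)^2] = Var(X + Y) + (E[X + Y])^2, with both equal to 2 for a Poisson(2).
second_moment_xy = 2.0 + 2.0**2

# (c) X + Y + Z is Poisson with mean 3.
p_sum_xyz_is_4 = poisson_pmf(4, 3.0)

print(round(p_sum_xy_is_4, 5), second_moment_xy, round(p_sum_xyz_is_4, 4))
```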
