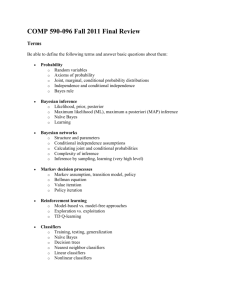

TPR3411 Pattern Recognition Tutorial 3 (Solution)

1. Briefly describe how Bayesian Decision Theory can be used to solve a pattern recognition problem.

Bayesian Decision Theory is a fundamental statistical approach to the problem of statistical pattern classification. It quantifies the trade-offs between the possible classification decisions using probabilities and the costs that accompany those decisions. It then chooses the decision with the smallest expected cost (risk).

2. Suppose you are given the task of classifying two types of snakes, rattlesnakes and cobras. You take the length of the snake, x, as the feature for your classifier. You know that there is usually a 30% chance of getting a cobra. You are given a snake with measurement x = 60 cm. For this value, the class-conditional probabilities are 0.42 for rattlesnakes and 0.58 for cobras. Use the Bayesian decision rule to find the optimal decision that achieves the minimum error rate.

Let $\omega_1$ = rattlesnakes and $\omega_2$ = cobras. We are given

$$P(\omega_1) = 0.7, \quad P(\omega_2) = 0.3, \qquad p(x \mid \omega_1) = 0.42, \quad p(x \mid \omega_2) = 0.58.$$

To achieve the minimum error rate, decide $\omega_1$ if

$$p(x \mid \omega_1)\,P(\omega_1) > p(x \mid \omega_2)\,P(\omega_2).$$

Here

$$p(x \mid \omega_1)\,P(\omega_1) = 0.42 \times 0.7 = 0.294, \qquad p(x \mid \omega_2)\,P(\omega_2) = 0.58 \times 0.3 = 0.174.$$

Since $0.294 > 0.174$, our decision is $\omega_1$, the rattlesnake.

3. What is a loss function? How is the loss function related to the conditional risk?

The loss function $\lambda_{ij} = \lambda(\alpha_i \mid \omega_j)$ states exactly how costly each action is. It is the loss incurred for taking action $\alpha_i$ when the true state of nature is $\omega_j$. The loss function is used to convert a probability determination into a decision. The expected loss of taking action $\alpha_i$ given the observation $x$ is called the conditional risk:

$$R(\alpha_i \mid x) = \sum_{j=1}^{c} \lambda(\alpha_i \mid \omega_j)\,P(\omega_j \mid x),$$

where $c$ is the number of classes.

4. What is the "zero-one loss function"? State the decision rule for the zero-one loss function.

The zero-one loss function assigns no loss to a correct decision and a unit loss to any error:

$$\lambda(\alpha_i \mid \omega_j) = \begin{cases} 0, & i = j \\ 1, & i \neq j \end{cases} \qquad i, j = 1, \dots, c.$$

Thus, all errors are equally costly.
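The conditional risk of Question 3 and the zero-one loss can be checked numerically on the snake data of Question 2. The following is a minimal Python sketch. The helper names `posteriors` and `conditional_risk` are illustrative and are not part of the tutorial. Under the zero-one loss, the risk of deciding $\omega_i$ reduces to $1 - P(\omega_i \mid x)$. The minimum-risk action is therefore the class with the largest posterior, which again selects the rattlesnake.

```python
# Sketch: conditional risk R(alpha_i | x) = sum_j lambda(alpha_i | w_j) * P(w_j | x),
# evaluated on the Question 2 snake data. Helper names are illustrative only.

def posteriors(likelihoods, priors):
    """Bayes' rule: P(w_j | x) = p(x | w_j) P(w_j) / p(x)."""
    joint = [lik * pr for lik, pr in zip(likelihoods, priors)]
    evidence = sum(joint)  # p(x) = sum_j p(x | w_j) P(w_j)
    return [jt / evidence for jt in joint]


def conditional_risk(loss, post):
    """loss[i][j] = lambda(alpha_i | w_j); returns R(alpha_i | x) for each action i."""
    return [sum(l_ij * p_j for l_ij, p_j in zip(row, post)) for row in loss]


# Zero-one loss for c = 2 classes: 0 on the diagonal, 1 elsewhere.
zero_one = [[0, 1],
            [1, 0]]

# Question 2: w1 = rattlesnake, w2 = cobra, observed x = 60 cm.
priors = [0.7, 0.3]
likelihoods = [0.42, 0.58]

post = posteriors(likelihoods, priors)
risk = conditional_risk(zero_one, post)
best = min(range(len(risk)), key=lambda i: risk[i])  # index of the minimum-risk action

print([round(p, 4) for p in post])  # -> [0.6282, 0.3718]
print([round(r, 4) for r in risk])  # -> [0.3718, 0.6282]
print(f"decide omega_{best + 1}")   # -> decide omega_1
```

Dividing by $p(x)$ does not change which action has the smaller risk. Comparing the joint values $0.294$ and $0.174$ directly, as in Question 2, therefore gives the same decision.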
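The decision rule for the zero-one loss function follows from the general two-class minimum-risk rule. Decide $\omega_1$ if

$$\frac{p(x \mid \omega_1)}{p(x \mid \omega_2)} > \frac{\lambda_{12} - \lambda_{22}}{\lambda_{21} - \lambda_{11}} \cdot \frac{P(\omega_2)}{P(\omega_1)}.$$

Substituting $\lambda_{11} = \lambda_{22} = 0$ and $\lambda_{12} = \lambda_{21} = 1$ gives: decide $\omega_1$ if

$$\frac{p(x \mid \omega_1)}{p(x \mid \omega_2)} > \frac{1 - 0}{1 - 0} \cdot \frac{P(\omega_2)}{P(\omega_1)} = \frac{P(\omega_2)}{P(\omega_1)},$$

otherwise decide $\omega_2$.

5. The class-conditional probabilities of a random variable X for two classes are shown below:

| | x = 1 | x = 2 |
|---|---|---|
| $p(x \mid \omega_1)$ | 2/3 | 1/5 |
| $p(x \mid \omega_2)$ | 1/3 | 4/5 |

The loss function is given below, where action $\alpha_i$ means "decide class $\omega_i$". Rows are the true class and columns are the action taken:

| | $\alpha_1$ | $\alpha_2$ |
|---|---|---|
| $\omega_1$ | 0 | 3 |
| $\omega_2$ | 2 | 0 |

That is, $\lambda_{11} = 0$, $\lambda_{12} = 2$, $\lambda_{21} = 3$, $\lambda_{22} = 0$.

Assuming equal priors, find the optimal decision for each value of X.

The decision rule that minimizes the conditional risk is: decide $\omega_1$ if

$$\frac{p(x \mid \omega_1)}{p(x \mid \omega_2)} > \frac{\lambda_{12} - \lambda_{22}}{\lambda_{21} - \lambda_{11}} \cdot \frac{P(\omega_2)}{P(\omega_1)}.$$

With equal priors, the right-hand side is the same for both values of x:

$$\frac{\lambda_{12} - \lambda_{22}}{\lambda_{21} - \lambda_{11}} \cdot \frac{P(\omega_2)}{P(\omega_1)} = \frac{2 - 0}{3 - 0} \cdot \frac{0.5}{0.5} = \frac{2}{3}.$$

For x = 1:

$$\frac{p(x \mid \omega_1)}{p(x \mid \omega_2)} = \frac{2/3}{1/3} = 2 > \frac{2}{3}.$$

Since the LHS is greater than the RHS, decide $\omega_1$.

For x = 2:

$$\frac{p(x \mid \omega_1)}{p(x \mid \omega_2)} = \frac{1/5}{4/5} = \frac{1}{4} < \frac{2}{3}.$$

Since the RHS is greater than the LHS, decide $\omega_2$.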
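The minimum-risk test for Question 5 can be reproduced with exact fractions. This sketch uses the loss values from the worked solution ($\lambda_{11} = 0$, $\lambda_{12} = 2$, $\lambda_{21} = 3$, $\lambda_{22} = 0$) and equal priors. The names `p_x_given`, `decide` and `risks` are hypothetical. As a cross-check, it also compares the conditional risks directly, up to the common factor $1/p(x)$. The likelihood-ratio form assumes $\lambda_{21} > \lambda_{11}$, which holds here.

```python
from fractions import Fraction as F

# Question 5 data: class-conditional probabilities for x = 1 and x = 2.
p_x_given = {
    1: {1: F(2, 3), 2: F(1, 3)},  # x = 1: p(x|w1), p(x|w2)
    2: {1: F(1, 5), 2: F(4, 5)},  # x = 2: p(x|w1), p(x|w2)
}
# loss[(i, j)] = lambda_ij = lambda(alpha_i | w_j), as used in the worked solution
loss = {(1, 1): 0, (1, 2): 2, (2, 1): 3, (2, 2): 0}
prior = {1: F(1, 2), 2: F(1, 2)}  # equal priors


def decide(x):
    """Likelihood-ratio test: decide w1 if p(x|w1)/p(x|w2) exceeds the threshold, else w2."""
    ratio = p_x_given[x][1] / p_x_given[x][2]
    threshold = (F(loss[(1, 2)] - loss[(2, 2)], loss[(2, 1)] - loss[(1, 1)])
                 * prior[2] / prior[1])
    return (1 if ratio > threshold else 2), ratio, threshold


def risks(x):
    """Conditional risks scaled by p(x): sum_j lambda_ij * p(x|w_j) * P(w_j)."""
    return {i: sum(loss[(i, j)] * p_x_given[x][j] * prior[j] for j in (1, 2))
            for i in (1, 2)}


for x in (1, 2):
    omega, ratio, threshold = decide(x)
    r = risks(x)
    assert min(r, key=r.get) == omega  # both forms of the rule agree
    print(f"x = {x}: ratio = {ratio!s}, threshold = {threshold!s} -> decide omega_{omega}")
```

Running it prints `x = 1: ratio = 2, threshold = 2/3 -> decide omega_1` and `x = 2: ratio = 1/4, threshold = 2/3 -> decide omega_2`. These match the hand calculation. Exact fractions avoid any floating-point doubt when the ratio and threshold are close.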