Discrete Distributions

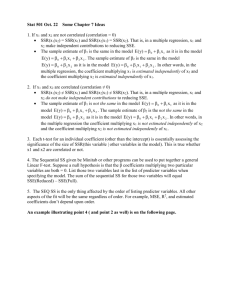

Multiple Regression

Multiple regression problems involve more than one regressor variable.

Ex. $Y = \beta_0 + \beta_1 x_1 + \beta_2 x_2 + \varepsilon$ (2 regressors, $x_1$ and $x_2$, and an error term $\varepsilon$)

Termed “linear” because y is a linear function of the unknown beta parameters.

Often used as an approximating function when the true functional relationship between $y$ and $x_1, x_2, \ldots, x_k$ is unknown, but over certain ranges of the independent variables the linear regression model is an adequate approximation.

Any regression model that is linear in the parameters (the betas) is a linear regression model, regardless of the shape of the surface that it generates.

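Ex.

$$Y = \beta_0 + \beta_1 x_1 + \beta_2 x_2 + \beta_3 x_1 x_2 + \varepsilon$$

Let $x_3 = x_1 x_2$:

$$Y = \beta_0 + \beta_1 x_1 + \beta_2 x_2 + \beta_3 x_3 + \varepsilon$$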

Now the form of the equation has been linearized.
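The following minimal Python/NumPy sketch checks that the substitution produces an ordinary linear model. It assumes made-up coefficients (`beta_true`) and simulated regressors. It builds the column $x_3 = x_1 x_2$ and fits all four betas by ordinary least squares. The simulated response has no error term, so the fit should recover the assumed betas.

```python
import numpy as np

rng = np.random.default_rng(0)
n = 50
x1 = rng.uniform(0.0, 10.0, n)
x2 = rng.uniform(0.0, 10.0, n)

# Hypothetical true coefficients beta0, beta1, beta2, beta3 (assumed for illustration)
beta_true = np.array([2.0, 0.5, -1.0, 0.3])

# Linearize: the cross-product term becomes just another regressor, x3 = x1 * x2
x3 = x1 * x2
X = np.column_stack([np.ones(n), x1, x2, x3])

# Noise-free response, so least squares should return beta_true (up to rounding)
y = X @ beta_true
beta_hat, *_ = np.linalg.lstsq(X, y, rcond=None)
print(np.round(beta_hat, 6))
```

The surface $Y(x_1, x_2)$ is curved, but the model is still linear in the betas. That is why an ordinary least squares solver applies unchanged.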

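We use the method of least squares to estimate the regression coefficients. The goal is to minimize the error function:

$$L = \sum_{i=1}^{n} \varepsilon_i^2 = \sum_{i=1}^{n} \left( y_i - \beta_0 - \sum_{j=1}^{k} \beta_j x_{ij} \right)^2$$

(Solve the model equation for $\varepsilon$: $\varepsilon = Y - \beta_0 - \beta_1 x_1 - \beta_2 x_2 - \beta_3 x_3$ for our example.)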

Partial derivatives are taken with respect to each regression coefficient and set equal to 0 to locate the minimum of the error function. This results in the least squares normal equations:

CIVL 7012/8012 Probabilistic Methods for Engineers 1

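$$\begin{aligned}
n\hat\beta_0 + \hat\beta_1 \sum_{i=1}^{n} x_{i1} + \hat\beta_2 \sum_{i=1}^{n} x_{i2} + \cdots + \hat\beta_k \sum_{i=1}^{n} x_{ik} &= \sum_{i=1}^{n} y_i \\
\hat\beta_0 \sum_{i=1}^{n} x_{i1} + \hat\beta_1 \sum_{i=1}^{n} x_{i1}^2 + \hat\beta_2 \sum_{i=1}^{n} x_{i1}x_{i2} + \cdots + \hat\beta_k \sum_{i=1}^{n} x_{i1}x_{ik} &= \sum_{i=1}^{n} x_{i1} y_i \\
&\;\;\vdots \\
\hat\beta_0 \sum_{i=1}^{n} x_{ik} + \hat\beta_1 \sum_{i=1}^{n} x_{ik}x_{i1} + \hat\beta_2 \sum_{i=1}^{n} x_{ik}x_{i2} + \cdots + \hat\beta_k \sum_{i=1}^{n} x_{ik}^2 &= \sum_{i=1}^{n} x_{ik} y_i
\end{aligned}$$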
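The summation form can be checked numerically. The following Python/NumPy sketch uses a small made-up data set with k = 2. It builds each normal-equation coefficient $\sum_i x_{ij} x_{il}$ and right-hand side $\sum_i x_{ij} y_i$ with explicit loops, taking $x_{i0} = 1$ for the intercept. It then solves the system and confirms that nudging any single coefficient away from the solution increases the error function $L$.

```python
import numpy as np

# Hypothetical data: n = 6 observations of k = 2 regressors
x = np.array([[1.0, 2.0], [2.0, 1.0], [3.0, 4.0], [4.0, 3.0], [5.0, 6.0], [6.0, 5.0]])
y = np.array([3.1, 3.9, 7.2, 7.8, 11.1, 11.9])
n, k = x.shape

# Prepend x_i0 = 1 so the intercept beta0 follows the same pattern as the other betas
z = np.column_stack([np.ones(n), x])

# Coefficients A[j, l] = sum_i z_ij * z_il and right-hand sides b[j] = sum_i z_ij * y_i
A = np.zeros((k + 1, k + 1))
b = np.zeros(k + 1)
for j in range(k + 1):
    for l in range(k + 1):
        A[j, l] = sum(z[i, j] * z[i, l] for i in range(n))
    b[j] = sum(z[i, j] * y[i] for i in range(n))

beta_hat = np.linalg.solve(A, b)

def error_function(beta):
    """L = sum of squared errors for a candidate coefficient vector."""
    return float(np.sum((y - z @ beta) ** 2))

L_min = error_function(beta_hat)
# Moving any one coefficient away from beta_hat should increase L
perturbed = [error_function(beta_hat + 0.01 * np.eye(k + 1)[j]) for j in range(k + 1)]
print(L_min, perturbed)
```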

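In matrix form:

$$Y = \begin{bmatrix} y_1 \\ y_2 \\ \vdots \\ y_n \end{bmatrix}, \quad X = \begin{bmatrix} 1 & x_{11} & x_{12} & \cdots & x_{1k} \\ 1 & x_{21} & x_{22} & \cdots & x_{2k} \\ \vdots & \vdots & \vdots & & \vdots \\ 1 & x_{n1} & x_{n2} & \cdots & x_{nk} \end{bmatrix}, \quad \hat\beta = \begin{bmatrix} \hat\beta_0 \\ \hat\beta_1 \\ \vdots \\ \hat\beta_k \end{bmatrix}$$

where: n = number of observations, k = number of regressors.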


For $\partial L / \partial \beta = 0$:

$$\hat\beta = (X^T X)^{-1} X^T Y$$

or, equivalently, $(X^T X)\hat\beta = X^T Y$.
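Here is a minimal NumPy sketch of the matrix solution on simulated data. The coefficients and noise level are made up. It solves $(X^TX)\hat\beta = X^TY$ with `np.linalg.solve` instead of forming the inverse explicitly, which is a common numerical choice. It then cross-checks the result against `np.linalg.lstsq`, which solves the same least squares problem by a different route.

```python
import numpy as np

def fit_least_squares(X, y):
    """Return beta_hat solving the normal equations (X^T X) beta_hat = X^T y.

    X is the n x (k+1) design matrix whose first column is all ones.
    """
    return np.linalg.solve(X.T @ X, X.T @ y)

rng = np.random.default_rng(1)
n, k = 30, 3
# Design matrix: a column of ones for beta0, then k simulated regressors
X = np.column_stack([np.ones(n), rng.normal(size=(n, k))])
beta_assumed = np.array([1.0, 2.0, -0.5, 0.25])  # hypothetical true betas
y = X @ beta_assumed + rng.normal(scale=0.1, size=n)

beta_hat = fit_least_squares(X, y)
beta_check, *_ = np.linalg.lstsq(X, y, rcond=None)
print(beta_hat)
```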

Example:

The data given below is a sample of trip generation data collected in a major city.

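| Zone Number | Home Based Trips/D.U. | Average Family Income (dollars × 10⁻³) | Net Residential Density (D.U./acre) |
|---|---|---|---|
| 1 | 4.2 | 37.4 | 13.9 |
| 2 | 3.0 | 26.0 | 49.2 |
| 3 | 3.9 | 42.0 | 15.2 |
| 4 | 4.5 | 51.2 | 16.2 |
| 5 | 5.6 | 68.7 | 11.5 |
| 6 | 5.8 | 51.2 | 6.5 |
| 7 | 4.4 | 58.0 | 5.7 |
| 8 | 7.0 | 65.7 | 4.9 |
| 9 | 5.9 | 65.7 | 7.6 |
| 10 | 3.1 | 32.8 | 28.5 |
| 11 | 4.6 | 42.0 | 10.4 |
| 12 | 6.6 | 51.2 | 2.6 |
| 13 | 6.1 | 58.8 | 2.4 |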

It is suspected that a model for home based trips per dwelling unit (D.U.) may be written as a linear function of average family income and the natural logarithm of net residential density.

To determine the "best" relationship of this form, we make the following definitions:

$Y$ = home based trips per dwelling unit

$X_1$ = average family income × 10⁻³

$X_2$ = ln(net residential density)

We can write the prediction equation as:

$$\hat Y = \hat\beta_0 + \hat\beta_1 X_1 + \hat\beta_2 X_2$$


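$$X = \begin{bmatrix} 1 & 37.4 & 2.63 \\ 1 & 26.0 & 3.90 \\ 1 & 42.0 & 2.72 \\ 1 & 51.2 & 2.79 \\ 1 & 68.7 & 2.44 \\ 1 & 51.2 & 1.87 \\ 1 & 58.0 & 1.74 \\ 1 & 65.7 & 1.59 \\ 1 & 65.7 & 2.03 \\ 1 & 32.8 & 3.35 \\ 1 & 42.0 & 2.34 \\ 1 & 51.2 & 0.96 \\ 1 & 58.8 & 0.88 \end{bmatrix}, \qquad Y = \begin{bmatrix} 4.2 \\ 3.0 \\ \vdots \\ 6.1 \end{bmatrix}$$

(The third column of $X$ is ln(density), rounded to two decimals.)

$$X^TX = \begin{bmatrix} 13 & 650.7 & 29.24 \\ 650.7 & 34717.03 & 1368.032 \\ 29.24 & 1368.032 & 74.8306 \end{bmatrix}, \qquad (X^TX)^{-1} = \begin{bmatrix} 5.40906 & -0.06472 & -0.93043 \\ -0.06472 & 0.000877 & 0.009249 \\ -0.93043 & 0.009249 & 0.20784 \end{bmatrix}$$

$$X^TY = \begin{bmatrix} 64.7 \\ 3405.17 \\ 134.035 \end{bmatrix}, \qquad \hat\beta = (X^TX)^{-1} X^TY = \begin{bmatrix} 4.880148 \\ 0.03997 \\ -0.84645 \end{bmatrix}$$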


The final trip generation prediction equation, then, is:

$$\text{Home based trips/D.U.} = 4.880 + 0.03997\left(\frac{\text{average family income}}{1000}\right) - 0.84645 \ln(\text{net residential density})$$
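The hand calculation can be reproduced with a short NumPy sketch. One assumption: the third column uses ln(density) rounded to two decimals. That appears to be what the worked example used, since it reproduces $X^TY = [64.7,\ 3405.17,\ 134.035]^T$. With unrounded logarithms the estimates shift slightly.

```python
import numpy as np

# Trip generation data for zones 1-13 (from the table above)
trips = np.array([4.2, 3.0, 3.9, 4.5, 5.6, 5.8, 4.4, 7.0, 5.9, 3.1, 4.6, 6.6, 6.1])
income = np.array([37.4, 26.0, 42.0, 51.2, 68.7, 51.2, 58.0, 65.7, 65.7, 32.8, 42.0, 51.2, 58.8])
density = np.array([13.9, 49.2, 15.2, 16.2, 11.5, 6.5, 5.7, 4.9, 7.6, 28.5, 10.4, 2.6, 2.4])

# X2 = ln(density), rounded to 2 decimals as in the hand calculation (assumption)
ln_density = np.round(np.log(density), 2)
X = np.column_stack([np.ones(len(trips)), income, ln_density])

XtX = X.T @ X
XtY = X.T @ trips
C = np.linalg.inv(XtX)      # (X^T X)^{-1}
beta_hat = C @ XtY          # [beta0_hat, beta1_hat, beta2_hat]
print(np.round(beta_hat, 5))
```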

Multiple Correlation

To determine the suitability of the model form itself to the observed data, we use the coefficient of multiple determination, $R^2$. This parameter is defined as the fraction of the total variation that is explained by the regression model:

$$R^2 = \frac{SSR}{SST} = 1 - \frac{SSE}{SST}, \qquad SST = SSR + SSE$$

where:

SSR = sum of squares due to regression

SST = total sum of squares

SSE = sum of squares due to error (residual)

For the ideal situation (no random error), the regression model would explain all of the variation, and $R^2 = 1$. As $R^2$ approaches 0, the model fit becomes very poor. $R^2$ thus measures how well the model fits the data points.
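To illustrate the definition, the following sketch computes SST, SSE, SSR, and $R^2$ for the trip generation example. It again assumes ln(density) rounded to two decimals. It uses $SST = \sum (y_i - \bar y)^2$ and gets SSR by subtraction.

```python
import numpy as np

trips = np.array([4.2, 3.0, 3.9, 4.5, 5.6, 5.8, 4.4, 7.0, 5.9, 3.1, 4.6, 6.6, 6.1])
income = np.array([37.4, 26.0, 42.0, 51.2, 68.7, 51.2, 58.0, 65.7, 65.7, 32.8, 42.0, 51.2, 58.8])
density = np.array([13.9, 49.2, 15.2, 16.2, 11.5, 6.5, 5.7, 4.9, 7.6, 28.5, 10.4, 2.6, 2.4])
X = np.column_stack([np.ones(len(trips)), income, np.round(np.log(density), 2)])

beta_hat = np.linalg.solve(X.T @ X, X.T @ trips)
residuals = trips - X @ beta_hat

sst = np.sum((trips - trips.mean()) ** 2)   # total variation about the mean
sse = np.sum(residuals ** 2)                # variation left unexplained
ssr = sst - sse                             # variation explained by the regression
r_squared = ssr / sst
print(f"SST={sst:.3f}  SSE={sse:.3f}  SSR={ssr:.3f}  R^2={r_squared:.3f}")
```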

To estimate the error variance (often called the “common variance”):

$$SSE = Y^T Y - \hat\beta^T X^T Y \tag{26}$$

$$\hat\sigma^2 = \frac{SSE}{n-p} \tag{27}$$

SSE = sum of squares of errors

(p = number of betas = k + 1, where k is the number of independent variables (x’s); SSE has n − p degrees of freedom.)
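The matrix shortcut $SSE = Y^TY - \hat\beta^T X^T Y$ should match the direct sum of squared residuals. The following sketch computes both on the trip data, under the same rounded-logarithm assumption. It then forms $\hat\sigma^2 = SSE/(n-p)$ with $p = k + 1 = 3$.

```python
import numpy as np

trips = np.array([4.2, 3.0, 3.9, 4.5, 5.6, 5.8, 4.4, 7.0, 5.9, 3.1, 4.6, 6.6, 6.1])
income = np.array([37.4, 26.0, 42.0, 51.2, 68.7, 51.2, 58.0, 65.7, 65.7, 32.8, 42.0, 51.2, 58.8])
density = np.array([13.9, 49.2, 15.2, 16.2, 11.5, 6.5, 5.7, 4.9, 7.6, 28.5, 10.4, 2.6, 2.4])
X = np.column_stack([np.ones(len(trips)), income, np.round(np.log(density), 2)])

n, p = X.shape                      # p = k + 1 columns, one per beta
XtY = X.T @ trips
beta_hat = np.linalg.solve(X.T @ X, XtY)

sse_matrix = trips @ trips - beta_hat @ XtY        # Y^T Y - beta_hat^T X^T Y
sse_direct = np.sum((trips - X @ beta_hat) ** 2)   # sum of squared residuals
sigma2_hat = sse_matrix / (n - p)                  # common variance estimate
print(sse_matrix, sigma2_hat)
```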


Confidence interval on the mean response:

Mean response: $\hat Y_0 = X_0^T \hat\beta$ for a given $X_0 = \begin{bmatrix} 1 & x_{01} & x_{02} & \cdots & x_{0k} \end{bmatrix}^T$

$$s_{\hat Y_0}^2 = \hat\sigma^2\, X_0^T (X^T X)^{-1} X_0 \tag{29}$$

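A 100(1 − α)% confidence interval on the mean response at the point $(x_{01}, x_{02}, \ldots, x_{0k})$ is:

$$\hat Y_0 - t_{\alpha/2,\,n-p}\sqrt{\hat\sigma^2 X_0^T (X^TX)^{-1} X_0} \;\le\; \mu_{Y \mid X_0} \;\le\; \hat Y_0 + t_{\alpha/2,\,n-p}\sqrt{\hat\sigma^2 X_0^T (X^TX)^{-1} X_0} \tag{30}$$

For a 100(1 − α)% confidence interval on the β’s:

$$\hat\beta_j - t_{\alpha/2,\,n-p}\sqrt{\hat\sigma^2 C_{jj}} \;\le\; \beta_j \;\le\; \hat\beta_j + t_{\alpha/2,\,n-p}\sqrt{\hat\sigma^2 C_{jj}}$$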


where $C_{jj}$ is the jj-th element of the $(X^TX)^{-1}$ matrix, and $\hat\sigma^2$ is the estimate of error variance.
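The two interval formulas can be wrapped in small helper functions. In the following sketch the function names are hypothetical. The t critical value is passed in (for example, read from a t-table) rather than computed, so the example needs only NumPy. The usage checks the intercept-only special case, where the mean-response interval reduces to the familiar $\bar y \pm t\,s/\sqrt{n}$.

```python
import numpy as np

def _fit(X, y):
    """Return beta_hat, (X^T X)^{-1}, and sigma2_hat = SSE / (n - p)."""
    n, p = X.shape
    C = np.linalg.inv(X.T @ X)
    beta_hat = C @ (X.T @ y)
    sse = float(np.sum((y - X @ beta_hat) ** 2))
    return beta_hat, C, sse / (n - p)

def mean_response_ci(X, y, x0, t_crit):
    """Confidence interval on the mean response at x0 = [1, x01, ..., x0k]."""
    beta_hat, C, sigma2 = _fit(X, y)
    y0_hat = float(x0 @ beta_hat)
    half_width = t_crit * np.sqrt(sigma2 * float(x0 @ C @ x0))
    return y0_hat - half_width, y0_hat + half_width

def coefficient_ci(X, y, j, t_crit):
    """Confidence interval on beta_j using C_jj, the jj-th diagonal element of (X^T X)^{-1}."""
    beta_hat, C, sigma2 = _fit(X, y)
    half_width = t_crit * np.sqrt(sigma2 * C[j, j])
    return beta_hat[j] - half_width, beta_hat[j] + half_width

# Intercept-only model: the interval should equal ybar +/- t * s / sqrt(n)
y = np.array([2.0, 4.0, 6.0, 8.0])
X = np.ones((4, 1))
t_crit = 3.182  # t_{0.025, 3} from a t-table (n - p = 3)
lo, hi = mean_response_ci(X, y, np.array([1.0]), t_crit)
print(lo, hi)
```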

Let’s go back to the last example. Suppose we are concerned with the average number of home based trips per dwelling unit when the average family income is $72,600 and the net residential density is 3.3 d.u./acre. Let's predict the average home based trips per dwelling, and construct a 90% C.I. on this prediction.

$$X_{1,\text{desired}} = 72.6, \qquad X_{2,\text{desired}} = \ln(3.3) = 1.19$$

We can immediately construct the $a$ matrix:

$$X_0 = a = \begin{bmatrix} 1 \\ 72.6 \\ 1.19 \end{bmatrix}$$

While we’re at it, we can get $\hat Y$ from the prediction equation (already developed):

$$\hat Y = 4.88 + 0.03997(72.6) - 0.846(1.19)$$

$$\hat Y = 6.77$$

home based trips per dwelling unit (on the average).
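The point estimate is just the dot product $a^T\hat\beta$. Here is a quick check in Python, using the coefficient values from the prediction equation and ln(3.3) rounded to two decimals as above:

```python
import math

beta_hat = [4.880148, 0.03997, -0.84645]     # from the fitted prediction equation
a = [1.0, 72.6, round(math.log(3.3), 2)]     # X0 = [1, income/1000, ln(density)]

# Y0_hat = a^T beta_hat
y0_hat = sum(a_i * b_i for a_i, b_i in zip(a, beta_hat))
print(round(y0_hat, 2))  # → 6.77
```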

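Now, $(X^TX)^{-1}$ was already calculated in developing the prediction equation:

$$(X^TX)^{-1} = \begin{bmatrix} 5.40906 & -0.06472 & -0.93043 \\ -0.06472 & 0.000877 & 0.009249 \\ -0.93043 & 0.009249 & 0.20784 \end{bmatrix}$$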

Let's work under the radical in Eq. 30.

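$$a^T (X^TX)^{-1} a = \begin{bmatrix} 1 & 72.6 & 1.19 \end{bmatrix} \begin{bmatrix} 5.40906 & -0.06472 & -0.93043 \\ -0.06472 & 0.000877 & 0.009249 \\ -0.93043 & 0.009249 & 0.20784 \end{bmatrix} \begin{bmatrix} 1 \\ 72.6 \\ 1.19 \end{bmatrix} = 0.31434$$

(computed carrying more digits than the rounded matrix entries shown)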
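The quadratic form $a^T(X^TX)^{-1}a$ is easy to get wrong by hand. Its individual terms are large and nearly cancel, so rounding the entries of $(X^TX)^{-1}$ visibly shifts the result. The following sketch recomputes it from the data, with the same rounded-logarithm assumption as before. It also sums the absolute sizes of the terms to show the cancellation.

```python
import numpy as np

income = np.array([37.4, 26.0, 42.0, 51.2, 68.7, 51.2, 58.0, 65.7, 65.7, 32.8, 42.0, 51.2, 58.8])
density = np.array([13.9, 49.2, 15.2, 16.2, 11.5, 6.5, 5.7, 4.9, 7.6, 28.5, 10.4, 2.6, 2.4])
X = np.column_stack([np.ones(len(income)), income, np.round(np.log(density), 2)])

C = np.linalg.inv(X.T @ X)          # (X^T X)^{-1} at full precision
a = np.array([1.0, 72.6, 1.19])     # X0 for income $72,600 and density 3.3 D.U./acre
quad_form = float(a @ C @ a)        # the term under the radical in Eq. 30

# Element-wise contributions a_i * a_j * C_ij; they sum to quad_form
terms = np.outer(a, a) * C
print(round(quad_form, 5), round(float(np.abs(terms).sum()), 2))
```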

We now need to calculate $s^2$. Using Eq. (26):

$$SSE = Y^TY - \hat\beta^T X^T Y$$

We've already calculated:

$$\hat\beta^T X^T Y = \begin{bmatrix} 4.880148 & 0.03997 & -0.84645 \end{bmatrix} \begin{bmatrix} 64.7 \\ 3405.17 \\ 134.035 \end{bmatrix} = 338.40$$

and $Y$ holds the measured Y values, so

$$Y^TY = \sum_{i=1}^{n} Y_i^2 = 341.81$$

$$SSE = 341.81 - 338.40 = 3.41$$

Now, $n = 13$ and $k = 2$, so d.f. $= n - k - 1 = 13 - 2 - 1 = 10$ (short cut!)

Using Eq. (27) to find our estimate of the common variance:

$$s^2 = \frac{SSE}{n-k-1} = \frac{3.41}{10} = 0.341$$

And using Eq. (29):

$$s_{\hat Y}^2 = s^2\, a^T (X^TX)^{-1} a = (0.341)(0.31434) = 0.10719$$

$$s_{\hat Y} = \sqrt{s_{\hat Y}^2} = \sqrt{0.10719} = 0.32740$$

Suppose we wish to find a 90% confidence interval for our prediction $\hat Y$:

$1 - \alpha = 0.90$, so $\alpha = 0.10$ and $\alpha/2 = 0.05$, with d.f. = 10.

From the t-table: $t_{0.05,10} = 1.812$

Now we can use Eq. (30)!

90% C.I. $= \hat Y \pm t_{0.05,10}\, s_{\hat Y} = 6.77 \pm (1.812)(0.32740) = 6.77 \pm 0.59$

90% C.I. = (6.18, 7.36)

ANOTHER EXAMPLE

Let’s find a 95% confidence interval on $\beta_1$ for the same example we’ve been working with. We’ve already found that $\hat\beta_1 = 0.03997$, and we also calculated $s^2 = 0.341$.

We further know that

$$C = (X^TX)^{-1} = \begin{bmatrix} 5.40906 & -0.06472 & -0.93043 \\ -0.06472 & 0.000877 & 0.009249 \\ -0.93043 & 0.009249 & 0.20784 \end{bmatrix}$$

Then

$$s_{\hat\beta_1}^2 = s^2 C_{11} = (0.341)(0.000877) = 0.000299, \quad \text{or} \quad s_{\hat\beta_1} = 0.017293, \qquad \text{d.f.} = 10$$

For a 95% C.I. (as before): $\alpha = 1 - 0.95 = 0.05$ and $\alpha/2 = 0.025$.

From the t-table: $t_{0.025,10} = 2.228$

95% C.I. $= \hat\beta_1 \pm t_{0.025,10}\, s_{\hat\beta_1} = 0.03997 \pm (2.228)(0.017293) = 0.03997 \pm 0.03853$

95% C.I. = (0.0014, 0.0785)
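The whole chain can be recomputed in one sketch: $s^2$, the standard error of $\hat Y$, and both intervals. Two assumptions apply. First, ln(density) is rounded to two decimals as in the hand work. Second, the t-values 1.812 and 2.228 are copied from the t-table rather than computed. The sketch carries full precision throughout instead of rounding intermediate values.

```python
import numpy as np

trips = np.array([4.2, 3.0, 3.9, 4.5, 5.6, 5.8, 4.4, 7.0, 5.9, 3.1, 4.6, 6.6, 6.1])
income = np.array([37.4, 26.0, 42.0, 51.2, 68.7, 51.2, 58.0, 65.7, 65.7, 32.8, 42.0, 51.2, 58.8])
density = np.array([13.9, 49.2, 15.2, 16.2, 11.5, 6.5, 5.7, 4.9, 7.6, 28.5, 10.4, 2.6, 2.4])
X = np.column_stack([np.ones(len(trips)), income, np.round(np.log(density), 2)])
n, p = X.shape

C = np.linalg.inv(X.T @ X)
beta_hat = C @ (X.T @ trips)
sse = float(np.sum((trips - X @ beta_hat) ** 2))
s2 = sse / (n - p)                        # common variance estimate, d.f. = n - p = 10

# 90% C.I. on the mean response at income $72,600 and density 3.3 D.U./acre
a = np.array([1.0, 72.6, 1.19])
y0_hat = float(a @ beta_hat)
s_y0 = np.sqrt(s2 * float(a @ C @ a))
t_05_10 = 1.812                           # t_{0.05,10} from the t-table
ci_mean = (y0_hat - t_05_10 * s_y0, y0_hat + t_05_10 * s_y0)

# 95% C.I. on beta_1 (income coefficient); C[1, 1] is C_11
s_b1 = np.sqrt(s2 * C[1, 1])
t_025_10 = 2.228                          # t_{0.025,10} from the t-table
ci_b1 = (beta_hat[1] - t_025_10 * s_b1, beta_hat[1] + t_025_10 * s_b1)

print(f"s^2 = {s2:.3f}; 90% CI on mean response = ({ci_mean[0]:.2f}, {ci_mean[1]:.2f})")
print(f"95% CI on beta_1 = ({ci_b1[0]:.4f}, {ci_b1[1]:.4f})")
```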


Hypothesis Testing:

Test for significance of regression: tests whether a linear relationship exists between the dependent variable $y$ and the independent variables $x_1, x_2, \ldots, x_k$.

Ho: $\beta_1 = \beta_2 = \cdots = \beta_k = 0$

Ha: $\beta_j \neq 0$ for at least one $j$.

Rejection of Ho implies that at least one of the independent variables $x_1, x_2, \ldots, x_k$ contributes significantly to the model.

Recall: SST = SSR + SSE

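$$SSE = Y^TY - \hat\beta^T X^T Y$$

$$SSR = \hat\beta^T X^T Y - \frac{\left(\sum_{i=1}^{n} y_i\right)^2}{n}$$

$$SST = Y^TY - \frac{\left(\sum_{i=1}^{n} y_i\right)^2}{n}, \qquad Y^TY = \sum_{i=1}^{n} y_i^2$$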

Total sum of squares = sum of squares due to regression + sum of squares due to error

T.S.:

$$F_0 = \frac{SSR/k}{SSE/(n-k-1)} = \frac{MSR}{MSE}$$

where MS = “mean square.”

Reject Ho if $F_0 > F_{\alpha,\,k,\,n-k-1}$.
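Applied to the trip generation example, the significance-of-regression test can be sketched as follows, with the same rounded-logarithm assumption as before. The critical value $F_{0.05,2,10} \approx 4.10$ is taken from a standard F-table and hard-coded, so only NumPy is needed.

```python
import numpy as np

trips = np.array([4.2, 3.0, 3.9, 4.5, 5.6, 5.8, 4.4, 7.0, 5.9, 3.1, 4.6, 6.6, 6.1])
income = np.array([37.4, 26.0, 42.0, 51.2, 68.7, 51.2, 58.0, 65.7, 65.7, 32.8, 42.0, 51.2, 58.8])
density = np.array([13.9, 49.2, 15.2, 16.2, 11.5, 6.5, 5.7, 4.9, 7.6, 28.5, 10.4, 2.6, 2.4])
X = np.column_stack([np.ones(len(trips)), income, np.round(np.log(density), 2)])

n, p = X.shape
k = p - 1                                   # number of regressors
XtY = X.T @ trips
beta_hat = np.linalg.solve(X.T @ X, XtY)

correction = trips.sum() ** 2 / n           # (sum of y_i)^2 / n
sse = trips @ trips - beta_hat @ XtY        # Y^T Y - beta_hat^T X^T Y
ssr = beta_hat @ XtY - correction           # beta_hat^T X^T Y - (sum y_i)^2 / n
msr = ssr / k
mse = sse / (n - k - 1)
f0 = msr / mse

f_crit = 4.10                               # F_{0.05, 2, 10} from an F-table
reject_h0 = f0 > f_crit
print(f"F0 = {f0:.2f}, reject Ho: {reject_h0}")
```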


Tests on Individual Regression Coefficients:

Determines the contribution of each independent variable to the regression model (should it be added or deleted?)

Ho: $\beta_j = 0$

Ha: $\beta_j \neq 0$

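T.S.:

$$t_0 = \frac{\hat\beta_j}{\sqrt{\hat\sigma^2 C_{jj}}}, \qquad \hat\sigma^2 = \frac{SSE}{n-p}$$

where p = number of betas, and $C_{jj}$ is the diagonal element of $(X^TX)^{-1}$ corresponding to $\hat\beta_j$. (*Remember: the indexing starts at $\beta_0$.)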

Ho is rejected if $|t_0| > t_{\alpha/2,\,n-k-1}$.

If Ho is not rejected, this indicates that $x_j$ can possibly be deleted from the model.
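For the trip example, the individual t statistics can be sketched as follows. The sketch keeps the same rounded-logarithm assumption and uses $t_{0.025,10} = 2.228$ from the t-table, which corresponds to a two-sided test at α = 0.05.

```python
import numpy as np

trips = np.array([4.2, 3.0, 3.9, 4.5, 5.6, 5.8, 4.4, 7.0, 5.9, 3.1, 4.6, 6.6, 6.1])
income = np.array([37.4, 26.0, 42.0, 51.2, 68.7, 51.2, 58.0, 65.7, 65.7, 32.8, 42.0, 51.2, 58.8])
density = np.array([13.9, 49.2, 15.2, 16.2, 11.5, 6.5, 5.7, 4.9, 7.6, 28.5, 10.4, 2.6, 2.4])
X = np.column_stack([np.ones(len(trips)), income, np.round(np.log(density), 2)])

n, p = X.shape
C = np.linalg.inv(X.T @ X)
beta_hat = C @ (X.T @ trips)
sigma2_hat = float(np.sum((trips - X @ beta_hat) ** 2)) / (n - p)

# t0 = beta_j_hat / sqrt(sigma2_hat * C_jj); index 0 is beta_0
t0 = beta_hat / np.sqrt(sigma2_hat * np.diag(C))
t_crit = 2.228  # t_{0.025, 10} from the t-table
for name, t in zip(["beta0", "beta1 (income)", "beta2 (ln density)"], t0):
    print(f"{name}: t0 = {t:.2f}, reject Ho: {abs(t) > t_crit}")
```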


Selection of Variables in Multiple Regression:

We want to find the “best” subset of regressor variables for a final model from a set of possible regressor variables.

Backward Elimination:

- Begins with all candidate variables in the model.
- The variable with the smallest partial F-statistic is deleted if this F-statistic is insignificant ($F < F_{out} = F_{\alpha,\,r,\,n-p}$).
- The algorithm terminates when no further variables can be deleted.

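Set up the elimination (ANOVA) table:

| Source of Variation | Sum of Squares | Degrees of Freedom | Mean Square | $F_0$ |
|---|---|---|---|---|
| Regression (current) | SSR | k | MSR | MSR/MSE |
| $\beta_1$ | $SSR(\beta_1 \mid \beta_2, \beta_3, \beta_4, \beta_5, \beta_0)$ = SSR − SSR w/o $\beta_1$ | 1 | | |
| $\beta_2$ | $SSR(\beta_2 \mid \beta_1, \beta_3, \beta_4, \beta_5, \beta_0)$ | 1 | | |
| $\beta_3$ | $SSR(\beta_3 \mid \beta_1, \beta_2, \beta_4, \beta_5, \beta_0)$ | 1 | | |
| $\beta_4$ | $SSR(\beta_4 \mid \beta_1, \beta_2, \beta_3, \beta_5, \beta_0)$ | 1 | | |
| $\beta_5$ | $SSR(\beta_5 \mid \beta_1, \beta_2, \beta_3, \beta_4, \beta_0)$ | 1 | | |
| Error | SSE | n − k − 1 | MSE | |
| Total | SST | n − 1 | | |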
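The backward elimination loop can be sketched in NumPy. The function names, the simulated data, and the cutoff `f_out = 4.0` are all assumptions for illustration. In practice $F_{out} = F_{\alpha,1,n-p}$ would come from an F-table. Each pass fits the current model and computes the partial F for every remaining regressor from the extra sum of squares $SSR(\beta_j \mid \text{others}) = SSE_{\text{without } x_j} - SSE_{\text{current}}$. It then drops the weakest variable if its F falls below $F_{out}$.

```python
import numpy as np

def sse(X, y):
    """Residual sum of squares of the least squares fit of y on the columns of X."""
    beta_hat, *_ = np.linalg.lstsq(X, y, rcond=None)
    r = y - X @ beta_hat
    return float(r @ r)

def backward_elimination(X, y, names, f_out):
    """Drop, one at a time, the regressor with the smallest partial F while F < f_out.

    Column 0 of X is the intercept and is never removed; names[j] labels column j.
    """
    keep = list(range(1, X.shape[1]))
    n = len(y)
    while keep:
        cols = [0] + keep
        sse_full = sse(X[:, cols], y)
        mse = sse_full / (n - len(cols))    # MSE of the current model, n - p d.f.
        # Partial F for x_j (r = 1): [SSE without x_j - SSE with x_j] / MSE
        partial_f = {
            j: (sse(X[:, [c for c in cols if c != j]], y) - sse_full) / mse
            for j in keep
        }
        j_min = min(partial_f, key=partial_f.get)
        if partial_f[j_min] >= f_out:
            break                           # every remaining variable is significant
        keep.remove(j_min)
    return [names[j] for j in keep]

# Simulated example (hypothetical): y depends on x1 and x2 only
rng = np.random.default_rng(2)
n = 40
x1, x2 = rng.normal(size=n), rng.normal(size=n)
noise = 0.1 * rng.normal(size=n)
y = 1.0 + 2.0 * x1 - 3.0 * x2 + noise
# x3 is built to carry no information about y: orthogonal to 1, x1, x2, and the noise
basis, _ = np.linalg.qr(np.column_stack([np.ones(n), x1, x2, noise]))
z = rng.normal(size=n)
x3 = z - basis @ (basis.T @ z)
X = np.column_stack([np.ones(n), x1, x2, x3])

selected = backward_elimination(X, y, ["const", "x1", "x2", "x3"], f_out=4.0)
print(selected)
```

The orthogonal construction of x3 makes the outcome deterministic for the demonstration. With an ordinary random noise column, an irrelevant variable would still survive occasionally, at roughly the rate α.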
