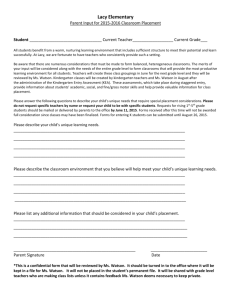

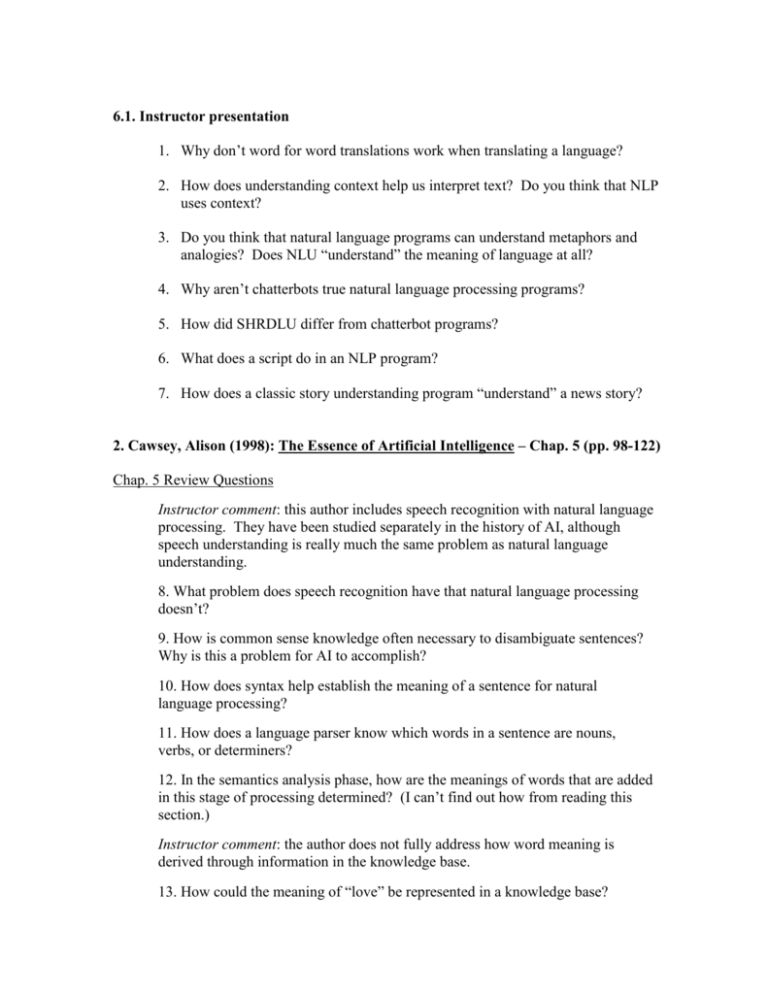

6.1. Instructor presentation

1. Why don’t word-for-word translations work when translating a language?
2. How does understanding context help us interpret text? Do you think that NLP uses context?
3. Do you think that natural language programs can understand metaphors and analogies? Does NLU “understand” the meaning of language at all?
4. Why aren’t chatterbots true natural language processing programs?
5. How did SHRDLU differ from chatterbot programs?
6. What does a script do in an NLP program?
7. How does a classic story-understanding program “understand” a news story?

2. Cawsey, Alison (1998): The Essence of Artificial Intelligence – Chap. 5 (pp. 98-122)

Chap. 5 Review Questions

Instructor comment: this author includes speech recognition with natural language processing. The two have been studied separately in the history of AI, although speech understanding is really much the same problem as natural language understanding.

8. What problem does speech recognition have that natural language processing doesn’t?
9. How is common-sense knowledge often necessary to disambiguate sentences? Why is this difficult for AI to accomplish?
10. How does syntax help establish the meaning of a sentence for natural language processing?
11. How does a language parser know which words in a sentence are nouns, verbs, or determiners?
12. In the semantic analysis phase, how are the meanings of the words added at this stage of processing determined? (I can’t find out how from reading this section.)

Instructor comment: the author does not fully address how word meaning is derived from information in the knowledge base.

13. How could the meaning of “love” be represented in a knowledge base?
14. What is pragmatics in NLP?
15. What kind of knowledge is necessary to determine correct pronoun reference? Can parsing provide it?

3. Feldman (1999): “NLP Meets the Jabberwocky”

16. What are the different aspects of language that help to define the meaning of sentences?
17. Why can humans interpret ambiguous language without difficulty while natural language understanding systems find it difficult?
18. Why can’t information retrieval systems retrieve documents based on their meaning rather than on citation ranks, word frequencies, and correlations?
19. An NLU system performs semantic analysis by looking up associated words. Is this all that is needed for an understanding of semantics?
20. The author says that an NLP information retrieval system can use context in the query to disambiguate search terms and eliminate irrelevant search results. She doesn’t say how. Do you know how the context of the query could be found?

Instructor comment: this article was published in 1999, before Google became so successful. I don’t think that Google uses Natural Language Understanding in its search algorithms. It is a statistical beast. My nephew has a Ph.D. in statistics and works for Google.

4. Lenat (1995): “A Large-Scale Investment in Knowledge Infrastructure”

21. Why did Lenat choose to enter specific “axioms” into his knowledge base rather than general axioms?

Instructor comment: CYC’s knowledge base used to consist of millions of rules. I believe they were converted to a kind of predicate calculus to make them more efficient, and perhaps to allow for inferencing.

22. Why doesn’t CYC use certainty factors in its axioms?
23. Why is every axiom in CYC tied to a particular context?
24. Do any of the applications Lenat envisions for CYC seem solvable by other kinds of software?
25. How could CYC’s knowledge be used to make characters in a role-playing game more lifelike? How could it make them more spontaneous, unpredictable, adaptable, and crafty?

Instructor comment: I could find no general article about CYC more recent than this one from 1995. Lenat has seemed less than forthcoming about CYC’s progress, or lack of it.

5. Ferrucci et al. (2010): “Building Watson – an Overview”

26. Does Watson sound like it could offer an alternative to CYC for natural language processing?
27. Why should IBM undertake projects such as Deep Blue if the technology is not transferable to other applications?
28. What does the Natural Language Understanding component of Watson do?
29. Is Watson just a super search engine? How does it demonstrate intelligence?
30. How would Watson know that two words rhyme?
31. Watson’s IBM predecessors did not work especially well. What contributed most to Watson’s breakthrough in performance?
32. Do you think that massive parallelism, many independent experts, and confidence estimation will be required for success in question-answering systems from now on?

Instructor comment: many existing text-processing technologies were combined to make Watson. The overall approach was to use all of them to generate multiple candidate answers and then score the candidates with evaluation functions. These text processors often looked for patterns in a corpus of human-written sentences or, in the case of the final scoring function, in a collection of Jeopardy questions and answers. I wonder if this is how artificial intelligence will get its smarts in the future: not by programming them in, but by extracting them from humans through machine learning.

33. Do you think that the question classification system within Watson contains specialized heuristics for answering each kind of Jeopardy question? Will heuristics and programming tricks always be needed in every AI system?
34. Is there any real understanding of semantics anywhere in Watson?
35. Could Watson be said to use a swarm approach to question answering, i.e., a great number of experts generating results and communicating them to other experts in the swarm?
36. How do you think that Watson “detects relations” between objects in a database?
37. The question decomposition function, which relies on computed confidence scores, is conceptually similar to heuristic search in problem solving. How?
38. Watson generates hundreds of hypothetical answers for each Jeopardy question. How does it decide which one is right?
39. Can Watson be considered a Natural Language Understanding program? Why or why not?
40. Do any of the algorithms used in Watson’s hypothesis scoring have any understanding of the meaning of the candidate answers?
41. If it takes a system like Watson, with massively parallel processors, hundreds of algorithmic routines, and a large team of developers working for years, to succeed at one specialized task, won’t such systems be too expensive for general use?
42. How was machine learning used to improve Watson’s final answer-scoring function?
43. Can you think of other areas of AI that might benefit from Watson’s approach: massively parallel processing that employs a vast array of probabilistic algorithms tuned to produce the desired output from a particular class of inputs?
44. With reference to Searle’s Chinese Room argument, can Watson be said to understand the Jeopardy questions it answers?

7.1. Instructor presentation

1. What is speech synthesis?
2. Which parts of the vocal apparatus produce consonant sounds?
3. What is a “formant”?
4. Are phonemes the same as vowels or consonants? If not, what are they?
5. What is phoneme co-articulation, and why is it a problem for speech recognition?
6. What is surprising about the “Phonemic Restoration Effect”?
7. Why is “discrete” speech the easiest to recognize?
8. How does statistical modeling improve speech recognition performance?
9. What does speech understanding try to do? Is it much different from natural language understanding?

2. Cawsey (1998): The Essence of Artificial Intelligence – Chap. 5 (pp. 98-103)

Instructor comment: this chapter was already covered in Section 6: Natural Language Understanding.

3. Deng and Huang (2004): Challenges in Adopting Speech Recognition

10. To what do the authors attribute the progress in speech recognition over the past 30 years?
11. According to the authors, what has to be done to increase the acceptance of speech recognition systems?
12. Why don’t speech recognition systems work well in noisy environments? Why is this less of a problem for humans?
13. How will adding a true understanding of context and common sense improve speech recognition?
14. Why is conversational speech difficult for speech recognition systems to process accurately?

4. Furman et al. (1999): Speech-Based Services

15. Why is word recognition accuracy such a limiting factor in user acceptance of speech recognition systems?
16. Speech recognition errors will occur. How does the “Lucy” system try to minimize the inconvenience?
17. Why can’t the word templates used to identify single words be expanded to recognize continuous speech?
18. Do continuous speech recognition algorithms identify individual words?
19. What is “wordspotting,” and what does it enable a speech recognition system to do with connected speech?
20. How does grammar-based speech recognition reduce errors in processing connected speech?
21. What is a “finite-state grammar map”? Why is it tedious to construct and not generalizable across applications?
22. How does statistical language modeling work? What advantage does it have over finite-state grammars?
23. How does statistical language modeling limit the range of utterances a system can handle?
24. According to the authors, what will have to be accomplished before “unconstrained speech” understanding is attained?

5. White (1990): Natural Language Understanding and Speech Recognition

Instructor comment: this article, like many technical articles, does not explain its technical jargon. As a result, parts of the text are dense and hard to understand for those of us unfamiliar with the research.

25. Why haven’t speech recognition systems simply been merged with Natural Language Understanding systems to create speech understanding?
26. Why is connected speech so difficult for speech recognition?
27. Why do you think humans can recognize connected speech effortlessly, while speech recognition systems have such difficulty?
28. What are
29. What is prosody? Can it be incorporated into speech recognition?
30. Does this article adequately define context and how it constrains the interpretation of speech? Why or why not?
31. What problem does ungrammatical speech pose for speech recognition?
32. How does the author envision using higher-level speech knowledge (semantics, pragmatics, prosodics) to enhance system performance at the phoneme level?
33. Why does the author think that combining and coordinating higher-level knowledge sources, by merging NLU with ASR, will lead to progress in speech recognition?
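Questions 20 to 23 on the Furman et al. reading contrast grammar-based recognition with statistical language modeling. The Python sketch below is one minimal way to see the idea. It assumes a bigram model with unsmoothed maximum-likelihood estimates. The toy corpus and the names `tokenize`, `train_bigram_model`, and `sentence_probability` are hypothetical illustrations and are not taken from the reading. The model scores a word sequence as the product of P(word | previous word), and it estimates each factor as count(previous, word) / count(previous).

```python
from collections import Counter

# Hypothetical toy training text (an assumption for illustration only);
# a real recognizer would estimate counts from a large corpus.
TOY_CORPUS = ["call home", "call the office", "call home now"]


def tokenize(sentence):
    """Lowercase, split on whitespace, and add sentence-boundary markers."""
    return ["<s>"] + sentence.lower().split() + ["</s>"]


def train_bigram_model(sentences):
    """Count context words and adjacent word pairs (maximum likelihood, no smoothing)."""
    context_counts = Counter()
    pair_counts = Counter()
    for sentence in sentences:
        tokens = tokenize(sentence)
        # Every token except the final </s> serves as a context for the next token.
        context_counts.update(tokens[:-1])
        pair_counts.update(zip(tokens[:-1], tokens[1:]))
    return context_counts, pair_counts


def sentence_probability(sentence, context_counts, pair_counts):
    """Multiply P(word | previous word) = count(prev, word) / count(prev) over the sentence."""
    tokens = tokenize(sentence)
    probability = 1.0
    for prev, word in zip(tokens[:-1], tokens[1:]):
        if context_counts[prev] == 0:
            return 0.0  # the context word never appeared in training
        probability *= pair_counts[(prev, word)] / context_counts[prev]
    return probability


if __name__ == "__main__":
    contexts, pairs = train_bigram_model(TOY_CORPUS)
    for candidate in ["call home", "call the office", "home call"]:
        print(candidate, sentence_probability(candidate, contexts, pairs))
```

A plain finite-state grammar simply accepts or rejects a word sequence. A model like this one instead ranks every candidate sequence, and it is estimated from text rather than written by hand. A recognizer would typically combine such a score with an acoustic score when choosing among competing word hypotheses. Without smoothing, however, any word pair that never appears in the training text gets probability zero (“home call” is an example here). This is one way to see why the range of utterances such a model handles well depends on its training data.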