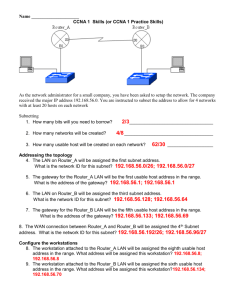

CCNA1

advertisement