HCI 575X – Computational Perception - Project Proposal

TAG’EM ALL

HCI 575X

Computational Perception

Sachin Chopra
M.S. (Computer Science)
The University of Iowa

Madhuri Rapaka
M.S. (Computer Science)
The University of Iowa

Trevor Garson
M.S. (Systems Engineering)
Iowa State University

Copyright © 2009 Sachin Chopra, Madhuri Rapaka, Trevor Garson. All Rights Reserved. Page 1 of 15

HCI 575X – Computational Perception - Project Proposal

Abstract

Photography is a means of saving the cherished moments of life. In most cases, the most
important part of a photograph is its subjects, who are usually our friends, relatives and
loved ones. Since the advent of digital photography, organizing photographs has been a
challenge. With the ever-increasing number of photographs, it is highly desirable that they be
organized in some systematic manner. For example, one may like to organize an entire photo
library according to the people in the photographs. Such organization is most helpful when we
return to a photo library to find a photograph of a particular person saved in the past; it is
painful to go through the entire library only to find that the photograph was the last one in
the list. TAG’em ALL is a tool that makes organizing these photographs fun. The tool tags all
the faces in a photograph using its learning component. Train the tool with a small number of
photographs, and it will take care of all your photographs in the future.


Table of Contents

1 Introduction
1.1 Two Pass Functioning
• Face Detection
• Face Recognition
1.2 Target Audience / Users
1.3 Need of Application
2 Team Members
3 Previous Approaches/ Related Work
4 Previous Experience
5 Our Approach
5.1 Face Detection
5.2 Face Recognition
6 Evaluation Methodology
6.1 Test Cases
6.2 Success
6.3 Improvement
7 References


1 Introduction

‘TAG’em All’ is a tool for organizing photographs in a photo library. It works with faces, a
feature that allows photos to be organized according to their subjects. ‘TAG’em All’ uses Face
Detection to locate people in photographs and Face Recognition to match similar-looking faces
that probably belong to the same person. The tool must first be trained by specifying the
names of the people in a set of photographs in order to build a face library: the tool detects
each face and prompts the user to enter a name. Once sufficiently trained, the tool will name
all the photos of the same person across the entire photo library and then organize the
library according to the people in the photos.

1.1 Two Pass Functioning

The tool takes a two pass approach to organizing the photo library:

• Face Detection: First, the tool runs on a specified set of photographs. For each
photograph, the tool detects the faces of all the people in it, draws a rectangle around
each face and prompts the user to enter that person’s name. This is how the tool is
trained each time the user wants it to recognize a new person.

• Face Recognition: Once sufficiently trained, the tool can simply be run over the entire
library. The tool now does everything automatically, without the need for any user input.
It first detects all the faces in the library, draws a rectangle around each face, matches
it against the trained set, and finally names the photographs and organizes them
accordingly.
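The two passes can be sketched as follows. This is only a minimal illustration of the control flow, not the tool's implementation: `detect_faces`, `ask_name` and `recognize` are hypothetical callables standing in for the detector, the user prompt and the recognizer, and faces are represented by plain strings so that the flow can be followed without any image library.

```python
from collections import defaultdict

def training_pass(photos, detect_faces, ask_name):
    """Pass 1: detect faces and ask the user to name each one."""
    face_library = defaultdict(list)              # name -> list of face samples
    for photo in photos:
        for face in detect_faces(photo):
            face_library[ask_name(photo, face)].append(face)
    return face_library

def recognition_pass(photos, detect_faces, recognize, face_library):
    """Pass 2: label every detected face automatically and group photos by person."""
    photos_by_person = defaultdict(list)
    for photo in photos:
        for face in detect_faces(photo):
            name = recognize(face, face_library)  # None means "unknown person"
            if name is not None and photo not in photos_by_person[name]:
                photos_by_person[name].append(photo)
    return photos_by_person

# Toy stand-ins (assumptions for illustration only): each "photo" is a list of face strings.
toy_photos = {
    "a.jpg": ["sachin_1"],
    "b.jpg": ["sachin_2", "madhuri_1"],
    "c.jpg": ["stranger_1"],
}
detect = lambda photo: toy_photos[photo]
ask = lambda photo, face: face.split("_")[0].capitalize()   # pretend the user types the name

def recognize(face, library):
    guess = face.split("_")[0].capitalize()
    return guess if guess in library else None

library = training_pass(["a.jpg"], detect, ask)
organized = recognition_pass(["a.jpg", "b.jpg", "c.jpg"], detect, recognize, library)
```

In this toy run only ‘Sachin’ has been trained, so the second pass files `a.jpg` and `b.jpg` under that name and leaves the untrained faces unlabeled.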

1.2 Target Audience / Users

‘TAG’em All’ is intended for anyone who keeps digital photographs; it aims to be efficient yet
simple. A recent AOL survey conducted by Digital Marketing Services (DMS) (1) reported the
following findings:


• Close to one-third (31%) of younger respondents (ages 18-49) say they take pictures
several times a week.

• 45% keep all their digital pictures on their computer’s hard drive.

• For more and more consumers, digital cameras are a must-have item that they simply can't
leave home without.

These findings suggest that ‘TAG’em All’ would be useful to users across a wide range of age
groups. One interesting comment comes from a respondent who says she has inherited thousands
of pictures from both her parents and her in-laws and is totally confused about how to
organize them. ‘TAG’em All’ would be an efficient and useful tool for such users. Once the
tool is trained and fully functional, we hope it will gain wide acceptance.

1.3 Need of Application

The advent of digital photography and its subsequent rise in popularity have dramatically
increased the number of photographers and, in turn, the number of photographs most users own.
Now that people are taking more photographs than ever, organizing them can be a pain. A user
often ends up with one giant storage directory into which all photographs are dumped. When one
needs to find a photograph of a particular person, searching through all those photographs is
tedious. One of the latest developments is tagging, wherein the user names each person in a
photograph by drawing a rectangle around that person’s face. A well-known example of tagging
is Facebook, a free-access social-networking website that lets users "tag", or label, people
in a photo. For instance, if a photo contains a user's friend, the user can tag the friend in
the photo. This is one of the most popular and widely used features of the website.

However, despite its popularity, photo tagging on Facebook is at this point still an entirely
manual process. The user must manually select and name each individual in each photograph;
Facebook does not automatically recognize an individual in a photograph, regardless of how
many times he or she has been tagged before. The principal advantage of our tool is that it
automates this process: once the tool is trained, it can tag all the pictures in the photo
library.


2 Team Members

Sachin Chopra:

Sachin is a graduate student at The University of Iowa, majoring in Computer Science. He is
working as a Graduate Co-op with Rockwell Collins, Cedar Rapids. His area of interest is
application development, and he has reasonable hands-on experience with C, C++, Java and .NET.
He is also interested in software testing. His role in this project is to develop the back
end, essentially the Face Detection and Face Recognition components. He will also be involved
in the evaluation of the project.

Madhuri Rapaka:

Madhuri was a Physics instructor for undergraduate students in India for five years. She moved
to the United States when her husband took up a job there. With a growing interest in
computers and a zeal to learn programming, she began working towards a Master's in Computer
Science at The University of Iowa. She is also working as a Graduate Student Co-op at Rockwell
Collins in the Displays unit. She has experience with programming languages such as Java and
modeling languages such as UML/OCL. Her main goal for this project is to create a system that
is perceived to be useful and easy to use, and her role will be to contribute as needed across
the project.

Trevor Garson:

After completing his BS in Computer Engineering at Embry-Riddle Aeronautical University in
2007, Trevor has been working full time as a Software Engineer at Rockwell Collins in Cedar
Rapids, Iowa, where he works in Government Systems Flight Deck Engineering on US and
international military rotorcraft. In addition, he is a graduate student at Iowa State
University, finishing his master's in Systems Engineering entirely through the Engineering
Distance Education program. He has experience in many programming languages but works most
often in Ada, C, C++ and Java. His role in this project will primarily be to develop the GUI
and user front end, as well as to contribute as needed to all other parts of the project.


3 Previous Approaches/ Related Work

Both face detection and face recognition have been attempted successfully in various academic
and commercial projects. The most direct comparison to our project, however, is iPhoto 09.
This commercial software from Apple takes a similar approach of coupling face detection with
face recognition. A reasonable amount of work has been done along the same principles,
although it still lacks accuracy in both detection and recognition. iPhoto 09 works well when
a face is upright. When a person turns his or her head or lies down horizontally, the software
has problems detecting and recognizing the face. According to one report (2), out of 20 faces
in a series of photos, iPhoto 09 correctly identified 8, could not recognize 8, and
incorrectly identified 4, a success rate of 8/20 = 40%.

Besides iPhoto 09, Luxand Face SDK 1.7 is cross-platform software available for Windows, Linux
and Macintosh. The Luxand engine uses face recognition technology to find all photos of the
same person by face rather than by keywords. To enable facial search, the library scans and
indexes all faces found in the photographs on a website. When a user wants to find someone, he
or she uploads a photograph of that person to the website and the engine goes to work: it
analyzes the uploaded photo, detects the face, extracts its characteristic features and
matches them against thousands of other photographs in the picture database. Within a few
seconds, the engine displays all images in which that person was found (3).


4 Previous Experience

While all of our team members have technical backgrounds in Engineering, Physics, or Computer
Science, our experience with Computational Perception is mostly limited to this course. Our
experience with the subject matter as it relates to this project has been gained mostly
through this semester's course lectures and homework assignments.

Homework 1 and Homework 2 gave us some exposure to image morphology and to working with images
in general, which will be useful when we have to handle a variety of photographs. Homework 3
dealt with motion, but it also gave us reasonable exposure to object detection and a
preliminary idea of color detection.

The lectures gave us an insight into Face Detection, and we hope to be able to increase the
accuracy of Face Detection in our project. Prior to conducting research for this project we
did not have any experience with Face Recognition. However, we do know how to detect objects,
and we hope to apply similar techniques to face recognition.

Suffice it to say, given our backgrounds and the fact that each of us is taking this course as
an elective outside of our core area of concentration, we will be acquiring most of the
necessary knowledge and experience over the course of this project.


5 Our Approach

As alluded to earlier, the primary computational perception focus of this project is on face
detection and face recognition, two closely linked but separate processes. Face detection is
the process of finding a face, in this case a human face, within images and videos. Face
recognition complements face detection by matching the detected face to one of many faces
known to the system. Our basic approach was introduced earlier in the document: face detection
will be used initially to aid in the creation of a face library, which will in turn be used to
organize an offline photo library by persons of interest using face recognition. Our technical
approach is covered in greater detail in the following sections.

5.1 Face Detection

Face detection is a very important component of this project, as it is used in several
capacities. First and foremost, face detection simplifies and expedites the process of face
extraction and the creation of the face database used for face recognition. As stated, before
the software can attempt to recognize persons in photos and ultimately organize the photos, a
suitable face database must be created. To accomplish this, the user will enter a training
mode in which he or she will manually tag, or name, the faces of individuals in several
photos. Face detection is used here to detect and bound the faces in each photograph, leaving
the user with the simplified task of naming them. In an entirely manual process, by contrast,
the user would also be responsible for bounding the faces in a photograph before naming them.
Avoiding this step is desirable because the notion of a face is not defined consistently among
users, and selecting regions that are too small or too large would result in less meaningful
face extraction to the face database. Face detection, much like face recognition, does not
work every time. If the face detection algorithm fails to detect a face in training mode, the
user will be able to identify faces in the photograph manually as a backup.

Moving on to the actual algorithms employed, face detection in this project is initially
planned to be achieved through the detection of Haar-like features using OpenCV. This
algorithm is provided by OpenCV and is detailed on the OpenCV wiki (9).

A recognition process can be much more efficient if it is based on the detection of

features that encode some information about the class to be detected. This is the

Copyright © 2009 Sachin Chopra, Madhuri Rapaka, Trevor Garson. All Rights Reserved. Page 9 of 15

HCI 575X – Computational Perception - Project Proposal

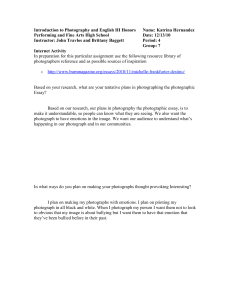

case of Haar-like features that encode the existence of oriented contrasts between

regions in the image. A set of these features can be used to encode the contrasts

exhibited by a human face and their spatial relationships. Haar-like features are so

called because they are computed similar to the coefficients in Haar wavelet

transforms.

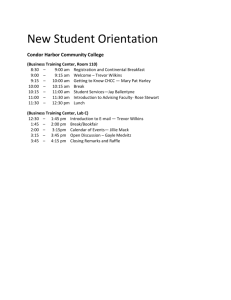

Figure 1: Example Haar Features
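To make the idea of an "oriented contrast between regions" concrete, the sketch below evaluates one two-rectangle Haar-like feature on a tiny grayscale patch. The integral image (summed-area table) used here is the standard device from the Viola-Jones detector for computing rectangle sums in constant time; it is not described in the text above, and the function names are illustrative rather than OpenCV's.

```python
def integral_image(img):
    """Return an (h+1) x (w+1) summed-area table with a zero first row and column."""
    h, w = len(img), len(img[0])
    ii = [[0] * (w + 1) for _ in range(h + 1)]
    for y in range(h):
        row_sum = 0
        for x in range(w):
            row_sum += img[y][x]
            ii[y + 1][x + 1] = ii[y][x + 1] + row_sum
    return ii

def rect_sum(ii, x, y, w, h):
    """Sum of the pixels in the rectangle with top-left corner (x, y), width w, height h."""
    return ii[y + h][x + w] - ii[y][x + w] - ii[y + h][x] + ii[y][x]

def two_rect_horizontal(ii, x, y, w, h):
    """Left-half sum minus right-half sum; responds to a vertical edge. w must be even."""
    half = w // 2
    return rect_sum(ii, x, y, half, h) - rect_sum(ii, x + half, y, half, h)

# A 4x4 patch with a bright left half (9) and a dark right half (1).
patch = [[9, 9, 1, 1] for _ in range(4)]
ii = integral_image(patch)
edge_response = two_rect_horizontal(ii, 0, 0, 4, 4)   # (8 * 9) - (8 * 1) = 64

# A uniform patch has no contrast, so the same feature gives 0.
flat_response = two_rect_horizontal(integral_image([[5] * 4 for _ in range(4)]), 0, 0, 4, 4)
```

A large response indicates that the patch contains the contrast pattern the feature encodes; a face detector combines many such features at different positions and sizes.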

The object detector in OpenCV was initially proposed by Paul Viola and improved by Rainer
Lienhart. First, a classifier (namely a cascade of boosted classifiers working with Haar-like
features) is trained with a few hundred sample views of a particular object (e.g., a face or a
car), called positive examples, that are scaled to the same size (say, 20x20), and with
negative examples, which are arbitrary images of the same size.

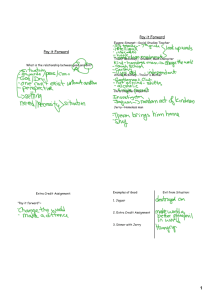

After a classifier is trained, it can be applied to a region of interest (of the same size as
used during training) in an input image. The classifier outputs a "1" if the region is likely
to show the object (e.g., a face or a car), and a "0" otherwise. To search for the object in
the whole image, one can move the search window across the image and check every location
using the classifier. The classifier is designed so that it can be easily "resized" in order
to find objects of interest at different sizes, which is more efficient than resizing the
image itself. So, to find an object of unknown size in the image, the scan procedure should be
repeated several times at different scales.


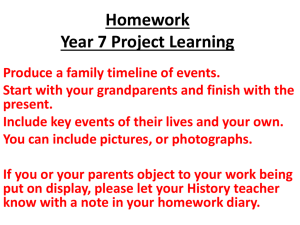

Figure 2: Haar Classifier Searching
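The scan procedure described above can be sketched in a few lines. This is a simplified illustration under stated assumptions: the 20x20 base window comes from the text, while the scale factor of 1.25, the step size and the `classify` callable are placeholders chosen for the example. In OpenCV's Python bindings the whole loop is hidden behind `cv2.CascadeClassifier.detectMultiScale`.

```python
def scan_windows(img_w, img_h, base=20, scale_factor=1.25, step_frac=0.1):
    """Yield (x, y, size) for square search windows at growing scales.

    The window (i.e. the classifier) is enlarged instead of shrinking the image.
    """
    size = float(base)
    while int(size) <= min(img_w, img_h):
        s = int(size)
        step = max(1, int(s * step_frac))       # larger windows take larger steps
        for y in range(0, img_h - s + 1, step):
            for x in range(0, img_w - s + 1, step):
                yield x, y, s
        size *= scale_factor

def detect(img_w, img_h, classify, **kwargs):
    """Return every window for which the classifier outputs 1."""
    return [win for win in scan_windows(img_w, img_h, **kwargs) if classify(*win) == 1]

# Stub classifier (an assumption): pretends a face exactly fills the window (10, 10, 25).
stub_classifier = lambda x, y, size: 1 if (x, y, size) == (10, 10, 25) else 0

window_sizes = sorted({size for _, _, size in scan_windows(60, 60)})
hits = detect(60, 60, stub_classifier)
```

For a 60x60 image the scan visits window sizes 20, 25, 31, 39 and 48, and the stub face is found only in the pass whose window size matches it, which is why several scales are needed.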

The word "cascade" in the classifier name means that the resultant classifier consists of
several simpler classifiers (stages) that are applied one after another to a region of
interest until at some stage the candidate is rejected or all the stages are passed. The word
"boosted" means that the classifiers at every stage of the cascade are complex themselves and
are built out of basic classifiers using one of four different boosting techniques (weighted
voting). Currently Discrete Adaboost, Real Adaboost, Gentle Adaboost and Logitboost are
supported. The basic classifiers are decision-tree classifiers with at least 2 leaves.
Haar-like features are the input to the basic classifiers. The feature used in a particular
classifier is specified by its shape, its position within the region of interest and its scale
(this scale is not the same as the scale used at the detection stage, though these two scales
are multiplied).
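The structure of a cascade of boosted stages can be sketched as below. The sketch assumes the simplest variant, in which every basic classifier is a 2-leaf decision tree (a stump) that casts a weighted vote in the style of Discrete Adaboost; the thresholds, weights and feature values are invented for illustration and are not taken from any trained OpenCV cascade.

```python
def make_stump(feature_index, threshold, weight):
    """Basic classifier: a 2-leaf decision tree voting +weight or -weight on one feature."""
    def stump(features):
        return weight if features[feature_index] > threshold else -weight
    return stump

def stage_passes(stage, features):
    """A boosted stage: the weighted vote of its basic classifiers against a stage threshold."""
    basic_classifiers, stage_threshold = stage
    return sum(basic(features) for basic in basic_classifiers) >= stage_threshold

def cascade_classify(stages, features):
    """Return (label, stages_evaluated); the candidate is rejected at the first failing stage."""
    for i, stage in enumerate(stages, start=1):
        if not stage_passes(stage, features):
            return 0, i
    return 1, len(stages)

# Hypothetical two-stage cascade over a vector of three Haar-like feature values.
stages = [
    ([make_stump(0, 10.0, 1.0)], 0.0),                            # cheap first stage
    ([make_stump(1, 5.0, 0.6), make_stump(2, -2.0, 0.7)], 0.5),   # stricter second stage
]

accepted = cascade_classify(stages, [20.0, 8.0, 0.0])        # passes both stages
rejected_early = cascade_classify(stages, [3.0, 8.0, 0.0])   # fails stage 1
rejected_late = cascade_classify(stages, [20.0, 1.0, 0.0])   # fails stage 2
```

Most windows in a photograph contain no face and are discarded by the first, cheapest stage, which is what keeps the exhaustive scan affordable.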

5.2 Face Recognition

Face recognition is the ultimate goal of this project. While it has been accomplished before,
face recognition is still a very active research area with many competing algorithms, a few of
which are listed below.

• Eigenfaces or Principal Component Analysis (PCA) method

• Fisherfaces or Linear Discriminant Analysis method

• Kernel methods

• 3D face recognition methods

• Gabor wavelets method

• Hidden Markov Models

Commercial face recognition algorithms such as the one used in iPhoto are unfortunately
proprietary, but they are likely based on one of the aforementioned techniques. Face
recognition invariably starts with face detection. The face is then rotated so that the eyes
are level and scaled to a uniform size. Next, one of several technical approaches takes over.
Each of these approaches is covered by its own set of patents and bundled into various vendor
offerings. One approach transforms the face into a mathematical template that can be stored
and searched; a second uses the entire face as a template and performs image matching; a third
attempts to create a 3-D model based on the face and then performs some kind of geometric
matching.

While alternative approaches to face recognition will be explored within this project in an
effort to increase the accuracy of recognition, initial efforts will focus on Eigenfaces, or
Principal Component Analysis (PCA), as supported by OpenCV. According to the OpenCV Face
Recognition wiki (10), the simplest method is to use the PCA support within OpenCV.

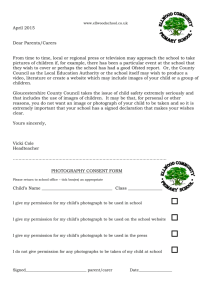

“However it does have its weaknesses. PCA is

translation variant - Even if the images are

shifted it won’t recognize the face. It is Scale

variant - Even if the images are scaled it will

be difficult to recognize. PCA is background

variant- If you want to recognize face in an

image with different background, it will be

difficult to recognize. Above all, it is lighting

variant- if the light intensity changes, the face

won’t be recognized that accurate.”

Countering these weaknesses however PCA has

several distinct strengths, namely the process is

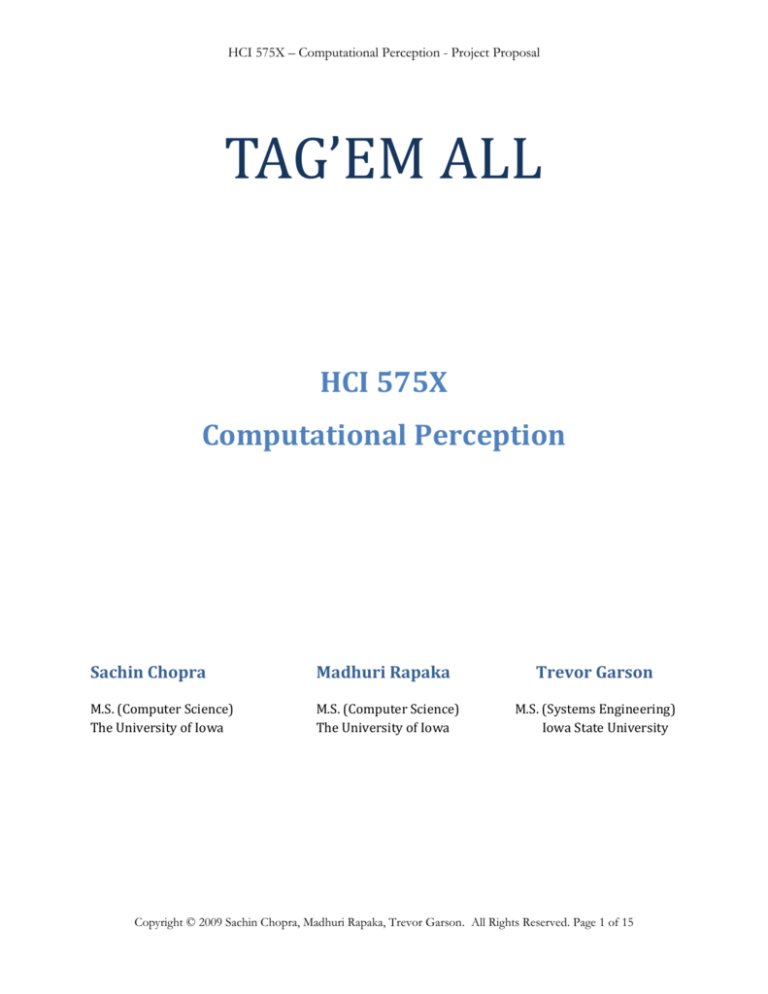

comparatively fast and requires smaller amounts of Figure 3: Sample Eigenfaces from AT&T

memory than alternative approaches due

dimensional reduction. In addition to the face extraction steps detailed in the previous

section, utilized both in the creation of the face library and in the extraction of faces to

perform recognition upon, several other pre-processing steps may also required to

perform PCA. These pre-processing steps will likely include scaling and illumination

normalization.
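The Eigenfaces idea can be sketched with NumPy as follows. This is a minimal illustration under stated assumptions, not the OpenCV implementation the project plans to use: faces are assumed to be already cropped, scaled to the same size and flattened, the eigenfaces are obtained from a singular value decomposition of the mean-centered data, and recognition is a plain nearest-neighbor search in the reduced space. The random vectors below merely stand in for real face images.

```python
import numpy as np

def train_eigenfaces(faces, num_components):
    """faces: (n_samples, n_pixels) array of flattened, equally sized grayscale faces."""
    mean = faces.mean(axis=0)
    centered = faces - mean
    # Rows of vt are the principal directions ("eigenfaces"), strongest first.
    _, _, vt = np.linalg.svd(centered, full_matrices=False)
    eigenfaces = vt[:num_components]
    weights = centered @ eigenfaces.T        # each training face as a short weight vector
    return mean, eigenfaces, weights

def recognize(face, mean, eigenfaces, weights, labels):
    """Project a face into eigenface space and return the nearest training label."""
    w = (face - mean) @ eigenfaces.T
    distances = np.linalg.norm(weights - w, axis=1)
    best = int(np.argmin(distances))
    return labels[best], float(distances[best])

# Synthetic stand-in data (an assumption; real input would be cropped face images).
rng = np.random.default_rng(0)
person_a = rng.random(64)                    # an 8x8 "face", flattened
person_b = rng.random(64)
faces = np.stack(
    [person_a + 0.01 * rng.standard_normal(64) for _ in range(3)]
    + [person_b + 0.01 * rng.standard_normal(64) for _ in range(3)]
)
labels = ["A", "A", "A", "B", "B", "B"]

mean, eigenfaces, weights = train_eigenfaces(faces, num_components=2)
probe = person_b + 0.01 * rng.standard_normal(64)
predicted, distance = recognize(probe, mean, eigenfaces, weights, labels)
```

Each face is stored as `num_components` numbers instead of one number per pixel, which is where the speed and memory advantage comes from. The sketch also shows why the pre-processing matters: a shifted, rescaled or differently lit face changes the pixel vector directly and therefore its projection.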

As we move on to the implementation phase of this project, as many face recognition algorithms
as is feasible, given schedule and technical constraints, will be evaluated in order to
determine the ideal face recognition approach for our needs.


6 Evaluation Methodology

The project is designed to work only in offline mode with a photo library. We plan to have a
‘Control library’ containing a known number of photographs, with full details of the people in
each photograph. Essentially, we will have a table that tells us the number of times each
person appears in the Control library.

Dynamic Training Mode: The Training Mode of the project helps the tool learn a person’s face.
Here we will have a fixed number of photographs tagged manually by us. Face Detection will
still be done by the tool, but the names given to each face will be entered manually. This
will be repeated for each person for whom we wish to have a separate directory. Additionally,
we plan to give the tool a dynamic learning procedure: it will start with a minimal training
set (1 image) and grow the training set as required. In this way the tool aims to require a
minimum number of training images and to be more efficient than static learning.

Consider the following example:

Let’s say we have one picture in which we name the face ‘Sachin’. When the tool is run over
the entire library, it searches for all the images in which a face matches Sachin’s face. If
another such photograph is found, it is added to the training library. If the tool is unable
to recognize any such photograph, it re-enters ‘train’ mode, in which we name another picture
as Sachin and run the tool again. We will set an upper limit on these ‘training’ rounds; if
the tool still fails to recognize faces after that limit, the particular case will be deemed
‘Failed’. Once such a prototype has been prepared, we will run the tool on entire libraries,
which will be different from and bigger than the training mode library.
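One possible reading of this dynamic training procedure is sketched below. The details are assumptions made for illustration: `matches` and `ask_user_for_sample` are hypothetical callables, faces are reduced to single numbers so that "matching" is just a distance test, and the upper limit is expressed as a maximum number of manual tagging rounds.

```python
def dynamic_training(name, seed_face, library_faces, matches, ask_user_for_sample,
                     max_manual_rounds=3):
    """Grow the training set for one person, starting from a single tagged face.

    Returns (status, training_set), where status is "Trained" or "Failed".
    """
    training_set = [seed_face]
    remaining = [f for f in library_faces if f != seed_face]
    manual_rounds = 0
    while remaining:
        found = [f for f in remaining if matches(f, training_set)]
        if found:
            training_set.extend(found)        # recognized faces join the training set
            remaining = [f for f in remaining if f not in found]
            continue
        # Nothing recognized: ask the user to tag one more face of this person.
        sample = ask_user_for_sample(name, remaining)
        if sample is None:
            break                             # no unrecognized face of this person is left
        if manual_rounds == max_manual_rounds:
            return "Failed", training_set     # upper limit on manual training reached
        manual_rounds += 1
        training_set.append(sample)
        remaining.remove(sample)
    return "Trained", training_set

# Toy data (assumption): faces are numbers; two faces "match" if within 1.0 of each other.
sachin_faces = [1.0, 1.8, 2.5, 6.0]
library = sachin_faces + [20.0]               # 20.0 belongs to somebody else
close_enough = lambda face, training: any(abs(face - t) <= 1.0 for t in training)
user = lambda name, remaining: next((f for f in remaining if f in sachin_faces), None)

status, learned = dynamic_training("Sachin", 1.0, library, close_enough, user)
strict_status, _ = dynamic_training("Sachin", 1.0, library, close_enough, user,
                                    max_manual_rounds=0)
```

In the toy run the tool finds 1.8 and then 2.5 on its own because each newly added face widens what it can match, needs one manual tag for the outlier 6.0, and is deemed ‘Failed’ if no manual rounds are allowed.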

The tool is expected to sort the entire directory by person, or by the number of times each
person appears, as desired.


6.1 Test Cases

Once we have trained the system, with an upper limit on the number of sample images for each
person, we will test our tool on different libraries that were not used in Training mode.
Additionally, we will test the robustness of the system by running it when no known face is in
the library; when people in the photographs wear items such as hats, earrings or glasses; when
the images are blurred; and finally when the images are tilted. For the case in which no known
face is in the library, we expect the system to acknowledge the situation and provide
feedback. For the other cases, we expect the system to make its best guess and classify the
face correctly, but with a lower accuracy (probably around 40%).

6.2 Success

Given the accuracy of previous tools and techniques, we will consider our project a success if
it shows an accuracy of 60% over the test cases described in the previous section. At this
point, we aim to perform better than the existing technologies. However, this figure is a
minimum target and not an upper bound on success; we will aim for much higher accuracy
throughout our project. As mentioned, we will build the system following a dynamic prototyping
approach, in which it is improved based on the feedback and the results obtained from the
system.
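The success criterion can be checked mechanically against the Control library. The sketch below is one hedged way to do so: the dictionary layout and the `evaluate` helper are assumptions, and accuracy is taken to mean the fraction of faces given the correct name. As a sanity check it is applied to the figures reported for iPhoto 09 in Section 3.

```python
def evaluate(ground_truth, predictions, target=0.60):
    """Compare predicted names with the Control library's known names, face by face.

    ground_truth / predictions: dicts mapping a face id to a name (None = not recognized).
    """
    total = len(ground_truth)
    correct = sum(1 for face_id, name in ground_truth.items()
                  if predictions.get(face_id) == name)
    accuracy = correct / total if total else 0.0
    return {"correct": correct, "total": total, "accuracy": accuracy,
            "success": accuracy >= target}

# Figures reported for iPhoto 09 in Section 3: 8 correct, 8 not recognized, 4 wrong.
truth = {i: "person" for i in range(20)}
predicted = {i: "person" for i in range(8)}                      # correctly identified
predicted.update({i: None for i in range(8, 16)})                # not recognized
predicted.update({i: "someone else" for i in range(16, 20)})     # incorrectly identified
report = evaluate(truth, predicted)
```

With these inputs the accuracy is 8/20 = 0.4, which falls short of the 60% target that our own tool is expected to meet.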

6.3 Improvement

Based on the results, we plan to make the following improvements over the existing
technologies:

1. Add techniques to detect faces which are tilted at an angle.

2. Remove noise before processing an image to improve detection and recognition

results.

3. Build the training set efficiently so as to minimize false positives.

The major part of our project is face detection and face recognition, and we aim to increase
our accuracy in both. Given all the previous work on face detection, we will strive to achieve
better accuracy than previous work in this field.


7 References

1. http://www.livingroom.org.au/photolog/news/digital_photography_survey_results.php

2. http://www.hiwhy.com/2009/02/10/ilife-09-iphoto-promises-face-detection-andface-recognition/

3. http://tc-europa.com/blog/tag/face-detection/

4. M. Turk and A. Pentland (1991). "Eigenfaces for recognition". Journal of Cognitive
Neuroscience, 3(1).

5. Dana H. Ballard (1999). "An Introduction to Natural Computation (Complex Adaptive
Systems)", Chapter 4, pp 70-94, MIT Press.

6. http://www.stanford.edu/class/cs229/proj2007/SchuonRobertsonZouAutomatedPhotoTaggingInFacebook.pdf

7. http://www.stanford.edu/class/cs229/proj2006/MichelsonOrtizAutoTaggingTheFacebook.pdf

8. http://resources.smile.deri.ie/conference/2008/samt/Short/184_short.pdf

9. http://opencv.willowgarage.com/wiki/FaceDetection

10. http://opencv.willowgarage.com/wiki/FaceRecognition
