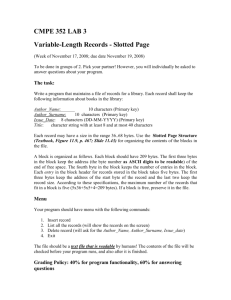



[DCM-475] reading from invalid DicomInputStream can exhaust memory

Created: 06/Apr/11 Updated: 16/May/11 Resolved: 08/Apr/11

Status: Resolved

Project: dcm4che2

Component/s: Core

Affects Version/s: dcm4che-2.0.25

Fix Version/s: dcm4che-2.0.26

Type: Bug

Priority: Minor

Reporter: Oli Kron

Assignee: Gunter Zeilinger

Resolution: Fixed

Votes: 1

Labels: None

Remaining Estimate: Not Specified

Time Spent: Not Specified

Original Estimate: Not Specified

Attachments: DS_Store.dat, safer_read.patch

Tracking Status: Risk Analysis - Todo, Test Spec - ToReview, Test State - Not tested

Description

I am writing a custom dcm4che2-based application (similar to dcmsnd). In my application, successful parsing of an input file indicates that the file is a valid DICOM object, and the application can then send it to one of the DICOM archives. However, on some "invalid" (non-DICOM) input files, the application runs out of heap space and the show stops.

You can observe the same behaviour (OutOfMemoryError) with the plain dcmsnd or just dcm2txt.

I'll attach a sample file which triggers that.

I'd like dcm4che2 to behave like DCMTK 3.6.0. When I run dcmdump on the sample file, I get:

E: DcmElement: CommandField (0000,0100) larger (828667202) than remaining bytes in file

E: dcmdump: I/O suspension or premature end of stream: reading file: /tmp/DS_Store.dat
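The numbers in this output can be reproduced by hand. Assuming the sample file starts with the usual .DS_Store header, the bytes `00 00 00 01` followed by the ASCII magic `Bud1` (the attachment itself is not reproduced here), parsing those eight bytes as an implicit VR little-endian element header (2-byte group, 2-byte element, 4-byte value length) yields tag (0000,0100), CommandField, with a value length of 0x31647542 = 828667202. A reader that trusts that length asks for roughly 790 MiB in a single allocation. The sketch below decodes the header with `java.nio.ByteBuffer` and applies a DCMTK-style check of the length against the bytes left in the file; the class, its methods and the 6 KiB file size are hypothetical illustrations, not dcm4che2 API.

```java
import java.nio.ByteBuffer;
import java.nio.ByteOrder;

/** Hypothetical helper, not part of dcm4che2: inspects an implicit VR LE element header. */
public class ElementHeaderCheck {

    /** Returns {group, element, valueLength} decoded from the first 8 bytes of header. */
    static long[] decodeHeader(byte[] header) {
        ByteBuffer buf = ByteBuffer.wrap(header, 0, 8).order(ByteOrder.LITTLE_ENDIAN);
        long group = buf.getShort() & 0xFFFFL;
        long element = buf.getShort() & 0xFFFFL;
        long valueLength = buf.getInt() & 0xFFFFFFFFL; // value length is unsigned 32-bit
        return new long[] {group, element, valueLength};
    }

    /** DCMTK-style sanity check: a value cannot be longer than what is left of the file. */
    static boolean exceedsRemaining(long valueLength, long fileLength, long position) {
        return valueLength > fileLength - position;
    }

    public static void main(String[] args) {
        // Assumed .DS_Store header bytes: 00 00 00 01 'B' 'u' 'd' '1'
        byte[] header = {0x00, 0x00, 0x00, 0x01, 'B', 'u', 'd', '1'};
        long[] h = decodeHeader(header);
        // Prints "(0000,0100) length=828667202"
        System.out.printf("(%04X,%04X) length=%d%n", h[0], h[1], h[2]);
        // Hypothetical 6 KiB file: after the 8-byte header, 6136 bytes remain. Prints "true".
        System.out.println(exceedsRemaining(h[2], 6144, 8));
    }
}
```

The check only works when the total length is known up front (a file, not a network stream), which is why a growing read is still needed in the general case.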

I played with DicomInputStream a bit. I have a first (very rough) patch that does not allocate the byte array "blindly" but "grows" it with what was actually read. What do you think about the idea?
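A minimal sketch of the growing approach, assuming a plain `java.io.InputStream` rather than the real DicomInputStream internals (the class and method names are hypothetical, and this is not the attached patch): start with a small buffer, double it only when it is full and the declared length says more bytes should follow, and fail with an EOFException as soon as the stream ends early. A bogus length then costs at most twice the bytes actually present (or 8 KiB, whichever is larger) instead of the declared length.

```java
import java.io.ByteArrayInputStream;
import java.io.EOFException;
import java.io.IOException;
import java.io.InputStream;
import java.util.Arrays;

/** Hypothetical sketch: grow the value array only as bytes actually arrive. */
public class GrowingRead {

    static byte[] readValue(InputStream in, int vallen) throws IOException {
        if (vallen < 0) {
            throw new IOException("Negative value length: " + vallen);
        }
        // Start small instead of trusting vallen with new byte[vallen].
        byte[] buf = new byte[Math.min(vallen, 8 * 1024)];
        int total = 0;
        while (total < vallen) {
            if (total == buf.length) {
                // Buffer full but more bytes expected: double it, capped at vallen.
                buf = Arrays.copyOf(buf, (int) Math.min((long) buf.length * 2, vallen));
            }
            int n = in.read(buf, total, buf.length - total);
            if (n == -1) {
                throw new EOFException("Value length " + vallen
                        + " but only " + total + " bytes available");
            }
            total += n;
        }
        return buf; // buf.length == vallen here
    }

    public static void main(String[] args) throws IOException {
        // Declared length from the DCMTK output, but only 6000 bytes in the stream.
        try {
            readValue(new ByteArrayInputStream(new byte[6000]), 828667202);
        } catch (EOFException e) {
            System.out.println(e.getMessage());
        }
        // A well-formed value is read completely.
        System.out.println(readValue(new ByteArrayInputStream(new byte[20000]), 20000).length);
    }
}
```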

Comments

Comment by Oli Kron [ 06/Apr/11 ]

DS_Store.dat - sample file to trigger OutOfMemoryError

safer_read.patch - the patch

Comment by Damien Evans [ 06/Apr/11 ]

Fixing this issue would be welcomed by many dcm4che users. I look forward to Gunter's response.

Comment by Gunter Zeilinger [ 08/Apr/11 ]

I chose a rather large grow increment (64 MiB) because reallocating memory is expensive.
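The trade-off behind a large increment can be sketched as follows. This is a hypothetical illustration, not the code that was committed: the array is allocated `min(vallen, increment)` bytes at a time and extended with `Arrays.copyOf` only after the current part has been completely filled. Each extension copies everything read so far, so a large increment keeps the number of copies small: a value that really is 828667202 bytes long needs 13 chunks of 64 MiB, i.e. 12 reallocations, while a bogus length on a short file fails after allocating at most 64 MiB.

```java
import java.io.ByteArrayInputStream;
import java.io.EOFException;
import java.io.IOException;
import java.io.InputStream;
import java.util.Arrays;

/** Hypothetical sketch: grow the value array in large fixed increments. */
public class IncrementalRead {

    static final int GROW_INCREMENT = 64 * 1024 * 1024; // 64 MiB

    static byte[] readValue(InputStream in, int vallen, int increment) throws IOException {
        if (vallen < 0) {
            throw new IOException("Negative value length: " + vallen);
        }
        byte[] buf = new byte[Math.min(vallen, increment)];
        int total = 0;
        while (true) {
            // Fill the currently allocated part completely before growing.
            while (total < buf.length) {
                int n = in.read(buf, total, buf.length - total);
                if (n == -1) {
                    throw new EOFException("Value length " + vallen
                            + " but only " + total + " bytes available");
                }
                total += n;
            }
            if (total == vallen) {
                return buf;
            }
            // More data is declared: extend by one increment, capped at vallen.
            buf = Arrays.copyOf(buf, (int) Math.min((long) buf.length + increment, vallen));
        }
    }

    /** Number of Arrays.copyOf calls needed for a value that really is vallen bytes long. */
    static int reallocations(int vallen, int increment) {
        int chunks = (int) (((long) vallen + increment - 1) / increment);
        return Math.max(chunks - 1, 0);
    }

    public static void main(String[] args) throws IOException {
        System.out.println(reallocations(828667202, GROW_INCREMENT)); // prints 12
        // Small increment to exercise the growth path on a tiny stream.
        System.out.println(readValue(new ByteArrayInputStream(new byte[10]), 10, 4).length); // prints 10
    }
}
```

Compared with doubling, a fixed increment bounds the up-front allocation for a bogus length to one increment, at the cost of more copies for genuinely huge values.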

Comment by Oli Kron [ 08/Apr/11 ]

Works for me. Thanks from your fan!

Comment by Brett Okken [ 16/May/11 ]

The patch appears to read and write 1 byte at a time, comparing each to -1. Even if the backing InputStream is buffered, this will likely be pretty slow. I would suggest something like:

```java
// Method of DicomInputStream; read(byte[], int, int) is the enclosing stream's own read.
// Imports needed by the enclosing class:
// import java.io.ByteArrayOutputStream;
// import java.io.EOFException;
// import java.io.IOException;

public byte[] readBytes(int vallen) throws IOException
{
    if (vallen < 0)
    {
        throw new IOException("Negative value length: " + vallen);
    }
    // Create a BAOS that matches the expected length, but be suspicious of any
    // expected length longer than 4 MiB and let the underlying byte[] resize.
    final ByteArrayOutputStream valbaos =
            new ByteArrayOutputStream(Math.min(vallen, 4 * 1024 * 1024));

    // Copy from the underlying input stream to valbaos through a buffer of at most 8 KiB.
    final byte[] buffer = new byte[Math.min(8 * 1024, vallen)];

    int totalRead = 0;
    // Keep reading until vallen bytes have arrived or EOF (-1) is reached.
    while (totalRead < vallen)
    {
        // Only try to read up to vallen bytes in total.
        final int lastRead = read(buffer, 0, Math.min(buffer.length, vallen - totalRead));
        if (lastRead == -1)
        {
            throw new EOFException("Input stream shorter than requested read length "
                    + vallen + "; only " + totalRead + " bytes read");
        }
        valbaos.write(buffer, 0, lastRead);
        totalRead += lastRead;
    }
    return valbaos.toByteArray();
}
```

Comment by Damien Evans [ 16/May/11 ]

Brett, the patch was not committed "as is". See the Subversion Commits tab on this issue for the exact code that was committed.

Generated at Sun Mar 06 10:12:37 CET 2016 using JIRA 6.3.6#6336sha1:cf1622c62a612607f341bda9491a04918e09ebfd.