
Event Study Analysis of Share Price

and Stock Market Index Data

Marco Giuliani

September 2011

Dissertation submitted in partial fulfilment for the degree of

Master of Science in Computing for the Financial Markets

Computing Science and Mathematics

University of Stirling

Abstract

This project involves creating a software system to aid event study analysis of share price and stock market index data. Event study analysis is used to “Determine whether there is an ‘abnormal’ stock price effect associated with an unanticipated event. From this determination the researcher can infer the significance of the event” [10].

The problem with conventional event study methods is that collecting and preparing the relevant stock and index data for the event period takes time and, because there are many individual steps in preparing the data, the process is prone to human error. Commercial, dedicated event study software packages exist; however, these can be expensive solutions requiring local installation of software on the user's computer.

The objectives of the project were to develop a free, zero client installation, user-friendly, web-based interface to an 'Event Study' analysis software package that could both connect directly to online financial data and run statistical analysis on the data retrieved. Results of the analysis should be presented both graphically and textually and returned within a reasonable time.

The approach to the problem was first to assess the strengths and weaknesses of alternative solutions and to incorporate the strengths into the solution where possible. An understanding of the event study methodology was gained through online research, enabling accurate creation of a software model that mapped all the steps required to carry out the analysis. An object-oriented approach was maintained throughout the software implementation to allow the solution to be extended and developed further.

The project delivered a web-based client/server solution incorporating a feature-rich web interface using jQuery and JSP/Servlets. The statistical analysis and many of the graphical features were achieved using R (www.r-project.org). R was also used to provide the environment where the software could connect to and retrieve the financial data directly from an online resource. The graphical requirements were achieved using a number of solutions, including XML-based data and the graphical abilities of the R environment. The solution could be further developed to include more complex models and to carry out multiple stock analyses; however, it has successfully achieved its main objectives.


Attestation

I understand the nature of plagiarism, and I am aware of the University’s policy on this.

I certify that this dissertation reports original work by me during my University project except for the following:

The Eventus main features review was taken largely from http://www.eventstudy.com [4].

Recorded Future key points were taken mainly from http://www.recordedfuture.com [5].

Signature

Date


Acknowledgements

I would like to thank Dr Mario Kolberg for his supervision and confidence in the project. I would also like to thank Professor Leslie Smith for his positive comments on the solution, and Isaac Tabner for his finance and accounting input; hopefully the solution can be developed further to aid in his research. I would extend my thanks to my wife Gillian, who has been supportive throughout and tried to create a relaxed working environment. I would also like to thank my children, Nicolle, Vanessa and Natasha, for sounding vaguely interested in my topic and listening to me trying to explain it.


Table of Contents

Abstract
Attestation
Acknowledgements
Table of Contents
List of Figures
1 Introduction
1.1 Background and Context
1.2 Scope and Objectives
1.3 Achievements
1.4 Overview of Dissertation
2 Event Study Analysis
2.1 Time-series data for event study analysis.
2.2 The single index model
2.3 Capital Asset Pricing Model (CAPM)
2.4 Fama-French 3 Factor Model
2.5 The conventional method of event study analysis.
2.5.1 Getting stock and index data
2.5.2 Data Cleansing
2.5.3 Normalising Returns
2.5.4 Regression analysis to model returns
2.5.5 Calculating expected and abnormal returns
3 State-of-The-Art
3.1 Eventus
3.2 Recorded Future
4 The EventR solution technology stack
4.1 R - open source statistical package
4.1.1 Quantmod
4.1.2 Zoo
4.2 Rserve – tcp/ip interface to R
4.3 Apache Tomcat - Servlet container and web server.
4.4 Client-side technology
4.4.1 HTML
4.4.2 JQuery
4.4.3 XML
4.5 Server-side technology
4.6 Client/Server Architecture
5 Program Implementation
5.1 Controller.java
5.2 REvent.java
5.2.1 PNG Image creation
5.2.2 XML Charts
5.3 Newstudy.jsp
5.4 User Guide
6 Application Deployment
7 The EventR implementation of Event Study
8 Testing
8.1 Java Testing
8.2 R testing
8.3 Statistical Testing
9 Conclusion
9.1 Summary
9.2 Evaluation
9.3 Future Work
References
Appendix 1 Installation Guide


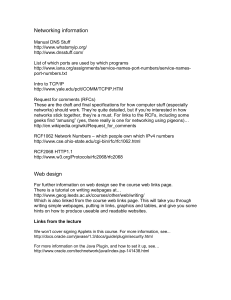

List of Figures

Figure 1. Recorded Future indicators
Figure 2. Quantmod Graphic Example
Figure 3. MVC Design pattern
Figure 4. EventR Architecture Diagram
Figure 5. Program Flow
Figure 6. R chart on interface
Figure 7. XML Chart on Interface
Figure 8. Class diagram
Figure 9. WAR Export from Eclipse
Figure 10. EventR Home Page
Figure 11. New Study Screen
Figure 12. JQuery Calendar Widget
Figure 13. Returned Analysis
Figure 14. jQuery Lightbox


1 Introduction

The “EventR” event analysis solution aims to provide a point-and-click, easy-to-use tool for analysing the influence of specific events on stock prices and therefore on the market value of a listed firm. The collection of the relevant stock and index prices for the event date is automated, removing the possibility of human error inherent in the conventional method of event study. The conventional method involves the user manually visiting online data sources and downloading individual data files. Once downloaded, the prices have to be transformed into daily returns, again exposing the data to human error. The client/server service handles requests to multiple resources for up-to-date stock and market prices, and the statistical analysis is carried out using the advanced open source statistical package “R”. The solution is meant to be used as a first determination of the significance of events on stock prices. Further statistical analysis can then be used to determine the level of significance or other factors that may have influenced the change in price.

1.1 Background and Context

Event study analysis is used to help determine the significance of an event for the change in a stock price. This can be analysed retrospectively and used as information to help predict future changes in stock price given a recurrence of the same event. Event study has also become more mainstream in litigation cases in America where “bad news” has been hidden from the public to keep stock prices high; when this information is leaked or discovered, the resultant losses that an investor suffers are challenged in court.

According to McWilliams & Siegel (1997), an event study “Determines whether there is an ‘abnormal’ stock price effect associated with an unanticipated event. From this […] the researcher can infer the significance of the event” [10]. Changes in stock prices occur due to a number of external and internal factors; however, the goal of the event study is to accurately determine abnormal changes in prices.

1.2 Scope and Objectives

The objective of the EventR solution is to provide a first-point analysis tool to easily and quickly look at an event's influence on stock prices over one or many firms. The raw data and the initial analysis to determine significance are hidden from the end user, which lets them quickly discard non-significant events.


The web-based client is to be a well-designed, simple-to-use interface to the application, and the data will be retrieved automatically from Yahoo Finance or another reliable online financial data resource. As the data is retrieved automatically on demand, there is no need to store it locally; the information can simply be re-read from the online source. Charting is to be included to represent the raw data and results for quick visual analysis, and charts should be produced in real time.

1.3 Achievements

The solution has succeeded in providing an easy-to-use interface with automatic data retrieval from relevant financial data sources. Another significant achievement was the ability to use the R environment via HTTP over the web and to build a solution that uses the statistical power of R at the back end with a user-friendly web front end. The inclusion of the Fama-French data library further enhanced the return generating model and resulted in a robust and well-tested model.

On a personal level, while developing the EventR software solution I have learned how to use the R statistical package. This has in turn further developed my statistical analysis skills for financial data. The R system is extensible and I have learned how to write packages for the system that can be called from Java. I have tried to follow an MVC design pattern, and this has further developed my software development skills in working to a strict design pattern. My research skills have also been improved through the avoidance of reinventing the wheel: as R is open source, there are many pre-developed packages that needed to be tested and assessed to see whether they would suit the requirements of the solution. My software testing abilities have developed as the solution progressed and as new packages and features were added. The robustness of the system needed to be maintained throughout the development, and bugs had to be identified and removed at the earliest opportunity. My project management skills have improved through weekly deadlines and weekly tasks. These were updated as the project progressed to maintain a list of outstanding tasks.

1.4 Overview of Dissertation

The document will cover the conventional, manual process of event study analysis and the statistical background of the process. It will break the process down into the separate steps that are required to reach a conclusion about an event's influence on stock prices. It will then explain the technologies used and the reasoning behind their choice before explaining in more technical detail the method of implementation. A user guide to the application and an installation guide are included as appendices.


2 Event Study Analysis

The determination of the significance of specific events for stock prices has been studied for a number of years and can cover many different events, from celebrity endorsements, mergers and takeovers to changes in key management of a company. This analysis helps predict future changes in price for stocks that may witness the same events. The analysis looks for the significance of the change in stock price and not necessarily the direction. The goal of the event study is to help make better predictions of stock price movements for unanticipated events.

Event study relies on the identification of abnormal returns on the stock due to the event in question. To determine what abnormal returns are, two key elements are required: actual returns (Rs) and expected returns E(r). Actual returns are normally taken from one of a few sources respected for their accuracy: Google Finance, Yahoo Finance, and the Center for Research in Security Prices (CRSP). Expected returns are obtained by building a model for stock returns. There are various models for calculating expected returns; the main ones are the single index model, the CAPM and the Fama-French 3 factor model.

2.1 Time-series data for event study analysis.

The time-series data used for the analysis is split into windowed time periods: an estimation window followed by an event window, which is centred on the event date.

<---------- Estimation Window ----------><------------ Event Window ------------>
t-ew-1-est                         t-ew-1 t-ew              t                t+ew

t = event date
ew = number of days in the event window either side of the event date
est = length of the estimation window in days

The estimation window is normally 60 days and the event window is normally 31 days, split with 15 days either side of the event date. Data from the estimation window is used to build the model that estimates expected returns on the stock. A suitable period is chosen that is close to the event date but not so close that it is affected by the event. The event window is the period spanning the event date in which we look for a significant abnormal return.
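As a rough illustration of how these windows might be cut from a series of trading dates, the following R sketch uses a hypothetical helper, event_windows, which is not part of EventR. It assumes that ew counts the days either side of the event date (15) and that est is the number of days in the estimation window (60), with the estimation window ending on the day before the event window starts.

```r
# Hypothetical helper (not part of EventR): split a vector of trading dates
# into the estimation window and the event window around an event date.
# ew  = days either side of the event date (assumed default 15)
# est = number of days in the estimation window (assumed default 60)
event_windows <- function(dates, event_date, ew = 15, est = 60) {
  pos <- which(dates == as.Date(event_date))   # position of the event date
  if (length(pos) != 1) stop("event date not found in 'dates'")
  first_event <- pos - ew
  last_event  <- pos + ew
  last_est    <- first_event - 1               # ends the day before the event window
  first_est   <- last_est - est + 1
  if (first_est < 1 || last_event > length(dates)) {
    stop("not enough data around the event date")
  }
  list(estimation = dates[first_est:last_est],
       event      = dates[first_event:last_event])
}

# Example: 120 consecutive calendar days standing in for trading days.
dates <- seq(as.Date("2011-01-01"), by = "day", length.out = 120)
w <- event_windows(dates, "2011-04-01")
length(w$estimation)  # 60
length(w$event)       # 31
```

Real price series skip weekends and holidays, so in practice the positions would be counted over trading days only, as the sketch does by indexing into the date vector rather than doing calendar arithmetic.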


2.2 The single index model

This model shows how a stock return relates to the market return and is represented by the formula

E(r) = α + β(Rm) + e

The alpha and beta terms are the regression coefficients of a linear regression in which the stock return is the dependent variable and the market return is the independent variable. α is the expected excess return of the individual stock due to firm-specific factors. β is the sensitivity of the stock's return to the market return. A beta value of 1 represents a stock return that moves exactly as the market return moves: for every percentage change in the market return there is an equivalent percentage change in the stock return. Beta therefore measures how the stock return varies with the market return, as a ratio of how the market varies as a whole. If the model were perfect, any difference between the actual return and the expected return would represent only random noise (e), normally distributed with mean zero. Other models include more factors and try to get closer to this ideal, in which only random noise separates expected returns from actual returns.
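The regression described above can be sketched in a few lines of base R. The return values below are made up purely for illustration, and the variable names are assumptions rather than anything taken from EventR.

```r
# Synthetic daily returns (illustrative values, not real market data).
market <- c(0.010, -0.005, 0.003, 0.007, -0.012, 0.004, -0.001, 0.009)
noise  <- c(0.001, -0.001, 0.002, -0.002, 0.001, -0.001, 0.000, 0.000)
stock  <- 0.0005 + 1.2 * market + noise

# Linear regression with the stock return as the dependent variable and
# the market return as the independent variable.
fit   <- lm(stock ~ market)
alpha <- coef(fit)[["(Intercept)"]]  # firm-specific excess return
beta  <- coef(fit)[["market"]]       # sensitivity to the market return
e     <- residuals(fit)              # part of the return the model leaves unexplained

c(alpha = alpha, beta = beta)
```

Because the synthetic stock series was built with a slope of 1.2, the estimated beta should land close to that value, with the residuals averaging zero.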

2.3 Capital Asset Pricing Model (CAPM)

E(r) = Rf + β(Rm - Rf) + e

E(r) = Expected return on the stock
Rf = Risk free rate
Rm = Return on the market

The CAPM models expected returns in excess of the risk free rate based on the security market line (SML) and is an extension of the single index model. The SML enables us to calculate the reward-to-risk ratio for any security in relation to that of the overall market. Therefore, when the expected rate of return for any security is deflated by its beta coefficient, the reward-to-risk ratio for any individual security in the market is equal to the market reward-to-risk ratio. For a stock with a beta of zero the expected return would be equal to the risk free rate of return.
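A small worked example may help here. The helper capm_expected below is hypothetical, the rates are assumed values chosen for easy arithmetic, and the noise term e is left out because only the expected value is computed.

```r
# Hypothetical helper: expected return under the CAPM (noise term omitted).
capm_expected <- function(r_f, beta, r_m) r_f + beta * (r_m - r_f)

r_f <- 0.02   # assumed risk free rate
r_m <- 0.08   # assumed return on the market

capm_expected(r_f, beta = 0,   r_m)   # a beta of zero gives the risk free rate
capm_expected(r_f, beta = 1,   r_m)   # a beta of one gives the market return
capm_expected(r_f, beta = 1.5, r_m)   # 0.02 + 1.5 * 0.06 = 0.11

# Reward-to-risk: the excess return per unit of beta equals the market
# risk premium (r_m - r_f) for any security on the SML.
(capm_expected(r_f, 1.5, r_m) - r_f) / 1.5
```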


2.4 Fama-French 3 Factor Model

E(r) = Rf + β1(Rm-Rf) + β2(SMB) + β3(HML) + α

Rf = Risk free rate
Rm = Return on the market
SMB = Small Minus Big (the returns of a portfolio of small stocks, ranked by market capitalisation, minus the returns of a portfolio of big stocks ranked on the same criterion)
HML = High Minus Low (the returns of a portfolio of stocks with a high book-to-market ratio minus the returns of a portfolio of stocks with a low book-to-market ratio)

The beta and alpha values are similar to the beta and alpha in the single index model but are generated from the multiple linear regression of stock returns on these factors. The factors are calculated from combinations of portfolios composed of ranked stocks (BtM ranking, Cap ranking) and available historical market data. The Fama-French three factor model explains over 90% of diversified portfolios' returns, compared with the average 70% given by the CAPM. The signs of the coefficients suggest that small cap and value portfolios have higher expected returns, and arguably higher expected risk, than large cap and growth portfolios. [1]

“Two easily measured variables, size and book-to-market equity, combine to capture the cross-sectional variation in average stock returns associated with market β...” [3]

The 3 factor model is therefore seen as a closer estimate of expected returns and can be used to give a better estimate of abnormal returns in event studies. Kenneth R. French has produced a data library [2]; although this cannot be accessed directly, it can be downloaded as comma separated values (csv) to be used within the application.
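The multiple regression behind the three factor model can be sketched in base R as follows. All numbers are synthetic and the variable names are assumptions; in practice the factor columns would be read from the csv files of the data library rather than typed in.

```r
# Synthetic daily factor data (illustrative values only).
r_f    <- rep(0.0001, 10)   # assumed constant risk free rate
mkt_rf <- c(0.010, -0.004, 0.006, -0.008, 0.003, 0.012, -0.006, 0.001, -0.002, 0.005)
smb    <- c(0.002, 0.001, -0.003, 0.004, -0.001, 0.000, 0.003, -0.002, 0.001, -0.004)
hml    <- c(-0.001, 0.003, 0.002, -0.002, 0.004, -0.003, 0.000, 0.001, -0.004, 0.002)

# Stock excess returns built from known loadings so the fit can be checked.
excess <- 0.0002 + 1.1 * mkt_rf + 0.4 * smb - 0.3 * hml

# Multiple linear regression of the stock's excess return on the three factors.
fit <- lm(excess ~ mkt_rf + smb + hml)
coef(fit)   # alpha (intercept) followed by beta1, beta2 and beta3

# Expected return for each day = risk free rate + fitted excess return.
expected <- r_f + fitted(fit)
```

Since the synthetic series contains no noise, the regression should recover the loadings it was built from; with real data the coefficients would of course carry estimation error.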

2.5 The conventional method of event study analysis.

The conventional method of carrying out an event study involves a number of laborious steps in getting and preparing the stock and market index data for analysis in a generic software package, for example Excel. The data then needs to be analysed and used further to produce charts or graphs. These steps take the majority of the time and need to be repeated for every stock that is to be analysed.


2.5.1 Getting stock and index data

There are a number of online resources for stock market data, and with this approach the user can choose which dataset they wish to use. This example uses Yahoo Finance at uk.finance.yahoo.com. The user visits the website and enters the stock ticker code, then clicks on the historical prices icon and enters the start and end dates for the requested time period. Once these details have been entered, the user has the option to download the data as a spreadsheet. This has to be repeated for the market index data, making sure that the same dates are entered into the date ranges. This manual input can lead to errors and mismatching of data. The individual downloaded spreadsheets then need to be amalgamated into one workbook to enable the user to carry out the statistical analysis explained in the following sections.

2.5.2 Data Cleansing

The data downloaded includes a number of columns that are not required for the analysis and

these can be removed from the sheets. The only columns required are the date and the stock /

index close price.

2.5.3 Normalising Returns

So that stocks of varying values can be compared, the data needs to be normalised and the normalised value used for comparison. The value used is the daily percentage return, which is calculated for both the stock and the index. The formula for the daily percentage return is:

(today’s price – yesterday’s price) / yesterday’s price

These returns are calculated for each trading day of the estimation period. This would be done using an Excel formula, but the formula would need to be copied and pasted for the columns required, which again could lead to errors.
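For comparison with the spreadsheet approach, the same calculation can be written once as a function and applied to any price column. The helper daily_returns is hypothetical, the prices are made up, and the sketch assumes the prices are ordered from oldest to newest.

```r
# Hypothetical helper: daily returns from a vector of close prices,
# assumed to be ordered from oldest to newest.
daily_returns <- function(prices) {
  yesterday <- prices[-length(prices)]   # every price except the last
  diff(prices) / yesterday               # (today - yesterday) / yesterday
}

stock_close <- c(100, 102, 101, 105)        # illustrative stock prices
index_close <- c(5000, 5050, 5000, 5100)    # illustrative index levels

daily_returns(stock_close)
daily_returns(index_close)
```

Each call returns one fewer value than the number of prices supplied, because the first day has no previous price to compare against.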

2.5.4 Regression analysis to model returns

Modelling the expected returns of the stock allows the user to determine what the realised abnormal returns are. One method, as mentioned, is to use regression analysis to calculate the single index model relationship between market returns and stock returns. This gives the formula for the single index model:

E(r) = α + β(Rm) + e

To do the regression in Excel, the user needs to select the column of dependent stock returns and the column of independent market returns, and Excel will generate the regression statistics within the spreadsheet.

2.5.5 Calculating expected and abnormal returns

With the estimated parameters α and β, the user can calculate the expected returns for the stock over the event period. The realised return of the market index is put into the single index model, which gives the expected return E(r) for the stock had no event occurred. The expected return is subtracted from the realised return for the stock to give the abnormal return (ARs) of the stock over the event period.

ARs = Rs – E(r)

If the event study has been carried out successfully, the abnormal returns should be close to zero over most of the event window and there should be a significant abnormal return around the event date.
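The calculation above can be illustrated with a short R sketch. The helper abnormal_returns is hypothetical, the α and β values are assumed to have come from an estimation window regression, and the return series are invented so that only the third day contains an event effect.

```r
# Hypothetical helper: abnormal returns from single index model estimates.
abnormal_returns <- function(stock_ret, market_ret, alpha, beta) {
  expected <- alpha + beta * market_ret   # E(r) had no event occurred
  stock_ret - expected                    # ARs = Rs - E(r)
}

alpha <- 0.0005   # assumed estimate from the estimation window
beta  <- 1.2      # assumed estimate from the estimation window

# Illustrative event-window returns with a jump in the stock on day 3.
market_ret <- c(0.004, -0.002, 0.001, 0.003, -0.001)
stock_ret  <- c(0.0053, -0.0019, 0.0517, 0.0041, -0.0007)

ar <- abnormal_returns(stock_ret, market_ret, alpha, beta)
round(ar, 4)
which.max(abs(ar))   # position of the largest abnormal return
```

Deciding whether the day 3 figure is statistically significant would need a further test against the variability of the estimation window residuals, which this sketch does not attempt.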

Generating a more complex model in this way, such as the Fama-French 3 factor model, would require many more steps and manual calculations to produce expected returns. As the need for external data increases, the use of Excel becomes too complex and a more automated solution is required.


3 State-of-The-Art

There are a number of commercial software packages that are either specifically designed for

event study analysis or aid in the calculations required to perform the event study. We will

look at two examples that address some of the issues raised in the last section regarding conventional event study analysis carried out in Excel.

3.1 Eventus

Eventus 'performs state-of-the-art event study estimation and testing using the CRSP stock database or other stock return data and provides fast event-oriented data retrieval from the CRSP stock database. Eventus software is available for Windows, Linux and Solaris' [4].

Some of the main features of Eventus include:

- Choice of market model, market adjusted, mean adjusted, or raw returns in event studies.
- Allows all the above benchmarks in the same event study run for comparison.
- New in version 7! Fama-French factor support.
- Directly accesses the basic stock indices from the user's copy of the CRSP stock files: equal- or value-weighted CRSP index with or without dividends, Standard & Poor's 500.

The main benefits of this package are that it accesses stock and index data directly from the CRSP data sets, which makes it more convenient for carrying out multiple event studies and minimises data transformation errors. The package is also specifically designed for event study, so there are many additional parameters that can be included in the user's analysis to strengthen the conclusions.

The package, however, needs to be installed on the user’s computer, and there may be version or performance issues related to the minimum specifications required for the software to run. The user may wish to use event study analysis only as a supporting argument for another subject and require only a quick analysis of the stock returns, in which case installing the full software package may not be necessary. The package also requires a fair academic knowledge of statistics and financial analysis and is not aimed at the novice user.


3.2 Recorded Future

From the Recorded Future website [5], the company’s key points are:

- Leverage the most comprehensive index of past, planned and speculative events on the web
- Validate investment hypotheses with real-time information
- Visualize sentiment and momentum on speculative events: M&A rumours, bankruptcies.
- Monitor for data flow on product & company events, analyst ratings, patent and clinical trial filings, etc.

The difference with this service is that it looks at multiple events over time and makes predictions about future changes in stock prices. The basis of its analysis is to cross-reference textual analysis from a number of sources. This includes large-scale analysis of online media flow, including blogs, Twitter, mainstream news and government filings. The user can therefore leverage statistically significant relationships with market outcomes of interest, and further optimize for trading strategies.

The service is very comprehensive and the results include predictions for a number of indicators, including momentum and sentiment around a specific event (Figure 1).

Figure 1. Recorded Future indicators

This is a very detailed and complete solution that can give a financial analyst indicators about any event. It is, however, an expensive system, paid for by monthly subscription. The analysis is a black box system and the user does not see the algorithms that the predictions are based on. The system is also web-based, and the company has recently released an API to enable developers to access the service directly from other programming languages and statistics packages. Access to the API is, however, even more expensive and starts at 2500 USD per month.


4 The EventR solution technology stack

This solution pulls together a number of technologies to provide an online client/server solution to event study analysis. The three main components are described in turn below.

4.1 R - open source statistical package

The goal of the project was to allow statistical analysis of financial data, and it made sense to integrate a packaged statistical solution to compute the statistics. The R environment proved to be the best choice as it was open source and free, already had a Java interface written for it to allow programs to communicate with it, and had many additional customisable packages written for it. R is a language and environment for statistical computing and graphics. R provides a wide variety of statistical (linear and non-linear modelling, classical statistical tests, time-series analysis, classification, clustering, ...) and graphical techniques, and is highly extensible. The S language is often the vehicle of choice for research in statistical methodology, and R provides an Open Source route to participation in that activity. [6]

R is an integrated suite of software facilities for data manipulation, calculation and graphical display. It includes

- an effective data handling and storage facility,
- a suite of operators for calculations on arrays, in particular matrices,
- a large, coherent, integrated collection of intermediate tools for data analysis,
- graphical facilities for data analysis and display either on-screen or on hard copy, and
- a well-developed, simple and effective programming language which includes conditionals, loops, user-defined recursive functions and input and output facilities.

Users can write extensions and package these up for others to use and extend further. The main packages that have been used in this solution are described below.

4.1.1 Quantmod

This is the main package that carries out most of the time-series analysis and charting [7]. It also handles the collection of the stock price and market price data by connecting to the selected source and parsing the data into a time-series object. The package was chosen because it is essentially a wrapper around R commands that are already available, allowing shorter, more succinct syntax for financial analysis. The package has a number of built-in functions and graphs, and the example graph below (Figure 2) can be created with only two lines of R code, which retrieve the data from Yahoo Finance and create the graph;

> getSymbols("MSFT")

> chartSeries(MSFT, subset='last 3 months')

What is produced is a composite graph containing a graphical representation of the daily

stock prices for the stock, in this case Microsoft, over the last three months and the volumes

traded.

Figure 2. Quantmod Graphic Example

4.1.2 Zoo

Another R package used within the solution is the zoo package [8]. It provides an R class with methods for totally ordered indexed observations, aimed particularly at irregular time series. It is useful within the EventR solution because the time-series data has gaps in it due to weekends and holidays when stocks are not traded. Zoo allows the time-series data to be plotted even when values are missing, for example on the non-trading days.

4.2 Rserve – TCP/IP interface to R

There are very few examples of connecting Java to R, and the few that are available are based around Rserve [9]. Rserve is a TCP/IP server which allows other programs to use facilities of R from various languages without the need to initialize R or link against the R library. Every connection has a separate workspace and working directory. Client-side implementations are available for popular languages such as C/C++ and Java. Rserve supports remote connection, authentication and file transfer. Typical use is to integrate an R back-end for computation of statistical models, plots etc. in other applications. Rserve offered the most available methods, and the benefit of a separate workspace and working directory for each connection made it the ideal choice for a multi-user system.

4.3 Apache Tomcat - Servlet container and web server

Apache Tomcat is an open-source Servlet container developed by the Apache Software Foundation (ASF). Tomcat implements the Java Servlet and the JavaServer Pages (JSP) specifications from Oracle Corporation, and provides a "pure Java" HTTP web server environment for Java code to run. It is one of the most widely used servlet containers and, as the solution does not require a full-blown Java application server, Tomcat was chosen because it is much easier to install and set up.

4.4 Client-side technology

To meet the requirement of a zero client installation solution, the decision was made to use a web based interface. A number of client-side technologies are included in the user interface, as described below.

4.4.1 HTML

HTML is used to generate the basic website layout of the interface and defines where the

main elements are positioned and where the user interacts with the system. There are 3 main

static web pages within the system.

The main index page that is the entry point for the system. - index.html

The quick guide Tutorial page. - tutorial.html

The full user guide page. - userguide.html


4.4.2 jQuery

jQuery is a cross-browser JavaScript library designed to simplify the client-side scripting of HTML. jQuery is free, open source software and its syntax is designed to make it easier to navigate a document, select DOM elements, create animations, handle events, and develop Ajax applications. There are many pre-developed, generic functions that can be customised to suit individual requirements, ranging from lightbox functions that display graphics in a popup window on the screen to a calendar widget that helps with date selection in a form's date field. The inclusion of jQuery enabled rapid development of advanced web based user interface elements. These jQuery components were pre-developed and pre-tested for errors, therefore giving confidence in the interface performance.

4.4.3 XML

XML is used to format the data sent from the system to the charts widget on the interface. R provides basic charting; however, the XML charts used are more customisable and require less disk space in a multi-user system.

4.5 Server-side technology

The technology used on the server side was the Java programming language. Java is an object oriented programming language and it is intended to let application developers "write once, run anywhere." Java is currently one of the most popular programming languages in use, particularly for client-server web applications. Java Servlets were written to generate the data objects and connect to the R environment. Once the Servlet has carried out the analysis via R, the system redirects to Java Server Pages to display the results. The solution attempts to follow the MVC design pattern (Figure 3). A controller Servlet receives the request from the web interface and interacts with R to analyse the data and create the data objects. Once these are complete, the controller forwards the request to the view Java Server Page. This is the page that creates the HTML and JavaScript to be returned to the user in the web browser. This design pattern helps separate interface design from business logic and facilitates cleaner, easier to read code.


Figure 3. MVC Design pattern

4.6 Client/Server Architecture

The method of delivery of the application is client/server. There is no installation required by the user as a web based client is used as the interface. Any updates or code changes only need to be applied to the server, allowing enhancements and upgrades to be carried out without the end user realising it. This solution does, however, require some additional programming to ensure that every user session is kept unique and there are no clashes in generated file names. Rserve maintains its own client session, including its own workspace and working directory, so every call of the program runs separately and there is no clash of variables and R objects. The Java Servlets, however, generate separate image files and XML files for the graphs, so there was a need to append a session variable to the file names so that the multi-user interface could distinguish one client's graphs from another's.
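The file naming idea described above can be sketched in a few lines of Java. This is only an illustration under stated assumptions: the class name SessionFileNames and the method forSession are hypothetical and are not taken from the EventR source, and the session ids shown are made up. In the servlet the id would come from the HTTP session.

```java
// Hypothetical helper: class and method names are illustrative only
// and do not come from the EventR source code.
final class SessionFileNames {

    // Builds "<baseName><sessionId>.<extension>", mirroring paths such as
    // chart_data/firstchart<session id>.xml that a JSP page can read back.
    static String forSession(String baseName, String sessionId, String extension) {
        if (baseName == null || sessionId == null || extension == null) {
            throw new IllegalArgumentException("baseName, sessionId and extension are required");
        }
        return baseName + sessionId + "." + extension;
    }

    public static void main(String[] args) {
        // Two made-up ids stand in for the values returned by HttpSession.getId().
        System.out.println(forSession("firstchart", "3F2A9C", "xml"));
        System.out.println(forSession("firstchart", "77B0E1", "xml"));
    }
}
```

Because the same id is available to both the servlet that writes the file and the page that reads it, two users analysing the same stock at the same time would still receive different file names.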

Figure 4. EventR Architecture Diagram

Figure 4 shows the separation of client, server, and data, and the technology behind each layer. The server layer running Tomcat, R, and Rserve could be split up so that R and Rserve run on a dedicated machine with more memory if large datasets were required or many users were using the system.

5 Program Implementation

The interactions between the different technologies are illustrated in the following diagram (Figure 5), followed by a detailed description of each of the main program files.

Figure 5. Program Flow

Control begins with a browser session on the client's web interface, where the user requests a new study by clicking on the link to newstudy.jsp. After entering the required data for the stock and event dates, the user submits the form to the web server and the HTML form posts the data to the controller servlet, Controller.jsp. This servlet then invokes the business logic for the application by calling the REvent.java servlet. At this point the link between Java and R is established, passing information back and forth by way of R commands sent from Java to R and results returned from R into Java objects. When control is passed to R via an R command, the R environment calls any further R packages needed to execute the command successfully. This includes any fetching of data from online resources, so the interaction is between R and the resource and not between Java and the resource. This distinction is important, and it is the power of R that makes the solution possible.

The main classes required for the implementation are;

5.1 Controller.java

This class is used to control the program flow between the business logic and the returned

graphical display. When the controller receives a request from the application it determines

the required operation that has to be carried out by reading the request parameters sent.

5.2 REvent.java

This is the main class that implements all the business logic and handles the R connections

for statistical analysis. The class imports a number of extra classes to help develop the solution and the two main imports that handle the R connections are

org.rosuda.REngine.Rserve.RConnection

org.rosuda.REngine.Rserve.RserveException

The first allows the Java code to connect to an R session via Rserve with the call;

RConnection c = new RConnection("127.0.0.1");

This creates an RConnection object, and the 127.0.0.1 parameter indicates that Rserve is running on the localhost. If, in a distributed environment, Rserve was running on a dedicated machine then the IP address of the dedicated server could be configured here. Once the RConnection object has been created, the Java code can interact with the R environment by calling the method parseAndEval. This method takes any string, passes it to the R environment and evaluates it within R. If the Java code does not require a returned object then parseAndEval can be called on its own without storing any result, e.g. c.parseAndEval("dev.off();");. If the command returns an object, it can be stored in a Java object for use directly within the Java code by using the REXP object, e.g. REXP alpha = c.parseAndEval("alpha<-coef(lm.r)[1];");. The special REXP class encapsulates any objects received from or sent to Rserve. If the type of the returned object is known in advance, accessor methods can be called to obtain the Java object corresponding to the R value. These R commands are then chained together to carry out the statistical analysis and generate the required charts and results.

Two solutions have been implemented for the charting and have their own benefits and

drawbacks.

5.2.1 PNG Image creation

There is no I/O API in REngine because it's actually more efficient to use R for this task and the standard graphs are detailed enough for the solution required. The process involved in transferring these graphs to the user is, however, slightly complex and would require large amounts of disk space under multi-user conditions. R generates the PNG images of the graphs required and stores these in the R session for this Rserve connection using xp = c.parseAndEval("try(png('returns.png'))"). This is valuable because at this stage graphs are kept separate between multi-user sessions and filenames do not need to be unique. The image then needs to be transferred from the R session working directory to a directory that is visible to the Tomcat server so the graph can be embedded within the web interface results. This is achieved using the java.awt.image.BufferedImage class, carrying out a binary read of the image and creating an Image object in Java that is then saved to disk within the Tomcat application folders. When the image is written to disk the Tomcat session id is appended to the filename so that different session images are kept unique and the multi-user interface can distinguish between users' graphs. The drawback is that it is more complicated to customise the generic graphs within R, as all the customisation commands have to be sent via Rserve.

The graphs, however, can be very detailed (Figure 6). This example chart shows the graphs produced for one example stock. It is split into three main areas, and each area is produced separately and then compiled into one graph using quantmod. The top area shows the candlestick chart for daily stock prices over the whole period analysed. The green candlesticks represent a positive return on that day's trading and orange represents a negative return. The middle section shows the index value for the corresponding day, which in this example is the S&P500. The bottom section shows the daily return for the stock only. This is a useful compilation graph that quickly shows trends in prices for the stock and the index.

The chart is generated by calling a quantmod chart and then appending the other two charts

to it using the addTA function.

# plot the candle stick chart but remove volume chart using TA=NULL
chartSeries(symChartAll, TA=NULL, theme="white", name="F");

# plot the index line graph at the bottom of the candle stick chart
plot(addTA(Cl(idxChartAll), legend="GSPC",col='blue',type='l'));

# plot the stocks daily returns at the bottom of the index graph
plot(addTA(drFAll, legend="F daily returns",col='red', type='h'));


Figure 6. R chart on interface

5.2.2 XML Charts

The second implementation of the charts generates only the XML data of the results and then draws the graph on the client side (Figure 7). This requires less R code and also less disk space. A Java method, getXMLChart, was implemented to generate the XML data from the R results, and this XML file was written to the Tomcat application folder with a session id, again to keep user sessions unique. If this solution was to be scaled up this would be the preferred implementation, as it would scale much better in terms of both resources and speed of execution. With less disk access and less data transfer the performance would be significantly better. It would, however, require a bit more work to convert the XML to a graphical representation on the client.
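As a rough illustration of turning results into XML chart data, the sketch below serialises one series of dated values. It is not the getXMLChart method from EventR: the class name ChartXmlSketch, the element names chart, series and point, and the attribute names are all assumptions made for this example, since the format expected by the chart widget is not given here.

```java
// Hypothetical sketch: the XML element and attribute names below are invented
// for illustration and are not the format used by the EventR chart widget.
final class ChartXmlSketch {

    // Serialises one named series of (date, value) points as a small XML document.
    static String toXml(String seriesName, String[] dates, double[] values) {
        if (dates.length != values.length) {
            throw new IllegalArgumentException("dates and values must have the same length");
        }
        StringBuilder xml = new StringBuilder();
        xml.append("<chart>\n");
        xml.append("  <series name=\"").append(escape(seriesName)).append("\">\n");
        for (int i = 0; i < dates.length; i++) {
            xml.append("    <point date=\"").append(escape(dates[i]))
               .append("\" value=\"").append(values[i]).append("\"/>\n");
        }
        xml.append("  </series>\n");
        xml.append("</chart>\n");
        return xml.toString();
    }

    // Escapes the characters that are not allowed inside an XML attribute value.
    static String escape(String s) {
        return s.replace("&", "&amp;").replace("<", "&lt;")
                .replace(">", "&gt;").replace("\"", "&quot;");
    }

    public static void main(String[] args) {
        System.out.print(toXml("CSCO daily returns",
                new String[] {"2011-03-04", "2011-03-07"},
                new double[] {0.5, -0.25}));
    }
}
```

Only the text of the series is written to disk, which is why this route needs less disk space than storing one PNG image per graph per session.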


Figure 7. XML Chart on Interface

5.3 Newstudy.jsp

This is a Java Server Page (JSP) that pulls together all the graphs into an interface to be displayed to the user, and also allows the user to start a new study by entering the parameters of the stock they wish to analyse. A JSP page has a different syntax from a Java Servlet and does not explicitly extend HttpServlet, but when Tomcat loads the application on start-up the JSP pages are converted to conventional Servlets within Tomcat. These generated files can be inspected but not changed, e.g. newstudy.jsp is converted on the fly to work/Catalina/localhost/uk.co.mylocalfarm.jsp/org/apache/jsp/newstudy_jsp.java and this is compiled to work/Catalina/localhost/uk.co.mylocalfarm.jsp/org/apache/jsp/newstudy_jsp.class. The JSP includes tags to access the Java objects created and also session variables to customise the interface to the individual study. The graphs that are unique to the individual study can be accessed by configuring the JSP to include the session id when reading the file names of the graphs, e.g. when getting the XML file for the charts the path to the XML is chart_data/firstchart<%=session.getId()%>.xml, and as the file was created with the session id this resolves to the correct XML file.


Figure 8. Class diagram

Figure 8 shows how the program class files extend from the common java libraries and how

at a lower level these are implemented. The javax.servlet and javax.servlet.http are both part

of the J2EE implementation and are commonly used in enterprise applications. There are

also a number of libraries used within the application and these are referenced throughout the

Java code.

TSNetCore.jar – Library including date analysis methods.

XMLLib.jar – Library including XML creation and manipulation methods. This is used to

produce the XML for the XMLGraphs

REngine.jar – Library to facilitate the interactions with the R environment.

RserveEngine.jar - Library to create the connection to Rserve.

5.4 User Guide

In order to use the EventR solution the user must know the stock code of the company and

the event date they wish to analyse. When the user accesses the website home page (Figure

9) they are given a brief overview of Event study and some example stocks/events at the bottom that they can click on to see an example study and the corresponding charts.


Figure 9. EventR Home Page

The user can then click on the 'New Study' tab at the top of the page, which loads the data entry screen to capture the data required for the analysis (Figure 10). The minimum data required is the event date, stock code, and the index required. When the user enters the event date, the interface dynamically populates the default dates for the event window and the estimation window using JavaScript. These defaults are based on a 60 day estimation window and an event window of 15 days either side of the event date. The user can override these defaults and set any period that they require. The user then picks which model they wish to use to estimate the expected returns. For more accurate analysis the Fama-French model should be used, although this does take marginally longer to execute. The interface defaults to the single factor model. Date entry is made simpler by the inclusion of a jQuery calendar widget (Figure 11), and with the use of the up and down arrows users can quickly skip months to select the correct date.
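The default window calculation can be illustrated with a short sketch, written here in Java rather than the JavaScript used by the interface. It rests on assumptions that are not confirmed by the text: the windows are counted in calendar days, the estimation window ends on the day before the event window starts, and it begins 60 days before that end date. The class name DefaultWindows is hypothetical. With an event date of 2011-03-07 these assumptions happen to give 2010-12-21 to 2011-02-19 and an event window ending 2011-03-22, which are dates that also appear in the R examples in section 7.

```java
import java.time.LocalDate;

// Hypothetical sketch of the default date calculation. Calendar days and the
// placement of the estimation window directly before the event window are
// assumptions, not details confirmed by the EventR source.
final class DefaultWindows {
    static final int EVENT_HALF_WIDTH_DAYS = 15;
    static final int ESTIMATION_DAYS = 60;

    final LocalDate estimationStart;
    final LocalDate estimationEnd;
    final LocalDate eventStart;
    final LocalDate eventEnd;

    DefaultWindows(LocalDate eventDate) {
        // Event window: 15 days either side of the event date.
        eventStart = eventDate.minusDays(EVENT_HALF_WIDTH_DAYS);
        eventEnd = eventDate.plusDays(EVENT_HALF_WIDTH_DAYS);
        // Estimation window: ends the day before the event window opens
        // and starts 60 days before that end date.
        estimationEnd = eventStart.minusDays(1);
        estimationStart = estimationEnd.minusDays(ESTIMATION_DAYS);
    }

    public static void main(String[] args) {
        DefaultWindows w = new DefaultWindows(LocalDate.of(2011, 3, 7));
        System.out.println("Estimation window: " + w.estimationStart + " to " + w.estimationEnd);
        System.out.println("Event window: " + w.eventStart + " to " + w.eventEnd);
    }
}
```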


Figure 10. New Study Screen

Figure 11. jQuery Calendar Widget

Once the correct data has been entered the user can click on 'Display' to initiate the event study analysis. Once the analysis has completed the user is presented with the graphs, charts and estimation parameters used for the analysis (Figure 12). The R graphs have been reduced in size to fit a standard computer monitor resolution and can be clicked on to display a larger graph using a jQuery lightbox implementation.

Figure 12. Returned Analysis

From the lightbox window (Figure 13) the user can cycle through all the graphs produced by

the analysis.

Figure 13. jQuery Lightbox

6 Application Deployment

To deploy the application to the Tomcat Servlet container, the files had to be packaged into a Web ARchive (WAR) file. Eclipse has an option to export the project as a WAR file, which makes this step simpler (Figure 14). Eclipse also asks where it should create the WAR file, and it can be configured to create the file in the required Tomcat folder. Before the WAR could be copied to the destination folder the previously deployed application had to be removed. This involved locating the Tomcat webapps folder and deleting both the old WAR file and the exploded WAR application folder. This became tedious after a while, and given more time a better way of deploying the application would have been investigated.

Figure 14. WAR Export from Eclipse

If Eclipse was not used as the IDE, there is a command line method of producing the WAR file using the Java jar command. The WAR file needs to be placed in the Tomcat webapps folder, which is checked at start-up for any new web applications. Tomcat expands these WAR files within its environment and enables access to the application at http://localhost:8080/(webapplicationname). The extra Java library files also need to be included in the WAR file to allow Tomcat to run the code. These are normally placed in the (webapplicationname)/WEB-INF/lib folder for the web application, but can be placed at a higher level in tomcat_home/lib, where they are visible to all web applications running on the Tomcat server. The Java files are compiled to class files and these are packaged into the WAR file at (webapplicationname)/WEB-INF/classes.


7 The EventR implementation of Event Study

As all the analysis for the event study is carried out using R, the descriptions here are of the R code passed to the R environment by the Java program. It is unnecessary to describe all the Java code, but it may be helpful to explain the main R calls and their function in the overall solution. All commands are customised at runtime with the data required for the current event study, so any R code shown here is just an example of one instance of the solution.

The program will first get the stock and index data for the full study window and store them

in R objects using;

getSymbols("^GSPC", from="2010-11-25", to="2011-02-24"); # fetch the S&P500 data

getSymbols("CSCO", from="2010-11-25", to="2011-02-24"); # fetch stock data e.g. Cisco

We then split the data into the different time windows using a number of calls similar to;

symChart <- CSCO['2010-12-21::2011-02-19']; # split stock data to estimation window
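Since the R commands are customised at runtime, the Java side has to assemble strings like the ones above from the user's input before passing them to R. The sketch below shows one way this could be done. It is an assumption about the approach, not the EventR code: the class RCommandBuilder and its methods are hypothetical, and the symbol check is an extra precaution added for this example so that arbitrary text is not pasted into an R command.

```java
import java.time.LocalDate;

// Hypothetical sketch: builds R command strings of the kind shown above.
// Class and method names are illustrative, not taken from EventR.
final class RCommandBuilder {

    // Produces e.g. getSymbols("CSCO", from="2010-11-25", to="2011-02-24");
    static String getSymbols(String symbol, LocalDate from, LocalDate to) {
        requireSafeSymbol(symbol);
        // LocalDate.toString() yields ISO dates such as 2010-11-25.
        return "getSymbols(\"" + symbol + "\", from=\"" + from + "\", to=\"" + to + "\");";
    }

    // Produces e.g. symChart <- CSCO['2010-12-21::2011-02-19'];
    static String subset(String target, String source, LocalDate start, LocalDate end) {
        return target + " <- " + source + "['" + start + "::" + end + "'];";
    }

    // Accepts only letters, digits, '^', '.' and '-' so that user input
    // cannot inject additional R code into the command string.
    private static void requireSafeSymbol(String symbol) {
        if (symbol == null || !symbol.matches("[\\^A-Za-z0-9.\\-]+")) {
            throw new IllegalArgumentException("unexpected characters in symbol: " + symbol);
        }
    }

    public static void main(String[] args) {
        System.out.println(getSymbols("^GSPC", LocalDate.of(2010, 11, 25), LocalDate.of(2011, 2, 24)));
        System.out.println(subset("symChart", "CSCO", LocalDate.of(2010, 12, 21), LocalDate.of(2011, 2, 19)));
    }
}
```

The resulting strings could then be handed to parseAndEval on the RConnection described in section 5.2.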

We can then calculate the daily returns for the estimation window for both the stock and the

index using methods from the quantmod package using;

dailyReturn(symChartAll);

dailyReturn(idxChartAll);

To calculate the estimation parameters alpha and beta we need to regress the stock returns on the index returns. In R we can call the linear regression command, pass in the variables created in the previous steps, and read alpha and beta from the results;

lm.r=lm(drCSCO ~ drGSPC); # regress stock returns on index returns

alpha<-coef(lm.r)[1]; # access alpha variable (intercept)

beta<-coef(lm.r)[2]; # access beta variable (slope)
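For readers who want to see what this regression computes, the sketch below works out the same two coefficients by ordinary least squares for a single regressor: beta is the covariance of the index and stock returns divided by the variance of the index returns, and alpha is the mean stock return minus beta times the mean index return. EventR itself delegates this to R's lm command. The Java class MarketModelFit is a hypothetical illustration and the numbers in main are made up.

```java
// Hypothetical sketch of single-regressor least squares. EventR performs
// this step in R with lm(); this class only illustrates the arithmetic.
final class MarketModelFit {
    final double alpha; // intercept
    final double beta;  // slope on the index returns

    private MarketModelFit(double alpha, double beta) {
        this.alpha = alpha;
        this.beta = beta;
    }

    // Regresses stockReturns (y) on indexReturns (x).
    static MarketModelFit fit(double[] indexReturns, double[] stockReturns) {
        int n = indexReturns.length;
        if (n != stockReturns.length || n < 2) {
            throw new IllegalArgumentException("need two equal-length series with at least 2 points");
        }
        double meanX = 0.0;
        double meanY = 0.0;
        for (int i = 0; i < n; i++) {
            meanX += indexReturns[i];
            meanY += stockReturns[i];
        }
        meanX /= n;
        meanY /= n;

        double sxy = 0.0; // sum of cross deviations
        double sxx = 0.0; // sum of squared deviations of x
        for (int i = 0; i < n; i++) {
            double dx = indexReturns[i] - meanX;
            sxy += dx * (stockReturns[i] - meanY);
            sxx += dx * dx;
        }
        if (sxx == 0.0) {
            throw new IllegalArgumentException("index returns must not be constant");
        }
        double beta = sxy / sxx;
        return new MarketModelFit(meanY - beta * meanX, beta);
    }

    public static void main(String[] args) {
        // Made-up data lying exactly on the line y = 0.5 + 2x.
        MarketModelFit f = fit(new double[] {1, 2, 3, 4}, new double[] {2.5, 4.5, 6.5, 8.5});
        System.out.println("alpha=" + f.alpha + " beta=" + f.beta);
    }
}
```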


At this point all the parameters required for the single factor model have been generated, and a piece of R code was written to create a time-series object containing the calculated expected returns;

n<-nrow(drCSCOEvent$daily.return);

rHat<-vector();

rHatDate<-vector();

for(i in 1:n){

rHat[i]<-(drGSPCEvent[i]*beta) + alpha;

rHatDate[i]<-index(drGSPCEvent[i]);

};

rHatZoo=zoo(rHat, as.Date(rHatDate));

If the user chooses to use the Fama-French model then the values for SMB, HML, and Rf are read from a local csv file that contains daily values for all the factors since 1963. These can be read in and parsed for analysis by calling;

tmp <- read.table("/home/marco/Documents/dissertation/fama-french-3factor.csv", sep = ",");

fama3 <- zoo(tmp[, 2:5], as.Date(as.character(tmp[, 1]), format = "%m/%d/%Y"));

colnames(fama3) <- c("mkt", "SMB", "HML", "RF");

famawindow <- window(fama3, start = as.Date("2010-12-21"), end = as.Date("2011-03-22"));

The Fama-French loading factors can then be estimated by amending the regression to include these variables in the lm command

lm.r=lm(drCSCO ~ fama3$mkt + fama3$SMB + fama3$HML);

alpha<-coef(lm.r)[1]; # access alpha variable

beta1<-coef(lm.r)[2]; # access market beta variable

beta2<-coef(lm.r)[3]; # access SMB beta variable

beta3<-coef(lm.r)[4]; # access HML beta variable

Now that we have all three factor betas, an additional routine was added to model the expected returns using the Fama-French estimations.

for(i in 1:n) {

rHat[i]<-famawindow$RF[i] + (famawindow$mkt[i]*beta1) + (famawindow$SMB[i]*beta2) + (famawindow$HML[i]*beta3) + alpha;

rHatDate[i]<-index(drGSPCEvent[i]);

};

rHatZoo=zoo(rHat, as.Date(rHatDate));

This builds the model of expected returns. The values in the array rHat are then subtracted from the actual stock returns to give the abnormal returns. These results can now be plotted for analysis.
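The arithmetic of this last step is small enough to show as a worked sketch. The Java class below is hypothetical and only mirrors the formulas in the R code above: the single factor expected return is alpha plus beta times the index return, the Fama-French expected return adds the risk-free rate and the three factor terms, and the abnormal return is the actual return minus the expected return. All input values in main are made up for illustration.

```java
// Hypothetical sketch mirroring the expected and abnormal return formulas
// used in the R code above. Names and sample values are illustrative only.
final class AbnormalReturns {

    // Single factor model: alpha + beta * index return.
    static double marketModelExpected(double alpha, double beta, double indexReturn) {
        return alpha + beta * indexReturn;
    }

    // Three factor model: RF + mkt*beta1 + SMB*beta2 + HML*beta3 + alpha.
    static double famaFrenchExpected(double alpha, double betaMkt, double betaSmb, double betaHml,
                                     double rf, double mkt, double smb, double hml) {
        return rf + mkt * betaMkt + smb * betaSmb + hml * betaHml + alpha;
    }

    // Abnormal return for each day: actual return minus expected return.
    static double[] abnormal(double[] actual, double[] expected) {
        if (actual.length != expected.length) {
            throw new IllegalArgumentException("series must have the same length");
        }
        double[] result = new double[actual.length];
        for (int i = 0; i < actual.length; i++) {
            result[i] = actual[i] - expected[i];
        }
        return result;
    }

    public static void main(String[] args) {
        double[] index = {0.01, -0.02};
        double[] stock = {0.02, -0.03};
        double[] expected = new double[index.length];
        for (int i = 0; i < index.length; i++) {
            expected[i] = marketModelExpected(0.001, 1.2, index[i]);
        }
        for (double ar : abnormal(stock, expected)) {
            System.out.println(ar);
        }
    }
}
```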


8 Testing

The program was tested from three different aspects: Java code testing, R testing, and financial analysis testing.

8.1 Java Testing

The Eclipse IDE was used to develop the system. It has real-time syntax checking, so many of the syntax errors were identified instantly and solved. Runtime errors were harder to detect and were reported to the Tomcat console. When an error was returned it included the stack trace of the error, and only once the correct file and line number had been identified from the trace was it possible to return to the source in Eclipse to try to solve the issue. JSP pages were even harder, as any runtime errors were reported for the auto-generated Servlet and not the JSP page itself. System.out.println was used to write variables to the console window to debug the application and trace possible errors. Rserve has its own error object and throws an exception that could have been better used within the program to detect different error conditions.

8.2 R testing

The program was written such that every time an R command is sent to the R environment it

is also written to the Tomcat console window. This enabled testing of the R code directly in

R manually. When an event study is carried out the full R output is written to the Tomcat

console and can easily be copied and pasted into a standalone R session. Each line can be

evaluated and checked for errors. The execution of the commands directly in R produces better error reporting. The R calls can then be amended within the code to provide a better solution.

8.3 Statistical Testing

To test the results of the statistical analysis it was necessary to carry out the event study in Microsoft Excel and compare the estimation parameters. This involved the steps mentioned in 3.4, and the output of Excel was compared to the output of the program. Only rounding differences were identified, and the conclusion was that the solution was at least as accurate as the conventional Excel method.

9 Conclusion

9.1 Summary

In conclusion, this project has gone some way towards providing a quick and reliable solution to event study analysis and removing some of the laborious tasks of manually accessing online data resources. The technologies used allow for future enhancement and further remote development of the solution. The inclusion of open source R packages means that there is likely to be continual development of the packages used, or at least access to the underlying code to make any changes or developments deemed necessary. As the backbone of the solution is a robust, well tested statistical environment, the solution can be relied upon for its accuracy, and many of the single line commands issued from within the program are very powerful in their statistical context.

9.2 Evaluation

The project has achieved its main objective of creating a simple, easy to use event study analysis tool that reliably reads data directly from a number of online resources. As these online resources change their data formats, the open source packages are updated by their users to keep them working, providing longevity for the solution. The client/server architecture succeeded in producing a zero install solution for users who wish to run the application without having to check the minimum specification of their hardware or the speed of their internet connection, as it is the server that connects to the online resource and retrieves the data. This benefit of the client/server design could also be a curse. If many users were to use the system at once, they would be relying on the server's performance under multi-user conditions; however, this could be monitored and additional resources allocated as required. The graphs produced within the application contain all the information required to give an overview of the analysis and can be used in a report to support further analysis results. The web based interface provides multi-user access to the software, and good use of the JavaScript library jQuery has enhanced the user interface design. Use of JavaScript and jQuery has also kept the user interface to a minimum screen size so that it fits inside a standard user's screen resolution. A screen to display the raw data might have been useful for some users who wished to look at the underlying data; however, the research indicated that the raw data was mainly useful only for producing the statistics and graphs. The system also hides the calculations for the analysis from the end user, and this simplifies the interface design. There was discussion about including the R system calls in the interface to enable the user to monitor the R code used to produce the results; however, this again was not seen as necessary. The goal of the solution is to aid event study, not to expose the method used, so the user does not need to know whether the backend is generating the statistics in R, Excel, or any other statistical package. The solution provides two return generating models, and more can be added as they are developed. The Fama-French return generating model included is, however, a commonly used model and is seen as an accurate representation of stock returns. It is reported to explain over 90% of the returns of diversified portfolios, a higher proportion than the CAPM model.

Deploying the application became a tedious task, and even a small change required multiple steps before the application could be re-tested. The development should have been extended to include a deployment framework or tool to aid this process. Tools are available, including Apache Ant and Maven. These involve writing build description files that map where each file is to be deployed on the server and make sure dependencies are included when copied to the server. Another possibility would have been to use an installer for the web application. There are a number available; NSIS from Nullsoft [11] would have been a good candidate as it is free and open source, keeping to the principle of producing a free solution. With NSIS it would have been possible to script the process of deleting all the necessary files and folders and then copying the updated application onto the server. NSIS is available for MS Windows, and the source can be compiled for Linux and other non-Windows systems. There was not enough time to complete this for this project.

9.3 Future Work

Future work would be to include more models for returns and to include GARCH terms to improve the return generating model. It would also be better if a comma separated set of stock codes could be supplied, so that multiple stocks could be analysed over the event period to give a cross section analysis of representative stocks. This would be a relatively easy amendment, as the analysis for multiple stocks is a replication of the analysis of one stock. The list of stocks could be parsed and split into the individual stock codes. If the solution were also to capture some metadata about each analysis and store significant results in a database, then the database could be mined to make even better predictions about future events. Similar analyses could be grouped and analysed further to expose trends or similarities under similar event conditions that further enhance the understanding of stock returns. Recorded Future [5] does this type of analysis for a number of sectors including finance, and this is an area that could be developed further into a commercial solution provided to financial analysts.

References

[1] Fama-French three factor model on Wikipedia, http://en.wikipedia.org/wiki/Fama%E2%80%93French_three-factor_model, 2nd July 2011

[2] Fama-French data library, Tuck School of Business, Dartmouth, http://mba.tuck.dartmouth.edu/pages/faculty/ken.french/data_library.html, 4th Aug 2011

[3] Fama, Eugene F.; French, Kenneth R. (1992). "The Cross-Section of Expected Stock Returns". Journal of Finance 47 (2): 427–465

[4] Eventus website, http://www.eventstudy.com, 12th Aug 2011

[5] Recorded Future website, http://www.recordedfuture.com, 15th Aug 2011

[6] R project website, http://www.r-project.org/, 3rd July 2011

[7] Quantmod R package website, http://www.quantmod.com/, 3rd July 2011

[8] CRAN summary page for the Zoo package, http://zoo.r-forge.r-project.org/, 18th July 2011

[9] Department of Computer Oriented Statistics and Data Analysis, Augsburg University, http://rosuda.org/Rserve/, 12th July 2011

[10] McWilliams, Abagail; Siegel, Donald (1997). "Event Studies in Management Research: Theoretical and Empirical Issues". The Academy of Management Journal 40 (3): 626–657

[11] Nullsoft NSIS installer website, http://nsis.sourceforge.net/Main_Page, 29th Sept 2011


Appendix 1 Installation Guide

As no client-side installation is required, this guide explains the software and steps required to set up the server-side technology. These notes are for a Linux installation; however, all the software required has MS Windows versions.

Tomcat Installation

Download the Tomcat binary from tomcat.apache.org, e.g. apache-tomcat-6.0.18.tar.gz

Expand the compressed file from within the directory where Tomcat is to be installed (the start command below assumes /usr/local/tomcat):

tar zxvf /tmp/apache-tomcat-6.0.18.tar.gz

Make sure the JAVA_HOME and PATH environment variables are set.

Start Tomcat from a terminal window:

/usr/local/tomcat/apache-tomcat-6.0.18/bin/catalina.sh run

Now verify that Tomcat started successfully by opening the URL http://localhost:8080 (port 8080 is the default port used by Tomcat).
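The check on the Tomcat start page can also be scripted rather than done in a browser. The following is a minimal Java sketch using only the standard library; the class name TomcatCheck and the two-second timeouts are assumptions for illustration and are not part of the project.

```java
import java.io.IOException;
import java.net.HttpURLConnection;
import java.net.URI;

// Hypothetical helper for checking a Tomcat installation; not project code.
class TomcatCheck {

    // Returns true if an HTTP GET on the given URL answers with status 200.
    static boolean isUp(String url) {
        try {
            HttpURLConnection conn =
                    (HttpURLConnection) URI.create(url).toURL().openConnection();
            conn.setConnectTimeout(2000); // assumed timeout, in milliseconds
            conn.setReadTimeout(2000);
            conn.setRequestMethod("GET");
            int status = conn.getResponseCode();
            conn.disconnect();
            return status == HttpURLConnection.HTTP_OK;
        } catch (IOException | IllegalArgumentException e) {
            // Connection refused, timeout, or a malformed URL.
            return false;
        }
    }

    public static void main(String[] args) {
        // Defaults to the address used in this guide.
        String url = args.length > 0 ? args[0] : "http://localhost:8080";
        System.out.println(url + (isUp(url) ? " is responding" : " is not responding"));
    }
}
```

Running the class with no arguments checks http://localhost:8080; a different address can be passed as the first argument if Tomcat has been configured to use another port.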

R Installation

R is available in the distribution's software repositories; locate and install the r-base package. Its dependencies will also be installed as required. Once the installation is complete, R can be started from a terminal window by typing 'R'.

Rserve Installation

As Rserve is an R package, it is installed from within the R environment. Once you have launched R using the steps above, type the following at the R prompt:

install.packages('Rserve')

This installs the Rserve package and only has to be done once.

To start Rserve, first load the Rserve library and then start the server by typing the following at the R prompt:

library(Rserve) # load the library

Rserve() # start Rserve

The server is now able to accept connections to R via Rserve.
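To confirm that Rserve is accepting connections before deploying the web application, a plain TCP connection test is enough. The sketch below assumes Rserve is listening on its default port, 6311, on the same machine; the class name RserveCheck and the timeout value are hypothetical. It only tests that the port is open and does not speak the Rserve protocol or need the Rserve Java client library.

```java
import java.io.IOException;
import java.net.InetSocketAddress;
import java.net.Socket;

// Hypothetical helper for checking that Rserve is reachable; not project code.
class RserveCheck {

    // Rserve's default TCP port; change this if Rserve was started on another port.
    static final int DEFAULT_RSERVE_PORT = 6311;

    // Returns true if a TCP connection to host:port succeeds within the timeout.
    static boolean isListening(String host, int port, int timeoutMillis) {
        try (Socket socket = new Socket()) {
            socket.connect(new InetSocketAddress(host, port), timeoutMillis);
            return true;
        } catch (IOException e) {
            return false; // refused, unreachable, or timed out
        }
    }

    public static void main(String[] args) {
        boolean up = isListening("localhost", DEFAULT_RSERVE_PORT, 1000);
        System.out.println("Rserve on port " + DEFAULT_RSERVE_PORT
                + (up ? " is accepting connections" : " is not reachable"));
    }
}
```

If the check fails, make sure the R session in which Rserve() was called started the server without error.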
