Memory Management in VxWorks


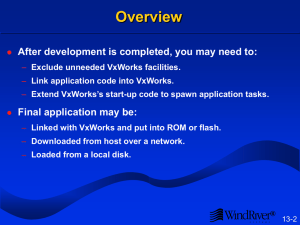

An Overview of the VxWorks Real-Time Operating System (RTOS)

Peter Williams and Katrina Kowalczuk

Abstract

This paper first provides an overview of the VxWorks real-time operating system. We focus our discussion on how VxWorks handles power, process, and memory management. Information about the applications VxWorks supports is also provided.

Introduction

A real-time operating system (RTOS) is an operating system developed specifically for use in real-time situations, most typically in embedded applications. Embedded systems are often not recognisable as computers; instead they exist within objects that are present throughout our day-to-day lives. Real-time and embedded systems often operate within constrained environments where processing power and memory are limited, and they often have to meet strict deadlines where performance is critical to the operation of the system.

VxWorks is an example of a real-time operating system, and it has a good track record as the basis of many complex systems. These systems span some 30 million devices, ranging from network equipment, automotive and aerospace systems and life-critical medical systems to space exploration.

VxWorks was developed from an older operating system, VRTX. VRTX was not a fully workable operating system on its own, so Wind River acquired the rights to resell VRTX and developed it into their own workable operating system, VxWorks (some say the name means "VRTX now works"). Wind River later developed a new kernel for VxWorks and replaced the VRTX kernel with it. This enabled VxWorks to become one of the leading operating systems for real-time applications.

Memory Management in VxWorks

The VxWorks memory management system does not use swapping or paging. Memory is allocated directly within the physical address space, and data is never swapped in and out of that space to work around memory constraints.
VxWorks assumes that there is enough physical memory available to run its kernel and the applications that will run on the operating system. Therefore VxWorks does not directly support virtual memory.¹

The amount of memory available to a VxWorks system depends on the platform's hardware and on the constraints imposed by the memory management unit (MMU). This amount is usually determined dynamically by the platform, depending on how much memory is fitted, but on some architectures it is a hard-coded value. The routine sysMemTop() returns the address of the top of the memory available to the operating system for the current session.

¹ An extra virtual memory support component, the VxVMI Option, provides an architecture-independent interface to the MMU. It is packaged separately as an add-on.

[Figure: VxWorks memory layout. Labels, from the top of memory down: 0xFFF…, Dynamic Partition, Dynamic Partition, System Memory Pool, Kernel, 0.]

The routines that provide an interface to the system-level functions (most of the kernel) are loaded into system memory from ROM and linked together into one module that cannot be relocated. This section of the operating system is loaded into the bottom of memory, starting at address 0. The rest of the available memory is known as the System Memory Pool; it is essentially the heap available to the application developer, managed by the dynamic memory routines.

In most cases the VxWorks memory model is flat, and the operating system is limited only by the available physical memory. When memory needs to be allocated, programs call the ANSI C function malloc(), which in VxWorks uses a first-fit algorithm: it searches for the first free block large enough for the request, splits off the amount required, and leaves the remainder free. When the memory is no longer needed, it is released with the ANSI C function free().
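To make the first-fit behaviour concrete, the following is a toy model in C. It is a sketch under stated assumptions, not the VxWorks memory library: the names Heap, heap_alloc, heap_free, largest_free and total_free are hypothetical, the "memory" is a pool of abstract units tracked as offsets, and freed blocks are assumed to merge with free neighbours.

```c
#include <assert.h>
#include <stddef.h>

/* Toy first-fit heap (hypothetical, not VxWorks code). Blocks are kept
 * in address order in a fixed array; each records offset, size and state. */
#define MAX_BLOCKS 64

typedef struct { size_t offset, size; int is_free; } Block;
typedef struct { Block blocks[MAX_BLOCKS]; size_t count; } Heap;

/* Start with one free block covering the whole pool. */
void heap_init(Heap *h, size_t pool_size) {
    h->blocks[0] = (Block){0, pool_size, 1};
    h->count = 1;
}

/* First fit: take the first free block that is large enough, split off
 * the requested amount, and leave the remainder as a smaller free block.
 * Returns the offset of the allocation, or -1 if no single block fits. */
long heap_alloc(Heap *h, size_t n) {
    for (size_t i = 0; i < h->count; i++) {
        Block *b = &h->blocks[i];
        if (!b->is_free || b->size < n)
            continue;
        if (b->size > n && h->count < MAX_BLOCKS) {
            /* Shift later blocks up to make room for the remainder. */
            for (size_t j = h->count; j > i + 1; j--)
                h->blocks[j] = h->blocks[j - 1];
            h->blocks[i + 1] = (Block){b->offset + n, b->size - n, 1};
            h->count++;
            b->size = n;
        } /* if the table is full, the whole block is handed out */
        b->is_free = 0;
        return (long)b->offset;
    }
    return -1;
}

static void remove_block(Heap *h, size_t i) {
    for (size_t j = i; j + 1 < h->count; j++)
        h->blocks[j] = h->blocks[j + 1];
    h->count--;
}

/* Free the block at `offset`, merging it with free neighbours. */
void heap_free(Heap *h, long offset) {
    size_t i = 0;
    while (i < h->count && (long)h->blocks[i].offset != offset)
        i++;
    assert(i < h->count && !h->blocks[i].is_free);
    h->blocks[i].is_free = 1;
    if (i + 1 < h->count && h->blocks[i + 1].is_free) {
        h->blocks[i].size += h->blocks[i + 1].size;
        remove_block(h, i + 1);
    }
    if (i > 0 && h->blocks[i - 1].is_free) {
        h->blocks[i - 1].size += h->blocks[i].size;
        remove_block(h, i);
    }
}

size_t largest_free(const Heap *h) {
    size_t best = 0;
    for (size_t i = 0; i < h->count; i++)
        if (h->blocks[i].is_free && h->blocks[i].size > best)
            best = h->blocks[i].size;
    return best;
}

size_t total_free(const Heap *h) {
    size_t sum = 0;
    for (size_t i = 0; i < h->count; i++)
        if (h->blocks[i].is_free)
            sum += h->blocks[i].size;
    return sum;
}
```

For example, with a 100-unit pool, allocate ten 10-unit blocks and then free every other one. Half of the pool (50 units) is free, yet heap_alloc(&h, 20) returns -1 because no single free gap is larger than 10 units, while a 5-unit request succeeds at offset 0 by splitting the first gap. Real allocators usually keep block headers inside the memory itself; the separate table here only keeps the sketch short.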
Using the first-fit method can fragment memory very quickly. If many differently sized blocks are allocated and freed, the free memory ends up split into many small gaps, and an allocation request may fail even though the total free memory would be sufficient. Searching through many fragments also makes each allocation slower. To reduce fragmentation, it is advisable to allocate dynamic partitions at system initialisation, sized according to the tasks and their data. Another way to reduce fragmentation of the system memory as a whole is to divide memory into two or more partitions at start-up and have the offending tasks allocate from the other partitions.

Processes in VxWorks

A feature of a real-time operating system such as VxWorks is that the programmer developing applications can control process scheduling. The programmer decides how and when processes run, rather than leaving the operating system to work it out. In terms of other operating systems, a VxWorks task is similar to a thread, except that VxWorks has a single process in which all the threads run. In an RTOS these tasks must appear to run concurrently, which cannot literally happen on a single CPU, so careful scheduling algorithms must be enforced.

Pre-emptive Task Scheduling

VxWorks schedules processes using priority-based pre-emptive scheduling. Each task is assigned a priority from 0 to 255, with 0 the highest priority and 255 the lowest. The CPU is given to the highest priority task that is ready to run, and this task runs until it completes, waits, or is pre-empted by a task with a higher priority. The programmer should take several factors into consideration when assigning priorities to processes:

1. Priority is a method of ensuring continuity of tasks in the system and preventing a specific task from hogging the CPU while other important tasks are waiting. Priority levels are therefore not a method of synchronisation (making tasks run in a specific order).
2. Tasks that have to meet strict deadlines should be assigned a high priority, and the number of high priority tasks should be kept to a minimum. If there are many high priority tasks, they will all compete for CPU time and the lower priority tasks may be starved.
3. Tasks that are CPU intensive should be assigned a lower priority. If these tasks need to meet deadlines and therefore need a higher priority, task locking can be used.
4. VxWorks kernel tasks should have higher priorities than application tasks in order to ensure continuity of the core operating system processes.

Round Robin Scheduling

There is another, optional, scheduling method that applies when several tasks of equal priority compete for CPU time. In round-robin scheduling each task is given a small slice of CPU time, called a quantum, after which the next task of the same priority runs for its quantum. VxWorks continues time-slicing among these tasks until they complete, and then passes the CPU to the next task (or set of tasks with the same priority). Round-robin scheduling does not change the pre-emptive rule, however: if higher priority tasks consume all of the CPU time, the lower priority tasks will never be able to run.

Inter-process Communication (IPC) with VxWorks

As with processes in all operating systems, tasks in VxWorks often need to communicate with one another; this is known as IPC. A typical synchronisation problem encountered when processes communicate is a race condition, where the result of operations on shared data depends on the precise timing of the tasks involved, and an unlucky timing produces a wrong result.
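The race condition can be reproduced deterministically with a small sketch in plain C. This is not VxWorks code: the names Task, task_read, task_modify, task_write, run_serial and run_interleaved are hypothetical, and the context switch is simulated by calling the steps of two "tasks" in a fixed order rather than by real pre-emption.

```c
#include <assert.h>

/* Each hypothetical "task" increments a shared counter with a non-atomic
 * read-modify-write: copy into a private variable, add one, store back. */
typedef struct {
    int local;   /* the task's private copy of the shared value */
} Task;

static void task_read(Task *t, const int *shared)  { t->local = *shared; }
static void task_modify(Task *t)                   { t->local += 1; }
static void task_write(const Task *t, int *shared) { *shared = t->local; }

/* Both increments run one after the other, with no overlap. */
int run_serial(void) {
    int shared = 0;
    Task a = {0}, b = {0};
    task_read(&a, &shared); task_modify(&a); task_write(&a, &shared);
    task_read(&b, &shared); task_modify(&b); task_write(&b, &shared);
    return shared;   /* both updates survive */
}

/* Task B is switched in after A has read the counter but before A writes. */
int run_interleaved(void) {
    int shared = 0;
    Task a = {0}, b = {0};
    task_read(&a, &shared);                    /* A reads 0 */
    task_read(&b, &shared);                    /* B pre-empts A, also reads 0 */
    task_modify(&b); task_write(&b, &shared);  /* B stores 1 */
    task_modify(&a); task_write(&a, &shared);  /* A stores 1: B's update is lost */
    return shared;
}
```

run_serial() returns 2 and run_interleaved() returns 1. Treating the read, modify and write steps as a critical region, and holding a semaphore for its whole duration, would stop task B from reading the counter while task A is part-way through its update.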
The OS must avoid these race conditions by achieving mutual exclusion, ensuring that no task accesses shared data while another task is in its critical region. VxWorks uses semaphores to achieve mutual exclusion. A semaphore is essentially an integer variable that counts available resources, pending events, or anything else that is shared. There are three types of semaphore available to the VxWorks programmer:

1. The binary semaphore has only two states, full (available) or empty (unavailable). A task that takes (semTake()) an empty semaphore blocks until it is given (semGive()); taking a full semaphore succeeds immediately and leaves it empty. Binary semaphores are typically used to synchronise tasks with Interrupt Service Routines (ISRs). They can provide basic mutual exclusion, but lack the extra protection of the mutex semaphore.
2. The counting semaphore keeps a count that each give increments and each take decrements; a task that tries to take the semaphore while the count is 0 blocks until it is given. It is generally used where there is a fixed number of resources but multiple requests for them.
3. The mutual exclusion (mutex) semaphore is a binary semaphore that has been extended further to ensure that the critical region of a task is not interfered with. A key difference is that a mutex semaphore can protect against priority inversion (through priority inheritance, when it is created with the SEM_INVERSION_SAFE option), a problem that can arise with a priority pre-emptive scheduler, as explained below.

Shared memory (e.g. in the form of a global information bus) is also used to pass information between tasks, as are message queues, which tasks use to send messages to one another.

Priority Inversion

A quick note on priority inversion, which, although rare, can mean success or failure for a real-time operating system. Consider a low priority task that holds a shared resource required by a high priority task.
Pre-emptive scheduling dictates that the high priority task should run, but it is blocked until the low priority task releases the resource. This effectively inverts the priorities of the two tasks. If the higher priority process is starved of the resource, the system could hang, or corrective measures such as a system reset could be triggered.

VxWorks experienced a real problem with priority inversion when the operating system was used on NASA's Mars Pathfinder lander in 1997. A high priority information bus management task, which ran frequently, moved shared data around the system; each access was synchronised by locking a mutex semaphore. A low priority meteorological data gathering task published data to the information bus, again acquiring the mutex while writing. Unfortunately, an interrupt would sometimes cause the medium priority communications task to be scheduled. If this happened while the high priority task was blocked waiting for the low priority task to release the mutex, the communications task would pre-empt the low priority task and thereby prevent the high priority task from running at all. The resulting timeout was detected by a watchdog timer, which reset the system; these resets appeared random to the engineers back on Earth. The mutex had been created with priority inheritance disabled, and the problem was fixed by enabling it.

[Figure elements: Information Bus, Mutex Semaphore, High Priority Bus Management Task, Medium Priority Communications Task, Low Priority Meteorological Publishing Task; numbered arrows 1 to 4 correspond to the steps below.]

1. The low priority meteorological task is scheduled and acquires the mutex.
2. The high priority bus management task blocks, waiting for the meteorological task.
3. The medium priority communications task runs and pre-empts the low priority meteorological task.
4. Because the high priority bus task is blocked behind the meteorological task, it is effectively forgotten, which inverts its priority; the safety measures then time out.

Figure demonstrating the priority inversion problem in the Pathfinder Lander.
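The Pathfinder scenario can be sketched as a toy tick-by-tick simulation in C that applies the priority pre-emptive rule (lower number means higher priority, as in VxWorks) with and without priority inheritance. This is an illustration under stated assumptions, not the VxWorks scheduler: the function simulate, the SimTask type, and all priorities and task lengths are hypothetical, and each task is assumed to hold the mutex for all of its work if it needs the mutex at all.

```c
#include <assert.h>

/* Hypothetical model: one CPU, one mutex, three tasks, one tick per step. */
enum { NTASKS = 3, NONE = -1, LOW = 0, HIGH = 1, MEDIUM = 2 };

typedef struct {
    const char *name;
    int prio;        /* base priority: 0 highest, 255 lowest */
    int arrival;     /* tick at which the task becomes ready */
    int work;        /* ticks of CPU time still needed */
    int needs_mutex; /* 1 if all of its work is inside the critical region */
    int done;
} SimTask;

/* Returns the tick at which the high priority task finishes. If `inherit`
 * is non-zero, the mutex owner runs at the priority of the highest
 * priority task blocked on the mutex (priority inheritance). */
int simulate(int inherit) {
    SimTask t[NTASKS] = {
        [LOW]    = {"meteo (low)",    200, 0, 3, 1, 0},
        [HIGH]   = {"bus (high)",      50, 1, 1, 1, 0},
        [MEDIUM] = {"comms (medium)", 100, 2, 5, 0, 0},
    };
    int owner = NONE;   /* index of the task holding the mutex */

    for (int tick = 0; tick < 100; tick++) {
        /* Highest priority among tasks currently blocked on the mutex. */
        int waiter_prio = 256;
        for (int i = 0; i < NTASKS; i++)
            if (!t[i].done && tick >= t[i].arrival && t[i].needs_mutex &&
                owner != NONE && owner != i && t[i].prio < waiter_prio)
                waiter_prio = t[i].prio;

        /* Pre-emptive rule: run the ready, unblocked task with the best
         * (numerically lowest) effective priority. */
        int run = NONE, best = 256;
        for (int i = 0; i < NTASKS; i++) {
            if (t[i].done || tick < t[i].arrival)
                continue;
            if (t[i].needs_mutex && owner != NONE && owner != i)
                continue;                        /* blocked on the mutex */
            int eff = t[i].prio;
            if (inherit && i == owner && waiter_prio < eff)
                eff = waiter_prio;               /* inherited priority */
            if (eff < best) { best = eff; run = i; }
        }
        if (run == NONE)
            continue;                            /* CPU idle this tick */

        if (t[run].needs_mutex && owner == NONE)
            owner = run;                         /* take the mutex */
        /* A task running its critical region must own the mutex. */
        assert(!t[run].needs_mutex || owner == run);

        if (--t[run].work == 0) {
            t[run].done = 1;
            if (owner == run)
                owner = NONE;                    /* give the mutex back */
            if (run == HIGH)
                return tick + 1;
        }
    }
    return -1;   /* high priority task never finished */
}
```

With these made-up timings, simulate(0) returns 9: the medium priority task runs for five ticks while the high priority task waits behind the low priority mutex holder. simulate(1) returns 4: the low priority task inherits priority 50, finishes its critical region before the medium priority task can run, and releases the mutex to the bus task.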
Power Management

Operating systems have to manage the power consumption of the resources within the system they run. The operating system controls all of the devices in the system and therefore has to decide which ones need to be switched on and operating and which are not currently needed and should be sleeping or switched off. The operating system may shut down a device that will be needed again soon, causing a delay while the device restarts; equally, a device may be kept on too long, wasting power. The only way around this issue is to use algorithms that let the operating system decide which devices to shut down and under what conditions they should be powered up again. Examples of such management are slowing disks down during periods of inactivity and reducing CPU power during idle periods; this is called dynamic power management. The problem now, however, is that processors have become very power efficient and are therefore no longer likely to be the primary consumers of energy in a system. This form of dynamic power management is therefore becoming outdated, and operating systems will need a new method of power management to tackle the system devices that still consume energy inefficiently.

Footnote on Power Management

The information above applies to most operating systems rather than to VxWorks specifically. Dynamic device power management is nevertheless essential in an RTOS, especially in an embedded system that, for instance, runs on batteries or needs to limit its heat output. Usually the operating system uses power management routines provided by the platform architecture, for instance the ACPI interfaces on Intel-based PCs. VxWorks can make use of the built-in interfaces on specific PowerPC architectures.
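The trade-off between switching a device off too early and leaving it on too long can be illustrated with a fixed-timeout policy, one common and simple heuristic for dynamic power management. This is a general sketch rather than anything VxWorks-specific: the function fixed_timeout, the PolicyResult type and its cost model (ticks spent powered on while idle, and the number of wake-ups, each of which implies a restart delay) are hypothetical.

```c
#include <assert.h>

/* Hypothetical cost model for one device under a fixed-timeout policy. */
typedef struct {
    int idle_on_time;  /* ticks the device stayed powered on while idle */
    int wakeups;       /* times it had been switched off and had to restart */
} PolicyResult;

/* `req` holds n request times in ascending order. After serving a
 * request, the device stays on for up to `timeout` idle ticks and is
 * then switched off. A request arriving exactly at the timeout is
 * treated as finding the device still on. */
PolicyResult fixed_timeout(const int *req, int n, int timeout) {
    assert(n >= 0 && timeout >= 0);
    PolicyResult r = {0, 0};
    for (int i = 1; i < n; i++) {
        int gap = req[i] - req[i - 1];
        if (gap > timeout) {
            r.idle_on_time += timeout;  /* on until the timeout expires */
            r.wakeups++;                /* next request must wake it */
        } else {
            r.idle_on_time += gap;      /* stayed on for the whole gap */
        }
    }
    return r;
}
```

With requests at ticks 0, 2, 10, 11 and 30, a timeout of 5 gives 13 idle-on ticks and 2 wake-ups; a timeout of 1 cuts the idle-on time to 4 ticks but needs 3 wake-ups; a timeout of 20 never switches the device off (30 idle-on ticks, 0 wake-ups).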
Conclusion

VxWorks is a versatile real-time operating system that offers the developer a great deal of control over the synchronisation and scheduling of tasks. This is one of its stronger points, and in an RTOS it is essential for making sure that things happen when they should and do not interfere with one another, for example in a safety-critical system in aerospace or healthcare. Memory allocation is one of the weaker points of VxWorks, with fragmentation common because of the first-fit algorithm used to allocate memory. Although there are ways to work around fragmentation, we believe a facility to compact memory at a specific point would be helpful. This may bring its own problems, however, in finding the correct time to do it: if compaction takes time, it may interfere with the deadlines of critical tasks in the RTOS.

References

The VxWorks Cookbook. (2003). Retrieved April 18, 2005, from http://www.bluedonkey.org/cgibin/twiki/bin/view/Books/VxWorksCookBook
Daleby, A., & Ingstrom, K. (2002). Dynamic Memory Management in Hardware. Retrieved April 21, 2005, from http://www.idt.mdh.se/utbildning/exjobb/files/TR0142.pdf
What really happened on Mars? (1997). Retrieved April 27, 2005, from http://research.microsoft.com/~mbj/Mars_Pathfinder/Mars_Pathfinder.html
Wikipedia. (2005). VxWorks. Retrieved May 2, 2005, from http://en.wikipedia.org/wiki/VxWorks