LANGUAGE PRIMITIVE DATA STRUCTURES

How well do the integer related data types in modern computer

languages represent the mathematical concept of integer?

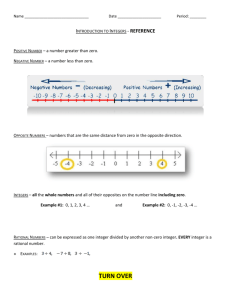

The mathematical concept of an INTEGER is an aleph null, or countably
infinite, set [1]. Integers are often represented by the integer number line. The
set of integers is closed under addition, subtraction, and multiplication.
Integer division can also be defined so that it is closed except, of course, in
the case of division by zero. The set of integers also has other well-defined
properties such as the existence of additive and multiplicative identities, and
an additive inverse. In fact, the set of integers is a commutative ring under the
operations of + and *, so you can see your Abstract Algebra class for more fun
along these lines.

In C++, int, long int (or long), short int (or short), and so forth store
integers in two’s complement form [2] as a specific number of bits [3]. For
example, an int is almost always stored as either 16 bits or 32 bits (2 or 4
bytes). Regardless of language, the range of an integer stored in N bits in
two’s complement is always:

$-2^{N-1}$ to $+2^{N-1}-1$

for 8 bits, this range is -128 to +127
for 16 bits, this range is -32,768 to +32,767 (≈ ±32K)
for 32 bits, this range is -2,147,483,648 to +2,147,483,647 (≈ ±2G)
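The range formula can be checked against the limits a compiler actually reports. The following is a minimal sketch, assuming a C++11 compiler that provides the fixed-width types in <cstdint>; the two function names are made up for this illustration and are valid only for 1 <= bits <= 63.

```cpp
#include <cstdint>
#include <limits>

// Smallest value of an N-bit two's complement integer: -2^(N-1).
// Illustrative helper; valid for 1 <= bits <= 63.
constexpr std::int64_t twos_complement_min(int bits) {
    return -(std::int64_t{1} << (bits - 1));
}

// Largest value of an N-bit two's complement integer: 2^(N-1) - 1.
constexpr std::int64_t twos_complement_max(int bits) {
    return (std::int64_t{1} << (bits - 1)) - 1;
}

// The formula agrees with what the library reports for 8, 16, and 32 bits.
static_assert(twos_complement_min(8) == std::numeric_limits<std::int8_t>::min(),
              "8-bit minimum should be -128");
static_assert(twos_complement_max(8) == std::numeric_limits<std::int8_t>::max(),
              "8-bit maximum should be +127");
static_assert(twos_complement_min(16) == std::numeric_limits<std::int16_t>::min(),
              "16-bit minimum should be -32768");
static_assert(twos_complement_max(32) == std::numeric_limits<std::int32_t>::max(),
              "32-bit maximum should be 2147483647");
```

Because the checks are static_assert statements, a mismatch would show up as a compile error rather than at run time.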

[1] Georg Cantor first characterized infinite sets using aleph. He conceived of an infinite number of
different infinities. Any set that can be placed in 1 to 1 correspondence with the set of integers is aleph null
(also called aleph naught). The uncountably infinite set of real numbers is aleph one if one assumes the
continuum hypothesis. I read somewhere that the set of functions R to R, where R is the set of real numbers,
is aleph two, and so on (this also depends on the generalized continuum hypothesis).

[2] You should have studied two’s complement in Discrete Math or Computer Organization. If you are not
familiar with this scheme, I can give you a reference.

[3] I could have said bytes actually, as the number of bits allocated to an integer is always a multiple of 8 in
modern computers. In C++, you can use the sizeof operator to determine the actual number of bytes
allocated to any data type.

Java supports a very similar set of integer data types, and calls them byte,
short, int, and long. Many languages also support unsigned integers. In C++,
they are called unsigned int, unsigned long, and unsigned short. The range of
integers for an N bit unsigned integer is $0$ to $2^N-1$. In C++, when you ask
for short or long integers, the compiler will decide whether or not you get
them: the standard guarantees only minimum sizes. In Java, you will get the
size requested, but support for short is spotty, and automatic conversions to
int will often cause compilation errors. For example, after short a = 3, b = 4;
the statement a++; will compile, but a = a + b; will not without converting to
a = (short)(a + b); This applies to a number of operations.
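The same promotion to int takes place in C++, although there the assignment back to a short is accepted without a cast. The sketch below, assuming a C++11 compiler, shows that the sum of two shorts has type int; the function name add_shorts is hypothetical.

```cpp
#include <type_traits>

// Adds two shorts and narrows the result back to short.
// Illustrative helper, not part of any library.
constexpr short add_shorts(short a, short b) {
    // Arithmetic on two shorts is carried out in int, so a + b is an int.
    static_assert(std::is_same<decltype(a + b), int>::value,
                  "short + short is promoted to int");
    // C++ would accept the narrowing silently; the cast documents the intent,
    // much as Java's (short)(a + b) does.
    return static_cast<short>(a + b);
}
```

With a = 3 and b = 4 as in the Java fragment, add_shorts(3, 4) yields 7.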

As long as no value computed or stored is outside the supported range,
the computer representation of integers is a (more or less) perfect
mirror to the mathematician’s “integer”. Note: in two’s complement, zero has
a sign bit of 0 and so is grouped with the positive values, whereas
mathematicians consider zero to be neither positive nor negative (both agree
that it is even). Integer overflow or underflow is the result when an operation
produces a value outside the supported range.

In C++, limits.h (also available as <climits>) contains predefined constants
representing the highest and lowest values for some of these data types. The
names of some of these constants are given below. Be advised, however, that it
is usually unwise to test against these values naively: an expression such as
a + b > INT_MAX has already overflowed before the comparison is made, and any
arithmetic involving one of these numbers can easily produce overflow or
underflow.

INT_MIN

INT_MAX

UINT_MAX

LONG_MIN

LONG_MAX

ULONG_MAX

SHRT_MIN

SHRT_MAX

USHRT_MAX
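One way to test against these limits without overflowing is to rearrange the comparison so that the arithmetic is applied to the limit rather than to the operands. This is a minimal sketch of that idea for int addition; the function name add_would_overflow is made up for the illustration.

```cpp
#include <climits>

// Returns true when a + b would fall outside [INT_MIN, INT_MAX].
// Neither INT_MAX - b (for b > 0) nor INT_MIN - b (for b < 0) can overflow,
// so the test itself is always safe to evaluate.
constexpr bool add_would_overflow(int a, int b) {
    return (b > 0 && a > INT_MAX - b) ||
           (b < 0 && a < INT_MIN - b);
}
```

A caller would check add_would_overflow(a, b) before computing a + b, and handle the failure case in whatever way suits the program.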

In Java, wrapper classes such as Integer, Long, and Short are

predefined for primitive types, and these wrapper classes have predefined

static constants such as: Integer.MAX_VALUE, Integer.MIN_VALUE,

Long.MAX_VALUE, etc.

How well do the floating-point related data types represent the
mathematical concept of a real number?

The set of REAL numbers is an uncountably infinite set (aleph one, if one
assumes the continuum hypothesis). The real number line is often used to
represent real numbers. Notice the fundamental difference between the set of
integers and the set of real numbers. The number of integers between any two
numbers on the integer number line is finite. The number of real numbers
between any two distinct numbers on the real number line is infinite, and not
only infinite but uncountably infinite.

In C++, float, double, and long double are used to represent real numbers.
Java supports only float and double. Computer representations of real
numbers are stored in a manner similar to scientific notation, except in
binary. These representations are called floating-point representations. There
are many variations, but the method described here is most common today as
it follows the IEEE 754 floating-point representation standard. For 64 bits, a
normalized number is stored as a single sign bit, an 11 bit exponent [4] (the
base is understood to be 2), and a 52 bit fraction/mantissa, preceded by one
leading bit that is understood to be 1 and is not stored. There is an
understood binary point between the understood 1 and the fraction.

Despite the best efforts of a number of people over the years, 32 bits is
simply unable to store floating-point numbers with the accuracy and range that
much numerical work needs (a float carries only about 7 significant decimal
digits). This is why C and C++ have traditionally favored the double
representation over float. For example, in the original C library none of the
functions in math.h [5] expect a float parameter, nor do they return a float
value (C99 later added float variants such as sqrtf, and C++ overloads the
<cmath> functions for float). Java is somewhat more orthogonal, as it overloads
the mathematical method names for most numeric types for some functions. For
example, abs, min, and max are defined for double, float, long and int, and
round is defined for double and float. Even so, sqrt, floor, and ceil are only
defined for double.

The exponent provides the range of numbers that can be represented. The 11
bit exponent in double gives a range of roughly $2^{\pm 1023}$. In base 10,
this is roughly $10^{\pm 308}$.

The fraction/mantissa provides the level of accuracy of the representation
(the number of significant digits). Normalization adjusts the binary point
until there is a single 1 to the left of the binary point. Thus, the mantissa is
greater than or equal to 1 and less than 2. This leftmost 1 is normally not
stored. The remaining 52 bits of the mantissa are stored and are usually called
the fraction for obvious reasons. The exponent is adjusted to get the mantissa
in the desired range. Such numbers are called normalized.

Some values such as ZERO cannot be normalized and some other values are
de-normalized for other purposes, such as to permit a closer approach to 0.0.

These 53 bits (1 not stored) in the mantissa provide 15 to 16 significant
digits in decimal.
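One way to see where the figure of 15 to 16 digits comes from: a 53 bit mantissa holds every whole number up to 2^53 = 9,007,199,254,740,992 exactly, and 2^53 + 1 is the first whole number it cannot hold. The sketch below assumes that double is the IEEE 754 64 bit type; the names TWO_TO_53 and survives_round_trip are made up for this illustration.

```cpp
#include <cstdint>
#include <limits>

// The sketch assumes a 53-bit significand, as in IEEE 754 double.
static_assert(std::numeric_limits<double>::digits == 53,
              "this sketch expects an IEEE 754 64-bit double");

constexpr std::int64_t TWO_TO_53 = std::int64_t{1} << 53;  // 9007199254740992

// True when converting n to double and back gives the same whole number,
// that is, when n is stored exactly.
constexpr bool survives_round_trip(std::int64_t n) {
    return static_cast<std::int64_t>(static_cast<double>(n)) == n;
}

static_assert(survives_round_trip(999999999999999),    // 15 digits: exact
              "every 15-digit whole number is stored exactly");
static_assert(survives_round_trip(TWO_TO_53),          // 16 digits: exact
              "2^53 is stored exactly");
static_assert(!survives_round_trip(TWO_TO_53 + 1),     // 16 digits: rounded
              "2^53 + 1 needs a 54th bit");
```

So every 15 digit whole number is exact, while 16 digit whole numbers are exact only when they fit in 53 bits or happen to end in enough zero bits.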

[4] Exponents are almost always stored in excess notation. Excess notation means that a bias is added to the
exponent to make it non-negative. For an 11 bit exponent, this bias is 1023. The reason is subtle. It allows two
64 bit floating-point numbers to be compared as if they were 64 bit integers, greatly speeding up real
number comparison and simplifying the design of an ALU.

[5] In the original C library, not even the functions that include an “f” to indicate “floating” such as atof,
modf, and fabs deal with float. They all return a double.

Thus, all whole numbers requiring no more than 15 significant digits (and many
that require 16) can be represented exactly in floating-point. Much larger
numbers whose binary form has many zeros in the lower bits are also stored
exactly. For example:

$10101000\ldots0_2$ (65 bits: 10101 followed by 60 zeros)
= $1.0101_2 \times 2^{64}$ (normalized to between 1 and 2)
mantissa = $1.0101_2$. The stored fraction will be $0101000\ldots0_2$ (52 bits).
exponent = 64
The stored exponent will be $64_{10} + 1023_{10} = 1087_{10}$
As 11 bits in binary, this will be $100\,0011\,1111_2$
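The normalization in the worked example can be mimicked in a few lines. This is a sketch only, assuming a C++14 compiler and a positive, finite, normalized input; it does not inspect the stored bits, it simply repeats the "adjust the binary point" step arithmetically. The names Normalized, normalize, and TWO_TO_64 are made up for the illustration.

```cpp
// Result of normalizing a positive value into the form mantissa * 2^exponent.
struct Normalized {
    double mantissa;         // always in the range [1, 2)
    int    exponent;         // the true (unbiased) power of 2
    int    stored_exponent;  // exponent + 1023, the excess-1023 form
};

// Halve or double x until exactly one 1 bit sits left of the binary point.
// Dividing or multiplying by 2 is exact, so no accuracy is lost here.
// Assumes x is positive, finite, and normalized.
constexpr Normalized normalize(double x) {
    int e = 0;
    while (x >= 2.0) { x /= 2.0; ++e; }
    while (x <  1.0) { x *= 2.0; --e; }
    return Normalized{x, e, e + 1023};
}

constexpr double TWO_TO_64 = 18446744073709551616.0;  // 2^64, exactly representable

// 1.0101 in binary is 1 + 1/4 + 1/16 = 1.3125 in decimal.
static_assert(normalize(1.3125 * TWO_TO_64).exponent == 64,
              "true exponent of the worked example");
static_assert(normalize(1.3125 * TWO_TO_64).stored_exponent == 1087,
              "64 + 1023 = 1087");
```

The same helper applied to 53/64 = 0.828125 gives a mantissa of 1.65625 and an exponent of -1, so the stored exponent would be 1022.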

Furthermore, any fraction that can be stated with the denominators as
powers of 2 can be represented exactly (within the 53 bits available). For
example:

53/64 = 32/64 + 16/64 + 4/64 + 1/64 = 1/2 + 1/4 + 1/16 + 1/64 = $0.110101_2$

Many common fractions such as 1/3, 1/5, 2/3, etc. cannot be represented
exactly as they become repeating binary fractions when converted to binary.
For example, 2/5 = $0.4_{10}$ = $0.011001100110\ldots_2$. Furthermore, no
irrational numbers such as $\sqrt{2}$, $\pi$, or $e$ can be represented
exactly.

Even such pedestrian values as those associated with money are usually

approximated when stored or computed:

$1.73 cannot be stored exactly as 73/100 cannot be expressed as a

sum of fractions with denominators all powers of 2.
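The difference between the two kinds of fraction shows up as soon as values are added. The sketch below assumes IEEE 754 doubles; the helper name sums_exactly is hypothetical.

```cpp
// True when x + y, as computed in double, equals `expected` bit for bit.
constexpr bool sums_exactly(double x, double y, double expected) {
    return x + y == expected;
}

// Denominators that are powers of 2: every value and every sum is exact.
static_assert(0.5 + 0.25 + 0.0625 + 0.015625 == 53.0 / 64.0,
              "1/2 + 1/4 + 1/16 + 1/64 is exactly 53/64");
static_assert(sums_exactly(1.50, 0.25, 1.75),
              "$1.50 + $0.25 involves only halves and quarters");

// Tenths are repeating binary fractions, so each is slightly off,
// and the errors do not cancel.
static_assert(!sums_exactly(0.10, 0.20, 0.30),
              "$0.10 + $0.20 is not exactly $0.30 in binary floating-point");
```

This is why a sum of money can come out a tiny fraction of a cent away from the value a person would write down.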

Therefore, you should almost never attempt to compare two floating-point
values for either equality or inequality. In fact, all compilers should
flag such a statement with a warning message. Unfortunately, some do and
some do not. The only situation where you can reasonably expect the
comparison to work correctly is when the values to be compared were input
or constants. (That is, not computed.) Use >= or <= when reasonable, but if
you want to test if A equals B, you should instead test something like:

if( fabs( A-B) < ERROR_FACTOR ) in C++
if( Math.abs( A-B) < ERROR_FACTOR ) in Java

Here, ERROR_FACTOR is a previously defined constant along the lines of
1.0E-10.
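The tolerance test can be wrapped in a small helper. This is a sketch, assuming a C++11 compiler; the name nearly_equal is made up, and the absolute difference is spelled out by hand instead of calling fabs only so that the checks can run at compile time.

```cpp
constexpr double ERROR_FACTOR = 1.0e-10;

// Same test as fabs(a - b) < tolerance.
constexpr bool nearly_equal(double a, double b, double tolerance = ERROR_FACTOR) {
    return (a > b ? a - b : b - a) < tolerance;
}

// A direct comparison fails even though the values "should" be equal...
static_assert(0.1 + 0.2 != 0.3, "the computed sum is not exactly 0.3");
// ...while the tolerance test treats them as equal.
static_assert(nearly_equal(0.1 + 0.2, 0.3), "equal to within ERROR_FACTOR");
```

A fixed tolerance such as 1.0E-10 suits values whose magnitude is near 1. For very large or very small values the tolerance would need to be scaled, which is a design choice this sketch leaves open.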

You should try to avoid subtraction of values that are almost equal. This

often leads to the loss of many significant digits. Consider the following

decimal example in which the number of significant digits drops from 15

down to only 2 in 1 operation. Unfortunately, such operations are almost

inevitable if one is searching for the root of an equation. In addition, the

higher the degree of the polynomial, the more acute the round-off problem is

likely to be.

  1.56784932563566
- 1.56784932563549
------------------
  0.00000000000017
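The same loss of digits happens in binary. In this sketch, assuming IEEE 754 doubles, a small value is added to 1.0 and then recovered by subtracting two nearly equal numbers; the function name add_then_subtract is made up for the illustration.

```cpp
// Recovers `small` the naive way: add it to `big`, then subtract `big` again.
// The subtraction is exact; the damage was done when big + small was rounded.
constexpr double add_then_subtract(double big, double small) {
    return (big + small) - big;
}

constexpr double recovered = add_then_subtract(1.0, 1.0e-15);

// Near 1.0, adjacent doubles are about 2.2E-16 apart, so 1.0 + 1.0E-15 is
// rounded to the nearest of them and only the first digit of 1.0E-15 survives.
static_assert(recovered != 1.0e-15,
              "the small value does not come back unchanged");
static_assert(recovered > 1.11e-15 && recovered < 1.12e-15,
              "the result is about 1.11E-15, an error of roughly 11 percent");
```

Both operands were accurate to about 16 digits, yet their difference is accurate to only one.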

Watch out for the accumulation of round-off error. Many times a

calculation or a sequence of calculations appears in a loop. Each iteration

bases the current calculation on the previous one, and the round-offs errors

can sometimes accumulate. In such cases, if the loop is modified so that the

steps the loop takes become smaller generating even more calculations, the

result can be either better or worse.
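A very small loop is enough to show round-off accumulating. The sketch below assumes a C++14 compiler and IEEE 754 doubles; the function name repeated_sum is hypothetical.

```cpp
// Adds `step` to a running total `count` times.
// Every += may round, and each iteration builds on the previous rounded total.
constexpr double repeated_sum(double step, int count) {
    double total = 0.0;
    for (int i = 0; i < count; ++i) {
        total += step;
    }
    return total;
}

// Ten additions of 0.1 drift away from 1.0...
static_assert(repeated_sum(0.1, 10) != 1.0,
              "ten rounded additions do not reach exactly 1.0");
// ...while a single multiplication happens to round to exactly 1.0.
static_assert(0.1 * 10 == 1.0,
              "one rounded multiplication gives 1.0");
// A step that is a power of 2 never rounds, so the loop is exact.
static_assert(repeated_sum(0.125, 8) == 1.0,
              "eight additions of 1/8 are exact");
```

A loop counter that is meant to stop when the total equals 1.0 would therefore never stop with a step of 0.1.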

The order of operations can affect round-off error. If a sequence of

operations must be performed, the greatest level of accuracy will generally

follow the form with the least variation in the size of the partial results. For

example:

$h = \dfrac{a \cdot b^{3}}{d \cdot e \cdot g}$

should probably be written something like:

h = a/d*b/e*b/g*b; // Use of the pow() function, while tempting,

// will often give the least accurate result.

The number of operations can affect round-off error. If a sequence of

operations must be performed, consider writing the expression so that the

number of operations is minimized. As each operation may have round-off

error, reducing the number of operations to be performed should produce a

positive benefit in most cases. For example, three versions of the evaluation

of a cubic polynomial in one variable are shown below. The best version is

the last one. Making a function call (that may require an unknown number of

operations) should be avoided if it can be done with reasonable ease. For

example pow( base, exponent ) below probably works by computing

antilog( exponent * log( base ) ). This is fine for a real exponent, but a

preposterous way to raise a number to an integer exponent.

Y = a * pow(x,3) + b * pow(x,2) + c * x + d;   // 6 ops + 2 calls. Worst
Y = a * x * x * x + b * x * x + c * x + d;     // 9 ops. Better
Y = x * ( x * ( x * a + b ) + c ) + d;         // 6 ops. Best
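The nested form in the last version is usually called Horner's rule. Here it is as a pair of functions, so the two hand-written forms can be compared; this is a sketch with made-up function names, assuming a C++11 compiler.

```cpp
// a*x^3 + b*x^2 + c*x + d written out term by term: 6 multiplies, 3 adds.
constexpr double cubic_expanded(double a, double b, double c, double d, double x) {
    return a * x * x * x + b * x * x + c * x + d;
}

// The same polynomial in nested (Horner) form: 3 multiplies, 3 adds.
constexpr double cubic_horner(double a, double b, double c, double d, double x) {
    return x * (x * (x * a + b) + c) + d;
}

// With small whole-number inputs nothing rounds, so both forms agree exactly:
// 2*27 - 3*9 + 4*3 - 5 = 34.
static_assert(cubic_expanded(2.0, -3.0, 4.0, -5.0, 3.0) == 34.0, "expanded form");
static_assert(cubic_horner(2.0, -3.0, 4.0, -5.0, 3.0) == 34.0, "nested form");
```

With inputs that do round, the two forms can differ in the last few bits, and the nested form has fewer chances to go wrong.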

DATA AGGREGATES and other DERIVED DATA TYPES

Array

An array is a data aggregate in which every element must be the same type.
The fundamental operation for accessing elements within the array is the
index (or subscript) (e.g. a[j]). C++ and Java both treat multidimensional
arrays as arrays of arrays (e.g. a[j][k][y]). Some other languages such as
Pascal and Fortran do not (e.g. a[j, k, y]). Some languages permit references
to a portion of an array, but most, including C++ and Java, do not. Java has
some additional features such as jagged arrays and an ArrayList class that
are worthy of more space than I have here.

In C++, arrays cannot be returned as the return value of a function, but a
pointer to an array can be returned. Arrays cannot be copied or input or output
as if they were atomic objects (except strings). You cannot say a = b; if they
are both arrays, and cout << a; (or in Java, System.out.println(a);) will not
print the elements. Arrays can be initialized when declared, but no repeat
factors are allowed. The array dimension can be deduced by the compiler from
the initialization list.

int a[5] = {1, 2, -1, 5};  // Unlisted values, in this case a[4], will be given the value 0.

int c[4][3] = {
    {1, 2},                // c[0][2] will be 0
    {4, 5, 6},
    {7}                    // c[2][1] and c[2][2] will be 0
};                         // all of row [3] will be 0

int b[] = {5, 7, 4};       // b will be created as b[3] and initialized as requested.

Arrays are always stored in a linear block of memory. The compiler converts

the index or subscript as stated in the high level language into an offset into

memory in assembly language.

An index into a single dimension integer array in C++ and Java: If an

int is stored in 4 bytes, then an array of 100 integers will be stored in a

contiguous block of 400 bytes in memory. The address of the first byte in the

block is what is passed whenever an array is passed as a parameter. A
reference to any element of the array merely requires that the index be
shifted left by two bits [6] then added to the address of the beginning of the
array to generate a memory address.
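The address calculation for a one dimensional array can be sketched as follows. It treats addresses as plain numbers and assumes a 4 byte int, as in the text; the names BYTES_PER_INT and element_address are made up for the illustration.

```cpp
#include <cstddef>

constexpr std::size_t BYTES_PER_INT = 4;  // assumption taken from the text

// Address of element `index` in an array of 4-byte ints starting at `base`.
// Shifting left by two bits multiplies the index by 4.
constexpr std::size_t element_address(std::size_t base, std::size_t index) {
    return base + (index << 2);
}

// The shift and the multiplication give the same byte offset.
static_assert((std::size_t{25} << 2) == 25 * BYTES_PER_INT,
              "index << 2 is the same as index * 4");
// The last of 100 ints starts 396 bytes into the 400-byte block.
static_assert(element_address(0, 99) == 396,
              "offset of the last element");
```

A compiler generates the equivalent of this for every a[j], which is why indexing costs only a shift and an add.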

In C++ and most other modern languages, a two dimensional array is stored by
rows, also called row-major form (Fortran is a notable exception: it stores
arrays by columns). In memory, the zeroth row is stored first, then the
first row, and so on. In the example below, the values stored in the 3 by 4
array indicate the row and column, e.g. 21 means row 2, column 1. A 2D
reference is converted to a linear index, then shifted to generate a byte
offset. Thus the 2D array below:

int A[3][4];

         Col 0   Col 1   Col 2   Col 3
Row 0     00      01      02      03
Row 1     10      11      12      13
Row 2     20      21      22      23

would actually be stored as a 1D array as shown below (assuming the

starting address 2000)

Address of A[r][c] = AddressOfA + BytesPerInt * (r * NumCols + c)

A[2][1] = 2000 + 4 * (2 * 4 + 1)
        = 2000 + 4 * 9
        = 2036
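The row-major formula translates directly into code. This sketch treats addresses as plain numbers and uses the starting address 2000 from the example; the function name address_of_element and its parameter names are made up for the illustration.

```cpp
#include <cstddef>

// Byte address of A[row][col] for a row-major array that starts at
// base_address and has num_cols elements per row.
constexpr std::size_t address_of_element(std::size_t base_address,
                                         std::size_t bytes_per_element,
                                         std::size_t num_cols,
                                         std::size_t row,
                                         std::size_t col) {
    // Convert the 2D index to a linear index, then scale it to bytes.
    return base_address + bytes_per_element * (row * num_cols + col);
}

// A[2][1] in int A[3][4] starting at 2000, with 4-byte ints.
static_assert(address_of_element(2000, 4, 4, 2, 1) == 2036,
              "matches the worked example");
```

Note that the number of rows never appears in the formula. Only the number of columns is needed, which is why C++ lets the first dimension of an array parameter be left unspecified.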

2D index   1D index   Value   Byte address
[0][0]     [0]        00      2000
[0][1]     [1]        01      2004
[0][2]     [2]        02      2008
[0][3]     [3]        03      2012
[1][0]     [4]        10      2016
[1][1]     [5]        11      2020
[1][2]     [6]        12      2024
[1][3]     [7]        13      2028
[2][0]     [8]        20      2032
[2][1]     [9]        21      2036
[2][2]     [10]       22      2040
[2][3]     [11]       23      2044

[6] Shifting a binary number to the left two bits is, of course, the same as multiplying by 4 and is much
faster on most hardware.

Struct or Record

The C++ struct is called a record in most languages that are not C based. It
will not be discussed here at any length as modern languages expect you to use
classes instead. A struct or record is an aggregate of related data. It often
consists of multiple data types and sizes (e.g. EmployeeRecord, SalesRecord,
InventoryRecord, or EnrollmentRecord). A struct can just as easily be used to
store data of the same type, but the data would still need to be related in
some fundamental way. References to fields within a struct often use the dot
notation. In fact, it would be fair to say class syntax borrowed this from
record syntax. Unlike arrays, records can generally be treated as atomic
objects. There are many other interesting and useful things that could be
said, but why bother. You will likely be using a class instead. In fact, Java
has no struct type at all.

MORE SEMANTICALLY COMPLICATED STRUCTURES

Abstract Data Type (ADT) – a data structure combined with a set of

operations defined on the structure

Class – Classes are a modern derivative of the Abstract Data Type in which

additional capabilities such as encapsulation, operator overloading,

polymorphism, and information hiding have been added. References to

components of a class generally require a similar syntax as for a struct:

objectName.dataName or

objectName.functionName