3D Imaging of Turbine Blade for Comparative Deviation Analysis between Ideal Part Designs to As Built Part.

by

Nasir Mannan

An Engineering Project Submitted to the Graduate

Faculty of Rensselaer Polytechnic Institute

In Partial Fulfillment of the

Requirements for the degree of

MASTER OF ENGINEERING IN MECHANICAL ENGINEERING

Approved:

_________________________________________

Ernesto Gutierrez-Miravete, Project Adviser

Rensselaer Polytechnic Institute

Hartford, CT

December, 2009

(For Graduation May 2011)

© Copyright 2009

by

Nasir Mannan

All Rights Reserved


Contents

List of Figures

List of Symbols

Acknowledgement

Abstract

1. Introduction and Background

1.1 Airfoil Shape

1.2 Turbine

1.3 Gas Turbine

1.4 Problem Description

2. Theory and Methodology

2.1 Resources

2.2 Coordinate Measuring Machine

2.3 3D Scanning

2.4 Structured Light

2.5 Test Setup

2.6 Calibration and Accuracy Verification

2.7 Turbine Blade Scanning

3. Results and Discussions

3.1 Surface Deviation

3.2 Simulation Accuracy Test

3.3 Physical Accuracy Test

3.4 Turbine Blade Scanning

4. Conclusions

5. References / Bibliography

6. Appendices

6.1 Appendix A: Physical Accuracy Test

6.2 Appendix B: Extrinsic Calibration Code

6.3 Appendix C: Grey Code

6.4 Appendix D: Scanner Calibration Code


List of Figures

Figure 1 - Pressure vectors and flow over a cambered section

Figure 2 - Cosmos Flow Simulation velocity map. Top: no bend. Bottom: 1 deg. bend.

Figure 3 - Impulse and Reaction Turbine Illustration

Figure 4 - Diagram of a high-pressure turbine blade

Figure 5 - Diagram of a gas turbine jet engine

Figure 6 - Left: Coordinate measuring machine. Right: Zoom on contact probe head

Figure 7 - PAS offline CMM programming software

Figure 8 - Left: CMM cross sectional measurements. Right: Detailed point measuring on section

Figure 9 - Top: Binary image. Bottom: Monochromatic 8 bit grey patterns

Figure 10 - Stereoscopic imaging with structured light

Figure 11 - Stereo scanner setup with off the shelf projector

Figure 12 - 4 axis positioner with test blade

Figure 13 - Left and right camera calibration images. Colored lines added by calibration algorithm

Figure 14 - Left: 6 inch optical ball bar being scanned. Right: Spheres fitted and measured in Rapidform XOR2 software

Figure 15 - Snapshot of the Excel worksheet used to calculate accuracy correction factor

Figure 16 - Images of structured light taken from data acquisition cameras. Tooling balls used for accurate scan alignment

Figure 17 - Inverse kinematics diagram.

Figure 18 - Sphere center calculation

Figure 19 - Reference scan used to define world origin. Scan origins automatically aligned to world origin

Figure 20 - Top: Find closest point. Bottom: Error metrics (point to plane)

Figure 21 - Multiple scans aligned via reference spheres then by iterative closest point algorithm

Figure 22 - Re-triangulated and merged mesh

Figure 23 - Surface defect detection simulation

Figure 24 - 4 inch gage block scanning

Figure 25 - 3D GD&T measurements

Figure 26 - Left: control mesh (top), sharp edges eroded over time (bottom). Right: deviation analysis highlighting edge deviations

Figure 27 - Left: control mesh (top), tip eroded over time (bottom). Middle: deviation analysis highlighting tip deviations. Right: cross-section deviation (top), location of cross section (bottom)

Figure 28 - Left: control mesh (top), damaged edges (bottom). Right: deviation analysis highlighting edge damages


List of Symbols

CAD Computer Aided Design

CAE Computer Aided Engineering

CAM Computer Aided Manufacturing

CMM Coordinate Measuring Machine

ICP Iterative Closest Point

SPC Statistical Process Control

L lift force (N)

ρ air density (kg/m³)

v true airspeed (m/s)

A planform area (m²)

C_L lift coefficient at angle of attack, Mach number and Reynolds number

Δh specific enthalpy drop across stage (kJ/kg)

T turbine entry total (or stagnation) temperature (K)

u turbine rotor peripheral velocity (m/s)

Δv_w change in whirl velocity (m/s)


Acknowledgement

This Engineering Project, although the outcome of my own work, was made possible by the following persons and organizations, to whom I extend my sincere gratitude for their time and assistance.

1. Connecticut Center for Advanced Technology for funding the project, providing

time and resources for research and testing.

2. Robert Memmen, Consultant for CCAT, for his help, guidance, and knowledge

regarding the design aspects of turbine blades.

3. Prof. Ernesto Gutierrez-Miravete, Professor of Mechanical Engineering at Rensselaer Polytechnic Institute, for his support and guidance in the organization and development of this project while acting as my faculty advisor.


Abstract

Advancements in CCD sensor technology and projection systems have led to higher precision vision systems and 3D scanners. By taking images of several structured light patterns projected onto the surface of an object, it is possible to reconstruct a highly accurate digital representation of the visible surfaces of a part. Once a physical part is accurately digitized, finite element analysis studies can be performed on it. This allows engineers to study actual part geometries, that is, as-built parts, in order to verify that the part will function as designed. It also opens the door to more complete and comprehensive studies of damaged parts. This paper will first introduce the basics of 3D imaging, verify the accuracy of a built system, and then perform deviation analysis on a digitized turbine blade, comparing the results to its computer aided design model. Damaged parts will then be scanned to analyze the effects the damaged areas may have on the performance of the blade.


1. Introduction and Background

Today's engineers use highly sophisticated Computer Aided Design (CAD) and Computer Aided Engineering (CAE) software that allows them to design products with far greater capabilities than products designed before these tools existed. 3D simulation allows complete geometry and property optimization no matter how organically or parametrically shaped the part needs to be. This is very apparent in the design and optimization of turbine blades used in aircraft turbofan engines. These blades are designed to operate at high temperature and pressure, withstanding stresses caused by centrifugal forces as well as large pressure forces. Each turbine blade is specifically designed to produce thrust from exhaust gases, and so must have a specific aerodynamic form to convert incoming energy and pressure into thrust.

1.1 Airfoil Shape

Airfoil refers to the cross sectional shape of a wing, blade, or sail. This particular shape

when moved through a fluid produces a force perpendicular to its motion called lift.

Airfoils work on the basis of Bernoulli’s principle stating that high speed flow is

associated with low pressure, and low speed flow with high pressure. Airfoils are

designed to increase the speed of the airflow above the surface of a wing, blade, or sail,

causing a decrease in pressure. Simultaneously, the slower moving air below the surface

of the airfoil increases the air pressure. The combination of the increased air pressure below the airfoil and the decreased air pressure above it creates lift, an upward force, as shown in Figure 1.


Figure 1 - Pressure vectors and flow over a cambered section

The amount of lift generated by the airfoil depends on how much the flow is turned, which in turn depends on the amount of bend (curvature of the camber line) in the airfoil shape. However, the curvature also affects the downwash.

Figure 2 - Cosmos Flow Simulation velocity map. Top: no bend. Bottom: 1 deg. bend.


As shown in Figure 2, a small 1 degree bend produces higher air velocities above the airfoil and lower velocities below, thereby increasing the lift force. The velocity of the incoming air and the larger area of accelerated flow over the wing in the second image mean a greater low pressure surface area, which also contributes to an increase in the lift force. Since lift force is described by:

$$L = \tfrac{1}{2}\,\rho\,v^{2}\,A\,C_L \qquad (3)$$

Where

L lift force

ρ air density

v true airspeed

A planform area

C_L lift coefficient at angle of attack, Mach number and Reynolds number

A larger surface area on the convex side of the turbine blade produces greater lift.
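To make equation (3) concrete, the short Python sketch below evaluates the lift for a few assumed inputs. The density is the standard sea-level value of 1.225 kg/m³; the airspeed, area and lift coefficients are arbitrary illustrative numbers, not data from the blade studied here, and the function name lift_force is hypothetical.

```python
def lift_force(rho, v, area, c_l):
    """Equation (3): L = 1/2 * rho * v**2 * A * C_L, all in SI units.

    rho  : air density (kg/m^3)
    v    : true airspeed (m/s)
    area : planform area A (m^2)
    c_l  : lift coefficient C_L (dimensionless)
    """
    return 0.5 * rho * v ** 2 * area * c_l


# Illustrative inputs only; none of these come from the blade studied here.
RHO_SEA_LEVEL = 1.225  # kg/m^3, standard sea-level air density
for c_l in (0.4, 0.5):
    lift = lift_force(RHO_SEA_LEVEL, v=50.0, area=2.0, c_l=c_l)
    print(f"C_L = {c_l}: L = {lift:.1f} N")
```

Because lift is linear in C_L, raising C_L from 0.4 to 0.5 raises the lift by 25 %, so any geometry change that alters C_L changes lift in direct proportion.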

It is important to notice the significant increase in downwash air velocity. Turbine blades in a rotary gas engine are arranged in rows forming what is called a rotating nozzle assembly, the main goal of which is to accelerate the air as it exits the nozzle end.

1.2 Turbine

Turbines are part of what is known as a rotary engine, which produces work by extracting energy from a fluid or air flow. In its simplest form, a turbine blade works on the same basic principle as an aircraft wing, i.e. it employs an airfoil shape that lets air pass around its outer shell in a specific intended manner, so that lift is generated from the moving fluid and transmitted to the rotor. There are two types of turbines:


impulse turbines, which are driven by a high velocity jet of fluid or gas, and reaction turbines, which produce torque by reacting to the pressure of a gas or fluid. Figure 3 depicts the two types of turbines:

Figure 3 - Impulse and Reaction Turbine Illustration

As depicted in Figure 3 above, stators direct the flow of air towards the turbines (rotating

nozzles). This in turn causes the turbine to react by moving in the direction of the air

stream.

Before the use of advanced 3D computer simulations, basic velocity triangles were used to calculate the performance of a turbine system. For example, the Euler equation was used to calculate the mean performance of the stage:

$$\Delta h = u\,\Delta v_w \qquad (1)$$

Whence:

$$\frac{\Delta h}{T} = \frac{u\,\Delta v_w}{T} \qquad (2)$$

Where:

Δh specific enthalpy drop across stage

T turbine entry total (or stagnation) temperature

u turbine rotor peripheral velocity

Δv_w change in whirl velocity

The turbine pressure ratio is a function of Δh/T and the turbine efficiency.
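The two classical relations above can be evaluated directly. The sketch below is a minimal illustration, assuming hypothetical stage values (blade speed 350 m/s, whirl velocity change 600 m/s, entry temperature 1500 K) that are not taken from any real engine; the function names are illustrative only.

```python
def stage_enthalpy_drop(u, delta_vw):
    """Equation (1), the Euler turbine equation: dh = u * dv_w.

    u        : rotor peripheral velocity (m/s)
    delta_vw : change in whirl velocity across the stage (m/s)
    Returns the specific enthalpy drop in J/kg.
    """
    return u * delta_vw


def stage_loading(u, delta_vw, t_entry):
    """Equation (2): dh / T, with T the entry total temperature (K); J/(kg K)."""
    return stage_enthalpy_drop(u, delta_vw) / t_entry


# Hypothetical stage values, chosen only to show the arithmetic.
u, delta_vw, t_entry = 350.0, 600.0, 1500.0
dh = stage_enthalpy_drop(u, delta_vw)
print(f"dh = {dh / 1000:.0f} kJ/kg, dh/T = {stage_loading(u, delta_vw, t_entry):.1f} J/(kg K)")
```

As stated above, Δh/T fixes the stage pressure ratio only once the turbine efficiency is also specified; the sketch stops at Δh/T.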

Modern turbine design carries the calculations further. Computational fluid dynamics dispenses with many of the simplifying assumptions used to derive classical formulas, and computer software facilitates optimization. These tools have led to steady improvements in turbine design over the last forty years.

1.3 Gas Turbine

A gas turbine, or combustion turbine, is a rotary engine that extracts energy from a flow of combustion gas. Gas turbines are described thermodynamically by the Brayton cycle, in which air is compressed isentropically, combustion occurs at constant pressure, and expansion over the turbine occurs isentropically back to the starting pressure. Figure 4 shows a typical turbine blade with internal air cooling chambers, as found in gas turbine engines.

Figure 4 - Diagram of a high-pressure turbine blade


Air breathing jet engines are gas turbines optimized to produce thrust from the exhaust

gases, or from ducted fans connected to the gas turbines. Figure 5 shows a diagram of a

typical gas turbine jet engine.

Figure 5 - Diagram of a gas turbine jet engine

As can be seen from Figure 5, jet engines consist of stages of rotor disks that compress incoming air until it is mixed with fuel in the combustion chamber and ignited to produce thrust. The exhaust passes over further stages of rotor disks before exiting; these in turn rotate the main shaft and the low pressure stages near the intake, so that the cycle repeats. The shape, location and performance of each blade are critical, since each blade is expected to deliver maximum work in order to achieve the highest work-to-weight ratio. Design engineers focus on optimizing turbine shapes to maximize performance.

Once the process of optimizing the design of a turbine blade is complete, the 3D model is sent to manufacturing engineers, who design the process for producing the physical part. This process involves sophisticated 3D Computer Aided Manufacturing (CAM) software, which allows manufacturing engineers to simulate and optimize the processes used throughout the various stages of completing the part. Advanced multi-axis machines and robotics ensure highly accurate and repeatable cycles.


Once produced, the physical part is inspected to ensure specific measurements fall

within the allowable measurement tolerance band.

1.4 Problem Description

Many manufacturing companies use Coordinate Measuring Machines (CMMs) as their main metrology tool for inspecting and qualifying turbine blades. However, while both software and hardware have advanced to aid the design and manufacture of turbine blades, many of the concepts of contact inspection have remained the same: the inspection process involves the measurement of discrete points. This is sufficient in many applications, but for parts designed specifically to produce a certain fluid flow around their outer geometry, measurement of the complete surface is highly desirable in order to detect any small surface defects that would compromise the intended fluid flow around the part.


2. Theory and Methodology

It is therefore important to investigate non-contact methods for industrial metrology applications. This paper will investigate the use of 3D imaging as the basis for fast, non-contact, automated inspection of turbine blades. The non-contact approach is a relatively new concept that many research facilities have investigated. Both DSSPBlueBook.pdf and University of Brown.pdf, located at http://rh.edu/~mannan/EP/References/, describe the use of 3D scanning for quality inspection.

In this paper we will first investigate the basic design of a generic blade to understand its shape, the type and location of the measurements that define that shape, and the drivers for their tolerance bands. We will also look at some of the manufacturing software and hardware used to create the physical part. The methods used to verify that the built part meets tolerance criteria will then be discussed, considering both the accuracy of the system and the speed and completeness with which it can validate a part compared with the rate of manufacturing.

Next, we will investigate the use of advanced 3D imaging (or 3D scanning) systems for acquiring and digitally reconstructing the surface definition of a physical part. The paper will discuss the main principles of how 3D scanners work and the algorithms applied in various types of systems, then evaluate the accuracy of such a system by simulating the scanning of a 4 inch gage block and comparing its recovered measurement with its original CAD design. This will help to validate the mathematics of the algorithm used. Subsequently, an optical ball bar will be scanned with a test setup and compared to its designed measurements to quantify hardware inaccuracies such as lens distortion, image sensor signal-to-noise ratio, projector gamma inaccuracies and calibration errors.

A system using a dual camera (stereo) setup was constructed to investigate the accuracy actually achievable by the 3D reconstruction algorithm. A test blade for which the inspection criteria are available was scanned with the built 3D scanner and measured using a CMM for comparative measurement analysis.

Damaged blades were measured by both the 3D scanner and the CMM to investigate the ability of each system to detect the damaged region. A stress analysis on a damaged model of the blade was conducted to demonstrate the poor performance and premature failure of the blade that could occur if a damaged area lying between measuring points were missed by either system.

2.1 Resources

The following hardware and software elements were utilized in this project:

3D Scanner: CCAT Structured Light Scanner; CCAT Data Acquisition Software

Positioning System: Velmex B5990TS rotary table; custom built 4 axis positioning system (stepper motors) with custom controller

Access to simulation software: Autodesk 3D Studio Max (scanning simulation by ray tracing)

Mesh Processing, Reverse Engineering, Quality Inspection: Rapidform XOR and XOV

Engineering Analysis Software: Cosmos Analysis Software

Access to turbine blade, prints and CMM


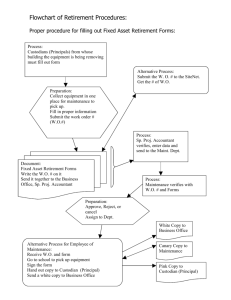

2.2 Coordinate Measuring Machine

Today, the industry standard for measuring turbine blades has shifted from profile gages to CMMs in order to reduce human error in measurement and to automate the measurement of turbine profiles. Measuring the blade portion of a turbine involves defining planes, offset from the tip of the blade, that define the sections at which discrete points will be measured via a contact probe, as shown in Figure 6.

Figure 6 - Left: Coordinate measuring machine. Right: Zoom on contact probe head

The probes are attached to sensitive force gages that stop the motion of all moving axes once the probe tip comes into contact with the part. This measurement is then recorded as X, Y and Z coordinates relative to the measuring origin specified by the user. Nominal data is loaded into the Statistical Process Control (SPC) software, running either on the CMM controller or on a post-processing computer, which compares the measured data against the nominal data and writes the deviations to a text report. Each point has an associated tolerance within which the part is designed to operate as intended. Should a point lie outside its tolerance band, a “point fail” is reported in the output text report. A quality engineer checks the report to determine whether the part has passed or failed, and then either moves on to the next part or, only if the deviations are positive, passes the part back to manufacturing for refinishing.
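The pass/fail logic described above can be sketched in a few lines of Python. This is a hypothetical illustration, not the interface of any particular SPC package: it assumes each point's deviation is measured along the outward surface normal, so a positive value means excess material that refinishing could remove, and it labels a part with any negative out-of-tolerance point as a reject. The class name, point names and disposition labels are all illustrative.

```python
from dataclasses import dataclass


@dataclass
class InspectionPoint:
    name: str
    deviation: float  # measured minus nominal along the outward normal (inch), assumed
    lower_tol: float  # lower tolerance limit, e.g. -0.002
    upper_tol: float  # upper tolerance limit, e.g. +0.002


def inspect(points):
    """Return (report_lines, disposition) for a list of InspectionPoint.

    disposition is "pass" if every point is in tolerance, "refinish" if every
    failing point is above its upper limit (excess material), else "reject".
    """
    report, failures = [], []
    for p in points:
        ok = p.lower_tol <= p.deviation <= p.upper_tol
        report.append(f"{p.name}: {p.deviation:+.4f} {'ok' if ok else 'point fail'}")
        if not ok:
            failures.append(p)
    if not failures:
        return report, "pass"
    if all(p.deviation > p.upper_tol for p in failures):
        return report, "refinish"
    return report, "reject"


# Hypothetical section points with a +/-0.002 inch band.
pts = [InspectionPoint("S1-P1", 0.0008, -0.002, 0.002),
       InspectionPoint("S1-P2", 0.0031, -0.002, 0.002)]
lines, disposition = inspect(pts)
print("\n".join(lines))
print("disposition:", disposition)
```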

Traditionally, CMMs were programmed on the machine once a part was fixtured. However, with the advancement of 3D offline programming software, quality engineers can pre-program probe paths and simulate the inspection process on virtual machines in order to optimize probing times and minimize the risk of machine crashes, as is done with CNC machines. Figure 7 shows an example workflow for the PAS CMM programming software.

Figure 7 - PAS offline CMM programming software

With the advent of these software packages, quality engineers can semi-automatically program probing paths while their CMMs are measuring a batch of parts online. Simulation runs can reduce, if not eliminate, machine crashes and help ensure that programs run successfully the first time on the machine.


This process, though highly accurate, is still limited to measuring discrete points; for turbines, this means that only sectional data can be validated to be within tolerance (Figure 8), while areas between sections are only assumed to be manufactured correctly.

Figure 8 - Left: CMM cross sectional measurements. Right: Detailed point measuring on section

As can be seen in Figure 8, seven sections containing sectional measurements are offset from a reference plane perpendicular to the stacking axis of the blade (the direction of blade extrusion). Each cross section itself contains only a discrete number of measured points that are used to verify the shape of the airfoil. All surfaces between the sections are only assumed to be within an acceptable tolerance.

Also, this process does not completely automate the measurement of turbine blades. The user must create probe paths for each type of blade. Because this method relies on physical contact, the machine must know where to start and must assume that the user has positioned the physical part to match the probing path for correct measurement. That is, if a measuring point is assigned at the corner of, say, a cube, the cube must be positioned exactly aligned with the reference defined in the probing path, so that the probe measures the very corner that is intended and not a point slightly off to one edge. This requires precise and repeatable positioning of each turbine blade for accurate measurement. 3D scanners do not require exact positioning of objects, since reference objects and the part's surface are used to align individual scans. The part only needs to remain rigidly attached to the positioning device during scanning. This is discussed in more detail in the “Turbine Blade Scanning” section of this paper.
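As a rough sketch of how reference objects let scans be aligned without precise part positioning, the Python below computes the rigid transform that maps three sphere centres measured in a scan onto the same three centres in a world frame, by building an orthonormal frame from each set of three points. This frame-construction method is an assumption chosen for brevity: it is exact only for noise-free centres, and in practice a least-squares fit over more spheres (for example the Kabsch/SVD method) followed by iterative closest point refinement would normally be used. All function names and coordinates are illustrative.

```python
import math


def _sub(a, b):
    return [a[i] - b[i] for i in range(3)]


def _cross(a, b):
    return [a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0]]


def _unit(a):
    n = math.sqrt(sum(c * c for c in a))
    return [c / n for c in a]


def _frame(p1, p2, p3):
    """Orthonormal axes (x, y, z) built from three non-collinear points."""
    x = _unit(_sub(p2, p1))
    z = _unit(_cross(x, _sub(p3, p1)))
    y = _cross(z, x)
    return x, y, z


def rigid_from_three_points(src, dst):
    """Rotation R (3x3, list of rows) and translation t mapping src onto dst.

    R = F_dst * F_src^T, i.e. the sum of outer products of matching axes;
    t places the first src point exactly on the first dst point.
    """
    fs, fd = _frame(*src), _frame(*dst)
    R = [[sum(fd[k][i] * fs[k][j] for k in range(3)) for j in range(3)]
         for i in range(3)]
    t = [dst[0][i] - sum(R[i][j] * src[0][j] for j in range(3)) for i in range(3)]
    return R, t


def apply(R, t, p):
    """Transform one point p by x -> R x + t."""
    return [sum(R[i][j] * p[j] for j in range(3)) + t[i] for i in range(3)]


# Hypothetical example: the scan frame is the world frame rotated 90 degrees
# about z and shifted by (10, 20, 5).
world_centres = [(0.0, 0.0, 0.0), (100.0, 0.0, 0.0), (0.0, 50.0, 0.0)]
scan_centres = [(10.0, 20.0, 5.0), (10.0, 120.0, 5.0), (-40.0, 20.0, 5.0)]
R, t = rigid_from_three_points(scan_centres, world_centres)
print(apply(R, t, (-30.0, 50.0, 15.0)))  # a scanned surface point in world coordinates
```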


2.3 3D Scanning

A 3D scanner is a measuring device that analyzes real world objects or environments by collecting large numbers of data points describing an object's shape and, possibly, its color. These points can then be used to infer the shape of the subject (a process called reconstruction). If color information is collected at each point, the colors on the surface of the subject can also be determined.

3D scanners are analogous to cameras. Like cameras, they have a cone-like field of view, and like cameras, they can only collect information about surfaces that are not obscured. While a camera collects only color information about surfaces within its field of view, a 3D scanner collects distance information about the surfaces within its field of view. The “picture” produced by a 3D scanner describes the distance to a surface at each point in the picture. In most situations, a single scan will not produce a complete model of the subject. Multiple scans from many different directions are usually required to obtain information about all sides of the subject. These scans have to be brought into a common reference system, a process usually called alignment or registration, and then merged to create a complete model. This whole process, going from a single range map to a whole model, is usually known as the 3D scanning pipeline.
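The “picture” of distances described above can be turned into 3D points by back-projecting each pixel through a camera model. The sketch below assumes an ideal pinhole camera with hypothetical intrinsics (focal lengths fx, fy in pixels and principal point cx, cy) and a depth value measured along the optical axis; it produces the point cloud of a single range map, which would then enter the alignment and merging steps of the pipeline. The function name and the sample values are illustrative.

```python
def range_map_to_points(depth, fx, fy, cx, cy):
    """Back-project a depth map into 3D points in the camera frame.

    depth  : list of rows; depth[v][u] is the z-distance along the optical
             axis at pixel (u, v), or None where no surface was seen.
    fx, fy : focal lengths in pixels; cx, cy: principal point in pixels.
    """
    points = []
    for v, row in enumerate(depth):
        for u, z in enumerate(row):
            if z is None:  # occluded or unlit pixel: no data
                continue
            x = (u - cx) * z / fx
            y = (v - cy) * z / fy
            points.append((x, y, z))
    return points


# Hypothetical 2 x 2 range map (mm); None marks a pixel with no return.
depth = [[100.0, None],
         [100.0, 102.0]]
cloud = range_map_to_points(depth, fx=1000.0, fy=1000.0, cx=0.5, cy=0.5)
print(len(cloud), "points:", cloud)
```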

It is important to mention that optical limitations do exist for 3D scanning devices. For example, optical technologies encounter many difficulties with shiny, mirror-like or transparent objects. This is a result of an object's color and surface reflectance (albedo): a white surface reflects a lot of light, while a black surface reflects only a small amount. Transparent objects such as glass refract light and give false three-dimensional information. One solution is to coat such surfaces with a thin layer of white powder, which helps more light reflect back to the imaging sensor.


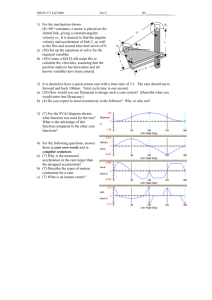

2.4 Structured Light

There are many types of technologies that could be used to construct a 3D scanner, all of which use signal processing and, ultimately, triangulation between the light source and the image plane to calculate three-dimensional points. The structured light method employing the grey code (8 bit grey scale pattern) and phase shifting has an advantage in both speed and accuracy. Figure 9 shows a sample of the binary and grey code patterns that are projected onto the surface of an object for scanning. The binary images are pure black and white with a hard transition between the two extremes, i.e. pixel values of 0 or 1. The grey images are 8 bit monochrome images that display a range of grayscale values from black (pixel value 0) to white (pixel value 255) in a sine wave pattern. This image is then shifted one pixel to the right; this is referred to as phase shifting, since the sine wave is shifted by Δx, one pixel width. As each pattern, whose lines are originally straight, is projected onto the surface of an object, the cameras capture images of the pattern as it is distorted to conform to the shape of the part. From these images, the software compares the captured (output) signal with the projected (input) signal and calculates one 3D point per illuminated pixel of the image sensor, using a known triangulation angle between two cameras, or between a camera and a projector, which is determined during extrinsic calibration.

Figure 9 - Top: Binary image. Bottom: Monochromatic 8 bit grey patterns
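The phase-shifting step can be illustrated with the standard N-step phase-shift formula. If the sine pattern has a period of N projector pixels and is shifted by one pixel each time, the N captured images sample the phase in steps of 2π/N, and the phase at each camera pixel can be recovered with an arctangent. The Python below is a sketch under that assumption, with the intensity model written in the docstring; it is not necessarily the exact decoding used by the CCAT software. Which sine period a pixel lies in (which could come, for example, from the binary patterns) is taken as an input, and the sign convention linking phase to projector column is also assumed. Function names are hypothetical.

```python
import math


def wrapped_phase(intensities):
    """Recover the fringe phase at one camera pixel from N phase-shifted images.

    Assumes the k-th captured intensity is I_k = A + B*cos(phi + 2*pi*k/N),
    i.e. each projected sine pattern is shifted by 1/N of its period.
    Returns phi wrapped to (-pi, pi]; A (ambient) and B (modulation) cancel.
    """
    n = len(intensities)
    if n < 3:
        raise ValueError("need at least three phase-shifted images")
    s = sum(val * math.sin(2 * math.pi * k / n) for k, val in enumerate(intensities))
    c = sum(val * math.cos(2 * math.pi * k / n) for k, val in enumerate(intensities))
    return math.atan2(-s, c)


def projector_column(phi, period_px, fringe_index):
    """Projector column (pixels) from wrapped phase plus fringe order.

    Assumes phi = 2*pi*x/period_px within one period; fringe_index says
    which period the pixel lies in.
    """
    return (fringe_index + (phi % (2 * math.pi)) / (2 * math.pi)) * period_px


# Synthetic check: 8 shifted images of a pixel whose true phase is 1.0 rad.
N = 8
samples = [120 + 80 * math.cos(1.0 + 2 * math.pi * k / N) for k in range(N)]
phi = wrapped_phase(samples)
print(round(phi, 6), projector_column(phi, period_px=N, fringe_index=3))
```

The recovered projector column gives the camera-to-projector correspondence from which each 3D point is triangulated.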


Instead of acquiring one point at a time, structured light scanners acquire multiple points, or an entire field of view of points, at once. This reduces or eliminates the problem of distortion from motion. By using two imaging sensors, slightly apart, looking at the same scene, it is possible to analyze the slight differences between the images seen by each sensor, making it possible to cancel distortions produced by non-telecentric lenses and to establish correspondences between the images for high accuracy point calculation, as shown in Figure 10. By combining this stereoscopic imaging technique with the grey code structured light algorithm, it is possible to recover one point per illuminated camera pixel, each with a very high degree of accuracy. This means that a setup using two one-megapixel sensors with a 10 inch square field of view can calculate 1 million 3D surface points per field of view with 0.01 inch point spacing. Similarly, using higher resolution imaging sensors or a smaller field of view decreases the point spacing, allowing smaller features, for example thin surface cracks, to be captured.

Figure 10 - Stereoscopic imaging with structured light
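The point-spacing figure quoted above follows from dividing the field of view by the number of pixels across it. A minimal sketch, assuming a square 1000 x 1000 pixel layout for the one-megapixel case and, as a second hypothetical example, a 1280 x 1024 layout, which is a common 1.3 megapixel format but is not stated for the sensors used here:

```python
def point_spacing(fov_width, pixels_across):
    """Lateral spacing between reconstructed points (same units as fov_width).

    Assumes one 3D point per illuminated camera pixel and ignores lens
    distortion and surface tilt, both of which change the local spacing.
    """
    return fov_width / pixels_across


# One-megapixel sensor taken as 1000 x 1000 pixels over a 10 inch field of view.
print(point_spacing(10.0, 1000), "inch spacing,", 1000 * 1000, "points")

# Hypothetical 1280 x 1024 (1.3 MP) sensor with the same 10 inch width.
print(point_spacing(10.0, 1280), "inch spacing,", 1280 * 1024, "points")
```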

The point clouds produced by this 3D scanner will not be used directly. For this

application the point clouds will be triangulated into a 3D polygonal surface mesh for

point to surface measurement.


2.5 Test Setup

This scanner setup uses two 1.3 megapixel image sensors and a digital light projector based on a Texas Instruments Digital Micromirror Device (DMD). The setup is shown in Figure 11.

Figure 11 - Stereo scanner setup with off the shelf projector

A 4-axis positioning device driven by stepper motors was also constructed to automate the scanning process. It was designed with two rotational axes and two translational axes, as shown in Figure 12.

Figure 12 - 4 axis positioner with test blade


2.6 Calibration and Accuracy Verification

The Camera Calibration Toolbox for Matlab was used to calibrate the stereo camera setup [Zhang]. This process relates physical dimensions to pixel coordinates by imaging a checkerboard pattern. In this case, a 24 x 19 grid of 10 mm squares was used, as shown in Figure 13.
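The known square size is what ties pixel measurements to physical units: each checkerboard corner detected in an image is paired with a world point on the board, which Zhang's planar method places in the plane Z = 0. The sketch below generates those world points. It assumes the "24 x 19 grid" counts squares (if it counts corners, pass 25 x 20 instead), and the function name is hypothetical.

```python
def checkerboard_corners(squares_x, squares_y, square_mm):
    """World coordinates (X, Y, Z) of the inner corners of a planar checkerboard.

    The board defines the plane Z = 0, with the origin at the first inner corner.
    A board of squares_x by squares_y squares has (squares_x - 1) * (squares_y - 1)
    inner corners, ordered row by row.
    """
    return [(i * square_mm, j * square_mm, 0.0)
            for j in range(squares_y - 1)
            for i in range(squares_x - 1)]


# Assumes the grid dimensions in the text count squares; 10 mm squares as stated.
corners = checkerboard_corners(24, 19, 10.0)
print(len(corners), corners[0], corners[-1])
```

Any error in the printed square size propagates directly into the scale of the calibration, which is why the result is checked against a ball bar below.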

Figure 13 - Left and right camera calibration images. Colored lines added by calibration algorithm

This calibration board was printed on polygraph paper with a high-resolution inkjet plotter and mounted to a flat, machined aluminum plate. Because a printed grid carries dimensional inaccuracies from its construction, the accuracy of a scan must be checked against a known, measured object in order to validate or correct the calibration. For this purpose, a National Institute of Standards and Technology (NIST) optical ball bar was used to check the accuracy of a single scan, as shown in Figure 14.


Figure 14 - Left: 6 inch optical ball bar being scanned. Right: Spheres fitted and measured in Rapidform XOR2 software

Results of the calibration and accuracy check are shown in Figure 15.

Figure 15 - Snapshot of the Excel worksheet used to calculate accuracy correction factor


As Figure 15 shows, calibration with the checkerboard plate alone resulted in an estimated accuracy of 0.00537”. This estimate is derived from the scanned distance between the centers of the two ball bar spheres, which physically measure 5.9955” apart. A correction factor was then calculated and applied to the camera calibration file, which resulted in an overall estimated accuracy of 0.000571”.
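The report does not spell out how the correction factor in Figure 15 was computed. A plausible reading is a uniform scale factor, certified length divided by measured length. The sketch below shows that reading. The measured value is hypothetical: it assumes the scan read long by the 0.00537” quoted above. One way to apply such a factor is to scale the stereo translation (baseline) vector, since scaling the baseline scales every triangulated point by the same factor. Whether the author's worksheet did exactly this is an assumption, and the function names are illustrative.

```python
def scale_correction(certified_length, measured_length):
    """Factor that rescales a scanned length onto the certified reference length."""
    return certified_length / measured_length


def scale_translation(t, factor):
    """Scale a stereo translation (baseline) vector; triangulated points scale by the same factor."""
    return tuple(factor * c for c in t)


certified = 5.9955            # in, ball bar sphere-center distance (from the text)
measured = 5.9955 + 0.00537   # in, HYPOTHETICAL scan result: assumes the scan read long
k = scale_correction(certified, measured)
print(f"correction factor: {k:.6f}")

baseline = (-150.0, 0.0, 0.0)  # mm, hypothetical left-to-right camera translation
print(scale_translation(baseline, k))
```

By construction, the corrected distance matches the certified one. The residual 0.000571” reported above can only come from re-scanning the ball bar with the corrected calibration.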

2.7 Turbine Blade Scanning

The turbine blade was fixtured onto the positioning table as shown in Figure 12. Eighteen scans were taken from different orientations, with tooling balls added for alignment purposes, as shown in Figure 16.

Figure 16 - Images of structured light taken from data acquisition cameras. Tooling balls used for accurate scan alignment

There are two approaches to automatically aligning multiple meshes. The first is to accurately find the center of rotation of the fourth axis; then, through the inverse kinematics of the positioning system, a transformation matrix can be applied to each individual scan to align it (Figure 17). This approach, however, relies on the accuracy of the positioning system and of the inverse kinematic model.
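For the first approach, the core operation is a rotation about the calibrated table axis. The sketch below simplifies the 4-axis chain to a single rotary axis. The axis direction and center point stand in for the "center of rotation of the fourth axis" found by calibration, and all values and function names are hypothetical. A full model would compose several such rotations with the two linear axes.

```python
import math


def rotate_about_axis(point, center, axis, angle_rad):
    """Rotate `point` by `angle_rad` about the line through `center` with direction `axis`.

    Uses Rodrigues' rotation formula: v' = v cos(a) + (k x v) sin(a) + k (k . v)(1 - cos(a)).
    """
    n = math.sqrt(sum(c * c for c in axis))
    kx, ky, kz = (c / n for c in axis)
    # Express the point relative to a point on the rotation axis.
    vx, vy, vz = (p - c for p, c in zip(point, center))
    cos_a, sin_a = math.cos(angle_rad), math.sin(angle_rad)
    dot = kx * vx + ky * vy + kz * vz
    cross = (ky * vz - kz * vy, kz * vx - kx * vz, kx * vy - ky * vx)
    rotated = (
        vx * cos_a + cross[0] * sin_a + kx * dot * (1 - cos_a),
        vy * cos_a + cross[1] * sin_a + ky * dot * (1 - cos_a),
        vz * cos_a + cross[2] * sin_a + kz * dot * (1 - cos_a),
    )
    return tuple(r + c for r, c in zip(rotated, center))


def undo_table_rotation(scan_points, center, axis, table_angle_deg):
    """Bring points scanned at a given table angle back to the 0-degree reference pose."""
    a = math.radians(-table_angle_deg)
    return [rotate_about_axis(p, center, axis, a) for p in scan_points]


# Hypothetical axis: vertical (Z) through (1, 1, 0); a point scanned after a 90 degree table turn.
print(undo_table_rotation([(1.0, 2.0, 0.0)], (1.0, 1.0, 0.0), (0.0, 0.0, 1.0), 90.0))
```

Any error in the axis estimate or in the commanded angle moves every point of the scan, which is the dependence on positioner accuracy noted above.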


Figure 17 - Inverse kinematics diagram.

The second approach is to align to a known geometry, in this case spheres. By first scanning the positioning system’s table top with the spheres arranged randomly, it is possible to create a reference file containing the calculated centers of the spheres, as shown in Figure 18.

Figure 18 - Sphere center calculation
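The report does not say how the sphere centers in Figure 18 are computed. A common choice is an algebraic least-squares sphere fit, sketched below in plain Python as a substitute, not as the software's method. Each surface point satisfies x² + y² + z² = 2ax + 2by + 2cz + d, which is linear in (a, b, c, d). The center is (a, b, c), and r² = d + a² + b² + c². Only points already segmented onto one tooling ball should be passed in, and the function names are hypothetical.

```python
import math


def solve_linear(m, v):
    """Solve the square system m x = v by Gaussian elimination with partial pivoting."""
    n = len(v)
    a = [list(row) + [v[i]] for i, row in enumerate(m)]  # augmented matrix
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(a[r][col]))
        a[col], a[pivot] = a[pivot], a[col]
        for r in range(col + 1, n):
            f = a[r][col] / a[col][col]
            for k in range(col, n + 1):
                a[r][k] -= f * a[col][k]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        x[r] = (a[r][n] - sum(a[r][k] * x[k] for k in range(r + 1, n))) / a[r][r]
    return x


def fit_sphere(points):
    """Algebraic least-squares sphere fit to surface points; returns (center, radius)."""
    ata = [[0.0] * 4 for _ in range(4)]  # normal equations A^T A p = A^T b
    atb = [0.0] * 4
    for x, y, z in points:
        row = (2 * x, 2 * y, 2 * z, 1.0)
        rhs = x * x + y * y + z * z
        for i in range(4):
            atb[i] += row[i] * rhs
            for j in range(4):
                ata[i][j] += row[i] * row[j]
    a, b, c, d = solve_linear(ata, atb)
    return (a, b, c), math.sqrt(d + a * a + b * b + c * c)


# Hypothetical check: points on a 0.5 in radius tooling ball centered at (1, 2, 3).
r = 0.5
pts = ([(1 + s * r, 2, 3) for s in (-1, 1)] + [(1, 2 + s * r, 3) for s in (-1, 1)]
       + [(1, 2, 3 + s * r) for s in (-1, 1)])
center, radius = fit_sphere(pts)
print(center, radius)
```

With real scan data there are thousands of noisy points per ball, and the least-squares fit averages that noise into a stable center estimate.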

Once this reference file is calculated, the turbine blade can be fixtured onto the positioning table together with the reference spheres. Each scan containing at least three spheres can then be aligned by matching its sphere centers to those in the reference file (Figure 18). This method allows accurate alignment between scans independent of the accuracy of the positioner; the turbine blade need only remain still relative to the spheres during scanning to maintain accuracy.
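A minimal sketch of sphere-based alignment for the three-sphere case follows. An orthonormal frame is built from three matched sphere centers in the scan and in the reference file, and the rotation that carries one frame onto the other is applied. This exact three-point construction is a simplification: it does not average noise. The best-fit alignment over more spheres described in the text would instead use a least-squares rigid fit such as the SVD-based Kabsch method, which is not shown. The sketch assumes the sphere correspondence is already known, for example by matching inter-sphere distances. All names and coordinates are hypothetical.

```python
import math


def _sub(a, b):
    return tuple(x - y for x, y in zip(a, b))


def _cross(a, b):
    return (a[1] * b[2] - a[2] * b[1], a[2] * b[0] - a[0] * b[2], a[0] * b[1] - a[1] * b[0])


def _unit(a):
    n = math.sqrt(sum(x * x for x in a))
    return tuple(x / n for x in a)


def _frame(p0, p1, p2):
    """Orthonormal axes built from three non-collinear sphere centers."""
    e1 = _unit(_sub(p1, p0))
    e3 = _unit(_cross(e1, _sub(p2, p0)))
    e2 = _cross(e3, e1)
    return (e1, e2, e3)


def apply_rigid(R, t, p):
    """Apply the rigid transform (R, t) to a 3D point."""
    return tuple(sum(R[i][j] * p[j] for j in range(3)) + t[i] for i in range(3))


def rigid_from_three(scan_centers, ref_centers):
    """Rotation R (3x3, row-major) and translation t such that R @ scan + t = reference.

    The spheres must be listed in corresponding order in both lists.
    """
    fs, fr = _frame(*scan_centers), _frame(*ref_centers)
    # R maps each scan axis onto the matching reference axis: R = sum_k fr_k fs_k^T.
    R = [[sum(fr[k][i] * fs[k][j] for k in range(3)) for j in range(3)] for i in range(3)]
    t = _sub(ref_centers[0], apply_rigid(R, (0.0, 0.0, 0.0), scan_centers[0]))
    return R, t


# Hypothetical sphere centers: the scan sees the reference layout rotated 90 deg and shifted.
ref = [(0.0, 0.0, 0.0), (10.0, 0.0, 0.0), (0.0, 5.0, 0.0)]
scan = [(1.0, 2.0, 3.0), (1.0, 12.0, 3.0), (-4.0, 2.0, 3.0)]
R, t = rigid_from_three(scan, ref)
print([apply_rigid(R, t, p) for p in scan])  # should reproduce `ref`
```

The same (R, t) is then applied to every point of that scan, including the blade surface.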


Figure 19 - Reference scan used to define world origin. Scan origins automatically aligned to world origin

This method places the individual scans in a relatively accurate position with respect to an arbitrary world origin. The accuracy depends on the rigidity of the setup, the circularity of the spheres and, because the algorithm uses a best-fit technique, the number of spheres used for alignment. This is depicted graphically in Figure 19. For global fine alignment, an iterative closest point algorithm is then used to match and align the overlapping geometry between individual scans.

The Iterative Closest Point (ICP) algorithm is employed to minimize the difference between two point clouds. It iteratively revises the transformation (translation and rotation) of each scan needed to minimize the distance between the points of two raw scans. Essentially, the algorithm associates points by a nearest-neighbor criterion, estimates the transformation parameters using a mean-square cost function, transforms the points using the estimated parameters, and then iterates (re-associating the points, and so on). Figure 20 shows two ICP variants: a point-to-point comparison, used to align two meshes together, and a point-to-plane comparison, used to align a mesh to a surface model.
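The four ICP steps above can be sketched compactly in two dimensions, where the best rigid motion for a set of paired points has a closed form. This 2D version is a simplification for illustration. A 3D implementation replaces the closed-form angle with an SVD-based (Kabsch) or quaternion-based (Horn) solution and uses a spatial index instead of the brute-force neighbor search here. Only the point-to-point variant is shown, and all names are hypothetical.

```python
import math


def best_rigid_2d(src, dst):
    """Least-squares rotation angle and translation taking paired points src[i] onto dst[i]."""
    n = len(src)
    sx, sy = sum(p[0] for p in src) / n, sum(p[1] for p in src) / n
    dx, dy = sum(q[0] for q in dst) / n, sum(q[1] for q in dst) / n
    s_cos = s_sin = 0.0
    for (px, py), (qx, qy) in zip(src, dst):
        px, py, qx, qy = px - sx, py - sy, qx - dx, qy - dy  # center both point sets
        s_cos += px * qx + py * qy
        s_sin += px * qy - py * qx
    theta = math.atan2(s_sin, s_cos)
    c, s = math.cos(theta), math.sin(theta)
    return theta, dx - (c * sx - s * sy), dy - (s * sx + c * sy)


def transform(points, theta, tx, ty):
    c, s = math.cos(theta), math.sin(theta)
    return [(c * x - s * y + tx, s * x + c * y + ty) for x, y in points]


def icp_2d(source, target, iterations=30, tol=1e-12):
    """Point-to-point ICP; returns the aligned source points and the final mean squared error."""
    current, prev_err, err = list(source), float("inf"), float("inf")
    for _ in range(iterations):
        # 1. Associate each point with its nearest neighbour in the target (brute force).
        pairs = [min(target, key=lambda q: (q[0] - p[0]) ** 2 + (q[1] - p[1]) ** 2)
                 for p in current]
        # 2. Estimate the rigid motion minimising the mean squared pair distance.
        theta, tx, ty = best_rigid_2d(current, pairs)
        # 3. Transform the points with the estimated parameters.
        current = transform(current, theta, tx, ty)
        err = sum((p[0] - q[0]) ** 2 + (p[1] - q[1]) ** 2
                  for p, q in zip(current, pairs)) / len(current)
        # 4. Iterate until the error stops improving.
        if prev_err - err < tol:
            break
        prev_err = err
    return current, err


# Hypothetical test: a 5 x 5 grid, and a copy rotated 2 degrees and shifted slightly.
target = [(float(x), float(y)) for x in range(5) for y in range(5)]
a = math.radians(2.0)
source = [(math.cos(a) * x - math.sin(a) * y + 0.05, math.sin(a) * x + math.cos(a) * y - 0.03)
          for x, y in target]
aligned, mse = icp_2d(source, target)
print(f"final mean squared error: {mse:.3e}")
```

ICP converges only from a reasonable starting pose, which is why the scans are first roughly aligned with the reference spheres.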


Figure 20 - Top: Find closest point. Bottom: Error metrics (point to plane)

The point-to-point ICP method uses every 3D point within the scan data to maximize alignment accuracy statistically. Figure 21 shows the results of first roughly aligning multiple scans of a turbine blade via the reference spheres, then using the ICP algorithm to align each scan by its overlapping (common) geometry in order to minimize global distortion.

Figure 21 - Multiple scans aligned via reference spheres then by iterative closest point algorithm


Once all individual scans are aligned, they can be combined into one mesh, an operation similar to a Boolean union in CAD software. For mesh data, however, multiple layered surfaces are usually undesirable, because overlapping layers may cause false surface deviation readings during analysis. A complete re-triangulation is therefore needed to produce a clean, single-layer mesh. The merging algorithm used here analyzes point redundancy and eliminates clusters of points that define the same feature, based on user-set parameters. For example, the algorithm analyzes the spacing between point pairs within a user-defined surface area and eliminates redundant points by trying to equalize the point spacing to an average value, either by moving points on the surface or by removing them. It does this for the entire surface and then re-triangulates the points into a single-layer mesh. The result of using Rapidform’s triangulate/merge function is shown in Figure 22.
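Rapidform's merge algorithm is proprietary, so the sketch below illustrates only the general idea of redundancy elimination, using a different and simpler technique: voxel-grid averaging. Every point that falls in the same cubic cell is replaced by the cell's centroid. The cell size plays the role of the user-set spacing parameter. Unlike the algorithm described above, this sketch does not equalize spacing on the surface or re-triangulate the result. The function name and data are hypothetical.

```python
import math
from collections import defaultdict


def voxel_merge(points, cell):
    """Collapse redundant points: all points inside one cubic cell become their centroid."""
    buckets = defaultdict(list)
    for p in points:
        key = tuple(math.floor(c / cell) for c in p)
        buckets[key].append(p)
    return [tuple(sum(q[i] for q in pts) / len(pts) for i in range(3))
            for pts in buckets.values()]


# Two hypothetical overlapping scans of the same flat patch (mm), slightly offset.
scan_a = [(i + 0.2, j + 0.2, 0.00) for i in range(5) for j in range(5)]
scan_b = [(i + 0.4, j + 0.4, 0.01) for i in range(5) for j in range(5)]
merged = voxel_merge(scan_a + scan_b, cell=1.0)
print(len(scan_a + scan_b), "->", len(merged))
```

The doubled layer from the overlap collapses to a single layer of points, which is the property that avoids false deviation readings. On curved surfaces, however, cell centroids can fall slightly off the true surface.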

Figure 22 - Re-triangulated and merged mesh


3. Results and Discussions

3.1 Surface Deviation

Having digitally captured the surface of a physical part in mesh format, it is now possible to use this model for engineering analysis. One of the most beneficial attributes of meshes based on the physical object is that the analysis results reflect the actual physical dimensions, including any defects the physical part contains.

If a CAD model, or a mesh of a part known to be in tolerance, is available, surface deviation analysis can be performed to determine whether the scanned part has been manufactured within specifications. It can also be used to automatically detect and track surface defects that may grow over the life of the part.

As an example, consider automatic surface defect detection.

Figure 23 - Surface defect detection simulation

Figure 23 shows a part (center) with simulated defects, exaggerated 10x for easy visualization, that was aligned to and compared with its original defect-free condition. The result is a color plot highlighting surfaces that lie above or below the surface deviation tolerance limits; surfaces within tolerance are shown in green.
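At its core, a deviation color map is a signed distance from each scanned point to the nominal surface, binned against a tolerance. The sketch below uses the plane of a single nominal triangle that is assumed to be already identified as nearest. Commercial tools compute the true closest point on the mesh, including triangle edges and vertices. The ±0.001” band follows the tolerance used in Section 3.3. The sign convention assumes outward-wound triangles, so positive values mean excess material and negative values mean missing material, matching the usage in Section 3.4. Names are hypothetical.

```python
def signed_distance_to_plane(p, a, b, c):
    """Signed distance from point p to the plane of triangle (a, b, c).

    Positive means p lies on the side the normal (b - a) x (c - a) points to,
    i.e. outside the nominal surface when triangles are wound outward.
    """
    u = [b[i] - a[i] for i in range(3)]
    v = [c[i] - a[i] for i in range(3)]
    n = [u[1] * v[2] - u[2] * v[1], u[2] * v[0] - u[0] * v[2], u[0] * v[1] - u[1] * v[0]]
    length = sum(x * x for x in n) ** 0.5
    return sum(n[i] * (p[i] - a[i]) for i in range(3)) / length


def classify(deviation, tol=0.001):
    """Bin a deviation (inches): 'green' within +/- tol, otherwise above or below tolerance."""
    if deviation > tol:
        return "above"   # excess material
    if deviation < -tol:
        return "below"   # missing material
    return "green"


tri = ((0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (0.0, 1.0, 0.0))  # nominal facet with normal +Z
for z in (0.0005, 0.0124, -0.0124):
    d = signed_distance_to_plane((0.2, 0.2, z), *tri)
    print(f"{d:+.4f} in -> {classify(d)}")
```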

3.2 Simulation Accuracy Test

As mentioned earlier, it is important to verify the accuracy of the measuring device, because it directly affects the accuracy of any surface deviation analysis. First, the algorithm must be tested to verify that the mathematics are correct, that is, to test the surface reconstruction accuracy independent of the hardware. Then, physical testing is required to verify mesh accuracy and quality.

A simulation accuracy test was conducted using Solidworks and Autodesk software, and the output was run through the Gray code and surface reconstruction algorithms. Solidworks was used to model a four-inch gage block (four inches being the length of the block), which was exported to Autodesk as a B-rep surface file. As a virtual CAD model, this gage block is free from all physical defects and is exactly 4 inches long, making it an ideal control subject for testing the accuracy of a virtual 3D scanner. The virtual scanner was constructed within Autodesk by defining two cameras and a projection device within a modeled environment with ideal diffuse illumination settings. The cameras and the projector were set for high-end response: the projection device was set with infinite focus and a linear gamma response with a contrast ratio of 2500:1 (a typical contrast ratio for off-the-shelf data projectors), and the cameras were set with infinite focus, high ISO sensitivity, and a high signal-to-noise ratio. An image sequence of the binary and monochrome patterns was converted to a video file and set as the image projected by the virtual projector.

Once the environment and the virtual scanner were set up, a calibration board was modeled within Autodesk and placed 1 foot in front of the projector lens, with the center of the board in line with the centerline of the projector lens. The two cameras were rotated so that their centerlines intersected the projector’s centerline at the front surface of the calibration board. An animation sequence was made in which the calibration board was moved to several random positions, in accordance with Zhang's method [Zhang]. During the animation, images were rendered from both camera views, and these images were then imported into the stereo calibration algorithm to calibrate the virtual scanner. Once calibrated, the four-inch gage block was positioned within the field of view of the virtual scanner and scanned from several positions. The rendered camera views were used as the data set for the Gray code and surface reconstruction algorithm. The resulting meshes were imported into Rapidform XOV2 software, globally aligned via the ICP algorithm, and then compared against the original design model from Solidworks. The results are shown in Figure 23b.

Figure 23b – Results of Simulation Accuracy Test


The results from the simulation accuracy test are as follows:

Average Gap Distance: 0.0000000188”

Standard Deviation: 0.0000000325”

The average gap distance and standard deviation show that, under these ideal noise-free conditions, the Gray code and surface reconstruction algorithms accurately calculate surfaces from image data. They further show that the calibration procedure described by Zhang can be used to quickly calibrate stereo setups.
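The two summary figures above are ordinary statistics over the per-point gaps between the scanned mesh and the reference model. The sketch below computes them. Whether Rapidform reports signed or absolute gaps, and whether it uses the population or sample standard deviation, is not stated, so the population form on signed gaps is an assumption here. The gap values are hypothetical.

```python
import math


def gap_statistics(gaps):
    """Mean and population standard deviation of per-point gap distances to the reference model."""
    n = len(gaps)
    mean = sum(gaps) / n
    variance = sum((g - mean) ** 2 for g in gaps) / n
    return mean, math.sqrt(variance)


# Hypothetical signed gaps (inches) sampled from a compared mesh.
gaps = [0.0, 0.001, -0.001, 0.002]
mean, std = gap_statistics(gaps)
print(f"average gap {mean:.7f} in, standard deviation {std:.7f} in")
```

Python's standard library offers the same results through `statistics.fmean` and `statistics.pstdev`.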


3.3 Physical Accuracy Test

Having tested the algorithms, it was important to test a physical setup in order to understand how the hardware affects the accuracy of the reconstructed surfaces. For this, two 1.3 megapixel CMOS image sensors were used as the cameras, fitted with two 12.5 mm megapixel CCTV lenses. An off-the-shelf data projector with 1024 x 768 resolution and a 2500:1 contrast ratio was used as the structured light projection device. The components were assembled on an optical table for testing.

In order to compare the results with the simulation accuracy test, a four-inch gage block with NIST certification was fixtured in the field of view of the test scanner. Spheres were attached to the block and to the PanaVise (used for fixturing) with modeling clay. As discussed in section 2.7, the spheres were used to automate the alignment of multiple scans of the gage block. Figure 24 shows this setup as it is being scanned.

Figure 24 - 4 inch gage block scanning

The scans were imported into Rapidform XOV2 software, aligned via the targets, and then globally aligned via an ICP algorithm. The mesh was then aligned to the CAD model of the gage block, which was constructed from the certification data supplied with the physical block.


Next, the spheres and the PanaVise holder were removed from the scan data. Figure 25 shows the results of a surface deviation analysis with point callouts at random locations.

Figure 25 - 3D GD&T measurements

The test data presented above show an overall length measurement (3D GD&T1), sixteen reference point deviations, and a whole-shape deviation color map in which green indicates measured data within ±0.001”. The concluding alignment accuracy (worst case) was ±0.001”. See Appendix A (section 6.1) for the full test documentation.


3.4 Turbine Blade Scanning

Physical testing has shown that 3D scanning technology can detect defects quickly. The part shown in Figure 22 was used as a control mesh for comparison against three blades: one with eroded edges, one with an eroded tip, and one with damaged edges. These turbine blades were fixtured and scanned in a setup similar to that shown in Figure 16. The scans of each blade were target-aligned and then globally aligned via the ICP algorithm. All unnecessary data, that is, the spheres and the holder, was removed from the complete mesh. Figure 26 shows the results of a surface deviation analysis between the original CAD model of the blade and the scan of a physical blade with known eroded-edge damage.

Figure 26 - Left: control mesh (top), sharp edges eroded over time (bottom). Right: deviation analysis highlighting edge deviations

Figure 26 shows almost all edges to be out of tolerance, highlighted in light blue, which corresponds to a surface deviation of the mesh of between 0.001” and 0.0039”.

As the deviation analysis shows, the 3D surface reconstruction algorithm provides complete surface coverage of the part, making a more complete surface inspection possible. Because the deviation analysis compares the original CAD surface of the blade with the scanned mesh surface, the resolution of the results is limited only by the resolution of the scan data, which has far greater point density than CMM-measured data. Further, since the 3D scanner is a non-contact device that measures everything within its field of view, there is no need to decide in advance which features to measure: the scanner captures the surface regardless, whereas a CMM path must be specifically programmed to measure each feature. The results of this first analysis show that the CMM path shown in Figure 8 would have detected defects only on the profiles of the blade and would have missed the edge defects on the root and tip unless those paths were specifically programmed. If repair is possible, the deviation analysis can be used to identify all regions that need to be repaired.

Turbine blade tips in particular often become eroded over time. They are repaired by cutting the tip, welding a thick bead of metal onto the cut portion, and then grinding the weld back to the original contour. This requires numerous measurements on a CMM: first to identify the extent of the damage on the tip, then to measure the weld bead thickness, and then to repeatedly check the grinding results until the tip is within tolerance. CMM programming becomes a very arduous task for this type of repair, in contrast to using a 3D scanner for the same repair. To test the scanner’s ability to pick up damaged tips, a blade with a known eroded tip was scanned with the test setup (Figures 11 and 12). The individual scans were imported, aligned, and merged in Rapidform XOV2 software and compared against the original CAD model. The results are shown in Figure 27.


Figure 27 - Left: control mesh (top), tip eroded over time (bottom). Middle: deviation analysis highlighting tip deviations. Right: cross-section deviation (top), location of cross section (bottom)

The results clearly show the mesh surface to have a negative deviation of up to 0.0124” in some areas, where negative deviation indicates missing material. Since a model of the damaged blade is now available, it can be used to program the welding path as well as the grinding path for automated blade tip repair, because the 3D scanner output is a mesh describing the whole surface rather than a set of discrete measurement points.

Lastly, a blade with known damaged edges was scanned with the test setup (Figures 11 and 12) in order to test the scanner’s ability to pick up small defects on the surface of the blade. The individual scans were imported, aligned, and merged in Rapidform XOV2 software for comparison against the original CAD model. The results are shown in Figure 28.


Figure 28 - Left: control mesh (top), damaged edges (bottom). Right: deviation analysis highlighting edge damages

Figure 28 shows almost all measured defects to be positive deviations; in other words, the physical part has too much material in the areas highlighted in yellow. Since the deviations are positive and both the model of the physical blade and the original design data are available, these surface deviations can be exported to CAM or machine path programming software to define a finishing path that brings the out-of-tolerance areas of the blade back into tolerance. This would greatly reduce the need for hand finishing, as well as the cutting and measuring iterations needed to verify that the part is within tolerance, since all cutting paths are programmed from the deviation analysis.


4. Conclusions

This report demonstrates that the 3D image processing algorithms can accurately reconstruct the surface of a physical part. Since the Gray code method eliminates projector limitations and fully utilizes camera resolution, calculating one 3D point for each illuminated pixel of the image sensor, the level of detail available for measurement can be increased or decreased by changing the camera resolution or the field of view. Large parts can be scanned with both high-resolution and low-resolution scanner setups such as the one shown in Figure 11. As Figures 24 to 26 show, 3D scanners provide complete surface definitions, which can then be compared against ideal part designs. Depending on the field of view and the camera and projector resolution, the smallest details can be captured digitally for further analysis. With multi-axis automation and target alignment, parts can be scanned completely automatically without the need for precise fixturing or complicated machine paths. Optical methods capture millions of data points far faster than conventional and scanning CMMs. The 3D imaging and alignment algorithms have been shown to be robust, accurate, and repeatable for use as part of an optical measuring device, and the test data demonstrate the system's ability to quickly scan and detect surface defects for quality engineering and repair purposes.

Because it relies on software calibration as opposed to hardware alignment, the 3D scanning system has great flexibility in the parts it can scan. By simply changing the distance between the cameras, the camera angles, and/or the lenses, large parts can be scanned at low resolution for overall geometry capture in one shot, or at high resolution via multiple scans to capture small surface defects. The calibration algorithm has been shown to have high accuracy at small fields of view and lower accuracy at larger fields of view, due to the parallax distance between the camera and the projection device.
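The trade-off between field of view and accuracy can be made concrete with the standard first-order error model for rectified stereo triangulation, Δz ≈ z²·Δd / (f·b). This model is not derived in this report. Here z is the working distance, f the focal length in pixels, b the baseline (parallax distance), and Δd the correspondence error in pixels. The sketch below evaluates it with hypothetical values. For a fixed lens and baseline, doubling the working distance to enlarge the field of view quadruples the depth uncertainty.

```python
def depth_uncertainty(z, focal_px, baseline, disparity_err_px):
    """First-order stereo depth uncertainty: dz = z**2 * d_err / (f * b).

    z and baseline share a length unit (e.g. mm); focal_px and disparity_err_px are in pixels.
    """
    return z * z * disparity_err_px / (focal_px * baseline)


# Hypothetical values: 2400 px focal length, 150 mm baseline, 0.1 px matching error.
for z in (300.0, 600.0):
    print(z, "mm ->", depth_uncertainty(z, 2400.0, 150.0, 0.1), "mm")
```

Increasing the baseline reduces the uncertainty, at the cost of more occlusion between the two viewpoints.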


5. References / Bibliography

Camera Calibration Toolbox for Matlab: http://www.vision.caltech.edu/bouguetj/calib_doc/

Open Computer Vision Library (OpenCV): http://sourceforge.net/projects/opencvlibrary/

Open source mesh processing software (MeshLab): http://meshlab.sourceforge.net/

D. Lanman and G. Taubin, Build Your Own 3D Scanner: 3D Photography for Beginners, SIGGRAPH 2009 Course Notes, Brown University, August 5, 2009. (located at http://rh.edu/~mannan/EP/References/)

P. Cignoni, C. Rocchini, and R. Scopigno, Metro: Measuring Error on Simplified Surfaces, Istituto per l’Elaborazione dell’Informazione, Consiglio Nazionale delle Ricerche, Pisa, Italy, 1998. (located at http://rh.edu/~mannan/EP/References/)

Z. Zhang, Flexible Camera Calibration by Viewing a Plane from Unknown Orientations, Microsoft Research, 1999. (located at http://rh.edu/~mannan/EP/References/)


6. Appendices

6.1. Appendix A: Physical Accuracy Test


6.2 Appendix B: Extrinsic Calibration Code

function [Tc_ext,Rc_ext,H_ext] = ...
   computeExtrinsic(fc,cc,kc,alpha_c,image_name,format_image,nX,nY,dX,dY)

% COMPUTEEXTRINSIC Determines extrinsic calibration parameters.
%
%    COMPUTEEXTRINSIC will find the extrinsic calibration, given
%    a set of user-selection features and the camera's intrinsic
%    calibration. Note that this function is a modified version
%    of EXTRINSIC_COMPUTATION from the Camera Calibration Toolbox.
%
%    Inputs:
%       fc:           camera focal length
%       cc:           principal point coordinates
%       kc:           distortion coefficients
%       alpha_c:      skew coefficient
%       image_name:   image filename for feature extraction (without extension)
%       format_image: image format (extension)
%       nX = 1:       number of rectangles along x-axis
%       nY = 1:       number of rectangles along y-axis
%       dX:           length along x-axis (mm) (between checkerboard rectangles)
%       dY:           length along y-axis (mm) (between checkerboard rectangles)
%
%    Outputs:
%       Tc_ext: 3D translation vector for the calibration grid
%       Rc_ext: 3D rotation matrix for the calibration grid
%       H_ext:  homography between points on grid and points on image plane
%
% Douglas Lanman and Gabriel Taubin
% Brown University
% 18 May 2009

% Load the calibration image (assuming format is supported).
ima_name = [image_name '.' format_image];
if format_image(1) == 'p',
   if format_image(2) == 'p',
      frame = double(loadppm(ima_name));
   else
      frame = double(loadpgm(ima_name));
   end
else
   if format_image(1) == 'r',
      frame = readras(ima_name);
   else
      frame = double(imread(ima_name));
   end
end
if size(frame,3) > 1,
   I = frame(:,:,2);   % color image: use the green channel
else
   I = frame;          % grayscale image: use it directly
end
frame = uint8(frame);

% Prompt user.
disp('(click on the four extreme corners of the rectangular pattern, ');
disp('starting on the bottom-left and proceeding counterclockwise.)');

% Extract grid corners.
% Note: Assumes a fixed window size (modify as needed).
wintx = 9; winty = 9;
[x_ext,X_ext,ind_orig,ind_x,ind_y] = ...
   extractGrid(I,frame,wintx,winty,fc,cc,kc,nX,nY,dX,dY);

% Computation of the extrinsic parameters attached to the grid.
[omc_ext,Tc_ext,Rc_ext,H_ext] = ...
   compute_extrinsic(x_ext,X_ext,fc,cc,kc,alpha_c);

% Calculate extrinsic coordinate system.
Basis = X_ext(:,[ind_orig ind_x ind_orig ind_y ind_orig ]);
VX = Basis(:,2)-Basis(:,1);
VY = Basis(:,4)-Basis(:,1);
nX = norm(VX);
nY = norm(VY);
VZ = min(nX,nY)*cross(VX/nX,VY/nY);
Basis = [Basis VZ];
[x_basis] = project_points2(Basis,omc_ext,Tc_ext,fc,cc,kc,alpha_c);
dxpos = (x_basis(:,2)+x_basis(:,1))/2;
dypos = (x_basis(:,4)+x_basis(:,3))/2;
dzpos = (x_basis(:,6)+x_basis(:,5))/2;

% Display extrinsic coordinate system.
figure(1); set(gcf,'Name','Extrinsic Coordinate System'); clf;
imagesc(frame,[0 255]); axis image; title('Extrinsic Coordinate System');
hold on
   plot(x_ext(1,:)+1,x_ext(2,:)+1,'r+');
   h = text(x_ext(1,ind_orig)-25,x_ext(2,ind_orig)-25,'O');
   set(h,'Color','g','FontSize',14);
   h2 = text(dxpos(1)+1,dxpos(2)-30,'X');
   set(h2,'Color','g','FontSize',14);
   h3 = text(dypos(1)-30,dypos(2)+1,'Y');
   set(h3,'Color','g','FontSize',14);
   h4 = text(dzpos(1)-10,dzpos(2)-20,'Z');
   set(h4,'Color','g','FontSize',14);
   plot(x_basis(1,:)+1,x_basis(2,:)+1,'g-','linewidth',2);
hold off


6.3 Appendix C: Gray Code

function [P,offset] = graycode(width,height)

% % Define height and width of screen.
% width  = 1024;
% height = 768;

% Generate Gray codes for vertical and horizontal stripe patterns.
% See: http://en.wikipedia.org/wiki/Gray_code
P = cell(2,1);
offset = zeros(2,1);
for j = 1:2

   % Allocate storage for Gray code stripe pattern.
   if j == 1
      N = ceil(log2(width));
      offset(j) = floor((2^N-width)/2);
   else
      N = ceil(log2(height));
      offset(j) = floor((2^N-height)/2);
   end
   P{j} = zeros(height,width,N,'uint8');

   % Generate N-bit Gray code sequence.
   B = zeros(2^N,N,'uint8');
   B_char = dec2bin(0:2^N-1);
   for i = 1:N
      B(:,i) = str2num(B_char(:,i));
   end
   G = zeros(2^N,N,'uint8');
   G(:,1) = B(:,1);
   for i = 2:N
      G(:,i) = xor(B(:,i-1),B(:,i));
   end

   % Store Gray code stripe pattern.
   if j == 1
      for i = 1:N
         P{j}(:,:,i) = repmat(G((1:width)+offset(j),i)',height,1);
      end
   else
      for i = 1:N
         P{j}(:,:,i) = repmat(G((1:height)+offset(j),i),1,width);
      end
   end

end

% % Decode Gray code stripe patterns.
% C = gray2dec(P{1})-offset(1);
% R = gray2dec(P{2})-offset(2);
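The decoding step appears above only as commented-out calls to `gray2dec`, which is not listed in this appendix. The Python sketch below mirrors the MATLAB generator (binary-reflected Gray code, most significant bit first, stripes centred with the same `offset`) and adds a decoder. The function names are hypothetical equivalents, not the original helper.

```python
import math


def gray_code_bits(value, n_bits):
    """Binary-reflected Gray code of `value`, most significant bit first (as in G above)."""
    g = value ^ (value >> 1)
    return [(g >> (n_bits - 1 - k)) & 1 for k in range(n_bits)]


def decode_gray_bits(bits):
    """Recover the integer from Gray-code bits (MSB first): b_k = b_(k-1) XOR g_k."""
    value, b = 0, 0
    for g in bits:
        b ^= g
        value = (value << 1) | b
    return value


def column_patterns(width):
    """Bit planes for vertical stripes, centred with the same offset as the MATLAB code."""
    n = math.ceil(math.log2(width))
    offset = (2 ** n - width) // 2
    codes = [gray_code_bits(col + offset, n) for col in range(width)]
    # planes[k][col] is the k-th projected stripe image (1 = white) at projector column col.
    planes = [[codes[col][k] for col in range(width)] for k in range(n)]
    return planes, offset


# A camera pixel that observed the bit sequence of projector column 437 decodes back to 437.
planes, offset = column_patterns(1000)
observed = [plane[437] for plane in planes]
print(decode_gray_bits(observed) - offset)
```

Adjacent Gray codes differ in exactly one bit, so a pixel on a stripe boundary is misread by at most one column. This is the usual reason for preferring Gray code over plain binary patterns.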


6.4 Appendix D: Scanner Calibration Code

% Reset Matlab environment.
clear; clc;

% Add required subdirectories.
addpath('../../utilities');

% Select projector calibration images for inclusion.
useProjImages = 1:20; %[1:5,7:9];

% Define projector calibration plane properties.
% Note: These lengths are used to determine the extrinsic calibration.
%       That is, the transformation(s) from reference plane(s) -> camera.
proj_cal_nX = 1;     % number of rectangles along x-axis
proj_cal_nY = 1;     % number of rectangles along y-axis
proj_cal_dX = 406.4; % length along x-axis (mm) (between checkerboard rectangles) [406.4]
proj_cal_dY = 335.0; % length along y-axis (mm) (between checkerboard rectangles) [330.2]

%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%
% Part I: Calibration of the camera(s) independently of the projector.

% Load all checkerboard corners for calibration images (for each camera).
% Note: Must select same corners in first images so that world coordinate
%       system is consistent across all cameras.
camIndex = 1;
while exist(['./cam/v',int2str(camIndex)],'dir')

   % Load checkerboard corners for calibration images.
   load(['./cam/v',int2str(camIndex),'/calib_data']);

   % Display the first camera calibration image.
   I_cam1 = imread(['./cam/v',int2str(camIndex),'/001.bmp']);
   figure(1); image(I_cam1); axis image;
   hold on;
      plot(x_1(1,:)+1,x_1(2,:)+1,'r+');
   hold off;
   title('First camera calibration image: 001.bmp');
   drawnow;

   % Configure the camera model (must be done before calibration).
   est_dist     = [1;1;1;1;0]; % select distortion model
   est_alpha    = 0;           % no skew (skew coefficient not estimated)
   center_optim = 1;           % estimate the principal point

   % Run the main calibration routine.
   go_calib_optim;

   % Save the camera calibration results as camera_results.mat.
   saving_calib;
   copyfile('Calib_Results.mat',['./calib_results/camera_results_v',...
      int2str(camIndex),'.mat']);
   delete('Calib_Results.mat'); delete('Calib_Results.m');

   % Save the camera calibration results (in "side" variables).
   fc_cam{camIndex}       = fc;
   cc_cam{camIndex}       = cc;
   kc_cam{camIndex}       = kc;
   alpha_c_cam{camIndex}  = alpha_c;
   Rc_1_cam{camIndex}     = Rc_1;
   Tc_1_cam{camIndex}     = Tc_1;
   x_1_cam{camIndex}      = x_1;
   X_1_cam{camIndex}      = X_1;
   nx_cam{camIndex}       = nx;
   ny_cam{camIndex}       = ny;
   n_sq_x_1_cam{camIndex} = n_sq_x_1;
   n_sq_y_1_cam{camIndex} = n_sq_y_1;
   dX_cam{camIndex}       = dX;
   dY_cam{camIndex}       = dY;

   % Increment camera index.
   camIndex = camIndex+1;

end

%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%
% Part II: Projector-Camera Color Calibration (if necessary).

% Obtain color calibration matrix for each camera.
camIndex = 1; bounds = {}; projValue = {}; inv_color_calib_cam = {};
while exist(['./cam/v',int2str(camIndex)],'dir')

   % Load all color calibration images for this camera.
   C = {};
   for j = 1:3
      C{j} = imread(['./cam/v',int2str(camIndex),'/c',int2str(j),'.bmp']);
      projValue{camIndex,j} = load(['./cam/v',int2str(camIndex),'/projValue.mat']);
   end

   % Select/save bounding box (if none exists).
   color_bounds = {};
   if ~exist(['./cam/v',int2str(camIndex),'/color_bounds.mat'],'file')
      for j = 1:3
         figure(10); clf;
         imagesc(C{j}); axis image off;
         title('Select the bounding box for color calibration');
         drawnow;
         [x,y] = ginput(2);
         color_bounds{j} = round([min(x) max(x) min(y) max(y)]);
         hold on;
            plot(color_bounds{j}([1 2 2 1 1]),color_bounds{j}([3 3 4 4 3]),'r.-');
         hold off;
         pause(0.3);
      end
      bounds{camIndex} = color_bounds;
      save(['./cam/v',int2str(camIndex),'/color_bounds.mat'],'color_bounds');
   else
      load(['./cam/v',int2str(camIndex),'/color_bounds.mat']);
      bounds{camIndex} = color_bounds;
   end

   % Extract projector-camera color correspondences.
   cam_colors = []; proj_colors = [];
   for j = 1:3
      crop_C = C{j}(bounds{camIndex}{j}(3):bounds{camIndex}{j}(4),...
                    bounds{camIndex}{j}(1):bounds{camIndex}{j}(2),:);
      imagesc(crop_C);
      axis image; pause;
      crop_C = double(reshape(crop_C,[],3,1)');
      crop_P = zeros(size(crop_C));
      cam_colors = [cam_colors crop_C];
      if j == 1
         crop_P(1,:) = projValue{camIndex,j}.projValue;
      elseif j == 2
         crop_P(2,:) = projValue{camIndex,j}.projValue;
      else
         crop_P(3,:) = projValue{camIndex,j}.projValue;
      end
      proj_colors = [proj_colors crop_P];
   end
   inv_color_calib_cam{camIndex} = inv(cam_colors/proj_colors);
   figure(11);
   plot([cam_colors(:,1:1e3:end); proj_colors(:,1:1e3:end)]'); grid on;
   figure(12); drawnow;
   plot([inv_color_calib_cam{camIndex}*cam_colors(:,1:1e3:end); ...
         proj_colors(:,1:1e3:end)]'); grid on; drawnow;

   % Increment camera index.
   camIndex = camIndex+1;

end

%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%

%%%%

% Part III: Calibration of the projector (using first camera

calibration).

% Load all projected checkerboard corners for calibration images.

% Note: Calibrate reference plane positions (if not available).

load('./proj/v1/calib_data');

if ~exist('./proj/v1/ext_calib_data.mat','file')

load('./proj/v1/calib_data','n_ima')

56

for i = 1:n_ima

frame = ['./proj/v1/',num2str(i,'%0.3d')];

[Tc,Rc,H] = ...

computeExtrinsic(fc_cam{1},cc_cam{1},kc_cam{1},alpha_c_cam{1},frame,'bm

p',...

proj_cal_nX,proj_cal_nY,proj_cal_dX,proj_cal_dY);

eval(['Rc_',int2str(i),' = Rc']);

eval(['Tc_',int2str(i),' = Tc']);

if i == 1

save('./proj/v1/ext_calib_data.mat',['Rc_',int2str(i)]);

else

save('./proj/v1/ext_calib_data.mat',['Rc_',int2str(i)],'append');

end

save('./proj/v1/ext_calib_data.mat',['Tc_',int2str(i)],'append');

end

else

load('./proj/v1/ext_calib_data');

end

for i = 1:n_ima

eval(['xproj_',int2str(i),' = x_',int2str(i),';']);

end

% Synthesize projector image coordinates.

% Note: This assumes that 7x7 checkerboard blocks were selected in

%

each image (i.e., row-by-col). In addition, this assumes that

%

selection was counter-clockwise starting in the lower left.

%

Finally, we assume that each square is 64x64 pixels.

%

Checkerboard begins 1x1 square "in" and x_i is the ith

%

points from left-to-right and top-to-bottom.

I = 255*ones(768,1024,'uint8');

C = uint8(255*(checkerboard(64,4,4) > 0.5));

I((1:size(C,1))+(2*64)*1,(1:size(C,2))+(2*64)*2) = C;

x = (0:64:64*6)+(2*64)*2+64+0.5-1;

y = (0:64:64*6)+(2*64)*1+64+0.5-1;

[X,Y] = meshgrid(x,y);

X = X'; Y = Y';

for i = 1:n_ima

eval(['x_proj_',int2str(i),' = [X(:) Y(:)]'';']);

end

% Display the first projector calibration image.

I_proj1 = imread('./proj/v1/001.bmp');

figure(2); image(I_proj1);

hold on;

plot(xproj_1(1,:)+1,xproj_1(2,:)+1,'r+');

hold off;

title('First projector calibration image: 001.bmp');

drawnow;

% Display points on projector image.

figure(3); image(I); colormap(gray);

hold on;

plot(x_proj_1(1,:)+1,x_proj_1(2,:)+1,'r.');


hold off;

title('Points overlaid on projector image');

drawnow;

% Display the 3D configuration using the extrinsic calibration.
X_proj     = []; % 3D coordinates of the points
x_proj     = []; % 2D coordinates of the points in the projector image
n_ima_proj = []; % index of the calibration image each point came from

for i = useProjImages

   % Transform calibration marker positions to camera coordinate system.
   X = [0 proj_cal_dX proj_cal_dX 0; 0 0 proj_cal_dY proj_cal_dY; 0 0 0 0];
   X = eval(['Rc_',int2str(i),'*X + repmat(Tc_',int2str(i),',1,size(X,2));']);

   % Recover 3D points corresponding to projector calibration pattern.
   % Note: Uses ray-plane intersection to solve for positions.
   V = eval(['pixel2ray(xproj_',int2str(i),',fc_cam{1},cc_cam{1},kc_cam{1},alpha_c_cam{1});']);
   C = zeros(3,size(V,2));
   calPlane = fitPlane(X(1,:),X(2,:),X(3,:));
   X = intersectLineWithPlane(C,V,calPlane');

   % Concatenate projector and world correspondences.
   eval(['xproj = xproj_',num2str(i),';']);
   eval(['X_proj_',num2str(i),' = X;']);
   eval(['X_proj = [X_proj X_proj_',num2str(i),'];']);
   eval(['x_proj = [x_proj x_proj_',num2str(i),'];']);
   n_ima_proj = [n_ima_proj i*ones(1,size(xproj,2))];

end
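Inside the loop above, the 3D position of each projected corner is recovered by casting a ray from the camera center through the detected corner (pixel2ray) and intersecting it with the plane of the reference board (fitPlane, intersectLineWithPlane). The Python sketch below illustrates that geometry under simplifying assumptions: the plane is built from three non-collinear board corners with a cross product (the internals of fitPlane are not shown in this document), it is written as a*x + b*y + c*z + d = 0, and all rays start at the camera center, as C = zeros(3,...) implies. The helper names are hypothetical stand-ins, not the original functions.

```python
def cross(u, v):
    """Cross product of two 3-vectors."""
    return (u[1] * v[2] - u[2] * v[1],
            u[2] * v[0] - u[0] * v[2],
            u[0] * v[1] - u[1] * v[0])


def dot(u, v):
    return sum(a * b for a, b in zip(u, v))


def plane_from_points(p0, p1, p2):
    """Plane (a, b, c, d) with a*x + b*y + c*z + d = 0 through three points."""
    e1 = tuple(b - a for a, b in zip(p0, p1))
    e2 = tuple(b - a for a, b in zip(p0, p2))
    n = cross(e1, e2)
    return n + (-dot(n, p0),)


def intersect_ray_plane(origin, direction, plane):
    """Return origin + t*direction lying on the plane, or None if parallel."""
    n, d = plane[:3], plane[3]
    denom = dot(n, direction)
    if abs(denom) < 1e-12:
        return None
    t = -(dot(n, origin) + d) / denom
    return tuple(o + t * v for o, v in zip(origin, direction))
```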

% Configure the projector model (must be done before calibration).
nx           = 1024;        % number of projector columns
ny           = 768;         % number of projector rows
no_image     = 1;           % only use pre-determined corners
n_ima        = 1;           % default behavior for a single image
est_dist     = [1;1;0;0;0]; % select distortion model
est_alpha    = 0;           % no skew
center_optim = 1;           % estimate the principal point
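These flags are consumed by go_calib_optim from Jean-Yves Bouguet's Camera Calibration Toolbox for Matlab, which models lens distortion with the five-element vector kc: kc(1), kc(2) and kc(5) are radial terms (r^2, r^4, r^6) and kc(3), kc(4) are tangential terms. With est_dist = [1;1;0;0;0] only the first two radial coefficients are estimated, and est_alpha = 0 keeps the skew coefficient alpha_c at zero. The Python sketch below evaluates that forward projection for one point expressed in the device's own frame; it is an illustrative re-implementation of the documented model, not code taken from the toolbox, and the function name is hypothetical.

```python
def project_point(X, fc, cc, kc=(0.0, 0.0, 0.0, 0.0, 0.0), alpha_c=0.0):
    """Project a 3D point (device frame) to pixel coordinates using a pinhole
    model with radial (kc[0], kc[1], kc[4]) and tangential (kc[2], kc[3])
    distortion, focal lengths fc, principal point cc and skew alpha_c."""
    # Normalized (pinhole) image coordinates.
    x = X[0] / X[2]
    y = X[1] / X[2]
    r2 = x * x + y * y
    radial = 1 + kc[0] * r2 + kc[1] * r2 ** 2 + kc[4] * r2 ** 3
    # Tangential distortion terms.
    dx = 2 * kc[2] * x * y + kc[3] * (r2 + 2 * x * x)
    dy = kc[2] * (r2 + 2 * y * y) + 2 * kc[3] * x * y
    xd = radial * x + dx
    yd = radial * y + dy
    # Apply intrinsics; the skew term vanishes when alpha_c = 0.
    return (fc[0] * (xd + alpha_c * yd) + cc[0], fc[1] * yd + cc[1])
```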

% Run the main projector calibration routine.

X_1 = X_proj;

x_1 = x_proj;

go_calib_optim;

% Save the projector calibration results as projector_results.mat.

saving_calib;

copyfile('Calib_Results.mat','./calib_results/projector_results.mat');

delete('Calib_Results.mat'); delete('Calib_Results.m');

% Save the projector calibration results (in "side" variables).

fc_proj       = fc;
cc_proj       = cc;
kc_proj       = kc;
alpha_c_proj  = alpha_c;
Rc_1_proj     = Rc_1;
Tc_1_proj     = Tc_1;
x_1_proj      = eval(['x_proj_',num2str(useProjImages(1)),';']);
X_1_proj      = eval(['X_proj_',num2str(useProjImages(1)),';']);
nx_proj       = nx;
ny_proj       = ny;
n_sq_x_1_proj = eval(['n_sq_x_',num2str(useProjImages(1)),';']);
n_sq_y_1_proj = eval(['n_sq_y_',num2str(useProjImages(1)),';']);
dX_proj       = dX;
dY_proj       = dY;

%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%

%%%%

% Part IV: Display calibration results.

% Plot camera calibration results (in camera coordinate system).

procamCalibDisplay;

for i = 1
   hold on;
   % Camera calibration board, transformed into camera coordinates (red).
   Y  = Rc_1_cam{i}*X_1_cam{i} + Tc_1_cam{i}*ones(1,size(X_1_cam{i},2));
   Yx = zeros(n_sq_x_1_cam{i}+1,n_sq_y_1_cam{i}+1);
   Yy = zeros(n_sq_x_1_cam{i}+1,n_sq_y_1_cam{i}+1);
   Yz = zeros(n_sq_x_1_cam{i}+1,n_sq_y_1_cam{i}+1);
   Yx(:) = Y(1,:); Yy(:) = Y(2,:); Yz(:) = Y(3,:);
   mesh(Yx,Yz,-Yy,'EdgeColor','r','LineWidth',1);
   % Projector calibration points, already in camera coordinates (green).
   Y  = X_1_proj;
   Yx = zeros(n_sq_x_1_proj+1,n_sq_y_1_proj+1);
   Yy = zeros(n_sq_x_1_proj+1,n_sq_y_1_proj+1);
   Yz = zeros(n_sq_x_1_proj+1,n_sq_y_1_proj+1);
   Yx(:) = Y(1,:); Yy(:) = Y(2,:); Yz(:) = Y(3,:);
   mesh(Yx,Yz,-Yy,'EdgeColor','g','LineWidth',1);
   hold off;
end

title('Projector/Camera Calibration Results');

xlabel('X_c'); ylabel('Z_c'); zlabel('Y_c');

view(50,20); grid on; rotate3d on;

axis equal tight vis3d; drawnow;
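In the display loop above, statements such as Yx(:) = Y(1,:) pour a 1-by-N row of corner coordinates into an (n_sq_x+1)-by-(n_sq_y+1) matrix in MATLAB's column-major order, so consecutive corners fill the first column before moving to the next. Anyone porting the plotting step needs to preserve that ordering; the small Python sketch below reproduces it. The function name is illustrative.

```python
def fill_column_major(values, n_rows, n_cols):
    """Return an n_rows x n_cols grid (list of rows) filled column by column,
    as MATLAB does for A(:) = v."""
    if len(values) != n_rows * n_cols:
        raise ValueError("need exactly n_rows * n_cols values")
    grid = [[None] * n_cols for _ in range(n_rows)]
    for k, v in enumerate(values):
        # Linear index k -> (row, col) with the row index varying fastest.
        grid[k % n_rows][k // n_rows] = v
    return grid


if __name__ == "__main__":
    print(fill_column_major([1, 2, 3, 4, 5, 6], 2, 3))
```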

%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%
% Part V: Save calibration results.
% Determine mapping from projector pixels to optical rays.
% Note: Ideally, the projected images should be pre-warped to
%       ensure that projected planes are actually planar.

c = 1:nx_proj;

r = 1:ny_proj;

[C,R] = meshgrid(c,r);

np = pixel2ray([C(:) R(:)]',fc_proj,cc_proj,kc_proj,alpha_c_proj);

np = Rc_1_proj'*(np - Tc_1_proj*ones(1,size(np,2)));

Np = zeros([ny_proj nx_proj 3]);

Np(:,:,1) = reshape(np(1,:),ny_proj,nx_proj);

Np(:,:,2) = reshape(np(2,:),ny_proj,nx_proj);


Np(:,:,3) = reshape(np(3,:),ny_proj,nx_proj);

P = -Rc_1_proj'*Tc_1_proj;
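Rc_1_proj and Tc_1_proj are estimated from correspondences whose 3D points (X_proj) are expressed in the first camera's frame, so they map a camera-frame point X_c into the projector frame as X_p = R*X_c + T. Inverting gives X_c = R^T*(X_p - T); the projector's optical center (X_p = 0) is therefore P = -R^T*T, as computed above, and np applies the same inverse mapping to the ray points returned by pixel2ray for every projector pixel. A minimal Python sketch of that inversion follows; the function names are hypothetical.

```python
def transpose(R):
    return [[R[c][r] for c in range(3)] for r in range(3)]


def matvec(R, v):
    return tuple(sum(R[r][k] * v[k] for k in range(3)) for r in range(3))


def projector_to_camera(R, T, X_p):
    """Map a projector-frame point to the camera frame: X_c = R^T (X_p - T)."""
    return matvec(transpose(R), tuple(a - b for a, b in zip(X_p, T)))


def projector_center(R, T):
    """Projector optical center in camera coordinates: P = -R^T T."""
    return projector_to_camera(R, T, (0.0, 0.0, 0.0))
```

Mapping P forward with R*P + T should return the zero vector, which is a convenient sanity check on any ported calibration.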

% Estimate plane equations describing every projector column.

% Note: Resulting coefficient vector is in camera coordinates.

wPlaneCol = zeros(nx_proj,4);

for i = 1:nx_proj

wPlaneCol(i,:) = ...

fitPlane([P(1); Np(:,i,1)],[P(2); Np(:,i,2)],[P(3); Np(:,i,3)]);

%figure(4); hold on;

%plot3(Np(:,i,1),Np(:,i,3),-Np(:,i,2),'r-');

%drawnow;

end

% Estimate plane equations describing every projector row.

% Note: Resulting coefficient vector is in camera coordinates.

wPlaneRow = zeros(ny_proj,4);

for i = 1:ny_proj

wPlaneRow(i,:) = ...

fitPlane([P(1) Np(i,:,1)],[P(2) Np(i,:,2)],[P(3) Np(i,:,3)]);

%figure(4); hold on;

%plot3(Np(i,:,1),Np(i,:,3),-Np(i,:,2),'g-');

%drawnow;

end
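Each projector column (and each row) sweeps out a plane of light that contains the projector center P; the two loops above fit that plane through P and all of the column's (or row's) ray points. If the rays were exactly coplanar (no residual lens distortion), the plane could be obtained from P and just two ray points with a cross product, which is what the hypothetical Python sketch below does; it also reports the largest point-to-plane distance of any further points as a quick planarity check. Fitting all points, as the script does with fitPlane, is the more robust choice when distortion bends a column slightly.

```python
import math


def plane_through_center(P, A, B):
    """Plane (a, b, c, d) with unit normal through the center P and points A, B."""
    u = [a - p for a, p in zip(A, P)]
    v = [b - p for b, p in zip(B, P)]
    n = [u[1] * v[2] - u[2] * v[1],
         u[2] * v[0] - u[0] * v[2],
         u[0] * v[1] - u[1] * v[0]]
    length = math.sqrt(sum(c * c for c in n))
    if length == 0.0:
        raise ValueError("P, A and B are collinear")
    n = [c / length for c in n]
    d = -sum(c * p for c, p in zip(n, P))
    return (n[0], n[1], n[2], d)


def max_plane_residual(plane, points):
    """Largest absolute distance from the points to a unit-normal plane."""
    a, b, c, d = plane
    return max(abs(a * x + b * y + c * z + d) for x, y, z in points)
```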

% Pre-compute optical rays for each camera pixel.

for i = 1:length(fc_cam)

c = 1:nx_cam{i};

r = 1:ny_cam{i};

[C,R] = meshgrid(c,r);

Nc{i} = Rc_1_cam{1}*Rc_1_cam{i}'*pixel2ray([C(:) R(:)]'-1,fc_cam{i},cc_cam{i},kc_cam{i},alpha_c_cam{i});

Oc{i} = Rc_1_cam{1}*Rc_1_cam{i}'*(-Tc_1_cam{i}) + Tc_1_cam{1};

end
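The loop above expresses every camera's pixel rays in the first camera's coordinate frame: the ray origin is camera i's center, Oc = R_1*R_i^T*(-T_i) + T_1, and each ray direction is rotated by R_1*R_i^T. The Python sketch below does the same for a single pixel under two stated simplifications: lens distortion is ignored (the pixel-to-ray step is a plain inversion of the pinhole model, not a substitute for pixel2ray), and pixel coordinates are zero-based, following the Camera Calibration Toolbox convention. The function names are hypothetical.

```python
import math


def transpose(R):
    return [[R[c][r] for c in range(3)] for r in range(3)]


def matmul(A, B):
    return [[sum(A[r][k] * B[k][c] for k in range(3)) for c in range(3)] for r in range(3)]


def matvec(R, v):
    return [sum(R[r][k] * v[k] for k in range(3)) for r in range(3)]


def pixel_ray_in_cam1(u, v, fc, cc, alpha_c, R_i, T_i, R_1, T_1):
    """Return (origin, unit direction) of the ray through zero-based pixel
    (u, v) of camera i, expressed in camera 1's frame. Distortion is ignored."""
    # Invert the undistorted pinhole model to get normalized coordinates.
    y_n = (v - cc[1]) / fc[1]
    x_n = (u - cc[0]) / fc[0] - alpha_c * y_n
    length = math.sqrt(x_n * x_n + y_n * y_n + 1.0)
    d_i = [x_n / length, y_n / length, 1.0 / length]
    M = matmul(R_1, transpose(R_i))  # R_1 * R_i'
    direction = matvec(M, d_i)
    origin = [a + b for a, b in zip(matvec(M, [-t for t in T_i]), T_1)]
    return origin, direction
```

For the first camera itself (i = 1) the origin reduces to the zero vector and the direction is unchanged, which matches Oc{1} and Nc{1} in the MATLAB loop.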

% Save the projector/camera calibration results as calib_cam_proj.mat.

save_command = ...

['save ./calib_results/calib_cam_proj ',...

'fc_cam cc_cam kc_cam alpha_c_cam Rc_1_cam Tc_1_cam ',...

'x_1_cam X_1_cam nx_cam ny_cam n_sq_x_1_cam n_sq_y_1_cam ',...

'dX_cam dY_cam ',...

'inv_color_calib_cam ',...

'fc_proj cc_proj kc_proj alpha_c_proj Rc_1_proj Tc_1_proj ',...

'x_1_proj X_1_proj nx_proj ny_proj n_sq_x_1_proj n_sq_y_1_proj '...

'dX_proj dY_proj '...

'Oc Nc wPlaneCol wPlaneRow'];

eval(save_command);

(Complete Matlab scripts available at: http://rh.edu/~mannan/EP/References/)
