Numerical Solutions 1st Order Differential Equations

Numerical Methods

Introduction

1) Basic Theory – Difference Equations

2) Euler’s Method – Tangent Line Approximation

3) Taylor Series Methods (with example)

4) Runge-Kutta Methods – 2nd Order Derivation

5) Runge-Kutta – Higher Order Expressions

6) 4th Order Runge-Kutta – 2nd Order Differential Equations

7) Examples
a) MathCAD: 4th Order Runge-Kutta / 2nd Order Diff. Eq.
b) C++: Euler’s Method
c) C++: Taylor Series Method
d) C++: 4th Order Runge-Kutta

8) References

Authored by: Jonathan W. Gibson

Basic Theory - Difference Equations

When Numerical Methods Apply

1) Other methods are not applicable

2) Aids in physical understanding when the exact solution is difficult to interpret

3) The exact solution is extremely difficult to obtain

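Difference Equation (definition of slope / equation of a line)

$$m = \frac{y_1 - y_0}{x_1 - x_0} \qquad\Longrightarrow\qquad y - y_0 = m\,(x - x_0)$$

Definition of the Derivative

$$\frac{dy}{dx} = \lim_{\Delta x \to 0} \frac{y(x + \Delta x) - y(x)}{\Delta x} \qquad\text{or}\qquad \frac{dy}{dx} = \lim_{h \to 0} \frac{y(x + h) - y(x)}{h}$$

[Figure: Graphical Representation]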
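The difference quotient above can be evaluated directly for a small but finite h. The following is a minimal sketch, not part of the original slides; the function name `forward_difference` is hypothetical.

```cpp
#include <cmath>
#include <functional>

// Forward difference quotient (y(x + h) - y(x)) / h.
// This is the expression inside the limit, evaluated at a finite h,
// so it only approximates dy/dx; the error shrinks roughly in proportion to h.
double forward_difference(const std::function<double(double)>& y,
                          double x, double h) {
    return (y(x + h) - y(x)) / h;
}
```

For y(x) = x² at x = 1 with h = 0.001 this gives a value close to 2.001, against the exact derivative 2.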

Euler’s Method – Tangent Line Approximation

Consider the general first-order initial value problem,

$$\frac{dy}{dx} = f(x, y), \qquad y(x_0) = y_0$$

We wish to approximate the result at a finite number of points separated by Δx = h, thus,

$$\begin{aligned}
y(x_0) &= y_0 \\
y(x_0 + \Delta x) &= y(x_0 + h) = y(x_1) = y_1 \\
y(x_0 + 2\Delta x) &= y(x_0 + 2h) = y(x_2) = y_2 \\
&\;\;\vdots \\
y(x_0 + n\Delta x) &= y(x_0 + nh) = y(x_n) = y_n
\end{aligned}$$

Integrating the original expression yields

$$\int_{y_0}^{y_1} dy = \int_{x_0}^{x_1} f(x, y)\,dx$$

If we approximate the function f(x,y) by its value at $(x_0, y_0)$, it becomes a number and may be pulled out of the integral. The result is,

$$y_1 - y_0 \approx f(x_0, y_0) \int_{x_0}^{x_1} dx = f(x_0, y_0)\,(x_1 - x_0)$$

Identifying the quantity $(x_1 - x_0) = h$, we may write this as,

$$y_1 = y_0 + h\,f(x_0, y_0)$$

Repeating the procedure on the interval $x_n \le x \le x_{n+1}$ gives us Euler’s Method.

$$y_{n+1} = y_n + h\,f(x_n, y_n)$$


Graphical Representation of Euler’s Method

Errors in Numerical Methods

Truncation Error: Also called discretization error, this occurs because we are approximating the slope with constant values over discrete intervals. In reality, the slope is a smooth function that varies continuously. The larger the step size, the more prevalent truncation error becomes.

Round Off Error: This arises from the finite number of digits carried in each step, and it may or may not be large. The error in any one step may be positive or negative, so it tends to cancel itself out. The smaller the step size, the more steps are needed and the more prevalent round off error becomes.

For a given differential equation and working precision there is an optimum step size that balances truncation and round off errors.
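The truncation side of this trade-off can be observed numerically. The sketch below is an assumption-laden illustration rather than anything from the slides: it uses the test problem dy/dx = y, y(0) = 1 (exact solution e^x) and a hypothetical helper `euler_error_at_one`. In double precision the round off contribution is too small to see at these step counts, so only the truncation behaviour shows.

```cpp
#include <cmath>

// Global error at x = 1 of Euler's Method applied to dy/dx = y, y(0) = 1.
// `steps` is the number of equal steps taken across the interval [0, 1].
double euler_error_at_one(int steps) {
    const double h = 1.0 / steps;
    double y = 1.0;
    for (int i = 0; i < steps; ++i) {
        y += h * y;  // Euler update with f(x, y) = y
    }
    return std::fabs(std::exp(1.0) - y);
}
```

Doubling the number of steps from 64 to 128 roughly halves the error, which is the behaviour expected of a first order method.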

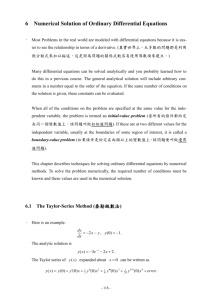

Taylor Series Methods

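Taylor Series Approximation

$$f(x_0 + h) = \sum_{n=0}^{\infty} \frac{h^n}{n!}\, f^{(n)}(x_0)$$

Expanded Taylor Series

$$f(x_0 + h) = f(x_0) + h f'(x_0) + \frac{h^2}{2} f''(x_0) + \cdots + \frac{h^n}{n!} f^{(n)}(x_0) + \cdots$$

Writing this as an iterated equation for y, we have

$$y_{n+1} = y_n + h f(x_n, y_n) + \frac{h^2}{2} f'(x_n, y_n) + \cdots + \frac{h^k}{k!} f^{(k-1)}(x_n, y_n)$$

where $f(x_n, y_n) = \dfrac{dy}{dx}$ evaluated at $(x_n, y_n)$, primes on f denote total derivatives with respect to x, and k is the order of the approximation.

Comparing this to Euler’s Method

$$y_{n+1} = y_n + h f(x_n, y_n)$$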

We see that Euler’s Method is just a Taylor Approximation of order 1, where the order is defined as the highest order derivative used in the approximation.

Truncation Errors in Taylor Series Methods

The truncation error may be crudely estimated by the first unused term in the Taylor Series approximation.

Euler’s Method Local Truncation Error: $O(h^2)$

Example Using Taylor Series Method

Consider the initial value problem,

$$\frac{dx}{dt} = 1 + x^2 + t^3 \qquad\text{and}\qquad x(0) = 0$$

Through repeated differentiation with respect to t, we find

$$\begin{aligned}
x' &= 1 + x^2 + t^3 \\
x'' &= 2xx' + 3t^2 \\
x''' &= 2xx'' + 2(x')^2 + 6t \\
x'''' &= 2xx''' + 6x'x'' + 6
\end{aligned}$$

Writing $x_1 = x$, $x_2 = x'$, $x_3 = x''$, $x_4 = x'''$, and $x_5 = x''''$, these become

$$\begin{aligned}
x_2 &= 1 + x_1^2 + t^3 & x_1(0) &= 0,\quad x_2(0) = 1 \\
x_3 &= 2x_1x_2 + 3t^2 & x_3(0) &= 0 \\
x_4 &= 2x_1x_3 + 2x_2^2 + 6t & x_4(0) &= 2 \\
x_5 &= 2x_1x_4 + 6x_2x_3 + 6 & x_5(0) &= 6
\end{aligned}$$

Using the Taylor Series Approximation, we have

$$x(t+h) = x(t) + h\,x'(t) + \frac{h^2}{2}x''(t) + \frac{h^3}{6}x'''(t) + \frac{h^4}{24}x''''(t) + O(h^5)$$

In iterated form this becomes

$$x_{n+1} = x_n + h x_2 + \frac{h^2}{2}x_3 + \frac{h^3}{6}x_4 + \frac{h^4}{24}x_5 + O(h^5)$$

or

$$x_{n+1} = x_n + h\left[x_2 + \frac{h}{2}x_3 + \frac{h^2}{6}x_4 + \frac{h^3}{24}x_5\right] + O(h^5)$$

At each step, $x_2$, $x_3$, $x_4$, and $x_5$ must be recalculated using the expressions above. This method has the disadvantage that these derivatives must be analytically determined before allowing a computer to perform the iteration process.

Runge-Kutta Method – 2nd Order Derivation

In the Taylor Series Method we were required to analytically determine the various derivatives by implicit differentiation. This can be problematic when the function is rather involved. Runge-Kutta Methods avoid this difficulty.

Consider the initial value problem,

$$\frac{dx}{dt} = f(t, x) \qquad\text{and}\qquad x(0) = x_0$$

A form is adopted having two function evaluations of the form

$$k_1 = f(t, x) = \frac{dx}{dt} \qquad\text{and}\qquad k_2 = f(t + ah,\; x + bhk_1)$$

By feeding $k_1$ into the expression for $k_2$, we effectively eliminate the need to analytically calculate derivatives of f(t,x).

A linear combination of $k_1$ and $k_2$ is added to the value of x at t to obtain the value at t+h. We may write,

$$x(t+h) = x(t) + h\,(c_1 k_1 + c_2 k_2)$$

$$x(t+h) = x(t) + h\,\bigl[c_1 f(t, x) + c_2 f(t + ah,\; x + bhf)\bigr] \tag{1}$$

The goal is to find $c_1$, $c_2$, a, and b so that equation (1) conforms with the Taylor Series Approximation of Order 2, equation (2).

$$x(t+h) = x(t) + h\,x'(t) + \frac{h^2}{2}x''(t) + O(h^3) \tag{2}$$

We may rewrite $k_2$ using the 2-Variable Taylor Series Approximation

$$f(t + h,\; x + k) = \sum_{n=0}^{\infty} \frac{1}{n!}\left(h\frac{\partial}{\partial t} + k\frac{\partial}{\partial x}\right)^{n} f(t, x)$$

Expanding and keeping the terms needed for 2nd Order accuracy, we have

$$k_2 = f(t + ah,\; x + bhf) \approx f + ah f_t + bh f_x f$$

We now substitute this expression for $k_2$ into equation (1) to yield

$$x(t+h) = x(t) + hc_1 f + hc_2\,\bigl[f + ah f_t + bh f_x f\bigr]$$

Rearranging, we have

$$x(t+h) = x(t) + (c_1 + c_2)\,hf + bh^2 c_2 f_x f + ah^2 c_2 f_t \tag{3}$$

Using the following relation,

$$x'' = \frac{d f(t, x)}{dt} = \frac{\partial f}{\partial x}\frac{dx}{dt} + \frac{\partial f}{\partial t} = f_x f + f_t$$

We may rewrite equation (2)

$$x(t+h) = x(t) + hf + \frac{h^2}{2} f_x f + \frac{h^2}{2} f_t \tag{4}$$

Comparing equations (3) and (4), we see that we must have the following relations,

$$c_1 + c_2 = 1 \qquad bc_2 = \frac{1}{2} \qquad ac_2 = \frac{1}{2}$$

A convenient solution (but not the only solution) to this set of equations is

$$c_1 = c_2 = \frac{1}{2} \qquad\text{and}\qquad a = b = 1$$

Inserting these into equation (1) yields the 2nd Order Runge-Kutta Method

$$x(t+h) = x(t) + \frac{h}{2}\,(k_1 + k_2)$$

where

$$k_1 = f(t, x) \qquad\text{and}\qquad k_2 = f(t + h,\; x + hk_1)$$

Runge-Kutta Higher Order Expressions

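2nd Order Runge-Kutta

$$x(t+h) = x(t) + \frac{h}{2}\,(k_1 + k_2)$$

$$k_1 = f(t, x) \qquad k_2 = f(t + h,\; x + hk_1)$$

3rd Order Runge-Kutta

$$x(t+h) = x(t) + \frac{h}{9}\,(2k_1 + 3k_2 + 4k_3)$$

$$k_1 = f(t, x) \qquad k_2 = f\!\left(t + \tfrac{h}{2},\; x + \tfrac{h}{2}k_1\right) \qquad k_3 = f\!\left(t + \tfrac{3h}{4},\; x + \tfrac{3h}{4}k_2\right)$$

4th Order Runge-Kutta

$$x(t+h) = x(t) + \frac{h}{6}\,(k_1 + 2k_2 + 2k_3 + k_4)$$

$$k_1 = f(t, x) \qquad k_2 = f\!\left(t + \tfrac{h}{2},\; x + \tfrac{h}{2}k_1\right) \qquad k_3 = f\!\left(t + \tfrac{h}{2},\; x + \tfrac{h}{2}k_2\right) \qquad k_4 = f(t + h,\; x + hk_3)$$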

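Runge-Kutta – 2nd Order Differential Equations

Any nth order differential equation may be decomposed into n 1st order differential equations. Consider the following 2nd order differential equation for a damped and driven harmonic oscillator.

$$\ddot{x} + \frac{b}{m}\dot{x} + \frac{\kappa}{m}x = \frac{F}{m}\cos(\omega t)$$

We may decompose this into 2 first order differential equations in the following way,

$$\dot{x} = v$$

$$\dot{v} = \frac{F}{m}\cos(\omega t) - \frac{b}{m}v - \frac{\kappa}{m}x \equiv G(t, x, v)$$

Stated without proof, the 4th Order Runge-Kutta Method is

$$x(t+h) = x(t) + \frac{h}{6}\,(k_1 + 2k_2 + 2k_3 + k_4)$$

$$v(t+h) = v(t) + \frac{h}{6}\,(K_1 + 2K_2 + 2K_3 + K_4)$$

where the lower-case $k_i$ are the slopes of x (which are simply velocities, since $\dot{x} = v$) and the upper-case $K_i$ are the slopes of v:

$$\begin{aligned}
k_1 &= v & K_1 &= G(t,\; x,\; v) \\
k_2 &= v + \tfrac{h}{2}K_1 & K_2 &= G\!\left(t + \tfrac{h}{2},\; x + \tfrac{h}{2}k_1,\; v + \tfrac{h}{2}K_1\right) \\
k_3 &= v + \tfrac{h}{2}K_2 & K_3 &= G\!\left(t + \tfrac{h}{2},\; x + \tfrac{h}{2}k_2,\; v + \tfrac{h}{2}K_2\right) \\
k_4 &= v + hK_3 & K_4 &= G(t + h,\; x + hk_3,\; v + hK_3)
\end{aligned}$$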

4th Order Runge-Kutta Routine for MathCAD: Small-Angle, Damped and Forced Pendulum

The Equation of Motion is given by:

$$\theta'' + \frac{b}{m l^2}\,\theta' + \frac{g}{l}\,\theta = \frac{T}{m l^2}\cos(W t)$$

(where the primes indicate time derivatives)

Input the system parameters (mass of bob, length of rod, damping coefficient, driving torque and frequency, etc.)

$$m = 2 \qquad b = 50 \qquad l = 10 \qquad T = 5 \qquad g = 9.81 \qquad W = 5$$

Define the coefficients based on these parameters

$$B = \frac{b}{m l^2} \qquad K = \frac{g}{l} \qquad A = \frac{T}{m l^2}$$

The Runge-Kutta algorithm

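Define the initial conditions and the time interval

$$\theta_{init} = \frac{5\pi}{180} \qquad \omega_{init} = 0 \qquad startt = 0 \qquad endt = 25$$

Define the angular acceleration as a function of t, θ, and ω

$$\alpha(t, \theta, \omega) = A\cos(W t) - K\theta - B\omega$$

Define the number of iterations n, the step size $h = (endt - startt)/n$, the counting index j, and the indexed variables, starting from $t_0 = startt$.

The heart of the 4th Order Runge-Kutta algorithm is defined below

[MathCAD program block: one 4th Order Runge-Kutta step of size h for θ and ω, built from evaluations of α at t, t + 0.5h, and t + h]

Last, place the output values from each step in a matrix

$$\theta_0 = \theta_{init} \qquad \omega_0 = \omega_{init}$$

with $\theta_{j+1}$ and $\omega_{j+1}$ obtained from $(t_j, \theta_j, \omega_j, h)$ by the routines rk and rK, and

$$t_{j+1} = t_j + h$$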

Plot the data. Time series and Phase Diagrams are shown below.

[Figures: time series plotted against $t_j$ from 0 to 25, and phase diagrams]
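For readers without MathCAD, the same pendulum calculation can be sketched in C++. This is not a transcription of the worksheet. It assumes the coefficients B = b/(m l²), K = g/l and A = T/(m l²) as read from the equation of motion, takes the number of steps n as an argument because the worksheet value is not legible here, and uses the hypothetical names `PendulumResult` and `simulate_pendulum`.

```cpp
#include <algorithm>
#include <cmath>

struct PendulumResult {
    double theta_end;      // angle at t = endt (radians)
    double omega_end;      // angular velocity at t = endt
    double max_abs_theta;  // largest |theta| seen during the run
};

// Integrate theta'' + B*theta' + K*theta = A*cos(W*t) from t = 0 to 25
// with n 4th Order Runge-Kutta steps (n is an assumed parameter).
PendulumResult simulate_pendulum(int n) {
    const double pi = std::acos(-1.0);
    const double m = 2.0, b = 50.0, l = 10.0, T = 5.0, g = 9.81, W = 5.0;
    const double B = b / (m * l * l);  // assumed form of the damping coefficient
    const double K = g / l;
    const double A = T / (m * l * l);  // assumed form of the driving coefficient
    const double startt = 0.0, endt = 25.0;
    const double h = (endt - startt) / n;

    auto alpha = [=](double t, double theta, double omega) {
        return A * std::cos(W * t) - K * theta - B * omega;
    };

    double theta = 5.0 * pi / 180.0;  // initial angle of 5 degrees
    double omega = 0.0;
    double max_abs_theta = std::fabs(theta);

    for (int j = 0; j < n; ++j) {
        const double t = startt + j * h;
        const double k1 = omega;
        const double K1 = alpha(t, theta, omega);
        const double k2 = omega + 0.5 * h * K1;
        const double K2 = alpha(t + 0.5 * h, theta + 0.5 * h * k1, omega + 0.5 * h * K1);
        const double k3 = omega + 0.5 * h * K2;
        const double K3 = alpha(t + 0.5 * h, theta + 0.5 * h * k2, omega + 0.5 * h * K2);
        const double k4 = omega + h * K3;
        const double K4 = alpha(t + h, theta + h * k3, omega + h * K3);
        theta += h / 6.0 * (k1 + 2.0 * k2 + 2.0 * k3 + k4);
        omega += h / 6.0 * (K1 + 2.0 * K2 + 2.0 * K3 + K4);
        max_abs_theta = std::max(max_abs_theta, std::fabs(theta));
    }
    return {theta, omega, max_abs_theta};
}
```

Calling `simulate_pendulum(2500)` corresponds to a step size of 0.01. Under these assumptions the angle stays below 0.1 rad in magnitude and the initial swing has largely decayed by t = 25.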

// Euler Method f(x,t) = 1 + x^2 + t^3 (C++)

#include <iostream>
#include <cmath>

using namespace std; // introduces namespace std

int main()
{
    cout.setf(ios_base::fixed, ios_base::floatfield);
    float h = 0.0078125f;              // step size 1/128
    float x = 0.0f;                    // initial condition x(0) = 0
    float t = 0.0f;
    int n = static_cast<int>(1 / h);   // 128 steps over interval 0 to 1
    for (int i = 1; i <= n; i++)
    {
        double dxdt = 1 + x*x + t*t*t; // slope at the current point
        x = x + (h*dxdt);              // Euler update
        t = t + h;
        cout << x << "\n";
    }
    return 0;
}

// Result: x(1) = 1.957787

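// Taylor Method f(x,t) = 1 + x^2 + t^3 (C++)

#include <iostream>
#include <cmath>

using namespace std; // introduces namespace std

int main()
{
    cout.setf(ios_base::fixed, ios_base::floatfield);
    double h = 0.0078125;
    double x = 0.0;
    double t = 0.0;
    int n = static_cast<int>(1 / h);   // 128 steps over interval 0 to 1
    double x2 = 1.0;                   // derivative values at t = 0
    double x3 = 0.0;
    double x4 = 2.0;
    double x5 = 6.0;
    for (int i = 1; i <= n; i++)
    {
        x2 = 1.0 + (x*x) + (t*t*t);
        x3 = (2*x*x2) + (3*t*t);
        x4 = (2*x*x3) + (2*x2*x2) + (6*t);
        x5 = (2*x*x4) + (6*x2*x3) + 6;
        // 1.0/6.0 and 1.0/24.0 are written as floating-point divisions;
        // the integer forms (1/6) and (1/24) evaluate to 0 in C++.
        x = x + (h*x2) + (0.5*h*h*x3) + ((1.0/6.0)*h*h*h*x4) + ((1.0/24.0)*h*h*h*h*x5);
        t = t + h;
        cout << x << "\n";
    }
    return 0;
}

// Result reported with the original listing: x(1) = 1.991327
// (that listing used (1/6) and (1/24), so this code may print a slightly different value)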

// Runge-Kutta Method f(x,t) = 1 + x^2 + t^3 (C++)

#include <iostream>
#include <cmath>

using namespace std; // introduces namespace std

int main()
{
    cout.setf(ios_base::fixed, ios_base::floatfield);
    double h = 0.0078125;
    double x = 0.0;
    double t = 0.0;
    int n = static_cast<int>(1 / h);   // 128 steps over interval 0 to 1
    double k1;
    double k2;
    double k3;
    double k4;
    for (int i = 1; i <= n; i++)
    {
        k1 = 1 + (x*x) + (t*t*t);
        k2 = 1 + (x+(0.5*h*k1))*(x+(0.5*h*k1)) + ((t+0.5*h)*(t+0.5*h)*(t+0.5*h));
        k3 = 1 + (x+(0.5*h*k2))*(x+(0.5*h*k2)) + ((t+0.5*h)*(t+0.5*h)*(t+0.5*h));
        // k4 uses k3 in both factors, as the 4th Order formula requires
        k4 = 1 + (x+(h*k3))*(x+(h*k3)) + ((t+h)*(t+h)*(t+h));
        x = x + ((h/6)*(k1+(2*k2)+(2*k3)+k4));
        t = t + h;
        cout << x << "\n";
    }
    return 0;
}

// Result reported with the original listing: x(1) = 1.991742
// (that listing used k1 in the second factor of k4, so the last digits may differ)


Bibliography/References

“Elementary Differential Equations and Boundary Value Problems”; 4th Ed.; Boyce/DiPrima

“Multivariable Calculus, Linear Algebra, and Differential Equations”; 3rd Ed.; Grossman

“Numerical Mathematics and Computing”; 2nd Ed.; Cheney/Kincaid

“Classical Dynamics of Particles and Systems”; 4th Ed.; Marion/Thornton

“The Numerical Analysis of Ordinary Differential Equations”; J. C. Butcher

“Calculus”; 9th Ed.; Thomas/Finney