Revised Chapter 10 in Specifying and Diagnostically Testing Econometric Models (Edition 3)

© by Houston H. Stokes 29 November 2011. All rights reserved. Preliminary Draft

Chapter 10

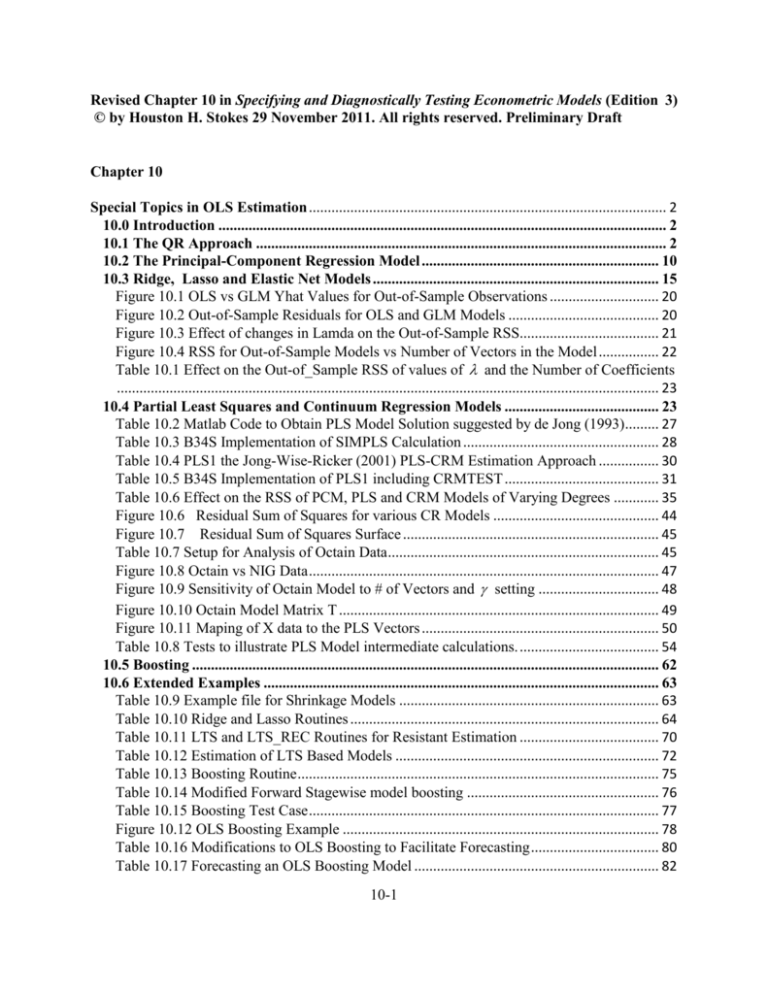

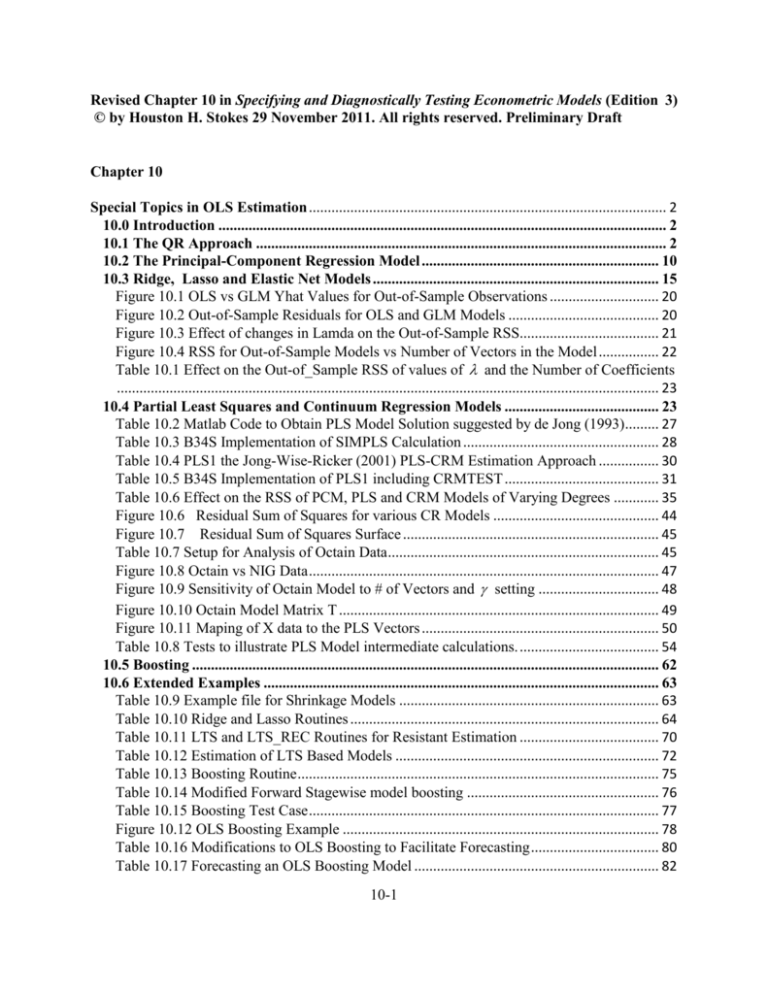

Special Topics in OLS Estimation ............................................................................................... 2

10.0 Introduction ....................................................................................................................... 2

10.1 The QR Approach ............................................................................................................. 2

10.2 The Principal-Component Regression Model ............................................................... 10

10.3 Ridge, Lasso and Elastic Net Models ............................................................................ 15

Figure 10.1 OLS vs GLM Yhat Values for Out-of-Sample Observations ............................. 20

Figure 10.2 Out-of-Sample Residuals for OLS and GLM Models ........................................ 20

Figure 10.3 Effect of changes in Lamda on the Out-of-Sample RSS..................................... 21

Figure 10.4 RSS for Out-of-Sample Models vs Number of Vectors in the Model ................ 22

Table 10.1 Effect on the Out-of_Sample RSS of values of and the Number of Coefficients

................................................................................................................................................ 23

10.4 Partial Least Squares and Continuum Regression Models ......................................... 23

Table 10.2 Matlab Code to Obtain PLS Model Solution suggested by de Jong (1993) ......... 27

Table 10.3 B34S Implementation of SIMPLS Calculation .................................................... 28

Table 10.4 PLS1 the Jong-Wise-Ricker (2001) PLS-CRM Estimation Approach ................ 30

Table 10.5 B34S Implementation of PLS1 including CRMTEST ......................................... 31

Table 10.6 Effect on the RSS of PCM, PLS and CRM Models of Varying Degrees ............ 35

Figure 10.6 Residual Sum of Squares for various CR Models ............................................ 44

Figure 10.7 Residual Sum of Squares Surface .................................................................... 45

Table 10.7 Setup for Analysis of Octain Data ........................................................................ 45

Figure 10.8 Octain vs NIG Data ............................................................................................. 47

Figure 10.9 Sensitivity of Octain Model to # of Vectors and setting ................................ 48

Figure 10.10 Octain Model Matrix T ..................................................................................... 49

Figure 10.11 Maping of X data to the PLS Vectors ............................................................... 50

Table 10.8 Tests to illustrate PLS Model intermediate calculations. ..................................... 54

10.5 Boosting ............................................................................................................................ 62

10.6 Extended Examples ......................................................................................................... 63

Table 10.9 Example file for Shrinkage Models ..................................................................... 63

Table 10.10 Ridge and Lasso Routines .................................................................................. 64

Table 10.11 LTS and LTS_REC Routines for Resistant Estimation ..................................... 70

Table 10.12 Estimation of LTS Based Models ...................................................................... 72

Table 10.13 Boosting Routine ................................................................................................ 75

Table 10.14 Modified Forward Stagewise model boosting ................................................... 76

Table 10.15 Boosting Test Case ............................................................................................. 77

Figure 10.12 OLS Boosting Example .................................................................................... 78

Table 10.16 Modifications to OLS Boosting to Facilitate Forecasting .................................. 80

Table 10.17 Forecasting an OLS Boosting Model ................................................................. 82

10-1

10-2

Chapter 10

Table 10.18 Wampler Test Problem ...................................................................................... 86

Table 10.19 Longley Test Data .............................................................................................. 89

Table 10.20 Results from the Longley Total Equation .......................................................... 92

Table 10.21 Correlation between the Error term and Right-Hand-Side................................. 93

Table 10.22 Effect of Data Precision on Accuracy using the Grunfeld Data ......................... 94

Table 10.23 Effect of Data Precision on Accuracy using Gas Data ....................................... 99

Table 10.24 Matlab Commands to Replicate Accuracy Results Obtained with B34S ........ 105

Table 10.25. Correlation of the Residual and RHS Variables using Matlab 2006b............. 106

Table 10.26. Correlation of the Residual and RHS Variables using Matlab 2007a ............. 106

10.7 Conclusion ...................................................................................................................... 106

Special Topics in OLS Estimation

10.0 Introduction

The qr command in B34S allows calculation of a high accuracy solution to the OLS

regression problem and is discussed in section 10.1. Using this command the principal component

regression can be calculated to give further insight into possible rank problems and is discussed in

section 10.2 where the singular value decomposition is introduced. The matrix command provides

substantially more capability and can be used to provide additional insight. After an initial survey of

the theory, a number of examples are presented. Further examples on this important topic are

presented in section 16.7 which involves the matrix command and illustrates accuracy gains due to

data precision and alternative methods of calculation. Section 10.3 discusses the ridge, lasso, least

trimmed squares and elastic net models which can be used to shrink the variables on the right hand

side and/or remove outliers. These procedures are related to the principle component regression

model which can also be used to shrink the model. Section 10.4 is devoted to the partial least squares

(PLS) procedure which is shown to be a contromise between the continuum of models between OLS

to principle component regression (PCR) in terms of skrinkage. The continuum power regression

model is shown to be a more general setup incolving OLS, PLS and PCR as special cases. Section

10.5 is devoted to boosting while extended examples for many of the procedures discussed are given

in section 10.6.

10.1 The QR Approach

Interest in the QR approach to estimation was stimulated by Longley's (1967) seminal paper

on computer accuracy.1 Equation (4.1-6) indicated how the OLS calculation of ˆ , which is usually

1 The literature on the QR approach is vast. No attempt will be made to provide a summary in the

limited space available in this book. Good references include Longley (1984), Dongarra et al (1979)

and Strang (1976). Chapter 4 of this book provides a discussion of this approach applied to systems

estimation. This chapter provides some examples and a brief intuitive discussion based in part on

Dongarra et al (1979), which documents the LINPACK routines DQRDC and DQRSL. These

routines are used for all QR calculations.

Special Topics in OLS Estimation

10-3

calculated as ( X ' X )1 X ' y , could be more accurately calculated as R 1Q1' y , where the T by K

matrix X was initially factored as a product of the T by T orthogonal matrix Q and the upper

triangular K by K Cholesky matrix R:

R

Q' X

0

(10.1-1)

Matrix Q is usually partitioned as Q Q1 Q2 so that

X Q1R

(10.1-2)

where Q1 is a T by K matrix that is usually kept in factored form as

Q H1H 2 ,

(10.1-3)

, Hk

where H i is the Householder transformation (Strang 1976, 279). Q2 is a T by (T K ) matrix.

In terms of the QR factorization the parameter values and the fitted values are,

ˆ ( X ' X )1 X ' y ( R ' Q1'Q1R)1 R ' Q1' y ( R ' R)1 R ' Q1' y R 1Q1' y

(10.1-4)

and

X ˆ Q1RR1Q1' y Q1Q1' y .

(10.1-5)

Davidson and MacKinnon (1993, 30) write X ˆ Q1Rˆ Q1ˆ . In view of (10-1-5) Q1ˆ Q1Q1' y and

ˆ Q1' y

(10.1-6)

from which the fitted values (10.1-5) can be calculated. Assuming T is large relative to K, then Q1 is

T by K and Q2 is T by (T-K) matrix, which is substantially larger. Usually the "economy" QR is

made where only Q1 is calculated. In many cases even Q1 is not needed, since only R is needed to

( X ' X ) 1 ( R ' Q 'Q R ) 1 R 1 ( R 1 )' from which it is possible to get the standard errors and ˆ using

1

1

a more accurate estimate of R than obtained from the Cholesky factorization of X ' X . An example

of the gain will be shown below. Dongarra et al (1979, sec. 9.1) show that given X is of rank K, then

the matrix

10-4

Chapter 10

PX Q1Q1'

(10.1-7)

is the orthogonal projection onto the column space of X and

PX Q2 Q2'

(10.1-8)

is the projection onto the orthogonal complement of X in view of equation (10.1-1). The residuals of

an OLS model are constrained to be orthogonal or have zero correlation with the right-hand sides of

the equation. Using a regression the mapping of X to Q1 will be illustrated below. Given that the

residuals are orthogonal to the left hand side, if follows from (10.1-2)

eˆ PX y Q2 Q2' y

(10.1-9)

The code listed next illustrates these relationships using the Theil (1971) textile dataset studied in

Chapter 2. First the X matrix is built and the economy QR factorization performed. The columns of

Q1 are shown to have Euclidian length unity and be orthogonal (Q1'Q1 I ) . Column 1 of Q1 is

shown to be a scalar transformation of X (-0.1205241588547691) . OLS is used to show the second

and third columns of Q1 are linear transformations of col 1 and to 2 and 1-3 of X respectively.

b34sexec options ginclude('b34sdata.mac') member(theil);

b34srun;

b34sexec matrix;

call loaddata;

call echooff;

x=mfam(catcol(log10ri log10rpt,constant));

r=qr(x,q);

call print(x,q,r);

i=nocols(q);

test=array(i:);

do k=1,nocols(q);

test(k)=sumsq(q(,k));

enddo;

call print(test);

col_1_q=q(,1);

col_2_q=q(,2);

col_3_q=q(,3);

col_1_x=x(,1);

col_2_x=x(,2);

col_3_x=x(,3);

s= afam(col_1_q)/afam(col_1_x);

call print('Scale factor for x(,1) => q(,1) ',S:);

call print('Second col of q linear transform of x Orthog. to Col 1':);

call olsq(col_2_q, col_1_x, col_2_x :noint :print);

call olsq(col_1_q, col_2_q :noint :print);

call olsq(col_3_q, col_1_x, col_2_x col_3_x :noint :print);

call print('Test of orthogonal Condition',transpose(q)*q);

b34srun;

Special Topics in OLS Estimation

10-5

Edited output

B34S 8.10Z

(D:M:Y)

Variable

TIME

CT

RI

RPT

LOG10CT

LOG10RI

LOG10RPT

CONSTANT

21/ 8/06 (H:M:S) 14: 3:40

Label

1

2

3

4

5

6

7

8

DATA STEP

PAGE

# Cases

Mean

17

17

17

17

17

17

17

17

1931.00

134.506

102.982

76.3118

2.12214

2.01222

1.87258

1.00000

YEAR

CONSUMPTION OF TEXTILES

REAL INCOME

RELATIVE PRICE OF TEXTILES

LOG10(CONSUMPTION OF TEXTILES)

LOG10(REAL INCOME)

LOG10(RELATIVE PRICE OF TEXTILES)

Number of observations in data file

17

Current missing variable code

1.000000000000000E+31

Data begins on (D:M:Y) 1: 1:1923 ends 1: 1:1939.

Frequency is

B34S(r) Matrix Command. d/m/y 21/ 8/06. h:m:s 14: 3:40.

=>

CALL LOADDATA$

=>

CALL ECHOOFF$

X

= Matrix of

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

Q

1

1.98543

1.99167

2.00000

2.02078

2.02078

2.03941

2.04454

2.05038

2.03862

2.02243

2.00732

1.97955

1.98408

1.98945

2.01030

2.00689

2.01620

R

1

-0.239292

-0.240044

-0.241048

-0.243552

-0.243552

-0.245799

-0.246416

-0.247120

-0.245703

-0.243751

-0.241931

-0.238583

-0.239129

-0.239777

-0.242290

-0.241879

-0.243000

TEST

1

-8.29709

0.00000

0.00000

= Array

1.00000

17

3

by

3

1.00000

3

elements

elements

3

-0.306449

-0.236160

-0.142443

0.920310E-01

0.923998E-01

0.301762

0.359337

0.425744

0.294795

0.113219

-0.561974E-01

-0.368744

-0.317944

-0.255997

-0.224782E-01

-0.607658E-01

0.436465E-01

by

2

-7.72132

-0.375029

0.00000

of

3

3

1.00000

1.00000

1.00000

1.00000

1.00000

1.00000

1.00000

1.00000

1.00000

1.00000

1.00000

1.00000

1.00000

1.00000

1.00000

1.00000

1.00000

2

-0.417759

-0.391904

-0.370073

-0.204205

-0.150576

-0.146393

-0.145235

-0.264908E-01

0.137147

0.177336

0.214822

0.123456

0.114489

0.347728

0.252841

0.248275

0.236848

= Matrix of

1

2

3

by

2

2.00432

2.00043

2.00000

1.95713

1.93702

1.95279

1.95713

1.91803

1.84572

1.81558

1.78746

1.79588

1.80346

1.72099

1.77597

1.77452

1.78746

= Matrix of

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

17

3

elements

3

-4.12287

0.304283E-03

-0.442434E-01

elements

1.00000

Scale factor for x(,1) => q(,1)

-0.1205241588547691

Second col of q linear transform of x Orthog. to Col 1

Std. Dev.

5.04975

23.5773

5.30097

16.8662

0.791131E-01

0.222587E-01

0.961571E-01

0.00000

1

Variance

25.5000

555.891

28.1003

284.470

0.625889E-02

0.495451E-03

0.924619E-02

0.00000

1

Maximum

Minimum

1939.00

168.000

112.300

101.000

2.22531

2.05038

2.00432

1.00000

1923.00

99.0000

95.4000

52.6000

1.99564

1.97955

1.72099

1.00000

10-6

Chapter 10

Note perfect fit of columns 1 and 2 of X mapping to column 2 of Q1 .

Ordinary Least Squares Estimation

Dependent variable

Centered R**2

Adjusted R**2

Residual Sum of Squares

Residual Variance

Standard Error

Total Sum of Squares

Log Likelihood

Mean of the Dependent Variable

Std. Error of Dependent Variable

Sum Absolute Residuals

1/Condition XPX

Maximum Absolute Residual

Number of Observations

Variable

COL_1_X

COL_2_X

Lag

0

0

Coefficient

2.4814260

-2.6664627

COL_2_Q

1.000000000000000

1.000000000000000

2.033498177717717E-26

1.355665451811812E-27

3.681936245797599E-14

0.9999999945536433

502.7987247346952

1.789899162686381E-05

0.2499999993192054

5.317413176442187E-13

5.668199248898641E-04

5.750955267558311E-14

17

SE

0.91472228E-13

0.98177458E-13

t

0.27127643E+14

-0.27159623E+14

Here column 1 of Q1 is orthogonal to col 2 of Q1 .

Ordinary Least Squares Estimation

Dependent variable

Centered R**2

Adjusted R**2

Residual Sum of Squares

Residual Variance

Standard Error

Total Sum of Squares

Log Likelihood

Mean of the Dependent Variable

Std. Error of Dependent Variable

Sum Absolute Residuals

1/Condition XPX

Maximum Absolute Residual

Number of Observations

Variable

COL_2_Q

Lag

0

COL_1_Q

-8683.232715995069

-8683.232715995069

0.9999999999999993

6.249999999999996E-02

0.2499999999999999

1.151512209199723E-04

-3.964164000159326E-02

-0.2425216604976431

2.682713422544098E-03

4.122868228459933

0.9999999999999999

0.2471202954562588

17

Coefficient

-0.27061686E-15

SE

0.25000000

t

-0.10824674E-14

Column 3 of Q1 is shown to be a linear transform of X.

Ordinary Least Squares Estimation

Dependent variable

Centered R**2

Adjusted R**2

Residual Sum of Squares

Residual Variance

Standard Error

Total Sum of Squares

Log Likelihood

Mean of the Dependent Variable

Std. Error of Dependent Variable

Sum Absolute Residuals

1/Condition XPX

Maximum Absolute Residual

Number of Observations

Variable

COL_1_X

COL_2_X

COL_3_X

Lag

0

0

0

COL_3_Q

1.000000000000000

1.000000000000000

1.675885395409665E-23

1.197060996721189E-24

1.094102827307008E-12

0.9998848542254362

445.7268402712293

-2.602552757714062E-03

0.2499856063638260

1.409913852334910E-11

9.474231625417810E-06

1.713684749660160E-12

17

Coefficient

11.248239

-0.18338531E-01

-22.602243

SE

0.12603327E-10

0.29174534E-11

0.24729178E-10

t

0.89248168E+12

-0.62858008E+10

-0.91399087E+12

Test of orthogonal Condition

Matrix of

1

2

3

1

1.00000

-0.270617E-15

-0.213371E-15

3

by

3

2

-0.270617E-15

1.00000

-0.100614E-15

elements

3

-0.213371E-15

-0.100614E-15

1.00000

B34S Matrix Command Ending. Last Command reached.

Space available in allocator

Number variables used

Number temp variables used

8856736, peak space used

63, peak number used

51, # user temp clean

3330

63

0

Special Topics in OLS Estimation

10-7

The next example illustrates the gains of the QR approach. The gas data example studied in Chapter

7 and 8 is used to show accuracy gains obtained by use of the QR factorization on a simple problem.

Later in this chapter a more difficult problem will be shown.

/$ Illustrates OLS Capability under Matrix Command

b34sexec options ginclude('b34sdata.mac')

member(gas); b34srun;

b34sexec matrix;

call load(qr_small :staging);

call echooff;

call loaddata;

nlag=6;

call olsq(gasout gasin{0 to nlag} gasout{1 to nlag} :print :savex);

call print(ccf(%y,

%res));

call print(ccf(%yhat,%res));

/;

/; Large QR used for illustration. Q2 is large!!!

/; error2 equation uses q1 => uses the economy qr. This is the

/; best way to proceed

/;

r=qr(%x,q:);

call qr_small(%x,q,r,q1,q2,r_small);

/; call print(q,q1,q2);

yhat =q1*transpose(q1)*%y;

error =q2*transpose(q2)*%y;

error2=%y - yhat;

beta=inv(r_small)*transpose(q1)*%y;

call print('Beta from QR ',beta);

call print(ccf(%y,error));

call print(ccf(yhat,error));

call print(ccf(yhat,error2));

/; call tabulate(%y,%yhat,yhat,%res,error,error2);

call print(' ':);

call print('Study Error Buildup using Cholesky':);

call print(' ':);

/; excessive problem

maxlag=40;

chol_r=vector(maxlag:);

qr_r =vector(maxlag:);

r_cond=vector(maxlag:);

do i=1,maxlag;

/;

/; :qr call uses linpack to get OLS. This is close to LAPACK QR(

/;

call olsq(gasout gasin{0 to i} gasout{1 to i} :savex);

chol_r(i)=ccf(%yhat ,

%res);

r_cond(i)=%rcond;

/; call olsq(gasout gasin{0 to i} gasout{1 to i} :qr :savex);

/; chol_r(i)=ccf(%yhat ,

%res);

/;

/; Use economy size qr to save space!!

/;

r=qr(%x,q);

qr_yhat =q*transpose(q)*%y;

qr_error =%y - qr_yhat;

qr_r(i) =ccf(qr_yhat,qr_error);

enddo;

)

10-8

Chapter 10

call tabulate(chol_r,qr_r r_cond

:title 'As maxlag increases accuracy declines');

b34srun;

When lags of 1-6 are used, as suggested by Tiao-Box (1981), the reciprocal of the matrix condition

was found to be 2.34596E-08. The correlation between the Cholesky ê and ŷ was -0.1333E-10

while for the QR this was -0.4148E-14, which is substantially smaller. When an excessive number

of lags, (40) was used, the condition fell to 0.5827E-10 and the correlations were -0.1387E-10 and

-0.3607E-13 respectively. Output documenting these findings is shown below.

=>

CALL LOAD(QR_SMALL :STAGING)$

=>

CALL ECHOOFF$

Ordinary Least Squares Estimation

Dependent variable

Centered R**2

Adjusted R**2

Residual Sum of Squares

Residual Variance

Standard Error

Total Sum of Squares

Log Likelihood

Mean of the Dependent Variable

Std. Error of Dependent Variable

Sum Absolute Residuals

F(13,

276)

F Significance

1/Condition XPX

Maximum Absolute Residual

Number of Observations

Variable

GASIN

GASIN

GASIN

GASIN

GASIN

GASIN

GASIN

GASOUT

GASOUT

GASOUT

GASOUT

GASOUT

GASOUT

CONSTANT

Lag

0

1

2

3

4

5

6

1

2

3

4

5

6

0

Coefficient

-0.67324068E-01

0.19318519

-0.21454694

-0.42981100

0.14122227

-0.94767767E-01

0.23492127

1.5418090

-0.58620686

-0.17641567

0.13419248

0.54079963E-01

-0.40030303E-01

3.8759484

GASOUT0

0.9946789205951879

0.9944282900435120

16.09378007198891

5.831079736227866E-02

0.2414762873705794

3024.532965517241

7.767793572930756

53.50965517241379

3.235044356946151

48.28024672308078

3968.705786042084

1.000000000000000

2.345968875600428E-08

1.407426755603240

290

SE

0.76805415E-01

0.16668178

0.18914123

0.18922276

0.19069299

0.18185376

0.11100544

0.59960417E-01

0.11056171

0.11539164

0.11472181

0.10092430

0.42973854E-01

0.85787179

t

-0.87655367

1.1590061

-1.1343214

-2.2714551

0.74057401

-0.52112076

2.1163041

25.713781

-5.3020786

-1.5288427

1.1697207

0.53584681

-0.93150370

4.5180975

The correlation of y and ŷ with the Cholesky error is shown.

0.72945729E-01

-0.18895630E-10

ˆ is calculated using the QR method and the same correlations performed with one difference.

When eˆ y yˆ the correlation is .4827531E-14 while when ê is calculated using Q2 in (10.1-7)

the correlation is slightly smaller in absolute value, -.350777E-14.2

Beta from QR

BETA

= Vector of

-0.673241E-01

-0.586207

14

0.193185

-0.176416

elements

-0.214547

0.134192

-0.429811

0.540800E-01

0.141222

-0.400303E-01

-0.947678E-01

3.87595

0.234921

1.54181

0.72945729E-01

2 Later in this chapter the effect of data precision on the accuracy of QR vs Cholesky calculation

is studied using two different datasets. In those examples the estimated correlation between the

residual and the right hand side variables of the model are tested.

Special Topics in OLS Estimation

10-9

-0.35077737E-14

0.48275313E-14

Study Error Buildup using Cholesky

As maxlag increases accuracy declines

Obs

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

21

22

23

24

25

26

27

28

29

30

31

32

33

34

35

36

37

38

39

40

CHOL_R

QR_R

R_COND

-0.3512E-11 -0.7724E-15 0.9180E-06

-0.1841E-10 0.1020E-13 0.2720E-06

-0.2981E-10 -0.8277E-15 0.8986E-07

-0.2070E-10 -0.5568E-14 0.5034E-07

-0.1927E-10 0.1700E-13 0.3355E-07

-0.1890E-10 0.4828E-14 0.2346E-07

-0.1908E-10 -0.9341E-14 0.1800E-07

-0.1333E-10 -0.4148E-14 0.1364E-07

-0.1823E-10 0.1090E-13 0.1009E-07

-0.1827E-10 -0.2608E-13 0.6999E-08

-0.1523E-10 0.1335E-13 0.5429E-08

-0.1454E-10 -0.1528E-13 0.4008E-08

-0.1479E-10 0.2589E-13 0.3039E-08

-0.2019E-10 0.2707E-14 0.2460E-08

-0.1711E-10 -0.2973E-13 0.1896E-08

-0.1432E-10 0.1527E-14 0.1574E-08

-0.1526E-10 -0.2731E-13 0.1299E-08

-0.1733E-10 -0.1140E-13 0.1075E-08

-0.1958E-10 -0.9592E-14 0.9116E-09

-0.1701E-10 -0.2558E-13 0.5930E-09

-0.1990E-10 0.3035E-13 0.4891E-09

-0.1744E-10 -0.3318E-14 0.4295E-09

-0.1978E-10 -0.7585E-14 0.3684E-09

-0.1676E-10 -0.1069E-13 0.3191E-09

-0.1614E-10 0.3729E-13 0.2692E-09

-0.1279E-10 -0.6407E-14 0.2405E-09

-0.1606E-10 -0.3884E-13 0.2157E-09

-0.1862E-10 0.2220E-13 0.2008E-09

-0.1539E-10 -0.5976E-14 0.1900E-09

-0.1945E-10 0.1394E-13 0.1783E-09

-0.2438E-10 -0.5711E-14 0.1660E-09

-0.1603E-10 -0.1808E-13 0.1540E-09

-0.1894E-10 0.3219E-13 0.1394E-09

-0.1650E-10 0.2197E-14 0.1561E-09

-0.8106E-11 0.3452E-13 0.1393E-09

-0.1313E-10 -0.4354E-14 0.1041E-09

-0.2144E-10 0.2191E-13 0.1067E-09

-0.1345E-10 0.1394E-13 0.8881E-10

-0.1293E-10 -0.6742E-13 0.7338E-10

-0.1387E-10 -0.3607E-13 0.5827E-10

B34S Matrix Command Ending. Last Command reached.

The qr command has a number of options that facilitate its use in cases in which rank may be a

problem. The EPS option allows the user to specify a nonnegative number such that the condition

number of X ' X must be < (1/EPS). If ESP is not supplied, it defaults to V * 1.0E-16, where V is

the largest sum of absolute values in any row of X. The IFREZ option allows the user to require that

certain variables be placed in any final model when all variables cannot be entered in the regression

due to rank problems.3

NK 2

operations while the QR decomposition

2

requires NK 2 operations. If K ( N / 2) then the QR is both faster and more accurate than the

Cholesky. When K ( N / 2) the speeds are the same while for smaller rank problems the

Cholesky is faster. The QR decomposition requires more memory than the Cholesky.

3 The Cholesky decomposition requires K 3

10-10

Chapter 10

The B34S qr command provides easy access to the singular value decomposition procedure,

although much more power is contained in the matrix procedure capability of the same name.4

Use of the capability is explained in the next section Assume a model y f ( x1, x 2, x3) . The

following command will estimate both the QR approach to the OLS model and the associated

principal component regression.

b34sexec qr ipcc=pcreglist$ model y = x1 x2 x3$ b34seend$

Values printed include ˆ j , t j , U , V , Yˆ , ˆ j , and t j . If IPCC=PCREG, U , V , Yˆ , and eˆ are not

printed. The principle component regression model is discussed next.

10.2 The Principal-Component Regression Model

The principal-component regression model can easily be calculated from the data and

provides a useful transformation, especially in cases in which there is collinearity.5 Assume X is a T

by K matrix. The singular value decomposition of X is

X U V '

(10.2-1)

where U is T by r, is r by r, V is K by r, T K and r K . U and V have the property that

U 'U I

(10.2-2)

and

V 'V I

(10.2-3)

4 The matrix command r=qr(x,q); or r=qr(x,q,q1,q2); will perform a QR factorization of X. The

QR approach to OLS is implemented by the :qr option on the call olsq( ); command. Outside of

the matrix command, the

qr command will do QR and PC estimation.

5 Mandel (1982) provides a good summary of the uses of the principal-component regression and

the discussion here has benefited from that treatment. The LINPACK FORTRAN routine

DSVDC has been used to calculate the singular value decomposition. This routine has been

found to be substantially more accurate than the singular value decomposition routines in

versions 8 and 9 of the IMSL Library and substantially faster than the corresponding NAG

routine to calculate the singular value decomposition. Stokes (1983, 1984) implemented

LINPACK in SPEAKEASY 7. Subsequent testing of the SPEAKEASY LINKULE SVDCM,

which uses DSVDC, against the LINKULE SVD, which uses the NAG routine, documented the

speed advantage.

Special Topics in OLS Estimation

10-11

i j 0 for i j and the elements i i are the square roots of the nonzero eigenvalues of X ' X

or X X ' . This result builds on the fact that while there are T eigenvalues of X X ' only K are non

zero and are in fact the eigenvalues of X ' X . The rows of V ' are the eigenvectors of X ' X , while

the columns of U are the eigenvectors of X X ' . This can be easily seen if we note that using (10.2-1)

X ' X V U 'U V ' V 2V ' and XX ' U V 'V U ' U 2U '. If K = r, then X is of full rank. If we

replace X in equation (2.1-7) by its singular value decomposition, we obtain

Y X e U V ' e

(10.2-4)

which can be written

Y U e

(10.2-5)

if we define

V '

(10.2-6)

An estimate of ̂ can be calculated from equation (2.1-8) as

ˆ (U 'U )1U ' y U ' y

(10.2-7)

ˆ (V ') 1 1ˆ

(10.2-8)

ˆ [ˆ1, ,ˆ k ] and ˆ [ˆ1, , ˆk ] . Mandel (1982) points out that the variance of each coefficient is

k

var( ˆ j ) [v 2j m / 2m m ]ˆ 2

(10.2-9)

m 1

where ˆ 2 is defined in equation (2.1-10), is vi j an element of matrix V and

var(ˆ j ) ˆ 2

(10.2-10)

Equation (10.2-9) shows that it is possible to determine just what vectors in X are causing the

increase in the variance since the elements of V are in the range 0 through 1. As the smallest m m

approaches 0, the var( ˆ ) approaches . The t test for each

j

coefficient ˆ j , t j is

t j ˆ j /

(10.2-11)

10-12

Chapter 10

in view of equation (10.2-10). The singular value decomposition can be used to illustrate the

problems of near collinearity. Following Mandel (1982), from equation (10.2-1) we write

U X V

(10.2-12)

or

a 0

U

X [Va Vb ]

0

b

(10.2-13)

where a and b are square diagonal matrices that contain the ka and kb very small singular values

(ka kb K ) along the diagonals, respectively. Va and Vb are K by ka and K by kb , respectively. In

terms of our prior notation r ka . If X is close to not being full rank, then

X Vb 0

(10.2-14)

since U [0 b ]' 0 as b approaches zero. Equation (10.2-14) is very important in understanding

why predictions of OLS models with rank problems in X have high variance in cases of near

collinearity and why there is no unique solution for ˆi when there is exact collinearity (r K ) . The

perfect collinearity case will be discussed first. Assume the vector ˆ is partitioned into ˆ

a

containing ka elements and ˆb containing kb elements. Given a new vector xT j consisting of K

elements, a forecast of y in period T+ j can be calculated from

yˆT j xT j (ˆa ˆb ) ' ( xa T j xb T j )(ˆa ˆb ) '

(10.2-15)

where xT j is partitioned into xa T j and xb T k . Following Mandel (1982), if Z V ' , where Z is

r by K, equation (10.2-8) becomes

Z ˆ (Za Zb )(ˆa ˆb )' ˆ

(10.2-16)

where Z has been partitioned into Z a , which is ka by kb , and Z b , which is ka by kb . From equation

(10.2-16) ˆ is written as

a

ˆa Za1 Zb ˆb Za1ˆ

(10.2-17)

Special Topics in OLS Estimation

10-13

Equation (10.2-17) shows that if a value for ˆb is arbitrarily determined, ˆa is uniquely determined.

Using equation (10.2-17), we substitute for ˆ in equation (10.2-15)

a

yˆT j xaT j (Za1ˆ ) ( xb T j xa T j Za1Zb )b

(10.2-18)

Equation (10.2-18) suggests that the only way we can make yˆT j independent of any arbitrary value

of ˆ is to impose the constraint

b

xb T j xa T j Za1Zb

(10.2-19)

which implies that

yˆT j xa T j (Za1ˆ )

(10.2-20)

We note that

Z a1Z b (Va' ) 1Vb'

(10.2-21)

where V ' has been partitioned as we did with Z. The above discussion repeats Mandel's (1982)

important proof that if there is collinearity such that r K , there is no unique solution of yˆT j ,

given a vector xT j , except when the new x vector ( xT j ) fulfills equation (10.2-19). Next, near

collinearity will be discussed.

Consider a new vector xT j from which we want to obtain a prediction yˆT j . If xT j satisfies

the near collinearity condition of the X matrix expressed in equation (10.2-14), then from equation

(10.2-1) we can write

xT j uT j V '

(10.2-22)

which, since 1 exists, can be written

uT j xT jV 1

(10.2-23)

where xT j and uT j are K element vectors. From equation (10.2-7)

yˆT j uT jˆ

and in view of equation (10.2-10),

(10.2-24)

10-14

Chapter 10

K

var( yˆT j ) ˆ 2 ui2

(10.2-25)

i 1

From equation (10.2-13)

a1

uT j ( xT jVa xT jVb )

0

0

b1

(10.2-26)

Assume the new vector xT j satisfies the near collinearity condition of the X matrix expressed in

equation (10.2-14), xT jVb 0 and the variance of yˆT j will be small. However in the case

where xT jVb 0 , then the value ( xT jVb )b1 will be very large, because of having to invert b where

there are small values along the diagonal. These small values will imply large values in u T j from

equation (10.2-26) and a large variance in yˆT j from equation (10.2-25). This rather long discussion,

which has benefited from Mandel's (1982) excellent paper, has stressed the problems of using an

OLS regression model for prediction purposes when the original X matrix has collinearity problems.

The singular value decomposition approach to OLS estimation has been shown to highlight the effect

of collinearity, which potentially impacts OLS, ARIMA, VAR and VARMA models. The ridge

regression model and the Lasso procedure are two ways to deal with this problem is a structured

manner.6

6 The stepwise and best regression approaches, discussed in Chapter 2, are other alternatives that

have their advantages and disadvantages.

Special Topics in OLS Estimation

10-15

10.3 Ridge, Lasso and Elastic Net Models

The Ridge regression model of Hoerl-Kennard (1970), as discussed in Hastie-TibshiraneFriedman (2001, 59)(2009 61), is a shrinkage method of estimation that involves calculation of

T

k 1

k

i 1

j 1

j 1

ˆ ridge min ( yi 0 xij j )2 j2

(10.3-1)

The ridge coefficients mimimize a penalizied residual sum of squares where controls the amount

T

of shrinkage. 0.0 implies an OLS model. If the inputs are centered and ˆ0 yi / T and X * has

i 1

the vector of 1’s removed, the rest of the ridge coefficients can be estimated as

( X *' X * I )1 X *' y .

(10.3-2)

Note

X ˆ ridge X * ( X *' X I ) 1 X *' y

U ( 2 I ) 1 U ' y

k 1

uj

j 1

2

j

( )

2

j

(10.3-4)

u 'j y

where u j are the columns of U from (10.2-1) and i is the ith diagonal element of and X * was

used in the place of X. Equation (10.3-2) shows that for 0 the ridge estimates are smaller than

their OLS counterparts. The columns of X * with the least variance will be associated with the

smallest i . An example is shown below.

The Lasso is another shrinkage procedure, which following Hastie-Tibshirano-Friedman (2001, 72)

(2009, 68), can be estimated as

T

k 1

k 1

j 1

j 1

ˆ arg min [ yi 0 ( xij x j ) j ]2 | j |d

i1

(10.3-5)

if d 1 . Setting d 2 corresponds to the ridge regression (10.3-1) while d 0 corresponds to

variable subset selection. Examples of the ridge and lasso models are presented in section 10.5 in

tables 10.2 and 10.3.

10-16

Chapter 10

A related shrinkage approach is the least trimmed regression (LTS) model discussed in

q

Faraway (2005, 101-103) where one minimizes

uˆ

i 1

2

(i )

where q T and uˆ(2i ) is the sorted residual

squared. The smaller q, the more outliers that are removed from the dataset. Compared to the full

sample, inspection of the LTS coefficients will indicate how sensitive the results are to possibly

rogue observations that are coming from a different distribution or have something wrong/strange

with the data. The LTS estimates are an example of a resistant regression method and are illustrated

in Table 10.9 in section 10.5.

The Elastic Net model, discussed next, combines the Ridge and Lasso modeling approaches

and provides a major step forward in view of the progress made in easily computing the solutions of

lasso and ridge models over a range of values.7 The basic Elastic Net Model assumes both and

and minimizes

1

2T

k

'

2

.5(1 ) j2 | j |

(

y

x

)

P

(

)

where

P

(

)

i

0

i

a

i 1

j 1

T

(10.3-6)

Equation (10.3-6) is a compromise between a ridge regression penalty ( 0) and a lasso penalty

( 1) .8 The below listed code tests a number of the capabilities of the GLM model. The problem

is to test the extent of which out-of-sample performance is impacted by shrinking the model. The

exact number of lags used by Tiao-Box (1981) of 6 is used and a holdout sample of 100 is assumed.

The GLM switch ne=9 restricts the model to nine coefficients and measures the effect on the R**2

and e ' e .

/; Illustrates effect of reduction in model on the out of sample

/; performance

/;

b34sexec options ginclude('gas.b34'); b34srun;

b34sexec matrix;

call loaddata;

call load(glm_info :staging);

call echooff;

/; logic works for holdout > 0

/; set max VAR lag and holdout # of obs

/;

/; k=max lag, parm(0.0)=> ridge (1.0) lasso, nlam = # models tried

k=6;

7 Zon-Hastie (2005) first proposed the Elastic Net Model. Friedman-Hastie-Tibshirani (2009)

provided details on a fast way to compute the general linear model using coordinated descent.

Further details on this approach are given in Hastie-Tibshirani-Friedman (2009, 662).

8 The elastic net model is implemented in the GLM command which uses GPL code developed

by Friedman-Hastie-Tibshirani in a 2009 working paper.

Special Topics in OLS Estimation

holdout=100;

maxlag=k;

nlam=100;

lam_min=.0001;

thr

=.1e-6;

parm

= .5;

ne

= 9;

/; At issue is how are yhat values calculated within the sample treated?

/; For purposes of this analysis they are removed

call olsq(gasout gasout{1 to k} gasin{1 to k} :print

:savex :holdout holdout);

%yfuture=

gasout(integers(norows(%x)+maxlag,norows(gasout)));

olsf=mfam(%xfuture)*vfam(%coef);

olsfss=sumsq(afam(%yfuture)-afam(olsf));

call print(' ':);

call print('ols forecast error sum sq ',olsfss:);

call print(' ':);

call glm(gasout gasout{1 to k} gasin{1 to k} :print :savex

:lamdamin lam_min :nlam nlam :holdout holdout

:thr thr :parm parm :ne ne);

call print(' ':);

%yfuture=

gasout(integers(norows(%x)+maxlag,norows(gasout)));

call glm_info(%yfuture,%xfuture,%coef,%a0,%alm,glmf,

fss,mod,1);

call print(' ':);

call print('glm forecast sum of squares ',fss:);

call print(' ':);

res_ols=vfam(%yfuture)-olsf;

res_glm=vfam(%yfuture)-glmf;

call graph(%yfuture,olsf,glmf :nolabel :nocontact

:pgborder :file 'ols_glm_yhat_oos.wmf'

:heading 'OLS vs GLM yhat out-of-sample');

call graph( res_ols res_glm :nolabel :nocontact

:pgborder :file 'ols_glm_res_oos.wmf'

:heading 'OLS vs GLM residual out-of-sample');

call tabulate(%yfuture,olsf,glmf res_ols res_glm);

/; tests of reduction loss as a function of restriction

tparm=grid(.1,.9,.1);

fsstest=array(norows(tparm):);

nein=ne;

do jj=1,norows(tparm);

call glm(gasout gasout{1 to k} gasin{1 to k} :savex

:lamdamin lam_min :nlam nlam :holdout holdout

:thr thr :parm tparm(jj) :ne ne);

call glm_info(%yfuture,%xfuture,%coef,%a0,%alm,glmf,

fss,mod,1);

fsstest(jj)=fss;

enddo;

call tabulate(tparm

call graph(

tparm

:nolabel :nocontact

:heading

fsstest );

fsstest :plottype xyplot :grid

:pgborder :file 'PARM_vs_OSS.wmf'

'Parm vs GLM residual SS out of sample');

10-17

10-18

Chapter 10

rss_glm=array(2*k:);

ne_used=array(2*k:);

icount=1;

%yfuture=

gasout(integers(norows(%x)+maxlag,norows(gasout)));

do ne=1,2*k;

ii=ne;

call glm(gasout gasout{1 to k} gasin{1 to k} :savex

:lamdamin lam_min :nlam nlam :holdout holdout

:thr thr :parm parm :ne ii

/;

:print

);

/; call print('+++++++++++++++++++++++++':);

call glm_info(%yfuture,%xfuture,%coef,%a0,%alm,glmf,fss,

mod,0);

rss_glm(icount)=sfam(fss);

ne_used(icount)=sfam(dfloat(ii));

icount=icount+1;

enddo;

rss_glm

=dropfirst(rss_glm,1);

ne_used

=dropfirst(ne_used,1);

call tabulate(rss_glm,ne_used);

call graph(ne_used rss_glm :grid

:heading 'RSS out-of-sample as a function of model reduction'

:plottype xyplot :nocontact :nolabel :pgborder

:file 'rss_loss.wmf');

b34srun;

Edited and annotated output follows:

Ordinary Least Squares Estimation

Dependent variable

Centered R**2

Adjusted R**2

Residual Sum of Squares

Residual Variance

Standard Error

Total Sum of Squares

Log Likelihood

Mean of the Dependent Variable

Std. Error of Dependent Variable

Sum Absolute Residuals

F(12,

177)

F Significance

1/Condition XPX

Maximum Absolute Residual

Number of Observations

Holdout option reduced sample by

Variable

GASOUT

GASOUT

GASOUT

GASOUT

GASOUT

GASOUT

GASIN

GASIN

GASIN

GASIN

GASIN

GASIN

CONSTANT

Lag

1

2

3

4

5

6

1

2

3

4

5

6

0

Coefficient

1.2285222

-0.29063287

-0.24342211

0.20531898

-0.89608071E-01

0.31553886E-01

0.12786487

-0.32396180

-0.49884894

0.25235636

-0.19926975

0.12738336

8.4137071

ols forecast error sum sq

GASOUT

0.9979718278979760

0.9978343247046184

3.682663208127010

2.080600682557632E-02

0.1442428744360578

1815.754789473684

105.0235276580087

52.50894736842105

3.099543224133753

20.92257736335549

7257.808371787274

1.000000000000000

3.637698112128508E-09

0.4505041686010856

190

100

SE

0.74956376E-01

0.11828994

0.11861680

0.11606267

0.10219641

0.40767543E-01

0.52721231E-01

0.11198859

0.12726385

0.13183588

0.12969996

0.99759679E-01

1.3930658

t

16.389829

-2.4569534

-2.0521723

1.7690355

-0.87682210

0.77399529

2.4253013

-2.8928107

-3.9198008

1.9141706

-1.5363902

1.2769022

6.0397055

52.36509239330390

The OLS out-of-sample residual sum of squares was found to be 52.365. A GLM model is shown

next where the maximum number of variables in the model is assumed to be 9. In equation (10.3-6)

it is assumed that .5 . min 0.142E-01 . Figures 10.1 and 10.2 show out-of-sample ŷ and

residuals for OLS and GLM models.

Special Topics in OLS Estimation

10-19

Generalized Linear Model via Coordinated Descent

Version 5/17/2008 converted to real*8 10/28/2009

Number of Observations

190

Holdout option reduced sample by

100

Number of right hand side variables

12

Maximum number of variables in model (:ne)

9

Number of lamda values considered

(:nlam)

100

Minimum lamda

(:flmin)

1.000000000000000E-04

Covariance updating algorithm selected (:cua)

Percent lasso (:parm)

0.5000000000000000

Analysis on standardized predictor variables

Converge threshold for Lamda solution (:thr)

1.000000000000000E-07

Left Hand Side Variable

GASOUT

Series

GASOUT

Mean

52.51

Max

60.20

Min

45.60

Right Hand Side Variables

#

1

2

3

4

5

6

7

8

9

10

11

12

Series

GASOUT

GASOUT

GASOUT

GASOUT

GASOUT

GASOUT

GASIN

GASIN

GASIN

GASIN

GASIN

GASIN

Lag

1

2

3

4

5

6

1

2

3

4

5

6

Mean

52.52

52.53

52.53

52.53

52.53

52.53

0.2094

0.2091

0.2064

0.2025

0.1980

0.1933

Max

60.20

60.20

60.20

60.20

60.20

60.20

2.834

2.834

2.834

2.834

2.834

2.834

Min

45.60

45.60

45.60

45.60

45.60

45.60

-2.716

-2.716

-2.716

-2.716

-2.716

-2.716

Total Number of passes over the data

Maximum R**2

Last Lamda Value Considered

Residual Sum of Squares for last model

Sum of Absolute Residuals for last model

Largest Adsolute residual for last model

Penalty

1.000

1.000

1.000

1.000

1.000

1.000

1.000

1.000

1.000

1.000

1.000

1.000

14807

0.9966397334158390

1.422720271397891E-02

6.101420144071417

26.03246518892973

0.6581948928894832

OLS vs GLM yhat out-of-sample

61

60

59

58

57

%

Y

F

U

T

U

R

E

56

55

54

53

52

51

50

0

10

20

30

40

50

Obs

60

70

80

90

100

O

L

S

F

G

L

M

F

10-20

Chapter 10

Figure 10.1 OLS vs GLM Yhat Values for Out-of-Sample Observations

OLS vs GLM residual out-of-sample

2

1.5

1

R

E

S

_

O

L

S

.5

0

-.5

R

E

S

_

G

L

M

-1

-1.5

0

10

20

30

40

50

Obs

60

70

80

90

100

Figure 10.2 Out-of-Sample Residuals for OLS and GLM Models

Figure 10.3 shows the effect of changing the value of on the out-of-sample residual sum of

squares. The residual sum of squares is relatively insensitive to values in the range of .3 to 1.0.

Figure 10.4 shows the sum of squares is relatively insensitive to models with 8 or more coefficients.

More detail on these results are presented in Table 10.1.

Special Topics in OLS Estimation

10-21

Parm vs GLM residual SS out of sample

700

600

500

400

300

200

100

.10

re

.20

.30

.40

.50

TPARM

.60

.70

Figure 10.3 Effect of changes in Lamda on the Out-of-Sample RSS

.80

.90

10-22

Chapter 10

RSS out-of-sample as a function of model reduction

1200

1100

1000

900

800

700

600

500

400

300

200

100

2

3

4

5

6

7

NE_USED

8

9

10

11

12

Figure 10.4 RSS for Out-of-Sample Models vs Number of Vectors in the Model

Assuming .5 , the out-of-sample sum of squares varied between 1338.78 for 5.48 to 85.43

for 85.43. Table 10.1 shows added detail as is changed from .1 to .9..

Special Topics in OLS Estimation

10-23

Table 10.1 Effect on the Out-of_Sample RSS of values of and the Number of Coefficients

____________________________________________________________________________________________________________________________

Obs

1

2

3

4

5

6

7

8

9

TPARM

0.1000

0.2000

0.3000

0.4000

0.5000

0.6000

0.7000

0.8000

0.9000

FSSTEST

691.0

247.3

74.50

69.11

85.43

84.92

84.35

83.34

82.23

1

2

3

4

5

6

7

8

9

10

11

RSS_GLM

1190.

1055.

933.6

821.6

271.1

194.8

85.43

85.43

85.43

85.43

85.43

NE_USED

2.000

3.000

4.000

5.000

6.000

7.000

8.000

9.000

10.00

11.00

12.00

Obs

_________________________________________________________________________________________________________________________

10.4 Partial Least Squares and Continuum Regression Models

Define X as the data matrix with means removed, and y as the left hand-side with mean

removed. While the principle component regression model (PCR), discussed in section 10.2,

shrinks the X matrix by using orthogonal projections that key on high variance in the X matrix

only, the partial least squares procedure (PLS), first suggested by Wold (1975), keys on both

high variance and correlation with the left hand side variable y. As such it can usually explain

more variance than the PCR approach given that the number of projections is less than the

number of columns in X. The performance of PLS vs PCR was studied by Frank-Friedman

(1993) and is summarized in Hastie-Tibshirani-Friedman (2009, 61-99). Stone-Brooks (1990)

suggested the Continuum Regression Model (CRM) of which OLS, PLS and PCR are special

cases. After first discussing PLS and CRM, a number of examples will be developed.

10-24

Chapter 10

Important research by de Jong-Wise-Ricker (2001) provides both an excellent discussion

of the theory behind the PLS and a computationally efficient procedure to obtain both PLS and

CRM results.9 A first approach to estimating a CRM suggested by Iglarsh-Cheng (1980) formed

a weighted continuous estimator (WCR) as

ˆWCR (1 )ˆOLS ˆPCR

(10.4-1)

which could be estimated with a nonlinear search procedure. The strength of this procedure is in

its simplicity. Its developers argued that the WCR estimator has a smaller mean square error than

OLS in the presence of multicollinearity. Note that OLS implies 0 while PCR implies that

1. The PLS estimator, which will be defined next, is not included in this specification which

will not be discussed further.

Define

and note that given ( X ' X ) ULU ' then ( X ' X ) UL U ' . This is

( 1)

equivalent to modifying X into its "powered" form or X ( ) UL /2V ' . 0,1, corresponds to

the effect on X of OLS, PLS and PCR respectively.10 For 0 1 multicolinearity has been

artificially taken out of the X matrix while for 1 multicolinearity has been artificially put

in the the X matrix. Define T as the PLS component or orthogonal vectors and gs ( ) as the

Gram-Schmidt orthogonalization11 of ( ) where

T gs ( K )

(10.4-2)

given

K [ULU ' y, UL2U ' y,

,ULaU ' y]

(10.4-3)

and a is the number of columns of the PLS model. Given

p U ' y/ | y |

(10.4-4)

is the vector of correlations of y with the non-zero principle components, the canonical

form of K is

K [ Lp, L2 p,

, La p]

(10.4-5)

9 This reference will be used to develop the math in the following discuission.

10 The SVD decomposition shows X USV '. X ' X VSU 'USV ' VS 2V '. For a positive

definite matrix such as X ' X then V ' U . S 2 L.

11 The Gram-Schmidt orthogonalization on a matrix is usually U from the SVD of the matrix.

Special Topics in OLS Estimation

10-25

from which we can define the orthonormal basis of K as

T gs( K ) U 'T

(10.4-6)

T UT XR

(10.4-7)

where R are the weights given by

R VL.5U 'UT VL.5T

(10.4-8)

The importance of R is that it shows how each orthogonal column in T is related to the original

data in X . The loadings c of T are defined as

c y 'T (T 'T )1 y 'UT

(10.4-9)

The loadings of T with respect to X are

P X 'T (T 'T )1 X 'T VL.5U 'UT VL.5T

(10.4-10)

The fraction of variance of y explained by the PLS model is

2

RyT

y 'T (T 'T )1T ' y / y ' y cc '/ | y |2 p 'TT ' p

(10.4-11)

where p U ' y / | y | . The PLS fitted y and PLS coefficient vector PLS are

yˆ PLS T (T 'T ) 1T ' y Tc ' XRc '

(10.4-12)

and

ˆPLS Rc '

(10.4-13)

respectively. From R we can obtain the implied vector ˆOLS as

ˆOLS RˆPLS

(10.4-14)

since the PLS coefficients are related to their implied OLS coefficients. Either can be used to

obtain ŷ .

T PLS y X OLS yˆ

(10.4-15)

10-26

Chapter 10

The importance of (10.4-14) is that inspection of the implied OLS coefficients OLS shows how

the latent vectors in T are related to the underlying data vectors in X. If the number of latent

vectors is less than the number of original vectors in X, the resulting residual sum of squares will

be larger than would occur if all PLS coefficients were used.

Table 10.2 contains the Matlab code to estimate the PLS model using the de Jong (1993)

method of analysis. Table 10.3 shows the b34s implementation of this approach. Although this

approach is outdated, it is still in use in sas proc pls and in the Matlab plsregress

command.

Special Topics in OLS Estimation

Table 10.2 Matlab Code to Obtain PLS Model Solution suggested by de Jong (1993)

function [Xloadings,Yloadings,Xscores,Yscores,Weights]=simpls(X0,Y0,ncomp)

[n,dx] = size(X0);

dy = size(Y0,2);

%

outClass = superiorfloat(X0,Y0);

Xloadings = zeros(dx,ncomp,outClass);

Yloadings = zeros(dy,ncomp,outClass);

if nargout > 2

Xscores = zeros(n,ncomp,outClass);

Yscores = zeros(n,ncomp,outClass);

if nargout > 4

Weights = zeros(dx,ncomp,outClass);

end

end

% Each new basis vector can be removed from Cov separately.

V = zeros(dx,ncomp);

Cov = X0'*Y0;

for i = 1:ncomp

% Find unit length ti=X0*ri and

% is jointly maximized, subject

[ri,si,ci] = svd(Cov,'econ');

ti = X0*ri;

normti = norm(ti); ti = ti ./

Xloadings(:,i) = X0'*ti;

qi = si*ci/normti; % = Y0'*ti

Yloadings(:,i) = qi;

if nargout >

Xscores(:,i)

Yscores(:,i)

if nargout >

Weights(:,i)

end

end

ui=Y0*ci whose covariance, ri'*X0'*Y0*ci,

to ti'*tj=0 for j=1:(i-1).

ri = ri(:,1); ci = ci(:,1); si = si(1);

normti; % ti'*ti == 1

2

= ti;

= Y0*qi; % = Y0*(Y0'*ti), and proportional to Y0*ci

4

= ri ./ normti; % rescaled weights

% Update the orthonormal basis

vi = Xloadings(:,i);

for repeat = 1:2

for j = 1:i-1

vj = V(:,j);

vi = vi - (vj'*vi)*vj;

end

end

vi = vi ./ norm(vi);

V(:,i) = vi;

Cov = Cov - vi*(vi'*Cov);

Vi = V(:,1:i);

Cov = Cov - Vi*(Vi'*Cov);

end

if nargout > 2

for i = 1:ncomp

ui = Yscores(:,i);

for repeat = 1:2

for j = 1:i-1

tj = Xscores(:,j);

ui = ui - (tj'*ui)*tj;

end

end

Yscores(:,i) = ui;

end

end

10-27

10-28

Chapter 10

Table 10.3 B34S Implementation of SIMPLS Calculation

subroutine pls_reg(y,x,pls_coef,xload,yload,xscores,

yscores,weights,yhat,pls_res,rss,ncomp,iprint);

/; Partial Least Squares. See Wold (1975)

/; pls_reg is designed to 100% track the Matlab simpls routine

/; from which this discussion of PLS and PC regression has been

/; developed.

/;

/; The Matlab code came from

/; de Jong, S. "SIMPLS: An Alternative Approach to Partial Least Squares

/; Regression." Chemometrics and Intelligent Laboratory Systems. Vol.

/; 18, 1993, pp. 251–263.

/;

/; A newer reference is:

/;

/; y

=>

left hand variable. Usually %y from olsq with :savex

/;

Can include more that 1 col!

/; x

=>

left hand variable. Usually %x from olsq with :savex

/;

n by k

/; pls_coef =>

The pls_beta. Calculated as:

/;

pls_beta=weights*transpose(yload) for each.

/;

pls_coef is a (k,ncomp) matrix of coefficients. If

/;

ncomp is set = k, then pls_coef(,ncomp) is the same

/;

as the ols coefficients

/; xload

/; yload

/; xscores

/; yscores

/; weights

/; yhat

=>

Predicted y value

/; res

=>

Residiual for last PLS regression

/; rss

=>

Residual Sum of Squares for from 1,...,ncomp models

/; ncomp

=>

# of cols in pls_beta

/; iprint

=>

=0 print nothing, =1 print results

/;

/; Built 28 April 2011 by Houston H. Stokes

/;

k=nocols(x);

kk=nocols(y);

n=norows(x);

if(ncomp.gt.k)then;

call epprint(

'ERROR: ncomp must be 0 < ncomp le # cols of x. was',ncomp:);

call epprint(

'

# of columns of x was

',k:);

go to finish;

endif;

if(norows(y).ne.norows(x))then;

call epprint('ERROR: # of obs in y and x not the same':);

go to finish;

endif;

if(kk.gt.1)then;

call epprint(

'ERROR: This release of pls_reg limited to one left hand variable':);

go to finish;

endif;

meany=mean(y);

meanx=array(k:);

y0=y-meany;

x0=x;

do i=1,k;

meanx(i)=mean(x(,i));

x0(,i)=mfam(afam(x(,i))-sfam(afam(meanx(i))));

enddo;

vbig

vi

vibig

xload

yload

xscores

yscores

weights

=matrix(k,ncomp:);

=matrix(k,1:);

=matrix(k,1:);

=matrix(k,ncomp:);

=matrix(kk,ncomp:);

=matrix(n,ncomp:);

=matrix(n,ncomp:);

=matrix(k,ncomp:);

Special Topics in OLS Estimation

rss

=vector(ncomp:);

pls_beta=matrix(k,ncomp:);

cov=matrix(nocols(x0),1:transpose(x0)*y0);

do i=1,ncomp;

s=svd(cov,ibad,21,uu,vv );

if(ibad.ne.0)then;

call epprint('ERROR: SVD of cov failed':);

go to finish;

endif;

ri=uu(,1);

ci=vv(,1);

si=s(1);

ti=x0*ri;

normti=sqrt(sumsq(ti));

if(normti.le.0.0)then;

call epprint('ERROR: Norm of ti le 0.0':);

go to finish;

endif;

ti=vfam(afam(ti)/normti);

xload(,i)=transpose(x0)*ti;

qi=si*ci/normti;

yload(,i)=qi;

xscores(,i)=ti;

yscores(,i)=y0*qi;

weights(,i)=vfam(afam(ri)/sfam(normti));

vi(,1)=xload(,i);

do repeat=1,2;

if(i.gt.1)then;

do j=1,(i-1);

vj(,1)=vbig(,j);

vi=mfam(afam(vi)-(sfam(transpose(vj)*vi)*afam(vj)));

enddo;

endif;

enddo;

normvi=sqrt(sumsq(vi));

if(normvi.le.0.0)then;

call epprint('ERROR: Norm of vi le 0.0':);

go to finish;

endif;

xjunk=afam(afam(vi)/ normvi);

vi=matrix(norows(xload),1:xjunk);

vbig(,i)=vi(,1);

cov=cov - (vi*(transpose(vi)*cov));

vibig=submatrix(vbig,1,norows(vbig),1,i);

cov=cov - (vibig*(transpose(vibig)*cov));

do iii=1,ncomp;

ui=yscores(,iii);

do repeat=1,2;

if(iii.gt.1)then;

do j=1,(iii-1);

tj=xscores(,j);

xwork=sfam(tj*ui);

ui=mfam(afam(ui)-(xwork*afam(tj)));

enddo;

endif;

enddo;

yscores(,iii)=ui;

enddo;

pls_beta=weights*transpose(yload);

adj=array(nocols(x):);

do jj=1,nocols(x);

adj(jj)=meanx(jj)*sfam(pls_beta(jj,1));

enddo;

scale=meany-sum(adj);

10-29

10-30

Chapter 10

jj=norows(pls_beta);

pls_beta(jj,1)=scale;

yhat=x*pls_beta;

pls_res=vfam(afam(y)-afam(yhat));

rss(i)=sumsq(pls_res);

pls_coef(,i)=pls_beta(,1);

enddo;

if(iprint.ne.0)then;

call print(' ':);

iix=nocols(x);

call print('Partial Least Squares - 26 April 2011 Version' :);

call print('Number Columns in origional data

',iix :);

call print('Number Columns in PLS Coefficient Vector',ncomp:);

call print('PlS sum of squared errors

',rss(ncomp):);

endif;

/;

go to done;

finish continue;

yhat=missing();

phs_beta=missing();

done continue;

return;

end;

Table 10.4 PLS1 the Jong-Wise-Ricker (2001) PLS-CRM Estimation Approach

function [c,R,P,B_CPR,R2X,R2y,T,B_CPRmh] = pls1(x,y,A,alpha,yORG,xORG)

%

% Code suggested by Jong-Wise-Ricker j Chemometrics 2001, 15: 85-100

%

% Inputs:

% x

x matrix

% y

y matrix

% A

dimensionality of PLS model

% alpha =0, .5 1. for OLS, PLS and PC

%

% Outputs:

% T

orthonormal PLS component scores where T = XR

% R

weights

% P

% B_CPR betas - PLS regression vector

% c

loadings of T with respect to y

% +++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++

%

% Code from de Jong, Wise, Ricker (2001)

% 29 April 2011 version. Additions by Michael Hunstad

% +++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++

[U,L,V]=svd(x,0);

% SVD

L=diag(L.^2);

% Eigenvalues

r=sum(L>(L(1)/1e14))

% rank of x

L=L(1:r);

% non-zero eigenvalues

U=U(:,1:r);

% unit-length PC scores

V=V(:,1:r);

% PC weights

A=min(A,r);

% dimensionality

gam = alpha/(1-alpha);

% continuum power exponent

Lgam=L.^gam;

% 'powered' eigenvalues

rho=(y'*U)';

% |y| * corr(y,PCs)

T=zeros(r,A);

% initialize T

for a=1:A;

t=Lgam.*rho;

% can version of (XX')^gam*y

t=t-T(:,1:max(1,a-1))*(T(:,1:max(1,a-1))'*t); % orthogonalize

t=t/sqrt(t'*t)

% normalize t

T(:,a)=t

% store in T

rho=rho-t*(t'*rho);

% residual of rho w. r. t. t

end

c=rho'*T;

% loadings c of T w. r.to y

cmh = y'*U*T;

% This is added

R=V*(diag(1./sqrt(L))*T);

P=V*(diag(sqrt(L))*T);

B_CPR=R*triu(c'*ones(size(c)));

% ones(size)) has been fixed

meanY = mean(yORG); %mean of original data - i.e., not de-meaned

xORG(:,end+1) = ones(size(xORG,1),1);

meanX = mean(xORG); %mean of original data - i.e., not de-meaned

B_CPRmh = R*cmh';

B_CPRmh(end) = [meanY - meanX*B_CPRmh];

Special Topics in OLS Estimation

R2X=100*1'*cumsum(T'.^2)'/sum(1);

%

R2y=100*cumsum(c.^2)/(y'*y);

%

T=U*T;

%

%note that throughout the algorithm T is actually

%step

10-31

R-squared on X Eq. 18

R-squared on y Eq. 17

Eq.11

Ttilde until the last

The algorithm logic in Tables 10.2 and 10.3 shows that initially the covariance matrix of

X and y is formed. The SVD is then repeatedly calculated as the information contained in each T

vector is used to update the covariance matrix. A number of loops are required. In contrast the de

Jong-Wise-Ricker (2001) algorithm achieves the same result with only one loop and one SVD

calculation. The Matlab logic is shown in Table 10.4 and its b34s implementation in Table 10.5.

Comments have been added to show additions to the Matlab code. The crmtest program was

developed to graphically display the results of changing on the residual sum of squares.

Table 10.5 B34S Implementation of PLS1 including CRMTEST

/;

/; Also includes crmtest to graphically study CRM Model

/;

subroutine pls1_reg(y,x,y0,x0,r,pls_beta,

u,v,s,pls_coef,yhat,pls_res,rss,ncomp1,gamma,iprint);

/;

/;

/;

/;

/;

/;

/;

/;

/;

/;

/;

/;

/;

/;

/;

/;

/;

/;

/;

/;

/;

/;

/;

/;

Partial Least Squares. See Wold (1975). This subroutine allows

user to artificially decrease (gamma < 1) or increase (gamma > 1)

the degree of multicolinearity in the X data. Only one SVD is used

in contrast to the simpls approach coded in pls_reg which should

increase performance. This is called canonical PLS.

/;

/;

/;

/;

/;

/;

/;

/;

/;

/;

/;

/;

/;

/;

/;

/;

/;

/;

/;

/;

/;

/;

/;

pls_reg and pls1_reg take the response variable into account, and

therefore often leads to models that are able to fit the response

variable with fewer components.

pls1_reg is designed to implement de Jong-Wise_Ricker Matlab code

from which this discussion of PLS and PC regression has been

developed.

Alternative Matlab code came from

de Jong, S. "SIMPLS: An Alternative Approach to Partial Least Squares

Regression." Chemometrics and Intelligent Laboratory Systems. Vol.

18, 1993, pp. 251–263 and was implemented in B34S as pls_reg. This

approach is substantially slower and has less capability.

The complete reference for this code is:

de Jong, Sijmen, Barry Wise, N. Lawrence Ricker "Canonical Partial

Least Squares and Continuum Power Regression," Journal of

Chemometrics Vol. 15, 2001, pp 85-100

pc_reg creates components to explain the observed variability in the

predictor variables, without considering the response variable at all.

y

x

=>

=>

u

v

s

y0

x0

r

pls_beta

=>

=>

=>

=>

=>

=>

=>

pls_coef =>

yhat

res

rss

=>

=>

=>

left hand variable. Usually %y from olsq with :savex

left hand variable. Usually %x from olsq with :savex

n by k

from svd(x)

x=u*diagmat(s)*transpose(v)

from svd(x)

singular values

y with mean removed

X with means subtracted except for last col

weight matrix. Note bigt=x0*r from equation (16)

pls_beta such that x0*r*pls_beta + mean(y) maps to yhat

t*pls_beta + mean(y)

since t = x0*r

If ncomp is set = k, then pls_coef is the same as the

ols coefficients. This might change in future releases

to be simular to pls_reg.

Note: (t*pls_beta)+mean(y) = x*pls_coef = yhat

Predicted y value for last regression

Residual for last PLS regression

Residual Sum of Squares all ncomp models

10-32

/;

/;

/;

/;

/;

/;

/;

/;

/;

/;

/;

/;

/;

/;

/;

/;

/;

/;

/;

/;

/;

/;

/;

/;

/;

ncomp

gamma

Chapter 10

=>

=>

Note:

iprint

# of cols in pls_beta

=0 for OLS =1. for pls

=> infinity for pc

Use caution changing gamma

gamma = 0 => OLS

gamma = 1 => PLS

gamma > 1 => increasing multicolinearity in dataset

set .le. 20

gamma < 1 => decrease

multicolinearity in dataset

=>

=0 print nothing, =1 print results,

=2 suppress coef list

Note:

bigt= x0*r;

bigt= u * t_tilda => t_tilda= transpose(u) * bigt

loadings of bigt with respect to y0

cc = y0*u*t_tilda = y0*bigt

fitted y0= bigt* cc

= x0*r*y0*x0*r

Built 11 May 2011 by Houston H. Stokes

Contributions made by Michael Hunstad to Matlab version.

First Equation number refers to de Jong-Sijman-Wise-Ricker (2001)

kin=nocols(x);

kk=nocols(y);

n=norows(x);

ncomp=ncomp1;

if(ncomp.gt.kin)then;

call epprint(

'ERROR: ncomp must be 0 < ncomp le # cols of x. was',ncomp:);

call epprint(

'

# of columns of x was

',kin:);

go to finish;

endif;

if(norows(y).ne.norows(x))then;

call epprint('ERROR: # of obs in y and x not the same':);

go to finish;

endif;

if(kk.gt.1)then;

call epprint(

'ERROR: This release of pls1_reg limited to one left hand variable':);

go to finish;

endif;

meany=mean(y);

meanx=array(kin:);

y0=y-meany;

x0=x;

do i=1,(kin-1);

meanx(i)=mean(x(,i));

x0(,i)=mfam(afam(x(,i))-sfam(afam(meanx(i))));

enddo;

s=svd(x0,ibad,21,u,v );

if(ibad.ne.0)then;

call epprint('ERROR: SVD x0 failed':);

go to finish;

endif;

L=afam(s)*afam(s);

k=idint(sum(L.gt.(sfam(L(1))/1.e+14)));

L=L(integers(1,k));

ncomp=min1(ncomp,k);

u=submatrix(u,1,norows(u),1,k);

v=submatrix(v,1,norows(v),1,k);

Lgam=vfam(afam(L)**gamma);

rho=(y0*u);

bigt=matrix(k,ncomp:);

rss=vector(ncomp:);

do i=1,ncomp;

maxcol=max1(1,i-1);

t=afam(Lgam)*afam(rho);

Special Topics in OLS Estimation

t=t-

afam(submatrix(bigt,1,k,1,maxcol)*

(transpose(submatrix(bigt,1,k,1,maxcol))*vfam(t)));

t=vfam(afam(t)/sqrt(sumsq((t))));

bigt(,i)=vfam(t);

rho=rho-(vfam(t)*(vfam(t)*vfam(rho)));

/; Equation (16)

(10.4-13)

pls_beta=y0*u*bigt;

yhat=(u*bigt*pls_beta) + meany;

rss(i)=sumsq((afam(y)-afam(yhat)));

enddo;

/; Equation (14)

(10.4-8)

r=v*(diagmat((1./dsqrt(afam(L))))*bigt);

/; Equation (22)

(10.4-13)

pls_coef=r*pls_beta;

i=norows(pls_coef);

pls_coef(i)=sfam(meany)-sfam(vfam(meanx)*pls_coef);

/; Equation (11) (10.4-7)

bigt=u*bigt;

yhat=x*pls_coef;

pls_res=vfam(afam(y)-afam(yhat));

tss=variance(y)*dfloat(norows(y)-1);

rsq=1.0-afam(rss(ncomp)/tss);

if(iprint.ne.0)then;

call print(' ':);

iix=nocols(x);

call print('Partial Least Squares PLS1 - 9 May 2011 Version.

' :);

call print('Logic from de Jong, Wise, Ricker (2001) Matlab Code':);

call print('Number of rows in original data

',

norows(x):);

call print('Number Columns in origional data

',iix:);

call print('Number Columns in PLS Coefficient Vector

',

ncomp:);

if(ncomp.lt.ncomp1)

call print('Note: PLS coefficient vector reduced due to rank of X':);

call print('Gamma

',

gamma:);

call print('Mean of left hand variable

',

meany:);

call print('PLS sum of squared errors

',

rss(ncomp):);

call print('Total sum of squares

',tss:);

call print('PLS R^2

',rsq:);

if(iprint.ne.2)then;

call tabulate(pls_beta,pls_coef

:title '(T*pls_beta)+mean(y) = x*pls_coef');

endif;

endif;

/;

go to done;

finish continue;

yhat=missing();

phs_coef=missing();

done continue;

return;

end;

subroutine crmtest(y,x,ncomp,gammag,rsstest,rote,iprint,noshow);

/;

/; Investigates the effect of changes in gamma on the RSS

/; Various gamma => alternate Continuum Regression Models

/;

/; y

=> left hand variable. Must be vector

/; x

=> right hand variable matrix with constant included

/; ncomp => # of PLS/CR vectors

/; gammg => Vector of gamma values

/; rsstest=> Matrix of RSS values for 1-ncomp vectors and gammag

/; rote

=> Sets rotation

/; iprint => =0 do not give pls1_reg output

/;

=1 give pls1_reg output

/;

=2 do not give pls1_coef list

10-33

10-34

Chapter 10

/; noshow => =1 Just produce graph in crm_test.wmf

/;

=0 show graph and save graph

/;

/;

/;

/;

0 < gamma < 1 => Multicollinearity taken from X matrix

/;

1 < gamma < 15.=> Multicollinearity added to X matrix.

/;

gamma = 1 => PLS Model. a large value of gamma

/;

approachs PC

/;

/; Subroutine crmtest built 23 May 2010 by Houston H. Stokes

/; +++++++++++++++++++++++++++++++++++++++++++++++++++++++++

/;

ii=ncomp;

jj=norows(gammag);

rsstest=matrix(ii,jj:);

do j=1,jj;

gamma=gammag(j);

call pls1_reg(y,x,y0,x0,r,c,,u,v,s,pls2coef,yhat,

pls_res,pls_rss,

ncomp,gamma,iprint);

rsstest(,j)=pls_rss;

enddo;

scaleadj=array(4: dfloat(1), gammag(1),

dfloat(ii),gammag(norows(gammag)));

if(noshow.eq.1)then;

call graph(rsstest :plottype meshc :d3axis :d3border :grid :noshow

:rotation rote :pgborder :file 'crm_test.wmf'

:xlabel '# Vectors' :ylabelleft 'Gamma'

:pgunits scaleadj

:heading 'RSS vs # vectors and gamma' );

endif;

if(noshow.ne.1)then;

call graph(rsstest :plottype meshc :d3axis :d3border :grid

:rotation rote :pgborder :file 'crm_test.wmf'

:xlabel '# Vectors' :ylabelleft 'Gamma'

:pgunits scaleadj

:heading 'RSS vs # vectors and gamma' );

endif;

return;

end;

Table 10.6 is the driving program to analyse the gas data using the PLS and CRM

methods of analysis.

Special Topics in OLS Estimation

Table 10.6 Effect on the RSS of PCM, PLS and CRM Models of Varying Degrees

b34sexec options ginclude('gas.b34'); b34srun;

b34sexec matrix;

call loaddata;

call load(pls_reg);

call load(pls1_reg);

call load(pc_reg);

call echooff;

nn=6;

call olsq(gasout gasin{1 to nn} gasout{1 to nn} :print :savex);

ols_coef=%coef;

ols_rss =%rss;

iprint=1;

%xhold=%x;

%yhold=%y;

/; Test model

- As setup will stop

testmod=1;

if(testmod.ne.0)then;

iprint=2;

noshow=0;

ncomp=4;

gammag=grid(.05,1.,.01);

call print(gammag);

rote=270.;

call crmtest(%y,%x, ncomp,gammag,rsstest,rote,iprint,noshow);

call print(rsstest);

call stop;

endif;

call pc_reg(%y,%x,ols_coef,ols_rss,tss,pc_coef,

pcrss,pc_size,u,iprint);

jj=integers(norows(pcrss),1,-1);

pc_rss=pcrss(jj);

/;