3. NPCA-based Variable Forgetting Factor RLS Algorithm

advertisement

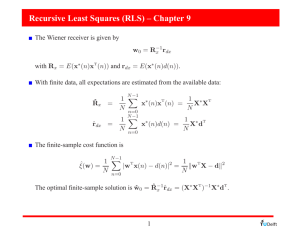

A Nonlinear PCA-based Variable Forgetting Factor RLS Algorithm for Blind Source Separation Liu Yang, Hang Zhang Institute of Communication Engineering, PLA University of Science and Technology, Nanjing, 210007, China Abstract The forgetting factor plays an important role in the recursive least-squares (RLS) algorithm. This parameter leads to a compromise between the tracking capability and the stability. In this paper, the influence aroused by the forgetting factor is discussed and a variable forgetting factor RLS algorithm based on nonlinear principal component analysis (NPCA) is proposed for blind source separation (BSS). Simulations are performed in static and non-stationary environments, respectively. The results show that the new RLS algorithm has faster convergence rate and better tracking capability than the fixed forgetting factor one. And in the meantime, it has good anti-jamming performance. Keywords:variable forgetting factor, recursive least-squares (RLS), blind source separation (BSS), nonlinear principal component analysis (NPCA) 1. Introduction Recent years, BSS has gained great popularity due to its adaptation to a wider range of applications. For it has the ability to recover a set of source signals only from the output observed signals without any training data or priori information. BSS techniques have found research in a variety of areas including wireless communication, speech processing, bioelectricity processing and image processing. One of the valuable applications of BSS is the communication antijamming which is just what will be discussed in this paper. The convergence rate, tracking capability and stability of BSS problems have always been receiving considerable attention by people. And lots of efforts have been made to promote the performance. The RLS algorithm, as one typical adaptive algorithm known for its faster convergence rate and better stability than the LMS algorithm has been extensively used. By incorporating nonlinear PCA (NPCA) with the project approximation subspace tracking (PAST) algorithm [1], Pajunen et al. [2] presented the RLS algorithm for BSS. By using the natural gradient on the Stiefel manifold to minimize a NPCA criterion, X. Zhu [3] proposes a new adaptive RLS algorithm with prewhitening for BSS. In addition, Kalman filtering-based RLS algorithms have also been discussed in [4, 5]. However, the papers are lack of the detailed discussion about the influence aroused by the forgetting factor. The forgetting factor represents the degree of forgetfulness for past data, if it is large, the algorithm “forgets” the past data slowly, which leads to a better stability but slower convergence rate. And if it is small, the case is on the contrary. Then the question arises, if there is a way to make the forgetting factor change adaptively according to the separating matrix and observed signals, the performance of convergence speed and tracking capability may be improved under the condition of the guaranteed stability. As a result, in this paper, we propose a RLS algorithm with variable forgetting factor, and the mechanism that controls the forgetting factor is based on the NPCA criterion. It has been proved that both in static and non-stationary environment, the variable forgetting factor RLS algorithm has faster convergence rate and better tracking capability than the fixed forgetting factor one. In addition, it is demonstrated that the new algorithm has good anti-jamming ability. The rest of the paper is organized as follows: Section 2 introduces the BSS model for instantaneous mixture and the NPCA criterion. Section 3 presents the NPCA-based variable forgetting factor RLS algorithm and the simulation results are given in section 4. Finally, some conclusions are drawn in section 5. 2. Problem Formulation and the NPCA Criterion 2.1 The BSS model We consider the simple instantaneous mixture of BSS and its universal model is shown in Fig. 1. Sources n1 (t ) Mixtures x1 (t ) s1 (t ) s2 (t ) + … Mixing system A(t) + n2 (t ) … + sN (t ) x2 (t ) nN (t ) xN (t ) Whitened Mixtures v1 (t ) Whitening v (t ) 2 system V(t) … Separating system W(t) vN (t ) Estimated Sources y1 (t ) y2 (t ) … yN (t ) Unkown Fig. 1 Instantaneous mixture model of BSS Mathematically, the BSS problem is formulated as x(t ) A(t )s(t ) n(t ) (1) where s(t )=[s1 (t),s2 (t),... ,s N (t)]T is the vector consists of N unknown source signals which are assumed to be mutually independent. x(t )=[x1 (t),x 2 (t),... ,x N (t)]T and n(t )=[n1 (t),n 2 (t),... ,n N (t)]T represent the observed signals and noise, respectively. A (t ) denotes a n n mixing matrix and if A (t ) changes by time, then the system is time-varying. In general, especially for the NPCA criterion which we will introduce later needs a pre-whitening for the mixtures x(t ) . We adopt the RLS-type whitening algorithm to prewhiten x(t ) on-line. Define v(t)=[v1 (t), v2 (t),... , vN (t)]T as the whitened mixture, then v(t ) V (t )x(t ) (2) where V (t) is the whitening matrix which is adaptively updated in the form of V (t +1)= where 1 1 [V (t ) v(t ) vT (t ) V (t )] vT (t ) v(t ) (3) , and denotes the forgetting factor we will discuss later. The purpose of BSS is to recover the source signals from the mixtures by the following model y (t )=W(t)v(t ) (4) T where W(t) denotes the separating matrix, and y(t )=[y1 (t),y2 (t),... ,y N (t)] is an estimation of source signals. As a matter of the uncertainty of the amplitude and the order of BSS, it is accounted to be an effective BSS only if the overall separating matrix B WVA is a generalized permutation matrix in which only one nonzero element is at each row or each column. 2.2 The NPCA Criterion Once the observed signals are pre-whitened, it is easy to prove that the separating matrix is orthogonal [6]. Then we can use the NPCA criterion to determine the orthogonal separating matrix. The contrast function of NPCA is formulated as follow: J (W(t))=E v(t ) WT (t ) f ( W(t ) v(t )) 2 (5) where E denotes the expectation operation and f () is a nonlinear activation function which is determined by the distribution of the sources. And through the nonlinear function, high order statistics information is lead in to solve the BSS problem by principle component analysis. 3. NPCA-based Variable Forgetting Factor RLS Algorithm Since the expectation operation in (5) is not easy for computation, and in order to consider the influence aroused by both of the current data and the past data, forgetting factor is introduced. Replacing the mean-square error in (5) by exponentially weighted sum of error squares, and approximating the unknown vector f (W (t)v (t)) by f (W(t-1)v(t)) [1], we have the least-squares type criterion [2] t W J (W(t))= t -i v(i) WT (t ) f ( W(t 1) v(i )) (6) i=1 in which is the positive number less than unity equals to which we have just mentioned in the pre-whitening part. The ordinary gradient of J (W(t)) in (6) with respect to W(t) is t W J (W(t))= t -i f y (i) f [y (i)]T W(t) v (i)T (7) i=1 It is widely recognized that for Euclidean space, stochastic gradient denotes the steepest descent direction. However, in Riemannian space, the natural gradient represents the true steepest descent direction [7] .As a result, we use the natural gradient instead of the stochastic gradient in classical RLS algorithm. Then the natural gradient with orthogonality constraint is given by ~ W J (W(t))=W(t)WT (t )W J (W(t))-WW J (W(t))W (8) Computing the above natural gradient, and letting it equal to zero, we will get the optimal weighting matrix at time t described by t t i 1 i 1 W(t )opt [ t i y (i)zT (i)]1 [ t i z(i) vT (i)] (9) Applying the matrix inverse lemma to the solution of the optimal separating matrix, the RLS algorithm can be summarized as follows: z (t ) f ( W(t 1) v(t )) f (y (t )) (10) G (t ) H(t 1)y (t ) zT (t )H(t 1)y (t ) H(t ) 1[H(t 1) G(t )zT (t )H(t 1)] (11) (12) (13) W(t ) W(t 1) H(t )z(t ) vT (t ) G(t )zT (t )W(t 1) The forgetting factor is a key parameter for the above iteration. A small leads to a quick forgetfulness for the past data but the stability is declined. On the contrary, a large makes it “forget” the past data slowly and the stability will increase simultaneously. To accelerate the convergence speed and tracking capability of the RLS algorithm under the condition of not declining the stability, we make the forgetting factor change adaptively in accordance with the cost function in (5). To simplify the computing, we use the current observed data instead of the expectation data. Then the cost function of W(t ) can be written as 1 J (W (t))= || (t ) ||2 2 Where (t ) v(t ) WT (t 1)z(t ) . The gradient of J (W(t)) in (14) with respect to (t ) is (t ) J (W(t))= (t ) zT (t )ψ(t 1) (t ) (t ) in which ψ (t 1) W(t 1) (t 1) (14) (15) (16) Let S (t ) denotes the derivative of H (t ) with respect to (t ) S(t ) H(t ) (t ) (17) Substituting (12) into (11), we can obtain G (t ) H(t )y (t ) then using (13), (17), and (18) in (16) yields (18) ψ(t ) [I G(t )zT (t )]ψ(t 1) S(t )z(t ) vT (t ) S(t )y(t )zT (t )W(t 1) For the recursion to compute S (t ) , we first use (11) and (12) to write H (t ) 1 (t ) H(t 1) 1 (t )H(t 1) y (t ) z T (t ) H(t 1) (t ) z T (t ) H(t 1) y (t ) (19) (20) Hence, S (t ) can be updated by the following equation S(t ) 1 (t )[I G(t )zT (t )]S(t 1)[I z(t )GT (t )] 1(t )G(t )GT (t ) 1(t )H(t ) (21) As a result, the forgetting factor may be adaptively updated by the following equation (22) (t ) (t 1) J (W(t )) (t 1) zT (t )ψ(t 1) (t ) Where is a small, positive learning-rate. Thus, we may summarize the RLS algorithm with variable forgetting factor as follows: Start with the initial values W(1) , P(1) , S (1) , ψ (1) and (1) , compute for t 1 :the first four steps are from (10) to (13), and the following three steps are (19), (21) and (22). We should notice that to avoid the case that (t ) is too large or too small, we should give a upper level and a lower level of truncation through experimentation. 4. Simulation results In this part, section A shows the performance of the NPCA-based variable forgetting factor RLS algorithm compared with the fixed forgetting factor one both in static and non-stationary environment; and section B shows the antijamming performance of the algorithms in non-stationary environment. For convenience, the two algorithms are called V-RLS and F-RLS, respectively. The sources include one multi-tone interference and one BPSK signal, in which the sample frequency is f s =76.8kHz , the carrier frequency is fc =9.6kHz , the bit rate is fb =2.4kb/s , the shaping filter is raised cosine filter with the roll off factor of 0.5 , the up sampling rate is nsamp 4 of the BPSK signal; And the frequencies of the multi-tone interference are f1 8.4kHz , f 2 9.6kHz and f3 10.6kHz . In order to evaluate the performance of the algorithms, we use the performance index (PI) which is a function of the overall separating matrix B given as 1 n n bij bij 1 n n 1 1 (30) n j 1 i 1 max bkj n i 1 j 1 max bik k k in which bij is the ij th element of B . Just as we have mentioned above, only PI(B)= when B WVA is a generalized permutation matrix, the algorithm achieves the perfect performance. In other words, the smaller of PI(B) , the better of the performance. A. PI Curves in Static and Non-stationary Environment We set the forgetting factor of F-RLS at 0.995 , 0.985 and 0.975 for comparison, the initial forgetting factor of V-RLS is (1) 0.975 , and the upper level is 0.9999 , the lower level is 0.96 .The learning rate for V-RLS is 1 106 . Both of the two algorithms use the same initial matrix: W (1) I , P (1) I , S(1) I and ψ (1) I . Furthermore, the same nonlinear function f (t ) tanh(t ) is used, and the JSR is 5dB, SNR is 18dB for both. We perform 100 independent runs in each simulation. Fig. 2 shows the PI curves for static environment, in which the mixing matrix A is constant over each simulation. We can see from the figure that the forgetting factor heavily affects the convergence rate and the stability. Firstly, when 0.995 for F-RLS, its stability is worse than or almost the same as V-RLS, but it converges much slower than V-RLS; secondly, when 0.985 for F-RLS, its convergence speed is accelerated but in the meantime, the stability is decreased. Thirdly, when 0.975 for F-RLS, which is the same as the initial value of VRLS, it is clear that the two algorithms have almost the same convergence speed, but the stability of F-RLS is the worst. Then we can conclude that when the same stability is achieved, V-RLS has a faster convergence speed than F-RLS. 1.4 F-RLS(=0.995) F-RLS(=0.985) 1.2 F-RLS(=0.975) V-RLS((1)=0.975) 1 0.8 PI 0.08 0.06 0.6 0.04 0.02 0.4 0 2000 2100 2200 2300 0.2 0 0 500 1000 1500 2000 2500 3000 Iterations 3500 4000 4500 5000 Fig.2 PI curves in static environment Fig. 3 and Fig. 4 show the PI curves for non-stationary environment, in which the mixing matrix A (t ) changes by the way in [8]: A(t ) A 0 R (t ) R (t ) R (t 1) σ (31) where R (t ) represents a small perturbation matrix having the same size as A 0 , and the initial value of R (t ) is set as zeros with dimension n n , 0.9 , is the parameter that controls the changing speed of the environment, and it is set at 0.01 and 0.05 in Fig. 3 and Fig. 4, respectively. σ denotes a normal distribution random variable with zero mean and standard deviation one. We can see that the larger of , the worse of the stability. Although the stability is worse than that of the static environment, we can achieve the similar conclusion as that of Fig. 2. Furthermore, we can conclude that the proposed V-RLS algorithm has better tracking capability in time-varying system than the F-RLS one. 1.4 F-RLS(=0.995) F-RLS(=0.985) 1.2 F-RLS(=0.975) V-RLS((1)=0.975) 1 0.8 PI 0.1 0.08 0.6 0.06 0.4 0.04 1800 1900 2000 2100 0.2 0 0 500 1000 1500 2000 2500 3000 Iterations 3500 4000 4500 5000 Fig.3 PI curves in non-stationary environment ( =0.01) 1.4 F-RLS(=0.995) F-RLS(=0.985) 1.2 F-RLS(=0.975) V-RLS((1)=0.975) 1 0.24 0.8 PI 0.22 0.2 0.6 0.18 0.16 2900 0.4 3000 3100 3200 0.2 0 0 500 1000 1500 2000 2500 3000 Iterations 3500 4000 4500 5000 Fig.4 PI curves in non-stationary environment ( =0.05) B. Anti-jamming Performance Concerning that the anti-jamming performance in steady environment is surely better than that for non-stationary environment, for simplicity, we just give the performance of BER versus SNR in non-stationary environment. The other parameters are the same as that of Section A, 0.01 . The SNR is in [0, 10] dB. From Fig. 5, we can see that both the two algorithms perform better than the unhandled mixed signal. And the performance is not quite sensitive to the forgetting factor. Then we can come to the conclusion that under the condition of faster convergence rate and better tracking capability, the V-RLS blind source separation algorithm has good anti-jamming performance. 0 10 -1 10 -2 BER 10 -3 10 -4 Mixed signal 10 BPSK separated by V-RLS((1)=0.975) BPSK separated by F-RLS( =0.995) -5 BPSK separated by F-RLS( =0.985) 10 BPSK separated by F-RLS( =0.975) -6 10 0 1 2 3 4 5 SNR(dB) 6 7 8 9 10 Fig.5 Anti-jamming performance in non-stationary environment 5. Summary In this paper, a NPCA-based variable forgetting factor RLS algorithm is proposed. Simulations using sources of communication signal and multi-tone interference in both static environment and non-stationary environment show that it has faster convergence rate and better tracking capability than the fixed forgetting factor one. In addition, the proposed algorithm has good anti-jamming performance. References [1] B. Yang, “Projection approximation subspace tracking”, IEEE Trans. Signal Processing, vol. 43, no. 1, pp. 95-107, Jan. 1995. [2] P. Pajunen and J. Karhunen, “Least-squares methods for blind source separation based on nonlinear PCA”, Int. J. Neural Syst., vol. 8, no. 5-6, pp. 601-612, Dec. 1998. [3] X. Zhu and X. Zhang, “Adaptive RLS algorithm for blind source separation using a natural gradient”, IEEE Signal Processing Letters, vol. 9, no. 12, pp. 432-435, Dec. 2002. [4] Q. Lv, X. Zhang and Y. Jia, “Kalman filtering algorithm for blind source separation”, IEEE Intel. Conf. on ICASSP, pp. 257-260, March 18-23, 2005. [5] F. Gu, H. Zhang and Y. Xiao, “Kalman filtering algorithm for blind separation of convolutive mixtures”, Intel. Conf. on ISSPA, pp. 1045-1049, July 2, 2012. [6] A. Hyvarinen, J. Karhunen and E. Oja, “Independent component analysis”, Beijing: Prentice Hall, pp. 103-104, 2007. [7] S. Amari, “Natural gradient works efficiently in learning”, Neural Comput, vol. 10, pp. 251-276, 1998. [8] F. Gu, H. Zhang, X. Tan et al., “RLS algorithm for blind source separation in non-stationary environments”, IEEE Intel. Conf. on CIICT, July 4-5, 2012. Author A was born in Jiangsu Province, China, in 1978. He received the B.S. degree from the University of Science and Technology of China (USTC), Hefei, in 2001 and the M.S. degree from the University of Florida (UF), Gainesville, in 2003, both in electrical engineering. He is currently pursuing the Ph.D. degree with the Department of Electrical and Computer Engineering, UF. His research interests include spectral estimation, array signal processing, and information theory Firstname Lastname includes the biography here.