SIGMAA Stat-Ed - Villanova University

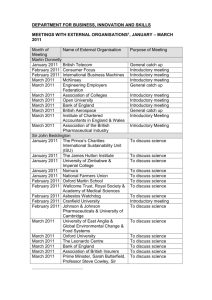


SIGMAA Stat-Ed News
Mathematical Association of America
February 2006

Contents:
News from the Joint Math Meetings, San Antonio, Jan. 11-15
SIGMAA Stat-Ed Business Meeting
SIGMAA Stat-Ed Panel Sessions
SIGMAA Stat-Ed Contributed Papers
Other Stat-Ed related presentations
Upcoming Events

SIGMAA Stat-Ed Business Meeting

The business meeting for the SIGMAA was held Friday evening. The new officers were introduced:
Chair: Ginger Holmes-Rowell, Middle Tennessee State Univ., rowell@mtsu.edu
Chair-Elect: Chris Lacke, Rowan University, lacke@rowan.edu
Treasurer: Murray Siegel, South Carolina GSSM, mhsiegel@adelphia.net
Tom Moore, mooret@grinnell.edu, moves to Past-Chair; Sue Schou, Idaho State University, schousue@cob.isu.edu, continues as Secretary for another year.

Tom announced that the first award for the best contributed paper would be given following tabulation of audience responses. The inaugural award, however, was presented to Dex Whittinghill, Rowan University, for his contributions to the beginning of the SIGMAA. In future, this will be called the Dexter Whittinghill Contributed Paper Award. The basis for the award is the highest average score on “overall presentation” from those in attendance.

The possibility of amendments to the charter due to changes from the MAA (especially about elections) was discussed. The MAA proposes to have all SIGMAA elections conducted through its electronic system in one of two “windows,” April or October, neither of which is consistent with our current charter.

A proposed award for “Outstanding Statistics TA” was discussed. Several good ideas were voiced. This discussion will have to continue electronically.

Dov Chelst from DeVry University volunteered to be the new webmaster.

A Sudoku team face-off led off the prize round. The top five teams of three then answered questions such as “How many members are in the MAA?” for a chance to select a prize from textbooks (Duxbury, Prentice-Hall, etc.), Minitab-14, a Minitab polo shirt and assorted goodies.
All players received a Minitab pen.

SIGMAA Stat-Ed Panel Sessions

Implications of the new ASA (GAISE) Guidelines for teaching Statistics

Panelists were: Gary Kader (gdk@math.appstate.edu) and Mike Perry (perrylm@appstate.edu) on PK-12; Jessica Utts (utts@wald.ucdavis.edu) and Robin Lock (rlock@stlawu.edu) on College Level. For anyone not familiar with the GAISE report, there are two parts: one for PK-12 and another for introductory college courses. The full text (with appendices) is available at www.amstat.org/education/gaise/.

The PK-12 recommendations focus on two problems: teachers (and students) do not recognize the difference between statistics and mathematics, and up to now the statistics curriculum has not been seen as cohesive. For the first, consider two questions:
1. Assuming a coin is fair, if it is tossed 10 times, how many heads will you get? This is a math (probability) question.
2. You pick up a coin. Is it fair? This is a statistics question, which requires knowing information about question 1 to answer.
For cohesion, the report envisions students moving through a framework model of increasing problem solving capabilities and increasing levels of sophistication: using proportional thinking, recognizing association and sampling variability.

At the college introductory course level, we must recognize there are many flavors: consumers vs. producers, discipline specific vs. general, large lecture vs. small section, etc. At this level, there are six overarching recommendations:
1. Emphasize Statistical Literacy and Develop Statistical Thinking
2. Use Real Data
3. Stress Conceptual Understanding Rather than Mere Knowledge of Procedures
4. Foster Active Learning in the Classroom
5. Use Technology for Developing Concepts and Analyzing Data
6. Use Assessments to Improve and Evaluate Student Learning

Our goals for a Statistically Educated Individual include that he/she should:
1. Understand that variability is natural, predictable, and quantifiable
2. Understand the difference between random sampling and random assignment
3. Recognize sources of bias
4. Be able to determine the population to which results extend
5. Be able to determine when cause/effect can be drawn
6. Understand the processes of obtaining and generating data, producing and explaining graphs and numerical summaries, be able to determine the appropriate inference procedure, and interpret and communicate results
7. Understand the basic ideas of inference
8. Know how to critique news and journal articles
9. Know when to call for help

How can we make this happen? There were two major recommendations: 1) Start with small steps: deformulize, use applets, use real data with interesting questions and projects; and 2) Help students think statistically: start examples with a research question, use open ended projects (again!), have students practice selecting which procedure is appropriate, and assess and give feedback on their thinking.

Requiring Statistics of Every Mathematics Major: Model Courses

This panel was co-sponsored by the SIGMAA and the CUPM. It featured Robin Lock (rlock@stlawu.edu), George Cobb (gcobb@mtholyoke.edu), Deborah Nolan (nolan@stat.berkeley.edu) and Allan Rossman (arossman@calpoly.edu).

Deb Nolan advocated a reformation of Math Stats: we should do the application first, and the method afterwards. We also need to simplify how we do what we do, and not what we do. We need to engage in constructive reasoning, using simulation to illustrate many concepts. She has created a new course combining intensive computing and math stats. See her materials at www.berkeley.edu/~statlabs and at www.berkeley.edu/~nolan for STAT 133 materials.

Allan Rossman advocated adding an understanding of the mathematical underpinnings to intro stats. He said we should emphasize the connections between study design, inference techniques, and the scope of conclusions.
His new text (with Beth Chance) allows students to experience the process over and over (the whole process is in each chapter). He also uses simulation frequently.

Robin Lock described an alternative to the traditional first course for the math major: Time Series. St. Lawrence University uses this with a Calc II prereq. There is no text, as he hasn’t found one yet that he likes. Students get lots of the basic concepts (plots, data = mean + error, interval estimates, etc.) as well as other unique concepts (autocorrelation, seasonality, etc.). For more information go to angel.stlawu.edu, select Course Search and enter the keyword Statistics, then select the time series course.

George Cobb is offering a Discrete Markov Chain Monte Carlo course as Statistics for Math Majors. He believes there are four failings of traditional math stat: outdated content, too many prereqs, no true applications, and BORING mathematics. He asked why Laplace, Gauss, Legendre et al. did applied as well as pure math. The answer is most likely that an application should be a challenge, and not just an illustration. Abstraction should be a process and not a product: it’s not about purity but the unexpected connections revealed by the abstraction. His course has four major applications: Darwin’s finches (assessing inter-species competition), political killings in Kosovo (estimating an unknown total from multiple recapture data), molecular alignment (which segments of several large molecules correspond?), and Berkeley bicycles (what is the effect of designated bike lanes on bicycle use?).

SIGMAA Stat-Ed Contributed Papers

The contributed paper session Saturday afternoon began with Ginger Holmes-Rowell giving an introduction on the GAISE recommendations for the college introductory course. There were fifteen presentations.

“The Evolution of a Modern Statistics Course, Preliminary Report,” Barbara Wainwright, Salisbury State University, bawainwright@salisbury.edu.
The course she described began about 10 years ago to integrate parametric and nonparametric statistics for Nursing and Geography majors. It has since evolved to be required for other majors (Biology, Social Work, Psychology, Education, etc.). In this course students are required to critique a research article, do lots of Minitab work, and complete some portfolio-type assignments. They are currently incorporating applets (being developed by computer science students) and more hands-on activities.

“National Science Foundation Statistics Education Projects that model Assessment and Instruction Guidelines,” Megan Hall and Ginger Holmes Rowell, Middle Tennessee State University, rowell@mtsu.edu. They reported on a survey of NSF projects which referenced the 1992 Cobb Report. Of 159 projects from 1993-2004, 112 were for the intro course, 54 addressed majors and/or minors, and some addressed both. Of those, 107 met at least one of the current GAISE recommendations, and 76 met more than one. Some good resources developed at least partially with NSF money are:
Rice Virtual Laboratories in Statistics (online curriculum) www.ruf.rice.edu/~lane/rvls.html
Tools for Teaching and Assessing Statistical Inference (instructional activities using Sampling SIM software) www.gen.umn.edu//research/stat_tools/
Electronic Encyclopedia of Statistical Experiments and Examples (stories for developing critical thinking about real-world topics) www.whfreeman.com/eesee/eesee.html
StatCrunch (free statistical software) www.statcrunch.com
ARTIST (online database of assessment tools) https://www.gen.umn.edu/artist/
CAUSEweb’s online digital library of materials for teaching and learning statistics www.causeweb.org

“Using Web-based Practice Problems to Improve and Evaluate Student Learning, Preliminary Report,” Julie Clark, Hollins University, jclark@hollins.edu. She is using Blackboard and the Rossman/Chance text.
She has set things up so that students submit practice problems through Blackboard and immediately receive correct answers after submission. Since Blackboard insists on a point value for grading, problems were scored as 0 for no attempt, 1 for late or no real effort, and 2 for a reasonable attempt. In her last class of 15 students it generally took her less than 20 minutes to look at the submissions (she said it’s easy to spot what you want). It was somewhat time consuming to set things up the first semester of use, but recycling saves time after that. She handed out some examples of both the type of questions used and some student responses (free response). For more information on her class, see http://www1.hollins.edu/faculty/clarkjm/Stat251/STAT251fall05.htm.

“Developing a Conceptual Understanding of Average, Preliminary Report.” Sharon S. Emerson-Stonnell, Longwood University, emersonstonnellss@longwood.edu. Her course was a K-8 Teachers summer institute, although she indicated that the materials could be used in a general education undergraduate statistics course. When asked about an “average,” teachers could describe the median and mode, but not the mean. She had a demo with cubes for the mean, median, and mode, and a visual balance (yardstick with weights) for demonstrating the mean. There was also a demo using rabbit families (three different distributions with the same mean) for students to think about.

“Emphasizing Conceptual Learning in Statistics by Using Technology and Classroom Data, Preliminary Report,” Semra Kilic-Bahi, Colby-Sawyer College, skilic-bahi@colby-sawyer.edu. She described several activities. The first day of class, students are asked what percent of classmates they know by name. Students are asked to attach an adjective to their name and to describe what they did on break; these are repeated and added to as they progress around the class.
By the time they are finished, they know many names, but there is confounding: is it the adjective or the repetition? Class size also has an effect. They used Leonardo da Vinci’s idea of man as a square to address the question “Who is more square: males or females?” and took measurements of the students in the class. In thinking about how many hours they sleep per night, students are asked to think about what might be reasonable values for the mean and standard deviation for a normal curve. For the question “How old is your favorite actress?” students are asked how confident they are about the age, and how they might be 100% confident. She demonstrated the applet for confidence intervals (basketball shooting) from the Moore BPS website.

“Using Fathom to Explain Central Tendency and Variability,” Chris Lacke, Rowan University, lacke@rowan.edu. Chris demonstrated how Fathom and its interactive capabilities can be used to demonstrate these concepts, and how things can change given certain constraints. For example, start with the data values five 1’s, four 2’s, three 3’s, two 4’s, and one 5. What happens if the 4’s and the 5 become 3.5’s and a 4? Is it possible to change the two 4’s and the 5 to values that will cause the median to decrease? What happens if a data value becomes extremely small or extremely large, relative to the rest of the data set? The same idea was explored with spread: e.g., given an initial set, add 4 data points so that all four have the same value and the variance of the result is as small (large) as possible.

“Did the educational program improve students’ knowledge?” Madhuri Mulekar, University of South Alabama, mmulekar@jaguar1.usouthal.edu. Is technology a boon or a curse? With most text problems it is easy to determine the procedure, get statistics, etc. But real life situations are not well defined and tabled. Mulekar discussed a program to organize different educational programs for school-age children about sickle cell disease.
The organizers wanted to know the effect of the program on improving these children’s knowledge about the disease. Students were given a 10 question true/false quiz before and after the program. This was used with the presenter’s statistics students to illustrate a paired data situation.

“Not Quite a Semester-Long Project for Getting Your Students to Look at Real Data That They Obtain Themselves.” Dexter Whittinghill, Rowan University, whittinghill@rowan.edu. We know we want to use real data, either in individual labs or mini projects, or in a semester long project that involves data analysis. There are pros to semester-long projects: students see the “whole picture,” it helps with their writing skills, and the projects can become interesting studies. There are also cons: lots of work management issues (group dynamics), grading load at the end of the semester, possible IRB problems… Last Spring, he had students find a data set of at least 40 observations of interest to their particular major. There were timed deliverables throughout the semester: the data, reference, and entry into Excel, SPSS, or JMP; a descriptive analysis; and a statistical inference (confidence interval for the mean of their variable with normal plots, etc.) with appropriate interpretation. The pros of the new approach were that students see some but maybe not much of the whole picture, there were no IRB problems, and there were some interesting data sets, plus students were investigating something of interest to them. The cons: they don’t see the whole picture, there is still end-of-semester grading (although not as much), there is less opportunity to help with writing, and students don’t have one large package at the end. He plans to use the same approach in Spring 2006 with some modifications: add boxplots, increase the worth of the project in terms of the final grade, and have an additional deliverable. He will insist on group projects with self-selected groups and peer evaluation.
“Two Steps Forward and One Step Back: This Kind of Implementation of the Guidelines for Assessment and Instruction in Statistics Education (GAISE) Can Ever Last.” John McKenzie, Babson College, mckenzie@babson.edu.
Emphasize Literacy and Thinking: Step Forward: Statistics is not a math course, so plot the damn data! Step Back: He can’t do it all, so no more projects.
Real Data: Step Forward: Make data driven decisions, use class-generated data. Step Back: No more data cleansing (he can’t do the whole process, including letting students see messy data).
Conceptual Understanding: Step Forward: Use equations for understanding (like the V-word). Step Back: Few questions are really conceptual.
Foster Active Learning: Step Forward: Lots of class participation, and anonymous feedback used. Step Back: Limited teamwork (it takes too much time).
Use Technology: Step Forward: Software for analysis and concepts. Step Back: There are only a few (3 or 4 that he uses) really good statistical applets.
Assessment: Step Forward: He uses a variety of assessments including minute papers. Step Back: No oral presentations.
Why the issues? There is a perceived need by client disciplines, common text and software, no support for innovation, and resistance to change among both students and faculty.

“Using Simulations to Discover the Truth About the Sampling Distribution of Means,” Murray Siegel, South Carolina Governor’s School for Science and Math, siegel@gssm.k12.sc.us. He showed how a TI-83/84 can be used to draw samples of a fixed size from the population of random digits 0-9. Students generate 40 samples of each size, and compute a mean and standard deviation for each. The sample sizes are stored in list L1, the means in L2, and the standard deviations in L3. A scatter plot of L1 vs L2 along with Y1=4.5 demonstrates that the expected value of the mean is the population mean. A scatter plot of L1 vs L3 will show a non-linear pattern of decreasing standard error as the sample size increases.
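For readers without a TI-83/84 at hand, the activity described so far can be roughly sketched in Python. This is only one reading of the procedure, not Siegel's calculator program: it assumes that for each sample size the 40 sample means are summarized by their own mean and standard deviation, and the function name, the seed, and the particular sample sizes are hypothetical choices made for illustration.

```python
import random
import statistics


def simulate_sampling_distribution(sample_sizes, samples_per_size=40, seed=2006):
    """For each sample size n, draw `samples_per_size` samples of random
    digits 0-9 and summarize the resulting sample means.

    Returns a list of (n, mean of the sample means, SD of the sample means),
    loosely mirroring the calculator lists L1, L2 and L3 (an assumed reading).
    """
    rng = random.Random(seed)  # fixed seed (arbitrary) so the run is repeatable
    rows = []
    for n in sample_sizes:
        sample_means = []
        for _ in range(samples_per_size):
            sample = [rng.randrange(10) for _ in range(n)]  # digits 0..9
            sample_means.append(statistics.fmean(sample))
        rows.append(
            (n, statistics.fmean(sample_means), statistics.stdev(sample_means))
        )
    return rows


# Hypothetical sample sizes; the talk did not list the ones used in class.
rows = simulate_sampling_distribution([10, 25, 50, 100, 200])
for n, mean_of_means, sd_of_means in rows:
    print(f"n={n:3d}  mean of means={mean_of_means:.2f}  SD of means={sd_of_means:.2f}")
```

Plotting the first column against the second and third columns should give pictures comparable to the two scatter plots described above, with the second column hovering near 4.5 and the third shrinking as n grows.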
Have students try to guess a function to fit the plot (hint: the population standard deviation is 2.87). The 40 means for various sample sizes (e.g., n = 10 and n = 200) can be graphed as histograms to show the approximate “normal” nature of the distribution.

“Statistics, Lively and Involved,” Dorothy Anway, University of Wisconsin-Superior, danway@uwsuper.edu. Last fall, she taught a seminar class in introductory statistics. The class chose to do a study of the university’s orientation sessions, which involved creating the questions, helping administer them, analyzing the data, and giving reports on it. The questions were put online, and all freshmen were sent an email asking them to complete the survey. Those who participated were entered to win a $50 gift certificate at the bookstore. There were only 79 responses (of 350 freshmen), and due to timing they could only do descriptive statistics on most of the items. But a final report was prepared for the University, and results were displayed in a First Year Seminar Expo poster session.

“A Statistical Consulting Project in an Introductory Statistics Course,” Lisa Green, Middle Tennessee State University, lbgreen@mtsu.edu. Lisa’s presentation was given by Ginger Holmes Rowell due to a family emergency. The intent was to mimic how groups work in the “real world” and to emphasize the importance of statistics in planning a research project. Students from an introductory class served as consultants to students in an upper-division nursing class, who chose the question to research and formed hypotheses after a literature search. One of the topics: Do nurses have more urinary infections? The stat students had to determine whether or not the hypothesis was testable with the data collected and recommend changes to proposed experiments. It turned out that most of the hypotheses were too vague to test.
Sample size was also an issue (a study of liver disease and its relation to childhood obesity had a suggested n = 200, but liver disease has a low occurrence), as was obtaining a sample (a study of the effects of changing shifts on job satisfaction found it hard to get people to sign up). The major impact on her class was the need to change the course outline: tests were covered before probability. When compared to a class without the project on 5 questions from the ARTIST database, there was no significant difference.

“Comparison of two Different Project-based Strategies for Teaching Introductory Statistics,” Anand Pardhanani, Southwestern University, pardhana@southwestern.edu. He compared outcomes from a class with several “miniprojects” to one with a semester-long comprehensive project. The class with miniprojects worked in groups of 2 or 3 on 5 specific and task-oriented projects which counted as 20% of the final grade. In the other class, students picked their own topic of interest, did some survey-based research, analysis and graphs, and wrote a report. The comprehensive project counted as 10% of the final grade. In reporting the results, he said it was challenging to assess any difference in effectiveness: there was no big difference in grades on the final exam. Students did get the big picture better with the big project, but it didn’t excite or motivate them. He found they exerted a minimum of effort to seek guidance.

“The Statistics Concept Inventory: An Instrument for Assessing Student Understanding of Statistics Outcomes,” Teri Murphy, University of Oklahoma, tjmurphy@math.ou.edu. She reported on a project which began in Fall 2002 to write a test to assess learning, similar to the Force Concept Inventory for Physics. The focus is on conceptual understanding. There are 38 multiple choice items in the areas of descriptive statistics, probability, inference and graphics.
It has been a pencil-and-paper test, but an on-line version is now available. They have been doing psychometric analysis of the data and need more people to increase n! She presented some examples of test items, such as which graph shows a different data set (<50% got this one correct), and how much we can increase the highest number so that the median does not increase (77% correct responses on the post test). For more information, see coecs.ou.edu/sci/.

“Examples of Assessments to Improve Student Learning in Introductory Statistics.” Allan Rossman, Cal Poly San Luis Obispo, arossman@calpoly.edu. He advocated several different types of assessments.
“What Went Wrong?” Exercises (Formative Assessment): Students are given other students’ mistakes and asked to tell what was done incorrectly. For example, given a set of data, a student reported the median number of roller coaster inversions was 3.5 (they didn’t take into account that not all values were equally likely).
Investigation Assignments (Formative/Summative): These build on student activities and homework. For example, given data on how many people you know with last names appearing on a list of 250 names, students are asked to produce a stem-and-leaf plot, write a paragraph commenting on the distribution, calculate and report the mean and standard deviation, determine what percentage of these scores fall within one standard deviation of the mean (is it close to 68%?), determine and report the 5-number summary, determine if there are outliers, and produce a boxplot of the data.
Exam Questions (Summative Assessment): This was the last category. Many of his examples were very conceptual, for example, “It can be shown that the sum of residuals from a least squares regression must always equal 0. Does it follow that the mean of the residuals must always equal zero? Explain. Does it follow that the median of the residuals must always equal zero? Explain.”
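For readers who would like to try the residuals question numerically, here is a small Python sketch. It is not from Rossman's talk: the data values are made up purely for illustration, and the function name is hypothetical. It fits a simple least squares line with an intercept and then looks at the sum, mean and median of the residuals.

```python
import statistics


def least_squares_residuals(x, y):
    """Fit y = intercept + slope * x by ordinary least squares and
    return the list of residuals (observed minus fitted)."""
    x_bar = statistics.fmean(x)
    y_bar = statistics.fmean(y)
    sxx = sum((xi - x_bar) ** 2 for xi in x)
    if sxx == 0:
        raise ValueError("x values must not all be equal")
    sxy = sum((xi - x_bar) * (yi - y_bar) for xi, yi in zip(x, y))
    slope = sxy / sxx
    intercept = y_bar - slope * x_bar
    return [yi - (intercept + slope * xi) for xi, yi in zip(x, y)]


# Made-up data with one unusually large y value, so the residuals are skewed.
x = [1, 2, 3, 4, 5]
y = [1, 2, 3, 5, 20]
residuals = least_squares_residuals(x, y)

print("sum of residuals:   ", round(sum(residuals), 10))
print("mean of residuals:  ", round(statistics.fmean(residuals), 10))
print("median of residuals:", round(statistics.median(residuals), 10))
```

With these made-up values the sum and mean come out at zero up to rounding, since the mean is just the sum divided by n, while the median is near -0.1. A single counterexample like this is enough to show that the median of the residuals need not be zero.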
Other Stat-Ed related presentations

Other statistics related presentations were submitted to other sessions or were not included in the SIGMAA’s session. (I give the title, presenter, affiliation and e-mail contact for most, as we all know it’s impossible to attend all the talks!) Abstracts can be found by presenter’s name on the AMS website at www.ams.org/amsmtgs/2095_presenters.html. My apologies to anyone whose presentation may have been missed.

“Thinking Statistically in Writing: Journals and Discussion Boards in an Introductory Statistics Course,” Julie Theoret, Lyndon State College, Julie.Theoret@LyndonState.edu.
“Web Quests for Introductory Statistics Students,” Kimberly Presser, Shippensburg University, kjpres@ship.edu.
“Statistics: Meet Me Online,” Sarah Mabrouk, Framingham State College, smabrouk@frc.mass.edu.
“Teaching Calculus Based Probability and Statistics with Technology,” Andrew Glen, USMA (no email address available).
“Runs of Heads and Tails – Genuine or Bogus?” Bill Linderman, King College, wclinder@king.edu.
“The Probability of a Tied Vote under a Multiple Vote Scheme,” Dennis Walsh, dwalsh@mtsu.edu.
“Picking the President.” Joseph Evan, King’s College, jmevan@kings.edu.
“Hosting a Probability and Statistics Institute,” Sharon Emerson-Stonnell, emersonstonnellss@longwood.edu.
“Theoretical Lenses for Examining Undergraduate Students’ Statistical Thinking,” Randall Groth, Salisbury University, regroth@salisbury.edu.
“How Well can Random Walkers Rank Football Teams?” Mason Porter, California Institute of Technology, mason@caltech.edu.
“Paired comparison ranking systems: an old topic and a new application,” Douglas Drinen, Sewanee: The University of the South, ddrinen@sewanee.edu.
“The Price is Right’s Three Strikes Game and Other Possibilities,” Paula Stickles, Indiana University, pstickles@indiana.edu.
“Bill James as an exemplar of Statistical Writing,” William Branson, St. Cloud State University, wbbranson@stcloudstate.edu.
“Chances in Life – Creating a Statistics/Biology Learning Community,” William Ardis, Collin County Community College Preston Ridge Campus, bardis@ccccd.edu.
“Curtailment Procedure for Selecting Among Bernoulli Populations,” Elaina Buzaianu, Syracuse University, etomsa@syr.edu.
“Visualizing the Method of Finding Volumes of Revolution by Cross Sections: An Eggsperiment,” Patricia Humphrey, Georgia Southern University, phumphre@georgiasouthern.edu. An application of actual data gathering and analysis (paired t test) in a Calculus I class.
“Statistics Before Your Eyes: Photographs of Statistical Concepts,” Robert Jernigan, American University, Jernigan@american.edu. He had some really neat photos: the way people grab door handles, etc., makes a bell curve!
“Student Statistical Analysis of a Student Tutoring Laboratory,” Richard Summers, Reinhardt College, rds@reinhardt.edu.
“Whaddya Bet? Probability Investigations through Simulation,” Jean McGivney-Burelle, University of Hartford, burelle@hartford.edu.
“Adventures with Regression in the RI State Legislature,” Barry Schiller, Rhode Island College, bschiller@ric.edu.
“Coin Tossing as a Favorite Class Experience, What is it Good For?” Bill Rybolt, Babson College, rybolt@babson.edu.

Upcoming Events

July 2 – 7, 2006: ICOTS-7 in Salvador, Brazil. Information can be found at http://www.maths.otago.ac.nz/icots7/icots7.php
August 5 – 10, 2006: Joint Statistical Meetings, Seattle WA
August 10 – 12, 2006: MathFest, Knoxville TN
January 4 – 7, 2007: Joint Math Meetings, New Orleans