A central activity within the Canadian Army's combat development

advertisement

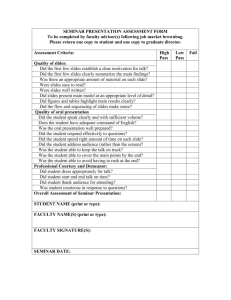

21st International Symposium on Military Operational Research
September 2nd, 2004

An Introduction to the Development, Application, Uses and Limitations of STAMPER: Systematic Task Analysis for Measuring Performance and Evaluating Risk

Eugenia Kalantzis
Operations Research Analyst
Director General Land Combat Development
Fort Frontenac, PO Box 17000 Station Forces
Kingston, Ontario, Canada K7K 7B4
(613) 541-5010 x 2469
Kalantzis.E@forces.gc.ca

Abstract

A central activity within the Canadian Army's combat development process is a series of seminar wargames used to evaluate experimental force structures and capabilities. In support of this activity, the Director General Land Combat Development (DGLCD) operational research team was tasked with providing analytical support and guidance to the seminar process and with developing a robust methodology to measure the performance of competing systems. Working closely with the client, the team developed a methodology based on evaluating how well a system performs a predetermined list of tasks. This methodology is referred to as STAMPER, or Systematic Task Analysis for Measuring Performance and Evaluating Risk. It involved the development of a set of task lists, of software-supported tools for measuring performance and risk, and of a procedure for computing overall measures of performance for each of the five operational functions of Command, Sense, Act, Shield and Sustain. These measures of performance are based on a simple model that, although useful in indicating general trends, must be interpreted with extreme caution. This paper describes the steps involved in the development and application of the STAMPER process.
In particular, it explains the crucial role of the client-analyst collaboration, describes the simple models used to assign measures of risk and to calculate overall performance measures, and lists the strengths and limitations of these models along with examples of possible misuse and misinterpretation of the results.

BACKGROUND

In June 2003, the Director of Army Doctrine (DAD) was tasked with developing a force employment concept for the Interim Army. In support of this initiative, DAD directed the design and execution of a series of wargames to assess the evolving concepts. Warfighting was selected as the focus of the initial seminar wargames; in due course, however, the seminar series will expand to include peace support, non-combatant evacuation and emergency domestic operations. The first seminar wargame, Force Employment Wargame 0401, was held in February 2004 and examined the performance of a Main Contingency Force brigade group and battle group. The Canadian force structure for the first seminar was based on equipment available at the time, including Leopard tanks and M109 medium guns. The aim of the first wargame was to establish a baseline of performance against which the impact of proposed organizational changes could be assessed, with the intent of furthering the force employment concept for the Interim Army.

The second seminar wargame, Force Employment Wargame 0402, was held in May 2004 and was modelled on the baseline seminar wargame. Its purpose was to evaluate the performance of a proposed Interim Army force structure and to compare these results with those obtained in the baseline wargame. To support this aim, the scenarios and the Red Force remained essentially unchanged; however, the Blue Force structure was modified to include weapons systems and equipment that are programmed to be in place for the Interim Army.
Of particular note was the removal of tanks and M109s and the insertion of the Mobile Gun System, the TOW missile system on a LAV chassis and the Multi-Mission Effects Vehicle Version 1, all part of the direct fire system. A mobile artillery vehicle using a 105 mm gun mounted on the bed of a variant of the Mobile Support Vehicle System was also introduced.

SPONSOR OBJECTIVES

The ongoing objectives of the sponsor are to assess the impact of changes in structures, equipment and capabilities that will come into effect during the Interim Army timeframe, with a view to further refining the force employment concept in preparation for field trials at the Canadian Manoeuvre Training Centre and eventual incorporation into doctrine, including Tactics, Techniques and Procedures (TTPs) and Standard Operating Procedures (SOPs). Insights and judgments resulting from these seminar wargames are intended to guide follow-on seminar wargame iterations, to further the Interim Army Force Employment Concept development process, and to prioritize operational research activities by identifying spin-off issues that would be most effectively dealt with using more traditional computer-assisted wargaming techniques.

SEMINAR WARGAME SCENARIOS

The scenario used for the wargame series was based on the Department of National Defence’s Force Planning Scenario 11, but set 10 years after the original conflict. The scenario emphasized urban operations and the ‘three block war’. Six vignettes were wargamed: three by the brigade group and three by the battle group. On Day 1, in open terrain, the Canadian brigade group and battle group were tasked with capturing objectives and destroying enemy forces established in a fairly conventional defensive position. On Day 2, in urban terrain, operations involved seizing key nodes within the city core.
On Day 3, set three days after the cessation of formal hostilities, operations included a mission in mountainous terrain, concurrent with stability operations over a large sector.

OPERATIONAL RESEARCH TEAM ROLES AND OBJECTIVES

The operational research (OR) team was responsible for defining the problem and scope of the exercise, selecting and designing the appropriate methodologies and criteria for investigating the issues, balancing the methodologies against constraints of time and resources, implementing the data collection plan, and collecting, extracting and presenting the results. In addition to these traditional OR responsibilities, the specific objectives for this exercise were to develop a comprehensive and robust methodology to collect quantitative and qualitative measures of performance, and to design a process by which performance and risk could be evaluated quantitatively and compared across vignettes and seminar wargames. This latter objective included the design of a final product that was simple and easy for a diverse audience within the Canadian Army to interpret.

DESIGN OF THE DATA COLLECTION METHODOLOGY

The importance of the client-analyst collaboration was evident in the first stage of the project, i.e. the design of the data collection methodology. Designing an appropriate methodology required a thorough understanding of the sponsor’s objectives and requirements, as well as of the constraints imposed by the seminar wargame process itself. Given the very tight timelines, this methodology was designed in parallel with the sponsor’s design of the seminar wargame process. Daily interaction and close collaboration were essential to ensure that the two activities converged into a well-knit process.
In the design of the data collection methodology, the following points were of particular importance:

- The nature of a seminar wargame is such that much complexity is hidden in the verbal interplay. Essentially, any discussion could contain an argument about strengths or limitations buried within it. If not actively recorded, these points risked being lost.
- Particularly in the case of the Interim Army, knowledge of capabilities, equipment and structures is imperfect or incomplete. As such, any model designed must not require input that is more detailed than what is available. Stated simply, the model should not be over-designed.
- The transient nature of the wargaming participants, particularly the combat developers who make up the core team, requires a methodology that new players can adopt simply and quickly.
- Participants are classified as part of the core team, as subject matter experts, or as observers. Different mechanisms must be put in place to record and classify observations from each of these groups.
- The task loading of the core team, i.e. the combat developers, and the time constraints imposed by the seminar wargame process necessitate a data collection scheme that is relatively quick and easy.

In consideration of these points, a two-tier approach was taken in developing the analysis methodology, to address both the qualitative nature of the seminar wargame format and the requirement for quantitative results. First, the qualitative data collection process involved formal judgements and insights sessions, the submission of observation sheets by all participants, and the compilation of strengths, weaknesses and issues matrices by the combat developers for each of the five operational functions.
Qualitative data were collected and categorized in an ACCESS database designed to allow the analyst to filter the observations selectively and to produce reports that could be channelled to the appropriate member of the staff for further action. Qualitative data collection is not discussed further in this paper. Second, quantitative data analysis was conducted using the STAMPER methodology, as described herein.

STAMPER: SYSTEMATIC TASK ANALYSIS FOR MEASURING PERFORMANCE AND EVALUATING RISK

The purpose of the STAMPER methodology was to provide a systematic framework to measure performance and identify risk factors, and to compare variations in system performance between vignettes, as well as from one seminar wargame iteration to the next. This was done with a series of survey instruments used to elicit the subjective judgements of a team of assessors on the system’s performance of essential tasks. In addition, the task lists underlying these instruments provided a framework for discussion during the seminar wargame sessions, as each combat developer assessed the performance of the tasks under his operational function.

In selecting the models used to calculate overall performance and to assess risk, a simple model was deemed most appropriate because knowledge of the innovative, and often exploratory, concepts and equipment introduced during this seminar wargame series was incomplete. The expected model fidelity was matched to the question at hand: the model was designed to provide a level of detail equal to, and supported by, the fidelity of the inputs available to it.
The development of the STAMPER methodology began three months prior to the baseline seminar wargame and consisted of the following activities: the development of the DGLCD Task Lists; the creation of the survey instruments and automated tools; and the development of a measurement methodology to identify risk factors and assign appropriate scores to individual tasks and to the overall operational functions.

DGLCD Task List

During the task list development stage, a comprehensive task list analysis was performed by the DAD combat developers and their staff. The objective of this exercise was to produce a complete list of tasks under the responsibility of the Army, divided into the five operational functions of Command, Sense, Act, Shield and Sustain. These are referred to as the DGLCD Task Lists (DTLs). The DTLs draw their origins from recognized task lists such as the Canadian Joint Task List, the subsequently developed Canadian Army Task List and the Canadian Brigade Battle Task Standards, as well as other informally developed task lists. For each operational function, Level 1, Level 2 and Level 3 tasks were identified, with each level depicting finer granularity than the previous one. Figure 1 depicts a sample of the Command task list breakdown.

Figure 1 Sample of the Command Task List Breakdown

Survey Instruments

The DTLs form the foundation of the survey instruments used to facilitate the elicitation of expert opinion, and to measure performance and evaluate risk factors observed during each of the seminar wargames. In completing the survey instruments, assessors were asked to evaluate tasks along two dimensions: performance level, and impact/importance of the task on the completion of the higher-level task. Table 1 presents the two questions that appeared on the survey instruments, as well as the response options available to the participants.
Additionally, the table specifies the category of tasks to which each question applies. Of particular note, participants were required to score the performance of Level 3 tasks only. The performance of Level 2 and Level 1 tasks was calculated automatically from the performance scores of Level 3 tasks in combination with the impact scores of Level 3, Level 2 and Level 1 tasks.

Table 1 Survey Instrument Questions and Response Options

Question 1: Performance
Given your experience and the discussions held during the seminar wargame, please indicate the level of overall performance you believe we would most likely achieve in the completion of this task during this mission, given the capability-set assumed for this seminar wargame.
Response options:
1. Unacceptable: task incomplete
2. Undesirable: task completed below standard
3. Acceptable: task completed to minimum standards
4. Superior: task completed to above minimum standards
Action required: Respond to Question 1 for Level 3 tasks only.

Question 2: Impact
For this particular mission, how would you characterize the impact or importance of this task in the overall performance of the higher-level task it belongs to?
Response options:
1. Critical: Highly correlated with the successful completion of the task
2. Important: Correlated with the successful completion of the task
3. Minor: Low correlation with the successful completion of the task
4. Negligible: Minimally affecting the successful completion of the task
Action required: Review default Question 2 results for Level 3, 2 and 1 tasks; update if necessary.

Calculating Performance Scores and Assigning Risk

For each of the Level 2 and Level 1 tasks, as well as for the overall operational functions, a measure of performance was calculated from the Question 1 and Question 2 results for Level 3 tasks, in combination with the Question 2 results for Level 2 and Level 1 tasks.
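The inputs to this calculation can be pictured as a small task tree. The following Python is a minimal, hypothetical representation (the analysis tool described in this paper was Excel-based; the class and field names here are illustrative assumptions): it encodes the two response scales of Table 1 and checks the survey rule that only Level 3 tasks receive a performance rating, while tasks at every level carry an impact rating.

```python
from dataclasses import dataclass, field
from enum import IntEnum
from typing import List, Optional


class Performance(IntEnum):
    """Question 1 responses, numbered as in Table 1."""
    UNACCEPTABLE = 1  # task incomplete
    UNDESIRABLE = 2   # task completed below standard
    ACCEPTABLE = 3    # task completed to minimum standards
    SUPERIOR = 4      # task completed to above minimum standards


class Impact(IntEnum):
    """Question 2 responses, numbered as in Table 1."""
    CRITICAL = 1
    IMPORTANT = 2
    MINOR = 3
    NEGLIGIBLE = 4


@dataclass
class Task:
    """One node of a DGLCD Task List (hypothetical representation)."""
    name: str
    level: int                                 # 1, 2 or 3
    impact: Impact                             # Question 2, asked at every level
    performance: Optional[Performance] = None  # Question 1, Level 3 only
    subtasks: List["Task"] = field(default_factory=list)

    def validate(self) -> None:
        """Raise ValueError if the survey rules of Table 1 are violated."""
        if self.level == 3:
            if self.performance is None or self.subtasks:
                raise ValueError(f"{self.name}: Level 3 needs a rating and no sub-tasks")
            return
        if self.performance is not None:
            raise ValueError(f"{self.name}: Level {self.level} is computed, not rated")
        if not self.subtasks:
            raise ValueError(f"{self.name}: Level {self.level} needs sub-tasks")
        for sub in self.subtasks:
            if sub.level != self.level + 1:
                raise ValueError(f"{sub.name}: expected Level {self.level + 1}")
            sub.validate()
```

Under this sketch, an operational function is simply a list of Level 1 tasks, and the default Question 2 values reviewed by participants would be the `impact` fields.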
The calculation of performance is based on an inner-product rule in which each task is attributed points as a function of its performance and impact. Points are attributed as per the Point Allocation Matrix in Figure 2. Essentially, the measure of performance of a Level 2 task is the weighted average of the performance scores assigned to the Level 3 tasks belonging to it. Weights were assigned to tasks based on the survey responses to Question 2 on impact; tasks estimated to be of higher importance were assigned a higher weight. As such,

$$\text{Performance score of a Level 2 task} = \frac{\sum \text{points for Level 3 tasks belonging to the Level 2 task}}{\sum \text{weights for Level 3 tasks belonging to the Level 2 task}}$$

In a similar fashion, the measure of performance of a Level 1 task is the weighted average of the performance of the Level 2 tasks belonging to it, and the measure of performance of an operational function is the weighted average of the performance of the Level 1 tasks belonging to it. However, in the roll-up for Level 1 tasks and for overall operational function performance scores, the points assigned to each sub-task are calculated as the product of the sub-task performance score, as calculated in the previous step, and the weight assigned according to the response to Question 2 on impact.

Figure 2 Point Allocation Matrix

For each of the Level 3 tasks, a measure of risk was obtained using the results of Question 1 and Question 2. A risk indicator was assigned to each task following the Risk Assessment Matrix in Figure 3. The lower the score, the higher the risk associated with that task. Low scores, colour-coded in red and yellow, identify tasks that would endanger mission success, whereas high scores, colour-coded in green and blue, identify tasks that would contribute to mission success.
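The risk lookup for Level 3 tasks amounts to indexing a matrix by the two survey responses and mapping the result to a colour band. The sketch below assumes hypothetical matrix values and colour thresholds, since the Risk Assessment Matrix in Figure 3 is not reproduced in the text; only the structure (lower score means higher risk, red/yellow endangering and green/blue contributing) follows the description above. `RISK_MATRIX`, `risk_colour` and `assess_level3` are illustrative names.

```python
from typing import Dict, Tuple

# HYPOTHETICAL Risk Assessment Matrix: the real values are in Figure 3,
# which is not reproduced here. Key = (Question 1 response, Question 2
# response), where Q1 1 = Unacceptable and Q2 1 = Critical.
# Lower score = higher risk.
RISK_MATRIX: Dict[Tuple[int, int], int] = {
    (1, 1): 1, (1, 2): 1, (1, 3): 2, (1, 4): 3,  # Unacceptable
    (2, 1): 2, (2, 2): 3, (2, 3): 4, (2, 4): 4,  # Undesirable
    (3, 1): 6, (3, 2): 6, (3, 3): 5, (3, 4): 5,  # Acceptable
    (4, 1): 8, (4, 2): 8, (4, 3): 7, (4, 4): 7,  # Superior
}


def risk_colour(score: int) -> str:
    """Map a risk score to a colour band (HYPOTHETICAL thresholds)."""
    if score <= 2:
        return "red"     # endangers mission success
    if score <= 4:
        return "yellow"  # endangers mission success
    if score <= 6:
        return "green"   # contributes to mission success
    return "blue"        # contributes to mission success


def assess_level3(responses: Dict[str, Tuple[int, int]]) -> Dict[str, Tuple[int, str]]:
    """Assign a (risk score, colour) pair to each Level 3 task.

    `responses` maps task name -> (performance response, impact response).
    Risk stays per task; nothing here aggregates it to higher levels.
    """
    result = {}
    for task, (performance, impact) in responses.items():
        score = RISK_MATRIX[(performance, impact)]
        result[task] = (score, risk_colour(score))
    return result
```

With these assumed values, a Critical task rated Unacceptable falls in red, while the same task rated Superior falls in blue.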
The assigned risk indicators were not rolled up in the way the performance measures were. This was done to ensure that high-risk tasks remained visible throughout the exercise, and that this information was not obscured when results were merged to obtain measures of performance at higher levels. Instead, these scores would be compared with those collected in future iterations, and changes would be examined at this level of granularity.

Figure 3 Risk Assessment Matrix

Automated Tool to Calculate Performance, Risk and Deltas

In preparation for the seminar wargame, an Excel-based analysis tool was designed to automatically assemble responses from participants, to assign a measure of risk as a function of performance and impact scores, to calculate an overall performance score for Level 2 and Level 1 tasks, and to roll up these scores into an overall measure of performance at the operational function level. In addition, following the second seminar wargame, the tool was updated to automatically display the change in performance and impact/importance ratings, as well as the change in calculated performance and risk scores. This analysis was performed for each of the six vignettes and for each of the five operational functions. Figures 4 and 5 depict snapshots of the tool as it is used to automatically calculate Level 2 and Level 1 scores, and to display changes in performance scores from one seminar wargame to the next, respectively.
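The performance roll-up and the wargame-to-wargame deltas computed by the tool can be sketched in a few lines of Python. This is not the Excel tool itself but a minimal sketch assuming that Level 3 points equal a performance value multiplied by an impact weight (consistent with the inner-product rule described above), with hypothetical performance values of 25, 50, 75 and 100 and hypothetical weights of 4, 3, 2 and 1 for Critical through Negligible; the actual Point Allocation Matrix values are not given in the text. Function names are illustrative.

```python
from typing import Dict, List, Tuple

# HYPOTHETICAL values standing in for the Point Allocation Matrix (Figure 2).
PERF_VALUE = {1: 25.0, 2: 50.0, 3: 75.0, 4: 100.0}  # Question 1 response -> score
IMPACT_WEIGHT = {1: 4.0, 2: 3.0, 3: 2.0, 4: 1.0}    # Question 2 response -> weight


def weighted_score(items: List[Tuple[float, int]]) -> float:
    """Return sum(points) / sum(weights), with points = score * weight.

    `items` holds (sub-task score, sub-task impact response) pairs; the same
    rule rolls Level 2 up to Level 1 and Level 1 up to the function.
    """
    points = sum(score * IMPACT_WEIGHT[impact] for score, impact in items)
    weights = sum(IMPACT_WEIGHT[impact] for _, impact in items)
    return points / weights


def level2_score(level3: List[Tuple[int, int]]) -> float:
    """Roll Level 3 (performance response, impact response) pairs up to Level 2."""
    return weighted_score([(PERF_VALUE[perf], impact) for perf, impact in level3])


def deltas(baseline: Dict[str, float], iteration: Dict[str, float]) -> Dict[str, float]:
    """Change in each computed score between two wargames (iteration - baseline)."""
    common = sorted(baseline.keys() & iteration.keys())
    return {name: iteration[name] - baseline[name] for name in common}
```

With these assumed values, a Level 2 task whose Level 3 tasks were rated Acceptable (Critical), Superior (Minor) and Unacceptable (Negligible) scores (75×4 + 100×2 + 25×1)/(4+2+1) = 525/7 = 75, the Acceptable value, even though one of its sub-tasks was rated Unacceptable. The higher-level score alone does not reveal that sub-task.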
Figure 4 Automatic Calculation of Performance Scores for Level 2 and Level 1 Tasks

Figure 5 Automatic Calculation of Change in Performance Scores from Baseline to Iteration 1

Strengths

Among others, the use of the STAMPER methodology presented the following benefits:

- The development of the process itself required close collaboration between the sponsor and the analyst. This collaboration engaged the participants fully and instilled a sense of ownership in the process that was essential to the success of the exercise.
- The process provided a framework for a structured evaluation of performance across a wide and complete range of tasks.
- The quantitative results complemented the results extracted from the qualitative data collection.
- As a visualization tool, the methodology allowed quick identification of performance and risk results, as well as of changes in these measures from one iteration to the next.
- The automated tool ensured the availability of near real-time results. These results were then available at end-of-day judgements and insights sessions, to be used as required.

Limitations

Limitations inherent to the STAMPER methodology are as follows:

- Quantitative results are currently based on the opinion of a small pool of subject matter experts.
- Sensitivity analysis results for performance scores vary across operational functions. As such, performance scores should not be compared across these functions. However, within a given operational function, comparisons can be made across scenarios/vignettes (e.g. open vs. urban) and across capability sets (i.e. across seminar wargames).
- Changes in performance levels should be interpreted with care. These deltas should be used as indicators or pointers to an underlying phenomenon requiring further investigation.
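The sensitivity analysis mentioned in these limitations is not detailed in this paper. The following minimal sketch illustrates one plausible reason why results could differ between functions, assuming the weighted-average roll-up described earlier and the same hypothetical, evenly spaced performance values (25, 50, 75, 100) and weights (4, 3, 2, 1). Because every roll-up step is a weighted average, a function score is a linear combination of its Level 3 scores, so the effect of moving one rating by one step depends on the shape of the task tree and on the impact weights. The tree encoding and function names are illustrative assumptions.

```python
from typing import Iterator, List, Tuple, Union

# HYPOTHETICAL values (Figure 2 is not reproduced in the text).
PERF_VALUE = {1: 25.0, 2: 50.0, 3: 75.0, 4: 100.0}  # evenly spaced
STEP = 25.0                                          # gap between adjacent ratings
IMPACT_WEIGHT = {1: 4.0, 2: 3.0, 3: 2.0, 4: 1.0}

# A node is (impact response, rating) for a Level 3 task, or
# (impact response, [child nodes]) for a Level 2 or Level 1 task.
Node = Tuple[int, Union[int, list]]


def function_score(nodes: List[Node]) -> float:
    """Weighted-average roll-up of a list of sibling tasks."""
    total = sum(IMPACT_WEIGHT[impact] for impact, _ in nodes)
    points = sum(
        IMPACT_WEIGHT[impact]
        * (PERF_VALUE[body] if isinstance(body, int) else function_score(body))
        for impact, body in nodes
    )
    return points / total


def shares(nodes: List[Node], share: float = 1.0) -> Iterator[float]:
    """Yield each Level 3 task's share of the function score.

    A task's share is the product of its normalised weights along the path
    from the operational function down to the task.
    """
    total = sum(IMPACT_WEIGHT[impact] for impact, _ in nodes)
    for impact, body in nodes:
        s = share * IMPACT_WEIGHT[impact] / total
        if isinstance(body, int):
            yield s
        else:
            yield from shares(body, s)


def max_one_step_shift(nodes: List[Node]) -> float:
    """Largest function-score change from moving a single Level 3 rating one step."""
    return STEP * max(shares(nodes))
```

For example, a function made of one Level 1 task, one Level 2 task and two Critical Level 3 tasks can move by 12.5 points when one rating changes by one step, whereas a larger, differently weighted tree can move by a different amount (about 10.7 points in the test case). Under these assumptions, equal score gaps need not reflect equal differences in performance from one function to another.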
The quantitative results alone do not provide a complete picture of the issue, nor do they explain why a given level of performance was achieved or why the score changed. These results should therefore be interpreted jointly with the qualitative results to provide a complete picture.

Examples of Misuse

Quantitative results such as these are often misinterpreted or used out of context, and they tend to take on a life of their own. A common mistake in the use of this model is to focus on the higher-level performance scores and to ignore the performance scores of individual sub-tasks. For example, if an operational function performance score of 80 is obtained in a particular situation, there is a tendency to assume that all sub-tasks within this function were performed at an equally acceptable level. This is not necessarily true: particular sub-tasks within that operational function may have been performed at less than acceptable levels. Only an investigation of performance scores at all task levels reveals the complete picture.

Another possible misuse of the model is the comparison of performance scores across operational functions. For example, where the Sustain and Command functions obtain scores of 70 and 80, respectively, there is a tendency to conclude that Command was performed better than Sustain. Although this may be true, the level of fidelity inherent in this model cannot justify this type of broad statement.

CONCLUSION

The seminar format provided a suitable setting for discussing organizational strengths and weaknesses, as well as for identifying more specific issues warranting further study. The STAMPER methodology provided quantitative results that supported the qualitative results collected in seminar discussions and judgements and insights sessions; together, the two tiers of data collection yielded a complete picture of events.
In addition, the methodology included a final product that was simple and easy for a diverse audience within the Canadian Army to interpret. As with other simple models, results should be interpreted with caution. Conclusions drawn from the results should match the level of fidelity inherent in the model itself and should not go beyond it. That said, the tool is useful for highlighting changes in levels of performance and for indicating the presence of underlying phenomena warranting further study.