UNC-C Methodology decisions v8

A framework for deciding how to pick the right methodology

Dr. Bjarne Berg

Table of Contents

INTRODUCTION

BACKGROUND

HISTORICAL PERSPECTIVE OF FOUR DIFFERENT APPROACHES

A. Joint Application Design

B. Rapid Application Development

C. System Development Life Cycle Methodologies

D. Extreme Programming

PROPOSED FRAMEWORK

LIMITATIONS, ASSUMPTIONS AND RISKS

CONCLUSIONS

WORKS CITED


Introduction

One of the first steps in developing a custom information technology system is gathering the right requirements. This is often done in a variety of ways, depending on the methodology the company employs. This paper explores some of the methods and approaches for gathering business requirements from a current and historical perspective. It also proposes a framework for deciding which method to employ under various conditions.

Background

Gathering business requirements is a complex process and involves a period of discovery and education, as well as formal communication, reviews and final approvals. There are many ways to accomplish these objectives and several approaches have been proposed over the years.

Currently, the most common methodologies are Rapid Application Development (RAD), Joint Application Design (JAD), a collection of methodologies known as the System Development Life Cycle (SDLC) methodologies, named as a group after the approach on which they are based, and the more recent Extreme Programming (XP), which became formalized over the last decade.

While these methods propose unique ways to achieve the same objective, they all rely on the same criterion for achieving the final outcome: accurate business requirements.

It is also important to note that most successful information technology implementations are based on meeting multiple defined business requirements. This means that there is not a single “one right requirement”, but a series of requirements that must be met. Unfortunately, many of these requirements can also be mutually exclusive or simply contradictory. Therefore, an information technology implementation does not simply involve a series of black-and-white technical decisions; just because something is technically feasible does not mean it is wise or desirable from a business perspective. The core issue in a successful implementation is to strike a balance between what is technically feasible, within the resource framework of the project, and what the users expect from a functional standpoint. Information system managers must therefore first bring a strategic perspective to the project by offering insight into potential technologies. The tactical aspects of the project can then be presented to the project team, explored, discussed and decided upon.

Depending on the methodology employed, the focus of requirements gathering varies. Most traditional methodologies are based on the System Development Life Cycle (SDLC) approach. Under this approach, the two key strategic tasks are to completely define applications in the context of business requirements and to select technology based on compatibility and organizational know-how. Under the SDLC methodologies, defining an enterprise's requirements as completely as possible is extremely important, because even modest changes in the applications' functions are assumed to cause dramatic changes to the resulting tactical choices.

For an SDLC methodology, the longer the project duration, the more important the methodology is for keeping the project on track.

Another issue is the execution of the requirements gathering. Under all methodologies, the business requirements analyst is responsible for collecting business requirements from the business organization through interviews and meetings, and for consolidating them. The analyst must then present the findings in a way that is useful to the project team and that can be agreed to by the project sponsor. As a result, the business analyst must also have solid “diplomacy” skills.

Normally the role of a business analyst is performed by an individual who is familiar with the company’s line of business or business processes. Typical skill requirements also include excellent interviewing skills, the ability to consolidate and prioritize information, strong organization and attention to detail, the ability to recognize other opportunities, and the ability to manage expectations as part of the interview process (Berg, 1997).

Before we propose a new framework, let us review the history and purposes of the four core methodologies used today.

Historical Perspective of Four Different Approaches

A. Joint Application Design

In the late 1970s, in response to complaints about how requirements were gathered under the traditional methodologies, IBM developed the first versions of Joint Application Design (JAD). The methodology development team consisted of two key individuals, Tony Crawford of Canada and Chuck Morris of North Carolina. The first success of this method was in Canada, where the developers of the JAD approach conducted a set of classes and seminars. With Tony Crawford as the “evangelist” of JAD, it was soon adopted by many companies and organizations. However, the first uses of JAD were limited to user requirements gathering and sometimes application design (Soltys & Crawford, 1997). The JAD workshop approach to obtaining requirements was an alternative to the structured interviews that the SDLC methodologies advocated.

The following narrative illustrates the SDLC challenges of obtaining system requirements: “In most organizations, the systems development life cycle begins with the identification of a need, assignment of a project leader and team, and often the selection of a catchy acronym for the project. The leader pursues a series of separate meetings with the people who will use the system or be affected by it ... when it becomes apparent that the requirements from, say Accounting, don’t mesh with what the Sales department wants, the leader calls Sales and finds out the contact there is in the field and will not be back until tomorrow. Next day the leader reaches Sales, gets the information, calls accounting, and of course the person in Accounting is now out of the office, and so on. When everyone is finally in agreement, alas, the leader discovers that even more people should have been consulted because their needs require something entirely different. In the end, everyone is reluctant to "sign off" on the specifications. Other times, signing off comes easily. But when the system is delivered, it often has little to do with what the users really need” (Wood & Silver, 1995). "A user sign off is a powerless piece of paper" when matched against the fury of top management (Wetherbe, 1991).

JAD did not initially extend into the construction and implementation phases of the project. Mr. Crawford initially maintained that JAD was a set of workshops, not a comprehensive methodology. The core challenge that JAD addressed was merging business knowledge with information technology design to provide useful interaction and thereby improve the quality of the systems being developed. However, other companies soon adopted JAD and tailored it to their needs. A related concept, Joint Requirements Planning (JRP), focuses on the whole plan and execution of getting the “right” requirements. JRP often supplements, rather than replaces, the JAD sessions used for specific program components (Yatco, 1999).

So, while JAD started as a method for obtaining requirements only, it has evolved over time to become much broader. As part of its GIS publications, the University of Maine suggests using JAD as “one of the tools through out the system development process to keep the users involved all the time” and not merely as part of the requirements gathering phase where it started (Botkin, 1999). This view is reinforced by several universities that currently teach and advocate that JAD now covers the complete development life cycle of a system and is no longer confined to the requirements gathering part of the project (The University of Texas, 2004). The term JAD is today used so broadly that it has been described as a “joint venture among any people who need to make decisions affecting multiple areas of an organization.” In this case, JAD is defined as “a structured workshop where people come together to plan projects, design systems, or make business decisions” (Damian et al., 1999).

Proponents of JAD claim that its advantages include a dramatic shortening of the time it takes to complete a project. It also improves the quality of the final product by focusing on the up-front portion of the development lifecycle, thus reducing the likelihood of errors that are expensive to correct later on. The rationale for JAD was that system development had some inherent laws of diminishing returns. The argument was that the traditional methods have several built-in delay factors that get worse as more people become involved. As a result, the traditional methods were not scalable to larger information technology projects (Wood & Silver, 1995). The guiding principles of JAD sessions are:

1. Keep it very focused
2. Conduct it in a dedicated environment
3. Quickly drive major requirements and interface "look & feel"

JAD participants typically include:

1. Facilitator – facilitates discussions, enforces rules
2. End users – 3 to 5, attend all sessions
3. Developers – 2 or 3, question for clarity
4. Tie Breaker – Senior manager. Breaks end user ties, usually doesn’t attend
5. Observers – 2 or 3, do not speak
6. Subject Matter Experts – limited number for understanding business & technology

Today, the JAD session is so all-encompassing that it covers everything from simple work sessions to more advanced brainstorming sessions with tools and technology to capture ideas. JAD quickly became a tool for Business Process Re-engineering (BPR), information system architecture definition, business strategy planning, project management and data modeling (Jennerich, 1990).

In the 1990s, it even extended into group dynamics and team formation theory, including concepts such as "forming, storming, norming and performing," and into conflict management (Soltys & Crawford, 1999).

As technology became more available, the JAD session also often became virtual. When JAD was first adopted as a methodology, a major proclaimed benefit was the unity of the team effort. However, as JAD gained adoption in the 1990s, many organizations began cutting the cost of the sessions by reducing travel expenses (Journal of Systems Management, 1995). It also became very common to employ distributed prototype teams enabled by computer-supported cooperative work (Nobuhiro et al., 1998).

It is important to note that the distribution of the team and the reduced interaction were, for many, the reason why JAD became less popular as a methodology in the late 1990s; today it is rather uncommon as the sole development approach. It did, however, lay the foundation for other, more radical approaches such as Rapid Application Development (RAD) and Extreme Programming (XP).

B. Rapid Application Development

As JAD became established as a formal methodology, many critics of the SDLC methodologies had begun using Computer-Aided Software Engineering (CASE) tools to do interactive prototyping with users (Soltys & Crawford, 1999). An early critic of the traditional System Development Life Cycle methodologies was Barry Boehm. As a leading software development manager at TRW, a diversified aerospace, automotive and credit-reporting company, he introduced early on a technique he called the “Spiral Model”. This model was an approach to software development driven not by the programming steps, but by the risk of the project. He therefore believed that the correct approach should focus on process modeling instead of the phases proposed in the SDLC methods. A core proposition for reducing project risk was to rely heavily on prototyping (Boehm, 1986).

The development approach of Boehm’s methodology was to separate the final deliverable into critical parts and perform a risk assessment of each part. This was followed by prototyping of high-risk areas as a way to drive the requirements and refine the final deliverables interactively with the user community (Boehm, 1988). The heavy use of prototyping already had a strong following. In the late 1970s, Tom Gilb had developed a method known as the Evolutionary Life Cycle (ELC). Under this approach there were no formal project phases; instead, each part of the project was developed together with the users through interactive sessions where prototypes were demonstrated and feedback was sought (Gilb, XXXX).

Both of these methods were considered so complementary that a logical framework had to be established to integrate them in a formal manner. This framework methodology soon became known as Rapid Iterative Production Prototyping (RIPP). The core user of this methodology, and a driving force behind it, was software development work done at DuPont in the 1980s. RIPP soon needed to be integrated into the prevailing SDLC methods employed in the software industry and in most corporations. An early advocate of merging the best features of RIPP and the SDLC methods was James Martin. Over time, he merged these approaches and proposed the first definition of RAD as “a development lifecycle designed to give much faster development and higher-quality results than those achieved with the traditional lifecycle. It is designed to take the maximum advantage of powerful development software that has evolved recently.” (Martin, 1990). In general, RAD compresses the phases of an SDLC methodology and introduces an iterative approach within each of these phases. It also combines the design and construction stages into a single phase where the development process is iterative rather than sequential.

The core benefit is the time-to-delivery of the system. In addition, advocates of RAD claim improved user satisfaction and less risk to the overall information system development effort. These are very important attributes. According to the Gartner Group, an influential research and advisory firm for strategy and information technology, most organizations face a large backlog of new systems to be developed. Over 65% of the typical budget is spent on maintaining existing systems. These systems have little documentation and were developed with programming languages and database systems that are difficult and time-consuming to change.

These organizations are thus faced with upgrading their aging systems or building new applications. Traditional development lifecycles, however, are too slow and rigid to meet the business demands of today’s economy. A new methodology must be implemented, one that allows organizations to build software applications faster, better, and cheaper. RAD enables such development.

Dr. Kettemborough has proposed an alternative but similar definition, broadening RAD to “an approach to building computer systems which combines Computer-Assisted Software Engineering (CASE) tools and techniques, user-driven prototyping, and stringent project delivery time limits into a potent, tested, reliable formula for top-notch quality and productivity. RAD drastically raises the quality of finished systems while reducing the time it takes to build them.” (Kettemborough, 1999).

In addition to its reliance on prototyping, a defining characteristic of RAD is its abbreviated analysis stage, where meetings are held in short succession to gather the requirements, together with the combination of the design and construction stages of the project. It also typically involves extensive early prototyping and tends to be used for smaller, stand-alone systems that require less integration into larger environments (University of California at Davis, 1996). In general, applications built with RAD tend to be characterized by having few interfaces to maintain within a larger system landscape.

The critical step in RAD business requirements gathering is the initial information requirements gathering session. Such a session is typically a one- or two-day meeting of uninterrupted time, with lunch provided on-site. Mobile phones, PDAs and pagers are normally not allowed. The audience for these sessions typically includes power users, casual users, people who currently interact with the existing system, and managers who have a stake in the outcome of the information system development. A rapid pace is kept in these meetings, and the number of attendees is kept at a manageable level, typically no more than twenty people. The coordinators and business analysts focus on shared information needs and conduct multiple sessions if needed. A guiding principle is that the meeting should not get trapped in details, since people are given a chance to provide feedback in writing for follow-up sessions with individuals.

The RAD initial information gathering sessions typically employ instruments such as a simple form called an "information request form", which is used to gather the core relevant information about each report or process being requested by the business community and about the availability of the underlying information. This instrument is used to initially document requirements in a standardized format, to prioritize and consolidate the requirements, and to support follow-up discussions and reviews.
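To make the prioritize-and-consolidate step concrete, the following is a minimal sketch in Python. It is not part of any RAD standard: the form fields (`requester`, `item`, `priority`, `notes`) and the helper `consolidate` are hypothetical, and duplicate requests are detected naively by comparing item names. Forms asking for the same item are merged, the most urgent priority any requester assigned is kept, and the result is ordered for the follow-up review.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class InformationRequest:
    """One submitted information request form (hypothetical fields)."""
    requester: str
    item: str       # report, process or data element being requested
    priority: int   # 1 = highest priority
    notes: str = ""


@dataclass
class ConsolidatedRequirement:
    """A single requirement after duplicate requests have been merged."""
    item: str
    priority: int
    requesters: list[str] = field(default_factory=list)


def consolidate(forms: list[InformationRequest]) -> list[ConsolidatedRequirement]:
    """Merge forms that ask for the same item, keep the most urgent priority
    any requester gave it, and return the result ordered for review."""
    merged: dict[str, ConsolidatedRequirement] = {}
    for form in forms:
        key = form.item.strip().lower()  # naive duplicate detection by item name
        req = merged.get(key)
        if req is None:
            req = ConsolidatedRequirement(form.item.strip(), form.priority)
            merged[key] = req
        req.priority = min(req.priority, form.priority)
        if form.requester not in req.requesters:
            req.requesters.append(form.requester)
    # Most urgent first; among equal priorities, the most widely requested first
    return sorted(merged.values(), key=lambda r: (r.priority, -len(r.requesters), r.item))


forms = [
    InformationRequest("Sales", "Monthly revenue report", 2),
    InformationRequest("Accounting", "monthly revenue report ", 1),
    InformationRequest("HR", "Headcount report", 3),
]
for req in consolidate(forms):
    print(req.priority, req.item, req.requesters)
# 1 Monthly revenue report ['Sales', 'Accounting']
# 3 Headcount report ['HR']
```

Keeping the list of requesters on each consolidated requirement preserves who asked for what, which is the information the business analysts need when they return to individuals in follow-up sessions.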

Companies that use RAD as a development method often post such a form on the intranet, thereby giving stakeholders an easy way to communicate with the project team. A similar approach is often also used for security requirements and for dispositioning the requirements. Not all requirements belong in the information system, and it is important that the developers do not use the system as a "dumping ground". The project management and the business analysts are charged with making cost-effective decisions. In general, the project team is responsible for making conscious decisions on what to include in the system and how to deliver the solution.

However, without strong “guiding principles” there is a significant risk that the development designs and the system architecture evolve in an unplanned way and therefore have a high cost of ownership. The benefits of RAD are many. Beyond those mentioned earlier, advocates of RAD claim increased user involvement, less disruption to the business, a better chance of avoiding reliance on individual opinions and reaching group consensus on system requirements, and the ability to use the RAD sessions as an information-sharing and education event for the user community.

C. System Development Life Cycle Methodologies

According to the System Development Life Cycle (SDLC) methodologies, the business analyst is responsible for verifying that the information system being developed supports the functional requirements of the project. During the analysis phase, this individual is responsible for gathering detailed reporting requirements from the business users and the groups currently engaged in the process within the organization. This is done through a set of structured interviews with people who are identified as “stakeholders”. A key deliverable from this effort is a set of detailed functional requirements for the information system. To produce these functional specifications, the business analyst must have detailed knowledge of the industry in which the company operates and a solid understanding of the information needs of such an organization.

According to the SDLC methodologies, this analyst also manages the user acceptance testing and the feedback process from representatives of the user community, and ensures that those requirements are being met by the system being built. During the roll-out of the system, the business analyst is engaged in developing the user documentation as well as developing and delivering the user training.

These traditional lifecycle-based methodologies were devised in the 1970s and are still widely used today. In general, they are all based on a structured, step-by-step approach to developing systems. This rigid sequence of steps requires users to “sign off” after the completion of each specification before development can proceed to the next step. The requirements and design are then frozen, and the system is coded, tested, and implemented. With such conventional methods, there is a long delay before the customer sees any results, and the development process can take so long that the customer’s business could fundamentally change before the system is even ready for use. This has been the area of greatest contention among critics of the SDLC methodologies.

The SDLC methodologies typically structure the work into a project preparation phase, an analysis phase, a design phase, a construction and testing phase, and a final implementation phase. However, each vendor uses different names for these phases. Companies such as SAP, the world’s second largest software company, refer to the design and construction phases as “blueprinting” and “realization”, while others stick with the more traditional names (Berg, 2004).
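The sign-off gate that distinguishes the SDLC methodologies can be illustrated with a small state machine. This is a hypothetical Python sketch, not any vendor's methodology: it uses the five generic phase names listed above, models the users' formal approval as a `sign_off()` call, and refuses to advance until that approval is recorded. The class offers no way to move backward, so approved deliverables stay frozen, which mirrors the rigidity described above.

```python
from __future__ import annotations

# Generic phase names from the text; vendors such as SAP use other labels.
PHASES = [
    "project preparation",
    "analysis",
    "design",
    "construction and testing",
    "implementation",
]


class SignOffRequired(Exception):
    """Raised when work tries to move past a phase that lacks user sign-off."""


class SdlcProject:
    """Hypothetical model of a sequential, sign-off-gated SDLC project."""

    def __init__(self) -> None:
        self.current = 0                  # index into PHASES; only ever increases
        self.signed_off: set[str] = set()

    @property
    def phase(self) -> str:
        return PHASES[self.current]

    def sign_off(self) -> None:
        """Record the users' formal approval of the current phase's deliverables."""
        self.signed_off.add(self.phase)

    def advance(self) -> str:
        """Move to the next phase, but only after the current one is signed off.
        There is no way back, so approved deliverables stay frozen."""
        if self.phase not in self.signed_off:
            raise SignOffRequired(f"'{self.phase}' has not been signed off")
        if self.current == len(PHASES) - 1:
            raise ValueError("the project is already in its final phase")
        self.current += 1
        return self.phase


project = SdlcProject()
project.sign_off()
print(project.advance())  # analysis
```

By contrast, the iterative approaches discussed in this paper would allow earlier phases to be revisited, which this model deliberately forbids.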


D. Extreme Programming (XP)

Extreme Programming is a methodology that has evolved over the last decade. The argument is that traditional methodologies were developed to build software for environments with low levels of change and reasonably predictable desired outcomes. But the business world is no longer very predictable, and software requirements change at extremely high rates (Caristi, 2002). XP therefore started when programmers engaged in development with the users decided that the SDLC and JAD/RAD requirements gathering sessions took too much time and often just verified what they already knew, and that development could be completed faster through the collaborative efforts of teamed programmers. The core project phases still exist, but development is highly interactive, and work done in the design phase is subject to change during the construction phase as better solutions are found (Beck, 2000). The project phases therefore do not follow a “waterfall” methodology; instead, they are revisited until the final solution is completed. The core premise of XP is that you can only pick three of these four dimensions: cost, quality, scope and time.

The best way to understand XP is to review its approach to work. Work starts with planning. In this stage, user stories are written from a user interaction standpoint. Next, the project development schedule is determined from the software release plan. In XP, the release plan should consist of many small releases. Also, the momentum of the project, known as the “project velocity”, is measured, and the project is divided into smaller iterations. People on the project may be reassigned to different work in the various iterations. Finally, from an execution standpoint, a stand-up meeting starts each day, and replanning occurs based on the previous day’s work progress (Caristi, 2002).
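Project velocity is what ties the release plan to the individual iterations. A common XP practice, assumed here rather than taken from the sources cited above, is to measure velocity as the total estimate of the user stories actually completed in the previous iteration and to commit to no more than that amount in the next one. The Python sketch below illustrates this with hypothetical names (`UserStory`, `project_velocity`, `plan_iteration`). Estimates are in abstract story points, and stories that do not fit the remaining capacity are simply skipped, a simplification of the customer-driven story selection XP actually uses.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class UserStory:
    title: str
    estimate: int   # story points (an assumed unit)
    priority: int   # 1 = most important to the customer
    done: bool = False


def project_velocity(last_iteration: list[UserStory]) -> int:
    """Velocity: total estimate of the stories actually finished last iteration."""
    return sum(story.estimate for story in last_iteration if story.done)


def plan_iteration(backlog: list[UserStory], velocity: int) -> list[UserStory]:
    """Take stories in priority order without exceeding the measured velocity.
    Stories that do not fit the remaining capacity are skipped."""
    plan: list[UserStory] = []
    capacity = velocity
    for story in sorted(backlog, key=lambda s: s.priority):
        if story.estimate <= capacity:
            plan.append(story)
            capacity -= story.estimate
    return plan


last_iteration = [
    UserStory("Login", 3, 1, done=True),
    UserStory("Search", 5, 2, done=True),
    UserStory("Export", 2, 3, done=False),  # unfinished work does not count
]
backlog = [
    UserStory("Reports", 5, 1),
    UserStory("Audit log", 4, 2),
    UserStory("Help page", 2, 3),
]
velocity = project_velocity(last_iteration)
print(velocity)                                              # 8
print([s.title for s in plan_iteration(backlog, velocity)])  # ['Reports', 'Help page']
```

Because velocity is recomputed from what was actually finished, an iteration that under-delivers automatically shrinks the next commitment, which is the replanning behavior the paragraph above describes.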


The next phase is the design phase. The guiding principle of design is simplicity, and CRC (Class-Responsibility-Collaborator) cards are used for short interactive design sessions. During the design work, simple stand-alone solutions are encouraged to reduce risk, and no functionality beyond what is currently needed is included during the design stage. Such functionality is added later, if time allows, during the construction phase.

The key to a successful design under XP is to refactor the work whenever and wherever possible.

During the construction phase, also known as coding, the customer must always be present. To ensure quality, all code must adhere to previously agreed standards, and developers are responsible for unit testing all code they develop. A distinctive feature of XP is that all coding is done in pairs, and only one pair of programmers can integrate their code into the system at any given time. The system source code is also collectively owned, and final performance optimization is done after all the code components are integrated.

Before the testing stage, all code must pass its unit tests. In addition, whenever a bug is discovered, a test case is created to verify the extent of the error and its possible impact. The testing stage also relies heavily on acceptance testing, and multiple sessions are held for each iteration as well as for each software release. In XP, the acceptance test results are also scored and published to the team (Beck, 2000).

Many critics have raised concerns about the way XP approaches information system development. Among the most frequent is its reliance on verbal communication, which may lead to “finger pointing” when things go wrong. Critics also point to its intuition-based decision making and its high degree of dependency on individual skills, and they charge that it is managed with insufficient planning and lacks a sound approach to system verification and validation. As a result, they argue, XP leads to rework as the norm, error-prone and non-maintainable systems, frustrated staff, and few heroes (Keefer, 2003).


Even advocates of XP have pointed to its limitations. It has been claimed that XP does not work in “Dilbertesque” companies (companies with a high degree of control for the sake of control). XP does not work in groups of more than about 20 programmers. It is hard to implement in environments with a high degree of commitment to existing code due to service level agreements. XP is also hard to implement in outsourced development environments where programmers are geographically separated (Caristi, 2002). Others argue that certain core requirements must be met to create an environment where XP can thrive. First, there must be open, honest communication among programmers and between programmers and customers. Programmers must enjoy their jobs, and instead of project managers there are project coaches who act as motivators (they may still be called “managers”). A major issue is that collaboration is genuinely hard and is normally not rewarded in business, where winners are typically selected as individuals and not as teams. Despite these criticisms, XP has a strong following in the programming community.

While some see XP as the result of programmers rebelling against the structure enforced by companies, others see it as a path back to “sanity” without artificial, non-value-adding paperwork.

We will explore the application of XP as we look into a proposed framework for deciding when to employ the various methodologies.

Proposed Framework

The core drivers for selecting a methodology are the time to delivery and the impact of failure if the project does not succeed. If an organization has ample time for delivery and the impact of failure is high, it is very unlikely that XP would be suitable. The impact of failure is the core reason why organizations such as NASA are not strong proponents of XP: the impact of failure is simply too high. It is also a reason why Extreme Programming has gained such a large following in the web development community. The core traits there are a short time to delivery for web pages and a low impact of failure. For example, if CNN cannot deliver the news rapidly, the content is worthless after a short time; however, if the formatting is suboptimal, it is not detrimental to the organization and nobody dies.

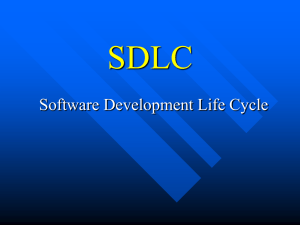

Using these two dimensions and classifying each from low to high, we obtain a two-by-two “magic quadrant”.

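Figure 1: When to select different methodologies (vertical axis: Time to Delivery, low to high; horizontal axis: Impact of Failure, low to high)

High time to delivery, low impact of failure: Joint Application Design (JAD)
High time to delivery, high impact of failure: System Development Life-Cycle based methodologies (SDLC)
Low time to delivery, low impact of failure: Extreme Programming (XP)
Low time to delivery, high impact of failure: Rapid Application Development (RAD)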

SDLC is the appropriate technique when the impact of failure is high and the time to “get it right” is available. It is a rigid structure that follows clearly defined deliverables and formal checkpoints in the form of “structured walkthroughs” of deliverables and a formal approval process. This is why these methodologies are favored for mission-critical application development.


RAD is the preferred methodology if the time to delivery is compressed while the impact of failure remains high. Since RAD relies heavily on prototyping, the application is developed faster and the end outcome is better known earlier in the process.

Extreme Programming (XP) is often the preferred method when developing web pages and stand-alone applications. This is due to its rapid development cycle and the fact that most web and stand-alone applications can be rewritten quickly with minimal disruption to the organization (the impact of failure is typically low).

JAD is the appropriate technique when the available delivery time is relatively long compared with XP and RAD, and the impact of failure is low. Under these conditions, group consensus can be achieved, since more time is available than with RAD and XP. Because the requirements are gathered in group sessions, this technique is unlikely to produce the optimal requirements for applications; rather, it produces the consensus of the community. This is fine when building user-based applications, but when the system is launching a plane, or the impact of failure is otherwise high, a better approach might be to prototype or to conduct structured interviews, as proposed by the RAD and SDLC methodologies, respectively.
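The two-by-two framework can be expressed as a simple lookup. The Python sketch below is a hypothetical illustration, not part of the framework as proposed: it assumes each dimension is scored on a 0-to-1 scale, that 0.5 separates "low" from "high", and that scores within 0.1 of that threshold fall into the "gray zones" discussed under limitations, in which case every adjacent methodology is returned as a candidate. The scale, `threshold` and `margin` values are all assumptions.

```python
from __future__ import annotations

from enum import Enum


class Level(Enum):
    LOW = "low"
    HIGH = "high"


# Figure 1 as a lookup: (time to delivery, impact of failure) -> methodology
FRAMEWORK = {
    (Level.HIGH, Level.LOW): "JAD",
    (Level.HIGH, Level.HIGH): "SDLC",
    (Level.LOW, Level.LOW): "XP",
    (Level.LOW, Level.HIGH): "RAD",
}


def classify(score: float, threshold: float = 0.5, margin: float = 0.1) -> set[Level]:
    """Map an assumed 0-to-1 score to LOW and/or HIGH. Scores within `margin`
    of the threshold are in a gray zone and count as both."""
    if not 0.0 <= score <= 1.0:
        raise ValueError("score must be between 0 and 1")
    if margin < 0.0:
        raise ValueError("margin must be non-negative")
    levels = set()
    if score <= threshold + margin:
        levels.add(Level.LOW)
    if score > threshold - margin:
        levels.add(Level.HIGH)
    return levels


def candidate_methodologies(time_to_delivery: float, impact_of_failure: float) -> list[str]:
    """Return every methodology whose quadrant the project may fall into."""
    return sorted(
        FRAMEWORK[(t, i)]
        for t in classify(time_to_delivery)
        for i in classify(impact_of_failure)
    )


print(candidate_methodologies(0.9, 0.9))  # ['SDLC']
print(candidate_methodologies(0.2, 0.9))  # ['RAD']
print(candidate_methodologies(0.5, 0.9))  # ['RAD', 'SDLC'] (gray zone)
```

With `margin=0.0` the function reduces to a strict two-by-two reading of Figure 1, in which a score at or below the threshold counts as low; a positive margin makes the gray zones explicit instead of forcing a single answer.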

Limitations, Assumptions and Risks

This paper has only examined the methodologies from a historical perspective and assumes that the reader has a basic understanding of each methodology. The paper also examines the prevailing views of the methods, but acknowledges that different views exist in the field as to the validity of the scope of each method. This is particularly true of many vendor-provided SDLC methodologies, where components from JAD, RAD and XP have been added to give those methodologies more flexibility.


Another limitation concerns the boundaries between the areas of the proposed framework. While presented as clearly delineated areas of selection, there are in fact situations in which multiple methodologies could be employed, e.g., when the time to delivery or the impact of failure is moderate. As a result, the framework is intended to illustrate how the appropriateness of each methodology differs. The decision is clearest at the extremes; in reality, there may be “gray zones” where more than one answer may be correct.

Conclusions

There are many ways to build information systems, from the highly structured SDLC methodologies to the free-flowing XP. Instead of continuing a rather fruitless discussion on which is “best”, a better approach is to discuss the circumstances under which each method should be employed. In management this is known as situation-based management, where the approach is tailored to the needs and the situation. SDLC-based methodologies have their place as long as they are employed in a flexible manner tailored to each project. However, XP also has a place in software development when the impact of failure is low and the need for flexibility and delivery in a compressed timeframe is high.

If we are to progress as a science, software development must mature to a level where managers have many tools available, and where the “hammer” no longer argues continuously that it is better than the “saw”…


Works Cited

Beck, Kent (1997). “Chrysler Comprehensive Compensation with XP”. The Agile Software Development Alliance, published February 2001.

Beck, Kent (2000). “Extreme Programming Explained: Embrace Change”. Addison Wesley Longman, Inc.

Berg, Bjarne (1997). “Introduction to Data Warehousing”. Module 2, pp. 88-107. New York, NY: Price Waterhouse LLP, October.

Berg, Bjarne (2004). “Managing BW Projects – Part 2”. SAP Project Management Conference, Las Vegas, NV. WIS Publishing, November.

Boehm, Barry (1986). “A Spiral Model of Software Development and Enhancement”. ACM SIGSOFT Software Engineering Notes, August.

Boehm, Barry (1988). “A Spiral Model of Software Development and Enhancement”. IEEE Computer, vol. 21, no. 5, May, pp. 61-72.

Botkin, John (1998). “Customer Involved Participation as Part of the Application Development Process”. University of Maine.

Caristi, James (2002). “Extreme Programming: Theory & Practices”. Valparaiso University. SIGSEE 2002 conference tutorial.

Damian, Adrian; Hong, Danfeng; Li, Holly; Pan, Dong (1999). “Joint Application Development and Participatory Design”. University of Calgary, Dept. of Computer Science.

Dennis, Alan R.; Hayes, Glenda S.; Daniels, Robert M. Jr. (1990). “Business Process Modeling with Group Support Systems”. Journal of Management Information Systems, Spring, pp. 115-142.

Gilb, Tom (1989). “Principles of Software Engineering Management”. Addison-Wesley Longman.

Jennerich, Bill (1990). “Joint Application Design – Business Requirements Analysis for Successful Re-Engineering”. UniSphere Ltd., November.

Journal of Systems Management (1995). “JAD Basics”. Sept./Oct. ed.

Keefer, Gerold (2003). “Extreme Programming Considered Harmful for Reliable Software Development”. AVOCA GmbH, September ed.

Kettemborough, Clifford (1999). “The Prototyping Methodology”. Whitehead College, University of Redlands.

Larman, Craig; Basili, Victor R. (2003). “Iterative and Incremental Development: A Brief History”. IEEE Computer, pp. 47-56.

Martin, James (1990). “Information Engineering Planning & Analysis, Book II”. Prentice-Hall, Inc.

Nobuhiro, Kataoka; Hisao, Koizumi; Kinya, Takasaki; & Norio Shiratori (1998). “Remote Joint Application Design Process Using Package Software”. 13th International Conference on Information Networking (ICOIN '98), January, pp. 0495.

Soltys, Roman; Crawford, Anthony (1998). “JAD for Business Plans and Designs”. The Process Improvement Institute, October.

University of California (1996). “Application Development Methodology”. UCD. Online at http://sysdev.ucdavis.edu/WEBADM/document/toc.html

The University of Texas at Austin (2004). “Joint Application Development (JAD): What Do You Really Want?” Online at http://www.utexas.edu/admin/ohr/is/pubs/jad.html. Accessed October 24.

Wetherbe, James C. (1991). “Executive Information Requirements: Getting It Right”. MIS Quarterly, March, p. 51.

Wood, J.; Silver, D. (1995). “Joint Application Development”, 2nd ed. New York: Wiley.

Yatco, Mei (1999). “Joint Application Design/Development”. School of Business, University of Missouri-St. Louis.
