Chapter 3 - Chris Bilder's

advertisement

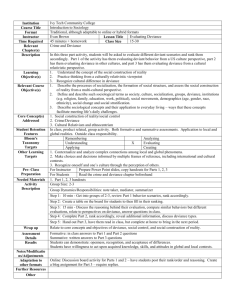

These are additional notes for Chapter 3.

2010 Christopher R. Bilder

Cumulative distribution functions (CDFs)

Example: Binomial distribution (binomial_ch3.R)

Binomial distribution:

$$P(Y=y) = \frac{n!}{y!(n-y)!}\pi^{y}(1-\pi)^{n-y} \quad \text{for } y = 0, 1, \ldots, n$$

Suppose $\pi = 0.6$ and $n = 5$. What is the probability distribution?

$$P(Y=0) = \frac{5!}{0!(5-0)!}\,0.6^{0}(1-0.6)^{5-0} = 0.4^{5} \approx 0.0102$$

For Y = 0,…,5:

    y   P(Y=y)
    0   0.0102
    1   0.0768
    2   0.2304
    3   0.3456
    4   0.2592
    5   0.0778

The cumulative distribution function is:

    y   P(Y=y)   P(Y≤y)
    0   0.0102   0.0102
    1   0.0768   0.0102+0.0768 = 0.0870
    2   0.2304   0.3174
    3   0.3456   0.6630
    4   0.2592   0.9222
    5   0.0778   1.0000

R code and output:

    > #n=5, pi=0.6
    > pdf<-dbinom(x = 0:5, size = 5, prob = 0.6)
    > cdf<-pbinom(q = 0:5, size = 5, prob = 0.6)
    > save<-data.frame(Y = 0:5, prob = round(pdf,4), cdf = round(cdf,4))
    > save
      Y   prob    cdf
    1 0 0.0102 0.0102
    2 1 0.0768 0.0870
    3 2 0.2304 0.3174
    4 3 0.3456 0.6630
    5 4 0.2592 0.9222
    6 5 0.0778 1.0000

    > plot(x = save$Y, y = save$cdf, type = "s", xlab = "X",
        ylab = "Probability", lwd = 2, col = "blue",
        panel.first=grid(col = "gray"), main = expression(
        paste("CDF of a binomial distribution for n=5, ", pi == 0.6)))
    > abline(h=0)
    > segments(x0 = 5, y0 = 1, x1 = 10, y1 = 1, lwd = 2, col = "blue")
    > segments(x0 = -5, y0 = 0, x1 = 0, y1 = 0, lwd = 2, col = "blue")
    > segments(x0 = 0, y0 = 0, x1 = 0, y1 = dbinom(0, 5, 0.6), lwd = 2,
        col = "blue")

[Figure: CDF of a binomial distribution for n=5, π=0.6; x-axis: y, y-axis: Probability]

Example: Uniform distribution

Let X have a uniform probability distribution. The probability distribution function for X can be represented by

$$f(x) = \frac{1}{b-a} \quad \text{for } a \le x \le b$$

The parameters, a and b, control the location and spread of the distribution. In general, this is what a graph of the distribution looks like.

[Figure: graph of f(x) against x, a rectangle of height 1/(b-a) between a and b with Area = 1]

The cumulative distribution function can be found by finding P(X≤x):

$$F(x) = \int_a^x f(u)\,du = \int_a^x \frac{1}{b-a}\,du = \left.\frac{u}{b-a}\right|_a^x = \frac{x}{b-a} - \frac{a}{b-a} = \frac{x-a}{b-a}$$

For example, F(2) = (2-a)/(b-a) for a ≤ 2 ≤ b.

Mean and variance for logistic probability distribution

Let X have a logistic probability distribution. The probability distribution function for X can be represented by

$$f(x) = \frac{1}{\sigma}\,\frac{e^{-(x-\mu)/\sigma}}{\left[1+e^{-(x-\mu)/\sigma}\right]^{2}}$$

for $-\infty < x < \infty$ and parameters $-\infty < \mu < \infty$ and $\sigma > 0$. Note that $E(X) = \mu$ and $Var(X) = \sigma^2\pi^2/3 > \sigma^2$, where $\pi \approx 3.14159$ here is the mathematical constant rather than a probability. Below is some Maple code and output showing E(X) and Var(X).

    > f(x):=1/sigma * exp(-(x-mu)/sigma)/(1+exp(-(x-mu)/sigma))^2;
          f(x) := e^(-(x-μ)/σ) / (σ (1 + e^(-(x-μ)/σ))^2)
    > assume(sigma>0);
    > int(f(x), x=-infinity..infinity);
          1
    > E(X):=int(x*f(x), x = -infinity..infinity);
          E(X) := ln(e^μ)
    > Var(X):=int((x-E(X))^2*f(x), x = -infinity..infinity);
          Var(X) := (1/3) σ^2 π^2

Maple reports E(X) as ln(e^μ), which simplifies to μ.

Quasi-Poisson models for count data

In order to find the maximum likelihood estimates for $\alpha$ and $\beta$, we need

$$\frac{\partial \log \ell(\alpha, \beta \mid y_1,\ldots,y_n)}{\partial \alpha} \quad \text{and} \quad \frac{\partial \log \ell(\alpha, \beta \mid y_1,\ldots,y_n)}{\partial \beta}$$

These equations are set equal to 0 and solved for $\alpha$ and $\beta$. In general, equations that are set equal to 0 and then solved for parameter estimates are called “estimating equations.” This makes sense here because our “estimates” result from these “equations.”

Wedderburn (1974) suggested using quasi-likelihood methods to find parameter estimates for a generalized linear model. In this setting, one assumes a particular relationship between the mean and variance, BUT no particular distribution for the response variable.

In the count data situation, we can proceed in a similar manner as before, but with some adjustments. Let the relationship between the mean and variance be $Var(Y) = \phi E(Y)$ for some constant $\phi$, but do not assume Y has a Poisson distribution. Notice that when $\phi > 1$, the data are overdispersed relative to the regular Poisson distribution, for which the variance equals the mean. Models of this type are called quasi-Poisson.
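To make the mean-variance relationship concrete, here is a minimal sketch in R. It does not use the horseshoe crab data: the data frame `sim`, its columns, and the negative binomial simulation are hypothetical choices made only so the example is self-contained. It assumes the constant in the variance relationship is estimated by the Pearson statistic from the ordinary Poisson fit divided by the residual degrees of freedom, which is the quantity R reports as the dispersion parameter for a `quasipoisson` fit.

```r
# Hypothetical simulated data (not the horseshoe crab data): counts whose
# variance exceeds their mean, generated from a negative binomial distribution.
set.seed(7812)
sim <- data.frame(x = runif(n = 200, min = 20, max = 34))
sim$y <- rnbinom(n = 200, mu = exp(-3.3 + 0.16 * sim$x), size = 1.5)

# Same mean model, fit by regular likelihood and by quasi-likelihood
fit.pois <- glm(formula = y ~ x, data = sim, family = poisson(link = log))
fit.quasi <- glm(formula = y ~ x, data = sim, family = quasipoisson(link = log))

# Pearson statistic from the regular Poisson fit divided by n - p
X2 <- sum(resid(fit.pois, type = "pearson")^2)
phi.hat <- X2 / fit.pois$df.residual

# Standard errors under each approach
se.pois <- sqrt(diag(vcov(fit.pois)))
se.quasi <- sqrt(diag(vcov(fit.quasi)))

phi.hat                        # estimate computed by hand
summary(fit.quasi)$dispersion  # estimate reported by R
cbind(se.pois, se.quasi, ratio = se.quasi / se.pois, sqrt.phi = sqrt(phi.hat))
```

In this sketch the parameter estimates from the two fits agree, and the ratio of the standard errors equals the square root of the estimated dispersion.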
The estimating equation used for the quasi-likelihood approach for count data is

$$\sum_{i=1}^{n} \frac{Y_i - \mu_i}{\phi\mu_i} = 0$$

There are some nice similarities between this quasi-likelihood approach and the regular likelihood approach:
1) The estimating equations end up being the same except for $\phi$!
2) The estimated variances for $\hat{\alpha}$ and $\hat{\beta}$ are the same except the quasi-likelihood variances are $\phi$ times those of the regular likelihood approach.

What does one use for the numerical value of $\phi$? Wedderburn suggests using $X^2/(n-p)$, where $X^2$ is the Pearson statistic from the regular likelihood approach model and p is the number of model parameters.

Note that we can no longer do likelihood ratio tests here because the regular likelihood approach is not being used.

Please see Agresti (2002, p. 149-151) for more details.

Example: Horseshoe crabs and satellites (horseshoe.R, horseshoe.txt)

The model is estimated by glm() again, but now the family option has changed to quasipoisson(link = log).

    > mod.fit.quasi<-glm(formula = satellite ~ width, data = crab,
        family = quasipoisson(link = log), na.action = na.exclude,
        control = list(epsilon = 0.0001, maxit = 50, trace = T))
    Deviance = 759.6346 Iterations - 1
    Deviance = 580.078 Iterations - 2
    Deviance = 567.9793 Iterations - 3
    Deviance = 567.8786 Iterations - 4
    Deviance = 567.8786 Iterations - 5

    > summary(mod.fit.quasi)

    Call:
    glm(formula = satellite ~ width, family = quasipoisson(link = log),
        data = crab, na.action = na.exclude, control = list(epsilon = 1e-04,
        maxit = 50, trace = T))

    Deviance residuals:
        Min       1Q   Median       3Q      Max
    -2.8526  -1.9884  -0.4933   1.0970   4.9221

    Coefficients:
                Estimate Std. Error t value Pr(>|t|)
    (Intercept) -3.30476    0.96731  -3.416 0.000793 ***
    width        0.16405    0.03562   4.606    8e-06 ***
    ---
    Signif. codes:  0 ‘***’ 0.001 ‘**’ 0.01 ‘*’ 0.05 ‘.’ 0.1 ‘ ’ 1

    (Dispersion parameter for quasipoisson family taken to be 3.182624)

        Null deviance: 632.79  on 172  degrees of freedom
    Residual deviance: 567.88  on 171  degrees of freedom
    AIC: NA

    Number of Fisher Scoring iterations: 5

Notice the output here is exactly the same as what we had for the regular Poisson regression model except for a few differences:
1) The “dispersion parameter” is the estimate of $\phi$, say $\hat{\phi}$, and it is given to be 3.18. Because this value is greater than 1, there may be overdispersion.
2) The estimated standard deviations for the model parameter estimates are $\sqrt{\widehat{Var}(\hat{\alpha})}$ = 0.96731 and $\sqrt{\widehat{Var}(\hat{\beta})}$ = 0.03562. The regular Poisson regression model had values of 0.54222 and 0.01996, respectively. Notice that multiplying the regular Poisson values by $\sqrt{\hat{\phi}}$ gives the quasi-Poisson values, up to rounding of $\hat{\phi}$:

        > 0.54222*sqrt(3.18)
        [1] 0.9669168
        > 0.01996*sqrt(3.18)
        [1] 0.03559378

3) With the larger standard errors, the Wald statistics are smaller and the p-values larger.
4) There is no AIC listed because this is not a full likelihood method (more on the AIC in later chapters).

Poisson regression for rate data

To help fix potential problems with the standard normal approximation, the Poisson data can be condensed over the same explanatory variable values. This was done in the horseshoe crab example by condensing the data over width and transforming the data into “rate” data. Thus, let $y_j$ denote the total count for $t_j$ observations. The “j” subscript is used to help differentiate between the different coding.

The Pearson residual now becomes

$$\frac{y_j - \hat{\mu}_j}{\sqrt{\hat{\mu}_j}} \quad \text{where } \hat{\mu}_j = t_j e^{\hat{\alpha} + \hat{\beta} x_j}$$

The standardized residual is found in the usual way, by dividing the Pearson residual by $\sqrt{1-h_j}$, where $h_j$ is the jth leverage value.

For a moderate to large $y_j$ or $\hat{\mu}_j$, the Pearson residual has an approximate standard normal distribution. Pearson residuals outside of $\pm Z_{0.975} = \pm 1.96$ (or $\pm Z_{0.995} = \pm 2.576$) may need to be investigated for being potential outliers.
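The Pearson residual formula for rate data can be checked by hand. The following is a minimal sketch, not the author's program: the data frame `rate.sim` and its columns `x`, `t`, and `y` are hypothetical simulated stand-ins for condensed rate data, and the true parameter values used in the simulation are arbitrary.

```r
# Hypothetical rate data: x = explanatory variable, t = number of
# observations condensed into each row, y = total count for the row.
set.seed(8128)
rate.sim <- data.frame(x = seq(from = 22, to = 32, by = 0.5))
rate.sim$t <- sample(x = 1:10, size = nrow(rate.sim), replace = TRUE)
rate.sim$y <- rpois(n = nrow(rate.sim),
  lambda = rate.sim$t * exp(-3.3 + 0.16 * rate.sim$x))

# Poisson regression for rate data uses log(t) as an offset
fit <- glm(formula = y ~ x + offset(log(t)), data = rate.sim,
  family = poisson(link = log))

# mu.hat_j = t_j * exp(alpha.hat + beta.hat * x_j)
alpha.hat <- coef(fit)[1]
beta.hat <- coef(fit)[2]
mu.hat <- rate.sim$t * exp(alpha.hat + beta.hat * rate.sim$x)

# Pearson and standardized Pearson residuals computed from the formulas
pearson.manual <- (rate.sim$y - mu.hat) / sqrt(mu.hat)
stand.manual <- pearson.manual / sqrt(1 - hatvalues(fit))

# Compare with the values R computes
all.equal(unname(pearson.manual), unname(resid(fit, type = "pearson")))
all.equal(unname(stand.manual), unname(rstandard(fit, type = "pearson")))

# Rows that might be investigated as potential outliers
which(abs(stand.manual) > qnorm(0.975))
```

The sketch uses hatvalues() for the leverages; any row flagged by the last line would be examined further in the same way as in the residual plots for the crab data.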
Example: Horseshoe crabs and satellites (horseshoe.R, horseshoe.txt)

Below is the continued analysis of the Pearson residuals using the rate data.

    > pearson.rate<-resid(object = mod.fit.rate, type="pearson")
    > #Standardized Pearson residuals
    > h.rate<-lm.influence(model = mod.fit.rate)$h
    > head(h.rate)
             1          2          3          4          5          6
    0.01917172 0.01656730 0.04546699 0.04198090 0.02740088 0.04022005
    > standard.pearson.rate<-pearson.rate/sqrt(1-h.rate)
    > head(standard.pearson.rate)
             1          2          3          4          5          6
    -1.0830421 -1.1740631  0.2852841 -0.3358816 -1.2449897 -2.2528002

    > par(mfrow = c(1,2))
    > plot(x = 1:length(standard.pearson.rate), y = standard.pearson.rate,
        xlab="Obs. number", ylab="Standardized residuals",
        main = "Stand. residuals (rate model) vs. obs. number")
    > abline(h = c(qnorm(0.975), qnorm(0.995)), lty=3, col="red")
    > abline(h = c(qnorm(0.025), qnorm(0.005)), lty=3, col="red")

    > plot(x = rate.data$width, y = standard.pearson.rate, xlab="Width",
        ylab="Standardized residuals",
        main = "Stand. residuals (rate model) vs. width")
    > abline(h = c(qnorm(0.975), qnorm(0.995)), lty=3, col="red")
    > abline(h = c(qnorm(0.025), qnorm(0.005)), lty=3, col="red")
    > identify(x = rate.data$width, y = standard.pearson.rate)
    [1] 13 21 26 31 33 49 50

[Figure: two panels, "Stand. residuals (rate model) vs. obs. number" (x-axis: Obs. number) and "Stand. residuals (rate model) vs. width" (x-axis: Width); y-axis: Standardized residuals; points 13, 21, 26, 31, 33, 49, and 50 are labeled in the width panel]

    > par(mfrow = c(1,1))
    > plot(x = rate.data$width, y = standard.pearson.rate, xlab="Width",
        ylab="Standardized residuals",
        main = "Stand. residuals (rate model) vs. width", type = "n")
    > text(x = rate.data$width, y = standard.pearson.rate,
        labels = rate.data$y, cex=0.75)
    > abline(h = c(qnorm(0.975), qnorm(0.995)), lty=3, col="red")
    > abline(h = c(qnorm(0.025), qnorm(0.005)), lty=3, col="red")

[Figure: "Stand. residuals (rate model) vs. width", with each point drawn as its count from rate.data$y; x-axis: Width, y-axis: Standardized residuals]

Notes:
There are some potential outliers, if we believe the standard normal approximation is appropriate. Note that some of the observations with the larger counts are the outliers.
The identify() function allows one to interactively identify a point on a plot by clicking on it. The label given on the plot defaults to the position of the point within the plotted vectors.

Example: Horseshoe crabs and satellites (rate_data_horseshoe.R, horseshoe.txt)

See the rate_data_horseshoe.R program for interesting ways to graphically see the fit of the model. For example, here is one of the plots:

[Figure: "Horseshoe crab data set with poisson regression model fit (rate data)"; x-axis: Width (cm), y-axis: Number of satellites]

Example: Horseshoe crabs and satellites (horseshoe.R, horseshoe.txt): Examining the overall model fit

Because the $\chi^2$ approximation is often poor, other methods are often used to determine if the model fits the data poorly. Agresti (1996) forms groups for the data so that $\hat{\mu}_i > 5$, where i denotes a group number (not in 2007 edition). The observed and predicted values are summed within each group. $X^2$ and $G^2$ are then found for each group.

Another alternative suggested by Agresti (1996) is to fit a model to the grouped data and find $X^2$ and $G^2$ for this new data set.

Using both of these methods, Agresti concludes the Poisson modeling approach is appropriate. The second method is illustrated below.

    > temp2<-c(22.69, 14, 14,
               23.84, 14, 20,
               24.77, 28, 67,
               25.84, 39, 105,
               26.79, 22, 63,
               27.74, 24, 93,
               28.67, 18, 71,
               30.41, 14, 72)
    > temp3<-matrix(data=temp2, nrow=8, ncol=3, byrow=T)
    > crab.tab4.3<-data.frame(width=temp3[,1], cases=temp3[,2],
        satell=temp3[,3])

    > mod.fit <- glm(formula = satell ~ width + offset(log(cases)),
        data = crab.tab4.3, family = poisson(link = log),
        na.action = na.exclude, control = list(epsilon = 0.0001,
        maxit = 50, trace = T))
    Deviance = 6.563769 Iterations - 1
    Deviance = 6.516798 Iterations - 2
    Deviance = 6.516796 Iterations - 3
    Deviance = 75.01137 Iterations - 1
    Deviance = 72.3803 Iterations - 2
    Deviance = 72.37717 Iterations - 3

    > summary(mod.fit)

    Call:
    glm(formula = satell ~ width + offset(log(cases)),
        family = poisson(link = log), data = crab.tab4.3,
        na.action = na.exclude, control = list(epsilon = 1e-04,
        maxit = 50, trace = T))

    Deviance Residuals:
        Min       1Q   Median       3Q      Max
    -1.5329  -0.7734  -0.3452   0.7120   1.0392

    Coefficients:
                Estimate Std. Error z value Pr(>|z|)
    (Intercept) -3.53547    0.57602  -6.138 8.37e-10 ***
    width        0.17272    0.02123   8.135 4.12e-16 ***
    ---
    Signif. codes:  0 `***' 0.001 `**' 0.01 `*' 0.05 `.' 0.1 ` ' 1

    (Dispersion parameter for poisson family taken to be 1)

        Null deviance: 72.3772  on 7  degrees of freedom
    Residual deviance:  6.5168  on 6  degrees of freedom
    AIC: 56.962

    Number of Fisher Scoring iterations: 3

    > #LRT p-value
    > 1-pchisq(mod.fit$deviance, mod.fit$df.residual)
    [1] 0.3678497
    > #Pearson statistic and p-value
    > pearson3<-residuals(mod.fit, type="pearson")
    > sum(pearson3^2)
    [1] 6.246998
    > 1-pchisq(sum(pearson3^2), mod.fit$df.residual)
    [1] 0.3960978

Fitting generalized linear models

Newton-Raphson method

To make the notation easier, let $(\alpha, \beta) = (\theta_1, \theta_2) = \theta$ be a vector of the model parameters.

Let $\dfrac{\partial \log[\ell(\theta)]}{\partial \theta_i}$ denote the first partial derivative of the log-likelihood function taken with respect to $\theta_i$. Let

$$L^{(1)}(\theta) = \left(\frac{\partial \log[\ell(\theta)]}{\partial \theta_1}, \frac{\partial \log[\ell(\theta)]}{\partial \theta_2}\right)'$$

be a 2×1 vector of these first partial derivatives. Let $\dfrac{\partial^2 \log[\ell(\theta)]}{\partial \theta_i \partial \theta_j}$ be the second partial derivative taken with respect to $\theta_i$ and $\theta_j$. Let $L^{(2)}(\theta)$ be a 2×2 matrix of these second partial derivatives and assume it is nonsingular.

Iterative estimates of $\theta$ can be found using the following equation:

$$\hat{\theta}^{(g)} = \hat{\theta}^{(g-1)} - \left[L^{(2)}(\hat{\theta}^{(g-1)})\right]^{-1} L^{(1)}(\hat{\theta}^{(g-1)}) \quad \text{for } g = 1, 2, \ldots \qquad (1)$$

To begin, initial estimates are found for $\theta$; call this $\hat{\theta}^{(0)}$. These initial estimates can be found using weighted least squares with a “regular” regression model. The initial estimates are put into (1) to obtain $\hat{\theta}^{(1)}$. The $\hat{\theta}^{(1)}$ is then put back into (1) to obtain $\hat{\theta}^{(2)}$. The $\hat{\theta}^{(2)}$ is then put back, and so on.

The iteration process stops when these $\hat{\theta}^{(g)}$’s converge to $\hat{\theta}$. This is often said to happen when $|\hat{\theta}^{(g)} - \hat{\theta}^{(g-1)}| < \varepsilon$ for some small number $\varepsilon > 0$. R uses

$$\frac{\left|G^2_{(g)} - G^2_{(g-1)}\right|}{\left|G^2_{(g)}\right| + 0.1} < \varepsilon$$

where $G^2$ denotes the LRT goodness-of-fit statistic (the deviance). These notes were written with R defaults of $\varepsilon$ = 0.0001 and 10 maximum iterations; current versions of R use epsilon = 1e-8 and maxit = 25 (see glm.control()).

Notice in the call to glm(), we have always used the control option:

    control = list(epsilon = 0.0001, maxit = 50, trace = T)

The maximum iterations are changed to 50. The trace option shows the $G^2$ values for each iteration. Note that the Poisson regression model with an offset prints an additional set of $G^2$ values comparing the saturated model to the model without any explanatory variables (I can not find any documentation on why this happens). The last of these values, 72.37717, matches the null deviance in the summary() output.

The Newton-Raphson process can sometimes be simplified by using “Fisher scoring”. In this case, $-L^{(2)}(\theta)$ is replaced by the “Fisher information matrix”, $-E[L^{(2)}(\theta)]$. Both methods will yield the same parameter estimates here. Equation (1) simplifies in the Fisher scoring problem to an equation that looks similar to the one used in weighted least squares. Because of this, the Fisher scoring algorithm is often called iteratively reweighted least squares (IRLS). This is the actual method used by R.
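Equation (1) can be sketched directly in R for a Poisson regression model with a log link. This is an illustration under stated assumptions, not the code inside glm(): the simulated data, the function `pois.dev()`, and the starting values (intercept set to log of the sample mean, slope set to 0) are hypothetical choices, and the starting values differ from the weighted least squares start described above. For this model the derivatives have the closed forms $L^{(1)}(\theta) = X'(y-\mu)$ and $L^{(2)}(\theta) = -X'\,\mathrm{diag}(\mu)\,X$ with $\mu = e^{X\theta}$, and because the log link is the canonical link, Newton-Raphson and Fisher scoring coincide.

```r
# Hypothetical simulated count data for illustration only
set.seed(1214)
x <- runif(n = 100, min = 20, max = 34)
y <- rpois(n = 100, lambda = exp(-3.3 + 0.16 * x))
X <- cbind(1, x)  # design matrix with an intercept column

# Poisson deviance (G^2), with the y = 0 terms handled separately
pois.dev <- function(y, mu) {
  2 * sum(ifelse(y > 0, y * log(y / mu), 0) - (y - mu))
}

# Assumed starting values: intercept-only fit, slope of 0
theta <- c(log(mean(y)), 0)
dev.old <- pois.dev(y, exp(drop(X %*% theta)))

for (g in 1:50) {
  mu <- exp(drop(X %*% theta))
  L1 <- t(X) %*% (y - mu)    # 2x1 vector of first partial derivatives
  L2 <- -t(X) %*% (mu * X)   # 2x2 matrix of second partial derivatives
  theta <- drop(theta - solve(L2) %*% L1)  # equation (1)
  dev.new <- pois.dev(y, exp(drop(X %*% theta)))
  # Same form of convergence criterion that R applies to the deviance
  converged <- abs(dev.new - dev.old) / (abs(dev.new) + 0.1) < 1e-8
  dev.old <- dev.new
  if (converged) break
}

fit <- glm(formula = y ~ x, family = poisson(link = log))
cbind(newton.raphson = theta, glm = coef(fit))
```

The two columns printed at the end should agree to several decimal places, and `dev.old` should be close to `deviance(fit)`.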
For more on these numerical methods, see Agresti (2002, p.143-149) and the on-line SAS help for PROC LOGISTIC at http://support.sas.com/91doc/getDoc/statug.hlp/logistic_index.htm. Both discuss how these methods are used in logistic regression.

Example: Placekicking (placekick_ch4.R, place.s.csv)

    > mod.fit <- glm(formula = good1 ~ dist, data = place.s,
        family = binomial(link = logit), na.action = na.exclude,
        control = list(epsilon = 0.0001, maxit = 50, trace = T))
    Deviance = 836.7715 Iterations - 1
    Deviance = 781.1072 Iterations - 2
    Deviance = 775.8357 Iterations - 3
    Deviance = 775.7451 Iterations - 4
    Deviance = 775.745 Iterations - 5

    > summary(mod.fit)

    Call:
    glm(formula = good1 ~ dist, family = binomial(link = logit),
        data = place.s, na.action = na.exclude,
        control = list(epsilon = 1e-04, maxit = 50, trace = T))

    Deviance Residuals:
        Min       1Q   Median       3Q      Max
    -2.7441   0.2425   0.2425   0.3801   1.6091

    Coefficients:
                 Estimate Std. Error z value Pr(>|z|)
    (Intercept)  5.812079   0.326158   17.82   <2e-16 ***
    dist        -0.115027   0.008337  -13.80   <2e-16 ***
    ---
    Signif. codes:  0 `***' 0.001 `**' 0.01 `*' 0.05 `.' 0.1 ` ' 1

    (Dispersion parameter for binomial family taken to be 1)

        Null deviance: 1013.43  on 1424  degrees of freedom
    Residual deviance:  775.75  on 1423  degrees of freedom
    AIC: 779.75

    Number of Fisher Scoring iterations: 5

There were 5 iterations before convergence was obtained. Notice the relative difference between successive $G^2$ values is

$$\frac{\left|G^2_{(5)} - G^2_{(4)}\right|}{\left|G^2_{(5)}\right| + 0.1} = \frac{|775.745 - 775.7467|}{775.745 + 0.1} = 0.00000219 < 0.0001$$

Covariance matrix for the estimators

The covariance matrix can be obtained through “standard” maximum likelihood techniques. Let $\hat{\theta}$ be a vector of maximum likelihood estimates (i.e., the value to which the $\hat{\theta}^{(g)}$ converge). Then $\hat{\theta}$ is approximately distributed as

$$N\left(\theta,\ \left\{-E\left[L^{(2)}(\theta)\right]\Big|_{\theta=\hat{\theta}}\right\}^{-1}\right)$$

for large n, where $-E[L^{(2)}(\theta)]\big|_{\theta=\hat{\theta}}$ is the “expected Fisher information matrix” evaluated at $\hat{\theta}$.

Note that hypothesis tests for $H_0: \theta_i = \theta_{i0}$ can be done using:

$$Z = \frac{\hat{\theta}_i - \theta_{i0}}{\sqrt{\widehat{Var}(\hat{\theta}_i)}}$$

where $\widehat{Var}(\hat{\theta}_i)$ can be found from the ith diagonal element of $\left\{-E[L^{(2)}(\theta)]\big|_{\theta=\hat{\theta}}\right\}^{-1}$ and Z has an approximate N(0,1) distribution for a large sample.
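The Wald statistic above can be computed from the estimated covariance matrix that R returns through vcov(). The following is a minimal sketch on hypothetical simulated data: the variable names `distance` and `good`, the sample size, and the parameter values used to simulate are all assumptions made for illustration and are not the placekicking data. The null values are taken to be 0 for both parameters.

```r
# Hypothetical simulated binary data for illustration only
set.seed(2010)
distance <- runif(n = 500, min = 18, max = 60)
good <- rbinom(n = 500, size = 1, prob = plogis(5.8 - 0.115 * distance))

fit <- glm(formula = good ~ distance, family = binomial(link = logit))

# Estimated covariance matrix of the parameter estimates
cov.mat <- vcov(fit)

# Wald statistics for Ho: theta_i = theta_i0, here with theta_i0 = 0
theta.hat <- coef(fit)
theta.0 <- c(0, 0)
Z <- (theta.hat - theta.0) / sqrt(diag(cov.mat))

# Two-sided p-values from the standard normal approximation
p.value <- 2 * pnorm(q = -abs(Z))

cbind(Z, p.value)
summary(fit)$coefficients[, c("z value", "Pr(>|z|)")]
```

The hand-computed Z values should match the "z value" column that summary() reports, since summary() tests each parameter against 0 in the same way.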