- Optimal Workshop


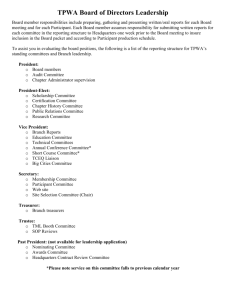

Tree testing: a quick way to evaluate your IA

Dave O’Brien, Optimal Usability (Wellington, New Zealand)

Abstract

A big part of information architecture is organisation – creating the structure of a site. For most sites – particularly large ones – this means creating a hierarchical “tree” of topics. But to date, the IA community hasn’t found an effective, simple technique (or tool) to test site structures. The most common method used – closed card sorting – is neither widespread nor particularly suited to this task.

Some years ago, Donna Spencer pioneered a simple paper-based technique to test trees of topics. Recent refinements to that method, some made possible by online experimentation, have now made “tree testing” more effective and agile. This article describes the current state of tree testing, a web tool for automating it, and what we’ve learned from running tree tests to improve IAs for several large sites.

“Getting to the right page within a website or intranet is the inevitable prerequisite to getting anything done.” – Jakob Nielsen

Introduction

Some time ago, we were working on an information-architecture project for a large government client here in Wellington. It was a classic IA situation – their current site’s structure (the hierarchical “tree” of topics) was a mess, they knew they had outgrown it, and they wanted to start fresh.

We jumped in and did some research, including card-sorting exercises with various user groups. We’ve always found card sorts (in person or online) to be a great way to generate ideas for a new IA. Brainstorming sessions followed, and we worked with the client to come up with several possible new site trees.

But were they better than the old one? And which new one was best? After a certain amount of debate, it became clear that debate wasn’t the way to decide. We needed some real data – data from users. And, like all projects, we needed it quickly.

What kind of data?
At this early stage, we weren’t concerned with visual design or navigation methods; we just wanted to test organisation – specifically, findability and labeling. We wanted to know:

- Could users successfully find particular items in the tree?
- Could they find those items directly, without having to backtrack?
- Could they choose between topics quickly, without having to think too much (the Krug Test¹)?
- Overall, which parts of the tree worked well, and which fell down?

Not only did we want to test each proposed tree, we wanted to test them against each other, so we could pick the best ideas from each. And finally, we needed to test the proposed trees against the existing tree. After all, we hadn’t just contracted to deliver a different IA – we had promised a better IA, and we needed a quantifiable way to prove it.

The problem

This, then, was our IA challenge:

- getting objective data on the relative effectiveness of several tree structures, and
- getting it done quickly, without having to build the actual site first.

As mentioned earlier, we had already used open card sorting to generate ideas for the new site structure. We had done in-person sorts (to get some of the “why” behind our users’ mental models) as well as online sorts (to get a larger sample from a wider range of users). But while open card sorting is a good “detective” technique, it doesn’t yield the final site structure – it just provides clues and ideas. And it certainly doesn’t help in evaluating structures.

For that, information architects have traditionally turned to closed card sorting, where the user is given predefined category “buckets” and asked to sort a pile of content cards into those buckets. The thinking goes that if there is general agreement about which cards go in which buckets, then the buckets (the categories) should perform well in the delivered IA. The problem here is that, while closed card sorting mimics how users may file a particular item of content (e.g.
where they might store a new document in a document-management system), it doesn’t necessarily model how users find information in a site. They don’t start with a document – they start with a task, just as they do in a usability test.

What we wanted was a technique that more closely simulates how users browse sites when looking for something specific. Yes, closed card sorting was better than nothing, but it just didn’t feel like the right approach.

Other information architects have grappled with this same problem. We know some who wait until they are far enough along in the wireframing process that they can include some IA testing in the first rounds of usability testing. That piggybacking saves effort, but it also means that we don’t get to evaluate the IA until later in the design process, which means more risk.

We know others who have thrown together quick-and-dirty HTML with a proposed site structure and placeholder content. This lets them run early usability tests that focus on how easily participants can find various sublevels of the site. While that gets results sooner, it also means creating a throw-away set of pages and running an extra round of user testing.

¹ Don’t Make Me Think, Steve Krug

With these needs in mind, we looked for a new technique – one that could:

- Test topic trees for effective organisation
- Provide a way to compare alternative trees
- Be set up and run with minimal time and effort
- Give clear results that could be acted on quickly

The technique – tree testing

Luckily, the technique we were looking for already existed. Even luckier was that we got to hear about it firsthand from its inventor, Donna Spencer, the well-regarded information architect out of Australia², and author of the recently released book Card Sorting.

During an IA course that Donna was teaching in Wellington, she was asked how she tested the site structures she created for clients. She mentioned closed card sorting, but like us, she wasn’t satisfied with it.
She then went on to describe a technique she called card-based classification, which she had used on some of her IA projects. Basically, it involved modeling the site structure on index cards, then giving participants a “find-it” task and asking them to navigate through the index cards until they found what they were looking for.

To test a shopping site, for example, she might give them a task like “Your 9-year-old son asks for a new belt with a cowboy buckle”. She would then show them an index card with the top-level categories of the site. The participant would choose a topic from that card, leading to another index card with the subtopics under that topic.

² An island located just northwest of New Zealand.

The participant would continue choosing topics, moving down the tree, until they found their answer. If they didn’t find a topic that satisfied them, they could backtrack (go back up one or more levels). If they still couldn’t find what they were looking for, they could give up and move on to the next task.

During the task, the moderator would record:

- the path taken through the tree (using the reference numbers on the cards)
- whether the participant found the correct topic
- where the participant hesitated or backtracked

By choosing a small number of representative tasks to try on participants, Donna found that she could quickly determine which parts of the tree performed well and which were letting the side down. And she could do this without building the site itself – all that was needed was a textual structure, some tasks, and a bunch of index cards.

Donna was careful to point out that this technique only tests the top-down organisation of a site and the labeling of its topics. It does not try to include other factors that affect findability, such as:

- the visual design and layout of the site
- other navigation routes (e.g.
cross-links)
- search

While it’s true that this technique does not measure everything that determines a site’s ease of browsing, that can also be a strength. By isolating the site structure – by removing other variables at this early stage of design – we can more clearly see how the tree itself performs, and revise until we have a solid structure. We can then move on in the design process with confidence. It’s like unit-testing a site’s organisation and labeling. Or as my colleague Sam Ng says, “Think of it as analytics for a website you haven’t built yet.”

Treejack – a tree-testing tool

As we started experimenting with “card-based classification” on paper, it became clear that, while the technique was simple, it was tedious to create the cards on paper, recruit participants, record the results manually, and enter the data into a spreadsheet for analysis. The steps were easy enough, but they were time eaters.

It didn’t take too much to imagine all this turned into a web app – both for the information architect running the study and the participant browsing the tree. Card sorting had gone online with good results, so why not card-based classification?

Ah yes, that was the other thing that needed work – the name. During the paper exercises, it got called “tree testing”, and because that seemed to stick with participants and clients, it stuck with us. And it sure was a lot easier to type.

To create a good web app, we knew we had to be absolutely clear about what it was supposed to do. For online tree testing, we aimed for something that was:

- Quick for an information architect to learn and get going on
- Simple for participants to complete
- Able to handle a large sample of users
- Able to present clear results

We created a rudimentary application as a proof of concept, running a few client pilots to see how well tree testing worked online.
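Much of the paper tedium was the moderator’s manual recording: the path taken, whether the right topic was found, and where the participant backtracked. As a rough illustration of what automating that step involves, here is a minimal Python sketch of a per-task record. The class name `TaskAttempt`, the tuple-of-labels representation of a topic, and the rule that any click other than a step down to a child counts as a backtrack are all assumptions for illustration, not a description of how Treejack works; hesitation timing is left out.

```python
from dataclasses import dataclass, field
from typing import List, Optional, Set, Tuple

# Hypothetical representation: a topic is the tuple of labels from the top level down,
# e.g. ("Clothing", "Kids", "Belts").
Topic = Tuple[str, ...]


@dataclass
class TaskAttempt:
    """Roughly what the paper moderator noted for one participant on one task."""
    task_id: str
    path: List[Topic] = field(default_factory=list)  # every topic clicked, in order
    backtracks: int = 0                               # clicks that went back up the tree
    answer: Optional[Topic] = None                    # set by "I'd find it here"
    skipped: bool = False                             # set by "Skip this task"
    current: Topic = ()                               # () means the top-level list

    def click(self, topic: Topic) -> None:
        # Assumption: clicking a direct child of the current topic is a normal step down;
        # any other click (e.g. on a higher-level topic) is recorded as a backtrack.
        if topic[:-1] != self.current:
            self.backtracks += 1
        self.path.append(topic)
        self.current = topic

    def choose_here(self) -> None:
        self.answer = self.current

    def skip(self) -> None:
        self.skipped = True

    def succeeded(self, correct: Set[Topic]) -> bool:
        return not self.skipped and self.answer in correct


if __name__ == "__main__":
    # The cowboy-belt task, with made-up category labels.
    attempt = TaskAttempt("belt")
    for topic in [("Clothing",), ("Clothing", "Women"), ("Clothing",),
                  ("Clothing", "Kids"), ("Clothing", "Kids", "Belts")]:
        attempt.click(topic)
    attempt.choose_here()
    print(attempt.backtracks, attempt.succeeded({("Clothing", "Kids", "Belts")}))  # 1 True
```

Recording the full path, rather than just the final answer, is what later makes first-click and backtracking analysis possible.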
After working with the results in Excel, it became very clear which parts of the trees were failing users, and how they were failing. The technique was working. However, it also became obvious that a wall of spreadsheet data did not qualify as “clear results”. So when we sat down to design the next version of the tool – the version that information architects could use to run their own tree tests – reworking the results was our number-one priority.

Optimal Workshop has now released that beastie into the wild – it’s called Treejack, and it’s available for anyone to try tree testing on their own site structures.

Participating in a tree test

So, what does online tree testing look like? As an example, let’s look at what a participant sees when they do a test in Treejack.

Suppose we’ve emailed an invitation to a list of possible participants. (We recommend at least 30 to get reasonable results – more is good, especially if you have different types of users.) Clicking a link in that email takes them to the Treejack site, where they’re welcomed and instructed in what to do.

Once they start the test, they’ll see a task to perform. The tree is presented as a simple list of top-level topics. They click down the tree one topic at a time, and each click shows them the next level of the tree.

Once they click to the end of a branch, they have three choices:

- Choose the current topic as their answer (“I’d find it here”).
- Go back up the tree and try a different path (by clicking a higher-level topic).
- Give up on this task and move to the next one (“Skip this task”).

Once they’ve finished all the tasks, they’re done – that’s it. For a typical test of 10 tasks on a medium-sized tree, most participants take 5–10 minutes. As a bonus, we’ve found that participants usually find tree tests less taxing than card sorts, so we get lower drop-out rates.

Setting up a tree test

Let’s peek behind the scenes to see how the tree test was set up.
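Setting up a test comes down to three inputs, covered in the subsections that follow: the tree, the tasks, and each task’s correct answers. The Python sketch below is one hypothetical way to model them. `parse_outline` turns a tab-indented outline (one tab per level; cells pasted from a spreadsheet typically arrive tab-separated too) into full topic paths; `Task` allows several correct destinations; `check_setup` warns about correct answers missing from the tree and about going past the suggested 10 tasks; and `task_order` shuffles tasks per participant. All names are illustrative assumptions, not Treejack’s import format or API.

```python
import random
from dataclasses import dataclass
from typing import List, Sequence, Set, Tuple

Topic = Tuple[str, ...]  # labels from the top level down (hypothetical representation)


def parse_outline(text: str) -> List[Topic]:
    """Convert a tab-indented outline into full topic paths, one tab per level."""
    topics: List[Topic] = []
    branch: List[str] = []  # labels of the branch we are currently under
    for line in text.splitlines():
        label = line.strip()
        if not label:
            continue  # ignore blank lines
        depth = len(line) - len(line.lstrip("\t"))  # only tabs count as indentation
        if depth > len(branch):
            raise ValueError(f"indentation skips a level at {label!r}")
        del branch[depth:]  # climb back up to this line's parent
        branch.append(label)
        topics.append(tuple(branch))
    return topics


@dataclass
class Task:
    task_id: str
    text: str
    correct: Set[Topic]  # more than one destination may count as correct


def check_setup(tasks: Sequence[Task], topics: Sequence[Topic], max_tasks: int = 10) -> List[str]:
    """Return human-readable warnings rather than failing hard."""
    warnings: List[str] = []
    if len(tasks) > max_tasks:
        warnings.append(f"{len(tasks)} tasks; more than {max_tasks} risks a learning effect")
    known = set(topics)
    for task in tasks:
        if not task.correct:
            warnings.append(f"{task.task_id}: no correct answer marked")
        for missing in sorted(task.correct - known):
            warnings.append(f"{task.task_id}: {' > '.join(missing)} is not in the tree")
    return warnings


def task_order(tasks: Sequence[Task], rng: random.Random) -> List[Task]:
    """Shuffle a copy of the task list so each participant sees a different order."""
    order = list(tasks)
    rng.shuffle(order)
    return order


if __name__ == "__main__":
    # The "content structure" version of the Contact Us example.
    tree = parse_outline("Contact Us\n\tNorth America\n\tSouth America\n\tEurope")
    tasks = [Task("t1", "Find a phone number for the Paris office", {("Contact Us", "Europe")})]
    print(check_setup(tasks, tree))  # []
```

Checking that every correct answer actually exists in the tree catches a common slip when the tree is revised between iterations but the answers are not.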
Entering the tree

The heart of a tree test is…um…the tree. You might already have this modeled as a Visio diagram, an Excel spreadsheet, a Word outline, etc. We couldn’t find a standard for representing tree structures, so we stuck with simple. You can get your tree into Treejack by either:

- typing it in and indenting the levels, or
- pasting it in from Excel, Word, a text editor (or what have you) and having it convert the tabs or columns to the corresponding levels.

The tree can be any size, depending on how deep we want to test. For very large trees, we often cut the tree down to size by:

- only using the upper levels (say 3–4 levels deep), or
- testing sections of a tree separately (which assumes that users can navigate the top level easily – a big assumption).

One lesson that we learned early was to build the tree based on the content of the site, not simply its page structure. For example, suppose a North American company had a Contact Us page that listed general contact information (for North America), with subpages for other regions like South America and Europe. The page structure would be this:

- Contact Us
  - South America
  - Europe

…but the content structure is really this:

- Contact Us
  - North America
  - South America
  - Europe

Because a tree test shows no content, implicit topics (like “North America” above) have to be made explicit, so they can be selected by tree-test participants.

Also, because we want to measure the effectiveness of the site’s topic structure, we typically omit “helper” topics such as Search, Site Map, Help, and yes, Contact Us. If we leave them in, it makes it too easy for users to choose them as alternatives to browsing the tree, and we don’t learn as much.

Entering tasks

We test the tree by getting participants to look for specific things – to perform “find it” tasks. Just as in a usability test, a good task is clear, specific, and representative of the tasks that actual users will do on the real site.

How many tasks?
You might think that more is better (short of exhausting the participant), but we’ve found a sizable learning effect in tree tests. After a participant has browsed through the tree several times looking for various items, they start to remember where things are, and that can skew later tasks. For that reason, we recommend a maximum of 10 tasks per test – a few fewer if the tree is small, perhaps a few more if the tree is very large.

Another way we reduce the learning effect is by providing an option to randomise the order of tasks. If task 10 is always the last task, participants would presumably do better at it because they’ve already browsed the tree 9 times before. Unless you need to present tasks in a certain order (which is rare in tree testing), always randomise.

Entering correct answers

In a tree test, the most obvious thing to measure is the success rate – for a given task, how many participants actually find the right topic in the tree?

In most medium-to-large site structures, there can often be more than one “correct” destination – more than one page that answers the user’s question. Consider also that, in well-designed sites, the IA has anticipated some of the alternate paths that users will follow. The IA knows, for example, that instead of browsing “properly” to page A, some users will end up at a certain page B. Assuming that page B clearly redirects those users to page A, we could consider both A and B to be “correct” destinations – they both lead to user success. Accordingly, for each task, Treejack lets us mark several pages as correct.

Entering the tree, the tasks, and the answers – that’s it. We send out our test URL (as an email invitation or a link on a web page), and the users put our tree through its paces.

Skimming the high-level results

Just as we wanted the test to be easy to set up and to participate in, we also wanted it to give us some clear high-level results.
The details can wait for later; up front we want to find out “How’d we do?” As mentioned earlier, we wanted to know:

- Could users successfully find particular items in the tree?
- Could they choose between topics quickly?
- Could they find those items with a minimum of backtracking?

We designed the high-level results to show just that:

- Success – the percentage of participants who found the correct answer. This is the single most important metric, and is weighted highest in the overall score.
- Speed – how fast participants clicked through the tree. In general, confident choices are made quickly (i.e. a high Speed score), while hesitation (taking extra time) suggests that the topics are either not clear enough or not distinguishable enough.
- Directness – how directly participants found an answer. Ideally, they reach their destination without wandering or backtracking.

Each of these is measured per task, normalised to a 0–100 scale, so we can see how each task fared. There’s also an overall score averaged across all tasks.

The overall score, of course, is what people look at first. If we see an 8/10 success rate for the entire test, we’ve earned ourselves a beer. Often, though, we’ll find ourselves looking at a 5 or 6, and realise that there’s more work to be done.

The good news is that our miserable overall score of 5/10 is often some 8s brought down by a few 2s and 3s. This is where tree testing really shines – separating the good parts of the tree from the bad, so we can spend our time and effort fixing the latter.

Diving into the details

Speaking of the latter, let’s find out what’s behind those 2s and 3s. From the summary gauges, we can generally see which measure (success, speed, or directness) is dragging the score down. To delve deeper, we can download the detailed results as an annotated spreadsheet.
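The per-task measures above, and the destination and first-click tallies described below, can all be derived from raw per-participant records. The Python sketch below is hypothetical: Treejack’s actual scoring algorithm, its Speed calculation, and the weighting of its overall score are not described here, so Speed and the weighted score are left out. It assumes that Directness is the share of participants who never backtracked, reports each measure on a 0–100 scale, averages a measure across tasks without weighting, and flags skip rates above the 10% mentioned in the text. The `Result` record and function names are illustrative only.

```python
from collections import Counter
from dataclasses import dataclass
from statistics import mean
from typing import Dict, List, Optional, Set, Tuple

Topic = Tuple[str, ...]  # labels from the top level down (hypothetical representation)


@dataclass
class Result:
    """One participant's outcome on one task (hypothetical record format)."""
    task_id: str
    first_click: Optional[str]  # top-level section clicked first, if any
    answer: Optional[Topic]     # None means the task was skipped
    backtracked: bool


def summarise(results: List[Result], correct: Dict[str, Set[Topic]]) -> Dict[str, dict]:
    by_task: Dict[str, List[Result]] = {}
    for r in results:
        by_task.setdefault(r.task_id, []).append(r)

    summary: Dict[str, dict] = {}
    for task_id, rs in by_task.items():
        n = len(rs)
        answered = [r for r in rs if r.answer is not None]
        wrong = [r.answer for r in answered if r.answer not in correct[task_id]]
        summary[task_id] = {
            "success": 100 * sum(r.answer in correct[task_id] for r in answered) / n,
            # Assumption: "direct" means the participant never backtracked.
            "directness": 100 * sum(not r.backtracked for r in rs) / n,
            "skip_rate": 100 * (n - len(answered)) / n,
            # High totals on one wrong topic suggest a problem with that topic...
            "wrong_destinations": Counter(wrong),
            # ...while wrong answers clustered under one parent suggest a problem one level up.
            "wrong_parents": Counter(a[:-1] for a in wrong),
            "first_clicks": Counter(r.first_click for r in rs),
        }
    return summary


def overall(summary: Dict[str, dict], measure: str) -> float:
    """Unweighted average of one measure across all tasks."""
    return mean(s[measure] for s in summary.values())


def hard_tasks(summary: Dict[str, dict], skip_threshold: float = 10.0) -> List[str]:
    """Tasks whose skip rate is above the threshold (10% in the text)."""
    return [t for t, s in summary.items() if s["skip_rate"] > skip_threshold]
```

Calling `most_common()` on a task’s `wrong_destinations` or `wrong_parents` counter lists the “high totals” and “clusters of totals” in descending order, which is usually the quickest way into a low-scoring task.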
Destinations

The “Destination” page shows, for each task, how many participants chose a given topic as the answer. For tasks with low success rates, we look for wrong answers following two patterns:

- High totals – many participants choosing the same wrong answer. This suggests a problem with that particular topic (perhaps in relation to its siblings).
- Clusters of totals – many participants choosing wrong answers in the same subsection of the site. This suggests a problem with the parent level.

First clicks

The “First click” page shows where participants went on their first click for a task – which top-level section they chose. The first click is the one most highly correlated with eventual success – if we can get users to the right section of a site, they’re much more likely to find the right topic.

This page also shows which top-level sections were visited at some point during the task. Perhaps the participants completely ignored the correct section, or maybe they visited it, but backed out and went somewhere else instead.

Paths

The “Paths” page shows the click-by-click paths that participants took through the tree as they tried each task. Browsing these paths is useful when:

- We’re trying to figure out how the heck a participant got to some far-flung corner of the tree.
- The task shows a lot of backtracking in general (i.e. a low Directness score), and we’re looking for any patterns in where participants backed up. That can indicate that the parent topic (the topic just clicked) was misleading.

We also look for other signs of trouble, such as:

- High skip rates – Participants can elect to skip a task at any time. Rates above 10% suggest that they found the task particularly difficult.
- Evil attractors – These topics lure clicks even though they have little to do with the task at hand. Often this is caused by a too-generic label.
We saw this happen to a consumer-review site that had a “Personal” category; they meant personal-care products like electric shavers, but participants also went there for “personal” items like cell phones, watches, etc.

In general, we’ve found that tree-testing results are much easier to analyse than card-sorting results. The high-level results pinpoint where the problems are, and the detailed results usually make the reason plain. In cases where a result has us scratching our heads, we do a few in-person tree tests, prompting the participant to think aloud and asking them about the reasons behind their choices.

Lessons learned

We’ve run several tree tests now for large clients, and we’re very pleased with the technique. Along the way, we’ve learned a few things too:

- Test a few different alternatives. Because tree tests are quick to do, we can take several proposed structures and test them against each other. This is a quick way of resolving opinion-based debates over which is better. For the government web project we discussed earlier, one proposed structure had much lower success rates than the others, so we were able to discard it without regrets or doubts.
- Test new against old. Remember how we promised that government agency that we would deliver a better IA, not just a different one? Tree testing proved to be a great way to demonstrate this. In our baseline test, the original structure notched a 31% success rate. Using the same tasks, the new structure scored 67% – a solid quantitative improvement.
- Do iterations. Everyone talks about developing designs iteratively, but schedules and budgets often quash that ideal. Tree testing, on the other hand, has proved quick enough that we’ve been able to do two or three revision cycles for a given tree, using each set of results to progressively tweak and improve it.
- Identify critical areas to test, and tailor your tasks to exercise them. Normally we try to cover all parts of the tree with our tasks.
If, however, there are certain sections that are especially critical, it’s a good idea to run more tasks that involve those sections. That can reveal subtleties that you may have missed with a “vanilla” test. For example, in another study we did, the client was considering renaming an important top-level section, but was worried that the new term (while more accurate) was less clear. Tree testing showed both terms to be equally effective, so the client was free to choose based on other criteria.
- Crack the toughest nuts with “live” testing. Online tree tests suffer from the same basic limitation as most other online studies – they give us loads of useful data, but not always the “why” behind it. Moderated testing (either in person or by remote session) can fill in this gap when it occurs.

Summary

To sum up, tree testing has given us the IA tool we were after – a quick, clear, quantitative method to test site structures. Like user testing, it shows us (and our clients) where we need to focus our efforts, and injects some user-based data into the IA design process. The simplicity of the technique means that we can do variations and iterations until we get a really good end result.

Tree testing also makes our clients happy. They quickly “get” the concept, the high-level results are easy for them to understand, and they love having data to show their management and to measure their progress against.

On the “but” side, tree testing is still an embryonic technique. Our metrics and scoring algorithms are first-generation, and we have a long list of improvements we’re keen to make to Treejack, our online tool. Also, simplicity is a two-sided coin – what we gain by having a clear single-purpose tool, we give up in comprehensiveness. Tree testing is a narrow technique – it only tells us how a structure performs in vacuo.
A good tree still needs to be married up to an effective navigation system, content design, and visual treatment before we can say we’ve built a good information architecture.

Tree testing has proved effective for us. We think it can be useful for anyone designing a large content structure. If you’re keen, try it as described here and in Donna’s original article, or put your own spin on it for your own needs. Either way, our hope is that tree testing, as a quick way to evaluate site structures, earns a place in the information architect’s toolkit.