Measuring the Risk Factor

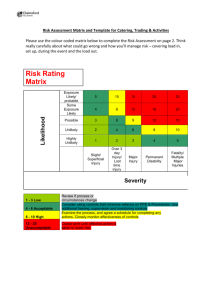

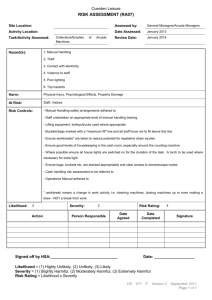

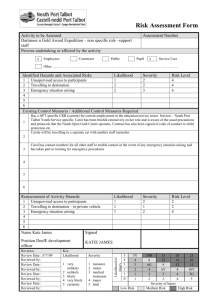

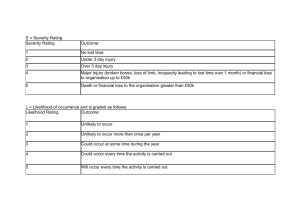

By Yamini Munipalli
Jul 25, 2005

Summary: The concept of risk is inherent in any development effort, so the best way to deal with risk is to contain it. One way to contain risk is through risk management, which involves three aspects: identifying risks, analyzing exposure to the risks in a development effort, and executing the risk management plan. In this article, Yamini Munipalli details one way of assigning and managing risk in a software development plan. This version of risk analysis, drawn from many schools of thought, remains flexible enough to use within any company for any project.

Risk management is tricky because the process involves subjective thinking on the part of individuals in the organization. Identification of risks is generally based on an individual's experience and knowledge of the system. Since experience and knowledge are unique to each individual, it is important to employ a wide range of individuals on the risk management team.

Risk management also involves an assessment of the risk tolerance level in the organization. Companies that are more tolerant of risk will be less likely to develop a risk management approach. However, in some industries, like the medical industry, there is little tolerance for risk. While risk management can be applied to any type of industry, Yamini discusses a software risk management technique: risk analysis.

What is Risk Analysis?

Risk analysis is part of an organization's overall risk management strategy. One definition of risk analysis is the "process of exploring risks on the list, determining, and documenting their relative importance." It is a method used to assess the probability of a bad event, and it can be done by businesses as part of disaster recovery planning as well as part of the software development lifecycle. The analysis usually involves assessing the expected impact of a bad event such as a hurricane or tornado.
Furthermore, the likelihood of that bad event's occurrence is also assessed.

Proposed methods of risk analysis use different indicators. Since one method might not fit your project perfectly, I suggest pooling a range of expert insight to see whether one method, or a combination of several, works well for you:

Payson Hall recommends a risk analysis matrix that includes expected impact, probability, and surprise (the difficulty of timely detection of the risk).

Rex Black proposes using the indicators of severity, priority, and likelihood of failure to complete a risk analysis.

Johanna Rothman suggests using severity and probability of occurrence as indicators in doing a risk analysis.

In the article "Software Risk Management Makes Good Business Sense," Steve Goodwin advises using severity as the only indicator of risk.

Dr. Ingrid B. Ottevanger multiplies the "chance of failure X damage." (This is essentially the likelihood of failure multiplied by the expected impact.)

James Bach also considers likelihood of failure and impact of failure as good indicators of the magnitude of risk, while Geoff Horne proposes using the indicators of expected impact and likelihood of failure.

The method adopted here modifies Rick Craig and Stefan Jaskiel's work in Systematic Software Testing, presenting a way to complete a software risk analysis using indicators in addition to "expected impact" and "likelihood of failure." Before we do a risk analysis, however, we must understand what is meant by the term "risk."

Definitions of Risk

Risk is the probability that a loss will occur, "a weighted pattern of possible outcomes and their associated consequences." It indicates "the probability that a software project will experience undesirable events, such as schedule delays, cost overruns, or outright cancellation. Risk is proportional to size and inversely proportional to skill and technology levels."
Thus, the larger the project, the greater the risk.

These definitions indicate that risk involves possible outcomes and the consequences of those outcomes. Potential outcomes include both negative and positive outcomes. Negative outcomes such as undesirable events can occur, and when they occur, there will be a loss to someone. The loss can occur in terms of money, lives, or damage to property.

Risk reduction strategies differ based on the level of maturity of the organization. The more mature the organization, the less likely it will be to take risks. Thus, the more mature the organization, the more likely it is that a software team in that organization will do a risk analysis of its software. This leads us to the justification for doing a risk analysis.

Why Perform a Risk Analysis?

In the medical industry, risk analysis is done for the following reasons:

1. Risk analysis is required by law.
2. Identification of device design problems prior to distribution eliminates costs associated with recalls.
3. Risk analysis offers a measure of protection from product liability damage awards.
4. Regulatory submissions checklists (PMA and 510(k)) used by the FDA now call for inclusion of risk analysis.
5. It is the right thing to do.

Some of these reasons could also apply to software risk analysis and disaster recovery planning, in that risk analysis offers protection from product liability damages. Also, it is cheaper to fix a software defect found in the development stage than one found by a customer.

The risk analysis process "provides the foundation for the entire recovery planning effort." Similarly, in software development, risk analysis provides the foundation for the entire test planning effort. It should be included as an integral part of the test plan as a method to guide the test team in determining the order of testing.
The argument here is that testing reduces the risks associated with software development, and the software risk analysis allows us to prioritize those features and requirements with the highest risk. Testing high-risk items first reduces the overall risk of the software release significantly. Risk analysis also allows the test team to set expectations about what can be tested in the given amount of time.

What are the risks associated with software development? Capers Jones mentions sixty software risks in his book, Assessment and Control of Software Risks. Among these are cost overruns, canceled projects, high maintenance costs, false productivity claims, low quality, missed schedules, and low user satisfaction. Low user satisfaction stems from inadequate requirements, in the sense that software may have been built without adequately considering the needs of the user community. This leads us to the scope of risk analysis.

Scope of the Risk Analysis

The method I present is limited to assessments of software requirement specifications and features. It does not refer to the more general software risks mentioned above.

Who performs the software risk analysis? Typically, everyone involved with the software development lifecycle. The users, business analysts, developers, and software testers are all involved in conducting risk analysis. However, it is not always possible to have everyone's input, especially that of the users. In that case, the testers should conduct the software risk analysis as early as possible in the software development lifecycle. Typically, risk analysis is done in the requirements stage of the software development lifecycle.

Two indicators of risk have been proposed: the expected impact of failure and the likelihood of failure. Let's talk about these in turn.

Expected Impact Indicator

The software team should ask the question, "What would be the impact on the user if this feature or attribute failed to operate correctly?"
Impact is usually expressed in terms of money or the cost of failure. For each requirement or feature, it is possible to assign a value in terms of the expected impact of the failure of that requirement or feature. Assign a value of high, medium, or low for each requirement as a measure of the expected impact. You should focus only on those features and attributes that directly impact the user, not necessarily on the testing effort. If you run into the situation where every feature or requirement is ranked the same, then limit the number of values each user can assign.

Let's look at the expected impact and likelihood of failure for a hypothetical Login system:

Table 1 – Expected Impact and Likelihood of Failure for the Login Functionality

The requirement that the "UserId shall be 4 characters" has a low expected impact of failure because there is not much of an impact on a user if the userID is more or less than 4 characters. The same reasoning can be applied to the requirement that the "Password shall be 5 characters." However, the requirement that the "System shall validate each userID and password for uniqueness" has a high impact of failure because there could be multiple users with the same userID and password. If the developer does not code for this, security is at risk.

Likelihood of Failure Indicator

As part of the risk analysis process, the software team should assign an indicator for the relative likelihood of failure of each requirement or feature. Assign H for a relatively high likelihood of failure, M for medium, and L for low. When the software team assigns a value for each feature, they should be answering the question, "Based on our current knowledge of the system, what is the likelihood that this feature or attribute will fail or fail to operate correctly?"

At this point, Craig and I differ in that he argues that complexity is a systemic characteristic and should be included as part of the likelihood indicator.
My argument is that complexity should be an indicator on its own. Furthermore, severity should also be considered. Four indicators provide more granularity and detail than just the two typical indicators. In Table 2, I have shown that if the prioritization is the same between two different requirements, then it is not possible to discern which requirement is more risky. If we have three or more indicators, we are in a better position to evaluate risk.

Complexity Indicator

Something that is complex is intricate and complicated. The argument here is that the greater the complexity of the feature, the greater the risk. More interfaces means that there will be more risk involved with each interface as well as the overall system.

According to Craig and Jaskiel, Tom McCabe devised a metric known as cyclomatic complexity that is based on the number of decisions in a program. His studies have shown a correlation between a program's cyclomatic complexity and its error frequency. "A low cyclomatic complexity contributes to a program's understandability and indicates it is amenable to modification at lower risk than a more complex program." He, along with others, has shown that those parts of the system with high cyclomatic complexity are more prone to defects than those with a lower value.

Cyclomatic complexity can be used in the test planning phase in that "Mathematical analysis has shown that cyclomatic complexity gives the exact number of tests needed to test every decision point in a program for each outcome. Thus, the analysis can be used for test planning. An excessively complex module will require a prohibitive number of test steps; that number can be reduced to a practical size by breaking the module into smaller, less-complex sub-modules."

There are other measures of complexity that can be used for risk analysis. These are the Halstead Complexity Measures, Henry and Kafura metrics, and Bowles metrics.
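
To make the "number of decisions" idea concrete, here is a minimal Python sketch that approximates cyclomatic complexity as the count of decision points plus one. It is a simplification for illustration, not McCabe's full definition or any particular tool's algorithm: it ignores comprehensions and `match` statements, and the function names, the sample `validate_login` code, and the H/M/L cut-off values are all assumptions introduced here.

```python
import ast

# Node types counted as one decision point each (a simplifying assumption).
_DECISION_NODES = (ast.If, ast.For, ast.While, ast.IfExp, ast.ExceptHandler)


def cyclomatic_complexity(source: str) -> int:
    """Approximate the metric as (number of decision points) + 1."""
    decisions = 0
    for node in ast.walk(ast.parse(source)):
        if isinstance(node, _DECISION_NODES):
            decisions += 1
        elif isinstance(node, ast.BoolOp):
            # "a and b and c" contributes two additional branches.
            decisions += len(node.values) - 1
    return decisions + 1


def complexity_rating(score: int, medium_at: int = 6, high_at: int = 11) -> str:
    """Map a score to H, M, or L. The cut-offs are illustrative assumptions."""
    if score >= high_at:
        return "H"
    if score >= medium_at:
        return "M"
    return "L"


# Hypothetical code for the Login example; not taken from the article.
EXAMPLE = '''
def validate_login(user_id, password, known_users):
    if len(user_id) != 4 or len(password) != 5:
        return False
    for existing_id, existing_password in known_users:
        if existing_id == user_id and existing_password == password:
            return False
    return True
'''

score = cyclomatic_complexity(EXAMPLE)
print(score, complexity_rating(score))
```

A team would need to agree on its own thresholds before turning such a score into a complexity rating; the ones above are placeholders.
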
Assign a value of H for high, M for medium, or L for low for each requirement based on its complexity.

Severity Indicator

My approach also differs from Craig and Jaskiel's in another way: I consider the severity of failure as a separate indicator. Severity is defined as the "harshness" of the failure. Harshness of failure indicates how much damage there will be to the user community and also implies that there will be some suffering on the part of the user if the failure is realized. This suffering could be in the form of money, emotional stress, poor health, death, etc.

Consider the following case of a software failure that resulted in deaths. In 1986, two cancer patients at the East Texas Cancer Center in Tyler received fatal radiation overdoses from the Therac-25, a computer-controlled radiation-therapy machine. There were several errors, among them the failure of the programmer to detect a race condition (i.e., miscoordination between concurrent tasks).

Or consider the case in which a New Jersey inmate escaped from computer-monitored house arrest in the spring of 1992. He removed the rivets holding his electronic anklet together and went off to commit a murder. A computer detected the tampering. However, when it called a second computer to report the incident, the first computer received a busy signal and never called back.

These examples illustrate that software failures can both be fatal and cause suffering to those whose lives are affected by the deaths of loved ones. Thus, severity is different from expected impact in that expected impact does not consider the suffering imposed on the user but merely considers the effect of the failure. Therefore, I argue that the greater the severity, the higher the risk.

Assign a value of H for high, M for medium, or L for low for each requirement based on its severity.
Table 2 – Expected Impact, Likelihood of Failure, Complexity, and Severity for the Login Functionality

The Method of Risk Analysis

At this point, the software team should assign a number to each high, medium, or low value for the likelihood, expected impact, complexity, and severity indicators. It is possible to use a range of 1 to 3, with 3 being the highest, or 1 to 5, with 5 being the highest. The 1 to 5 range provides more detail. To keep the technique simple, let's use a range of 1 to 3, with 3 for high, 2 for medium, and 1 for low.

As Craig and Jaskiel state, "Once a scale has been selected, you must use that same scale throughout the entire risk analysis." Furthermore, they state that "If your system is safety-critical, it's important that those features that can cause death or loss of limb are always assigned a high priority for test even if the overall risk was low due to an exceptionally low likelihood of failure."

Next, the values assigned to likelihood of failure, expected impact, complexity, and severity should be added together. If a value of 3 for high, 2 for medium, and 1 for low is used, then 9 risk priority levels are possible (i.e., 12, 11, 10, 9, 8, 7, 6, 5, 4).

Table 3 – Risk Priority Cube

Notice that the requirement "System shall validate each userID and password for uniqueness" has a relatively high likelihood of failure, high degree of complexity, high expected impact of failure, and high severity of failure, which gives it a risk priority of twelve (3 + 3 + 3 + 3). The requirement that each password be five characters long has a risk priority of seven.

The next step is to reorganize the list of requirements in order of risk priority. This sorted list provides clear insight into which requirements to test first. As Craig and Jaskiel point out, however, this technique "doesn't take into account the testing dependencies."
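
The scoring and sorting steps can be sketched in a few lines of Python. This is an illustration under stated assumptions rather than the article's own tooling: the `Requirement` class and its field names are invented here, and apart from the uniqueness requirement (all high, per the text) the individual H/M/L ratings are hypothetical. The password ratings were chosen only so that they add up to the stated total of seven.

```python
from dataclasses import dataclass

# The 1-to-3 scale described above: 3 for high, 2 for medium, 1 for low.
SCALE = {"L": 1, "M": 2, "H": 3}


@dataclass(frozen=True)
class Requirement:
    text: str
    likelihood: str
    impact: str
    complexity: str
    severity: str

    @property
    def risk_priority(self) -> int:
        """Sum of the four indicator values; ranges from 4 to 12."""
        ratings = (self.likelihood, self.impact, self.complexity, self.severity)
        return sum(SCALE[rating] for rating in ratings)


requirements = [
    # Hypothetical ratings (only the low expected impact comes from the text).
    Requirement("UserId shall be 4 characters", "L", "L", "M", "L"),
    # Hypothetical split that adds up to the stated priority of seven.
    Requirement("Password shall be 5 characters", "M", "L", "M", "M"),
    # All four indicators are high according to the text.
    Requirement(
        "System shall validate each userID and password for uniqueness",
        "H", "H", "H", "H",
    ),
]

# Highest risk priority first; sorted() is stable, so ties keep their order.
ranked = sorted(requirements, key=lambda r: r.risk_priority, reverse=True)

for requirement in ranked:
    print(requirement.risk_priority, requirement.text)
```

Because the sort looks only at the summed score, any testing dependencies between requirements would still have to be handled separately, as the quotation above notes.
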
Table 4 – Sorted Priorities for the Login Function

After this, the software team should establish a "cut line" to indicate the line below which features will be tested less.

Table 5 – "Cut Line" for Login Function Requirements

Table 5 indicates that the requirement "Upon successful login, a welcome screen shall be presented" will be tested less in the current release of the software.

An optional further step is to consider mitigation of risk. For example, the mitigation strategy for the highest-priority risk in Table 5 may be to make code reviews a mandatory part of the software development process.

Conclusion

Risk analysis should be done early in the software development lifecycle. While there are many indicators of risk, I propose that expected impact, likelihood of failure, complexity, and severity should all be considered as good indicators of risk. Risk analysis allows you to prioritize those requirements that should be tested first. The process allows the test team to set expectations about what can be tested within the project deadline.

The risk analysis method presented here is flexible and easy to adopt. Many different indicators can be used. It is also possible to use a ranking scale other than one through three. The wider the scale, the more granular the analysis.

Further Reading

"Risk-Based Testing" by James Bach

"The Risks to System Quality" by Rex Black

"Systematic Software Testing" by Rick Craig and Stefan Jaskiel

"Waltzing with Bears: Managing Risk on Software Projects" by Tom DeMarco and Timothy Lister

"Software Risk Management Makes Good Business Sense" by Steve Goodwin

"A Calculated Gamble" by Payson Hall

"Testing in a Squeezed, Squeezed World" by Geoff Horne

"How Software Doesn't Work" by Alan Joch and Oliver Sharp

"Assessment and Control of Software Risks" by Capers Jones

"An Introduction to Risk/Hazard Analysis for Medical Devices" by Daniel Kamm

"A Risk-Based Test Strategy" by Dr. Ingrid B. Ottevanger, IQUIP Informatica B.V. 3 (November 22, 2000)

"Risk Analysis Basics" by Johanna Rothman

"Cyclomatic Complexity" by Edmond VanDoren

"Risk Analysis Techniques" by Geoffrey H. Wold and Robert F. Shriver, Disaster Recovery Journal, Vol. 7, no. 3

About the Author

Yamini Munipalli is a CSTE-certified SQA analyst who has worked in software development for the last seven years at Landstar System, Inc., a $2 billion, non-asset-based transportation services company serving nearly 10,000 small-business owners. Yamini holds a master's degree in Political Science and teaches evening classes in American Government at Florida Community College at Jacksonville in Florida.