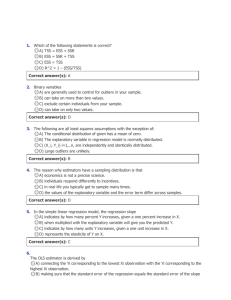

Topic 1: Introduction to Econometrics
