Instructional Objectives

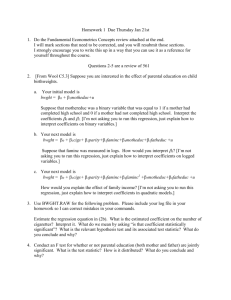

ST 508-001

Chapter 9: Linear Regression and Correlation
Sections 9.1 – 9.5

1. Identify the response variable and the explanatory variable.
2. Explain the basic idea behind least squares.
3. Give the general formula for the prediction equation (least squares line).
4. Define and interpret residuals.
5. Define and interpret the SSE.
6. Give the formulas for estimating the intercept and slope using the Sums of Squares for X and Y and the Cross Product for X and Y.
7. Give the formula for, and interpret, the standard deviation of the points around the least squares line. (This value is also known as the root mean square error.)
8. Define and interpret correlation.
9. Discuss the properties of correlation and how to calculate correlation.
10. Discuss the difference between association and causation.
11. Define and interpret the coefficient of determination.
12. Conduct a significance test to determine if X and Y are independent.
13. Give the formula for the estimated standard error of b.
14. Compute a confidence interval for the true slope β.
15. Interpret linear model output from SAS and StatCrunch.

Chapter 10: Multivariate Relationships
Sections 10.1 – 10.3

1. Discuss the 3 criteria that a relationship must satisfy to be considered a causal relationship.
2. Define a control variable and explain what is meant by a variable being controlled.
3. Illustrate how bivariate tables and partial tables are used to determine if there is an association between 2 variables using the difference of proportions method (see p. 266).
4. Define a suppressor variable and an intervening variable.
5. Discuss the following types of multivariate relationships, and give examples of each (see Table 10.8):
   a. Spurious associations
   b. Chain relationship
   c. Multiple causes
   d. Statistical interaction

Chapter 11: Multiple Regression
Sections 11.1 – 11.6

1. Define the multiple regression model.
2. Identify the parameters in the model and their representation.
3. Discuss what is meant by partial effects.
4. Give the general form of the prediction equation.
5. Explain the relationship between the prediction equation and the sum of squared errors.
6. Discuss the following: multiple correlation, coefficient of multiple determination, and multicollinearity.
7. Conduct a global significance test for independence.
8. Conduct a test for independence, controlling for other variables.
9. Discuss the F-distribution.
10. Construct a confidence interval for the partial regression coefficients.
11. Define interaction, and explain how to incorporate interaction in multiple regression models using cross product terms.
12. Conduct a test for interaction.
13. Explain what is meant by a complete model and a reduced model.
14. Conduct a test to determine if the complete model is better than the reduced model.
15. Interpret output from SAS.

Chapter 12: ANOVA
Sections 12.1 – 12.7

1. Discuss the sums of squares: BSS, WSS, TSS. Explain the relationship between these three sums.
2. Give another name for WSS and BSS.
3. Give a within variance estimate of the population variance (and the population standard deviation).
4. Discuss how to form the F test statistic based on the sums of squares.
5. Determine the degrees of freedom associated with the F test statistic.
6. Explain the goal of one-way ANOVA.
7. Conduct a 5-step test for equality of group means (i.e., the one-way ANOVA F test).
8. Compute the confidence interval for a single mean and a difference of means.
9. Discuss and compute Bonferroni confidence intervals.
10. Explain how to perform one-way ANOVA by regression modeling.
11. Explain the goal of two-way ANOVA.
12. Define interaction.
13. Explain a graphical approach for determining interaction.
14. List the 3 possible null hypotheses that are tested in a two-way ANOVA F test, and determine the order in which they should be tested.
15. Determine which hypotheses are main effect hypotheses.
16. Conduct a 5-step two-way ANOVA F test.
17. Explain how to perform two-way ANOVA by regression modeling.
18. Discuss Bonferroni confidence intervals for two-way ANOVA.
19. Define partial sum of squares.
20. Define factors and levels.
21. Discuss ANOVA with repeated measures.
22. Define fixed effects and random effects.
23. Conduct a hypothesis test for the fixed effect parameters.
24. Discuss Bonferroni confidence intervals for repeated measures ANOVA.
25. Represent the repeated measures ANOVA model in terms of fixed and random effects.
26. Explain what is meant by a mixed model.
27. Discuss two-way ANOVA with repeated measures.
28. Identify the between-subject factor and the within-subject factor.
29. Interpret SAS and/or StatCrunch output for all analyses above except the two-way ANOVA with repeated measures.

Chapter 13: ANCOVA
Sections 13.1 – 13.6

1. Describe the method of ANCOVA.
2. Describe the ANCOVA models with and without interaction. Interpret the meaning of each parameter.
3. Give separate prediction equations corresponding to the different categories of the qualitative variable.
4. Describe the inferential procedures for ANCOVA models. Give the 3 tests that are commonly done (for the final, test for interaction only).
5. Give the F test statistic involved.
6. Define, compute, and discuss adjusted means.
7. Interpret SAS output for all of the above procedures.

Chapter 14: Model Selection
Section 14.1

1. Discuss and apply the 3 most common automated variable selection methods: backward elimination, forward selection, and stepwise regression.
2. Discuss the Cp statistic.
3. Interpret related SAS output.

Chapter 15: Logistic Regression
Sections 15.1 – 15.2

1. Give the situation in which one would use logistic regression.
2. Describe the logistic regression model.
3. Explain why the logistic regression model is better than the linear probability model.
4. Give the logistic regression model in mathematical notation.
5. Give the equation for the probability of success.
6. Give the prediction equation for the probability of success and predict probabilities at particular values of X.
7. Define the odds and explain what the odds mean.
8. Discuss the multiplicative effect of X on the odds, e^β.
9. Discuss how to test the hypothesis that X has no effect, using the Wald statistic.
10. Explain multiple logistic regression.
11. Perform the F-test for comparing complete and reduced models for multiple logistic regression.
12. Interpret SAS output for the above items.
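
As a study aid for the Chapter 9 objectives on least squares (the prediction equation, residuals, SSE, the root mean square error, correlation, the coefficient of determination, and the standard error of b), the following is a minimal Python sketch rather than course material. The function name `least_squares` and the five data points are made up for illustration, and the formulas are the standard textbook ones, which may differ in notation from the course text.

```python
import math

def least_squares(x, y):
    """Fit y-hat = a + b*x by least squares using sums of squares.

    Hypothetical helper for illustration; not taken from the course materials.
    """
    n = len(x)
    x_bar = sum(x) / n
    y_bar = sum(y) / n

    # Sums of squares for X and Y, and the cross product for X and Y.
    sxx = sum((xi - x_bar) ** 2 for xi in x)
    syy = sum((yi - y_bar) ** 2 for yi in y)  # also the total sum of squares (TSS)
    sxy = sum((xi - x_bar) * (yi - y_bar) for xi, yi in zip(x, y))

    b = sxy / sxx            # estimated slope
    a = y_bar - b * x_bar    # estimated intercept

    # Residual = observed y minus predicted y; SSE = sum of squared residuals.
    residuals = [yi - (a + b * xi) for xi, yi in zip(x, y)]
    sse = sum(e ** 2 for e in residuals)

    s = math.sqrt(sse / (n - 2))     # root mean square error
    r = sxy / math.sqrt(sxx * syy)   # correlation
    r_squared = (syy - sse) / syy    # coefficient of determination
    se_b = s / math.sqrt(sxx)        # estimated standard error of b
    t = b / se_b                     # test statistic for H0: true slope = 0

    return {"a": a, "b": b, "sse": sse, "s": s, "r": r,
            "r_squared": r_squared, "se_b": se_b, "t": t}

# Made-up data, chosen only so the arithmetic is easy to check by hand.
x = [1, 2, 3, 4, 5]
y = [2, 4, 5, 4, 5]
fit = least_squares(x, y)
for name, value in fit.items():
    print(f"{name}: {value:.4f}")
```

For a confidence interval for the true slope, the same quantities combine as b ± t × (standard error of b), where the t-score comes from a t table with n − 2 degrees of freedom; the sketch leaves that lookup out to stay within the standard library.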