The Fourier Integral


Jean Baptiste Joseph Fourier

Born: 21 March 1768 in Auxerre, Bourgogne, France

Died: 16 May 1830 in Paris, France

The Fourier transform, a pervasive and versatile tool, is used in many fields of science as a mathematical or physical tool to alter a problem into one that can be more easily solved. Some scientists understand Fourier theory as a physical phenomenon, not simply as a mathematical tool.

Forrest Hoffman

http://mathworld.wolfram.com/FourierSeries.html

Fourier Series

A Fourier series is an expansion of a periodic function f(x) in terms of an infinite sum of sines and cosines. Fourier series make use of the orthogonality relationships of the sine and cosine functions. The computation and study of Fourier series is known as harmonic analysis and is extremely useful as a way to break up an arbitrary periodic function into a set of simple terms that can be plugged in, solved individually, and then recombined to obtain the solution to the original problem or an approximation to it to whatever accuracy is desired or practical.

In particular, since the superposition principle holds for solutions of a linear homogeneous ordinary differential equation, if such an equation can be solved in the case of a single sinusoid, the solution for an arbitrary function is immediately available by expressing the original function as a Fourier series and then plugging in the solution for each sinusoidal component. In some special cases where the Fourier series can be summed in closed form, this technique can even yield analytic solutions.

Any set of functions that forms a complete orthogonal system has a corresponding generalized Fourier series analogous to the Fourier series. For example, using orthogonality of the roots of a Bessel function of the first kind gives a so-called Fourier-Bessel series.

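The computation of the (usual) Fourier series is based on the integral identities

$$\int_{-\pi}^{\pi}\sin(mx)\sin(nx)\,dx = \pi\delta_{mn} \qquad (1)$$

$$\int_{-\pi}^{\pi}\cos(mx)\cos(nx)\,dx = \pi\delta_{mn} \qquad (2)$$

$$\int_{-\pi}^{\pi}\sin(mx)\cos(nx)\,dx = 0 \qquad (3)$$

$$\int_{-\pi}^{\pi}\sin(mx)\,dx = 0 \qquad (4)$$

$$\int_{-\pi}^{\pi}\cos(mx)\,dx = 0 \qquad (5)$$

for $m, n \neq 0$, where $\delta_{mn}$ is the Kronecker delta.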

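Using the method for a generalized Fourier series, the usual Fourier series involving sines and cosines is obtained by taking $f_1(x) = \cos x$ and $f_2(x) = \sin x$. Since these functions form a complete orthogonal system over $[-\pi, \pi]$, the Fourier series of a function $f(x)$ is given by

$$f(x) = \tfrac{1}{2}a_0 + \sum_{n=1}^{\infty} a_n\cos(nx) + \sum_{n=1}^{\infty} b_n\sin(nx), \qquad (6)$$

where

$$a_0 = \frac{1}{\pi}\int_{-\pi}^{\pi} f(x)\,dx \qquad (7)$$

$$a_n = \frac{1}{\pi}\int_{-\pi}^{\pi} f(x)\cos(nx)\,dx \qquad (8)$$

$$b_n = \frac{1}{\pi}\int_{-\pi}^{\pi} f(x)\sin(nx)\,dx \qquad (9)$$

and $n = 1, 2, 3, \ldots$. Note that the coefficient of the constant term, $a_0$, has been written in a special form compared to the general form for a generalized Fourier series in order to preserve symmetry with the definitions of $a_n$ and $b_n$.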
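The coefficient formulas (7)-(9) can be checked numerically. The sketch below is an illustration, not part of the original page: it approximates $a_n$ and $b_n$ with the midpoint rule for an odd square wave (-1 on $(-\pi, 0)$, +1 on $(0, \pi)$), which should give $a_n \approx 0$ and $b_n \approx 4/(n\pi)$ for odd $n$, 0 for even $n$. The helper name `fourier_coefficients` and the sample count are assumptions.

```python
import math

def fourier_coefficients(f, n_max, samples=20000):
    """Approximate a_0..a_{n_max} and b_1..b_{n_max} of Eqs. (7)-(9)
    on [-pi, pi] using the midpoint rule with `samples` subintervals."""
    h = 2 * math.pi / samples
    xs = [-math.pi + (j + 0.5) * h for j in range(samples)]
    fx = [f(x) for x in xs]
    a = [sum(v * math.cos(n * x) for v, x in zip(fx, xs)) * h / math.pi
         for n in range(n_max + 1)]
    # b_0 is not defined by Eq. (9); store 0.0 so that b[n] lines up with n
    b = [0.0] + [sum(v * math.sin(n * x) for v, x in zip(fx, xs)) * h / math.pi
                 for n in range(1, n_max + 1)]
    return a, b

def square_wave(x):
    """Odd square wave on (-pi, pi): -1 for x < 0 and +1 for x > 0."""
    return 1.0 if x > 0 else -1.0

a, b = fourier_coefficients(square_wave, 5)
for n in range(1, 6):
    expected_b = 4 / (n * math.pi) if n % 2 else 0.0
    print(n, round(a[n], 6), round(b[n], 6), round(expected_b, 6))
```

Because the function is odd, every $a_n$ cancels, which anticipates the even/odd rule stated later in this section.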

A Fourier series converges to the function $\bar{f}$, which equals the original function at points of continuity and the average of the two one-sided limits at points of discontinuity,

$$\bar{f}(x_0) = \tfrac{1}{2}\Big[\lim_{x\to x_0^-} f(x) + \lim_{x\to x_0^+} f(x)\Big], \qquad (10)$$

if the function satisfies the so-called Dirichlet conditions. Near points of discontinuity, however, a "ringing" known as the Gibbs phenomenon can occur.
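A short numerical sketch (our own illustration, not from MathWorld) makes the Gibbs ringing visible. It evaluates partial sums of the square-wave series $(4/\pi)\sum_{n\ \mathrm{odd}} \sin(nx)/n$ and records the largest overshoot next to the jump at $x = 0$. As more terms are added, the peak stays near 1.18 (roughly 9% of the jump height of 2) instead of dying out. The function names and grid sizes are assumptions.

```python
import math

def square_wave_partial_sum(x, n_max):
    """(4/pi) * sum over odd n <= n_max of sin(n x) / n: the partial Fourier sum of the
    square wave that is +1 on (0, pi) and -1 on (-pi, 0)."""
    return 4 / math.pi * sum(math.sin(n * x) / n for n in range(1, n_max + 1, 2))

def gibbs_peak(n_max, points=2000):
    """Largest value of the partial sum just to the right of the jump at x = 0.

    The first (largest) overshoot sits near x = pi / (n_max + 1), so a short window
    of width 4 pi / (n_max + 1) is scanned on a uniform grid."""
    width = 4 * math.pi / (n_max + 1)
    return max(square_wave_partial_sum(width * j / points, n_max)
               for j in range(1, points + 1))

for n_max in (9, 49, 199):
    print(n_max, round(gibbs_peak(n_max), 4))
```

Away from the jump (for example at $x = \pi/2$) the same partial sums do approach 1, so the overshoot is a local effect that narrows but does not shrink.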

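For a function $f(x)$ periodic on an interval $[-L, L]$ instead of $[-\pi, \pi]$, a simple change of variables can be used to transform the interval of integration from $[-\pi, \pi]$ to $[-L, L]$. Let

$$x = \frac{\pi x'}{L} \qquad (11)$$

$$dx = \frac{\pi\,dx'}{L}. \qquad (12)$$

Solving for $x'$ gives $x' = Lx/\pi$, and plugging this in gives

$$f(x') = \tfrac{1}{2}a_0 + \sum_{n=1}^{\infty} a_n\cos\Big(\frac{n\pi x'}{L}\Big) + \sum_{n=1}^{\infty} b_n\sin\Big(\frac{n\pi x'}{L}\Big). \qquad (13)$$

Therefore,

$$a_0 = \frac{1}{L}\int_{-L}^{L} f(x)\,dx \qquad (14)$$

$$a_n = \frac{1}{L}\int_{-L}^{L} f(x)\cos\Big(\frac{n\pi x}{L}\Big)\,dx \qquad (15)$$

$$b_n = \frac{1}{L}\int_{-L}^{L} f(x)\sin\Big(\frac{n\pi x}{L}\Big)\,dx. \qquad (16)$$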

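Similarly, if the function is instead defined on the interval $[0, 2L]$, the above equations simply become

$$a_0 = \frac{1}{L}\int_{0}^{2L} f(x)\,dx \qquad (17)$$

$$a_n = \frac{1}{L}\int_{0}^{2L} f(x)\cos\Big(\frac{n\pi x}{L}\Big)\,dx \qquad (18)$$

$$b_n = \frac{1}{L}\int_{0}^{2L} f(x)\sin\Big(\frac{n\pi x}{L}\Big)\,dx. \qquad (19)$$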

In fact, for $f(x)$ periodic with period $2L$, any interval $(x_0, x_0 + 2L)$ can be used, with the choice being one of convenience or personal preference (Arfken 1985, p. 769).

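The coefficients for Fourier series expansions of a few common functions are given in Beyer (1987, pp. 411-412) and Byerly (1959, p. 51). One of the most common functions usually analyzed by this technique is the square wave. The Fourier series for a number of common functions (period $2L$) are summarized in the table below.

function | f(x) | Fourier series

sawtooth wave | $x/(2L)$ on $[0, 2L)$ | $\tfrac{1}{2} - \frac{1}{\pi}\sum_{n=1}^{\infty}\frac{1}{n}\sin\big(\frac{n\pi x}{L}\big)$

square wave | $+1$ on $(0, L)$, $-1$ on $(L, 2L)$ | $\frac{4}{\pi}\sum_{n=1,3,5,\ldots}\frac{1}{n}\sin\big(\frac{n\pi x}{L}\big)$

triangle wave | $T(x)$ (odd, peak value 1 at $x = L/2$) | $\frac{8}{\pi^2}\sum_{n=1,3,5,\ldots}\frac{(-1)^{(n-1)/2}}{n^2}\sin\big(\frac{n\pi x}{L}\big)$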
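The interval formulas (17)-(19) can be exercised on a sawtooth. This sketch is our own check, assuming the sawtooth is $f(x) = x/(2L)$ on $[0, 2L)$; for that function a direct integration gives $a_0 = 1$ (a constant term of 1/2), $a_n = 0$, and $b_n = -1/(n\pi)$. The value $L = 3$ and the helper name are arbitrary choices.

```python
import math

def coefficients_0_to_2L(f, L, n_max, samples=20000):
    """Midpoint-rule versions of Eqs. (17)-(19) on the interval [0, 2L]."""
    h = 2 * L / samples
    xs = [(j + 0.5) * h for j in range(samples)]
    fx = [f(x) for x in xs]
    a = [sum(v * math.cos(n * math.pi * x / L) for v, x in zip(fx, xs)) * h / L
         for n in range(n_max + 1)]
    # b_0 is not defined; keep 0.0 so that b[n] lines up with n
    b = [0.0] + [sum(v * math.sin(n * math.pi * x / L) for v, x in zip(fx, xs)) * h / L
                 for n in range(1, n_max + 1)]
    return a, b

L = 3.0
a, b = coefficients_0_to_2L(lambda x: x / (2 * L), L, 4)
print("constant term a0/2 =", a[0] / 2)
for n in range(1, 5):
    print(n, round(a[n], 6), round(b[n], 6), round(-1 / (n * math.pi), 6))
```

Changing `L` should leave the printed coefficients unchanged, which is the point of rescaling the interval.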

If a function is even so that $f(x) = f(-x)$, then $f(x)\sin(nx)$ is odd. (This follows since $\sin(nx)$ is odd and an even function times an odd function is an odd function.) Therefore, $b_n = 0$ for all $n$. Similarly, if a function is odd so that $f(x) = -f(-x)$, then $f(x)\cos(nx)$ is odd. (This follows since $\cos(nx)$ is even and an even function times an odd function is an odd function.) Therefore, $a_n = 0$ for all $n$.

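The notion of a Fourier series can also be extended to complex coefficients. Consider a real-valued function $f(x)$. Write

$$f(x) = \sum_{n=-\infty}^{\infty} A_n e^{inx}. \qquad (20)$$

Now examine

$$\int_{-\pi}^{\pi} f(x)e^{-imx}\,dx = \int_{-\pi}^{\pi}\Big(\sum_{n=-\infty}^{\infty} A_n e^{inx}\Big)e^{-imx}\,dx \qquad (21)$$

$$= \sum_{n=-\infty}^{\infty} A_n\int_{-\pi}^{\pi} e^{i(n-m)x}\,dx \qquad (22)$$

$$= \sum_{n=-\infty}^{\infty} A_n\int_{-\pi}^{\pi}\big\{\cos[(n-m)x] + i\sin[(n-m)x]\big\}\,dx \qquad (23)$$

$$= \sum_{n=-\infty}^{\infty} A_n\,2\pi\delta_{mn} = 2\pi A_m, \qquad (24)$$

so

$$A_n = \frac{1}{2\pi}\int_{-\pi}^{\pi} f(x)e^{-inx}\,dx. \qquad (25)$$

The coefficients can be expressed in terms of those in the Fourier series:

$$A_n = \frac{1}{2\pi}\int_{-\pi}^{\pi} f(x)\big[\cos(nx) - i\sin(nx)\big]\,dx \qquad (26)$$

$$= \begin{cases} \frac{1}{2\pi}\int_{-\pi}^{\pi} f(x)\big[\cos(nx) + i\sin(|n|x)\big]\,dx & n < 0 \\ \frac{1}{2\pi}\int_{-\pi}^{\pi} f(x)\,dx & n = 0 \\ \frac{1}{2\pi}\int_{-\pi}^{\pi} f(x)\big[\cos(nx) - i\sin(nx)\big]\,dx & n > 0 \end{cases} \qquad (27)$$

$$= \begin{cases} \tfrac{1}{2}(a_{|n|} + ib_{|n|}) & n < 0 \\ \tfrac{1}{2}a_0 & n = 0 \\ \tfrac{1}{2}(a_n - ib_n) & n > 0. \end{cases} \qquad (28)$$

For a function periodic in $[-L/2, L/2]$, these become

$$f(x) = \sum_{n=-\infty}^{\infty} A_n e^{i(2\pi nx/L)} \qquad (29)$$

$$A_n = \frac{1}{L}\int_{-L/2}^{L/2} f(x)e^{-i(2\pi nx/L)}\,dx. \qquad (30)$$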
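Relation (28) between the complex coefficients and $a_n$, $b_n$ can be confirmed numerically. In this sketch (an illustration only) the real test function $f(x) = x^2 + \sin x + \tfrac{1}{2}\cos 3x$ is an arbitrary assumption; for it, $a_1 = -4$ and $b_1 = 1$, so $A_1$ should be close to $-2 - 0.5i$.

```python
import cmath
import math

def midpoint(g, a, b, samples=4000):
    """Midpoint-rule approximation of the integral of g over [a, b]."""
    h = (b - a) / samples
    return h * sum(g(a + (j + 0.5) * h) for j in range(samples))

def f(x):
    """Arbitrary real test function (an assumption, not from the text)."""
    return x * x + math.sin(x) + 0.5 * math.cos(3 * x)

def complex_coefficient(n):
    """Eq. (25): A_n = (1 / 2 pi) * integral_{-pi}^{pi} f(x) e^{-inx} dx."""
    return midpoint(lambda x: f(x) * cmath.exp(-1j * n * x), -math.pi, math.pi) / (2 * math.pi)

def coefficient_from_real(n):
    """Eq. (28): A_n rebuilt from a_|n| and b_|n| of Eqs. (7)-(9)."""
    m = abs(n)
    a_m = midpoint(lambda x: f(x) * math.cos(m * x), -math.pi, math.pi) / math.pi
    b_m = midpoint(lambda x: f(x) * math.sin(m * x), -math.pi, math.pi) / math.pi
    if n > 0:
        return 0.5 * (a_m - 1j * b_m)
    if n < 0:
        return 0.5 * (a_m + 1j * b_m)
    return 0.5 * a_m

for n in range(-3, 4):
    print(n, complex_coefficient(n), coefficient_from_real(n))
```

Note that $A_{-n}$ comes out as the complex conjugate of $A_n$, as expected for a real-valued $f$.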

These equations are the basis for the extremely important Fourier transform, which is obtained by transforming $A_n$ from a discrete variable to a continuous one as the length $L \to \infty$.

See also: Complete Set of Functions, Dirichlet Fourier Series Conditions, Fourier-Bessel Series, Fourier Cosine Series, Fourier-Legendre Series, Fourier Series--Power Series, Fourier Series--Sawtooth Wave, Fourier Series--Semicircle, Fourier Series--Square Wave, Fourier Series--Triangle Wave, Fourier Sine Series, Fourier Transform, Generalized Fourier Series, Gibbs Phenomenon, Harmonic Addition Theorem, Harmonic Analysis, Lacunary Fourier Series, Lebesgue Constants, Power Spectrum, Riesz-Fischer Theorem, Simple Harmonic Motion, Superposition Principle

References

Arfken, G. "Fourier Series." Ch. 14 in Mathematical Methods for Physicists, 3rd ed. Orlando, FL: Academic Press, pp. 760-793, 1985.

Askey, R. and Haimo, D. T. "Similarities between Fourier and Power Series." Amer. Math. Monthly 103, 297-304, 1996.

Beyer, W. H. (Ed.). CRC Standard Mathematical Tables, 28th ed. Boca Raton, FL: CRC Press, 1987.

Brown, J. W. and Churchill, R. V. Fourier Series and Boundary Value Problems, 5th ed. New York: McGraw-Hill, 1993.

Byerly, W. E. An Elementary Treatise on Fourier's Series, and Spherical, Cylindrical, and Ellipsoidal Harmonics, with Applications to Problems in Mathematical Physics. New York: Dover, 1959.

Carslaw, H. S. Introduction to the Theory of Fourier's Series and Integrals, 3rd ed., rev. and enl. New York: Dover, 1950.

Davis, H. F. Fourier Series and Orthogonal Functions. New York: Dover, 1963.

Dym, H. and McKean, H. P. Fourier Series and Integrals. New York: Academic Press, 1972.

Folland, G. B. Fourier Analysis and Its Applications. Pacific Grove, CA: Brooks/Cole, 1992.

Groemer, H. Geometric Applications of Fourier Series and Spherical Harmonics. New York: Cambridge University Press, 1996.

Körner, T. W. Fourier Analysis. Cambridge, England: Cambridge University Press, 1988.

Körner, T. W. Exercises for Fourier Analysis. New York: Cambridge University Press, 1993.

Krantz, S. G. "Fourier Series." §15.1 in Handbook of Complex Variables. Boston, MA: Birkhäuser, pp. 195-202, 1999.

Lighthill, M. J. Introduction to Fourier Analysis and Generalised Functions. Cambridge, England: Cambridge University Press, 1958.

Morrison, N. Introduction to Fourier Analysis. New York: Wiley, 1994.

Sansone, G. "Expansions in Fourier Series." Ch. 2 in Orthogonal Functions, rev. English ed. New York: Dover, pp. 39-168, 1991.

Weisstein, E. W. "Books about Fourier Transforms." http://www.ericweisstein.com/encyclopedias/books/FourierTransforms.html.

Whittaker, E. T. and Robinson, G. "Practical Fourier Analysis." Ch. 10 in The Calculus of Observations: A Treatise on Numerical Mathematics, 4th ed. New York: Dover, pp. 260-284, 1967.

Eric W. Weisstein. "Fourier Series." From MathWorld--A Wolfram Web Resource. http://mathworld.wolfram.com/FourierSeries.html

Listen to Fourier Series

http://www.jhu.edu/~signals/listen/music1rm.html

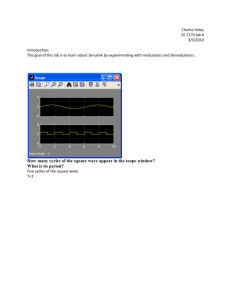

Audio signals describe pressure variations on the ear that are perceived as sound. We focus on periodic audio signals, that is, on tones.

A pure tone can be written as a cosinusoidal signal of amplitude $a > 0$, frequency $\omega_0 > 0$, and phase angle $\theta$:

$$x(t) = a\cos(\omega_0 t + \theta)$$

The frequency $\omega_0$ is in units of radians/second, and $\omega_0/2\pi$ is the frequency in hertz.

The perceived loudness of a tone is proportional to $a^{0.6}$.

The pitch of a pure tone is logarithmically related to the frequency. Perceptually, tones separated by an octave (a factor of 2 in frequency) are very similar. For the purpose of Western music, the octave is subdivided into 12 notes, equally spaced on a logarithmic scale. The ordering of notes in the octave beginning with 220 Hz is shown in the following table.
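Since the note table did not survive, here is a small sketch of the rule it encodes: twelve notes equally spaced on a logarithmic scale starting at 220 Hz, $f_k = 220 \cdot 2^{k/12}$, together with the angular frequency $\omega_0 = 2\pi f_k$ used in $x(t) = a\cos(\omega_0 t + \theta)$. The note names (220 Hz labelled A) are an assumption added for readability.

```python
import math

NOTE_NAMES = ["A", "A#", "B", "C", "C#", "D", "D#", "E", "F", "F#", "G", "G#"]  # assumed labels

def equal_tempered_octave(base_hz=220.0):
    """Twelve notes equally spaced on a log scale: f_k = base_hz * 2**(k / 12)."""
    return [(NOTE_NAMES[k], base_hz * 2 ** (k / 12)) for k in range(12)]

for name, freq in equal_tempered_octave():
    omega = 2 * math.pi * freq  # radians/second, the omega_0 of x(t) = a cos(omega_0 t + theta)
    print(f"{name:>2}  {freq:8.2f} Hz  {omega:9.2f} rad/s")
```

Each step multiplies the frequency by $2^{1/12} \approx 1.0595$, so twelve steps land exactly on the octave at 440 Hz.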

Fourier Synthesis

http://www.phy.ntnu.edu.tw/java/sound/sound.html

How to play:

1. Left-click and drag the [ball, green] circles to change the magnitude of each Fourier function [sin nf, cos nf].

2. Right-click to change the magnitude between 0 and 1.0.

3. Click Play to turn on the sound, and Stop to turn it off.

4. The coefficient of sin(0f) is used as an amplification factor for all modes (use it to change the sound level); the coefficient of cos(0f) is the DC component.

5. Checking the box at the top (after Stop) shows the square of the amplitude of the signal.

The default base frequency is f = 100 Hz; you can change it in the text field (20 < f < 2000). The ear is 1000 times more sensitive at 1 kHz than at 100 Hz.
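The synthesis the applet performs can be sketched offline. This is our own approximation of the idea, not the applet's code: it sums harmonics of a base frequency f0 = 100 Hz with chosen sine and cosine weights, treating the cosine weight of index 0 as the DC component. The applet's use of the sin(0f) slider as an overall gain is not modelled, and the sample rate of 8000 Hz is an assumption.

```python
import math

def synthesize(sin_coeffs, cos_coeffs, f0=100.0, duration=0.02, rate=8000):
    """Samples of c_0 + sum_{n>=1} [s_n sin(2 pi n f0 t) + c_n cos(2 pi n f0 t)].

    sin_coeffs[n] and cos_coeffs[n] weight the n-th harmonic; cos_coeffs[0] is the
    DC component and sin_coeffs[0] is ignored (sin(0) = 0)."""
    if len(sin_coeffs) != len(cos_coeffs):
        raise ValueError("sin_coeffs and cos_coeffs must have the same length")
    samples = []
    for k in range(round(duration * rate)):
        t = k / rate
        value = cos_coeffs[0]
        for n in range(1, len(sin_coeffs)):
            phase = 2 * math.pi * n * f0 * t
            value += sin_coeffs[n] * math.sin(phase) + cos_coeffs[n] * math.cos(phase)
        samples.append(value)
    return samples

# Odd sine harmonics weighted by 1/n build up a square-like wave.
square_like = [0.0] + [1.0 / n if n % 2 else 0.0 for n in range(1, 16)]
wave = synthesize(square_like, [0.0] * 16)
print(len(wave), max(wave))
```

At 8000 samples per second one period of the 100 Hz base tone spans 80 samples, so the 160 samples returned contain exactly two identical periods.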

http://www.math.ohio-state.edu/~gerlach/math/BVtypset/node30.html

Transition from Fourier Series to Fourier Integral

We now extend the domain of definition of a linear system from a finite interval, say $[-L/2, L/2]$, to the infinite interval $(-\infty, \infty)$. We shall do this by means of a line of arguments which is heuristic ("serving to discover or stimulate investigation"). Even though it pretty much ignores issues of convergence, it has the advantage of giving us at a glance the general direction of the arguments. Treatments which do not ignore the questions of convergence can be found in §61-65 of "Fourier Series and Boundary Value Problems" by Churchill and Brown or in §4.8 of "Methods of Theoretical Physics" by Morse and Feshbach.

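We start with the complete set of basis functions orthonormal on the interval $[-L/2, L/2]$:

$$u_m(x) = \frac{e^{i2\pi mx/L}}{\sqrt{L}}, \qquad m = 0, \pm 1, \pm 2, \ldots$$

The Fourier series for $f$ is

$$\sum_{m=-\infty}^{\infty} \frac{e^{i2\pi mx/L}}{\sqrt{L}} \int_{-L/2}^{L/2} \frac{e^{-i2\pi mx'/L}}{\sqrt{L}}\, f(x')\,dx'.$$

First, if $f$ is continuous at $x$, this series is $f(x)$ (at a jump it is $\tfrac{1}{2}[f(x^+) + f(x^-)]$), so that

$$f(x) = \sum_{m=-\infty}^{\infty} \frac{e^{i2\pi mx/L}}{\sqrt{L}} \int_{-L/2}^{L/2} \frac{e^{-i2\pi mx'/L}}{\sqrt{L}}\, f(x')\,dx'.$$

Second, we let $\Delta k = 2\pi/L$ and, after rearranging some factors (using $1/L = \Delta k/2\pi$), obtain

$$f(x) = \sum_{m=-\infty}^{\infty} \frac{e^{i2\pi mx/L}}{\sqrt{2\pi}} \left[\int_{-L/2}^{L/2} \frac{e^{-i2\pi mx'/L}}{\sqrt{2\pi}}\, f(x')\,dx'\right]\Delta k. \qquad (2.20)$$

Third, by introducing the points

$$k_m = m\,\Delta k = \frac{2\pi m}{L}, \qquad m = 0, \pm 1, \pm 2, \ldots,$$

we partition the real $k$-axis into equally spaced subintervals of size $\Delta k = 2\pi/L$. We introduce these points into the Fourier sum, Eq. (2.20):

$$f(x) = \sum_{m=-\infty}^{\infty} \frac{e^{ik_m x}}{\sqrt{2\pi}} \left[\int_{-L/2}^{L/2} \frac{e^{-ik_m x'}}{\sqrt{2\pi}}\, f(x')\,dx'\right]\Delta k \qquad (2.21)$$

$$\equiv \sum_{m=-\infty}^{\infty} G_L(k_m)\,\Delta k. \qquad (2.22)$$

Note that this Fourier sum is, in fact, a Riemann sum, a precursor (i.e., an approximation) to the definite integral

$$\int_{-K}^{K} G_L(k)\,dk \qquad (2.23)$$

over the interval $[-K, K]$, taken in the limit $K \to \infty$. The fourth and final step is to let $L \to \infty$ in order to obtain the result

$$f(x) = \int_{-\infty}^{\infty} \frac{e^{ikx}}{\sqrt{2\pi}} \left[\int_{-\infty}^{\infty} \frac{e^{-ikx'}}{\sqrt{2\pi}}\, f(x')\,dx'\right] dk. \qquad (2.24)$$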

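Let us summarize the chain of reasoning that leads to the result expressed by Eq. (2.24):

(i) We are given the function $f(x)$, and from it compute the integral

$$\hat{f}(k) = \int_{-\infty}^{\infty} \frac{e^{-ikx}}{\sqrt{2\pi}}\, f(x)\,dx. \qquad (2.25)$$

(ii) We claim that from $\hat{f}(k)$ one can reconstruct $f(x)$ by using the formula

$$f(x) = \int_{-\infty}^{\infty} \frac{e^{ikx}}{\sqrt{2\pi}}\, \hat{f}(k)\,dk. \qquad (2.26)$$

This claim is precisely Eq. (2.24), and it is known as the Fourier Transform Theorem.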
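The four steps can be tried numerically. In this sketch (an illustration, not Gerlach's) the test function $f(x) = e^{-x^2/2}$ is an assumption; with the $1/\sqrt{2\pi}$ convention used here its transform is $\hat{f}(k) = e^{-k^2/2}$. For a finite $L$ the bracketed factor of the Fourier sum in Eqs. (2.20)-(2.22), evaluated at $k_m = 2\pi m/L$, should already match $\hat{f}(k_m)$, and the truncated sum $\sum_m G_L(k_m)\,\Delta k$ should rebuild $f(x)$.

```python
import cmath
import math

def f(x):
    """Hypothetical test function; its 1/sqrt(2 pi) transform is exp(-k^2 / 2)."""
    return math.exp(-x * x / 2)

def bracket(k, L, samples=4000):
    """(1/sqrt(2 pi)) * integral_{-L/2}^{L/2} e^{-ikx} f(x) dx by the midpoint rule."""
    h = L / samples
    xs = (-L / 2 + (j + 0.5) * h for j in range(samples))
    return h * sum(cmath.exp(-1j * k * x) * f(x) for x in xs) / math.sqrt(2 * math.pi)

def riemann_sum(x0, L, m_max):
    """Truncated Fourier sum: sum over |m| <= m_max of G_L(k_m) * dk, with k_m = 2 pi m / L."""
    dk = 2 * math.pi / L
    total = 0j
    for m in range(-m_max, m_max + 1):
        k = m * dk
        total += cmath.exp(1j * k * x0) / math.sqrt(2 * math.pi) * bracket(k, L) * dk
    return total

L = 20.0
for k in (0.0, 1.0, 2.5):
    print(k, bracket(k, L).real, math.exp(-k * k / 2))
print(riemann_sum(0.7, L, 30).real, f(0.7))
```

This test function is negligible outside $[-L/2, L/2]$, which is why a modest $L$ already behaves like the limit $L \to \infty$; a slowly decaying function would need a much larger $L$.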

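Except for the final step, each of the four steps leading to Eq. (2.24) can be verified easily. That final step, which takes us from Eq. (2.22) to Eq. (2.24), suggests that the infinite sum approaches an improper integral as $L \to \infty$. In other words,

$$\lim_{L\to\infty} \sum_{m=-\infty}^{\infty} G_L(k_m)\,\Delta k = \int_{-\infty}^{\infty} G_\infty(k)\,dk.$$

But there are problems with this suggestion, namely:

1. $G_L(k)$ becomes a different function as $L \to \infty$, and

2. an improper integral is defined by

$$\int_{-\infty}^{\infty} G(k)\,dk = \lim_{a\to\infty}\lim_{b\to\infty} \int_{-a}^{b} G(k)\,dk$$

and not by the limit of the discrete approximation given above.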

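These two objections can, however, be met. Churchill and Brown in §63 accomplish this in one way; Morse and Feshbach in §4.8 accomplish it in another. Using their more careful line of reasoning, one finds that a function which

1. is piecewise continuous on every bounded interval of the $x$-axis, and

2. is absolutely integrable along the $x$-axis,

has the property that, as $L \to \infty$, the limit of the Fourier series, Eq. (2.20), is indeed an improper integral:

$$\tfrac{1}{2}\big[f(x^+) + f(x^-)\big] = \int_{-\infty}^{\infty} \frac{e^{ikx}}{\sqrt{2\pi}} \left[\int_{-\infty}^{\infty} \frac{e^{-ikx'}}{\sqrt{2\pi}}\, f(x')\,dx'\right] dk.$$

This result can be restated as a linear transformation and its inverse:

$$\hat{f}(k) = \int_{-\infty}^{\infty} \frac{e^{-ikx}}{\sqrt{2\pi}}\, f(x)\,dx, \qquad f(x) = \int_{-\infty}^{\infty} \frac{e^{ikx}}{\sqrt{2\pi}}\, \hat{f}(k)\,dk.$$

Alternatively, by interchanging the order in which the integration is done, one has

$$f(x) = \int_{-\infty}^{\infty} \left[\frac{1}{2\pi}\int_{-\infty}^{\infty} e^{ik(x - x')}\,dk\right] f(x')\,dx'.$$

This holds for all ("well-behaved") functions. Consequently, the integration kernel

$$\frac{1}{2\pi}\int_{-\infty}^{\infty} e^{ik(x - x')}\,dk = \delta(x - x')$$

is an expression for the Dirac delta function.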

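Either of the last two equations is a generalized completeness relation for the set of "wave train" functions

$$\left\{ \frac{e^{ikx}}{\sqrt{2\pi}} : -\infty < k < \infty \right\}.$$

1. These functions are not normalizable, i.e., they do not belong to $L^2(-\infty, \infty)$, since $\int_{-\infty}^{\infty} |e^{ikx}/\sqrt{2\pi}|^2\,dx = \infty$. Instead, they are said to be "$\delta$-function normalized", as the second equation implies.

2. That the first equation is a completeness relation can be seen from the fact that it implies Parseval's identity. For $f \in L^2(-\infty, \infty)$ we have

$$\int_{-\infty}^{\infty} |f(x)|^2\,dx = \int_{-\infty}^{\infty} \overline{f(x)} \int_{-\infty}^{\infty} \frac{e^{ikx}}{\sqrt{2\pi}}\, \hat{f}(k)\,dk\,dx = \int_{-\infty}^{\infty} \left[\int_{-\infty}^{\infty} \overline{\frac{e^{-ikx}}{\sqrt{2\pi}}\, f(x)}\,dx\right] \hat{f}(k)\,dk = \int_{-\infty}^{\infty} |\hat{f}(k)|^2\,dk.$$

Thus we obtain Parseval's identity (= completeness relation). The only provisos are (a) that the function $f$ be square-integrable and (b) that its Fourier transform $\hat{f}$ be given by the Fourier transform integral.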

Remark 1: Note that the Fourier transform is a one-to-one linear transformation from the set of square-integrable functions onto the set of square-integrable functions: $\mathcal{F}: L^2(-\infty, \infty) \to L^2(-\infty, \infty)$. In other words, the Fourier transform is what in linear algebra is called an "isomorphism".

Remark 2: The line of reasoning leading to Parseval's identity also leads to

$$\int_{-\infty}^{\infty} \overline{f(x)}\,g(x)\,dx = \int_{-\infty}^{\infty} \overline{\hat{f}(k)}\,\hat{g}(k)\,dk$$

whenever $f, g \in L^2(-\infty, \infty)$.

Remark 3: The above two remarks imply that the Fourier transform can be viewed as a unitary transformation in $L^2(-\infty, \infty)$. Unitary transformations are "isometries" because they preserve lengths and inner products. One says, therefore, that the space of functions defined on the spatial domain is "isometric" to the space of functions defined on the Fourier domain. Thus the Fourier transform operator is a linear isometric mapping. This fact is depicted by Figure 2.3.

Figure 2.3: The Fourier transform is an isometry between $L^2(-\infty, \infty)$ in $x$, the Hilbert space of square-integrable functions on the spatial domain, and $L^2(-\infty, \infty)$ in $k$, the Hilbert space of square-integrable functions on the Fourier domain.

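Note, however, that even though the Fourier transform and its inverse,

$$\hat{f}(k) = \int_{-\infty}^{\infty} \frac{e^{-ikx}}{\sqrt{2\pi}}\, f(x)\,dx, \qquad f(x) = \int_{-\infty}^{\infty} \frac{e^{ikx}}{\sqrt{2\pi}}\, \hat{f}(k)\,dk, \qquad (2.27)$$

take square-integrable functions into square-integrable functions, the "basis elements" $e^{ikx}/\sqrt{2\pi}$ are not square integrable. Instead, they are "Dirac delta function" normalized, i.e.,

$$\int_{-\infty}^{\infty} \overline{\left(\frac{e^{ikx}}{\sqrt{2\pi}}\right)}\,\frac{e^{ik'x}}{\sqrt{2\pi}}\,dx = \delta(k - k').$$

Thus they do not belong to $L^2(-\infty, \infty)$. Nevertheless, linear combinations such as Eq. (2.27) are square integrable, and that is what counts.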

Exercise 23.1 (THE FOURIER TRANSFORM: ITS EIGENVALUES)

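The Fourier transform, call it $\mathcal{F}$, is a linear one-to-one operator from the space of square-integrable functions onto itself. Indeed,

$$\mathcal{F}: L^2(-\infty, \infty) \to L^2(-\infty, \infty), \qquad f \mapsto \mathcal{F}[f] = \hat{f}, \qquad \hat{f}(k) = \int_{-\infty}^{\infty} \frac{e^{-ikx}}{\sqrt{2\pi}}\, f(x)\,dx.$$

Note that here $x$ and $k$ are viewed as points on the common domain $(-\infty, \infty)$ of $f$ and $\hat{f}$.

(a) Consider the linear operator $\mathcal{F}^2 = \mathcal{F}\mathcal{F}$ and its eigenvalue equation $\mathcal{F}^2 f = \lambda f$. What are the eigenvalues and the eigenfunctions of $\mathcal{F}^2$?

(b) Identify the operator $\mathcal{F}^4$. What are its eigenvalues?

(c) What are the eigenvalues of $\mathcal{F}$?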

Exercise 23.2 (THE FOURIER TRANSFORM: ITS EIGENVECTORS)

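Recall that the Fourier transform $\mathcal{F}$ is a linear one-to-one transformation from $L^2(-\infty, \infty)$ onto itself.

Let $\varphi$ be an element of $L^2(-\infty, \infty)$, and let $\hat{\varphi} = \mathcal{F}\varphi$, the Fourier transform of $\varphi$, be defined by

$$\hat{\varphi}(k) = \int_{-\infty}^{\infty} \frac{e^{-ikx}}{\sqrt{2\pi}}\, \varphi(x)\,dx.$$

It is clear that $\varphi, \mathcal{F}\varphi, \mathcal{F}^2\varphi, \mathcal{F}^3\varphi, \ldots$ are square-integrable functions, i.e., elements of $L^2(-\infty, \infty)$. Consider the SUBSPACE $W \subset L^2(-\infty, \infty)$ spanned by these vectors, namely

$$W = \mathrm{span}\{\varphi, \mathcal{F}\varphi, \mathcal{F}^2\varphi, \mathcal{F}^3\varphi, \ldots\}.$$

(a) Show that $W$ is finite dimensional. What is $\dim W$? (Hint: Compute $\mathcal{F}^2\varphi$, $\mathcal{F}^3\varphi$, etc. in terms of $\varphi$ and $\hat{\varphi}$.)

(b) Exhibit a basis for $W$.

(c) It is evident that $\mathcal{F}$ is a (unitary) transformation on $W$. Find the representation matrix of $\mathcal{F}$, $[\mathcal{F}]$, relative to the basis found in part (b).

(d) Find the secular determinant, the eigenvalues and the corresponding eigenvectors of $[\mathcal{F}]$.

(e) For $W$, exhibit an alternative basis which consists entirely of eigenvectors of $\mathcal{F}$, each one labelled by its respective eigenvalue.

(f) What can you say about the eigenvalues of $\mathcal{F}$ viewed as a transformation on $L^2(-\infty, \infty)$ as compared to $[\mathcal{F}]$, which acts on a finite-dimensional vector space?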

Exercise 23.3 (EQUIVALENT WIDTHS)

Suppose we define for a square-integrable function $f(x)$ and its Fourier transform $\hat{f}(k)$ the equivalent width as

$$\Delta x = \frac{\int_{-\infty}^{\infty} f(x)\,dx}{f(0)}$$

and the equivalent Fourier width as

$$\Delta k = \frac{\int_{-\infty}^{\infty} \hat{f}(k)\,dk}{\hat{f}(0)}.$$

(a) Show that $\Delta x\,\Delta k$ is independent of the function $f$, and determine the value of this constant.

(b) Determine the equivalent width and the equivalent Fourier width for an unnormalized Gaussian, and compare them with its full width as defined by its inflection points.

Exercise 23.4 (AUTO CORRELATION SPECTRUM)

Consider the auto-correlation

$$C(x) = \int_{-\infty}^{\infty} f(x')\,f(x' + x)\,dx'$$

of the function $f(x)$, whose Fourier transform is $\hat{f}(k)$. Compute the Fourier transform of the auto-correlation function and thereby show that it equals the "spectral intensity" (a.k.a. power spectrum) $|\hat{f}(k)|^2$ of $f$ whenever $f$ is a real-valued function. This equality is known as the Wiener-Khintchine formula.

Exercise 23.5 (MATCHED FILTER)

Consider a linear time-invariant system. Assume its response to a specific driving force, say $f(t)$, can be written as

$$R(t) = \int_{-\infty}^{\infty} G(t - t')\,f(t')\,dt'.$$

Here $G(t - t')$, the "unit impulse response" (a.k.a. "Green's function", as developed in Chapter 4 and used in Section 4.2.1), is a function which characterizes the system completely. The system is said to be matched to the particular forcing function $f$ if

$$G(t - t') = \overline{f(t' - t)}.$$

(Here the bar means complex conjugate.) In that case the system response to a generic forcing function $g(t)$ is

$$R(t) = \int_{-\infty}^{\infty} \overline{f(t' - t)}\,g(t')\,dt'.$$

A system characterized by such a unit impulse response is called a matched filter because its design is matched to the particular signal $f$. The response $R(t)$ is called the cross correlation between $f$ and $g$.

(a) Compute the total energy $\int_{-\infty}^{\infty} |R(t)|^2\,dt$ of the cross correlation $R$ in terms of the Fourier amplitudes $\hat{f}(\omega)$ and $\hat{g}(\omega)$.

(b) Consider the family of forcing functions $g$ and the corresponding family of normalized cross correlations (i.e., the corresponding responses of the system)

$$R_g(t) = \frac{\int_{-\infty}^{\infty} \overline{f(t' - t)}\,g(t')\,dt'}{\left(\int_{-\infty}^{\infty} |g(t')|^2\,dt'\right)^{1/2}}.$$

Show that

(i) $R_f(0)$ is the peak intensity, i.e., that $|R_g(t)|^2 \le |R_f(0)|^2$ for all $t$ and all $g$. (Nota bene: the function $R_f(t)$, corresponding to $g = f$, is called the auto correlation function of $f$.)

(ii) equality holds if the forcing function has the form $g(t') = c\,f(t' - t)$ for some constant $c$.

http://www.math.ohio-state.edu/~gerlach/math/BVtypset/node29.html

The Fourier Integral

Question: What aspect of nature is responsible for the pervasive importance of Fourier analysis?

Answer: Translation invariance. Suppose a linear system is invariant under time or space translations. Then that system's behaviour becomes particularly perspicuous, physically and mathematically, when it is described in terms of translation eigenfunctions, i.e., in terms of exponentials which oscillate under time or space translations. (Nota bene: real exponentials are also translation eigenfunctions, but they won't do because they blow up at $x = \infty$ or $x = -\infty$.) In other words, it is the translation invariance in nature which makes Fourier analysis possible and profitable.
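To make the translation-eigenfunction argument concrete, here is a discrete, periodic sketch (our own illustration). A circulant filter $y[n] = \sum_m h[m]\,x[(n - m) \bmod N]$ is linear and commutes with index shifts. Fed the oscillating exponential $e^{2\pi i p n/N}$, it returns the same exponential multiplied by a single complex number $H_p = \sum_m h[m]\,e^{-2\pi i p m/N}$. The impulse response `h`, the length `N`, and the mode `p` are arbitrary choices.

```python
import cmath

def circular_filter(h, x):
    """Translation-invariant (circulant) linear system: y[n] = sum_m h[m] x[(n - m) mod N]."""
    N = len(x)
    return [sum(h[m] * x[(n - m) % N] for m in range(len(h))) for n in range(N)]

N, p = 16, 3
wave = [cmath.exp(2j * cmath.pi * p * n / N) for n in range(N)]  # oscillating exponential
h = [0.5, -0.25, 0.125, 1.0]                                     # arbitrary impulse response

y = circular_filter(h, wave)
ratios = [y[n] / wave[n] for n in range(N)]   # the same complex number for every n
gain = sum(h[m] * cmath.exp(-2j * cmath.pi * p * m / N) for m in range(len(h)))
print(gain, max(abs(r - gain) for r in ratios))
```

The system therefore acts on each Fourier mode by plain multiplication, which is the practical payoff of describing it in terms of these eigenfunctions.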

http://www.ma.iup.edu/projects/CalcDEMma/fouriertrans/fouriertrans04.html

Fourier Integral Examples

Let's look at the Fourier integral representation of the pulse from -1 to 1.

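(* FourierParameters->{1,-1} gives F(w) = Integrate[f[t] Exp[-I w t], {t,-Infinity,Infinity}], the convention in which C(w) = F(w)/2 *)
FourierTransform[UnitStep[t+1]-UnitStep[t-1],t,w,FourierParameters->{1,-1}]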

Recall that C(w) is 1/2 F(w).

f[t_]:=1/Pi Integrate[Sin[w]/w Exp[I w t],{w,-Infinity,Infinity}]

f[t]

Do not be thrown off by the output. Look at the graph.

Plot[Evaluate[f[t]], {t,-2,2}];

Does the Fourier integral representation converge to the proper value at the jumps t = -1 and t = 1? (Ignore the warning messages you see.)

f[-1]

f[1]

Let's look at the Fourier integral representation of the function that is sin(t) from -3Pi to Pi and zero elsewhere.

FourierTransform[(UnitStep[t+3 Pi]-UnitStep[t-Pi])Sin[t],t,w,FourierParameters->{1,-1}]

Yuck! There is a valuable lesson to be learned here. It may be better to evaluate the Fourier transform from the definition instead of using the Mathematica command.

F[w_]:=Integrate[Sin[t] Exp[- I w t],{t,-3 Pi, Pi}]

F[w]

Simplify[ComplexExpand[F[w]]]

Recall that C(w) is 1/2 F(w).

g[t_]:=1/Pi Integrate[1/2 F[w] Exp[I w t],{w,-Infinity,Infinity}]

Plot[Evaluate[g[t]], {t,-6 Pi,3 Pi}];

Another valuable lesson. Look at the scale of your graph before you judge!

Plot[Evaluate[g[t]], {t,-6 Pi,3 Pi},PlotRange->{-2,2}];
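For readers without Mathematica, a rough numerical counterpart of `f[t]` for the pulse can be written in plain Python. This is a sketch under stated assumptions: the infinite integral $\frac{1}{\pi}\int_{-\infty}^{\infty}\frac{\sin w}{w}e^{iwt}\,dw$ is truncated at $|w| = 200$ and evaluated with Simpson's rule; since $\sin w / w$ is even, only the cosine part survives. The values should come out near 1 inside the pulse, near 1/2 at the jumps $t = \pm 1$, and near 0 outside.

```python
import math

def pulse_fourier_integral(t, w_max=200.0, n=40000):
    """Approximate f(t) = (1/pi) * integral_{-inf}^{inf} (sin w / w) e^{iwt} dw
    for the unit pulse on [-1, 1], truncating the integral at |w| = w_max.

    Because sin(w)/w is even, the imaginary part cancels and
    f(t) = (2/pi) * integral_0^{w_max} sin(w) cos(w t) / w dw."""
    if n % 2:
        n += 1  # composite Simpson's rule needs an even number of panels
    h = w_max / n

    def integrand(w):
        sinc = 1.0 if w == 0.0 else math.sin(w) / w
        return sinc * math.cos(w * t)

    total = integrand(0.0) + integrand(w_max)
    for j in range(1, n):
        total += (4 if j % 2 else 2) * integrand(j * h)
    return (2.0 / math.pi) * total * h / 3.0

for t in (-2.0, -1.0, 0.0, 0.5, 1.0, 2.0):
    print(t, round(pulse_fourier_integral(t), 3))
```

Raising `w_max` moves the values closer to 1, 1/2, and 0, but only slowly, because the integrand decays like $1/w$.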

http://aurora.phys.utk.edu/~forrest/papers/fourier/

An Introduction to Fourier Theory

by Forrest Hoffman


Table of Contents

Introduction
The Fourier Transform
The Two Domains
Fourier Transform Properties
    Scaling Property
    Shifting Property
    Convolution Theorem
    Correlation Theorem
Parseval's Theorem
Sampling Theorem
Aliasing
Discrete Fourier Transform (DFT)
Fast Fourier Transform (FFT)
Summary
References

Introduction

Linear transforms, especially Fourier and Laplace transforms, are widely used in solving problems in science and engineering. The Fourier transform is used in linear systems analysis, antenna studies, optics, random process modeling, probability theory, quantum physics, and boundary-value problems (Brigham, 2-3) and has been very successfully applied to restoration of astronomical data (Brault and White). The Fourier transform, a pervasive and versatile tool, is used in many fields of science as a mathematical or physical tool to alter a problem into one that can be more easily solved. Some scientists understand Fourier theory as a physical phenomenon, not simply as a mathematical tool. In some branches of science, the Fourier transform of one function may yield another physical function (Bracewell, 1-2).

The Fourier Transform

The Fourier transform, in essence, decomposes or separates a waveform or function into sinusoids of different frequency which sum to the original waveform. It identifies or distinguishes the different frequency sinusoids and their respective amplitudes (Brigham, 4). The Fourier transform of f(x) is defined as

$$F(s) = \int_{-\infty}^{\infty} f(x)\,e^{-i2\pi xs}\,dx.$$

Applying the same transform to F(s) gives

$$\int_{-\infty}^{\infty} F(s)\,e^{-i2\pi ws}\,ds = f(-w).$$

If f(x) is an even function of x, that is f(x) = f(-x), this double transform returns the original function, f(w). If f(x) is an odd function of x, that is f(x) = -f(-x), it returns the reflected function f(-w) = -f(w). When f(x) is neither even nor odd, it can often be split into even and odd parts.

To avoid confusion, it is customary to write the Fourier transform and its inverse so that they exhibit reversibility:

$$F(s) = \int_{-\infty}^{\infty} f(x)\,e^{-i2\pi xs}\,dx$$

$$f(x) = \int_{-\infty}^{\infty} F(s)\,e^{i2\pi xs}\,ds$$

so that

$$f(x) = \int_{-\infty}^{\infty}\left[\int_{-\infty}^{\infty} f(x')\,e^{-i2\pi x's}\,dx'\right] e^{i2\pi xs}\,ds$$

as long as the integral exists and any discontinuities are finite; at a discontinuity the double integral yields the midpoint value ½[f(x+) + f(x-)]. The Fourier transform is often represented by the operator $\mathcal{F}$, so that $\mathcal{F}\{f(x)\} = F(s)$ (Bracewell, 6-8).

There are functions for which the Fourier transform does not exist; however, most physical functions have a Fourier transform, especially if the transform represents a physical quantity. Other functions can be treated with Fourier theory as limiting cases. Many of the common theoretical functions are actually limiting cases in Fourier theory.

Usually functions or waveforms can be split into even and odd parts as follows

f(x) = E(x) + O(x)

where

E(x) = ½ [f(x) + f(-x)]

O(x) = ½ [f(x) - f(-x)]

and E(x), O(x) are, in general, complex. In this representation, the Fourier transform of f(x) reduces to

$$F(s) = 2\int_{0}^{\infty} E(x)\cos(2\pi xs)\,dx - 2i\int_{0}^{\infty} O(x)\sin(2\pi xs)\,dx.$$

It follows then that an even function has an even transform and that an odd function has

an odd transform. Additional symmetry properties are shown in Table 1 (Bracewell, 14).

Table 1: Symmetry Properties of the Fourier Transform

function | transform
----------------------------------------------------------
real and even | real and even
real and odd | imaginary and odd
imaginary and even | imaginary and even
complex and even | complex and even
complex and odd | complex and odd
real and asymmetrical | complex and asymmetrical
imaginary and asymmetrical | complex and asymmetrical
real even plus imaginary odd | real
real odd plus imaginary even | imaginary
even | even
odd | odd

An important case from Table 1 is that of a Hermitian function, one in which the real part is even and the imaginary part is odd, i.e., f(x) = f*(-x). The Fourier transform of a Hermitian function is real. In addition, the Fourier transform of the complex conjugate of a function f(x) is F*(-s), the reflection of the conjugate of the transform.
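Two rows of Table 1 can be checked with the discrete Fourier transform, where index $-n$ is read as $(N - n) \bmod N$. This sketch (our own, with random test data) confirms that a real sequence has $X(-r) = X^*(r)$ and that a Hermitian sequence, $x[n] = x^*[-n]$, has a purely real transform. The sequence length and random seed are arbitrary.

```python
import cmath
import random

def dft(x):
    """Direct DFT: X[r] = sum_n x[n] exp(-2 pi i r n / N)."""
    N = len(x)
    return [sum(x[n] * cmath.exp(-2j * cmath.pi * r * n / N) for n in range(N))
            for r in range(N)]

N = 8
rng = random.Random(1)

real_seq = [rng.uniform(-1, 1) for _ in range(N)]

# Hermitian sequence: x[n] = conj(x[-n]), with index -n read as (N - n) mod N
herm = [0j] * N
herm[0] = complex(rng.uniform(-1, 1), 0.0)
herm[N // 2] = complex(rng.uniform(-1, 1), 0.0)
for n in range(1, N // 2):
    z = complex(rng.uniform(-1, 1), rng.uniform(-1, 1))
    herm[n], herm[N - n] = z, z.conjugate()

X = dft(real_seq)
H = dft(herm)
print(max(abs(X[(N - r) % N] - X[r].conjugate()) for r in range(N)))  # real input: X(-s) = X*(s)
print(max(abs(v.imag) for v in H))                                      # Hermitian input: real transform
```

Both printed numbers should be at the level of floating-point round-off.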

The cosine transform of a function f(x) is defined as

$$F_C(s) = 2\int_{0}^{\infty} f(x)\cos(2\pi sx)\,dx.$$

This is equivalent to the Fourier transform if f(x) is an even function. In general, the even part of the Fourier transform of f(x) is the cosine transform of the even part of f(x). The cosine transform has a reverse transform given by

$$f(x) = 2\int_{0}^{\infty} F_C(s)\cos(2\pi sx)\,ds.$$

Likewise, the sine transform of f(x) is defined by

$$F_S(s) = 2\int_{0}^{\infty} f(x)\sin(2\pi sx)\,dx.$$

Correspondingly, i times the odd part of the Fourier transform of f(x) is the sine transform of the odd part of f(x).

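Combining the sine and cosine transforms of the even and odd parts of f(x) leads to the Fourier transform of the whole of f(x):

$$\mathcal{F}\{f(x)\} = \mathcal{F}_C\{E(x)\} - i\,\mathcal{F}_S\{O(x)\}$$

where $\mathcal{F}$, $\mathcal{F}_C$, and $\mathcal{F}_S$ stand for the Fourier transform, the cosine transform, and the sine transform, respectively. For a function that vanishes for x < 0, E(x) = O(x) = ½f(x) for x > 0, so this becomes

$$F(s) = \tfrac{1}{2}F_C(s) - \tfrac{1}{2}iF_S(s)$$

with $F_C$ and $F_S$ the cosine and sine transforms of f(x) itself (Bracewell, 17-18).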
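The relation $F(s) = \tfrac{1}{2}F_C(s) - \tfrac{1}{2}iF_S(s)$ can be checked numerically for a function that vanishes for $x < 0$. In this sketch the one-sided exponential $f(x) = e^{-x}$ ($x > 0$) is an assumed example; its full Fourier transform is $1/(1 + i2\pi s)$ and its cosine transform is $2/(1 + (2\pi s)^2)$. The cosine and sine transforms are computed with Simpson's rule, truncating the integral at $x = 40$.

```python
import math

def simpson(g, a, b, n=20000):
    """Composite Simpson's rule for the integral of g over [a, b]."""
    if n % 2:
        n += 1
    h = (b - a) / n
    total = g(a) + g(b)
    for j in range(1, n):
        total += (4 if j % 2 else 2) * g(a + j * h)
    return total * h / 3

def cosine_transform(f, s, upper=40.0):
    """F_C(s) = 2 * integral_0^inf f(x) cos(2 pi s x) dx, truncated at `upper`."""
    return 2 * simpson(lambda x: f(x) * math.cos(2 * math.pi * s * x), 0.0, upper)

def sine_transform(f, s, upper=40.0):
    """F_S(s) = 2 * integral_0^inf f(x) sin(2 pi s x) dx, truncated at `upper`."""
    return 2 * simpson(lambda x: f(x) * math.sin(2 * math.pi * s * x), 0.0, upper)

def decaying_exp(x):
    """exp(-x), used only for x >= 0; the full function is taken to be 0 for x < 0."""
    return math.exp(-x)

s = 0.3
combined = 0.5 * cosine_transform(decaying_exp, s) - 0.5j * sine_transform(decaying_exp, s)
exact = 1 / (1 + 2j * math.pi * s)
print(combined, exact)
```

For a function that does not vanish for negative x, the cosine and sine transforms must instead be applied to E(x) and O(x) separately.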

Since the Fourier transform F(s) is a frequency domain representation of a function f(x), the s characterizes the frequency of the decomposed cosinusoids and sinusoids and is equal to the number of cycles per unit of x (Bracewell, 18-21). If a function or waveform is not periodic, then the Fourier transform of the function will be a continuous function of frequency (Brigham, 4).

The Two Domains

It is often useful to think of functions and their transforms as occupying two domains. These domains are referred to as the upper and the lower domains in older texts, "as if functions circulated at ground level and their transforms in the underworld" (Bracewell, 135). They are also referred to as the function and transform domains, but in most physics applications they are called the time and frequency domains, respectively. Operations performed in one domain have corresponding operations in the other. For example, as will be shown below, the convolution operation in the time domain becomes a multiplication operation in the frequency domain, that is, $f(x) * g(x) \rightleftharpoons F(s)\,G(s)$. The reverse is also true: $F(s) * G(s) \rightleftharpoons f(x)\,g(x)$. Such theorems allow one to move between domains so that operations can be performed where they are easiest or most advantageous.

Fourier Transform Properties

Scaling Property

If $\mathcal{F}\{f(x)\} = F(s)$ and a is a real, nonzero constant, then

$$\mathcal{F}\{f(ax)\} = \int_{-\infty}^{\infty} f(ax)\,e^{-i2\pi sx}\,dx = |a|^{-1}\int_{-\infty}^{\infty} f(\xi)\,e^{-i2\pi (s/a)\xi}\,d\xi = |a|^{-1}F(s/a).$$

From this, the time scaling property, it is evident that if the width of a function is decreased while its height is kept constant, then its Fourier transform becomes wider and shorter. If its width is increased, its transform becomes narrower and taller.

A similar frequency scaling property is given by

$$\mathcal{F}\{|a|^{-1}f(x/a)\} = F(as).$$
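The time scaling property can be verified with a numerical Fourier integral. This sketch assumes the Gaussian $f(x) = e^{-\pi x^2}$, which under the $e^{-i2\pi sx}$ convention is its own transform, and approximates $\mathcal{F}\{f(ax)\}$ with the midpoint rule on $[-20, 20]$ (the window and sample count are our choices). The result is compared with $|a|^{-1}F(s/a)$, including a negative $a$.

```python
import cmath
import math

def fourier_transform(f, s, lo=-20.0, hi=20.0, samples=8000):
    """Midpoint-rule approximation of F(s) = integral f(x) exp(-i 2 pi s x) dx."""
    h = (hi - lo) / samples
    return h * sum(f(x) * cmath.exp(-2j * math.pi * s * x)
                   for x in (lo + (j + 0.5) * h for j in range(samples)))

def gauss(x):
    """exp(-pi x^2), whose transform is exp(-pi s^2)."""
    return math.exp(-math.pi * x * x)

def scaling_error(a, s):
    """|F{f(ax)}(s) - |a|^{-1} F(s/a)| for the Gaussian test function."""
    lhs = fourier_transform(lambda x: gauss(a * x), s)
    return abs(lhs - gauss(s / a) / abs(a))

for a in (2.0, -0.5, 3.0):
    print(a, max(scaling_error(a, s) for s in (0.0, 0.4, 1.1)))
```

With a = 3 the function narrows and its transform widens and flattens by the factor 1/3, exactly the trade-off described above.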

Shifting Property

If $\mathcal{F}\{f(x)\} = F(s)$ and $x_0$ is a real constant, then

$$\mathcal{F}\{f(x - x_0)\} = \int_{-\infty}^{\infty} f(x - x_0)\,e^{-i2\pi sx}\,dx = \int_{-\infty}^{\infty} f(\xi)\,e^{-i2\pi s(\xi + x_0)}\,d\xi = e^{-i2\pi x_0 s}\int_{-\infty}^{\infty} f(\xi)\,e^{-i2\pi s\xi}\,d\xi = F(s)\,e^{-i2\pi x_0 s}.$$

This time shifting property states that the Fourier transform of a shifted function is just the transform of the unshifted function multiplied by an exponential factor having a linear phase.

Likewise, the frequency shifting property states that if F(s) is shifted by a constant $s_0$, its inverse transform is multiplied by $e^{i2\pi xs_0}$:

$$\mathcal{F}\{f(x)\,e^{i2\pi xs_0}\} = F(s - s_0).$$
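The discrete Fourier transform obeys exact analogues of both shifting properties, which makes them easy to check. In this sketch (illustrative data, our own helper names) a circular shift by m samples multiplies the DFT by the linear-phase factor $e^{-2\pi i r m/N}$, and modulating by $e^{2\pi i r_0 n/N}$ shifts the DFT by $r_0$ bins.

```python
import cmath

def dft(x):
    """Direct DFT: X[r] = sum_n x[n] exp(-2 pi i r n / N)."""
    N = len(x)
    return [sum(x[n] * cmath.exp(-2j * cmath.pi * r * n / N) for n in range(N))
            for r in range(N)]

x = [1.0, 3.0, -2.0, 0.5, 4.0, -1.0, 0.0, 2.5]
N, m, r0 = len(x), 3, 2

shifted = [x[(n - m) % N] for n in range(N)]                                  # analogue of f(x - x0)
modulated = [x[n] * cmath.exp(2j * cmath.pi * r0 * n / N) for n in range(N)]  # analogue of f(x) e^{i 2 pi x s0}

X, Y, Z = dft(x), dft(shifted), dft(modulated)
print(max(abs(Y[r] - X[r] * cmath.exp(-2j * cmath.pi * r * m / N)) for r in range(N)))
print(max(abs(Z[r] - X[(r - r0) % N]) for r in range(N)))
```

Because the DFT treats the sequence as periodic, the shift has to wrap around; a shift that pushes samples off the end would not satisfy the identity.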

Convolution Theorem

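We now derive the aforementioned time convolution theorem. Suppose that $g(x) = f(x) * h(x)$. Then, given that $\mathcal{F}\{g(x)\} = G(s)$, $\mathcal{F}\{f(x)\} = F(s)$, and $\mathcal{F}\{h(x)\} = H(s)$,

$$G(s) = \mathcal{F}\{f(x) * h(x)\} = \mathcal{F}\left\{\int_{-\infty}^{\infty} f(\tau)\,h(x - \tau)\,d\tau\right\}$$

$$= \int_{-\infty}^{\infty}\left[\int_{-\infty}^{\infty} f(\tau)\,h(x - \tau)\,d\tau\right] e^{-i2\pi sx}\,dx$$

$$= \int_{-\infty}^{\infty} f(\tau)\left[\int_{-\infty}^{\infty} h(x - \tau)\,e^{-i2\pi sx}\,dx\right] d\tau$$

$$= H(s)\int_{-\infty}^{\infty} f(\tau)\,e^{-i2\pi s\tau}\,d\tau$$

$$= F(s)\,H(s).$$

The bracketed inner integral is the transform of the shifted function $h(x - \tau)$, which by the shifting property equals $H(s)\,e^{-i2\pi s\tau}$.

This extremely powerful result demonstrates that the Fourier transform of a convolution is simply given by the product of the individual transforms, that is

$$\mathcal{F}\{f(x) * h(x)\} = F(s)\,H(s).$$

Using a similar derivation, it can be shown that the Fourier transform of a product is given by the convolution of the individual transforms, that is

$$\mathcal{F}\{f(x)\,h(x)\} = F(s) * H(s).$$

This is the statement of the frequency convolution theorem (Gaskill, 194-197; Brigham, 60-65).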
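Both convolution theorems have exact discrete counterparts, sketched below with arbitrary test sequences. One assumption to keep in mind: the DFT version applies to circular (periodic) convolution, and the product theorem picks up a factor 1/N because this DFT is unnormalized. Zero-padding the inputs would be needed to reproduce an ordinary linear convolution.

```python
import cmath

def dft(x):
    """Direct DFT: X[r] = sum_n x[n] exp(-2 pi i r n / N)."""
    N = len(x)
    return [sum(x[n] * cmath.exp(-2j * cmath.pi * r * n / N) for n in range(N))
            for r in range(N)]

def circular_convolution(f, h):
    """(f * h)[n] = sum_k f[k] h[(n - k) mod N]: the periodic analogue of the convolution integral."""
    N = len(f)
    return [sum(f[k] * h[(n - k) % N] for k in range(N)) for n in range(N)]

f = [1.0, 2.0, 0.0, -1.0, 0.5, 0.0]
h = [0.25, 0.5, 0.25, 0.0, 0.0, 0.0]
N = len(f)
F, Hs = dft(f), dft(h)

# Time convolution theorem: DFT(f * h) = F H
lhs = dft(circular_convolution(f, h))
rhs = [a * b for a, b in zip(F, Hs)]

# Frequency convolution theorem (discrete form): DFT(f h) = (1/N) (F * H)
lhs2 = dft([a * b for a, b in zip(f, h)])
rhs2 = [v / N for v in circular_convolution(F, Hs)]

print(max(abs(a - b) for a, b in zip(lhs, rhs)), max(abs(a - b) for a, b in zip(lhs2, rhs2)))
```

In practice the product side is computed with an FFT, which is what makes fast convolution worthwhile.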

Correlation Theorem

The correlation integral, like the convolution integral, is important in theoretical and practical applications. The correlation integral is defined as

$$h(x) = \int_{-\infty}^{\infty} f(u)\,g(x + u)\,du$$

and, like the convolution integral, it forms a Fourier transform pair; for real-valued f(x) this pair is

$$\mathcal{F}\{h(x)\} = F^*(s)\,G(s).$$

This is the statement of the correlation theorem. If f(x) and g(x) are the same function, the integral above is normally called the autocorrelation function, and the cross-correlation function if they differ (Brigham, 65-69). The Fourier transform pair for the autocorrelation of a real function is simply

$$\int_{-\infty}^{\infty} f(u)\,f(x + u)\,du \rightleftharpoons |F(s)|^2.$$
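A discrete check of the correlation theorem, using circular correlation $h[n] = \sum_u f[u]\,g[(n + u) \bmod N]$ on real test sequences (our own example data), follows. The DFT of the cross-correlation should equal $F^*(r)\,G(r)$, and the DFT of the autocorrelation should equal $|F(r)|^2$.

```python
import cmath

def dft(x):
    """Direct DFT: X[r] = sum_n x[n] exp(-2 pi i r n / N)."""
    N = len(x)
    return [sum(x[n] * cmath.exp(-2j * cmath.pi * r * n / N) for n in range(N))
            for r in range(N)]

def circular_correlation(f, g):
    """h[n] = sum_u f[u] g[(n + u) mod N]: periodic analogue of the correlation integral."""
    N = len(f)
    return [sum(f[u] * g[(n + u) % N] for u in range(N)) for n in range(N)]

f = [1.0, -0.5, 2.0, 0.0, 1.5, -1.0, 0.25, 0.0]   # real test sequences
g = [0.0, 1.0, 0.5, -2.0, 0.0, 0.75, 1.0, -0.5]
F, G = dft(f), dft(g)

cross = dft(circular_correlation(f, g))
auto = dft(circular_correlation(f, f))
cross_err = max(abs(c - F[r].conjugate() * G[r]) for r, c in enumerate(cross))
auto_err = max(abs(a - abs(F[r]) ** 2) for r, a in enumerate(auto))
print(cross_err, auto_err)
```

The autocorrelation result is the discrete form of the Wiener-Khintchine relation: its transform is the power spectrum.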

Parseval's Theorem

Parseval's Theorem states that the total energy of a signal represented by a function h(t) is the same whether computed in signal space or frequency (transform) space; that is,

$$\int_{-\infty}^{\infty} |h(t)|^2\,dt = \int_{-\infty}^{\infty} |H(f)|^2\,df$$

(Brigham, 23). The power spectrum, P(f), is given by

$$P(f) = |H(f)|^2, \qquad -\infty < f < \infty.$$
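For sampled data, Parseval's theorem takes the form $\sum_n |h_n|^2 = \frac{1}{N}\sum_r |H_r|^2$, where the 1/N comes from using an unnormalized DFT. The sketch below checks this on an arbitrary test sequence and also forms the discrete power spectrum $P_r = |H_r|^2$.

```python
import cmath

def dft(x):
    """Direct DFT: X[r] = sum_n x[n] exp(-2 pi i r n / N)."""
    N = len(x)
    return [sum(x[n] * cmath.exp(-2j * cmath.pi * r * n / N) for n in range(N))
            for r in range(N)]

h = [0.3, -1.2, 2.0, 0.0, 0.7, -0.4, 1.1, 0.5, -0.9, 0.2]   # arbitrary test signal
H = dft(h)
N = len(h)

energy_time = sum(abs(v) ** 2 for v in h)
power_spectrum = [abs(v) ** 2 for v in H]   # P_r = |H_r|^2
energy_freq = sum(power_spectrum) / N       # 1/N because this DFT is unnormalized
print(energy_time, energy_freq)
```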

Sampling Theorem

A bandlimited signal is a signal, f(t), which has no spectral components beyond a frequency B Hz; that is, F(s) = 0 for |s| > B when s is measured in hertz (equivalently, for |ω| > 2πB in radians per second). The sampling theorem states that a real signal, f(t), which is bandlimited to B Hz can be reconstructed without error from samples taken uniformly at a rate R > 2B samples per second. This minimum sampling frequency, $f_s = 2B$ Hz, is called the Nyquist rate or the Nyquist frequency. The corresponding sampling interval, T = 1/(2B) (where t = nT), is called the Nyquist interval. A signal bandlimited to B Hz which is sampled at less than the Nyquist frequency of 2B, i.e., which was sampled at an interval T > 1/(2B), is said to be undersampled.
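Reconstruction "without error" refers to the standard sinc (Whittaker-Shannon) interpolation formula $f(t) = \sum_n f(nT)\,\mathrm{sinc}((t - nT)/T)$. The sketch below applies it to an assumed test signal bandlimited to B = 3 Hz and sampled at R = 10 Hz, which is above 2B. Only a finite window of 4001 samples is used, so the reconstruction is approximate, with error shrinking as more samples are included.

```python
import math

def sinc(u):
    """Normalized sinc: sin(pi u) / (pi u), with sinc(0) = 1."""
    return 1.0 if u == 0 else math.sin(math.pi * u) / (math.pi * u)

def reconstruct(samples, T, n0, t):
    """Whittaker-Shannon interpolation sum_n f(nT) sinc((t - nT) / T), where
    samples[j] holds f((n0 + j) T); truncated to the samples supplied."""
    return sum(v * sinc((t - (n0 + j) * T) / T) for j, v in enumerate(samples))

def signal(t):
    """Hypothetical test signal bandlimited to B = 3 Hz."""
    return math.sin(2 * math.pi * 3.0 * t) + 0.5 * math.cos(2 * math.pi * 1.0 * t)

rate = 10.0          # samples per second, above the Nyquist rate 2B = 6
T = 1.0 / rate
n0, count = -2000, 4001
samples = [signal((n0 + j) * T) for j in range(count)]

for t in (0.123, -0.37, 1.05):
    print(t, reconstruct(samples, T, n0, t), signal(t))
```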

Aliasing

A number of practical difficulties are encountered in reconstructing a signal from its samples. The sampling theorem assumes that a signal is bandlimited. In practice, however, signals are timelimited, not bandlimited. As a result, determining an adequate sampling frequency which does not lose desired information can be difficult. When a signal is undersampled, its spectrum has overlapping tails; that is, F(s) no longer has complete information about the spectrum and it is no longer possible to recover f(t) from the sampled signal. In this case, the tailing spectrum does not go to zero, but is folded back onto the apparent spectrum. This inversion of the tail is called spectral folding or aliasing (Lathi, 532-535).
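Aliasing is easy to demonstrate. In this sketch (our own example), a 7 Hz cosine sampled at 10 Hz, which is below its Nyquist rate of 14 Hz, produces exactly the same samples as a 3 Hz cosine: the 7 Hz component has been folded about half the sampling rate to 10 - 7 = 3 Hz. The helper `folded_frequency` is a hypothetical name for the fold-back rule applied to a single tone.

```python
import math

def folded_frequency(f, rate):
    """Apparent frequency of a tone at f Hz after sampling at `rate` samples/s."""
    return abs(f - rate * round(f / rate))

rate = 10.0                   # samples per second
f_true, f_alias = 7.0, 3.0    # 7 Hz lies above rate/2 = 5 Hz and folds to 3 Hz
n_samples = 50

high = [math.cos(2 * math.pi * f_true * n / rate) for n in range(n_samples)]
low = [math.cos(2 * math.pi * f_alias * n / rate) for n in range(n_samples)]
max_diff = max(abs(a - b) for a, b in zip(high, low))
print(max_diff, folded_frequency(f_true, rate))
```

Once the samples are taken, no processing can tell the two tones apart, which is why the anti-aliasing decision must be made before sampling.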

Figure 1: Undersampled, oversampled, and critically-sampled unit area gaussian curves.

As an example, Figure 1 shows a unit-area Gaussian curve sampled at three different rates. The FFT (Fast Fourier Transform) of the undersampled Gaussian appears flattened and its tails do not reach zero. This is a result of aliasing: additional spectral components have been folded back onto the ends of the spectrum, or added to the edges, to produce this curve.

The FFT of the oversampled Gaussian reaches zero very quickly. Much of its spectrum is zero and is not needed to reconstruct the original Gaussian.

Finally, the FFT of the critically-sampled Gaussian has tails which reach zero at their ends. The data in the spectrum of the critically-sampled Gaussian are just sufficient to reconstruct the original. This Gaussian was sampled at the Nyquist frequency.

Figure 1 was generated using IDL with the following code:

; gauss (below) must be compiled first, e.g. saved as gauss.pro on the IDL path
!P.Multi=[0,3,2]         ; 3 columns x 2 rows of plots on one page
a=gauss(256,2.0,2)       ; undersampled
fa=fft(a,-1)             ; forward FFT
b=gauss(256,2.0,0.1)     ; oversampled
fb=fft(b,-1)
c=gauss(256,2.0,0.8)     ; critically sampled
fc=fft(c,-1)
plot,a,title='!6Undersampled Gaussian'
plot,b,title='!6Oversampled Gaussian'
plot,c,title='!6Critically-Sampled Gaussian'
; shift by 128 (= 256/2) moves zero frequency to the center of each plot
plot,shift(abs(fa),128),title='!6FFT of Undersampled Gaussian'
plot,shift(abs(fb),128),title='!6FFT of Oversampled Gaussian'
plot,shift(abs(fc),128),title='!6FFT of Critically-Sampled Gaussian'

The gauss function is as follows:

function gauss,dim,fwhm,interval
;
; gauss - produce a normalized gaussian curve centered in dim data
;         points with a full width at half maximum of fwhm, sampled
;         with a periodicity of interval
;
; dim      = the number of points
; fwhm     = full width at half maximum of the gaussian
; interval = sampling interval
;
center=dim/2.0                        ; automatically center gaussian in dim points
x=findgen(dim)-center                 ; sample index measured from the center
sigma=fwhm/sqrt(8.0 * alog(2.0))      ; fwhm and sigma are in the same units as interval
coeff=1.0 / ( sqrt(2.0*!Pi) * (sigma/interval) )   ; normalize to unit area
data=coeff * exp( -(interval * x)^2.0 / (2.0*sigma^2.0) )
return,data
end

Discrete Fourier Transform (DFT)

Because a digital computer works only with discrete data, numerical computation of the Fourier transform of f(t) requires discrete sample values of f(t), which we will call $f_k$. In addition, a computer can compute the transform F(s) only at discrete values of s, that is, it can only provide discrete samples of the transform, $F_r$. If $f(kT)$ and $F(rs_0)$ are the kth and rth samples of f(t) and F(s), respectively, and $N_0$ is the number of samples in the signal in one period $T_0$, then

$$f_k = T f(kT) = \frac{T_0}{N_0} f(kT)$$

and

$$F_r = F(rs_0)$$

where

$$s_0 = \frac{2\pi}{T_0}$$

is the fundamental frequency in radians per unit time. The discrete Fourier transform (DFT) is defined as

$$F_r = \sum_{k=0}^{N_0 - 1} f_k\,e^{-ir\Omega_0 k}$$

where $\Omega_0 = 2\pi/N_0$. Its inverse is

$$f_k = \frac{1}{N_0}\sum_{r=0}^{N_0 - 1} F_r\,e^{ir\Omega_0 k}.$$

These equations can be used to compute transforms and inverse transforms of appropriately sampled data. Proofs of these relationships are in Lathi (546-548).
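The DFT pair above translates directly into code. This minimal sketch (not Hoffman's code) uses the same symbols: $\Omega_0 = 2\pi/N_0$ and samples $f_k = T f(kT)$. The test signal, one period ($T_0 = 2$) of $\cos(2\pi t/T_0)$ sampled at $N_0 = 16$ points, is an assumption; its energy should land entirely in $F_1$ and $F_{N_0-1}$, each equal to $T_0/2$.

```python
import cmath
import math

def dft(f):
    """F_r = sum_k f_k exp(-i r Omega0 k), with Omega0 = 2 pi / N0."""
    N0 = len(f)
    omega0 = 2 * math.pi / N0
    return [sum(f[k] * cmath.exp(-1j * r * omega0 * k) for k in range(N0)) for r in range(N0)]

def idft(F):
    """f_k = (1/N0) sum_r F_r exp(i r Omega0 k)."""
    N0 = len(F)
    omega0 = 2 * math.pi / N0
    return [sum(F[r] * cmath.exp(1j * r * omega0 * k) for r in range(N0)) / N0 for k in range(N0)]

T0, N0 = 2.0, 16            # one period of length T0 sampled at N0 points
T = T0 / N0                 # sampling interval
f_k = [T * math.cos(2 * math.pi * k * T / T0) for k in range(N0)]   # f_k = T f(kT)
F_r = dft(f_k)
back = idft(F_r)

print([round(abs(v), 6) for v in F_r])
print(max(abs(b - a) for a, b in zip(f_k, back)))   # round-trip error
```

This direct evaluation costs on the order of $N_0^2$ operations, which is what the FFT improves on.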

Fast Fourier Transform (FFT)

The Fast Fourier Transform (FFT) is a DFT algorithm developed by Cooley and Tukey in 1965 which reduces the number of computations from something on the order of $N_0^2$ to $N_0 \log_2 N_0$. There are basically two types of Cooley-Tukey FFT algorithms in use: decimation-in-time and decimation-in-frequency. The algorithm is simplified if $N_0$ is chosen to be a power of 2, but this is not a requirement.
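The decimation-in-time idea fits in a few lines. This sketch is a textbook recursive radix-2 version written for clarity, not speed, and it assumes the length is a power of 2. It splits the input into even- and odd-indexed halves, transforms each, and combines them with twiddle factors $e^{-2\pi i k/N}$. A direct $O(N^2)$ DFT is included only to check the result.

```python
import cmath

def fft(x):
    """Recursive radix-2 decimation-in-time FFT; len(x) must be a power of 2."""
    N = len(x)
    if N == 1:
        return [complex(x[0])]
    if N % 2:
        raise ValueError("this sketch only handles power-of-2 lengths")
    even = fft(x[0::2])   # transform of the even-indexed samples
    odd = fft(x[1::2])    # transform of the odd-indexed samples
    out = [0j] * N
    for k in range(N // 2):
        twiddle = cmath.exp(-2j * cmath.pi * k / N) * odd[k]
        out[k] = even[k] + twiddle            # butterfly: upper output
        out[k + N // 2] = even[k] - twiddle   # butterfly: lower output
    return out

def dft(x):
    """Direct O(N^2) DFT, used here only as a reference."""
    N = len(x)
    return [sum(x[n] * cmath.exp(-2j * cmath.pi * r * n / N) for n in range(N))
            for r in range(N)]

data = [float((7 * n) % 11) - 5.0 for n in range(64)]
print(max(abs(a - b) for a, b in zip(fft(data), dft(data))))
```

Each level of recursion does O(N) work and there are $\log_2 N$ levels, giving the $N_0 \log_2 N_0$ count quoted above.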

Summary

The Fourier transform, an invaluable tool in science and engineering, has been introduced and defined. Its symmetry and computational properties have been described and the significance of the time or signal space (or domain) vs. the frequency or spectral domain has been mentioned. In addition, important concepts in sampling required for the understanding of the sampling theorem and the problem of aliasing have been discussed. An example of aliasing was provided along with a short description of the discrete Fourier transform (DFT) and its popular offspring, the Fast Fourier Transform (FFT) algorithm.

References

Blass, William E. and Halsey, George W., 1981, Deconvolution of Absorption Spectra, New York: Academic Press, 158 pp.

Bracewell, Ron N., 1965, The Fourier Transform and Its Applications, New York: McGraw-Hill Book Company, 381 pp.

Brault, J. W. and White, O. R., 1971, The analysis and restoration of astronomical data via the fast Fourier transform, Astron. & Astrophys., 13, pp. 169-189.

Brigham, E. Oren, 1988, The Fast Fourier Transform and Its Applications, Englewood Cliffs, NJ: Prentice-Hall, Inc., 448 pp.

Cooley, J. W. and Tukey, J. W., 1965, An algorithm for the machine calculation of complex Fourier series, Mathematics of Computation, 19, 90, pp. 297-301.

Gabel, Robert A. and Roberts, Richard A., 1973, Signals and Linear Systems, New York: John Wiley & Sons, 415 pp.

Gaskill, Jack D., 1978, Linear Systems, Fourier Transforms, and Optics, New York: John Wiley & Sons, 554 pp.

Lathi, B. P., 1992, Linear Systems and Signals, Carmichael, Calif: Berkeley-Cambridge Press, 656 pp.

Physics 641- Instrument Design and Signal Enhancement / Forrest Hoffman