1 Introduction - Electrical and Computer Engineering

advertisement

MK&RE® Telecommunications

Linear Orthogonal Error Correcting Codes

Abstract

Coding theory is the branch of mathematics concerned with transmitting data across

noisy channels and recovering the message. Coding theory is about making messages

easy to read: don't confuse it with cryptography, which is the art of making messages

hard to read! The key challenge coding theorists face is to construct "good" codes and

efficient algorithms for encoding and decoding them.

Following section 2 of this report, the reader may be more familiar with basic theory and

mathematics behind error coding science. We assume that our message is in the form of

binary digits or bits, strings of 0 or 1. We have to transmit these bits along a channel

(such as a telephone line) in which errors occur randomly, but at a predictable overall

rate.

To compensate for the errors we need to transmit more bits than there are in the original

message. A more conventional example is the typical spell checker. A spell checker

examines each word in your document, then compares it to the ones found in its memory.

If the word in your document is not found, the computer warns you. This is an example

of an error detecting code.

Our program which is developed in C++ gives the user the chance to generate, view, and

cross-reference the M-value and the actual “error-correction codes” for the particular

system that he/she is studying. The input parameters are n, q, and dmin. Please refer to

section 2 for more explanations of the symbols. The results are tested to be orthogonal

and fairly consistent compared to the theoretical calculations, using code bounds.

An integer value for k means the code is linear, whereas a real number means the code is

non-linear. For the orthogonal codes, looking at the chart reveals, once again, that for q =

Reza Esmailzadeh

Page 1 of 23

Mike Kooznetsoff

MK&RE® Telecommunications

Linear Orthogonal Error Correcting Codes

2 the codes are linear, but for q = 3, the codes are mostly linear as well, except for the

cases when n = 9 and dmin = 4, 5, and 6. At first glance, it would appear that for the q = 4

case, the codes are partly non-linear, due to the fact that about half the values of k are real

numbers, as opposed to integers. But, if the k value is calculated using q = 2 in the above

equation, all the k values are indeed integers, making the code words all linear. This

expresses a unique property of code words generated using the number base q = 4.

The orthogonal and non-orthogonal programs were both run for q values of 2, 3, and 4.

For each of these q values, n and dmin were both chosen to be between 2 and 9, and all

possible combinations of n and dmin were tried. The programs displayed all the code

words fitting these three parameters, as well as M, the total number of code words. By

using the relation:

k = logq M -> q^k = M -> log10 q^k = log10 M -> k = log10 M / log10 q

At appendix D, our main project results are tabulated consistently, using the n, q, and

dmin as given parameters. The tables will give the resulting M-value that the user can

use to double-check his/her work. Also, by running the C++ codes attached to

Appendices A&B, the user could generate and get a printout of the error correction codes,

in only a few seconds.

Reza Esmailzadeh

Page 2 of 23

Mike Kooznetsoff

MK&RE® Telecommunications

Linear Orthogonal Error Correcting Codes

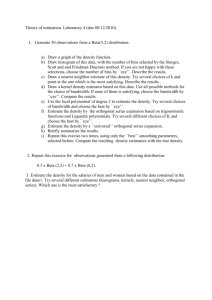

1 Introduction

MK&RE® Telecommunications is a university group consisting of two electrical

engineering undergraduate students, supervised by Dr. Aaron Gulliver. Our research

focus is in developing computerized methods for creating Linear Binary/Non-Binary

Orthogonal Error Correction Codes, applied intensively in communications research.

The time duration for this work is four months as per University of Victoria’s

ELEC/CENG 499A course guidelines.

Wireless is revolutionizing telecommunications; new devices and personal connectivity

together will drive the wireless future - no cables allowed. The global demand for

wireless technologies is booming and is impacting our lives and business environments.

We are living in information driven world where people require flexible access to a

network in any conceivable situation. People no longer work in the traditional office,

they also work while sitting in traffic, riding a cab, or relaxing in a hotel room.

One of the most important topics in the field of communications is the theory of Error

Detection and Correction. The idea relies on the fact that any digital data, transferred

through channel of any sort, would be subjected to channel noise and errors. Error

correction theory came into existence shortly after the Second World War, and has

improved greatly, catching up with the wireless communication systems today. One

major task of a scientist in this field is to develop reliable and efficient “error-correction

codes”, in order to mathematically detect and correct any errors that occur at the receiver.

Up to now, things have not been easily done, since complex matrices and vector algebra

are not pleasant tools to utilize, due to their time-consuming and eye-tiring mathematics.

As an example, the error correction codes of a typical 16-bit system will require the

scientist to write 216-1 incrementing code vectors, provided that no mistakes occur in

locating the digits at their appropriate positions, then check for their linearity and

orthogonality to pick the certain code words, which satisfy all the requirements.

Reza Esmailzadeh

Page 3 of 23

Mike Kooznetsoff

MK&RE® Telecommunications

Linear Orthogonal Error Correcting Codes

The MK&RE® Telecommunications project focused on developing a C program, which

generates the set of orthogonal and linear error correction codes, given the following

parameters:

n

length of the code word (i.e. the number of bits),

q

the arithmetic base or MODULO,

dmin

the minimum Hamming distance (number of different bits) between any

two code words.

The designed program would work correctly for any given values of (n, q ,dmin), as tested

and verified accurately, using the Bloodshed Dev C++ Software compiler.

This document outlines the work conducted for the MK&RE design project, and may be

broken down into four major sections:

Background Information

Software Design and Details

Results and Discussions

Conclusions

The first section of this report shall provide background knowledge of coding theory,

greedy codes, and the exact mathematical meaning of the four basic parameters, dot

products, MOD addition, orthogonality, and linearity. The discussion will then introduce

three of the more popular coding schemes, and will eventually focus on greedy codes

algorithm.

The second section presents an in-depth explanation of software design, the tests

performed, the functions of the different sections of the program, the results, optimization

techniques used, and recommendations for the future. Our designed program may be

thought of more as a successful method of creating error correction codes, as well as a

more practical tool for research. Additional information would also be provided in the

second section, giving a precise illustration of the meaning of our results.

The conclusion will give a few other examples, and discuss the project as a whole.

Reza Esmailzadeh

Page 4 of 23

Mike Kooznetsoff

MK&RE® Telecommunications

Linear Orthogonal Error Correcting Codes

2 Background Information

This portion of the report will emphasize on the Coding Theory, Self-orthogonal Codes,

Greedy Algorithm, Linear Codes, Orthogonal Codes, and Exact Interpretation of

Parameters.

2.1 Error Coding Theory

Coding theory is the branch of mathematics concerned with transmitting data across

noisy channels and recovering the message. Coding theory is about making messages

easy to read: don't confuse it with cryptography, which is the art of making messages

hard to read!

We assume that our message is in the form of binary digits or bits, strings of 0 or 1. We

have to transmit these bits along a channel (such as a telephone line) in which errors

occur randomly, but at a predictable overall rate. To compensate for the errors we need to

transmit more bits than there are in the original message.

Error correction coding is used to combat distortions that occur in the transmission and

storage of data. Encoding introduces redundancy into a stream of data, and decoding uses

the redundancy to correct errors and extract the original data. The key challenge coding

theorists face is to construct "good" codes and efficient algorithms for encoding and

decoding them. In the last five years a new approach, called iterative decoding, was

developed which is closely related to Bayesian inference and belief propagation

algorithms. Iterative decoding is a tremendous improvement over previous decoding

algorithms, both in efficiency and in the decoding success rate.

The “iterative algorithm” is not well understood mathematically, and the construction of

good codes is an active area of research. In particular there is a fundamental debate over

whether the best codes will be found by systematic methods or using randomly generated

codes.

Reza Esmailzadeh

Page 5 of 23

Mike Kooznetsoff

MK&RE® Telecommunications

Linear Orthogonal Error Correcting Codes

Figure 1: Typical Transmission System

The simplest method for detecting errors in binary data is the parity code, which

transmits an extra "parity" bit after every 7 bits from the source message. However, this

method can only detect errors, the only way to correct them is to ask for the data to be

transmitted again!

A simple way to correct as well as detect errors is to repeat each bit a set number of

times. The recipient sees which value, 0 or 1, occurs more often and assumes that that

was the intended bit. The scheme can tolerate error rates up to 1 error in every 2 bits

transmitted at the expense of increasing the amount of repetition.

From the definition, an error-correction code is capable of detecting up to (dmin – 1)

errors, and correcting up to FLOOR{(dmin-1) / 2}. Please refer to Table 1 for a better

cross-reference.

Code’s dmin

# of Detectable Errors (t)

# of Correctable Errors ()

1

0

0

2

1

0

3

2

1

4

3

1

5

4

2

6

5

2

Reza Esmailzadeh

Page 6 of 23

Mike Kooznetsoff

MK&RE® Telecommunications

7

Linear Orthogonal Error Correcting Codes

6

3

Table 1: Relation of dmin with the Capability for Detection and Correction

2.2 Major Error- Coding Theory Problem

A well-developed (n, M, dmin) code (M being the number of code words) would have

small n in order to increase the transmission speed, high M value so that transmission of a

wide variety of messages would be feasible, and finally high dmin in order to be able to

correct many errors. As a matter of fact, coding theory is focused on optimizing one of

the three parameters for given values of the other two.

The major issue here is to find the most optimized code (i.e. the largest value of M, such

that there exists a q-ary (n, M ,dmin) code), given certain n and dmin, denoted by Aq(n,

dmin). Our program gets fed through a series of values for n, q, and dmin, all of which are

entered in by the user. Then, through a series of processes, the error-correction codes,

along with the M-value would be displayed on the screen, as well as being saved in to a

text file.

Example The results may then be used to generate the G-matrix, and the H-matrix, for

syndrome decoding, among with other decoding techniques, as showed on Table 2. The

syndromes are calculated via S(r) = r HT . If the vector r is the received code word, we

would calculate S(r) and match to the first column of the Table 2.

SYNDROME S

COSET FUNCTION f(z)

000 = 0

00000

110 = 6

10000

011 = 3

01000

100 = 4

00100

010 = 2

00010

Reza Esmailzadeh

Page 7 of 23

Mike Kooznetsoff

MK&RE® Telecommunications

Linear Orthogonal Error Correcting Codes

001 =1

00001

Table 2: Syndrome Decoding Cross-Reference

If the received vector doesn’t not appear, we would ask for another try of transmission,

otherwise, we would find the matching “coset function”. Since S(r) = r HT = e HT,

where e is the error pattern, then we could easily subtract e to the received code word r,

in order to achieve the corrected transmitted code word, c.

This project utilized the conception of “Greedy Algorithm” to generate the error

correction codes, using the three key parameters.

2.3 Orthogonal and Self-Orthogonal Codes/Matrices

We will use the following notations from this point on:

Vn

n

k

(n,k)

x

m

H

G

Ik

A

The vector space of n-tuples with elements from Z2.

The length of the code.

The dimension of the codes subspace and the length of the message words.

A linear code of length n and dimension k.

The code vector with elements x1,...,xn written x = [x1x2...xn].

The message vector with elements m1,...,mk written m = [m1m2...mk].

The “n-k x n” parity check matrix.

The “k x n” generator matrix

The “k x k” identity matrix.

The “k x n-k” matrix with transpose AT

Orthogonal codes are vector spaces, at which all the code words are orthogonal to the

other code words inside the space.

Self-orthogonal codes are orthogonal codes, in which every single code word is also

orthogonal to itself. This could be easily defined as the inner product of any two code

words being zero.

For example, in (4,2) at GF(2), the two code words <1001> and <0001> are

“orthogonal”, since:

Reza Esmailzadeh

Page 8 of 23

Mike Kooznetsoff

MK&RE® Telecommunications

Linear Orthogonal Error Correcting Codes

<1001> . <0001> = (1*1) + (0*0) + (0*0) + (1*1) = 2MOD2 = 0

However, 1111 and 1110 are “non-orthogonal”, since:

<1111> . <1110> = (1*1) + (1*1) + (1*1) + (0*0) = 3MOD2 = 1 ≠ 0

The dot products between two code words (code vectors) could be done in either binary

or non-binary formats.

For the “binary” case, the dot product is essentially a summation of the AND

operation between the same weighted bits. In this case,

uv . ij = 0, if ui = vj = 1

uv . ij = 1, if ui = 0 or vj = 0

For the “non-binary” case, the dot product is a summation of arithmetic products

between the same-weighted bits. For example, for a (3,8) code:

<143> . <127> = (1*1) + (4*2) + (3*7) = 30MOD8 = 6

2.3.1 Orthogonal Matrices

A square matrix A over Vn is said to be orthogonal if A . AT = I; where I denotes an

identity matrix of appropriate dimension. Equivalently, A is orthogonal just when each

row of A is orthogonal to every other row of A but has a scalar product of 1 with itself.

Note that A is orthogonal just when AT = A-1, and hence AT . A = I, so that AT is also

orthogonal. An orthogonal matrix is not only non-singular but always has a determinant

that is either +1 or -1 because 1 = det(I) = det(A . AT ) = det(A) . det(AT ) = (det(A))2. An

example of an orthogonal matrix over the finite field GF(2) is

[0111

1011

1101

1110]

Orthogonal matrices over the field, R, of real numbers are of great importance in the

theory of isometries of Rn. For a general non-square matrix A, we can say that it is roworthogonal if A.AT = I, as this is equivalent to the condition that each row of A is

orthogonal to every other row of A but has a scalar product of 1 with itself. A row-

Reza Esmailzadeh

Page 9 of 23

Mike Kooznetsoff

MK&RE® Telecommunications

Linear Orthogonal Error Correcting Codes

orthogonal matrix always has full row rank and thus must have at least as many columns

as rows.

Note that if A is row-orthogonal but non-square, then A . AT cannot have full row rank

and thus cannot also be row-orthogonal. Deleting rows of an orthogonal matrix gives a

row-orthogonal matrix, but not every row-orthogonal matrix can be so constructed. For

instance, over the field GF(2), the matrix A = [1 1 1] is trivially row-orthogonal but there

is no orthogonal matrix having [1 1 1] as a row.

2.4 Greedy Algorithm

The greedy method is a “locally-optimization” method. It assumes that the path to the

best global optimum is a series of locally optimal steps. At each step, one adds the pairing

that has the next shortest distance.

The idea behind a greedy algorithm is to perform a single procedure in the recipe over

and over again until it can't be done any more and see what kind of results it will produce.

It may not completely solve the problem, or, if it produces a solution, it may not be the

very best one, but it is one way of approaching the problem and sometimes yields very

good (or even the best possible) results.

Greedy codes are constructed by an algorithm, which chooses code words, greedily, from

an ordered vector space. A fundamental criterion for choosing code words is that they

must satisfy a distance property. Other criteria may be introduced to get various types of

codes e.g. self-orthogonal greedy codes.

Different orderings of vectors and base field elements can result in codes with different

parameters and properties. We are interested in finding properties satisfied by these

codes.

Greedy Code (n, dmin, q) will return a Greedy code with design distance d over q. The

code is constructed using the Greedy algorithm on the list of vectors n. This algorithm

Reza Esmailzadeh

Page 10 of 23

Mike Kooznetsoff

MK&RE® Telecommunications

Linear Orthogonal Error Correcting Codes

checks each vector in n, then, adds it to the code if its distance to the current code is

greater than or equal to dmin. It is obvious that the resulting code has a minimum distance

of at least dmin. Note that Greedy codes are often linear codes, and we’ll explore this

property based on the results of our program later on in the report.

2.5 Linear Codes

This paper is limited to the field of integers modulo 2, which I will denote by Z2, though

many of the results can be generalized to any finite field. Vn will represent the vector

space of n-tuples with elements in Z2.

A linear (n,k) code over the finite field, Z2, is a k dimensional subspace of Vn(Z2). As a

result, the linear code of length n is a subspace of Vn which is spanned by k, linearly

independent vectors. In other words, all code words can be written as a linear

combination of the k basis vectors, v1,...,vk, as shown below:

x = m1v1 + ... + mkvk

Since a different code word is obtained for each different combination of coefficients we

have an easy method of encoding if we simply make m = [m1m2...mk] the message to be

encoded.

Example 1

Let's take a look at how we would construct a (4,2) code.

Our code words will belong to the vector space V4 and our message words will be all of

the words of length 2; {[00], [01], [10], [11]}. We want to encode each of these message

words into code words of length 4 that will form a 2 dimensional subspace of V4. To do

this we simply pick 2 linearly independent vectors to be a basis for the 2 dimensional

subspace. (For now we'll do it at random but will see later that this is will not be

satisfactory.)

The vectors v1 = [1000] and v2 = [0110] are linearly independent since neither is a

multiple of the other so we'll use them.

Reza Esmailzadeh

Page 11 of 23

Mike Kooznetsoff

MK&RE® Telecommunications

Linear Orthogonal Error Correcting Codes

Now to find the code word, x, corresponding to the message m = [m1 m2] we compute:

x = m1[1000] + m2[0110]

Thus the message m = [11] is mapped to

x = 1[1000] + 1[0110] = [1110]

Likewise,

m = [00] is mapped to x = [0000]

m = [01] is mapped to x = [0110]

m = [10] is mapped to x = [1000]

We can shorten the notation a little bit by using the matrix G, called the generating

matrix, whose rows are the basis vectors v1 through vk. For the example above we would

have used

G = [ 1000

0110 ]

Then the message words can be encoded by matrix multiplication.

x=mG

Notice that in example 1 the first two digits of each code word are exactly the same as the

message bits. This is especially nice for decoding since, after correction, retrieval of the

message can be achieved by discarding the last 2 bits (n - k bits in general). In general

this can be achieved by insuring that your chosen basis vectors form a generating matrix

of the form [Ik A].

Notice also that the Hamming distance (the number of places where the digits of the code

words differ) between the first and third is only 1. In general, to detect t errors the

minimum distance between code words had to be at least t+1, and to correct t errors the

minimum distance had to be 2t + 1.

Reza Esmailzadeh

Page 12 of 23

Mike Kooznetsoff

MK&RE® Telecommunications

Linear Orthogonal Error Correcting Codes

It could easily have been predicted that there would have been code words within

distance one of each other. Since the code words belong to a subspace we know that they

are closed under addition. Since 1000 is a basis vector we know it is in the code. Thus x +

1000 is also in the code for any code word, x. But the distance between x and x + 1000 is

only 1 since they differ only in the first place. This suggests that if we want a code with a

minimum distance of d we had better make all our basis vectors have weight greater than

or equal to d. In the next section we explore this further.

2.5.1 The Parity Check Matrix

The parity check matrix, H, is an n - k by n matrix created so that when multiplied by the

transpose of any code word, x, the transpose of the product, called the syndrome, will be

zero. In symbols,

H xT = sT = 0

It can be shown by matrix arithmetic that given a generator matrix of the form [Ik A] the

parity check matrix can be defined to be H = [-AT In-k] = [AT In-k] (remember that -1 = 1

in Z2).

In the discussion above we noted that for example 1 the generating matrix G would be

G = [ 1000

0110 ]

G is of the form [I2 A] where

A = [ 00

10 ]

The transpose of A is

[ 01

00 ]

So we have H = [AT I2]

Reza Esmailzadeh

Page 13 of 23

Mike Kooznetsoff

MK&RE® Telecommunications

Linear Orthogonal Error Correcting Codes

2.5.2 Theorem

Let H be a parity check matrix for an (n,k) code. Then every set of s-1 columns of H is

linearly independent if and only if the code has a minimum distance of at least s.

What this says about example 1 is that if we want a minimum distance of 2 we must

insure that every column of the parity check matrix is linearly independent. The zero

vector can never be part of a set of linearly independent vectors (examine the definition

of linear independence). As you can see, in the parity check matrix of example 1 there is

a zero column.

H = [ 1110

0001 ]

Now AT = [ 11

00 ]

So A must = [ 10

10 ]

and G can be computed to

G = [1010

0110 ]

Using this generator matrix

m = [00] is mapped to x = [0000]

m = [01] is mapped to x = [0110]

m = [10] is mapped to x = [1010]

m = [00] is mapped to x = [1100]

(Remember that 1+1 = 2MOD2 = 0 in Z2) Now we see that each code word is at least a

distance of two away from any other code word. Thus this code could be used to detect

errors. It cannot correct errors however.

Reza Esmailzadeh

Page 14 of 23

Mike Kooznetsoff

MK&RE® Telecommunications

Linear Orthogonal Error Correcting Codes

2.5.3 Decoding Linear Codes

Single error correcting codes are very simple to decode. All you have to do is find the

syndrome, s, (remember sT = H xT, where x is your received word) and compare it to the

columns of your parity check matrix. If the syndrome equals 000 then no error occurred.

If the syndrome is equal to the third column of H then you know you know the third digit

of your received word is in error. If the syndrome is not equal to any of the columns in

the parity check matrix then you know that more than one error occurred.

In general, if more than one error occurs the code will decode to the wrong code word in

a Hamming code. This is because in a Hamming code the code words are packed so

tightly that a distance of 2 away from one code word is a distance of 1 away from another

code word.

Example 2

Let's construct a single error correcting Hamming code and explore its encoding and

decoding mechanisms. For it to be a perfect code, as noted above, the length, n, must

equal 2n-k-1, where n-k is the number of check digits so if we choose 2 we get a code of

length 3 and message length of k = 1. We already created that code in example 2. Let's

consider a code with 3 check digits of length 7. In this code our message vectors can be

four digits long.

First we create our parity check matrix. We want H in the form [A T I3] so that we'll have

easy message retrieval. For the columns of A we just use the rest of the vectors of length

three that are left.

H = [1101100

1011010

1110001]

Now G = [I4 A], or

G = [1000111

0100101

0010011

0001110]

Reza Esmailzadeh

Page 15 of 23

Mike Kooznetsoff

MK&RE® Telecommunications

Linear Orthogonal Error Correcting Codes

Suppose now that we were to send the message vector [1100]. To encode this message

we'd multiply it by G to get the code word [1100010].

Suppose then that along the channel and error occurs and we receive x = [1110010]

instead. To decode this received word we multiply the parity check matrix by the

transpose of the received word to get the transpose of the syndrome.

H xT = sT

, which in this case equals [0] [1] [1]

This column matches the third column from the left in the parity check matrix so we

know that an error occurred in that position. Thus we know that [1110010] needs to be

corrected to [1100010]. We then take the first four digits and that is our message [1100].

3 Software

3.1 Introduction

These programs were written using the Bloodshed Dev-C++ compiler, but were coded

using C language and will also work on a C compiler. Two different programs were

written, one to develop orthogonal base q codes, and one for non-orthogonal base q

codes. The format of the two are relatively the same, except for the orthogonality check

included for the orthogonal codes. Both programs scan in values of n (length of the

desired code word, in bits), dmin (minimum number of bits that each code word differs by)

and q (the number base used), which uses these variables to determine how many loops

the program will perform. The programs scrolls through all the numbers between zero

and q^n – 1 and performs a series of tests to see if the current number is the minimum

distance away from (and orthogonal to, in the case of the orthogonal codes) the code

words already selected. If it is, the number is stored in an array, and the program moves

on to the next number to perform the same operations. Pseudo code for the orthogonal

codes has been written below (for the non-orthogonal codes, just delete the orthogonality

checks), as well as an in-depth explanation of each part. Software code for the two

programs are included in Appendix A and B.

Reza Esmailzadeh

Page 16 of 23

Mike Kooznetsoff

MK&RE® Telecommunications

Linear Orthogonal Error Correcting Codes

3.2 Pseudo Code

1. Declaration of variables

2. Use of “power” function to obtain the value of q^n

The C++ compiler did not have a function to generate the result of one number to

the power of another, so a function had to be written to perform that task. The

function consists of a simple loop that multiplies the base q by itself n times.

3. Ask user for values of q, n, and dmin and stores these values as variables of the same

name.

4. Calculate q^n and store the result as “maxnumber”

5. Print the all zero code word (of length n bits)

This is the first code word in any set of code words. All “printing” is done to the

screen and to a text file called “ortho.txt” (called “codes.txt” for the nonorthogonal case) that can be opened once the program has completed.

6. Start of loop to cycle through all possible code words between 1 and maxnumber – 1,

incrementing the code word by one each time a new loop is performed

This loop ensures that all the possible code words are checked

7. Convert the current number from base 10 into base q, and store this result in a

1 x n array called test, where zeroes are placed in the unused bits of the array

Before any tests can be performed, the current code word must be converted

into the number base specified by the user, base q. Zero’s are added to the

unused bits of test (up to size n) so all the code words are of length n

8. Calculate the weight (number of non-zero bits) of the number in test, and

continue with the next step if the weight is greater than or equal to the

entered value of dmin. If the weight is less than dmin, exit loop and start from 6.

again with the next code word.

This builds on the property discussed in 5.2 where the weight of the code

words has to be greater than or equal to dmin. If the weight is less, ignore this

code word, and move on to the next one to check.

Reza Esmailzadeh

Page 17 of 23

Mike Kooznetsoff

MK&RE® Telecommunications

Linear Orthogonal Error Correcting Codes

9. Calculate the dot product MOD q of test to itself, and if the result is zero,

continue with the next step. Otherwise exit loop and start again from 6. with the

next code word

10. Start another loop to check test against all the values currently in the keeper array

(n x k array used to store the code words if they pass all the tests).

To become a code word in keeper, test must have distance greater than or

equal to all the code words in keeper (with the first code word in keeper

being the all zero code word) , plus be orthogonal to all values in keeper and

itself

11. Calculate the distance of test against the first code word in keeper

12. Calculate the dot product MOD q (orthogonality check) of test and the first

code word in keeper

13. If the distance of test and the first code word in keeper is greater than or

equal to dmin, increment the value of dmin-counter by one.

dmin-counter is a variable used to figure out if test is the minimum

distance away from all entries currently in keeper. If dmin-counter is

equal to the number of entries currently in keeper (variable called

size_keeper), test passes the minimum distance test

14. If the dot product MOD q of test and the first code word in keeper is 0,

increment the value of orthocounter by one.

orthocounter is a variable used to figure out if test is orthogonal to all

entries currently in keeper. If orthocounter is equal to size_keeper, test

passes the orthogonality test

15. Perform steps 11. to 14. again, except calculate the distance and dot product

MOD q of test and the second code word in keeper, then the third, etc.

Keep cycling from 9. to 13. until the minimum distance and orthogonality

tests have been performed on test and every code word in keeper, then

exit this loop and continue to step 16.

16. If dmincounter and orthocounter are equal to size_keeper, put the code word

currently in test into the keeper array, print the new value of keeper, and

increase the value of size_keeper by one to account for the new entry. If

Reza Esmailzadeh

Page 18 of 23

Mike Kooznetsoff

MK&RE® Telecommunications

Linear Orthogonal Error Correcting Codes

dmincounter and orthocounter are not equal to size_keeper, start again from 6.

with the next code word

3.3 Testing the Software

As a result of the numerous loops, iterations, and calculations involved in the program, it

was very difficult at times to tell what was wrong with the developing program when

incorrect results were displayed on the screen. To help remedy this, a test version of the

program was first created where majority of the calculations and iterations are shown on

the screen, so you can follow exactly what the program is doing. This test version prints

out each code word that it cycles through (from 1 to maxnumber – 1), and the results of

the tests that it performs against each entry of the keeper array. It states why a code

word passes or fails certain tests, as well as acknowledges when a code word is added to

keeper, and what entry number it is in the keeper array.

The displayed results make it

easy to do the calculations in your head as the program goes along to double check if the

displayed results are correct. This makes debugging a lot easier, as it narrows the

problem down to specific variable calculations and/or loops. To create the final program,

which is specified to just display the code words in the keeper array as well as the

number of code words in keeper, all of the intermediate calculations and variables used

for the test version were taken out. The test version was kept as a separate program, and

can be used to test further modifications to the code, if they are made. The test version is

also an excellent way to display how the program works, as it shows almost every

iteration and calculation for every loop. An example of the test version output is included

in Appendix C for values of n = 5, q = 3, dmin = 3.

3.4 Results of Running the Software

The orthogonal and non-orthogonal programs were both run for q values of 2, 3, and 4.

For each of these q values, n and dmin were both chosen to be between 2 and 9, and all

possible combinations of n and dmin were tried. The programs displayed all the code

words fitting these three parameters, as well as M, the total number of code words. By

using the relation:

Reza Esmailzadeh

Page 19 of 23

Mike Kooznetsoff

MK&RE® Telecommunications

Linear Orthogonal Error Correcting Codes

k = logq M -> q^k = M -> log10 q^k = log10 M -> k = log10 M / log10 q

k values were obtained for all the values of q, dmin, and n. These results, for both

programs, are shown as a series of charts in Appendix D. By analyzing these charts, it

can be shown that for the non-orthogonal codes, values of q = 2 and q = 4 result in linear

code words, whereas for q = 3, the codes are mostly non-linear. This property can be

seen simply by looking at the k values. An integer value for k means the code is linear,

whereas a real number means the code is non-linear. For the orthogonal codes, looking at

the chart reveals, once again, that for q = 2 the codes are linear, but for q = 3, the codes

are mostly linear as well, except for the cases when n = 9 and dmin = 4, 5, and 6. At first

glance, it would appear that for the q = 4 case, the codes are partly non-linear, due to the

fact that about half the values of k are real numbers, as opposed to integers. But, if the k

value is calculated using q = 2 in the above equation, all the k values are indeed integers,

making the code words all linear. This expresses a unique property of code words

generated using the number base q = 4.

3.5 Software Optimization

To make the programs as efficient as possible, it is necessary to reduce the number of

calculations performed by reducing the number of iterations of the various loops. The

implementation of the weight test (step 8. in the pseudo code) was designed to reduce the

number of iterations performed. Simply by calculating the weight, and checking it

against the value of dmin, you can possibly eliminate a code word without having to

calculate the distance and dot product MOD q of the test value and all the entries in the

keeper array. This optimization technique will reduce the amount of iterations by a

small amount, but since majority of the code words tested will have weight greater than

or equal to dmin, the amount of time saved is minimal; a few seconds at best. The selforthogonal check at the beginning of the code (step 9. in the pseudo code), however,

greatly reduces the number of calculations and iterations performed. Since there are a lot

of code words that are not orthogonal to themselves, that simple calculation eliminates

possible code words without having to check against all values in the keeper array. For

example, the orthogonal program was run with values of n = 10, q = 4, dmin = 4 with the

Reza Esmailzadeh

Page 20 of 23

Mike Kooznetsoff

MK&RE® Telecommunications

Linear Orthogonal Error Correcting Codes

self-orthogonal check where it is now for optimization (step 9. in the pseudo code), and

where it was initially, at the end of the program as a final check. The optimized code

took 40 seconds to complete, whereas the original program took 150 seconds to

complete. This is a significant improvement over the initial order the code was in. To

further optimize both programs, it is recommended that another check be performed, so

as soon as the test codeword is either non-orthogonal to, or not the minimum distance

from, one of the values in the keeper array, that it exits the loop and continues with the

next code word. At present, it calculates dot product MOD q and distance away from all

the entries in keeper, no matter what.

3.6 Problems Encountered

Majority of the problems encountered involved trying to track down where in the code

errors were occurring, since numerous iterations and loops are being performed. The test

version of the programs helped quite a bit in that respect, and a lot of time was put into

displaying and checking all the “background” calculations that the program was

performing.

One specific problem that arose was if the desired q value was greater than 10. To handle

this in the non-orthogonal program, we stored all the bits in the arrays as characters, and

used letters to represent the numbers above ten (for example, a = 11, b = 12, c = 13, etc.).

The part of the program that converts a number into base q would check if the number

base is above 10, and if it was, implemented the correct equation to convert the number

into a letter of the alphabet. For the orthogonal program, on the other hand, this method

could not be used, since the modulus operator (%) was used in the program to calculate

orthogonality and this operator can only by used between two integers, not characters.

Some research was done, and it is possible to convert a character into an integer, but the

integer returns the ASCII value, so some sort of offset would have to be used if the same

representation as the non-orthogonal program is to be used (a = 11, b = 12, c = 13,etc.).

Due to time constraints, and the main purpose of the programs being for q = 2, 3, and 4,

this was not implemented into our program, so for q greater than 10, the orthogonal

program will not display the correct codewords.

Reza Esmailzadeh

Page 21 of 23

Mike Kooznetsoff

MK&RE® Telecommunications

Linear Orthogonal Error Correcting Codes

As the q and n values increased, and dmin decreased, the time the program would take to

complete increased significantly. To make these programs efficient for such values, it

will be necessary to perform the optimization technique discusses in section 3.5, as well

as looking into other optimization techniques to reduce the number of iterations and time

the programs take to complete.

Reza Esmailzadeh

Page 22 of 23

Mike Kooznetsoff

MK&RE® Telecommunications

4

Linear Orthogonal Error Correcting Codes

Conclusions

According to the findings of this report, the self-orthogonal and non-orthogonal error

correction codes are generated, using our developed C++ program, which gets the

parameters of n, dmin, and q as inputs.

The orthogonal and non-orthogonal programs were both run for q values of 2, 3, and 4.

For each of these q values, n and dmin were both chosen to be between 2 and 9, and all

possible combinations of n and dmin were tried. The programs displayed all the code

words fitting these three parameters, as well as M, the total number of code words. By

using the relation:

k = logq M -> q^k = M -> log10 q^k = log10 M -> k = log10 M / log10 q

The program scrolls through all the numbers between zero and q^n – 1 and performs a

series of tests to see if the current number is the minimum distance away from (and

orthogonal to, in the case of the orthogonal codes) the code words already selected. If it

is, the number is stored in an array, and the program moves on to the next number to

perform the same operations. However, it would appear that for the q = 4 case, the codes

are partly non-linear, due to the fact that about half the values of k are real numbers, as

opposed to integers. This expresses a unique property of code words generated using the

number base q = 4.

The full details of the software are described in section 3 and the necessary theoretical

background is mentioned in section 2. A complete cross-reference table of the results

obtained for several different input parameters is included at Appendix D.

Reza Esmailzadeh

Page 23 of 23

Mike Kooznetsoff