Chapter 6-3. Imperfect Reference Tests


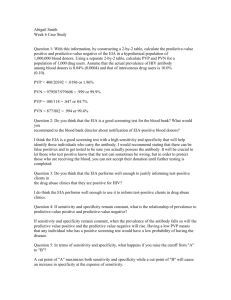

*** This chapter is under construction ***

Pepe (2003, pp. 194-196) states the following about imperfect reference standards, or imperfect reference tests:

“The best available reference tests may themselves be subject to error. When used as a reference against which new tests are compared, error in the reference test can influence the perceived accuracy of new tests. …sensitivity and specificity can be biased in either direction, depending on the error in the reference. The terms relative sensitivity and relative specificity are sometimes used for sensitivity and specificity when the reference test is subject to error.”

The FDA (2007, p. 24) suggests the following when using an imperfect reference test. In place of “gold standard” or “reference test”, label the imperfect reference test “non-reference standard”. Make it explicitly clear which of the two tests being compared is acting as the non-reference standard. Then compute sensitivity in the usual way, using the non-reference standard as if it were the gold standard, but call this statistic “positive percent agreement.” Compute specificity in the usual way, but call it “negative percent agreement.”

_________________
Source: Stoddard GJ. Biostatistics and Epidemiology Using Stata: A Course Manual [unpublished manuscript] University of Utah School of Medicine, 2010. Chapter 6-3 (revision 16 May 2010).

Article Suggestion

Here is an example of what you could state in your Statistical Methods:

When the reference test, or gold standard, is subject to error, the perceived accuracy of the new test can be affected, where sensitivity and specificity can be biased in either direction, depending on the error in the reference test (Pepe, 2003).
The simplest approach to this test comparison situation is to compute sensitivity and specificity using the usual formulas, but then call the test characteristics something else to inform the reader that an imperfect reference standard has been used. The terms relative sensitivity and relative specificity in place of sensitivity and specificity have been suggested (Pepe, 2003). A similar suggestion is positive percent agreement and negative percent agreement in place of sensitivity and specificity (FDA, 2007).

Considering Direct Fluorescent Antibody as a reference standard subject to error, or imperfect gold standard, the terms positive % agreement and negative % agreement are used to report the test characteristics of the new test, FilmArray RP. Positive % agreement (sensitivity) is the percentage of the time that FilmArray RP detected a virus when Direct Fluorescent Antibody detected it. Similarly, negative % agreement (specificity) is the percentage of the time that FilmArray RP did not detect a virus when Direct Fluorescent Antibody did not detect it.

When the two tests disagree, this shows up in the off-diagonal cells of the paired 2 x 2 table. Such tables are reported in Table 2. If the disagreements are random, that is, equally likely in either direction, the two off-diagonal cell counts should be approximately equal. To test this, the McNemar test was used. If the two cell counts did not sum to one, an exact McNemar test was used (Siegel and Castellan, 1988). It was hypothesized that FilmArray RP would detect viruses more frequently than Direct Fluorescent Antibody, and so the McNemar test is also reported in Table 2. Of course, if this is so, the argument that it is due to greater sensitivity of FilmArray RP, rather than simply more misclassifications in the positive direction, requires a justification beyond the test characteristics computed in this study.
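For readers who want to see the arithmetic behind these statistics, here is a minimal sketch in Python. It is not part of the original course manual, which uses Stata. The function names and the cell counts are hypothetical, chosen only for illustration; they are not the study's data. The cell layout (a, b, c, d) is an assumed convention, and the p-value is the usual two-sided exact McNemar p-value, that is, a binomial test with probability 0.5 on the two off-diagonal cells.

```python
from math import comb


def percent_agreement(a, b, c, d):
    """Positive and negative percent agreement from a paired 2 x 2 table.

    Assumed cell layout (new test vs. non-reference standard):
        a = both positive            b = new positive, standard negative
        c = new negative, standard positive   d = both negative
    """
    ppa = 100.0 * a / (a + c)  # computed like sensitivity
    npa = 100.0 * d / (b + d)  # computed like specificity
    return ppa, npa


def exact_mcnemar_p(b, c):
    """Two-sided exact McNemar p-value from the off-diagonal counts.

    Under the null hypothesis, each discordant pair is equally likely
    to fall in either off-diagonal cell, so the smaller count follows
    a Binomial(b + c, 0.5) distribution.
    """
    n = b + c
    if n == 0:
        return 1.0  # no discordant pairs, so no evidence of asymmetry
    k = min(b, c)
    lower_tail = sum(comb(n, i) for i in range(k + 1)) / 2 ** n
    return min(1.0, 2.0 * lower_tail)


if __name__ == "__main__":
    # Hypothetical counts for illustration only.
    a, b, c, d = 40, 12, 3, 145
    ppa, npa = percent_agreement(a, b, c, d)
    print(f"Positive % agreement: {ppa:.1f}")
    print(f"Negative % agreement: {npa:.1f}")
    print(f"Exact McNemar p-value: {exact_mcnemar_p(b, c):.4f}")
```

Only the off-diagonal counts b and c enter the McNemar test. The concordant cells a and d affect the agreement percentages but carry no information about the direction of disagreement.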
This justification could take the form of a separate study where both tests are compared against a true gold standard, or an indisputable advantage of the new test predicting this direction of the discordance.

References

FDA. (2007). Statistical guidance on reporting results from studies evaluating diagnostic tests. Center for Devices and Radiological Health, March 13, 2007. http://www.fda.gov/cdrh/osb/guidance/1620.pdf

Pepe MS. (2003). The Statistical Evaluation of Medical Tests for Classification and Prediction. New York, Oxford University Press.

Rutjes AW, Reitsma JB, Coomarasamy A, et al. (2007). Evaluation of diagnostic tests when there is no gold standard. A review of methods. Health Technol Assess 11(50):iii, ix-51.

Siegel S and Castellan NJ Jr. (1988). Nonparametric Statistics for the Behavioral Sciences, 2nd ed. New York, McGraw-Hill.