Academic Advising Center

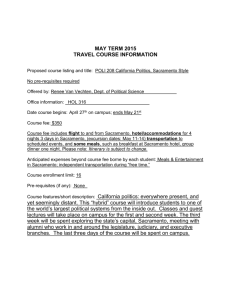
