Revised Chapter 4 in Specifying and Diagnostically Testing Econometric Models (Edition 3)

© by Houston H. Stokes 10 March 2012 All rights reserved. Preliminary Draft

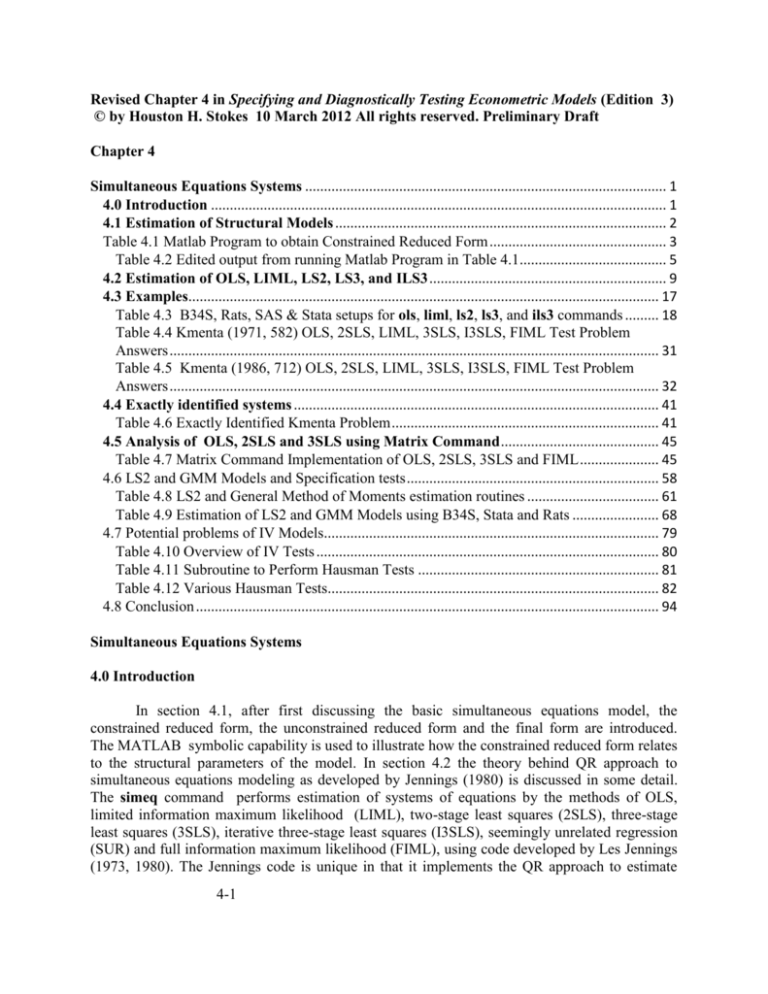

Chapter 4

Simultaneous Equations Systems

4.0 Introduction

4.1 Estimation of Structural Models

Table 4.1 Matlab Program to obtain Constrained Reduced Form

Table 4.2 Edited output from running Matlab Program in Table 4.1

4.2 Estimation of OLS, LIML, LS2, LS3, and ILS3

4.3 Examples

Table 4.3 B34S, Rats, SAS & Stata setups for ols, liml, ls2, ls3, and ils3 commands

Table 4.4 Kmenta (1971, 582) OLS, 2SLS, LIML, 3SLS, I3SLS, FIML Test Problem Answers

Table 4.5 Kmenta (1986, 712) OLS, 2SLS, LIML, 3SLS, I3SLS, FIML Test Problem Answers

4.4 Exactly identified systems

Table 4.6 Exactly Identified Kmenta Problem

4.5 Analysis of OLS, 2SLS and 3SLS using Matrix Command

Table 4.7 Matrix Command Implementation of OLS, 2SLS, 3SLS and FIML

4.6 LS2 and GMM Models and Specification tests

Table 4.8 LS2 and General Method of Moments estimation routines

Table 4.9 Estimation of LS2 and GMM Models using B34S, Stata and Rats

4.7 Potential problems of IV Models

Table 4.10 Overview of IV Tests

Table 4.11 Subroutine to Perform Hausman Tests

Table 4.12 Various Hausman Tests

4.8 Conclusion

Simultaneous Equations Systems

4.0 Introduction

In section 4.1, after first discussing the basic simultaneous equations model, the

constrained reduced form, the unconstrained reduced form and the final form are introduced.

The MATLAB symbolic capability is used to illustrate how the constrained reduced form relates

to the structural parameters of the model. In section 4.2 the theory behind the QR approach to

simultaneous equations modeling as developed by Jennings (1980) is discussed in some detail.

The simeq command performs estimation of systems of equations by the methods of OLS,

limited information maximum likelihood (LIML), two-stage least squares (2SLS), three-stage

least squares (3SLS), iterative three-stage least squares (I3SLS), seemingly unrelated regression

(SUR) and full information maximum likelihood (FIML), using code developed by Les Jennings

(1973, 1980). The Jennings code is unique in that it implements the QR approach to estimate


systems of equations, which results in both substantial savings in time and increased accuracy.1

The estimation methods are well known and covered in detail in such books as Johnston (1963,

1972, 1984), Kmenta (1971, 1986), and Pindyck and Rubinfeld (1976, 1981, 1990) and will only

be sketched here. What will be discussed are the contributions of Jennings and others. The

discussion of these techniques follows closely material in Jennings (1980) and Strang (1976).

Section 4.3 illustrates estimation of variants of the Kmenta model using RATS, B34S and

SAS, while section 4.4 illustrates an exactly identified model. Section 4.5 shows how OLS, LIML, 3SLS and FIML can be estimated using the matrix command. The code there is intended for illustration and benchmarking, not for production use. Section 4.6 shows the matrix command subroutines LS2 and GAMEST, which respectively estimate single equation 2SLS and GMM models. That code is production quality.

4.1 Estimation of Structural Models

Assume a system of G equations with K exogenous variables2

$$
\begin{aligned}
b_{11}y_{1i} + \dots + b_{1G}y_{Gi} + \gamma_{11}x_{1i} + \dots + \gamma_{1K}x_{Ki} &= u_{1i} \\
b_{21}y_{1i} + \dots + b_{2G}y_{Gi} + \gamma_{21}x_{1i} + \dots + \gamma_{2K}x_{Ki} &= u_{2i} \\
&\;\;\vdots \\
b_{G1}y_{1i} + \dots + b_{GG}y_{Gi} + \gamma_{G1}x_{1i} + \dots + \gamma_{GK}x_{Ki} &= u_{Gi}
\end{aligned}
\tag{4.1-1}
$$

where $x_{ki}$ is the kth exogenous variable for the ith period, $y_{ji}$ is the jth endogenous variable for the ith period, and $u_{ji}$ is the jth equation error term for the ith period. If we define

$$
B = \begin{bmatrix} b_{11} & b_{12} & \cdots & b_{1G} \\ b_{21} & b_{22} & \cdots & b_{2G} \\ \vdots & & & \vdots \\ b_{G1} & b_{G2} & \cdots & b_{GG} \end{bmatrix}, \quad
\Gamma = \begin{bmatrix} \gamma_{11} & \gamma_{12} & \cdots & \gamma_{1K} \\ \gamma_{21} & \gamma_{22} & \cdots & \gamma_{2K} \\ \vdots & & & \vdots \\ \gamma_{G1} & \gamma_{G2} & \cdots & \gamma_{GK} \end{bmatrix}, \quad
y_i = \begin{bmatrix} y_{1i} \\ y_{2i} \\ \vdots \\ y_{Gi} \end{bmatrix}, \quad
x_i = \begin{bmatrix} x_{1i} \\ x_{2i} \\ \vdots \\ x_{Ki} \end{bmatrix}, \quad
u_i = \begin{bmatrix} u_{1i} \\ u_{2i} \\ \vdots \\ u_{Gi} \end{bmatrix}
$$

equation (4.1-1) can be written as

$$
B y_i + \Gamma x_i = u_i \tag{4.1-2}
$$

1 The B34S qr command is designed to provide up to 16 digits of accuracy. This command, which also allows estimation of the principal component (PC) regression, uses LINPACK code and is documented in Chapter 10. The qr command is distinct from the code in the simeq command. The matrix command contains extensive and programmable QR capability. For further examples see Chapters 10 and 16 and sections of Chapter 2.

2 For further discussion see Pindyck and Rubinfeld (1981, 339-349).

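If all observations in $y_i$, $x_i$ and $u_i$ are included, then

$$
X = \begin{bmatrix} x_{11} & x_{12} & \cdots & x_{1N} \\ x_{21} & x_{22} & \cdots & x_{2N} \\ \vdots & & & \vdots \\ x_{K1} & x_{K2} & \cdots & x_{KN} \end{bmatrix}, \quad
Y = \begin{bmatrix} y_{11} & y_{12} & \cdots & y_{1N} \\ y_{21} & y_{22} & \cdots & y_{2N} \\ \vdots & & & \vdots \\ y_{G1} & y_{G2} & \cdots & y_{GN} \end{bmatrix}, \quad
U = \begin{bmatrix} u_{11} & u_{12} & \cdots & u_{1N} \\ u_{21} & u_{22} & \cdots & u_{2N} \\ \vdots & & & \vdots \\ u_{G1} & u_{G2} & \cdots & u_{GN} \end{bmatrix}
$$

and equation (4.1-2) can be written as

$$
BY + \Gamma X = U \tag{4.1-3}
$$

From equation (4.1-3), the constrained reduced form can be calculated as

$$
Y = -B^{-1}\Gamma X + B^{-1}U = \pi X + V \tag{4.1-4}
$$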

If π is estimated directly with OLS, then it is called the unconstrained reduced form. The B34S simeq command estimates B and Γ using either OLS, 2SLS, LIML, 3SLS, I3SLS, or FIML. For each estimated pair B, Γ the associated reduced form coefficient matrix π can be optionally calculated.3 If B and Γ are estimated by OLS, the coefficients will be biased since the key OLS assumption that the right-hand-side variables are orthogonal with the error term is violated. Model (4.1-3) can be normalized such that the coefficients $b_{ij} = 1$ for $i = j$. The necessary condition for identification of each equation is that the number of endogenous variables - 1 be less than or equal to the number of excluded exogenous variables. The reason for this restriction is that otherwise the structural parameters of the equation could not be recovered uniquely from the elements of π. A short, self-documented MATLAB example from Greene (2003) illustrates this problem.

Table 4.1 Matlab Program to obtain Constrained Reduced Form

    % Greene (2003) Chapter 15 Problem # 1
    %
    % y1= g1*y2 + b11*x1 + b21*x2 + b31*x3
    % y2= g2*y1 + b12*x1 + b22*x2 + b32*x3
    %
    % We know BY+GX=E
    syms g1 g2 b11 b21 b31 b12 b22 b32
    B =[ 1, -g1;
        -g2, 1]
    G =[-b11,-b21,-b31;
        -b12,-b22,-b32]
    a= -1*inv(B)*G
    p11=a(1,1)
    p12=a(1,2)
    p13=a(1,3)
    p21=a(2,1)
    p22=a(2,2)
    p23=a(2,3)
    % Hopeless. Have 6 equations BUT more than 6 variables
    ' Now impose restrictions'
    ' b21=0 b32=0'
    G =[-b11,   0,-b31;
        -b12,-b22,   0]
    B,G
    a= -1*inv(B)*G
    ' Here 6 equations and six unknowns g1 g2 b11 b31 b12 b22 '
    p11=a(1,1)
    p12=a(1,2)
    p13=a(1,3)
    p21=a(2,1)
    p22=a(2,2)
    p23=a(2,3)

3 If the model is exactly identified, the constrained reduced form can be directly estimated by OLS or using (4.1-4) from LIML, 2SLS or 3SLS. This is shown empirically in section 4.5.


Table 4.2 Edited output from running Matlab Program in Table 4.1

    p11 = -1/(-1+g1*g2)*b11-g1/(-1+g1*g2)*b12
    p12 = -1/(-1+g1*g2)*b21-g1/(-1+g1*g2)*b22
    p13 = -1/(-1+g1*g2)*b31-g1/(-1+g1*g2)*b32
    p21 = -g2/(-1+g1*g2)*b11-1/(-1+g1*g2)*b12
    p22 = -g2/(-1+g1*g2)*b21-1/(-1+g1*g2)*b22
    p23 = -g2/(-1+g1*g2)*b31-1/(-1+g1*g2)*b32

    Here 6 equations and six unknowns g1 g2 b11 b31 b12 b22

    p11 = -1/(-1+g1*g2)*b11-g1/(-1+g1*g2)*b12
    p12 = -g1/(-1+g1*g2)*b22
    p13 = -1/(-1+g1*g2)*b31
    p21 = -g2/(-1+g1*g2)*b11-1/(-1+g1*g2)*b12
    p22 = -1/(-1+g1*g2)*b22
    p23 = -g2/(-1+g1*g2)*b31
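The symbolic result can also be checked numerically. The following is a minimal Python sketch, not part of the B34S or MATLAB code; the function names `order_condition` and `reduced_form` and all parameter values are hypothetical and chosen only for illustration. It evaluates π = -inv(B)*G under the restrictions b21=0 and b32=0 and shows that the two structural slopes g1 and g2 can then be recovered from ratios of reduced form coefficients.

```python
from fractions import Fraction as F

def order_condition(n_endog_included, n_exog_excluded):
    """Necessary (order) condition for identifying one equation."""
    return n_endog_included - 1 <= n_exog_excluded

def reduced_form(B, G):
    """Return pi = -inv(B) * G for a 2 x 2 matrix B and a 2 x K matrix G."""
    (a, b), (c, d) = B
    det = a * d - b * c
    if det == 0:
        raise ValueError("B must be nonsingular")
    inv = [[d / det, -b / det], [-c / det, a / det]]
    return [[-(inv[i][0] * G[0][k] + inv[i][1] * G[1][k])
             for k in range(len(G[0]))] for i in range(2)]

# Hypothetical structural values, chosen only for illustration.
g1, g2 = F(1, 2), F(1, 4)
b11, b31, b12, b22 = F(1), F(3), F(2), F(4)

B = [[F(1), -g1], [-g2, F(1)]]
G = [[-b11, F(0), -b31],   # restriction b21 = 0
     [-b12, -b22, F(0)]]   # restriction b32 = 0
pi = reduced_form(B, G)

# With the restrictions imposed, the slopes follow from ratios of pi.
g1_recovered = pi[0][1] / pi[1][1]   # p12 / p22
g2_recovered = pi[1][2] / pi[0][2]   # p23 / p13

print(order_condition(2, 0))  # no excluded exogenous variables
print(order_condition(2, 1))  # one excluded exogenous variable
print(g1_recovered, g2_recovered)
```

Exact rational arithmetic is used so that the recovered values can be compared with the assumed ones without rounding error.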

If the excluded exogenous variables of the ith equation are not significant in any other equation, then the ith equation will not be identified, even if it is correctly specified. We note that $E(u_i \mid x_i) = 0$ and $E(u_i u_i') = \Sigma$ where $u_i = [u_{1i}, \dots, u_{Gi}]'$ and $x_i = [x_{1i}, \dots, x_{Ki}]'$. The reduced form disturbance is not correlated with the exogenous variables, or $E(v_i \mid x_i) = B^{-1}0 = 0$. In addition

$$
E(v_i v_i' \mid x_i) = E[B^{-1}u_i u_i'(B')^{-1}] = B^{-1}\Sigma(B')^{-1} = \Omega
$$

from which we deduce that

$$
\Sigma = B\Omega B' \tag{4.1-5}
$$

In summary, Γ = G by K exogenous variable coefficient matrix, B = G by G nonsingular endogenous variable coefficient matrix, Σ = G by G symmetric positive definite structural covariance matrix, π = G by K constrained reduced form coefficient matrix and Ω = G by G reduced form covariance matrix. The importance of this is that since π and Ω can be estimated consistently by OLS, following Greene (2003, 387), if B were known we could obtain Γ = -Bπ from (4.1-4) and Σ = BΩB' from (4.1-5). If there are no endogenous variables on the right, yet a number of equations are estimated where there is covariance in the error term across equations, the seemingly unrelated regression model (SUR) can be estimated as

$$
\hat{\beta} = (X'\Sigma^{-1}X)^{-1}(X'\Sigma^{-1}Y). \tag{4.1-6}
$$

Elements of $\hat{\Sigma} = (\hat{\sigma}_{ij})$ can be estimated if OLS is used on each of the G equations and

$$
\hat{\sigma}_{ii} = \hat{u}_i'\hat{u}_i/(T - K_i), \qquad
\hat{\sigma}_{ij} = \hat{u}_i'\hat{u}_j/\sqrt{(T - K_i)(T - K_j)} \tag{4.1-7}
$$
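The calculation in (4.1-7) can be sketched in a few lines of Python. This is an illustrative sketch only: the function name `sigma_hat` is hypothetical, the residual series are made-up numbers rather than output from any model in this chapter, and the square-root form of the off-diagonal divisor is assumed.

```python
import math

def sigma_hat(resid, k):
    """Estimate the cross-equation covariance matrix from OLS residuals.

    resid : list of G residual series, each of length T
    k     : list with the number of coefficients K_i in each equation
    """
    G, T = len(resid), len(resid[0])
    S = [[0.0] * G for _ in range(G)]
    for i in range(G):
        for j in range(G):
            cross = sum(a * b for a, b in zip(resid[i], resid[j]))
            # Degrees-of-freedom divisor; reduces to T - K_i when i == j.
            S[i][j] = cross / math.sqrt((T - k[i]) * (T - k[j]))
    return S

# Hypothetical residuals for two equations with T = 6 observations.
u1 = [1.0, -1.0, 1.0, -1.0, 0.0, 0.0]
u2 = [2.0, -1.0, 1.0, 0.0, -1.0, -1.0]
S = sigma_hat([u1, u2], k=[2, 3])
print(S)
```

A nonzero off-diagonal element is what gives SUR its potential gain over equation-by-equation OLS.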


For more detail see Greene (2003) or other advanced econometric books. Pindyck and Rubinfeld (1976, 1981, 1990) provide a particularly good treatment that is consistent with the notation in this chapter.

From (4.1-4) Theil (1971, 463-468) suggests calculating the final form. First partition the ith observation of the exogenous variables into lagged endogenous, current exogenous and lagged exogenous variables, where identities are used to express lags > 1.

$$
\pi = [d_0, D_1, D_2, D_3], \qquad
x = \begin{bmatrix} 1 \\ y_{i-1} \\ x_i \\ x_{i-1} \end{bmatrix}
$$

$$
y_i = d_0 + D_1 y_{i-1} + D_2 x_i + D_3 x_{i-1} + \varepsilon_i^* \tag{4.1-8}
$$

Theil (1971) shows that (4.1-8) can be expressed as

$$
y_i = (I - D_1)^{-1}d_0 + D_2 x_i + \sum_{t=1}^{\infty} D_1^{t-1}(D_1D_2 + D_3)x_{i-t} + \sum_{t=0}^{\infty} D_1^{t}\varepsilon_{i-t}^* \tag{4.1-9}
$$

where $D_2$ is the impact multiplier. If there are no lagged endogenous variables in the system, $D_1 = 0$ and the constrained reduced form and the final form are the same. In this case $\pi = [D_2, D_3]$. The interim multipliers are $D_2, (D_1D_2 + D_3), D_1(D_1D_2 + D_3), \dots, D_1^{t-1}(D_1D_2 + D_3), \dots$ which, when summed, form the total multiplier $G^*$

$$
\begin{aligned}
G^* &= D_2 + (I + D_1 + D_1^2 + \cdots)(D_1D_2 + D_3) \\
    &= D_2 + (I - D_1)^{-1}(D_1D_2 + D_3) \\
    &= (I - D_1)^{-1}[(I - D_1)D_2 + D_1D_2 + D_3] \\
    &= (I - D_1)^{-1}(D_2 + D_3)
\end{aligned}
\tag{4.1-10}
$$

Goldberger (1959) and Kmenta (1971, 592) provide added detail. The importance of (4.1-10) is that it shows the effect on all endogenous variables of a change in any exogenous variable after all effects have had a chance to work themselves out in the system.
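As a check on the algebra in (4.1-10), the following Python sketch sums the interim multipliers for a scalar system and compares the result with the closed form. The function names and the values of D1, D2 and D3 are hypothetical; the scalar case is used only to keep the example short, and the sum requires |D1| < 1.

```python
def interim_multipliers(d1, d2, d3, horizon):
    """Return [D2, (D1*D2 + D3), D1*(D1*D2 + D3), ...] for scalar inputs."""
    out = [d2]                 # impact multiplier
    term = d1 * d2 + d3        # effect of x lagged one period
    for _ in range(horizon):
        out.append(term)
        term *= d1             # each further lag is damped by D1
    return out

def total_multiplier(d1, d2, d3):
    """Closed form (I - D1)^(-1) (D2 + D3) in the scalar case."""
    if abs(d1) >= 1.0:
        raise ValueError("the sum converges only when |D1| < 1")
    return (d2 + d3) / (1.0 - d1)

# Hypothetical coefficients.
d1, d2, d3 = 0.5, 2.0, 1.0
partial = sum(interim_multipliers(d1, d2, d3, horizon=60))
total = total_multiplier(d1, d2, d3)
print(partial, total)
```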

There are several common mistakes made in setting up simultaneous equations systems

that include the following:

- Not fully checking for multicollinearity in the equations system.


- Attempting to interpret the estimated B and Γ coefficients as partial derivatives, rather

than looking at the reduced form G by K matrix π.

- Not effectively testing whether excluded exogenous variables are significant in at least

one other equation in the system.

- Not building into the solution procedure provisions for taking into account the number

of significant digits in the data.

The simeq code has unique design characteristics that allow solutions for some of these

problems. In the next sections, we will briefly outline some of these features.

Assume for a moment that X is a T by K matrix of observations of the exogenous variables, Y is a T by 1 vector of observations of the endogenous variable, and β is a K element array of OLS coefficients. The OLS solution for the estimated β from equation (2.1-8) is then $\hat{\beta} = (X'X)^{-1}X'Y$. The problem with this approach is that some accuracy is lost by forming the matrix X'X. The QR approach4 proceeds by operating directly on the matrix X to express it in terms of the upper triangular K by K matrix R and the T by T orthogonal matrix Q. X is factored as

$$
X = Q\begin{bmatrix} R \\ 0 \end{bmatrix} = [Q_1 \mid Q_2]\begin{bmatrix} R \\ 0 \end{bmatrix} = Q_1R \tag{4.1-11}
$$

Since Q'Q = I, then

$$
\hat{\beta} = (X'X)^{-1}X'Y = (R'Q_1'Q_1R)^{-1}R'Q_1'Y = (R'R)^{-1}R'Q_1'Y = R^{-1}Q_1'Y \tag{4.1-12}
$$
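The idea behind (4.1-11) and (4.1-12) can be sketched in Python without forming X'X. The sketch below uses modified Gram-Schmidt to build Q1 and R and then back-substitutes; this is a stand-in chosen for brevity and is not the routine used in simeq or the qr command. The function names and the data are hypothetical.

```python
def thin_qr(X):
    """Modified Gram-Schmidt: return (Q1 columns, R) with X = Q1 R."""
    T, K = len(X), len(X[0])
    V = [[X[t][k] for t in range(T)] for k in range(K)]  # columns of X
    Q, R = [], [[0.0] * K for _ in range(K)]
    for k in range(K):
        R[k][k] = sum(v * v for v in V[k]) ** 0.5
        if R[k][k] == 0.0:
            raise ValueError("X is rank deficient")
        q = [v / R[k][k] for v in V[k]]
        Q.append(q)
        for j in range(k + 1, K):
            R[k][j] = sum(a * b for a, b in zip(q, V[j]))
            V[j] = [b - R[k][j] * a for a, b in zip(q, V[j])]
    return Q, R

def qr_ols(X, y):
    """Solve R beta = Q1'y by back substitution."""
    Q, R = thin_qr(X)
    K = len(R)
    c = [sum(a * b for a, b in zip(q, y)) for q in Q]    # Q1'y
    beta = [0.0] * K
    for i in reversed(range(K)):
        s = c[i] - sum(R[i][j] * beta[j] for j in range(i + 1, K))
        beta[i] = s / R[i][i]
    return beta

# Hypothetical data: y = 2 + 3x exactly, with a constant in column 1.
X = [[1.0, float(x)] for x in range(1, 6)]
y = [2.0 + 3.0 * row[1] for row in X]
beta = qr_ols(X, y)
print(beta)
```

Only the triangular system in R is solved, so the squaring of the condition number that comes with X'X is avoided.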

4 A good discussion of the QR factorization is contained in Strang (1976). Other references

include Jennings (1980) and Dongarra, Bunch, Moler, and Stewart (1979).


Following Jennings (1980), we define the condition number of matrix X, C(X), as the square root of the ratio of the largest eigenvalue of X'X, $E_{\max}(X'X)$, to the smallest eigenvalue of X'X, $E_{\min}(X'X)$:

$$
C(X) = \sqrt{E_{\max}(X'X)/E_{\min}(X'X)} \tag{4.1-13}
$$

If $\|X\| = \sqrt{E_{\max}(X'X)}$, and X is square and nonsingular, then

$$
C(X) = \|X\| \, \|X^{-1}\| \tag{4.1-14}
$$

Throughout B34S, 1/C(X) is checked to test for rank problems. Jennings (1980) notes that C(X) can also be used as a measure of relative error. If μ is a measure of round-off error, then $\mu[C(X)]^2$ is the bound for the relative error of the calculated solution. On an IBM 370 running double precision, μ is approximately .1E-16. If C(X) is > .1E+8 (1/C(X) is < .1E-8), then $\mu[C(X)]^2 \geq 1$, meaning that no digits in the reported solution are significant. Jennings (1980) looks at the problem from another perspective. If matrix X has a round-off error of τX such that the actual X used is X+τX, then $\|\tau X\| / \|X\|$ must be less than 1/C(X) for a solution to exist. If

$$
\|\tau X\| / \|X\| = 1/C(X) \tag{4.1-15}
$$

then there exists a τX such that X + τX is singular.5 The user can inspect the estimate of the condition number to determine the degree of multicollinearity. Most programs only report problems when the matrix is singular. Inspection of C(X) gives warning of the degree of the problem. The simeq command contains the IPR parameter option with which the user can inform the program of the number of significant digits in X. This information is used to terminate the iterative three-stage (ILS3) iterations when the relative change in the solution is within what would be expected, given the number of significant digits in the data.
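To make the role of C(X) concrete, the sketch below computes (4.1-13) for a matrix with two columns, where the eigenvalues of X'X have a closed form. The function name, the data, and the round-off value `mu` are assumptions made for illustration; this is not the calculation performed inside B34S.

```python
import math

def condition_number(X):
    """C(X) = sqrt(Emax(X'X) / Emin(X'X)) for a T x 2 matrix X."""
    a = sum(r[0] * r[0] for r in X)      # elements of the 2 x 2 matrix X'X
    b = sum(r[0] * r[1] for r in X)
    d = sum(r[1] * r[1] for r in X)
    mean = (a + d) / 2.0
    half = math.hypot((a - d) / 2.0, b)
    e_max, e_min = mean + half, mean - half
    if e_min <= 0.0:
        return math.inf                  # numerically singular
    return math.sqrt(e_max / e_min)

orthogonal = [[1.0, 0.0], [0.0, 1.0], [0.0, 0.0]]
near_collinear = [[1.0, 1.0], [1.0, 1.001], [1.0, 0.999]]

c_orth = condition_number(orthogonal)
c_near = condition_number(near_collinear)

mu = 1e-16                               # assumed round-off unit
print(c_orth, c_near, mu * c_near ** 2)  # last value: relative error bound
```

The second matrix is far from singular in the usual pass or fail sense, yet its condition number is already in the thousands, which is the kind of early warning the text describes.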

Jennings (1980) notes that the relative error of the QR solution to the OLS problem given in equation (4.1-12) has the form

$$
n_1 C(X) + n_2 C(X)^2 \left(\|\hat{e}\| / \|\hat{\beta}\|\right) \tag{4.1-16}
$$

where $n_1$ and $n_2$ are of the order of machine precision and $\|\hat{e}\|$ and $\|\hat{\beta}\|$ are the lengths of the estimated residual and estimated coefficients, respectively. (The length or L2NORM of a vector $e$ is defined as $\sqrt{\sum_i e_i^2}$.) Equation (4.1-16) indicates that the closer the model fits, the smaller the relative error of the computed solution. An estimate of this relative error is made for OLS, LIML and 2SLS estimators reported by simeq.

5 For more detail on techniques used in simeq to avoid numerical error in the calculations arising from differences in the means of the data, see Jennings (1980).

4.2 Estimation of OLS, LIML, LS2, LS3, and ILS3

For OLS estimation of a system of equations, simeq uses the QR approach discussed

earlier. If the reduced option is used, once the structural coefficients B and Γ in equation (4.1-3)

are known, the constrained reduced form coefficients π from equation (4.1-4) are displayed. If B

and Γ are estimated using OLS, and all structural equations are exactly identified, then the

constraints on π imposed from the structural coefficients B and Γ are not binding and π could be

estimated directly with OLS or indirectly via (4.1-4). However, if one or more of the equations in the structural equations system (4.1-2) are overidentified, π must be estimated as $-B^{-1}\Gamma$.

Although the reduced-form coefficients π exist and may be calculated from any set of

structural estimates B and Γ, in practice it is not desirable to report those derived from OLS

estimation because in the presence of endogenous variables on the right-hand side of an

equation, the OLS assumption that the error term is orthogonal with the explanatory variables is

violated. Since OLS imposes this constraint as a part of the estimation process, the resulting

estimated B and Γ are biased.

OLS is often used as a benchmark because, from among the class of all linear estimators, it produces minimum variance. The loss in predictive power of LIML and

2SLS has to be weighed against the fact that OLS produces biased estimates. If reduced-form

coefficients are desired, identities in the system must be entered. The number of identities plus

the number of estimated equations must equal the number of endogenous variables in the model.

The simeq command requires that the number of model sentences and identity sentences is

equal to the number of variables listed in the endogenous sentence.

The 2SLS estimator first estimates all endogenous variables as a function of all

exogenous variables. This is equivalent to estimating an unconstrained form of the reduced-form

equation (4.1-4). Next, in stage 2 the estimated values of the endogenous variables on the right in

the jth equation Yj* are used in place of the actual values of the endogenous variables Yj on the

right to estimate equation (4.1-2). Since the estimated values of the endogenous variables on the

right are only a function of exogenous variables, the theory suggests they can be assumed to be

orthogonal with the population error, and OLS can be safely used for the second stage. In terms

of our prior notation, the two-stage estimator for the first equation is

$$
\begin{bmatrix} b_{11} \\ \vdots \\ b_{1g} \\ \gamma_{11} \\ \vdots \\ \gamma_{1k} \end{bmatrix}
= \{(\hat{Y}_1 \; X_1)'(\hat{Y}_1 \; X_1)\}^{-1}(\hat{Y}_1 \; X_1)'y_1
= \begin{bmatrix} \hat{Y}_1'\hat{Y}_1 & \hat{Y}_1'X_1 \\ X_1'\hat{Y}_1 & X_1'X_1 \end{bmatrix}^{-1}
\begin{bmatrix} \hat{Y}_1'y_1 \\ X_1'y_1 \end{bmatrix}
\tag{4.2-1}
$$

where $\hat{Y}_1$ is the matrix of predicted endogenous variables in the first equation and $X_1$ is the matrix of exogenous variables in the first equation. For further details on this traditional estimation approach, see Pindyck and Rubinfeld (1981, 345-347).

The QR approach used by Jennings (1980) involves estimating equation (4.2-1) as the solution of

$$
Z_j'(XX^+)Z_j\,\delta_j = Z_j'(XX^+)y_j \tag{4.2-2}
$$

for $\delta_j$, where $\delta_j' = \{(b_{11}, \dots, b_{1g})', (\gamma_{11}, \dots, \gamma_{1k})'\}$, $Z_j = [X_j \mid Y_j]$ and $X^+$ is the pseudoinverse6 of X. $Z_j$ consists of the X and Y variables in the jth equation. $XX^+$ is not calculated directly but is expressed in terms of the QR factorization of X. By working directly on X, and not forming X'X, substantial accuracy is obtained. Jennings proceeds by writing

$$
XX^+ = Q\begin{bmatrix} I_r & 0 \\ 0 & 0 \end{bmatrix}Q' \tag{4.2-3}
$$

where $I_r$ is the r by r identity matrix and r is the rank of X. Using equation (4.2-3), equation (4.2-2) becomes

$$
\hat{Z}_j'\begin{bmatrix} I_r & 0 \\ 0 & 0 \end{bmatrix}\hat{Z}_j\,\delta_j
= \hat{Z}_j'\begin{bmatrix} I_r & 0 \\ 0 & 0 \end{bmatrix}\hat{y}_j \tag{4.2-4}
$$

where $\hat{Z}_j = Q'Z_j$ and $\hat{y}_j = Q'y_j$.

6 If we define $X^+$ as the pseudoinverse of the T by K matrix X, then it can be shown (Strang 1976, 138, exercise 3.4.5) that the following four conditions hold: 1. $XX^+X = X$; 2. $X^+XX^+ = X^+$; 3. $(XX^+)' = XX^+$; and 4. $(X^+X)' = X^+X$. The pseudoinverse can be obtained from the singular value decomposition or the QR factorization of X.

The 2SLS covariance matrix can be estimated as

$$
\left(\|e_j\|^2 / df\right)(Z_j'XX^+Z_j)^{-1} \tag{4.2-5}
$$

where $df$ is the degrees of freedom and $\|e_j\|^2$ is the residual sum of squares (or the square of the L2NORM of the residual). There is substantial controversy in the literature about the appropriate value for $df$. Since the SEs of the estimated 2SLS coefficients are known only asymptotically, Theil (1971) suggests that $df$ be set equal to T, the number of observations used to estimate the model. Others suggest that $df$ be set to T-K, similar to what is used in OLS. If Theil's suggestion is used, the estimated SEs of the coefficients are smaller, so the T-K option is the more conservative choice. The simeq command produces both estimates of the coefficient standard errors to facilitate comparison with other programs and researcher preferences.
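The size of the difference between the two conventions follows directly from (4.2-5), since only the scalar divisor changes. As a worked illustration with hypothetical values T = 20 and K = 3:

```latex
\frac{SE_{df=T}}{SE_{df=T-K}}
  = \sqrt{\frac{\|e_j\|^2/T}{\|e_j\|^2/(T-K)}}
  = \sqrt{\frac{T-K}{T}},
\qquad
T = 20,\; K = 3:\quad \sqrt{17/20} \approx 0.922 .
```

In this case the standard errors based on T are about 8 percent smaller than those based on T-K, and the gap shrinks as T grows relative to K.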

Two-stage least squares estimation of an equation with endogenous variables on the right,

in contrast with OLS estimation, in theory produces consistent coefficients at the cost of some loss of efficiency. If a large system is estimated, it is often impossible to use all exogenous variables in the system because of loss of degrees of freedom. The usual practice is to select a subset of the exogenous variables. The greater the number of exogenous variables relative to the degrees of freedom, the closer the predicted Y variables on the right are to the raw Y variables on the right. In this situation, the 2SLS estimator sum of squares of residuals will approach the OLS estimator sum of squares of residuals. Such an estimator will lose the consistency property of the 2SLS

estimator. Usual econometric practice is to use OLS and 2SLS and compare the results to see

how sensitive the OLS results are to simultaneity problems.

While 2SLS results are sensitive to the variable that is used to normalize the system,

limited information maximum likelihood (LIML) estimation, which can be used in place of

2SLS, is not so sensitive. Kmenta (1971, 568-570) has a clear discussion which is summarized

below. The LIML estimator,7 which is hard to explain in simple terms, involves selecting values

for b and δ for each equation such that L is minimized where L = SSE1 / SSE. We define SSE1

as the residual variance of estimating a weighted average of the y variables in the equation on all

exogenous variables in the equation, while SSE is the residual variance of estimating a weighted

average of the y variables on all the exogenous variables in the system. Since SSE ≤ SSE1, L is bounded below at 1. The difficulty in LIML estimation is selecting the weights for combining the y variables in the equation. Assume equation 1 of (4.1-1)

$$
b_{11}y_{1i} + \dots + b_{1G}y_{Gi} + \gamma_{11}x_{1i} + \dots + \gamma_{1K}x_{Ki} = u_{1i} \tag{4.2-6}
$$

Ignoring time subscripts, we can define

$$
y_1^* = y_1 + [b_{12}y_2 + \dots + b_{1G}y_G] \tag{4.2-7}
$$

If we define $Y_1^* = [y_1, \dots, y_G]$ and we knew the vector $B_1^{*\prime} = [1, b_{12}, \dots, b_{1G}]$, we would know $y_1^*$ since $y_1^* = Y_1^*B_1^*$. We could then regress $y_1^*$ on all x variables on the right in that equation and call the residual variance SSE1, and next regress $y_1^*$ on all x variables in the system and call the residual variance SSE. If we define $X_1$ as a matrix consisting of the columns of the x variables on the right, $X_1 = [x_1, \dots, x_K]$, and we knew $B_1^*$, then we could estimate $\Gamma_1 = [\gamma_{11}, \dots, \gamma_{1K}]$ as

$$
\Gamma_1 = -[X_1'X_1]^{-1}X_1'y_1^* = -(X_1'X_1)^{-1}X_1'Y_1^*B_1^* \tag{4.2-8}
$$

However, we do not know $B_1^*$. If we define

$$
W_1^* = Y_1^{*\prime}Y_1^* - (Y_1^{*\prime}X_1)(X_1'X_1)^{-1}X_1'Y_1^* \tag{4.2-9}
$$

$$
W_1 = Y_1^{*\prime}Y_1^* - (Y_1^{*\prime}X)(X'X)^{-1}X'Y_1^* \tag{4.2-10}
$$

where X is the matrix of all X variables in the system, then L can be written as

$$
L = [B_1^{*\prime}W_1^*B_1^*] / [B_1^{*\prime}W_1B_1^*] \tag{4.2-11}
$$

Minimizing L implies that

$$
(W_1^* - LW_1)B_1^* = 0 \tag{4.2-12}
$$

7 Kmenta (1971, 565-572) has one of the clearest descriptions. The discussion here complements that material.

The LIML estimator uses eigenvalue analysis to select the vector $B_1^*$ such that L is minimized. This calculation involves solving the system

$$
\det(W_1^* - LW_1) = 0 \tag{4.2-13}
$$

for the smallest root L, which we will call λ. This root can be substituted back into equation (4.2-12) to get $B_1^*$ and into equation (4.2-8) to get $\Gamma_1$. Jennings shows that equation (4.2-13) can be rewritten as

$$
\det \left| Y_1^{*\prime}\{(I - X_1X_1^+) - \lambda(I - XX^+)\}Y_1^* \right| = 0 . \tag{4.2-14}
$$
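For an equation with two endogenous variables, the determinant condition is a quadratic in the root and can be solved directly. The Python sketch below does this for made-up 2 by 2 matrices; the function name `smallest_root` and the matrix values are hypothetical, and the textbook determinant form is used rather than the factorized form that Jennings develops.

```python
import math

def smallest_root(Ws, W):
    """Smallest root of det(Ws - lam * W) = 0 for 2 x 2 matrices."""
    # Expand the determinant as a * lam^2 + b * lam + c.
    a = W[0][0] * W[1][1] - W[0][1] * W[1][0]
    b = -(Ws[0][0] * W[1][1] + W[0][0] * Ws[1][1]
          - Ws[0][1] * W[1][0] - W[0][1] * Ws[1][0])
    c = Ws[0][0] * Ws[1][1] - Ws[0][1] * Ws[1][0]
    disc = b * b - 4.0 * a * c
    if a <= 0.0 or disc < 0.0:
        raise ValueError("W must be positive definite with real roots")
    return (-b - math.sqrt(disc)) / (2.0 * a)

# Hypothetical moment matrices; W1_star - W1 is positive semidefinite.
W1_star = [[4.0, 1.0], [1.0, 3.0]]
W1 = [[2.0, 0.0], [0.0, 1.0]]

lam = smallest_root(W1_star, W1)

# Normalize the first weight to 1 and solve row 1 of (W1_star - lam*W1) B = 0.
b12 = -(W1_star[0][0] - lam * W1[0][0]) / (W1_star[0][1] - lam * W1[0][1])

# Row 2 of the same system should then be satisfied as well.
check = (W1_star[1][0] - lam * W1[1][0]) + (W1_star[1][1] - lam * W1[1][1]) * b12
print(lam, b12, check)
```

The root is at least 1 here because the matrix built from the included exogenous variables exceeds the one built from all exogenous variables by a positive semidefinite matrix.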

Further factorizations lead to accuracy improvements and speed over the traditional methods of

solution outlined in Johnston (1984), Kmenta (1971), and other books. Jennings (1973, 1980)

briefly discusses tests made for computational accuracy, given the number of significant digits in

the data and various tests for nonunique solutions. One of the main objectives of the simeq code


was to be able to inform the user if there were problems in identification in theory and in

practice. Since the LIML standard errors are known only asymptotically and are, in fact, equal

to the 2SLS estimated standard errors, these are used for both the 2SLS and LIML estimators.

In the first stage of 2SLS, π is the unconstrained reduced form

$$
Y = \pi X + V \tag{4.2-15}
$$

which is estimated to obtain the predicted variables $\hat{Y}$. 2SLS, OLS, and LIML are all special cases of the Theil (1971) k class estimators. The general formula for the k class estimator for the first equation (Kmenta 1971, 565) is

$$
\begin{bmatrix} \hat{B}_1^{(k)} \\ \hat{\Gamma}_1^{(k)} \end{bmatrix}
= \begin{bmatrix} Y_1'Y_1 - k\hat{V}_1'\hat{V}_1 & Y_1'X_1 \\ X_1'Y_1 & X_1'X_1 \end{bmatrix}^{-1}
\begin{bmatrix} Y_1'y_1 - k\hat{V}_1'y_1 \\ X_1'y_1 \end{bmatrix}
\tag{4.2-16}
$$

where $\hat{V}_1$ is the predicted residual from estimating all but the 1st y variable in equation (4.2-15), $\hat{Y}_1 = Y_1 - \hat{V}_1$, and $X_1$ is the X variables on the right-hand side of the first equation. Equation (4.2-16) follows directly from (4.2-1). If k=0, equation (4.2-16) is the formula for OLS estimation of the first equation. If k=1, equation (4.2-16) is the formula for 2SLS estimation of the first equation and can be transformed to equation (4.2-1). If k = λ, the minimum root of equation (4.2-13), equation (4.2-16) is the formula for the LIML estimator (Theil 1971, 504). Hence, OLS, 2SLS, and LIML are all members of the k class of estimators.
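The way k moves the estimator between OLS and 2SLS can be seen in the simplest possible case: one right-hand-side endogenous variable, no included exogenous variables, and a single instrument. The Python sketch below is illustrative only; the function `k_class`, the variable names and the data are hypothetical, and the scalar form is a special case of the matrix formula rather than a general implementation.

```python
def dot(a, b):
    return sum(x * y for x, y in zip(a, b))

def k_class(y1, Y1, z, k):
    """Scalar k class estimate of b in y1 = b*Y1 + u with one instrument z."""
    slope = dot(z, Y1) / dot(z, z)                    # first-stage slope
    V1 = [Y - slope * zi for Y, zi in zip(Y1, z)]     # first-stage residual
    return (dot(Y1, y1) - k * dot(V1, y1)) / (dot(Y1, Y1) - k * dot(V1, V1))

# Hypothetical data.
z  = [1.0, 2.0, 3.0, 4.0, 5.0]
Y1 = [1.5, 1.8, 3.4, 3.9, 5.3]
y1 = [2.0, 2.9, 4.1, 5.2, 5.8]

b_ols  = k_class(y1, Y1, z, 0.0)   # k = 0 gives OLS
b_2sls = k_class(y1, Y1, z, 1.0)   # k = 1 gives 2SLS
b_iv   = dot(z, y1) / dot(z, Y1)   # simple IV, equal to 2SLS in this case
print(b_ols, b_2sls, b_iv)
```

With k = 1 the residual terms remove the part of Y1 that is not explained by the instrument, which is why the result coincides with the instrumental variable estimate.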

Three-stage least squares utilizes the covariance of the residuals across equations from

the estimated 2SLS model to improve the estimated coefficients B and Γ. If the model has only exogenous variables on the right-hand side (B = I), the OLS estimates can be used to calculate the covariance of the residuals across equations, and the resulting estimator is the seemingly unrelated regression model (SUR). In this discussion, we will look at the 3SLS model

only, since the SUR model is a special case. From (4.2-2) we rewrite the 2SLS estimator for the

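ith equation as

$$
\hat{\delta}_i = [Z_i'X(X'X)^{-1}X'Z_i]^{-1}Z_i'X(X'X)^{-1}X'y_i , \tag{4.2-17}
$$

which estimates the ith 2SLS equation

$$
y_i = Z_i\delta_i + u_i . \tag{4.2-18}
$$

If we define8 $(X'X)^{-1} = PP'$ and multiply equation (4.2-18) by P'X', we obtain

$$
P'X'y_i = P'X'Z_i\delta_i + P'X'u_i \tag{4.2-19}
$$

which can be written

$$
w_i = W_i\delta_i + \varepsilon_i \tag{4.2-20}
$$

where $w_i = P'X'y_i$, $W_i = P'X'Z_i$, and $\varepsilon_i = P'X'u_i$. If all G 2SLS equations are written as

$$
\begin{bmatrix} w_1 \\ w_2 \\ \vdots \\ w_G \end{bmatrix}
= \begin{bmatrix} W_1 & 0 & \cdots & 0 \\ 0 & W_2 & \cdots & 0 \\ \vdots & & \ddots & \vdots \\ 0 & 0 & \cdots & W_G \end{bmatrix}
\begin{bmatrix} \delta_1 \\ \delta_2 \\ \vdots \\ \delta_G \end{bmatrix}
+ \begin{bmatrix} \varepsilon_1 \\ \varepsilon_2 \\ \vdots \\ \varepsilon_G \end{bmatrix}
\tag{4.2-21}
$$

then the system can be written as

$$
w = W\delta + \varepsilon . \tag{4.2-22}
$$

For any pair of equations i and j

$$
E[\varepsilon_i\varepsilon_j'] = E[P'X'(u_iu_j')XP] = \sigma_{ij}P'X'XP = \sigma_{ij}I \tag{4.2-23}
$$

8 This discussion is based on material contained in Johnston (1984, 486).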

while the covariance of the error term for the system becomes

$$
\begin{bmatrix} \sigma_{11}I & \sigma_{12}I & \cdots & \sigma_{1G}I \\ \sigma_{21}I & \sigma_{22}I & \cdots & \sigma_{2G}I \\ \vdots & & & \vdots \\ \sigma_{G1}I & \sigma_{G2}I & \cdots & \sigma_{GG}I \end{bmatrix}
\tag{4.2-24}
$$

Equation (4.2-24) indicates that for each equation there is no heteroskedasticity, but that there is contemporaneous correlation of the residuals across equations. Equation (4.2-24) can be estimated from the 2SLS estimates of the residuals of each equation for 3SLS or the OLS estimates of the residuals of each equation for SUR models. Let

$$
\hat{V} = \hat{\Sigma} \otimes I \tag{4.2-25}
$$

be such an estimate. The 3SLS estimator of the system δ, where δ' = [B Γ], becomes

$$
\hat{\delta} = (W'\hat{V}^{-1}W)^{-1}W'\hat{V}^{-1}w \tag{4.2-26}
$$

Jennings (1980) uses two alternative approaches to solve (4.2-26) depending on whether the covariance of the 3SLS estimator

$$
\mathrm{Var}(\hat{\delta}) = (W'\hat{V}^{-1}W)^{-1} \tag{4.2-27}
$$

is required or not. In the former case, an orthogonal factorization method is used. In the latter case, to save space, the conjugate gradient iterative algorithm (Lanczos reduction) suggested by Paige and Saunders (1973) is used. This latter approach may or may not converge. For added detail see Jennings (1980). If the switch kcov=diag is used there will not be convergence issues, since the QR approach will be used. Since many software systems use inversion methods, slight differences in the estimated coefficients will be observed, because the QR approach is in theory more accurate. Implementation of the "textbook" approach is illustrated using the matrix command in section 4.5.

In a model with G equations, if the equation of interest is the jth equation, then assuming

the exogenous variables in the system are selected correctly and the jth equation is specified

correctly, 2SLS estimates are invariant to any other equation. 3SLS of the jth equation, in

contrast, is sensitive to the specification of other equations in the system since changes in other

equation specifications will alter the estimate of V and thus the 3SLS estimator of δ from

equation (4.2-26). Because of this fact, it is imperative that users first inspect the 2SLS estimates

closely. The constrained reduced form estimates, π, should be calculated from the OLS and 2SLS

models and compared. The differences show the effects of correcting for simultaneity. Next,

3SLS should be performed. A study of the resulting changes in δ and π will show the gain of

moving to a system-wide estimation procedure. Since changes in the functional form of one

equation i can possibly impact the estimates of another equation j, in this step of model building,

sensitivity analysis should be attempted. In a multiequation system, the movement from 2SLS to

3SLS often produces changes in the estimate of δi for one equation but not for another equation.

In a model in which all equations are over identified, in general the 3SLS estimators will differ

from the 2SLS estimators. If all equations are exactly identified, then V is a diagonal matrix

(Theil 1971, 511) and there is no gain for any equation from using 3SLS. In the test problem

from Kmenta (1971, 565), which is discussed in the next section, one equation is over identified

and one equation is exactly identified. In this case, only the exactly identified equation will be

changed by 3SLS. This is because the exactly identified equation gains from information in the

over identified equation but the reverse is not true. The over identified equation does not gain

from information from the exactly identified equation.
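The statement that 3SLS offers no gain when the estimated cross-equation covariance matrix is diagonal can be verified directly from (4.2-26). The short derivation below is a sketch under the assumption that the estimated covariance matrix has zero off-diagonal elements; it uses only the definitions of $w_i$, $W_i$ and $P$ given with (4.2-19) and (4.2-20).

```latex
\hat{V} = \hat{\Sigma} \otimes I, \quad
\hat{\Sigma} = \mathrm{diag}(\hat{\sigma}_{11}, \dots, \hat{\sigma}_{GG})
\;\Longrightarrow\;
W'\hat{V}^{-1}W = \mathrm{blockdiag}\!\left(\hat{\sigma}_{ii}^{-1} W_i'W_i\right), \quad
W'\hat{V}^{-1}w = \left[\hat{\sigma}_{ii}^{-1} W_i'w_i\right]

\hat{\delta}_i = (W_i'W_i)^{-1}W_i'w_i
  = [Z_i'XPP'X'Z_i]^{-1} Z_i'XPP'X'y_i
  = [Z_i'X(X'X)^{-1}X'Z_i]^{-1} Z_i'X(X'X)^{-1}X'y_i
```

The scalar $\hat{\sigma}_{ii}$ cancels equation by equation, and the last expression is the 2SLS estimator (4.2-17).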

In SUR models, if all equations contain the same variables, there is no gain over OLS

from going to SUR, since V is again a diagonal matrix. Just as the LIML method of estimation is

an alternative to 2SLS, the FIML is a more costly alternative to 3SLS and I3SLS.


FIML9 is a generalization of LIML for systems of models. Like LIML, it is invariant to

the variable used to normalize the model. FIML, in contrast with 3SLS, is highly nonlinear and,

as a consequence, much more costly to estimate. Because FIML is asymptotically equivalent to

3SLS (Theil 1971, 525) and the simeq code does not contain any major advantages over other

programs, the discussion of FIML is left to Theil (1971), Kmenta (1971) and Johnston (1984)

except for an annotated FIML example using the matrix command. In the next section, an

annotated output is presented.

Iterative 3SLS is an alternative final step in which the estimate of V is updated using the information from the 3SLS estimates. The problem then becomes when to stop iterating on the estimates of V. The simeq command uses the number of significant digits in the raw data (see the ipr parameter) and equation (4.1-8) to terminate the I3SLS iterations once the relative change is within what would be expected, given the number of significant digits in the raw data. If ipr is not set, the simeq command assumes ten digits.

9 The fiml section of the simeq command is the weakest link. In addition to what is probably a scaling error in the fiml standard errors, there often are convergence problems that appear to be data related. In view of this and the fact that 3SLS is an inexpensive substitute, users are encouraged to employ 3SLS and I3SLS in place of FIML. Future releases of B34S will endeavor to improve the FIML code or disable the option. The matrix command implementation of FIML, shown later in section 4.5, provides a look into how such a model might be implemented.


4.3 Examples

Using data on supply and demand from Kmenta (1971, 565), Table 4.3 shows b34s code to estimate models using OLS, LIML, 2SLS, 3SLS, and I3SLS. The reduced-form estimates for each model are calculated. To save space, not all output is shown. The results are the same, digit for digit, as those reported in Kmenta (1971, 582), which are shown in Table 4.4. Kmenta (1986, 712) reported results for the same problem with the same coefficients for all models except I3SLS for the supply equation. These answers are shown in Table 4.5. Note the use of the keywords ls2 for 2SLS, ls3 for 3SLS, and ils3 for I3SLS, since the b34s parser will not recognize 2SLS and 3SLS as keywords. The b34s setup in Table 4.3 also shows Rats, SAS and Stata commands with which it is possible to benchmark each software system. These results will be discussed in turn.


Table 4.3 B34S, Rats, SAS & Stata setups for ols, liml, ls2, ls3, and ils3 commands

    ==KMENTA1
    %b34slet runsimeq=1;
    %b34slet runsas  =1;
    %b34slet runrats =1;
    %b34slet runstata=1;
    B34sexec data nohead corr$
    Input q p d f a $
    Label q = 'Food consumption per head'$
    Label p = 'Ratio of food prices to consumer prices'$
    Label d = 'Disposable income in constant prices'$
    Label f = 'Ratio of t-1 years price to general p'$
    Label a = 'Time'$
    Comment=('Kmenta (1971) page 565 answers page 582')$
    Datacards$
     98.485 100.323  87.4  98.0  1   99.187 104.264  97.6  99.1  2
    102.163 103.435  96.7  99.1  3  101.504 104.506  98.2  98.1  4
    104.240  98.001  99.8 110.8  5  103.243  99.456 100.5 108.2  6
    103.993 101.066 103.2 105.6  7   99.900 104.763 107.8 109.8  8
    100.350  96.446  96.6 108.7  9  102.820  91.228  88.9 100.6 10
     95.435  93.085  75.1  81.0 11   92.424  98.801  76.9  68.6 12
     94.535 102.908  84.6  70.9 13   98.757  98.756  90.6  81.4 14
    105.797  95.119 103.1 102.3 15  100.225  98.451 105.1 105.0 16
    103.522  86.498  96.4 110.5 17   99.929 104.016 104.4  92.5 18
    105.223 105.769 110.7  89.3 19  106.232 113.490 127.1  93.0 20
    B34sreturn$
    B34seend$
    %b34sif(&runsimeq.ne.0)%then;
    B34sexec simeq printsys reduced ols liml ls2 ls3 ils3 kcov=diag ipr=6$
    Heading=('Test Case from Kmenta (1971) Pages 565 - 582 ' ) $
    Exogenous constant d f a $
    Endogenous p q $
    Model lvar=q rvar=(constant p d)   Name=('Demand Equation')$
    Model lvar=q rvar=(constant p f a) name=('Supply Equation')$
    B34seend$
    %b34sendif;

    %b34sif(&runsas.ne.0)%then;
    B34SEXEC OPTIONS OPEN('testsas.sas') UNIT(29) DISP=UNKNOWN$ B34SRUN$
    B34SEXEC OPTIONS CLEAN(29) $ B34SEEND$
    B34SEXEC PGMCALL IDATA=29 ICNTRL=29$
       SAS $
    PGMCARDS$
    proc means; run;
    proc syslin 3sls reduced;
       instruments d f a constant;
       endogenous p q;
       demand: model q = p d;
       supply: model q = p f a;
    run;
    proc syslin it3sls reduced;
       instruments d f a constant;
       endogenous p q;
       demand: model q = p d;
       supply: model q = p f a;
    run;
    B34SRETURN$
    B34SRUN $
    B34SEXEC OPTIONS CLOSE(29)$ B34SRUN$
    /$ The next card has to be modified to point to SAS location
    /$ Be sure and wait until SAS gets done before letting B34S resume
    B34SEXEC OPTIONS dodos('start /w /r sas testsas')
                     dounix('sas testsas')$
    B34SRUN$
    B34SEXEC OPTIONS NPAGEOUT NOHEADER
       WRITEOUT(' ','Output from SAS',' ',' ')
       WRITELOG(' ','Output from SAS',' ',' ')
       COPYFOUT('testsas.lst')
       COPYFLOG('testsas.log')
       dodos('erase testsas.sas','erase testsas.lst','erase testsas.log')
       dounix('rm testsas.sas','rm testsas.lst','rm testsas.log')$
    B34SRUN$
    %b34sendif;

    %b34sif(&runrats.ne.0)%then;
    B34SEXEC OPTIONS HEADER$ B34SRUN$
    b34sexec options open('rats.dat') unit(28) disp=unknown$ b34srun$
    b34sexec options open('rats.in') unit(29) disp=unknown$ b34srun$
    b34sexec options clean(28)$ b34srun$
    b34sexec options clean(29)$ b34srun$
    b34sexec pgmcall$
    rats passasts
    pcomments('* ',
    '* Data passed from B34S(r) system to RATS',
    '* ',
    "display @1 %dateandtime() @33 ' Rats Version ' %ratsversion()"
    '* ') $
    PGMCARDS$
    *
    * heading=('test case from kmenta 1971 page 565 - 582 ' ) $
    * exogenous constant d f a $
    * endogenous p q $
    * model lvar=q rvar=(constant p d)   name=('demand eq.') $
    * model lvar=q rvar=(constant p f a) name=('supply eq.') $
    linreg q
    # constant p d
    linreg q
    # constant p f a
    instruments constant d f a
    linreg(inst) q
    # constant p d
    linreg(inst) q
    # constant p f a
    source d:\r\liml.src
    @liml q
    # constant p d
    @liml q
    # constant p f a
    equation demand q
    # constant p d
    equation supply q
    # constant p f a
    * Supply does not match known answers!!
    sur(inst,iterations=200) 2
    # demand resid1
    # supply resid2
    nonlin(parmset=structural) c0 c1 c2 d0 d1 d2 d3
    compute c0 = .1
    compute c1 = .1
    compute c2 = .1
    compute d0 = .1
    compute d1 = .1
    compute d2 = .1
    compute d3 = .1
    frml d_eq q = c0 + c1*p + c2*d
    frml s_eq q = d0 + d1*p + d2*f + d3*a
    nlsystem(inst,parmset=structural,outsigma=v) * * d_eq s_eq
    b34sreturn$
    b34srun $
    b34sexec options close(28)$ b34srun$
    b34sexec options close(29)$ b34srun$
    b34sexec options
    /$   dodos(' rats386 rats.in rats.out ')
         dodos('start /w /r rats32s rats.in /run')
         dounix('rats rats.in rats.out')$ B34SRUN$
    b34sexec options npageout
         WRITEOUT('Output from RATS',' ',' ')
         COPYFOUT('rats.out')
         dodos('ERASE rats.in','ERASE rats.out','ERASE rats.dat')
         dounix('rm rats.in','rm rats.out','rm rats.dat')
         $
    B34SRUN$
    %b34sendif;
    %b34sif(&runstata.ne.0)%then;
    /$ This name is required unless filename option used

    b34sexec options open('statdata.do') unit(28) disp=unknown$ b34srun$
    b34sexec options clean(28)$ b34srun$
    b34sexec options open('stata.do') unit(29) disp=unknown$ b34srun$
    b34sexec options clean(29)$ b34srun$
    b34sexec pgmcall idata=28 icntrl=29$
    stata$
    pgmcards$
    // uncomment if do not use /e
    // log using stata.log, text
    // version info
    about
    describe
    summarize
    reg3 (q p d) (q p f a), 2sls endog(p)
    reg3 (q p d) (q p f a), 3sls endog(p)
    reg3 (q p d) (q p f a), ireg3 endog(p)
    b34sreturn$
    b34seend$
    b34sexec options close(28); b34srun;
    b34sexec options close(29); b34srun;
    b34sexec options dodos('stata /e do stata.do');
    b34srun;
    b34sexec options npageout
       writeout('output from stata',' ',' ')
       copyfout('stata.log')
       dodos('erase stata.do','erase stata.log','erase statdata.do') $
    b34srun$
    %b34sendif;
    ==


The OLS results from b34s match Kmenta to every digit and are shown next:

    Test Case from Kmenta (1971) Pages 565 - 582

    Summary of Input Parameters and Model

    Number of systems to be estimated - - -     2
    Number of identities  - - - - - - - - -     0
    Number of exogenous variables - - - - -     4
    Number of endogenous variables  - - - -     2
    Number of data points in time - - - - -    20
    Maximum number of unknowns per system -     4
    Print Parameter - - - - - - - - - - - -     2
    Solutions wanted 0 => no, 1 => yes
    Reduced form coefficients - - - - - - -     1
    Ordinary Least Squares  - - - - - - - -     1
    LIMLE Solution  - - - - - - - - - - - -     1
    Two Stage Least Squares - - - - - - - -     1
    Three Stage Least Squares - - - - - - -     1
    Three Stage Covariance Matrix - - - - -     1
    Iterated Three Stage Least Squares  - -     1
    Covariance Matrix for I3SLSQ  - - - - -     1
    Maximum number of iterations  - - - - -    25
    Functional Minimization 3SLSQ - - - - -     0
    Covariance Matrix for Functional Min. -     0

    Systems described by the following columns of data

    Name of the System    LHS    No. X   No. Y   (Variables)
    Demand Equation       2 Q      2       1     1 CONSTANT   1 P   2 D
    Supply Equation       2 Q      3       1     1 CONSTANT   1 P   3 F   4 A

    B34S 8.10R   (D:M:Y) 11/ 4/04   (H:M:S) 11:13:19   SIMEQ STEP   PAGE 4

    Test Case from Kmenta (1971) Pages 565 - 582

    Least Squares Solution for System Number   1   Demand Equation
    Condition Number of Matrix is greater than     21.04911571706159
    Relative Numerical Error in the Solution       1.301987681166638E-11
    LHS Endogenous Variable No.   2   Q

    Exogenous Variables (Predetermined)       Std. Error         t
     1 CONSTANT     99.89542                  7.519362          13.28509
     2 D            0.3346356                 0.4542183E-01      7.367285
    Endogenous Variables (Jointly Dependent)  Std. Error         t
     3 P           -0.3162988                 0.9067741E-01     -3.488177

    Residual Variance for Structural Disturbances   3.725391173733892
    Ratio of Norm Residual to Norm LHS              1.762488253954560E-02

    Covariance Matrix of Estimated Parameters
                     CONSTANT 1     D 2            P 3
    CONSTANT  1      56.54
    D         2      0.3216E-01     0.2063E-02
    P         3     -0.5948        -0.2333E-02     0.8222E-02

    Correlation Matrix of Estimated Parameters
                     CONSTANT 1     D 2            P 3
    CONSTANT  1      1.000
    D         2      0.9417E-01     1.000
    P         3     -0.8724        -0.5665         1.000

    * * * * * * * * * * * * * * * * * * * * * * * * * * * * * * * * * * * * * * * *

    Test Case from Kmenta (1971) Pages 565 - 582

    Least Squares Solution for System Number   2   Supply Equation
    Condition Number of Matrix is greater than     17.67594711864223
    Relative Numerical Error in the Solution       1.318741471618151E-11
    LHS Endogenous Variable No.   2   Q

    Exogenous Variables (Predetermined)       Std. Error         t
     1 CONSTANT     58.27543                  11.46291           5.083825
     2 F            0.2481333                 0.4618785E-01      5.372263
     3 A            0.2483023                 0.9751777E-01      2.546227
    Endogenous Variables (Jointly Dependent)  Std. Error         t
     4 P            0.1603666                 0.9488394E-01      1.690134

    Residual Variance for Structural Disturbances   5.784441135907554
    Ratio of Norm Residual to Norm LHS              2.130622575072544E-02

    Covariance Matrix of Estimated Parameters
                     CONSTANT 1     F 2            A 3            P 4
    CONSTANT  1      131.4
    F         2     -0.3044         0.2133E-02
    A         3     -0.2792         0.1316E-02     0.9510E-02
    P         4     -0.9875         0.8440E-03     0.5220E-03     0.9003E-02

    Correlation Matrix of Estimated Parameters
                     CONSTANT 1     F 2            A 3            P 4
    CONSTANT  1      1.000
    F         2     -0.5749         1.000
    A         3     -0.2498         0.2921         1.000
    P         4     -0.9079         0.1926         0.5642E-01     1.000

    * * * * * * * * * * * * * * * * * * * * * * * * * * * * * * * * * * * * * * * *

    Test Case from Kmenta (1971) Pages 565 - 582

    Contemporaneous Covariance of Residuals (Structural Disturbances)
    For Least Squares Solution.
    Condition Number of residual columns,   2.664758
                     Demand E 1     Supply E 2
    Demand E  1      3.167
    Supply E  2      3.411          4.628

    Correlation Matrix of Residuals
                     Demand E 1     Supply E 2
    Demand E  1      1.000
    Supply E  2      0.8912         1.000

    Test Case from Kmenta (1971) Pages 565 - 582

    Coefficients of the Reduced Form Equations.
    Least Squares Solution.
    Condition number of matrix used to find the reduced form coefficients is no smaller than   4.195815340351579
                     CONSTANT 1     D 2            F 3            A 4
    P         1      87.31          0.7020        -0.5206        -0.5209
    Q         2      72.28          0.1126         0.1647         0.1648

    Mean sum of squares of residuals for the reduced form equations.
    1  P   0.42748D+01
    2  Q   0.39192D+01

    Condition Number of columns of exogenous variables,   11.845

For each estimated equation, the condition number of the matrix, equation (4.1-7), and the relative numerical error in the solution, equation (4.1-8), are given. The relative numerical errors for the demand and supply equations were .1302E-10 and .13187E-10, respectively. Estimated coefficients agree with Kmenta (1971, 582). From the estimated B and Γ coefficients, the constrained reduced form π coefficients are calculated. The condition number of the exogenous columns, .11845E+2, shows little multicollinearity among the exogenous variables.

The next outputs show the corresponding estimates for LIML, 2SLS, and 3SLS. As was discussed earlier, since the asymptotic SEs for LIML are the same as for 2SLS, the simeq command does not print these values. Kmenta, however, reports standard errors on the LIML estimates. Note that b34s reports both the large and small sample standard errors.
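The relation between the two sets of standard errors can be checked against the printed 2SLS values. The sketch below assumes, as an inference from the printed numbers rather than from the simeq source code, that the "Theil SE" equals the "Std. Error" rescaled by the square root of (T-K)/T, where K is the number of coefficients in the equation. The helper name `rescale` is hypothetical.

```python
import math

T = 20  # number of observations in the Kmenta data

# (Std. Error, Theil SE, K) copied from the 2SLS output for selected coefficients.
rows = {
    "demand CONSTANT": (7.920838, 7.302652, 3),
    "demand P": (0.09648429, 0.08895412, 3),
    "supply CONSTANT": (12.01053, 10.74254, 4),
    "supply P": (0.09993385, 0.08938355, 4),
}

def rescale(se, k, t=T):
    """Convert an SE based on divisor T-K to one based on divisor T (assumed relation)."""
    return se * math.sqrt((t - k) / t)

# Largest relative gap between the rescaled Std. Error and the printed Theil SE.
max_gap = max(abs(rescale(se, k) - theil) / theil for se, theil, k in rows.values())
print(f"largest relative gap: {max_gap:.2e}")
```

A gap at the level of printing precision is consistent with the two columns differing only in the divisor used for the residual variance.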

    Test Case from Kmenta (1971) Pages 565 - 582

    Limited Information - Maximum Likelihood Solution f   1   Demand Equation
    Rank and Condition Number of Exogenous Columns                          2   8.5174634
    Rank and Condition Number of Endogenous Variables orthogonal to X(K)    2   6.5593694
    Rank and Condition Number of Endogenous Variables orthogonal to X       2   2.3005812
    Value of LIML Parameter is                      1.173867141559841
    Condition Number of Matrix is greater than      8.517463415017575
    Relative Numerical Error in the Solution        4.487883690647531E-12
    LHS Endogenous Variable No.   2   Q
    Standard Deviation Equals 2SLSQ Standard Deviation.

    Exogenous Variables (Predetermined)
     1 CONSTANT     93.61922
     2 D            0.3100134
    Endogenous Variables (Jointly Dependent)
     3 P           -0.2295381

    Residual Variance for Structural Disturbances   3.926009688207962
    Ratio of Norm Residual to Norm LHS              1.809322459330604E-02

    Test Case from Kmenta (1971) Pages 565 - 582

    Limited Information - Maximum Likelihood Solution f   2   Supply Equation
    Rank and Condition Number of Exogenous Columns                          3   8.2098363
    Rank and Condition Number of Endogenous Variables orthogonal to X(K)    1   1.0000000
    Rank and Condition Number of Endogenous Variables orthogonal to X       2   1.0000000
    Value of LIML Parameter is                      1.000000000000000
    Condition Number of Matrix is greater than      8.209836250820180
    Relative Numerical Error in the Solution        4.943047984855735E-12
    LHS Endogenous Variable No.   2   Q
    Standard Deviation Equals 2SLSQ Standard Deviation.

    Exogenous Variables (Predetermined)
     1 CONSTANT     49.53244
     2 F            0.2556057
     3 A            0.2529242
    Endogenous Variables (Jointly Dependent)
     4 P            0.2400758

    Residual Variance for Structural Disturbances   6.039577731391617
    Ratio of Norm Residual to Norm LHS              2.177103664979223E-02

    Test Case from Kmenta (1971) Pages 565 - 582

    Contemporaneous Covariance of Residuals (Structural Disturbances)
    For LIMLE Solution.
    Condition Number of residual columns,   2.811594
                     Demand E 1     Supply E 2
    Demand E  1      3.337
    Supply E  2      3.629          4.832

    Correlation Matrix of Residuals
                     Demand E 1     Supply E 2
    Demand E  1      1.000
    Supply E  2      0.9038         1.000

    Test Case from Kmenta (1971) Pages 565 - 582

    Coefficients of the Reduced Form Equations.
    LIMLE Solution.
    Condition number of matrix used to find the reduced form coefficients is no smaller than   4.258817996669486
                     CONSTANT 1     D 2            F 3            A 4
    P         1      93.88          0.6601        -0.5443        -0.5386
    Q         2      72.07          0.1585         0.1249         0.1236

    Mean sum of squares of residuals for the reduced form equations.
    1  P   0.41286D+01
    2  Q   0.38401D+01

    Test Case from Kmenta (1971) Pages 565 - 582

    Two Stage Least Squares Solution for System Number   1   Demand Equation
    Condition Number of Matrix is greater than     21.98482284147018
    Relative Numerical Error in the Solution       1.411421448020441E-11
    LHS Endogenous Variable No.   2   Q

    Exogenous Variables (Predetermined)    Std. Error       t           Theil SE         Theil t
     1 CONSTANT     94.63330               7.920838         11.94738    7.302652         12.95876
     2 D            0.3139918              0.4694366E-01    6.688695    0.4327991E-01    7.254908
    Endogenous Variables (Jointly Dependent)
     3 P           -0.2435565              0.9648429E-01   -2.524313    0.8895412E-01   -2.738002

    Residual Variance for Structural Disturbances   3.866416929101937
    Ratio of Norm Residual to Norm LHS              1.795538131264630E-02

    Covariance Matrix of Estimated Parameters
                     CONSTANT 1     D 2            P 3
    CONSTANT  1      62.74
    D         2      0.4930E-01     0.2204E-02
    P         3     -0.6734        -0.2642E-02     0.9309E-02

    Correlation Matrix of Estimated Parameters
                     CONSTANT 1     D 2            P 3
    CONSTANT  1      1.000
    D         2      0.1326         1.000
    P         3     -0.8812        -0.5833         1.000

    Test Case from Kmenta (1971) Pages 565 - 582

    Two Stage Least Squares Solution for System Number   2   Supply Equation
    Condition Number of Matrix is greater than     18.21923089332271
    Relative Numerical Error in the Solution       1.431397195953368E-11
    LHS Endogenous Variable No.   2   Q

    Exogenous Variables (Predetermined)    Std. Error       t           Theil SE         Theil t
     1 CONSTANT     49.53244               12.01053         4.124086    10.74254         4.610868
     2 F            0.2556057              0.4725007E-01    5.409637    0.4226175E-01    6.048158
     3 A            0.2529242              0.9965509E-01    2.537996    0.8913422E-01    2.837565
    Endogenous Variables (Jointly Dependent)
     4 P            0.2400758              0.9993385E-01    2.402347    0.8938355E-01    2.685905

    Residual Variance for Structural Disturbances   6.039577731391617
    Ratio of Norm Residual to Norm LHS              2.177103664979223E-02

    Covariance Matrix of Estimated Parameters
                     CONSTANT 1     F 2            A 3            P 4
    CONSTANT  1      144.3
    F         2     -0.3238         0.2233E-02
    A         3     -0.2952         0.1377E-02     0.9931E-02
    P         4     -1.095          0.9362E-03     0.5791E-03     0.9987E-02

    Correlation Matrix of Estimated Parameters
                     CONSTANT 1     F 2            A 3            P 4
    CONSTANT  1      1.000
    F         2     -0.5706         1.000
    A         3     -0.2467         0.2924         1.000
    P         4     -0.9126         0.1983         0.5815E-01     1.000

    Test Case from Kmenta (1971) Pages 565 - 582

    Contemporaneous Covariance of Residuals (Structural Disturbances)
    For Two Stage Least Squares Solution.
    Condition Number of residual columns,   2.804709
                     Demand E 1     Supply E 2
    Demand E  1      3.286
    Supply E  2      3.593          4.832

    Correlation Matrix of Residuals
                     Demand E 1     Supply E 2
    Demand E  1      1.000
    Supply E  2      0.9017         1.000

    Test Case from Kmenta (1971) Pages 565 - 582

    Coefficients of the Reduced Form Equations.
    Two Stage Least Squares Solution
    Condition number of matrix used to find the reduced form coefficients is no smaller than   4.135372945327849
                     CONSTANT 1     D 2            F 3            A 4
    P         1      93.25          0.6492        -0.5285        -0.5230
    Q         2      71.92          0.1559         0.1287         0.1274

    Mean sum of squares of residuals for the reduced form equations.
    1  P   0.39831D+01
    2  Q   0.38317D+01

    Condition number of the large matrix in Three Stage Least Squares   60.70221


    Test Case from Kmenta (1971) Pages 565 - 582

    Three Stage Least Squares Solution for System Number   1   Demand Equation
    LHS Endogenous Variable No.   2   Q

    Exogenous Variables (Predetermined)    Std. Error       t           Theil SE         Theil t
     1 CONSTANT     94.63330               7.920838         11.94738    7.302652         12.95876
     2 D            0.3139918              0.4694366E-01    6.688695    0.4327991E-01    7.254908
    Endogenous Variables (Jointly Dependent)
     3 P           -0.2435565              0.9648429E-01   -2.524313    0.8895412E-01   -2.738002

    Residual Variance (For Structural Disturbances)   3.286454

    Three Stage Least Squares Covariance for System   Demand Equation
                     CONSTANT 1     D 2            P 3
    CONSTANT  1      62.74
    D         2      0.4930E-01     0.2204E-02
    P         3     -0.6734        -0.2642E-02     0.9309E-02

    Three Stage Least Squares Solution for System Number   2   Supply Equation
    LHS Endogenous Variable No.   2   Q

    Exogenous Variables (Predetermined)    Std. Error       t           Theil SE         Theil t
     1 CONSTANT     52.11764               11.89337         4.382074    10.63776         4.899308
     2 F            0.2289775              0.4399381E-01    5.204767    0.3934926E-01    5.819106
     3 A            0.3579074              0.7288940E-01    4.910281    0.6519426E-01    5.489861
    Endogenous Variables (Jointly Dependent)
     4 P            0.2289322              0.9967317E-01    2.296828    0.8915039E-01    2.567932

    Residual Variance (For Structural Disturbances)   5.360809

    Three Stage Least Squares Covariance for System   Supply Equation
                     CONSTANT 1     F 2            A 3            P 4
    CONSTANT  1      141.5
    F         2     -0.2950         0.1935E-02
    A         3     -0.4090         0.2548E-02     0.5313E-02
    P         4     -1.083          0.8119E-03     0.1069E-02     0.9935E-02

    Test Case from Kmenta (1971) Pages 565 - 582

    Contemporaneous Covariance of Residuals (Structural Disturbances)
    For Three Stage Least Squares Solution.
    Condition Number of residual columns,   6.321462
                     Demand E 1     Supply E 2
    Demand E  1      3.286
    Supply E  2      4.111          5.361

    Correlation Matrix of Residuals
                     Demand E 1     Supply E 2
    Demand E  1      1.000
    Supply E  2      0.9794         1.000

    Test Case from Kmenta (1971) Pages 565 - 582

    Coefficients of the Reduced Form Equations.
    Three Stage Least Squares Solution using Orthogonal Factorization.
    Condition number of matrix used to find the reduced form coefficients is no smaller than   4.232905401139098
                     CONSTANT 1     D 2            F 3            A 4
    P         1      89.98          0.6645        -0.4846        -0.7575
    Q         2      72.72          0.1521         0.1180         0.1845

    Mean sum of squares of residuals for the reduced form equations.
    1  P   0.19065D+01
    2  Q   0.42494D+01

    Iterated Three Stage Least Squares Results are given next.


    Iteration begins for Iterated 3SLSQ.
    Condition number of the large matrix in Three Stage Least Squares   147.2220

    Test Case from Kmenta (1971) Pages 565 - 582

    Iterated Three Stage Least Squares Solution for System No.   1   Demand Equation
    LHS Endogenous Variable No.   2   Q

    Exogenous Variables (Predetermined)    Std. Error       t           Theil SE         Theil t
     1 CONSTANT     94.63330               7.920838         11.94738    7.302652         12.95876
     2 D            0.3139918              0.4694366E-01    6.688695    0.4327991E-01    7.254908
    Endogenous Variables (Jointly Dependent)
     3 P           -0.2435565              0.9648429E-01   -2.524313    0.8895412E-01   -2.738002

    Residual Variance (For Structural Disturbances)   3.286454

    Iterated Three Stage Least Squares Covariance for System   Demand Equation
                     CONSTANT 1     D 2            P 3
    CONSTANT  1      62.74
    D         2      0.4930E-01     0.2204E-02
    P         3     -0.6734        -0.2642E-02     0.9309E-02

    Iterated Three Stage Least Squares Solution for System No.   2   Supply Equation
    LHS Endogenous Variable No.   2   Q

    Exogenous Variables (Predetermined)    Std. Error       t           Theil SE         Theil t
     1 CONSTANT     52.55269               12.74080         4.124755    11.39572         4.611616
     2 F            0.2244964              0.4653972E-01    4.823758    0.4162639E-01    5.393126
     3 A            0.3755747              0.7166061E-01    5.241020    0.6409520E-01    5.859638
    Endogenous Variables (Jointly Dependent)
     4 P            0.2270569              0.1069194        2.123627    0.9563159E-01    2.374287

    Residual Variance (For Structural Disturbances)   5.565111

    Iterated Three Stage Least Squares Covariance for System   Supply Equation
                     CONSTANT 1     F 2            A 3            P 4
    CONSTANT  1      162.3
    F         2     -0.3336         0.2166E-02
    A         3     -0.4953         0.3185E-02     0.5135E-02
    P         4     -1.245          0.9086E-03     0.1336E-02     0.1143E-01

    Test Case from Kmenta (1971) Pages 565 - 582

    Contemporaneous Covariance of Residuals (Structural Disturbances)
    For Iterated Three Stage Least Squares Solution.
    Condition Number of residual columns,   6.814796
                     Demand E 1     Supply E 2
    Demand E  1      3.286
    Supply E  2      4.198          5.565

    Correlation Matrix of Residuals
                     Demand E 1     Supply E 2
    Demand E  1      1.000
    Supply E  2      0.9816         1.000

    Test Case from Kmenta (1971) Pages 565 - 582

    Coefficients of the Reduced Form Equations.
    Iterated Three Stage Least Squares Solution.
    Condition number of matrix used to find the reduced form coefficients is no smaller than   4.249772824974006
                     CONSTANT 1     D 2            F 3            A 4
    P         1      89.42          0.6672        -0.4770        -0.7981
    Q         2      72.86          0.1515         0.1162         0.1944

    Mean sum of squares of residuals for the reduced form equations.
    1  P   0.20576D+01
    2  Q   0.43519D+01

In Table 4.4, Kmenta (1971, 582) reports the 3SLS and iterative three-stage least squares coefficients for the supply equation as

3SLS 52.1972 (11.8934), .2286 (.0997)

I3SLS 55.5527 (12.7408), .2271 (.1069), .2245 (.0465) and .3756 (.0717)

B34S gets

52.55269 (12.7408), .2270569 (.1069194), .2244964 (.04653972) and .3755747 (.07166061)

The coefficient 55.5527 reported by Kmenta and underlined in Table 4.4 appears to be in error.

In Table 4.5, Kmenta (1986, 712) changes the estimated coefficients for iterative three-stage least squares. The new numbers are

52.6618 (12.8051), .2266 (.1075), .2234 (.0468) and .3800 (.0720).

These numbers differ from the prior ones and bear some investigation. In the Kmenta test problem, one equation (demand) was overidentified and one equation (supply) was exactly identified. As was mentioned earlier, the 2SLS and 3SLS results for the overidentified equation are the same because the other equation was exactly identified. However, the 3SLS results for the exactly identified equation (supply) differ from the 2SLS results because the other equation (demand) is overidentified. Close inspection of the 3SLS results for the demand equation shows that they are the same as those of Kmenta (1971, 582) and Kmenta (1986, 712). As noted, the iterative three-stage least squares supply-equation results are the same as those of Kmenta (1971) but differ slightly from those of Kmenta (1986), which appear to be in error.¹⁰ To facilitate testing, SAS and RATS setups are shown in Table 4.3 and their output is discussed in some detail.
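As a further cross-check that does not depend on B34S, SAS or RATS, the following Python sketch estimates the same two equations by 2SLS, 3SLS and iterated 3SLS with NumPy. It is a sketch under stated assumptions, not the simeq algorithm: the residual covariance uses the divisor T, the iteration stops on a simple relative-change rule with a hypothetical tolerance `tol` rather than on the criterion of equation (4.1-8), and all function names are illustrative. Because packages can differ in such conventions, the supply-equation values it prints should be compared with the printed results above rather than assumed to match any one of them.

```python
import numpy as np

# Kmenta (1971, 565) data as listed in Table 4.3; columns: q, p, d, f, a
data = np.array([
    [98.485, 100.323, 87.4, 98.0, 1], [99.187, 104.264, 97.6, 99.1, 2],
    [102.163, 103.435, 96.7, 99.1, 3], [101.504, 104.506, 98.2, 98.1, 4],
    [104.240, 98.001, 99.8, 110.8, 5], [103.243, 99.456, 100.5, 108.2, 6],
    [103.993, 101.066, 103.2, 105.6, 7], [99.900, 104.763, 107.8, 109.8, 8],
    [100.350, 96.446, 96.6, 108.7, 9], [102.820, 91.228, 88.9, 100.6, 10],
    [95.435, 93.085, 75.1, 81.0, 11], [92.424, 98.801, 76.9, 68.6, 12],
    [94.535, 102.908, 84.6, 70.9, 13], [98.757, 98.756, 90.6, 81.4, 14],
    [105.797, 95.119, 103.1, 102.3, 15], [100.225, 98.451, 105.1, 105.0, 16],
    [103.522, 86.498, 96.4, 110.5, 17], [99.929, 104.016, 104.4, 92.5, 18],
    [105.223, 105.769, 110.7, 89.3, 19], [106.232, 113.490, 127.1, 93.0, 20],
])
q, p, d, f, a = data.T
T = len(q)
one = np.ones(T)
X = np.column_stack([one, d, f, a])        # all exogenous variables
Z = [np.column_stack([one, p, d]),         # demand: constant, p, d
     np.column_stack([one, p, f, a])]      # supply: constant, p, f, a
k = [Zi.shape[1] for Zi in Z]
m = X.shape[1]

Q, _ = np.linalg.qr(X)                     # orthonormal basis for the columns of X
qZ = [Q.T @ Zi for Zi in Z]                # regressors projected on the instruments
qy = Q.T @ q                               # both equations have q on the left

def two_sls():
    """Equation-by-equation 2SLS as least squares in the projected space."""
    return [np.linalg.lstsq(A, qy, rcond=None)[0] for A in qZ]

def resid_cov(coefs):
    """Covariance of structural residuals; the divisor T is an assumption."""
    U = np.column_stack([q - Zi @ c for Zi, c in zip(Z, coefs)])
    return U.T @ U / T

def three_sls(S):
    """GLS on the stacked projected system, whitened with the Cholesky factor of S."""
    W = np.kron(np.linalg.inv(np.linalg.cholesky(S)), np.eye(m))
    big = np.zeros((2 * m, sum(k)))
    big[:m, :k[0]] = qZ[0]
    big[m:, k[0]:] = qZ[1]
    delta = np.linalg.lstsq(W @ big, W @ np.concatenate([qy, qy]), rcond=None)[0]
    return [delta[:k[0]], delta[k[0]:]]

def iterated_three_sls(tol=1e-8, max_iter=200):
    """Repeat 3SLS, updating the residual covariance, until coefficients settle."""
    coefs = two_sls()
    for _ in range(max_iter):
        new = three_sls(resid_cov(coefs))
        old_v, new_v = np.concatenate(coefs), np.concatenate(new)
        coefs = new
        if np.max(np.abs(new_v - old_v) / np.abs(old_v)) < tol:
            break
    return coefs

b2sls = two_sls()
b3sls = three_sls(resid_cov(b2sls))
bi3sls = iterated_three_sls()
for name, est in [("2SLS", b2sls), ("3SLS", b3sls), ("I3SLS", bi3sls)]:
    print(name, "demand", np.round(est[0], 4), "supply", np.round(est[1], 4))
```

In this sketch the demand coefficients are identical across the three estimators, while the supply coefficients move once the residual covariance is used, which is the pattern discussed above for an overidentified equation paired with an exactly identified one.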

10 The file example.mac contains an extension of the above test case that calls RATS, SAS and a B34S matrix implementation. For the supply equation SAS gets the Kmenta (1986) results, which are 52.1972 (11.8934), .2286 (.0997), .2282 (.0440) and .3611 (.0729). What RATS calls 3SLS produces what B34S calls I3SLS. Readers are encouraged to use the code in Table 4.3, together with the answers in Tables 4.4 and 4.5, to investigate this issue further. A major difficulty is for the researcher to be able to tell exactly what is being estimated by a software system. For this reason, attempting the model on multiple software systems is strongly advised.


Table 4.4 Kmenta (1971, 582) OLS, 2SLS, LIML, 3SLS, I3SLS, FIML Test Problem Answers


Table 4.5 Kmenta (1986, 712) OLS, 2SLS, LIML, 3SLS, I3SLS, FIML Test Problem Answers

As noted earlier, the 2SLS and 3SLS results for the overidentified equation (demand) are the same. However, the printout shows that the residual variance for the 2SLS result is 3.8664, while the residual variance for the 3SLS result is 3.2865. The reason for this apparent discrepancy is that the 2SLS residual variance equals the sum of squared residuals divided by T-K, while the 3SLS calculation uses T; hence, 3.8664 = 3.2865 * (20/17).
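The arithmetic can be verified directly. This short Python sketch takes the demand-equation sum of squared residuals reported in the RATS instrumental variables output (65.729087795) and applies the two divisors. The variable names are illustrative only.

```python
T = 20   # observations
K = 3    # coefficients in the demand equation (constant, p, d)

# Sum of squared residuals for the demand equation, from the RATS IV output.
ssr = 65.729087795

var_small = ssr / (T - K)   # divisor T-K, as in the 2SLS printout
var_large = ssr / T         # divisor T, as in the 3SLS printout

print(round(var_small, 4), round(var_large, 4))
```

The two values differ only by the factor T/(T-K) = 20/17.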


To investigate the differences in the supply equation between Kmenta (1971) and Kmenta (1986), edited and annotated SAS, RATS and Stata output is shown next. The SAS 3SLS and I3SLS output agrees with Kmenta (1986) for both the demand and supply equations. Note that these numbers do not agree with Kmenta (1971)!

    The SYSLIN Procedure
    Three-Stage Least Squares Estimation

    Parameter Estimates
                        Parameter    Standard
    Variable     DF     Estimate     Error       t Value    Pr > |t|
    Intercept     1     94.63330     7.920838     11.95     <.0001
    P             1     -0.24356     0.096484     -2.52     0.0218
    D             1     0.313992     0.046944      6.69     <.0001

    Model                 SUPPLY
    Dependent Variable    Q

    Parameter Estimates
                        Parameter    Standard
    Variable     DF     Estimate     Error       t Value    Pr > |t|
    Intercept     1     52.19720     11.89337      4.39     0.0005
    P             1     0.228589     0.099673      2.29     0.0357
    F             1     0.228158     0.043994      5.19     <.0001
    A             1     0.361138     0.072889      4.95     0.0001

    Endogenous Variables
                  P            Q
    DEMAND        0.243557     1
    SUPPLY       -0.22859      1

    Exogenous Variables
                  Intercept    D            F            A
    DEMAND        94.6333      0.313992     0            0
    SUPPLY        52.1972      0            0.228158     0.361138

    Inverse Endogenous Variables
                  DEMAND       SUPPLY
    P             2.11799     -2.11799
    Q             0.48415      0.51585

    The SYSLIN Procedure
    Three-Stage Least Squares Estimation

    Reduced Form
                  Intercept    D            F            A
    P             89.87924     0.665032    -0.48324     -0.76489
    Q             72.74263     0.152019     0.117695     0.186293

    The SYSLIN Procedure
    Iterative Three-Stage Least Squares Estimation

    Parameter Estimates
                        Parameter    Standard
    Variable     DF     Estimate     Error       t Value    Pr > |t|
    Intercept     1     94.63330     7.920838     11.95     <.0001
    P             1     -0.24356     0.096484     -2.52     0.0218
    D             1     0.313992     0.046944      6.69     <.0001

    Model                 SUPPLY
    Dependent Variable    Q

    Parameter Estimates
                        Parameter    Standard
    Variable     DF     Estimate     Error       t Value    Pr > |t|
    Intercept     1     52.66182     12.80511      4.11     0.0008
    P             1     0.226586     0.107459      2.11     0.0511
    F             1     0.223372     0.046774      4.78     0.0002
    A             1     0.380006     0.072010      5.28     <.0001

    Endogenous Variables
                  P            Q
    DEMAND        0.243557     1
    SUPPLY       -0.22659      1

    Exogenous Variables
                  Intercept    D            F            A
    DEMAND        94.6333      0.313992     0            0
    SUPPLY        52.66182     0            0.223372     0.380006

    Inverse Endogenous Variables
                  DEMAND       SUPPLY
    P             2.127012    -2.12701
    Q             0.481952     0.518048

    The SYSLIN Procedure
    Iterative Three-Stage Least Squares Estimation

    Reduced Form
                  Intercept    D            F            A
    P             89.27387     0.667864    -0.47512     -0.80828
    Q             72.89007     0.151329     0.115718     0.196861

RATS output is shown next for OLS, 2SLS, LIML, and 3SLS two ways. Note that the RATS 3SLS results agree exactly with what b34s and Kmenta get for I3SLS, not 3SLS. Rats is using the large sample SE. Rats output for the same problem using the nonlin procedure gets the same answers. Rats Pro version 8.1 was used to make the calculations.

    Output from RATS

    *
    * Data passed from B34S(r) system to RATS
    *
    display @1 %dateandtime() @33 ' Rats Version ' %ratsversion()
    03/10/2012 15:05                 Rats Version    8.10000
    *
    CALENDAR(IRREGULAR)
    ALLOCATE 20
    OPEN DATA rats.dat
    DATA(FORMAT=FREE,ORG=OBS, $
    MISSING= 0.1000000000000000E+32 ) / $
    Q $
    P $
    D $
    F $
    A $
    CONSTANT
    SET TREND = T
    TABLE
    Series     Obs      Mean           Std Error       Minimum         Maximum
    Q           20   100.898200000    3.756498224    92.424000000   106.232000000
    P           20   100.019050000    5.926086394    86.498000000   113.490000000
    D           20    97.535000000   11.830481371    75.100000000   127.100000000
    F           20    96.625000000   12.708798237    68.600000000   110.800000000
    A           20    10.500000000    5.916079783     1.000000000    20.000000000
    TREND       20    10.500000000    5.916079783     1.000000000    20.000000000

    *
    * heading=('test case from kmenta 1971 page 565 - 582 ' ) $
    * exogenous constant d f a $
    * endogenous p q $
    * model lvar=q rvar=(constant p d)   name=('demand eq.') $
    * model lvar=q rvar=(constant p f a) name=('supply eq.') $
    linreg q
    # constant p d

    Linear Regression - Estimation by Least Squares
    Dependent Variable Q
    Usable Observations                        20
    Degrees of Freedom                         17
    Centered R^2                        0.7637886
    R-Bar^2                             0.7359990
    Uncentered R^2                      0.9996894
    Mean of Dependent Variable       100.89820000
    Std Error of Dependent Variable    3.75649822
    Standard Error of Estimate         1.93012724
    Sum of Squared Residuals         63.331649953
    Regression F(2,17)                    27.4847
    Significance Level of F             0.0000047
    Log Likelihood                       -39.9053
    Durbin-Watson Statistic                1.7442

        Variable          Coeff         Std Error      T-Stat      Signif
    ************************************************************************************
    1.  Constant       99.89542291     7.51936214     13.28509   0.00000000
    2.  P              -0.31629880     0.09067741     -3.48818   0.00281529
    3.  D               0.33463560     0.04542183      7.36729   0.00000110

    linreg q
    # constant p f a

    Linear Regression - Estimation by Least Squares
    Dependent Variable Q
    Usable Observations                        20
    Degrees of Freedom                         16
    Centered R^2                        0.6548075
    R-Bar^2                             0.5900838
    Uncentered R^2                      0.9995460
    Mean of Dependent Variable       100.89820000
    Std Error of Dependent Variable    3.75649822
    Standard Error of Estimate         2.40508651
    Sum of Squared Residuals         92.551058175
    Regression F(3,16)                    10.1170
    Significance Level of F             0.0005602
    Log Likelihood                       -43.6991
    Durbin-Watson Statistic                2.1097

        Variable          Coeff         Std Error      T-Stat      Signif
    ************************************************************************************
    1.  Constant      58.275431202   11.462909888      5.08383   0.00011056
    2.  P              0.160366596    0.094883937      1.69013   0.11038810
    3.  F              0.248133295    0.046187854      5.37226   0.00006227
    4.  A              0.248302347    0.097517767      2.54623   0.02156713

    instruments constant d f a
    linreg(inst) q
    # constant p d

    Linear Regression - Estimation by Instrumental Variables
    Dependent Variable Q
    Usable Observations                        20
    Degrees of Freedom                         17
    Mean of Dependent Variable       100.89820000
    Std Error of Dependent Variable    3.75649822
    Standard Error of Estimate         1.96632066
    Sum of Squared Residuals         65.729087795
    J-Specification(1)                     2.5357
    Significance Level of J             0.1113010
    Durbin-Watson Statistic                2.0092

        Variable          Coeff         Std Error      T-Stat      Signif
    ************************************************************************************
    1.  Constant       94.63330387     7.92083831     11.94738   0.00000000
    2.  P              -0.24355654     0.09648429     -2.52431   0.02183240
    3.  D               0.31399179     0.04694366      6.68869   0.00000381

    linreg(inst) q
    # constant p f a

    Linear Regression - Estimation by Instrumental Variables
    Dependent Variable Q
    Usable Observations                        20
    Degrees of Freedom                         16
    Mean of Dependent Variable       100.89820000
    Std Error of Dependent Variable    3.75649822
    Standard Error of Estimate         2.45755523
    Sum of Squared Residuals         96.633243702
    Durbin-Watson Statistic                2.3846

        Variable          Coeff         Std Error      T-Stat      Signif
    ************************************************************************************
    1.  Constant      49.532441699   12.010526407      4.12409   0.00079536
    2.  P              0.240075779    0.099933852      2.40235   0.02878451
    3.  F              0.255605724    0.047250071      5.40964   0.00005785

4. A