Dependent Regressors

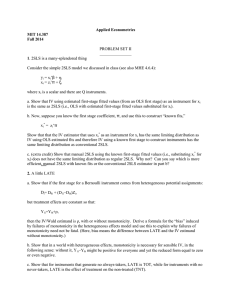

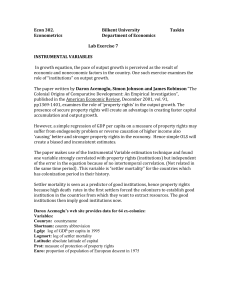


Today we discussed the issue of dependent regressors. Suppose that we have a CORRECTLY specified regression model:

$$Y_t = \beta_0 + \beta_1 X_t + \varepsilon_t$$

We usually assume that $X_t$ and $\varepsilon_t$ are independent, and OLS requires that they are. However, we now consider the problem where $X_t$ and $\varepsilon_t$ are NOT independent. In that case we should not use OLS. If we do use OLS, then

(i) $E[\hat\beta_1] \neq \beta_1$, so $\hat\beta_1$ is biased;

(ii) $\lim_{N \to \infty} P\left[\,|\hat\beta_1 - \beta_1| > \delta\,\right] \neq 0$ for some $\delta > 0$, i.e. $\operatorname{plim}(\hat\beta_1) \neq \beta_1$, so $\hat\beta_1$ is inconsistent;

(iii) $\operatorname{var}(\hat\beta_1)$ is not the smallest among linear estimators, so $\hat\beta_1$ is inefficient.

These are ALL bad. What can we do if we want to estimate the regression above?

Option #1. Change the model. NO: remember that we assumed the model is correctly specified.

Option #2. Get more data. NO: more data will not make the OLS estimator unbiased or consistent.

Option #3. Try another estimation method. YES: use the Instrumental Variable (IV) estimator.

Here is the theory of IV estimation, which is a form of two-stage least squares (2SLS) estimation. Suppose

$$Y_t = \beta_0 + \beta_1 X_t + \varepsilon_t$$

where $X_t$ and $\varepsilon_t$ are correlated, that is, $\operatorname{corr}(X_t, \varepsilon_t) \neq 0$. Now suppose that we can find another variable $Z_t$ such that

(1) $\operatorname{corr}(Z_t, X_t)$ is high, and

(2) $\operatorname{corr}(Z_t, \varepsilon_t)$ is low (for consistency it must be zero in the population).

It is NOT easy to find such a $Z_t$ variable, but let's assume we can. We first regress

$$X_t = \alpha_0 + \alpha_1 Z_t + v_t$$

and get the predicted values $\hat X_t = \hat\alpha_0 + \hat\alpha_1 Z_t$. We then regress

$$Y_t = \beta_0 + \beta_1 \hat X_t + e_t.$$

This last regression gives us the IV estimates, which are equal to the 2SLS estimates (in this simple regression case). The resulting IV (or 2SLS) estimates $\hat\beta_0$ and $\hat\beta_1$ will be biased, but they will be consistent: as the sample size increases, the probability that the estimates are close to the actual values $\beta_0$ and $\beta_1$ approaches one.

Let's see an example of the 2SLS estimation procedure.

EXAMPLE (2SLS): Suppose that $Y_t = \beta_0 + \beta_1 X_t + \varepsilon_t$ and that we know everything. We even know that $\beta_0 = 1$ and $\beta_1 = 3$.
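Before turning to the data, the two claims above (that more data does not rescue OLS, and that IV/2SLS is consistent) can be illustrated with a small simulation. This is a minimal Python/NumPy sketch, not part of the original notes. It assumes a hypothetical data-generating process $X_t = 1 + 0.8 Z_t + 0.6\varepsilon_t$ with independent standard normal $Z_t$ and $\varepsilon_t$ (so $Z_t$ is a valid instrument), and it uses the example's true values $\beta_0 = 1$, $\beta_1 = 3$. The helper names `ols`, `two_sls` and `simulate` are illustrative only; `two_sls` follows the two regressions described above.

```python
import numpy as np


def ols(y, x):
    """Intercept and slope from an OLS regression of y on a constant and x."""
    X = np.column_stack([np.ones_like(x), x])
    coef, *_ = np.linalg.lstsq(X, y, rcond=None)
    return coef


def two_sls(y, x, z):
    """2SLS with one regressor x and one instrument z, via two OLS regressions."""
    a0, a1 = ols(x, z)        # first stage: regress X_t on Z_t
    x_hat = a0 + a1 * z       # predicted values X-hat_t
    return ols(y, x_hat)      # second stage: regress Y_t on X-hat_t


def simulate(n, rng, beta0=1.0, beta1=3.0):
    """Hypothetical data-generating process in which X_t is correlated with eps_t."""
    z = rng.normal(size=n)               # instrument, independent of eps
    eps = rng.normal(size=n)
    x = 1.0 + 0.8 * z + 0.6 * eps        # X depends on eps, so corr(X, eps) != 0
    y = beta0 + beta1 * x + eps
    return y, x, z


rng = np.random.default_rng(0)
for n in (100, 10_000, 1_000_000):
    y, x, z = simulate(n, rng)
    print(f"N = {n:>9}: OLS slope = {ols(y, x)[1]:.3f}, "
          f"2SLS slope = {two_sls(y, x, z)[1]:.3f}")
```

Under these assumptions the OLS slope settles near $\beta_1 + \operatorname{cov}(X_t, \varepsilon_t)/\operatorname{var}(X_t) = 3 + 0.6/1.0 = 3.6$ however large $N$ gets, while the 2SLS slope should move toward 3 as $N$ grows. In small samples the 2SLS estimate can still miss noticeably, which fits the "biased but consistent" description.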
Now suppose that we have the following data:

| $t$ | 1 | 2 | 3 | 4 | 5 | 6 | 7 | 8 |
|---|---|---|---|---|---|---|---|---|
| $Y_t$ | 6 | 14 | 6 | 10 | 17 | 10 | 9 | 14 |
| $X_t$ | 2 | 4 | 2 | 3 | 5 | 3 | 3 | 4 |

Let's suppose that we even know the $\varepsilon$'s:

| $t$ | 1 | 2 | 3 | 4 | 5 | 6 | 7 | 8 |
|---|---|---|---|---|---|---|---|---|
| $\varepsilon_t$ | -1 | 1 | -1 | 0 | 1 | 0 | -1 | 1 |

(One can check that $Y_t = 1 + 3X_t + \varepsilon_t$ holds exactly for every $t$.)

Remember that we usually don't know the $\beta$'s and $\varepsilon$'s; we usually estimate them. In our example here, we assume that we have perfect knowledge of everything. We will then compare OLS with 2SLS to see whether 2SLS estimates the $\beta$'s better.

Suppose that we calculate the sample correlation between $X_t$ and $\varepsilon_t$. Using the data above and the formula

$$r_{X,\varepsilon} = \frac{\sum_t (X_t - \bar X)(\varepsilon_t - \bar\varepsilon)}{\sqrt{\sum_t (X_t - \bar X)^2 \, \sum_t (\varepsilon_t - \bar\varepsilon)^2}}$$

we get $r_{X,\varepsilon} = 0.89$, which is quite high. Therefore $X_t$ and $\varepsilon_t$ have a high sample correlation, and they are probably not independent. We know that OLS estimation of the $\beta$'s will then be biased, inconsistent, and inefficient.

We use the data on $Y_t$ and $X_t$ to estimate the $\beta$'s by OLS (t statistics in parentheses). Our result is

$$\hat Y_t = \underset{(-2.89)}{-1.6} + \underset{(23.3)}{3.8}\, X_t \qquad \bar R^2 = 0.987, \quad \text{D-W} = 2.93$$

The estimation looks pretty good, since the t statistics are all OK and the adjusted $R^2$ is high. The D-W statistic is not very good, but we might think that is because of the small number of data points that we have. However, we KNOW that $\beta_0 = 1$ and $\beta_1 = 3$, so OLS has done a bad job of estimation.

Can we use 2SLS and get better estimates? In this case, yes. Suppose that we can find another variable, which we call the instrumental variable $Z_t$:

| $t$ | 1 | 2 | 3 | 4 | 5 | 6 | 7 | 8 |
|---|---|---|---|---|---|---|---|---|
| $Z_t$ | 3 | 3 | 2 | 0 | 4 | -1 | 4 | 3 |

Note that $\operatorname{corr}(Z_t, \varepsilon_t) = 0.08$ (LOW) and $\operatorname{corr}(Z_t, X_t) = 0.34$ (HIGHER). The 2SLS estimate of the regression equation is

$$\hat Y_t = 0.28 + 3.2\, \hat X_t \qquad \bar R^2 = -0.07, \quad \text{D-W} = 2.28$$

The 2SLS estimates are much closer to the true values, as the table shows:

| Method | $\beta_0$ | $\beta_1$ |
|---|---|---|
| Actual | 1.0 | 3.0 |
| OLS | -1.6 | 3.8 |
| 2SLS | 0.28 | 3.2 |

Note: the adjusted $R^2$ under 2SLS is negative. You should not look at the $R^2$ or adjusted $R^2$ statistics under 2SLS, since they can be negative and do not have their usual meaning there.

Here is a small problem: Suppose $Y_t = \beta X_t + \varepsilon_t$. Show that the Indirect Least Squares (ILS) estimate of $\beta$ equals the 2SLS estimate of $\beta$ in this case, that is, $\hat\beta_{ILS} = \hat\beta_{2SLS}$. You may assume that the instrumental variable is $Z_t$.
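The numbers in the worked example can be checked directly from the data tables. The sketch below uses Python with NumPy, which is an assumption (the notes do not say what software produced the output). It recomputes the sample correlations, the OLS fit and the two-stage fit. The helper `fit` is hypothetical; it reports the adjusted $R^2$ and the Durbin-Watson statistic from the residuals of whichever regression it runs, so for 2SLS these come from the second-stage regression of $Y_t$ on $\hat X_t$.

```python
import numpy as np

# Data from the example, t = 1, ..., 8
y = np.array([6, 14, 6, 10, 17, 10, 9, 14], dtype=float)
x = np.array([2, 4, 2, 3, 5, 3, 3, 4], dtype=float)
eps = np.array([-1, 1, -1, 0, 1, 0, -1, 1], dtype=float)  # true errors (known only here)
z = np.array([3, 3, 2, 0, 4, -1, 4, 3], dtype=float)      # instrument


def fit(y, x):
    """OLS of y on a constant and x.

    Returns the coefficients, their t statistics, the adjusted R^2 and the
    Durbin-Watson statistic of the residuals.
    """
    X = np.column_stack([np.ones_like(x), x])
    n, k = X.shape
    coef, *_ = np.linalg.lstsq(X, y, rcond=None)
    resid = y - X @ coef
    ssr = resid @ resid
    sst = ((y - y.mean()) ** 2).sum()
    s2 = ssr / (n - k)                                   # residual variance estimate
    se = np.sqrt(s2 * np.diag(np.linalg.inv(X.T @ X)))   # coefficient standard errors
    adj_r2 = 1 - s2 / (sst / (n - 1))
    dw = (np.diff(resid) ** 2).sum() / ssr
    return coef, coef / se, adj_r2, dw


# Sample correlations quoted in the text
r_x_eps = np.corrcoef(x, eps)[0, 1]
r_z_eps = np.corrcoef(z, eps)[0, 1]
r_z_x = np.corrcoef(z, x)[0, 1]

# OLS of Y on X
ols_coef, ols_t, ols_adj_r2, ols_dw = fit(y, x)

# 2SLS: stage 1 regresses X on Z, stage 2 regresses Y on the fitted X-hat.
# Stage-2 t statistics are not valid 2SLS t statistics, so they are discarded.
a, *_ = fit(x, z)
x_hat = a[0] + a[1] * z
tsls_coef, _, tsls_adj_r2, tsls_dw = fit(y, x_hat)

print(f"corr(X, eps) = {r_x_eps:.2f}, corr(Z, eps) = {r_z_eps:.2f}, corr(Z, X) = {r_z_x:.2f}")
print(f"OLS : b0 = {ols_coef[0]:.2f}, b1 = {ols_coef[1]:.2f}, t = {np.round(ols_t, 2)}, "
      f"adj R2 = {ols_adj_r2:.3f}, D-W = {ols_dw:.2f}")
print(f"2SLS: b0 = {tsls_coef[0]:.2f}, b1 = {tsls_coef[1]:.2f}, "
      f"adj R2 = {tsls_adj_r2:.2f}, D-W = {tsls_dw:.2f}")
```

Computing the 2SLS adjusted $R^2$ and Durbin-Watson from the second-stage residuals is the convention that matches the reported figures; the Durbin-Watson value comes out near 2.287, which the notes give as 2.28.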