PFS3: A Novel Parallel File System Scheduler Simulator


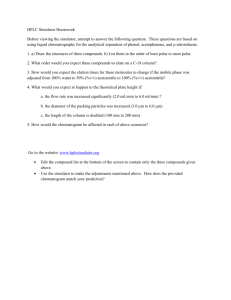

Abstract

Currently, many high-end computing (HEC) centers and commercial data centers adopt parallel file systems (PFS) as their storage solution. In these systems, thousands of applications access the storage in parallel with a wide variety of I/O bandwidth requirements. Scheduling algorithms for data access therefore play a very important role in PFS performance. However, it is hardly possible to study scheduling mechanisms thoroughly on petabyte-scale systems because of their complex management and high access cost, and very few studies have been conducted on PFS scheduling simulators so far. We propose PFS3, a parallel file system scheduler simulator for evaluating scheduling algorithms. It is built on the discrete event simulation platform OMNeT++ and the DiskSim simulation environment. The simulator is scalable, easy to use, and flexible, and it simulates adequate system detail within an acceptable simulation time. We have implemented the simulator with a PVFS2 model and several scheduling algorithms. Scalable on at least … machines. The error under %... clients with … requests, … data servers, it can be finished within … time.

1. Introduction

(pfs overall) In recent years, parallel file systems have become more and more popular in HEC centers and commercial data centers[]. These parallel file systems include PVFS[], Lustre[], PanFS[], and Ceph[]. Parallel file systems outperform typical distributed file systems such as NFS because the files stored in them are fully striped across multiple data servers. As a result, different offsets within a single file can be accessed in parallel, which also improves the load balance among data servers. Centralized metadata servers handle the metadata operations and the mapping from files to storage objects.
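The effect of striping on parallel access can be illustrated with a minimal sketch. It assumes plain round-robin striping with a fixed stripe size, which is only one possible layout and is not taken from any particular file system; the names `Chunk` and `split_request` are hypothetical. The function splits a byte range of a file into the pieces that each data server would serve, which is why different offsets of one file can be accessed in parallel.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class Chunk:
    server: int         # index of the data server holding this piece
    object_offset: int  # byte offset inside that server's storage object
    length: int         # number of bytes in this piece


def split_request(offset: int, length: int,
                  stripe_size: int, num_servers: int) -> List[Chunk]:
    """Split a byte range of a file into per-server pieces.

    Assumption: stripe units are placed round-robin over the servers.
    """
    if offset < 0 or length < 0 or stripe_size <= 0 or num_servers <= 0:
        raise ValueError("invalid striping parameters")
    chunks = []
    end = offset + length
    while offset < end:
        stripe_index = offset // stripe_size   # which stripe unit of the file
        within = offset % stripe_size          # position inside that unit
        server = stripe_index % num_servers    # round-robin placement
        row = stripe_index // num_servers      # units stored earlier on that server
        piece = min(stripe_size - within, end - offset)
        chunks.append(Chunk(server, row * stripe_size + within, piece))
        offset += piece
    return chunks
```

With a 64-byte stripe size and 4 servers, `split_request(0, 256, 64, 4)` yields one 64-byte piece on each of the four servers, so the four pieces can be served concurrently.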
(scheduling algorithms are important) In high-end computing systems, hundreds or thousands of applications access data in the storage system, with a wide variety of I/O bandwidth requirements. Parallel file systems themselves are not able to (and do not need to) manage I/O flows on a per-dataflow basis, so scheduling strategies for I/O access are critical in this setting.

(lacking algorithm for pfs) Even though scheduling strategies for file systems and related fields such as packet-switching networks have long been discussed in numerous papers[][][][], nothing guarantees that any of these algorithms can be deployed directly in parallel file systems. As mentioned in the previous paragraph, the volume of I/O accesses in high-end computing systems is huge. In this context, a centralized algorithm can hardly be deployed at a single point, while decentralized algorithms still need to be verified as suitable for parallel file systems.

(developing the algorithms on real pfs is hard) While parallel file systems are widely adopted in high-end computing, scheduling research for these file systems is still very rare. Part of the reason may be the difficulty of testing schedulers on high-end computing systems. The two most significant factors that prevent testing on real systems are: 1) scheduler testing on a peta- or exascale file system requires complex deployment, monitoring, and resource management; 2) the storage resources in high-end computing systems are very precious and are typically in use 24/7.

(we need a simulator) In this context, a simulator that allows developers to test and evaluate scheduler designs is very beneficial. It frees developers from tedious deployment in real systems and cuts the cost of algorithm development.
Even though simulation results will deviate from real results, they can still show the performance trends.

(the goals of the simulator) In this paper, we propose a novel simulator, PFS3 (Parallel File System Scheduling Simulator). The simulator is designed to be 1) scalable: it provides up to thousands of data servers for medium- and large-scale scheduling algorithm testing; 2) easy to use: the network topology, the file distribution strategy (file striping specification), and the scheduling algorithms can be specified in script files; 3) efficient: the ratio of runtime to simulated time is kept under 20:1.

(paper organization) In Section 2, we introduce other known parallel file system simulators. In Section 3, we describe the system architecture. In Section 4, we show the validation results. In the last section, we briefly conclude our work and discuss future improvements.

2. Related Work

To the best of our knowledge, two parallel file system simulators have been published. One is the IMPIOUS simulator proposed by E. Molina-Estolano et al.[]; the other is the simulator developed by P. H. Carns et al.[].

The IMPIOUS simulator was developed for fast evaluation of parallel file system designs, such as data striping and placement strategies. It simulates the parallel file system abstraction from user-provided file system specifications. In this simulator, the clients read I/O traces and issue the requests to the Object Storage Devices (OSDs) according to the file system specifications. The OSDs can be simulated accurately with the DiskSim disk model simulator[]. To keep simulation fast and efficient, IMPIOUS simplifies the parallel file system model by omitting metadata communication. Because it is designed for evaluating parallel file system ideas, it has no scheduler model.

The other simulator is described in the paper by P. H. Carns et al.
This simulator is used to test the overhead of metadata communication, so it implements a detailed TCP/IP-based network model using the INET extension[] of the OMNeT++ discrete event simulation framework[].

From these simulators we learn that, depending on its goals, a simulator can be precise in one specific aspect. An “ideal” parallel file system simulator would simulate everything in detail; in our simulator, these modules are expandable. We use DiskSim to simulate physical disks in detail, and we adopt OMNeT++, whose INET extension supports precise network simulation.

3. The PFS3 Simulator

3.1 General Model of Parallel File System

Although parallel file systems differ from each other in many ways, the key differences lie in the data placement strategy, that is, how files are striped and how the data chunks are distributed to balance access efficiency and fault tolerance. Generally, all parallel file systems share the same basic architecture:

1. There are a number of data servers, which run their own local file systems and export storage objects in a flat name space;
2. There are one or more metadata servers, which keep the mapping from files to storage objects and handle the metadata operations;
3. The clients run on the users' machines; they provide the interface through which users' applications access the file system.

Note that in parallel file systems, users' files, directories, links, and metadata files are all stored in storage objects on the data servers. Large files are striped across multiple data servers with a specified stripe size and distribution scheme. The metadata server stores the striping information for all objects.

A file access request in a parallel file system typically goes through the following steps:

1. By calling the API, the application sends the access request to the parallel file system client running on the user's machine.
2. The client queries the metadata server for the file information.
3. The metadata server replies with the file’s properties, storage object IDs, and striping information.
4. The client accesses the data through the storage objects on the data servers. If the data is located in multiple storage objects on multiple data servers, the access is done in parallel.

3.2 Parallel File System Scheduling Simulator

Based on the general model of parallel file systems, we have developed PFS3 (Parallel File System Scheduling Simulator) on top of the discrete event simulation framework OMNeT++ 4.0 and the disk model simulator DiskSim 4.0.

The simulation is driven by trace files provided on the client side, which contain the operations to issue to the parallel file system. Upon reading a trace record from the input file, the client creates an object and sends it to the metadata server. Since the metadata server keeps the data striping and placement strategy of the simulated parallel file system, it sends back the I/O server ID. If the operation is a

4. Validation and Performance Analysis

To validate the simulator, we have conducted two sets of tests. The test setup: 16 data servers and 1 metadata server, 256 clients,

In this paper, we propose a novel parallel file system simulator, PFS3 (Parallel File System Scheduling Simulator), for parallel file system scheduling simulation. The simulator is built on the OMNeT++ discrete event simulator[7] and the DiskSim disk model simulator[8]. Its purpose is to give users a simple, scalable, acceptably accurate, and easy-to-use simulator for testing scheduling algorithms for parallel file systems. Currently, we have implemented and tested the interposed scheduler module on the simulator, as shown in fig. 1.

Scheduling algorithms in distributed file systems play a very important role in system performance.
Typical large-scale file systems have hundreds or thousands of applications accessing data in parallel, each demanding a proportional share of the system resources; this share must be enforced by a scheduler. In addition, especially in HPC file systems, applications need to back up their state periodically so that they can recover in case of a failure. Checkpoint workloads access huge amounts of data, which can easily overwhelm the normal data accesses of the system. In this case, proportional sharing is necessary to ensure that the checkpoint processes use only their share of the resources.

Problem: For checkpoint processes using parallel file systems, how do we schedule the workloads to improve system fairness in a distributed setting?

1. Less scheduling overhead.
2. Enhance both the short-term fairness and the long-term fairness.
3. Adapt to the characteristics of the checkpoint processes to make it efficient.

In parallel file systems, the data are stored on multiple data servers. Each process may know or access only a subset of these data servers. The difficulty of fair scheduling arises from competing workloads and limited knowledge of the entire system. Parallel file systems have these unique characteristics:

1. Data are striped across multiple data servers, so the data can be accessed from multiple data servers in parallel.
2. Data servers carry a high workload.

To achieve system-wide scheduling, a centralized scheduler can be implemented on the metadata servers, since every application data access starts with an access to the metadata servers. But this approach has some drawbacks. First, in some parallel file systems, such as PVFS, the clients do not tell the metadata servers the size of the data access when they access the metadata. In this case the file system must be changed to obtain the scheduling information, which is not a clean solution and may introduce more problems.
Second, this approach can control the data access only at the granularity of a client-side call, which comprises the access to the metadata server and a number of data accesses to the data servers. To control the data flow to the data servers more precisely, one must be able to schedule every single request, which is impossible if the scheduler is implemented on the metadata servers.

Another option is a stand-alone centralized scheduler residing on a router through which all communication between clients and data servers passes. However, this approach introduces a single point of failure and adds performance overhead from the scheduler's processing time and the bandwidth limit of the router.

These limitations make a centralized scheduler undesirable for a parallel file system, so we suggest a distributed scheduling approach. But distributed scheduling mechanisms raise questions of their own: what information should the data servers share, and how frequently should they share it, if the system cannot afford much communication overhead but still wants to achieve the scheduling target? A scheme that propagates the information of each request to every data server [5] can achieve the global target easily under the assumption of an ideal network. In reality, especially in large-scale parallel file systems, the networks are busy and heavily loaded, which makes a large metadata overhead unaffordable. In this paper, we propose a virtual-time-based scheme that counts the amount of service each flow receives on every data server, so if the data servers periodically communicate to update the virtual-time of each flow, the

Global queue tag information update interval: The longer the interval is, the more long-term fluctuation each individual throughput will have. But a short update interval introduces more traffic.

Bucket update interval / token number: The smaller, the harder to catch up.
The bigger, the more fluctuation.

Bucket update interval, with the (update interval / token number) ratio unchanged: The bigger, the more short-term fluctuation. The smaller, the harder to catch up.

Bucket token number: The bigger, the easier to catch up, but the more fluctuation we will have.

Assigning different token numbers

References

[1] L. Zhang, “VirtualClock: A new traffic control algorithm for packet switching networks”, in Proc. ACM SIGCOMM’90, Aug. 1990, pp. 19-29.
[2] A. K. Parekh, “A generalized processor sharing approach to flow control in integrated services networks”, Ph.D. thesis, Dept. Elec. Eng. Comput. Sci., MIT, 1992.
[3] P. Goyal, H. M. Vin, “Start-Time Fair Queueing: A Scheduling Algorithm for Integrated Services Packet Switching Networks”.
[4] W. Jin, J. Chase, and J. Kaur, “Interposed proportional sharing for a storage service utility”, Proceedings of the International Conference on Measurement and Modeling of Computer Systems (SIGMETRICS), Jun. 2004.
[5] Y. Wang and A. Merchant, “Proportional Share Scheduling for Distributed Storage Systems”, File and Storage Technologies (FAST’07), San Jose, CA, Feb. 2007.
[6] Y. Saito, S. Frølund, A. Veitch, A. Merchant, and S. Spence, “Fab: Building distributed enterprise disk arrays from commodity components”, Proceedings of ASPLOS, ACM, Oct. 2004.
[7] A. Varga, Proceedings of the European Simulation Multiconference, 2001.
[8] J. S. Bucy, J. Schindler, S. W. Schlosser, and G. R. Ganger, “The DiskSim simulation environment version 4.0 reference manual”, Technical Report CMU-PDL-08-101, Carnegie Mellon University, May 2008.
[9] Top500, Performance development, 2008. URL http://top500.org/lists/2008/11/performance.development