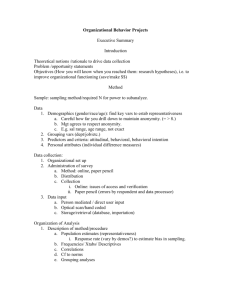

A Survey of Anonymous Communication Channels
