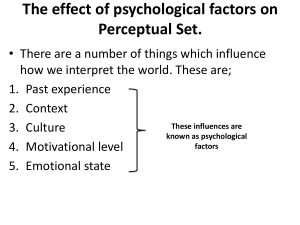



Hierarchical stages or emergence in perceptual integration?

Cees van Leeuwen
Laboratory for Perceptual Dynamics
University of Leuven

To appear in: Oxford Handbook of Perceptual Organization, Oxford University Press, edited by Johan Wagemans

Acknowledgments

The author is supported by an Odysseus research grant from the Flemish Organization for Science (FWO) and wishes to thank Lee de-Wit, Pieter Roelfsema, and Andrey Nikolaev for useful comments.

1. Visual hierarchy gives straightforward but unsatisfactory answers

Ever since Hubel and Wiesel’s (1959) seminal investigations of primary visual cortex (V1), researchers have overwhelmingly studied visual perception from a hierarchical perspective on information processing. The visual input signal proceeds from the retina through the Lateral Geniculate Nuclei (LGN) to reach the neurons in primary visual cortex. Their classical receptive fields, i.e. the stimulation these neurons maximally respond to, are mainly local (approx. 1 degree of visual angle in cat) orientation-selective transitions in luminance, i.e. static contours or perpendicular contour movement. Lateral connections between these neurons were disregarded or were understood mainly to be inhibitory and contrast-sharpening, and thus receptive fields were construed as largely context-independent. On this view, the receptive fields provide the low-level features that form the basis of mid- and high-level visual information processing. Hubel and Wiesel (1974) found the basic features to be systematically laid out in an orientation preference map. This map and those of other features such as color, form, location, and spatial frequency suggest combinatorial optimization; for instance, iso-orientation gradients on the orientation map are orthogonal to iso-frequency gradients (Nauhaus, Nielsen, Disney, & Callaway, 2012). Such systematicity may be adaptive for projecting a multi-dimensional feature space onto an essentially two-dimensional sheet of cortical tissue.
The basic features are all essentially separate, but separate features are usually not part of our visual experience. The properties we experience are usually integral; they emerge from relationships between basic features. (More about them in the section on Garner interference. Garner distinguished integral and configural dimensions, a distinction that need not concern us here; see also Chapter 28, Townsend & Wenger, this volume.) From the initial mosaic of features, in order to achieve an integral visual representation, visually-evoked activity continues its journey through a hierarchical progression of regions. Felleman and Van Essen (1991) already distinguished ten levels of cortical processing; fourteen if the front end of retina and LGN, as well as, at the top end, the entorhinal cortex and hippocampus, are also taken into account. One visual pathway, the ventral stream, goes through V2 and V4 to areas of the inferior temporal cortex: posterior inferotemporal (PIT), central inferotemporal (CIT), and anterior inferotemporal (AIT). The other, the dorsal stream, branches off after V2/V3 (Livingstone & Hubel, 1988). For perceptual organization the primary focus has typically been the ventral stream; this is where researchers situate the grouping of early features into tentative structures (Nikolaev & van Leeuwen, 2004), from which, higher up in the hierarchy, whole, recognizable objects are construed. The visual hierarchy achieves integral representation through convergence. LGN neurons are not selective for orientation; obtaining this feature in V1 requires the output of several LGN neurons to converge on V1 simple cells. Besides simple cells, complex cells were distinguished, whose receptive fields are larger and more distinctive; Hubel and Wiesel proposed them to be the result of output from several simple cells converging on a complex cell.
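The convergence idea can be made concrete with a toy calculation. The sketch below is only an illustration under stated assumptions, not a model from the chapter or from Hubel and Wiesel: each LGN cell is approximated by a difference-of-Gaussians weight profile, a simple cell sums five such cells whose centers lie on a vertical line, and the stimulus is a long bar through the origin. All names (`dog`, `bar`, `response`, `LGN_CENTERS`) and all parameter values (grid size, sigmas, bar width) are hypothetical choices made for the example.

```python
import math

GRID = range(-12, 13)  # hypothetical 25 x 25 patch of visual field (arbitrary units)

def dog(x, y, cx, cy, sigma_c=1.0, sigma_s=2.0):
    """Center-surround (difference-of-Gaussians) weight of a toy LGN cell at (cx, cy)."""
    d2 = (x - cx) ** 2 + (y - cy) ** 2
    center = math.exp(-d2 / (2 * sigma_c ** 2)) / (2 * math.pi * sigma_c ** 2)
    surround = math.exp(-d2 / (2 * sigma_s ** 2)) / (2 * math.pi * sigma_s ** 2)
    return center - surround

def bar(x, y, theta_deg, half_width=1.5):
    """1.0 inside a long bright bar through the origin at orientation theta, else 0.0."""
    t = math.radians(theta_deg)
    # Perpendicular distance from the bar's axis, which runs along (cos t, sin t).
    dist = abs(-x * math.sin(t) + y * math.cos(t))
    return 1.0 if dist <= half_width else 0.0

def response(weight_fn, theta_deg):
    """Rectified weighted sum of the stimulus over the patch."""
    total = sum(weight_fn(x, y) * bar(x, y, theta_deg) for x in GRID for y in GRID)
    return max(0.0, total)

def lgn_cell(x, y):
    """A single toy LGN cell centered on the origin."""
    return dog(x, y, 0, 0)

# Assumption: five LGN cells with centers aligned vertically converge on one simple cell.
LGN_CENTERS = [(0, cy) for cy in (-6, -3, 0, 3, 6)]

def simple_cell(x, y):
    """Toy simple cell: summed input from the vertically aligned LGN cells."""
    return sum(dog(x, y, cx, cy) for cx, cy in LGN_CENTERS)

if __name__ == "__main__":
    for theta in (0, 90):
        print(theta, response(lgn_cell, theta), response(simple_cell, theta))
```

In this toy setting the single LGN cell responds equally to a horizontal (0 degrees) and a vertical (90 degrees) bar, whereas the converging unit responds far more strongly to the vertical bar. Orientation selectivity here arises purely from the spatial arrangement of the inputs, which is the point of the convergence account.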
Convergence is understood to continue along the ventral stream (Kastner, et al., 2001), leading to receptive field properties not available at lower levels (Hubel & Wiesel, 1998): e.g. a representation in V4 is based on convex and concave curvature (Carlson, Rasquinha, Zhang, & Connor, 2011). Correspondingly, these representations become increasingly abstract; e.g. curvature representations in macaque V4 are invariant against color changes (Bushnell and Pasupathy, 2011). Also, the populations of neurons that carry the representations become increasingly sparse (Carlson et al., 2011). The higher up, the more the representations become integral and abstract, i.e. invariant under perturbations such as location or viewpoint changes (Nakatani, Pollatsek, & Johnson, 2002) or occlusion (e.g. Plomp, Liu, van Leeuwen et al., 2006). Among individual neurons of macaque inferotemporal cortex (Tanaka, Saito, Fukada, & Moriya, 1991), some respond specifically to whole, structured objects such as faces or hands, but most are more responsive to simplified objects. These cells provide higher-order features with a more or less position- and orientation-invariant representation. The “more or less” is added because the classes of stimuli these neurons respond to vary widely; some are orientation invariant, some are not; some are invariant with respect to contrast polarity, some are not. Collectively, neurons in temporal areas represent objects by using a variety of combinations of active and inactive columns for individual features (Tsunoda, Yamane, Nishizaki, & Tanifuji, 2001). They are organized in spots, also known as columns, that are activated by the same stimulus. Some researchers proposed that these columns constitute a map, the dimensions of which represent some abstract parameters of object space (Op de Beeck, Wagemans, & Vogels, 2001).
Whether or not this proposal holds, it remains true that realistic objects at this level are coded in a sparse and distributed population (Quiroga, Kreiman, Koch, & Fried, 2008; Young & Yamane, 1992). In the psychological literature, the hierarchical approach to the visual system found a functional expression early on in the influential work of Neisser (1967), who identified the hierarchical levels with stages of processing. Although Neisser retracted many of these views in subsequent work (Neisser, 1976), these early ideas have remained remarkably persistent amongst psychologists. Most today acknowledge hierarchical stages in perception, albeit ones that are ordered as cascades rather than strictly sequentially. Neisser (1967) regards the early stages of perception as automatic and the later ones as attentional. This notion has been elaborated by Anne Treisman, mostly in visual search experiments. Treisman & Gelade (1980) showed that visual detection of target elements in a field of distracters is easy when the target is distinguished by a single basic feature. When, however, a conjunction of features is needed to identify a target, search is slow and difficult. Presumably, this is because attention is deployed by visiting the spatial location of each item, one by one. Treisman concluded that spatially selective attention is needed for feature integration. However, regardless of whether a basic feature identifies the target, the ease of finding it amongst non-targets depends on their homogeneity (Duncan & Humphreys, 1989); search for conjunctions of basic features need not involve spatial selection, as long as these conjunctions result in the emergence of a higher-order, integral feature that is salient enough (Nakayama & Silverman, 1986; Treisman & Sato, 1990; Wolfe, Cave, & Franzel, 1988). We will come back to this notion shortly.
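The contrast between the two search modes in Treisman & Gelade's account can be written down as a small idealized calculation. This is a sketch under simplifying assumptions, not their model or data: feature search is treated as fully parallel (response time independent of display size), and conjunction search as serial and self-terminating with the target equally likely to be inspected at any position, so that on average (n + 1) / 2 of n items are visited. The function name `predicted_rt_ms` and the values `base_ms` and `per_item_ms` are hypothetical.

```python
def predicted_rt_ms(set_size, search_type, base_ms=400.0, per_item_ms=50.0):
    """Idealized mean response time (ms) on target-present trials.

    base_ms and per_item_ms are made-up illustrative constants.
    """
    if set_size < 1:
        raise ValueError("set_size must be at least 1")
    if search_type == "feature":
        # Parallel pop-out: no cost for additional distracters.
        return base_ms
    if search_type == "conjunction":
        # Serial self-terminating search: on average (n + 1) / 2 items are inspected.
        return base_ms + per_item_ms * (set_size + 1) / 2
    raise ValueError("search_type must be 'feature' or 'conjunction'")

if __name__ == "__main__":
    for n in (4, 8, 16, 32):
        print(n, predicted_rt_ms(n, "feature"), predicted_rt_ms(n, "conjunction"))
```

The flat function for feature search and the linearly increasing function for conjunction search correspond to the qualitative pattern described above. The findings cited at the end of the paragraph (efficient conjunction search when a salient integral feature emerges) are exactly the cases this simple dichotomy fails to capture.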
For now we may consider salience as the product of competition amongst target and distracter features, positively biased for relevant target features (Desimone & Duncan, 1995) and/or negatively biased for nontarget features, including the target’s own components (Rensink & Enns, 1995). Rapid detection of conjunctions could, in principle, be explained by strategic selection of a higher-order feature map, but since in natural scenes rather complex features, including entire 3D objects, can be searched efficiently (Enns & Rensink, 1990), this would require an arbitrary number of feature maps. These being unavailable, complex detection in this approach must be restricted to higher, object-level representations of the world (Duncan & Humphreys, 1989; Egeth & Yantis, 1997; Kahneman & Henik, 1981). To enable complex detection at the highest levels of processing, according to the hierarchical approach, it is required that widely spread visual information, including that from different regions along the ventral and dorsal pathways, converges on specific areas. Candidate regions are those that receive information from multiple modalities, such as the Lateral Occipital Complex (LOC). Neural representations here are found to be specific to combinations of dorsal and ventral stream information, e.g. neurons have been found in LOC that are selective for graspable visual objects over faces or houses (Amedi, Jacobson, Hendler, et al., 2002). A subset of these convergence areas may enable conscious access to visual representations (Dehaene, Changeux, Naccache et al., 2006); in other words, they may be held responsible for the content of our visual experience.

1.1. Unresolved problems in the hierarchical approach

Contrary to the hierarchical approach, in which visual consciousness “reads out” the visual information at the highest levels, Gaetano Kanizsa (1994) and earlier Gestaltists warned against such an “error of expectancy”: the hierarchical view of perception misleads us about why objects look the way they do. It mistakes the content of perception for our reasoning about these contents. The latter is informed by instruction, background knowledge and our inferences. But consider Figure 1. What it tells us is that the highest level is not always in control of our experience. While discussing visual search, we have already encountered the concept of “salience”. Here, again, we might want to say that the perceptual content is salient; it “pops out” and automatically grabs our attention in a way similar to a loud noise or a high-contrast moving stimulus. But such notions are question-begging. For explaining why something pops out, we rely on common sense. A loud noise pops out because it is loud. But what is it about Figure 1? We might want to say that the event is salient because it is unlikely. Recall, however, that we are then drawing on precisely the kind of knowledge and inferences that would prevent us from seeing what we are actually seeing here. We might say the event is salient because mid-level vision is producing an unusual output. This requires conscious awareness to have access to the mid-level representations, in which, according to Wolfe & Cave (1999), targets and non-targets consist of loosely bundled collections of features. But as far as this level is concerned, there is nothing unusual about the scene; it is just a few bundles of surfaces, some of which are partially occluded. The event is salient because it seems a fist is being swallowed. This illusion, therefore, is taking the notion of popping out to the extreme: what is supposedly popping out is actually popping in.

Figure 1.
Popping out or popping in? Seeing is not always believing. http://illutionista.blogspot.be/2011/07/eating-hand-illusion-punching-face.html.

All things considered, perhaps perception scientists have focused too exclusively on the hierarchical approach. In fact, from a neuroscience point of view the hierarchical picture is not that clear-cut. On the one hand, hierarchy seems not always necessary: single cells in V1 have been found that code for grouping and, for example, are sensitive to occlusion information (Sugita, 1999). On the other hand, neurons selective for specific grouping configurations, irrespective of the sensory characteristics of their components, occur outside of the ventral stream hierarchy, in macaque lateral intraparietal sulcus (LIP) (Yokoi & Komatsu, 2009). The LIP belongs to the dorsal stream or “where” system, for processing location and/or action-relevant information (Ungerleider & Mishkin, 1982; Goodale & Milner, 1992), and is associated with attention and saccade targeting. In an fMRI study, areas of both the ventral and dorsal stream showed object selectivity; in intermediate processing areas these representations were viewpoint and size specific, whereas in higher areas they were viewpoint-independent (Konen & Kastner, 2008). Generally speaking, it is not surprising that the “where” system is involved in perceptual grouping. Consider, for instance, grouping by proximity, which is primarily an issue of “where” the components are localized in space (Gepshtein & Kubovy, 2007; Kubovy, Holcombe, & Wagemans, 1998; Nikolaev, Gepshtein, Kubovy, & van Leeuwen, 2008). These observations might suggest that hierarchy does not adequately characterize the distribution of labor in visual processing areas.

2. Approaches opposing the hierarchical view

For a long time, some perceptual theorists and experimenters have been rebelling against the hierarchical view: German “Ganzheitspsychologie”, Gestalt psychology, and Gibsonian ecological realism.
All these approaches have sought to downplay the basic role of the mosaic of isolated local features, arguing from a variety of perspectives that basic visual information consists of holistic properties. Consider what Koffka addressed as “das Aufforderungscharacter der Stimuli” and Gibson with the apparently cognate notion of “Affordance”, both emphasizing that perception is dynamic in nature and samples over time the higher-order characteristics of the surrounding environment. Gibsonians considered the visual system to be naturally or adaptively “tuned” to this information. Gestaltists considered it to be the product of a creative synthesis, guided by the valuation of the whole, for which sensory stimulation offers no more than boundary conditions. In Gestalt psychology, this valuation was summarized under the notion of Prägnanz, meaning goodness of the figure. “Ganzheitspsychologie” (Sander, Krüger) regarded early perception as originating in the perceiver’s emotions, body and behavioral dispositions. Shape characteristics like “Roundedness” and “Spikiness” provide a context for further differentiation based on sensory information. These approaches claimed to have principled answers to why we see the world the way we do (Gestalt) or why we base our actions around certain properties (Gibson). However, they have left the mosaic of featural representations an uncontested neurophysiological reality. Without an account of how holistic properties could arise in the visual system, all this talk has to remain question-begging.

2.1. Integral properties challenge the hierarchical model

Studies aiming to establish holistic perception early in the visual system have focused on integral properties. The prevalence of such properties in perception is confirmed in psychological studies by the integral superiority effect (Pomerantz et al., 1977; see also Pomerantz & Cragin, Ch 26, this volume).
These authors found, for instance, that “( )” and “( (”, despite the presence of an extra, redundant parenthesis, were easier to distinguish from each other than “)” and “(”. Kimchi and Bloch (1998) showed that whereas classification of two curved lines together or two straight lines together was easier than classifying mixtures of the two, the opposite occurred when the items formed configurations: e.g. a pattern of two straight lines is extremely difficult to distinguish from a pattern of two curved lines if the two have a similar global configuration (e.g. both look like an X-shape), whereas mixtures that differ in their configuration, e.g. “X” vs “()”, are extremely easy. Thus, notwithstanding the hierarchical view, “how things look” matters in what is easy to perceive. How could it possibly be that these integral properties are present in early perception? After all, they are supposedly built out of elementary features. We should distinguish, however, between how we construe them and what is prior in processing. For constructing closure “()” you need “(” and “)”. But that doesn’t mean that, when “()” is presented in a scene, you detect this by first analyzing “(” and “)” separately and then putting them together. You could begin, for instance, by fitting a closure “O” template to it, before segmenting the two halves. In that case you would have detected closure before seeing the “(” and the “)”. Of course, a problem is that the number of possible templates explodes. Perception can only operate with a limited number. How are they determined?

2.2. Reverse Hierarchy Theory

One way in which this process could be understood is reverse hierarchy theory; Hochstein and Ahissar (2002), for instance, believed that a crucial part of perception is top-down activity. In this view, high-level object representations are pre-activated, and selected based on the extent to which they fit with the lower-level information.
Rather than being inert until external information comes in, the brain is actively anticipating visual stimulation. This state of the brain implies that prior experience and expectancies may bias visual perception (Hochstein & Ahissar, 2002; Lee & Mumford, 2003). Top-down connections would, in principle, effectuate such feedback from higher levels. Feedback activity might be able to make contact with the incoming lower-level information, at any level needed, selectively enhancing and repressing certain activity patterns everywhere in a coordinated fashion, and thus configure lower-order templates on the fly. This certainly sounds attractive, as it would make sense of the abundant top-down connectivity between the areas of the visual system, but on the other hand it also has the ring of wishful thinking. Recall that the brain does not have room for indefinite numbers of higher-order feature maps. How does the higher-level system know which neural subpopulation at the lower level to selectively activate? Treisman & Gelade (1980) at least provided a partial solution to this problem, by making selection a matter of spatially focused attention. Only items in the limited focus of attention are effectively integrated. Spatial selectivity is easy to project downward from areas such as LIP, since all downward areas preserve to some degree the spatial coordinates of the visual field, ignoring the complication of trans-saccadic remapping of receptive fields during eye movements (e.g. Melcher & Colby, 2008). On the other hand, the problem of how integration is achieved is not resolved merely by restricting it to a small spatial window. There are, moreover, a host of other forms of attentional selectivity besides purely spatial ones, such as object-driven and divided attention, that pose greater selection problems. A modern, neurally-informed version of Treisman’s approach is found in Roelfsema (2006), which distinguishes between base and incremental grouping.
Base grouping is easy; it can be done through a feedforward sweep of activity converging on single neurons. Grouping is hard, for instance, in the presence of nearby and/or similar distracter information. Incremental grouping is performed, according to this account, through top-down feedback, all the way down to V1 (Roelfsema, Lamme, & Spekreijse, 1998). This, however, is a slow process that depends on the spreading of an attentional gradient through the early visual system, by way of a mechanism such as synchronous oscillations (see Chapter 53) or enhanced neuronal activity (Roelfsema, 2006). Neurons in macaque V1, for instance, responded more strongly to texture elements belonging to a figure defined by texture boundaries than to elements belonging to a background (Roelfsema et al., 1998; Lamme et al., 1998; Zipser et al., 1996). Yet this mechanism remains too slow to establish perceptual organization in the real-time processing of stimuli of realistic complexity. Whereas, as we will discuss, perceptual organization in complex stimuli arises within 60 ms (Nikolaev & van Leeuwen, 2004), attentional effects in humans have onset latencies in the order of 100 ms (Hillyard, Vogel, & Luck, 1998), and this is before recurrent feedback even begins to spread.

2.3. Predictive Coding

According to Murray (2008), we must take care to distinguish effects of attention that are pattern-specific from non-specific shifts in the baseline firing rates of neurons. Baseline shifts can strengthen or weaken a given lower-level signal and can selectively affect a certain brain region, independently of what is represented there; they affect the firing rates of neurons even when no stimulus is present in the receptive field (Luck, Chelazzi, & Desimone, 1997). Moreover, a reduction in activity has also been reported as a result of attention allocation (Corthout & Supèr, 2004).
Possibly, this top-down effect could be understood as predictive coding: this notion proposes that inferences of high-level areas are compared with incoming sensory information in lower areas through cortical feedback, and the error between them is minimized by modifying the neural activities (Rao & Ballard, 1999). Using fMRI, Murray et al. (2002) found that when elements are grouped into objects, as opposed to randomly arranged, activity increases in higher areas, in particular the lateral occipital complex, whereas activity is reduced in primary visual cortex. This observation suggests that activity in early visual areas may be reduced as a result of grouping processes in higher areas. Reduced activity in early visual areas, as measured by fMRI, was shown to indicate reduction of visual sensitivity (Hesselmann, Sadaghiani, Friston, & Kleinschmidt, 2010), presumably due to these processes. Reduction of activity has also been claimed to have the opposite effect: Kok, Jehee and de Lange (2012) found that the reduction corresponded to a sharpening of sensory representations. Sharpening is understood as top-down suppression of neural responses that are inconsistent with the current expectations. These results suggest an active pruning of neural representations; in other words, active expectation makes representations increasingly sparse. Then again, multi-unit recording studies in ferrets and rats have provided evidence against such active sparsification in visual cortex (Berkes, White, & Fiser, 2009). Overall, we may conclude that top-down effects on early visual perception are both ubiquitous and varied, sufficiently so to accommodate contradicting theories; top-down effects may selectively or aselectively increase or decrease firing rates, and change the tuning properties of neurons, including receptive field locations and sizes. Some of these effects may be predictive; perception does not begin when the light hits the retina.
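The error-minimization step at the core of predictive coding can be illustrated with a deliberately stripped-down sketch. It is not the Rao & Ballard (1999) model, which includes learned weights, priors, and several hierarchical levels; here a higher level holds a representation `r`, predicts the lower-level input through fixed generative weights `U`, and adjusts `r` by gradient descent on the squared prediction error. The weights, the input vector, the step size, and the function names `predict` and `settle` are all hypothetical choices for the example.

```python
def predict(U, r):
    """Top-down prediction of the lower-level input: U applied to r."""
    return [sum(U[i][j] * r[j] for j in range(len(r))) for i in range(len(U))]

def settle(U, x, steps=200, lr=0.1):
    """Adjust r so that the prediction U r approaches the input x.

    Returns the final r and the squared prediction error recorded before each update.
    """
    r = [0.0] * len(U[0])
    errors = []
    for _ in range(steps):
        pred = predict(U, r)
        err = [xi - pi for xi, pi in zip(x, pred)]  # bottom-up error signal
        errors.append(sum(e * e for e in err))
        # Gradient step on 0.5 * ||x - U r||^2 with respect to r.
        for j in range(len(r)):
            r[j] += lr * sum(U[i][j] * err[i] for i in range(len(U)))
    return r, errors

# Assumed toy setup: two higher-level causes, each predicting two lower-level units.
U = [[1.0, 0.0],
     [1.0, 0.0],
     [0.0, 1.0],
     [0.0, 1.0]]
x = [0.9, 1.1, 0.0, 0.1]  # input that largely matches the first cause

r, errors = settle(U, x)

if __name__ == "__main__":
    print(r, errors[0], errors[-1])
```

In this sketch the residual error shrinks as the higher-level representation settles, so a lower level that signals only the residual carries less activity once the input is "explained". That is one way to read the reduced early-area activity reported for grouped stimuli, although, as the text notes, the same reduction has been interpreted in conflicting ways.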
None of these mechanisms, however, is fast enough to enable the rapid detection of complex object properties that configural superiority requires.

3. Intrinsic generation of holistic representation

Let us therefore consider the possibility of intrinsic holism: the view that the visual system has an intrinsic tendency to produce coherent patterns of activity from the visual input. Already at the level of early processing, in particular V1, intrinsic mechanisms for generating global structure may exist. Conversely, some “basic” grouping might occur at the higher levels. Gilaie-Dotan, Perry, Bonneh et al. (2009) offer a case in point. They observed a patient with severely deactivated mid-level visual areas (V2-V4). The patient lacked the specific, dedicated function of these areas: “looking at objects further than about 4 m, I can see the parts but I cannot see them integrated as coherent objects, which I could recognize; however, closer objects I can identify if they are not obstructed; sometimes I can see coherent integrated objects without being able to figure out what these objects are” (p. 1690). In addition, face perception was severely impaired. Nevertheless, the patient was capable of near-normal everyday behavior. Most interestingly, higher areas in this patient were selectively activated for object categories like houses and places. This suggests that activity in higher-order brain regions is not driven by lower-order activity, but that higher-level representations are “…generated ‘de novo’ by local amplification processes in each cortical region” (p. 1700).

3.1. Early higher-order features

Some response properties of V1 neurons are suggestive of the power of early, intrinsic holism. I mentioned Sugita’s (1999) occlusion-selective V1 neurons. Moreover, some V1 neurons will respond with precise timing to a line 'passing over' their RFs even when the RF and surround are masked (Fiorani et al., 1992).
Neurons in V1 and V2 have been observed to respond to complex contours, such as illusory boundaries (Grosof, Shapley, & Hawken, 1993; Von der Heydt & Peterhans, 1989). Besides simple luminance edges, contours can in principle be defined by more complex cues, such as texture (Kastner et al., 2001). Texture-defined boundaries as found in V1 defy the hierarchical model, as they are complex by definition. Kastner et al. (2001) showed that texture-based segregation can be found in the human visual cortex using fMRI. Line textures activated area V1, as well as V2/VP, V4, TEO, and V3A, as compared with blank presentations. Kastner et al. (2001) also observed that texture checkerboard patterns evoked more activity, relative to uniform textures, in area V4 but not in V1 or V2. This means that here we have a later area being involved in processes typically believed to occur earlier; the early areas respond strongly to normal checkerboards of similar dimensions. Perhaps receptive fields of a larger spatial scale than V1 or V2 could provide were needed here. But perhaps these areas lack specific long-range connections for texture boundaries. We may, therefore, propose that integration occurs within each level, subject to restrictions given by the layout of receptive fields and the nature of their intrinsic connections.

3.3. Contextual modulation

Neurons in primary visual cortex (V1) respond differently to a simple visual element when it is presented in isolation from when it is embedded within a complex image (Das & Gilbert, 1995). Beyond their classical receptive field, there is a surround region; its diameter is estimated to be at least 2–5 times larger than the classical receptive field (Fitzpatrick, 2000).
Stimulation of this region can cause both inhibition and facilitation of a cell’s responses, and modification of its RF (Blakemore and Tobin, 1972), spatial summation of low-contrast stimuli (Kapadia, Ito, Gilbert, et al., 1995), and cross-orientation modulation (Das & Gilbert, 1999; Khoe et al., 2004). Khoe et al. (2004) studied detection thresholds for low-contrast Gabor patches, in combination with event-related potential (ERP) analyses of brain activity. Detection sensitivity increases for such stimuli when flanked by other patches in collinear orientation, as compared to ones in the orthogonal orientation. Collinear stimuli gave rise to an increased ERP response between 80 and 140 ms from stimulus onset, centered on the midline occipital scalp, which could be traced to primary visual cortex. Such interactions are thought to depend on local excitatory connections between cells in V1 (Kapadia et al., 1995; Polat et al., 1998). Das and Gilbert (1999) showed that the strength of these connections declines gradually with cortical distance in a manner that is largely radially symmetrical and relatively independent of orientation preferences. Contextual influence of flanking visual stimuli varies systematically with a neuron’s position within the cortical orientation map. The spread of connections could provide neurons with a graded specialization for processing angular visual features such as corners and T-junctions. This means that already at the level of V1, complex features can be detected. In particular, T-junctions are an important cue that an object is partially hidden behind an occluder, in accordance with the observation that occlusion is detected early in perception (see also the chapter on Neural mechanisms of figure-ground organization: Border-ownership, competition and switching by Naoki Kogo & Raymond van Ee: Chapter 12, this volume).
According to Das and Gilbert (1999), these features could have their own higher-order maps in V1, linked with the orientation map. In other words, higher-order maps thought to belong to mid-level vision may be found already in early visual areas.

3.4. Long-range contextual modulation

An important further mechanism of early holism could be found in the way feature maps in V1 are linked beyond the surround region (see Alexander & van Leeuwen, 2010 for a review). Long-range connectivity enables modulation of activity by stimuli well beyond the classical RF and its immediate surround. In contrast with short-range connections, long-range intrinsic connections are excitatory, and link patchy regions with similar response properties (Malach, Amir, Harel, et al., 1993; Lund, Yoshioka, & Levitt, 1993). Traditionally, the function of these long-range connections has been understood to be assembling the estimates of local orientation (within columns) into long curves. These connections may have other possible roles as well, such as representing texture flows: patterns of multiple locally near-parallel curves, such as zebra stripes (Ben-Shahar, Huggins, Izo, & Zucker, 2003). Texture flows are more than individual parallel curves; the flow is distributed across a region; consider, for instance, the “flow” patterns that can be observed in animal fur. The perception of contour flow supports the segregation of complex patterns (Das & Gilbert, 1995) and curvature perception (Ben Shahar & Zucker, 2004). Whereas this information is available early, it is emphasized in later processing areas. In V4, for instance, shape contours are collectively represented in terms of the minima and maxima in curvature they contain (Feldman & Singh, 2005).

From the survey of neural representation, we may conclude that the necessary architecture for early holism is available already at the level of V1.
If so, what to make of the empirical evidence for convergence and the increasingly sparse representations in mid-level and higher visual areas? Sparsification may be a way to establish selectivity dynamically (e.g. Lörincz, Szirtes, Takács, Biederman, & Vogels, 2002). Now consider that basically all evidence for sparsification comes from animal studies. Training requires that animals spend months exposed to the same, restricted set of configurations. In other words, their representations will have been extremely sparsified. How much this encoding resembles what arises in more natural conditions remains unknown. Here, I have made efforts to show that the two needn’t be too similar.

3.5. Time course of contextual modulation

Early holism could be achieved through spreading of activity through these lateral connections. Accordingly, the response properties of many cells in V1 are not static, but develop over time. In V1, and more predominantly in the adjacent area V2, Zhou, Friedman, and von der Heydt (2000) and Qiu and von der Heydt (2005) observed, in macaque, neurons sensitive to boundary assignment. One neuron will fire if the figure is on one side of an edge, but will remain silent, and another will fire instead, if the figure is on the other side of the edge. These distinctions are made as early as 30 ms after stimulus onset. Thus, even receptive fields in early areas such as V1 are sensitive to context almost instantaneously after stimulus onset. In the input layers (4C) of macaque V1, neurons reach a peak in orientation selectivity with a latency of 30-45 milliseconds, persisting for 40-85 ms. The output layers (layers 2, 3, 4B, 5 or 6), however, show a development in selectivity, in which neurons often show several different peaks.
This could be understood in terms of the wide-range lateral inhibition needed for high levels of orientation selectivity in V1 (Ringach, Hawken, & Shapley, 1997) but also, I should add, as a result of modulation from long-range connections within V1. Along with the architecture of neural connectivity, the dynamics provides the machinery for early holism, through spreading of activity within the early visual areas. Due to activation spreading, the time course of activity in cells, regions and systems shows an increased context-dependency in early visual areas with time. Around 60 ms from stimulus onset the activity of neurons in V1 becomes dependent on that of their neighbors through horizontal connections (in the same neuronal layer), for instance in the interactions of oriented contour segments through local association fields (Kapadia et al. 1995; Polat et al. 1998; Bauer & Heinze, 2002). These effects can be observed in human scalp EEG: the earliest ERP component C1, which peaks at 60–90 ms after stimulus onset, is not affected by attention (Clark et al. 2004; Martinez et al. 1999; Di Russo et al. 2003), although the later portion of this component may reflect contributions from visual areas other than V1 (Foxe and Simpson 2002). The earliest attentional processes in EEG reflect spatial attention. ERP studies (reviewed by Hillyard et al. 1998) showed that spatial attention affects ERP components not earlier than about 90 ms after stimulus onset. The 80–100 ms latency is generally understood to be the earliest moment where attentional feedback kicks in.

4. Time course of attention deployment

According to the early holism proposal, in animal studies attentional modulation affects an already organized activity pattern in V1, contra Treisman & Gelade (1980). This result has been contested in studies with humans using EEG. Using high-density event-related brain potentials, Han, Song, Ding, et al.
(2001) compared grouping by proximity with grouping by similarity, relative to a uniform grouping condition with static pictorial stimuli. They found that the time course and focus of activity of grouping by proximity and similarity differ. Proximity grouping gave rise to an early positivity (around 110 ms) in the medial occipital region in combination with an occipito-parietal negativity around 230 ms in the right hemisphere. Similarity grouping showed a negativity around 340 ms, with a maximum amplitude over the left occipito-parietal area. This is in accordance with Khoe et al. (2004), who found later effects of collinearity (latencies of 245–295 and 300–350 ms) laterally, suggesting an origin in the LOC. With the criterion that beyond 100 ms, processes at low-level vision are subject to feedback, Han et al. concluded that all processes involved in grouping are affected by attention. Han et al. (2001) interpreted the early occipital activity as spatial parsing, and the subsequent occipito-parietal negativity as suggesting the involvement of the "where" system. In the case of similarity grouping, the late onset as well as the scalp distribution of the activity suggests that the "what" system is mostly doing the grouping work. Hence the hemispheric asymmetry in both processes: left-side processing tends to be oriented towards sub-structures, which typically are small-scale; right-hemisphere processing favors macro-structures, which are typically of larger scale (Sergent 1982; Kitterle et al. 1992; Kenemans et al. 2000). Thus, that proximity grouping is centered on the right hemisphere and similarity grouping on the left reflects the fact that the former can be done on the basis of low spatial resolution information, whereas the latter requires a combination of low and high spatial resolution aspects of the stimuli. When low spatial frequency information was eliminated from the stimuli, left-hemisphere activity became dominant.
Even though, for proximity, the locus of these effects seems early, the time course of perceptual grouping might seem to confirm that it is attentionally driven. By varying the task, requiring spatial attention to be narrowly or widely focused, it is possible to observe differences in perceptual integration (Stins & van Leeuwen, 1993). Han et al. (2005) varied the task so that the target color had to be detected either in the center of the stimulus or distributed more widely across it. They measured the effects of this manipulation on evoked potentials. Han et al. (2005) found that all the grouping-related evoked activity not only started later than 100 ms, but also depended on the task.

Figure 2. From Nikolaev et al. (2008). Dot lattices. The dots appear to group into strips. A. The four most likely groupings are labeled a, b, c, and d, with the inter-dot distance increasing from a to d. Perception of lattices depends on their aspect ratio (AR), which is the ratio of the two shortest inter-dot distances: along a (the shortest) and b. When AR = 1.0, the organizations parallel to a and b are equally likely. When AR > 1.0, the organization parallel to a is more likely than the organization parallel to b. These phenomena are manifestations of grouping by proximity. B. Dot lattices of four aspect ratios.

There are, however, earlier correlates of grouping in neural activity than the ones observed by Han et al. (2001, 2005). Using the dot-lattice displays of Figure 2, Nikolaev, Gepshtein, Kubovy et al. (2008) studied grouping by proximity with a design based on a parametrized grouping strength. They found an effect of proximity, more precisely of aspect ratio (AR, see Figure 2), on C1 in the medial occipital region starting from 55 ms after onset of the stimulus. As mentioned, C1 is considered the earliest evoked response of the primary visual cortex; it is usually registered in the central occipital area 45–100 ms after stimulus presentation.
This result suggests that C1 activity reflects early spatial grouping. The early activity was higher in the right than in the left hemisphere, consistent with Han et al.'s (2001) observation that low spatial frequencies are processed more in the right than in the left hemisphere. Therefore, proximity grouping at this stage depends more on the low than on the high spatial frequency content of visual stimuli. One of the reasons this result was not observed in Han et al. (2001) may have been that their task never involved reporting grouping. In this respect it is interesting that in Nikolaev et al. (2008) the amplitude of C1 depended on individual sensitivity to subtle differences in AR. The more sensitive an observer, the better AR predicted the amplitude of C1. The absence of an effect of AR on C1 in observers with low grouping sensitivity was compensated by an effect on the next peak. This is the P1 in posterior lateral occipital areas (without a clear asymmetry), having its earliest effect of proximity (AR) at 108 ms from stimulus onset, i.e. right at the onset of attentional feedback activity. The effect is present in all observers, but the trend is opposite to that of C1, in that the lower the proximity sensitivity, the larger its effect on P1 amplitude. Thus, the two events represent different aspects of perceptual grouping, with the transition between the two taking place in the interval from 55 to 108 ms after stimulus onset. Perceptual grouping, therefore, may be regarded as a multistage process, which consists of early attention-independent processes and later processes that depend on attention, where the latter may compensate for the former if needed.

4.1. Traces of pre-attentional binding in attentional processes

Like context-sensitivity within areas, attention-based grouping also seems to be spreading; in macaque V1, spatially selective attention spreads out from the focus of attention over a period of approximately 300 ms, following grouping criteria (Wanning, Stanisor, & Roelfsema, 2011).
Attention spreads through modally, but not amodally, completed regions (Davis & Driver, 1997); attention spreading depends on whether object components are similar or connected (Baylis & Driver, 1992). Attention spreads even between visual hemifields. Kasai & Kondo (2007) and Kasai (2010) presented stimuli to both hemifields, which were either connected or unconnected by a line. The task involved target detection in one hemifield. Attention was reflected by a larger ERP amplitude at occipito-temporal electrode sites in the contralateral hemisphere. These effects were revealed first in N1 (150–210 ms) and also in the subsequent N2pc (330/310–390 ms). The N1 component is associated with orienting visuospatial attention to a task-relevant stimulus (Luck et al., 1990) and with enhancing the target signal (Mangun et al., 1993); the N2pc component is associated with spatial selection of target stimuli in visual search displays (Eimer, 1996; Luck & Hillyard, 1994) and in particular with selecting task-relevant targets or suppressing their surrounding nontargets (Eimer, 1996). These effects were reduced by the presence of a connection between the two objects. Thus, attention spreads mandatorily based on connectedness. Attention involves already organized representations; attentional selection, therefore, cannot prevent the intrusion of information that the early visual feature integration processes have already tied up with the target. Intrusions of irrelevant features into selective attention can, therefore, be interpreted as a sign that feature integration has taken place (cf. Mounts & Tomaselli, 2005; Pomerantz & Lockhead, 1991). Two particular manifestations, incongruence effects (MacLeod, 1991; van Leeuwen and Bakker, 1995; Patching and Quinlan, 2002) and Garner effects (Garner, 1974, 1976, 1988), have played a crucial role in detecting feature integration in behavioral studies.
Incongruence effects involve the deterioration of a response to a target feature resulting from one or more incongruent but irrelevant other features presented in the same trial, as compared to a congruent feature. They belong to the family that also includes the classical Stroop task (Stroop, 1935), in which naming the ink color of a color-word is delayed if the color-word is different (incongruent) from the color of the ink which has to be named (e.g. the word red printed in green ink), as well as auditory versions (Hamers & Lambert, 1972), the Eriksen flanker paradigm (Eriksen and Eriksen, 1974), tasks using individual faces and names (Egner and Hirsch, 2005), numerical values and physical sizes (Algom, Dekel, & Pansky, 1996), names of countries and their capitals (Dishon-Berkovits & Algom, 2000), and versions employing object- or shape-based stimuli (Pomerantz, Pristach, and Carson, 1989; for a review: Marks, 2004). These effects, therefore, are generic to different levels of processing. Different Stroop-like tasks will involve a mixture of partially overlapping and partially distinct brain mechanisms (see, for instance, a recent meta-analysis in Nee, Wager, and Jonides, 2007). Consider the stimuli in Figure 3. According to their contours, the stimuli on one diagonal are congruent and the ones on the other incongruent. Participants responding to whether the concave contour has a rectangular or triangular shape show an effect of congruency of the outer contour on response latencies and EEG. These effects imply that concave and surrounding contour shapes have somehow become related in the representation of the figure.

Figure 3. (From Boenke, Ohl, Nikolaev et al., 2009). Stimuli composed of a larger outer contour (global feature G) and a smaller inner contour (local feature L), which were either triangular or rectangular in shape, yielding the congruent stimuli G3L3, G4L4 and the incongruent ones G3L4, G4L3. Participants in Boenke et al.
(2009) classified the figures as triangular or rectangular according to the shape of the inner contour.

Garner interference was named by Pomerantz (1983) after the work of Garner (1974, 1976, 1988). Stimulus dimensions, such as brightness or saturation, are assumed to describe a stimulus in a "feature space" (Garner, 1976). Dimensions are called separable if variation along the irrelevant dimension results in the same performance as without variation. An example of separable dimensions is circle size and radius inclination (Garner and Felfoldy, 1970). When variation of the stimuli along an irrelevant dimension of this space slows the response to the target compared to when the irrelevant dimension is held constant, Garner called such dimensions integral, which means that they have been integrated perceptually. Brightness and saturation are typically integral dimensions (Garner, 1976). In one of his studies, for instance, Garner (1988) used the dimensions "letters" and "color". Letters C and O were presented in green or red ink color. The task was to name the ink color, which varied randomly in both letter conditions. Here, the irrelevant feature was associated with the "letters" dimension. In the baseline condition, the letters "O" or "C" would occur in separate blocks; in the filtering conditions they would be randomly intermixed. Irrelevant variation of the letters had an impact on the response to the color dimension, which implies that letter identity and color are integral dimensions. As independent factors in one single experiment, incongruence and Garner effects occurred either jointly (Pomerantz, 1983; Pomerantz et al., 1989; Marks, 2004) or mutually exclusively (Melara and Mounts, 1993; Patching and Quinlan, 2002; van Leeuwen and Bakker, 1995). These effects might thus be considered as belonging to different mechanisms. But perhaps better, they could be regarded as the same mechanism operating on two different time scales.
In both cases, the principle is that attentional selection fails because task-irrelevant information has previously been included with the target information. Their difference may then be considered in terms of the time it takes this irrelevant information to become connected with the target. Incongruence effects occur when conflicting information is presented within a narrow time window (Flowers, 1990). Thus, memory involvement is minimal. The Garner effect, on the other hand, is a conflict operating between presentations, and thus involves episodic memory. Incongruence and Garner effects, therefore, differ considerably in the width of their scope and that of their feedback cycle, the latter drawing upon a much wider feedback cycle than the former. As a result, their time course will differ. Boenke et al. (2009) used ERP analyses to observe the time course of incongruence and Garner effects. In accordance with Kasai's (2010) effects of spreading of attention, they found incongruence effects on N1 and N2. The first interval was observed on N1, between 172 and 216 ms after stimulus onset, and had a maximum at 200 ms, located in the parieto-occipital areas, more predominantly on the right. The amplitude was larger in the incongruent than in the congruent condition. The second interval occurred between 268 and 360 ms after stimulus onset and included the negative component N2 and the rising part of the P3 component, predominantly in the fronto-central region of the scalp. Garner effects in Boenke et al. (2009) started off later. The earliest one occurred between 328 and 400 ms after stimulus onset. This interval corresponded to the rising part of the positive component P3 and was observed predominantly above the fronto-central areas. The first maximum in the Garner effect almost coincided with the second maximum in the incongruence effect. This moment (336 ms) was also the maximum of interaction with the Garner effect observed over left frontal, central, temporal, and parietal areas.
This result implies that Stroop and Garner effects occur in cascaded stages, resolving the long-standing question about their interdependence. We may conclude that the time course of Garner effects follows the principle of spreading attention; with Garner effects depending on information from the preceding episode, they depend on a wider feedback cycle than incongruence effects, and thus the rise time of the former is longer, and their latency larger, than that of the latter.

5. Conclusions and open issues

In the present chapter, I have tried to go beyond placing some critical notes in the margin of the hierarchical approach to perception and to suggest, instead of hierarchical convergence to higher-order representation, an alternative principle of perception. I have sketched the visual system as a complex network of lateral and large-scale within-area connections as well as between-area feedback loops; these enable areas and circuits to reach integral representation through recurrent activation cycles operating at multiple scales. These cycles work in parallel (e.g. between ventral and dorsal stream), but where the onset of their evoked activity differs, they operate as cascaded stages. According to a principle I have been peddling since the late eighties (e.g. van Leeuwen, Steijvers, & Nooter, 1997), early holism is realized through diffusive coupling through lateral and large-scale intrinsic connections, prior to the deployment of attentional feedback. The coupling results in spreading activity at the circuit scale (Gong & van Leeuwen, 2009), at the area scale (Alexander, Trengove, Sheridan, et al., 2011), and as whole-head-scale traveling wave activity (Alexander, Jurica, Trengove, et al. 2013). Starting from approximately 100 ms after onset of a stimulus, attentional feedback also begins to spread, but cannot separate what earlier processes have already joined together.
Early-onset attentional feedback processes have been shown to extend to congruency of proximal information in the visual display; later ones extend to information in episodic memory (Boenke et al., 2009). This is because the onset latency of the effect is determined by the width of the feedback cycle, which determines the time it takes for the contextual modulation to arrive: short for features close by within the pattern, long for episodic memory.

5.1. Perceiving beyond the hierarchy

Spreading activity in perceptual systems cannot go on forever. It needs to settle, and then be annihilated, in order for the system to continue working. Within each area, we may therefore expect activation to go through certain macrocycles, in which pattern coherence is periodically reset. In olfactory perception, Skarda and Freeman (1987) have described such macrocycles as transitions between stable and unstable regimes in system activity, coordinated with the breathing cycle; upon inhalation the system is geared towards stability, and thereby responsive to incoming odor; upon exhalation the attractors are annihilated for the system to be optimally sensitive to new information. Freeman and van Dijk (1987) observed a similar cycle in visual perception; we might consider a system becoming unstable, and thus ready to anticipate new information, in preparation for what was dubbed a "visual sniff" (Freeman, 1991). Whenever new information is expected, for instance when moving our eyes to a new location, we may be taking a visual sniff. Macrocycles in visual perception can be considered on the scale of saccadic eye movements, i.e. approx. 300–450 ms on average. Within this period, the visual system may envelop several perceptual cycles, starting from the elementary interactions between neighboring neurons and gradually extending to include episodic and semantic memory.

5.2. Open issues

In this chapter, I drew a perspective of visual processing based on intrinsic holism, as established through the dynamic spreading of signals via short- and long-range lateral, as well as top-down feedback, connections. Since the mechanism is essentially indifferent with respect to pre-attentional and attentional processes in perception, we might consider a unified theoretical framework, in which processes are distinguished based on the scale at which these interactions are taking place. The exact layout of the theory will depend on a precise, empirical study of the way spreading activity can achieve coherence in the brain. The next chapter will provide some of the results that could offer the groundwork for such a theory.

6. References

Alexander, D.M., Jurica, P., Trengove, C., Nikolaev, A.R., Gepshtein, S., Zvyagintsev, M., Mathiak, K., Schulze-Bonhage, A., Rüscher, J., Ball, T., & van Leeuwen, C. (2013). Traveling waves and trial averaging: the nature of single-trial and averaged brain responses in large-scale cortical signals. NeuroImage, doi: 10.1016/j.neuroimage.2013.01.016
Alexander, D.M., Trengove, C., Sheridan, P., & van Leeuwen, C. (2011). Generalization of learning by synchronous waves: from perceptual organization to invariant organization. Cognitive Neurodynamics, 5, 113–132.
Alexander, D.M., & van Leeuwen, C. (2010). Mapping of contextual modulation in the population response of primary visual cortex. Cognitive Neurodynamics, 4, 1–24.
Algom, D., Dekel, A., & Pansky, A. (1996). The perception of number from the separability of the stimulus: the Stroop effect revisited. Memory & Cognition, 24, 557–572.
Amedi, A., Jacobson, G., Hendler, T., Malach, R., & Zohary, E. (2002). Convergence of visual and tactile shape processing in the human lateral occipital complex. Cerebral Cortex, 12, 1202–1212.
Bauer, R., & Heinze, S. (2002). Contour integration in striate cortex. Experimental Brain Research, 147, 145–152.
Baylis, G.C., & Driver, J.
(1992). Visual parsing and response competition: The effect of grouping factors. Perception & Psychophysics, 51, 145–162.
Ben-Shahar, O., Huggins, P.S., Izo, T., & Zucker, S.W. (2003). Cortical connections and early visual function: intra- and inter-columnar processing. Journal of Physiology Paris, 97, 191–208.
Ben-Shahar, O., & Zucker, S.W. (2004). Sensitivity to curvatures in orientation-based texture segmentation. Vision Research, 44, 257–277.
Berkes, P., White, B.L., & Fiser, J. (2009). No evidence for active sparsification in the visual cortex. Paper presented at NIPS 22. http://books.nips.cc/papers/files/nips22/NIPS2009_0145.pdf
Blakemore, C., & Tobin, E.A. (1972). Lateral inhibition between orientation detectors in the cat's visual cortex. Experimental Brain Research, 15, 439–440.
Boenke, L.T., Ohl, F., Nikolaev, A.R., Lachmann, T., & van Leeuwen, C. (2009). Stroop and Garner interference dissociated in the time course of perception, an event-related potentials study. NeuroImage, 45, 1272–1288.
Bushnell, B.N., & Pasupathy, A. (2011). Shape encoding consistency across colors in primate V4. Journal of Neurophysiology, 108, 1299–1308.
Carlson, E.T., Rasquinha, R.J., Zhang, K., & Connor, C.E. (2011). A sparse object coding scheme in area V4. Current Biology, 21, 288–293.
Clark, V.P., Fan, S., & Hillyard, S.A. (2004). Identification of early visual evoked potential generators by retinotopic and topographic analyses. Human Brain Mapping, 2(3), 170–187.
Corthout, E., & Supèr, H. (2004). Contextual modulation in V1: the Rossi-Zipser controversy. Experimental Brain Research, 156, 118–123.
Das, A., & Gilbert, C.D. (1995). Long-range horizontal connections and their role in cortical reorganization revealed by optical recording of cat primary visual cortex. Nature, 375, 780–784.
Das, A., & Gilbert, C.D. (1999). Topography of contextual modulations mediated by short-range interactions in primary visual cortex. Nature, 399, 655–661.
Davis, G., & Driver, J. (1997).
Spreading of visual attention to modally versus amodally completed regions. Psychological Science, 8(4), 275–281.
Dehaene, S., Changeux, J.P., Naccache, L., Sackur, J., & Sergent, C. (2006). Conscious, preconscious, and subliminal processing: a testable taxonomy. Trends in Cognitive Sciences, 10, 204–211.
Di Russo, F., Martínez, A., & Hillyard, S.A. (2003). Source analysis of event-related cortical activity during visuo-spatial attention. Cerebral Cortex, 13(5), 486–499.
Dishon-Berkovits, M., & Algom, D. (2000). The Stroop effect: it is not the robust phenomenon that you have thought it to be. Memory & Cognition, 28, 1437–1449.
Desimone, R., & Duncan, J. (1995). Neural mechanisms of selective visual attention. Annual Review of Neuroscience, 18(1), 193–222.
Duncan, J., & Humphreys, G.W. (1989). Visual search and stimulus similarity. Psychological Review, 96, 433–458.
Eimer, M. (1996). The N2pc component as an indicator of attention selectivity. Electroencephalography and Clinical Neurophysiology, 99, 225–234.
Egeth, H.E., & Yantis, S. (1997). Visual attention: Control, representation, and time course. Annual Review of Psychology, 48(1), 269–297.
Egner, T., & Hirsch, J. (2005). Cognitive control mechanisms resolve conflict through cortical amplification of task-relevant information. Nature Neuroscience, 8, 1784–1790.
Enns, J.T., & Rensink, R.A. (1990). Sensitivity to three-dimensional orientation in visual search. Psychological Science, 1(5), 323–326.
Eriksen, B.A., & Eriksen, C.W. (1974). Effects of noise letters upon the identification of a target letter in a nonsearch task. Perception & Psychophysics, 16, 143–149.
Feldman, J., & Singh, M. (2005). Information along contours and object boundaries. Psychological Review, 112, 243–252.
Felleman, D.J., & Van Essen, D.C. (1991). Distributed hierarchical processing in the primate cerebral cortex. Cerebral Cortex, 1, 1–47.
Fiorani, M., Rosa, M.G., Gattass, R., & Rocha-Miranda, C.E. (1992).
Dynamic surrounds of receptive fields in primate striate cortex: a physiological basis for perceptual completion? Proceedings of the National Academy of Sciences USA, 89, 8547–8551.
Fitzpatrick, D. (2000). Seeing beyond the receptive field in primary visual cortex. Current Opinion in Neurobiology, 10, 438–443.
Flowers, J.H. (1990). Priming effects in perceptual classification. Perception & Psychophysics, 47, 135–148.
Foxe, J.J., & Simpson, G.V. (2002). Flow of activation from V1 to frontal cortex in humans. Experimental Brain Research, 142(1), 139–150.
Freeman, W.J. (1991). Insights into processes of visual perception from studies in the olfactory system. Chapter 2. In L. Squire, N.M. Weinberger, G. Lynch, & J.L. McGaugh (Eds.), Memory: Organization and locus of change (pp. 35–48). New York, NY: Oxford University Press.
Freeman, W.J., & van Dijk, B.W. (1987). Spatial patterns of visual cortical fast EEG during conditioned reflex in a rhesus monkey. Brain Research, 422(2), 267–276.
Garner, W.R. (1974). The processing of information and structure. Potomac, MD: Erlbaum Publishers.
Garner, W.R. (1976). Interaction of stimulus dimensions in concept and choice processes. Cognitive Psychology, 8, 98–123.
Garner, W.R. (1988). Facilitation and interference with a separable redundant dimension in stimulus comparison. Perception & Psychophysics, 44, 321–330.
Garner, W.R., & Felfoldy, G.L. (1970). Integrality of stimulus dimensions in various types of information processing. Cognitive Psychology, 1, 225–241.
Gepshtein, S., & Kubovy, M. (2007). The lawful perception of apparent motion. Journal of Vision, 7(8).
Gilaie-Dotan, S., Perry, A., Bonneh, Y., Malach, R., & Bentin, S. (2009). Seeing with profoundly deactivated mid-level visual areas: nonhierarchical functioning in the human visual cortex. Cerebral Cortex, 19, 1687–1703.
Gong, P., & van Leeuwen, C. (2009). Distributed dynamical computation in neural circuits with propagating coherent activity patterns.
PLoS Computational Biology, 5(12), e1000611.
Goodale, M.A., & Milner, A.D. (1992). Separate visual pathways for perception and action. Trends in Neurosciences, 15, 20–25.
Grosof, D.H., Shapley, R.M., & Hawken, M.J. (1993). Macaque V1 neurons can signal 'illusory contours'. Nature, 365, 550–552.
Hamers, J.F., & Lambert, W.E. (1972). Bilingual interdependencies in auditory perception. Journal of Verbal Learning and Verbal Behavior, 11, 303–310.
Han, S., Song, Y., Ding, Y., Yund, E.W., & Woods, D.L. (2001). Neural substrates for visual perceptual grouping in humans. Psychophysiology, 38, 926–935.
Han, S., Jiang, Y., Mao, L., Humphreys, G.W., & Qin, J. (2005). Attentional modulation of perceptual grouping in human visual cortex: ERP studies. Human Brain Mapping, 26, 199–209.
Hesselmann, G., Sadaghiani, S., Friston, K.J., & Kleinschmidt, A. (2010). Predictive coding or evidence accumulation? False inference and neuronal fluctuations. PLoS ONE, 5(3), e9926. doi:10.1371/journal.pone.0009926
Hillyard, S.A., Vogel, E.K., & Luck, S.J. (1998). Sensory gain control (amplification) as a mechanism of selective attention: electrophysiological and neuroimaging evidence. Philosophical Transactions of the Royal Society of London. Series B: Biological Sciences, 353, 1257–1270.
Hochstein, S., & Ahissar, M. (2002). View from the top: hierarchies and reverse hierarchies in the visual system. Neuron, 36(5), 791–804.
Hubel, D.H., & Wiesel, T.N. (1959). Receptive fields of single neurones in the cat's striate cortex. Journal of Physiology, 148, 574–591.
Hubel, D.H., & Wiesel, T.N. (1962). Receptive fields, binocular interaction and functional architecture in the cat's visual cortex. Journal of Physiology, 160, 106–154.
Hubel, D.H., & Wiesel, T.N. (1974). Sequence regularity and geometry of orientation columns in the monkey striate cortex. Journal of Comparative Neurology, 158, 267–294.
Hubel, D.H., & Wiesel, T.N. (1998). Early exploration of the visual cortex. Neuron, 20, 401–412.
Kahneman, D., & Henik, A. (1981). Perceptual organization and attention. In M. Kubovy & J.R. Pomerantz (Eds.), Perceptual Organization (pp. 181–211). Hillsdale, NJ: Erlbaum.
Kanizsa, G. (1994). Gestalt theory has been misinterpreted, but has also had some real conceptual difficulties. Philosophical Psychology, 7, 149–162.
Kapadia, M.K., Ito, M., Gilbert, C.D., & Westheimer, G. (1995). Improvement in visual sensitivity by changes in local context: parallel studies in human observers and in V1 of alert monkeys. Neuron, 15, 843–856.
Kasai, T. (2010). Attention-spreading based on hierarchical spatial representations for connected objects. Journal of Cognitive Neuroscience, 22, 12–22.
Kasai, T., & Kondo, M. (2007). Electrophysiological correlates of attention-spreading in visual grouping. Neuroreport, 18, 93–98.
Kastner, S., De Weerd, P., Pinsk, M.A., Elizondo, M.I., Desimone, R., & Ungerleider, L.G. (2001). Modulation of sensory suppression: implications for receptive field sizes in the human visual cortex. Journal of Neurophysiology, 86, 1398–1411.
Kenemans, J.L., Baas, J.M., Mangun, G.R., Lijffijt, M., & Verbaten, M.N. (2000). On the processing of spatial frequencies as revealed by evoked-potential source modeling. Clinical Neurophysiology, 111, 1113–1123.
Khoe, W., Freeman, E., Woldorff, M.G., & Mangun, G.R. (2004). Electrophysiological correlates of lateral interactions in human visual cortex. Vision Research, 44, 1659–1673.
Kimchi, R., & Bloch, B. (1998). Dominance of configural properties in visual form perception. Psychonomic Bulletin & Review, 5, 135–139.
Kitterle, F.L., Hellige, J.B., & Christman, S. (1992). Visual hemispheric asymmetries depend on which spatial frequencies are task relevant. Brain and Cognition, 20, 308–314.
Kok, P., Jehee, J.F.M., & de Lange, F.P. (2012). Less is more: expectation sharpens representations in the primary visual cortex. Neuron, 75, 265–270.
Konen, Ch., & Kastner, S. (2008). Two hierarchically organized neural systems for object information in human visual cortex. Nature Neuroscience, 11, 224–231.
Kubovy, M., Holcombe, A.O., & Wagemans, J. (1998). On the lawfulness of grouping by proximity. Cognitive Psychology, 35, 71–98.
Lamme, V.A., Supèr, H., & Spekreijse, H. (1998). Feedforward, horizontal, and feedback processing in the visual cortex. Current Opinion in Neurobiology, 8, 529–535.
Lee, T.S., & Mumford, D. (2003). Hierarchical Bayesian inference in the visual cortex. JOSA A, 20(7), 1434–1448.
Livingstone, M., & Hubel, D. (1988). Segregation of form, color, movement, and depth: anatomy, physiology, and perception. Science, 240, 740–749.
Lörincz, A., Szirtes, G., Takács, B., Biederman, I., & Vogels, R. (2002). Relating priming and repetition suppression. International Journal of Neural Systems, 12, 187–201.
Luck, S.J., Chelazzi, L., Hillyard, S.A., & Desimone, R. (1997). Neural mechanisms of spatial selective attention in areas V1, V2, and V4 of macaque visual cortex. Journal of Neurophysiology, 77, 24–42.
Luck, S.J., Heinze, H.J., Mangun, G.R., & Hillyard, S.A. (1990). Visual event-related potentials index focused attention within bilateral stimulus arrays: II. Functional dissociations of P1 and N1 components. Electroencephalography and Clinical Neurophysiology, 75, 528–542.
Luck, S.J., & Hillyard, S.A. (1994). Spatial filtering during visual search: evidence from human electrophysiology. Journal of Experimental Psychology: Human Perception and Performance, 20, 1000–1014.
Lund, J.S., Yoshioka, T., & Levitt, J.B. (1993). Comparison of intrinsic connectivity in different areas of macaque monkey cerebral cortex. Cerebral Cortex, 3, 148–162.
MacLeod, C.M. (1991). Half a century of research on the Stroop effect: an integrative review. Psychological Bulletin, 109, 163–203.
Malach, R., Amir, Y., Harel, M., & Grinvald, A. (1993).
Relationship between intrinsic connections and functional architecture revealed by optical imaging and in vivo targeted biocytin injections in primate striate cortex. Proceedings of the National Academy of Sciences USA, 90, 10469-10473.
Mangun, G.R., Hillyard, S.A., & Luck, S.J. (1993). Electrocortical substrates of visual selective attention. In D. Meyer & S. Kornblum (Eds.), Attention and Performance XIV. Cambridge, MA: MIT Press (pp. 219–243).
Marks, L.E. (2004). Cross-modal interactions in speeded classification. In G. Calvert, C. Spence, & B.E. Stein (Eds.), The Handbook of Multisensory Processes. Cambridge, MA: MIT Press (pp. 85–106).
Martinez, A., Anllo-Vento, L., Sereno, M. I., Frank, L. R., Buxton, R. B., Dubowitz, D. J., ... & Hillyard, S. A. (1999). Involvement of striate and extrastriate visual cortical areas in spatial attention. Nature Neuroscience, 2, 364-369.
Melara, R.D., & Mounts, J.R. (1993). Selective attention to Stroop dimensions: effects of baseline discriminability, response mode, and practice. Memory & Cognition, 21, 627–645.
Melcher, D., & Colby, C.L. (2008). Trans-saccadic perception. Trends in Cognitive Sciences, 12, 466–473.
Mounts, J. R., & Tomaselli, R. G. (2005). Competition for representation is mediated by relative attentional salience. Acta Psychologica, 118, 261-275.
Murray, M.M., Wylie, G.R., Higgins, B.A., Javitt, D.C., Schroeder, C.E., & Foxe, J.J. (2002). The spatiotemporal dynamics of illusory contour processing: combined high-density electrical mapping, source analysis, and functional magnetic resonance imaging. Journal of Neuroscience, 22, 5055–5073.
Murray, S. O., Kersten, D., Olshausen, B. A., Schrater, P., & Woods, D. L. (2002). Shape perception reduces activity in human primary visual cortex. Proceedings of the National Academy of Sciences USA, 99, 15164-15169.
Murray, S. O. (2008). The effects of spatial attention in early human visual cortex are stimulus independent. Journal of Vision, 8(10).
Nakatani, C., Pollatsek, A., & Johnson, S. H. (2002). Viewpoint-dependent recognition of scenes. The Quarterly Journal of Experimental Psychology: Section A, 55(1), 115-139.
Nakayama, K., & Silverman, G.H. (1986). Serial and parallel processing of visual feature conjunctions. Nature, 320, 264-265.
Nauhaus, I., Nielsen, K.J., Disney, A.A., & Callaway, E.M. (2012). Orthogonal micro-organization of orientation and spatial frequency in primate primary visual cortex. Nature Neuroscience, 15, doi:10.1038/nn.3255.
Nee, D.E., Wager, T.D., & Jonides, J. (2007). Interference resolution: insights from a meta-analysis of neuroimaging tasks. Cognitive, Affective, & Behavioral Neuroscience, 7, 1–17.
Neisser, U. (1967). Cognitive psychology. East Norwalk, CT, US: Appleton-Century-Crofts.
Neisser, U. (1976). Cognition and reality: Principles and implications of cognitive psychology. WH Freeman, Holt & Co.
Nikolaev, A.R., & van Leeuwen, C. (2004). Flexibility in spatial and non-spatial feature grouping: an Event-Related Potentials study. Cognitive Brain Research, 22, 13–25.
Nikolaev, A.R., Gepshtein, S., Kubovy, M., & van Leeuwen, C. (2008). Dissociation of early evoked cortical activity in perceptual grouping. Experimental Brain Research, 186, 107–122.
Op de Beeck, H., Wagemans, J., & Vogels, R. (2001). Inferotemporal neurons represent low-dimensional configurations of parameterized shapes. Nature Neuroscience, 4, 1244-1252.
Patching, G.R., & Quinlan, P.T. (2002). Garner and congruence effects in the speeded classification of bimodal signals. Journal of Experimental Psychology: Human Perception and Performance, 28, 755–775.
Plomp, G., Liu, L., van Leeuwen, C., & Ioannides, A.A. (2006). The mosaic stage in amodal completion as characterized by magnetoencephalography responses. Journal of Cognitive Neuroscience, 18, 1394–1405.
Polat, U., Mizobe, K., Pettet, M. W., Kasamatsu, T., & Norcia, A. M. (1998). Collinear stimuli regulate visual responses depending on cell’s contrast threshold.
Nature, 391, 580–584.
Pomerantz, J.R. (1983). Global and local precedence: selective attention in form and motion perception. Journal of Experimental Psychology: General, 112, 516–540.
Pomerantz, J.R., & Lockhead, G.R. (1991). Perception of structure: an overview. In G.R. Lockhead & J.R. Pomerantz (Eds.), The Perception of Structure. Washington, DC: American Psychological Association (pp. 1–20).
Pomerantz, J.R., & Pristach, E.A. (1989). Emergent features, attention, and perceptual glue in visual form perception. Journal of Experimental Psychology: Human Perception & Performance, 15, 635–649.
Pomerantz, J.R., Pristach, E.A., & Carson, C.E. (1989). Attention and object perception. In B. Shepp & S. Ballesteros (Eds.), Object Perception: Structure and Process. Hillsdale, NJ: Erlbaum (pp. 53–89).
Pomerantz, J. R., Sager, L. C., & Stoever, R. J. (1977). Perception of wholes and of their component parts: some configural superiority effects. Journal of Experimental Psychology: Human Perception and Performance, 3(3), 422.
Qiu, F. T., & Von Der Heydt, R. (2005). Figure and ground in the visual cortex: V2 combines stereoscopic cues with Gestalt rules. Neuron, 47(1), 155.
Quiroga, R.Q., Kreiman, G., Koch, C., & Fried, I. (2008). Sparse but not ‘grandmother-cell’ coding in the medial temporal lobe. Trends in Cognitive Sciences, 12, 87–91.
Rao, R. P., & Ballard, D. H. (1999). Predictive coding in the visual cortex: a functional interpretation of some extra-classical receptive-field effects. Nature Neuroscience, 2, 79-87.
Rensink, R.A., & Enns, J.T. (1995). Preemption effects in visual search: Evidence for low-level grouping. Psychological Review, 102, 101-130.
Ringach, D., Hawken, M., & Shapley, R. (1997). The dynamics of orientation tuning in the macaque monkey striate cortex. Nature, 387, 281–284.
Roelfsema, P.R. (2006). Cortical algorithms for perceptual grouping. Annual Review of Neuroscience, 29, 203-227.
Roelfsema, P. R., Lamme, V. A., & Spekreijse, H. (1998).
Object-based attention in the primary visual cortex of the macaque monkey. Nature, 395(6700), 376-381.
Sergent, J. (1982). The cerebral balance of power: Confrontation or cooperation? Journal of Experimental Psychology: Human Perception and Performance, 8, 253-272.
Skarda, C. A., & Freeman, W. J. (1987). How brains make chaos in order to make sense of the world. Behavioral and Brain Sciences, 10, 161-195.
Stins, J., & van Leeuwen, C. (1993). Context influence on the perception of figures as conditional upon perceptual organization strategies. Perception & Psychophysics, 53, 34–42.
Stroop, J.R. (1935). Studies of interference in serial verbal reactions. Journal of Experimental Psychology, 18, 643–662.
Sugita, Y. (1999). Grouping of image fragments in primary visual cortex. Nature, 401, 269-272.
Tanaka, K., Saito, H., Fukada, Y., & Moriya, M. (1991). Coding visual images of objects in the inferotemporal cortex of the macaque monkey. Journal of Neurophysiology, 66, 170–189.
Treisman, A., & Gelade, G. (1980). A feature integration theory of attention. Cognitive Psychology, 12, 97-136.
Treisman, A., & Sato, S. (1990). Conjunction search revisited. Journal of Experimental Psychology: Human Perception & Performance, 16, 459-478.
Tsunoda, K., Yamane, Y., Nishizaki, M., & Tanifuji, M. (2001). Complex objects are represented in macaque inferotemporal cortex by the combination of feature columns. Nature Neuroscience, 4(8), 832-838.
Ungerleider, L.G., & Mishkin, M. (1982). Two cortical visual systems. In D.J. Ingle, M.A. Goodale, & R.J.W. Mansfield (Eds.), Analysis of Visual Behavior. Cambridge, MA: MIT Press (pp. 549–580).
van Leeuwen, C., & Bakker, L. (1995). Stroop can occur without Garner interference: Strategic and mandatory influences in multidimensional stimuli. Perception & Psychophysics, 57, 379–392.
van Leeuwen, C., Steyvers, M., & Nooter, M. (1997). Stability and intermittency in large-scale coupled oscillator models for perceptual segmentation.
Journal of Mathematical Psychology, 41, 319–344.
von der Heydt, R., & Peterhans, E. (1989). Mechanisms of contour perception in monkey visual cortex. I. Lines of pattern discontinuity. Journal of Neuroscience, 9, 1731-1748.
Wanning, A., Stanisor, L., & Roelfsema, P.R. (2011). Automatic spread of attentional response modulation along Gestalt criteria in primary visual cortex. Nature Neuroscience, 14, 1243-1244.
Wolfe, J. M., Cave, K. R., & Franzel, S. L. (1988). Guided search: An alternative to the feature integration model for visual search. Journal of Experimental Psychology: Human Perception & Performance, 15, 419-433.
Wolfe, J.M., & Cave, K.R. (1999). Psychophysical evidence for a binding problem in human vision. Neuron, 24, 11–17.
Yokoi, I., & Komatsu, H. (2009). Relationship between neural responses and visual grouping in the monkey parietal cortex. Journal of Neuroscience, 29, 13210-13221.
Young, M.P., & Yamane, S. (1992). Sparse population coding of faces in the inferotemporal cortex. Science, 256, 1327–1331.
Zipser, K., Lamme, V. A., & Schiller, P. H. (1996). Contextual modulation in primary visual cortex. The Journal of Neuroscience, 16, 7376-7389.
Zhou, H., Friedman, H. S., & Von Der Heydt, R. (2000). Coding of border ownership in monkey visual cortex. The Journal of Neuroscience, 20(17), 6594-6611.