Economics 140A Population Regression Models


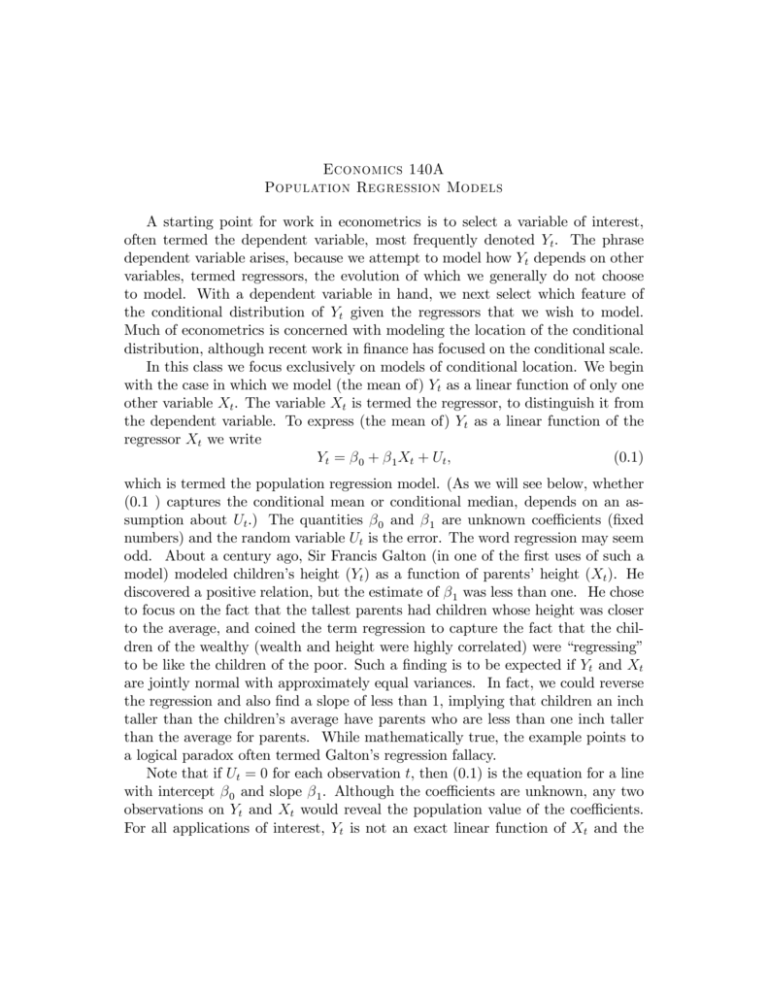

A starting point for work in econometrics is to select a variable of interest, often termed the dependent variable, most frequently denoted $Y_t$. The phrase dependent variable arises because we attempt to model how $Y_t$ depends on other variables, termed regressors, the evolution of which we generally do not choose to model. With a dependent variable in hand, we next select which feature of the conditional distribution of $Y_t$ given the regressors we wish to model. Much of econometrics is concerned with modeling the location of the conditional distribution, although recent work in finance has focused on the conditional scale. In this class we focus exclusively on models of conditional location.

We begin with the case in which we model (the mean of) $Y_t$ as a linear function of only one other variable, $X_t$. The variable $X_t$ is termed the regressor, to distinguish it from the dependent variable. To express (the mean of) $Y_t$ as a linear function of the regressor $X_t$ we write

$$Y_t = \beta_0 + \beta_1 X_t + U_t, \tag{0.1}$$

which is termed the population regression model. (As we will see below, whether (0.1) captures the conditional mean or the conditional median depends on an assumption about $U_t$.) The quantities $\beta_0$ and $\beta_1$ are unknown coefficients (fixed numbers) and the random variable $U_t$ is the error.

The word regression may seem odd. About a century ago, Sir Francis Galton (in one of the first uses of such a model) modeled children's height ($Y_t$) as a function of parents' height ($X_t$). He discovered a positive relation, but the estimate of $\beta_1$ was less than one. He chose to focus on the fact that the tallest parents had children whose height was closer to the average, and coined the term regression to capture the fact that the children of the wealthy (wealth and height were highly correlated) were "regressing" to be like the children of the poor. Such a finding is to be expected if $Y_t$ and $X_t$ are jointly normal with approximately equal variances: with equal variances the population slope equals the correlation between $Y_t$ and $X_t$, which is below one unless the two heights are perfectly correlated.
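The claim can be checked with a small simulation. The Python sketch below is illustrative only: it assumes parent and child heights are jointly normal with a common mean of 68 inches, a common standard deviation of 3 inches, and a correlation of 0.5 (hypothetical values, not Galton's data), and the helpers `ols_slope` and `simulate_heights` are names introduced here. Because the variances are equal, both the regression of child height on parent height and the reverse regression of parent height on child height should give a slope near 0.5, below one.

```python
import math
import random


def ols_slope(x, y):
    """Least-squares slope of y on x: sample Cov(x, y) / sample Var(x)."""
    n = len(x)
    mx = sum(x) / n
    my = sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    return sxy / sxx


def simulate_heights(n, rho, mean=68.0, sd=3.0, seed=0):
    """Draw (parent, child) heights that are jointly normal with equal means,
    equal standard deviations, and correlation rho (all hypothetical values)."""
    rng = random.Random(seed)
    parents, children = [], []
    for _ in range(n):
        z1 = rng.gauss(0.0, 1.0)
        z2 = rng.gauss(0.0, 1.0)
        # Standard construction of a standard-normal pair with correlation rho.
        p = z1
        c = rho * z1 + math.sqrt(1.0 - rho ** 2) * z2
        parents.append(mean + sd * p)
        children.append(mean + sd * c)
    return parents, children


parents, children = simulate_heights(n=100_000, rho=0.5)
forward = ols_slope(parents, children)  # child height regressed on parent height
reverse = ols_slope(children, parents)  # parent height regressed on child height
print(f"slope of child on parent: {forward:.3f}")
print(f"slope of parent on child: {reverse:.3f}")
```

Raising `rho` toward one pushes both slopes toward one; only a perfect correlation removes the regression toward the mean.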
In fact, we could reverse the regression and also find a slope of less than one, implying that children an inch taller than the children's average have parents who are less than one inch taller than the average for parents. While mathematically true, the example points to a logical paradox often termed Galton's regression fallacy.

Note that if $U_t = 0$ for each observation $t$, then (0.1) is the equation for a line with intercept $\beta_0$ and slope $\beta_1$. Although the coefficients are unknown, any two observations on $Y_t$ and $X_t$ (with distinct values of $X_t$) would reveal the population value of the coefficients. For all applications of interest, $Y_t$ is not an exact linear function of $X_t$, and the error, which captures the departures from the exact linear function, is not zero. Because the error is unobservable, if the error is not zero then two observations on $Y_t$ and $X_t$ will not reveal the population value of the coefficients.

Given that the error plays such a large role in defining the method of analysis, we must be clear as to what the error captures. In many instances the individuals who gather the data (measure $Y_t$ and $X_t$) are distinct from the individuals who wish to estimate the coefficients. As there are many ways to measure complicated economic variables (for example, what is the right way to measure the output of a university?), the individuals who measure the data may not have used a method that is concordant with the feature that is interesting to estimate. Certain economic variables are obtained by survey (Are you employed? How much did you spend on food last week?), and systematic errors can be introduced by lying or forgetfulness. Other economic variables are simply not well measured because they are subjective (What is the full-employment level for the economy? How many families live in poverty?). Even in the absence of these features, it is unwise to assume that the error is zero. To be clear, consider the following example.
You are working for a large grocery chain and have been assigned the task of estimating a price elasticity for aged Parmesan cheese. Let $Y_t$ capture the quantity of cheese sold at grocery store $t$ on a given day and let $X_t$ be the corresponding price per pound for the cheese. You vary the price across stores in an attempt to learn the demand response. What could possibly introduce error? First, the quantity sold may be mismeasured: at store 3 one of the cash registers was accidentally unplugged from the electronic inventory system for several hours in the morning. As a result, any cheese sold during that period was not recorded and $Y_3$ is mismeasured. Second, the model may be incorrect. For example, you set high prices in a store located in an Italian neighborhood, where the price elasticity is lower (in absolute value) than elsewhere. Third, random occurrences are a feature of any model. For example, in one store a pipe in the display case breaks, preventing shoppers from getting to the cheese. Or a large cheese consumer decides not to buy on the day you gather data because she had a pizza the night before and now wishes no more cheese.

To guide us in forming regression models, we present a collection of baseline assumptions that form the classic regression model. For the classic regression model, we can determine an optimal estimator of the parameters of interest (most often the coefficients). While the assumptions of the classic model are rarely true, the classic model is a useful baseline. By careful thought we can determine which assumptions do not hold, and how to obtain optimal estimators under the relaxed assumptions. The assumptions are as follows.

Assumption 1: The regression model is correctly specified. The correctly specified model is linear in the coefficients with an additive error term.

Remark: For observation $t$, the regression model is written as

$$Y_t = \beta_0 + \beta_1 X_t + U_t,$$

where $X_t$ is a scalar regressor and $(\beta_0, \beta_1)$ are the population (true) values.
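A minimal Python sketch of the model in Assumption 1, tied to the earlier point that two observations pin down the coefficients only when the error is zero. The population values $\beta_0 = 2$ and $\beta_1 = 0.5$, the two regressor values, and the standard normal errors are hypothetical choices, and `line_through` is a helper introduced here for illustration.

```python
import random


def line_through(p1, p2):
    """Intercept and slope of the line through two (x, y) points; requires x1 != x2."""
    (x1, y1), (x2, y2) = p1, p2
    slope = (y2 - y1) / (x2 - x1)
    return y1 - slope * x1, slope


BETA0, BETA1 = 2.0, 0.5  # hypothetical population coefficients
xs = [1.0, 4.0]          # two distinct (hypothetical) regressor values

# Case 1: U_t = 0 for every t, so two observations recover the coefficients exactly.
exact = [(x, BETA0 + BETA1 * x) for x in xs]
b0_exact, b1_exact = line_through(*exact)

# Case 2: an unobserved, nonzero error U_t is added to each observation.
rng = random.Random(1)
noisy = [(x, BETA0 + BETA1 * x + rng.gauss(0.0, 1.0)) for x in xs]
b0_noisy, b1_noisy = line_through(*noisy)

print("no error:  ", b0_exact, b1_exact)
print("with error:", round(b0_noisy, 3), round(b1_noisy, 3))
```

With the error switched off, the two-point line returns the population coefficients exactly; with an error added, it returns values that depend on the unobserved draws of $U_t$.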
Assumption 2: The regressors have no perfect multicollinearity and are measured without error. The regressors are exogenous.

Remark: If the regressors are exogenous and measured without error, then the regressors are independent of the error. Hence if Assumption 2 includes the condition that the regressors are exogenous, the remaining assumptions governing the behavior of the error term do not need to include reference to the regressors. If the variance of $X_t$ is not zero, then the assumption that $X_t$ and $U_t$ are independent identifies $\beta_1$. One can think of $\beta_1 X_t$ as an additive shifter for the conditional distribution of $Y_t$ given $X_t$. (To obtain the properties of the ordinary least squares (OLS) estimators, we do not need to assume that the regressors are independent of the error. Rather, we need only assume that the first two moments of the error do not depend on the regressors.) An additional assumption is needed to identify $\beta_0$.

Assumption 3: The error term has zero population mean.

Remark: For the scalar error $U_t$, the assumption of zero population mean is expressed as $E U_t = 0$. Assumption 3, expressed as a function of the dependent variable, is $E(Y_t \mid X_t) = \beta_0 + \beta_1 X_t$. If, instead of Assumption 3, one assumed $\mathrm{Med}(U_t) = 0$, then $\beta_0 + \beta_1 X_t$ would be the conditional median of $Y_t$ given $X_t$.

Assumption 4: The error term has constant variance.

Remark: If the error term has constant variance, then the error term is homoskedastic. Assumption 4 is expressed as $E(U_t^2) = \sigma^2$. Assumption 4, expressed as a function of the dependent variable, is $\mathrm{Var}(Y_t \mid X_t) = \sigma^2$.

Assumption 5: The error term from one observation is uncorrelated with the error term from any other observation.

Remark: Assumption 5 is expressed as $E(U_s U_t) = 0$ if $s \neq t$. Assumption 5, expressed as a function of the dependent variable, is $\mathrm{Cov}(Y_s, Y_t \mid X_s, X_t) = 0$.

Assumption 6: The error has a univariate Gaussian distribution.
Remark: The assumption of a Gaussian distribution, in conjunction with Assumptions 3-4, is represented as $U_t \sim N(0, \sigma^2)$. Assumption 6, expressed as a function of the dependent variable, is $Y_t \mid X_t \sim N(\beta_0 + \beta_1 X_t, \sigma^2)$.

Assumption Explanation

Assumption 1 does not restrict attention to functions that are linear in $Y_t$ and $X_t$. In fact, Assumption 1 holds for

$$g(Y_t) = \beta_0 + \beta_1 h(X_t) + U_t.$$

(Examples with a single regressor: squared, log, and log-log specifications.)

Assumption 2: To identify the coefficients, we cannot have perfect multicollinearity. Consider a single regressor that is a constant, and so is perfectly collinear with the column vector of ones. (Graph)

Assumptions 2 and 3 contain a basic insight into linear regression models. If the regressor is exogenous and measured without error, then the regressor and the error are uncorrelated:

$$E(U_t X_t) = E_X\left[X_t\, E(U_t \mid X_t)\right] = E_X\left[X_t\, E(U_t)\right] = 0,$$

where the second equality uses the independence of $X_t$ and $U_t$ (Assumption 2) and the last equality is determined by Assumption 3. If the regressor and the error were correlated, we would mistakenly attribute the effect of $U_t$ on $Y_t$ to $X_t$. (Discuss for a single regressor with both positive and negative correlation with the error.)

Note that the real condition is that $E(U_t \mid X_t)$ be a constant that does not vary over $t$. For example, if $E(U_t \mid X_t) = \mu$, then the presence of an intercept allows us to rewrite the model in such a way that Assumption 3 holds:

$$Y_t = \beta_0 + \beta_1 X_t + U_t$$

is equivalently written as

$$Y_t = (\beta_0 + \mu) + \beta_1 X_t + (U_t - \mu),$$

where the new error $U_t - \mu$ has zero mean. The intercept always absorbs the mean of $U_t$, which is one source of the difficulty in interpreting the intercept.

Assumption 4 leads directly to efficiency of estimators. A measure of the accuracy of an observation is its variance: the larger the variance, the noisier the observation. If all the errors have the same variance, then the information contained in each observation is the same and the observations should be equally weighted.
If the variance differed across errors, then the observations with larger error variance would be prone to larger quantities of noise and should be downweighted.

Assumption 5 also leads directly to efficiency of estimators. If the errors were correlated, then there would be information in one error about the value of another error. That information could be used to improve estimation, which typically would require far more than simply weighting the observations equally.

Assumption 6 augments the assumptions on the first two moments of the errors with knowledge of the full distribution. Such additional knowledge leads to equivalence between the OLS and maximum likelihood (ML) estimators of the coefficients.
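A minimal Python sketch of the OLS and ML equivalence under Assumption 6, using hypothetical simulated data ($\beta_0 = 1$, $\beta_1 = 0.8$, $\sigma = 1.5$, 200 observations). The helpers `ols` and `gaussian_loglik` are introduced here for illustration. For any fixed $\sigma^2 > 0$ the Gaussian log-likelihood equals a constant minus $\mathrm{SSR}/(2\sigma^2)$, where SSR is the sum of squared residuals, so the coefficients that minimize the SSR (OLS) also maximize the likelihood. The sketch checks this by perturbing the OLS estimates.

```python
import math
import random


def ols(x, y):
    """Closed-form OLS intercept and slope for a single regressor."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    b1 = sxy / sxx
    return my - b1 * mx, b1


def gaussian_loglik(b0, b1, sigma2, x, y):
    """Log-likelihood of y given x when Y_t | X_t ~ N(b0 + b1 * X_t, sigma2)."""
    n = len(x)
    ssr = sum((b - b0 - b1 * a) ** 2 for a, b in zip(x, y))
    return -0.5 * n * math.log(2 * math.pi * sigma2) - ssr / (2 * sigma2)


# Hypothetical data generated under Assumptions 1-6.
rng = random.Random(2)
x = [rng.uniform(0.0, 10.0) for _ in range(200)]
y = [1.0 + 0.8 * a + rng.gauss(0.0, 1.5) for a in x]

b0_hat, b1_hat = ols(x, y)
sigma2 = 1.0  # any fixed positive value gives the same maximizing coefficients
best = gaussian_loglik(b0_hat, b1_hat, sigma2, x, y)

# Moving the coefficients away from the OLS values raises the SSR,
# so the log-likelihood falls in every direction tried here.
perturbations = [(0.05, 0.0), (-0.05, 0.0), (0.0, 0.01), (0.0, -0.01), (0.05, -0.01)]
perturbed = [gaussian_loglik(b0_hat + d0, b1_hat + d1, sigma2, x, y)
             for d0, d1 in perturbations]
print(all(ll < best for ll in perturbed))  # True
print("OLS estimates:", round(b0_hat, 3), round(b1_hat, 3))
```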