Solutions to Selected Problems In: Pattern Classification by Duda

advertisement

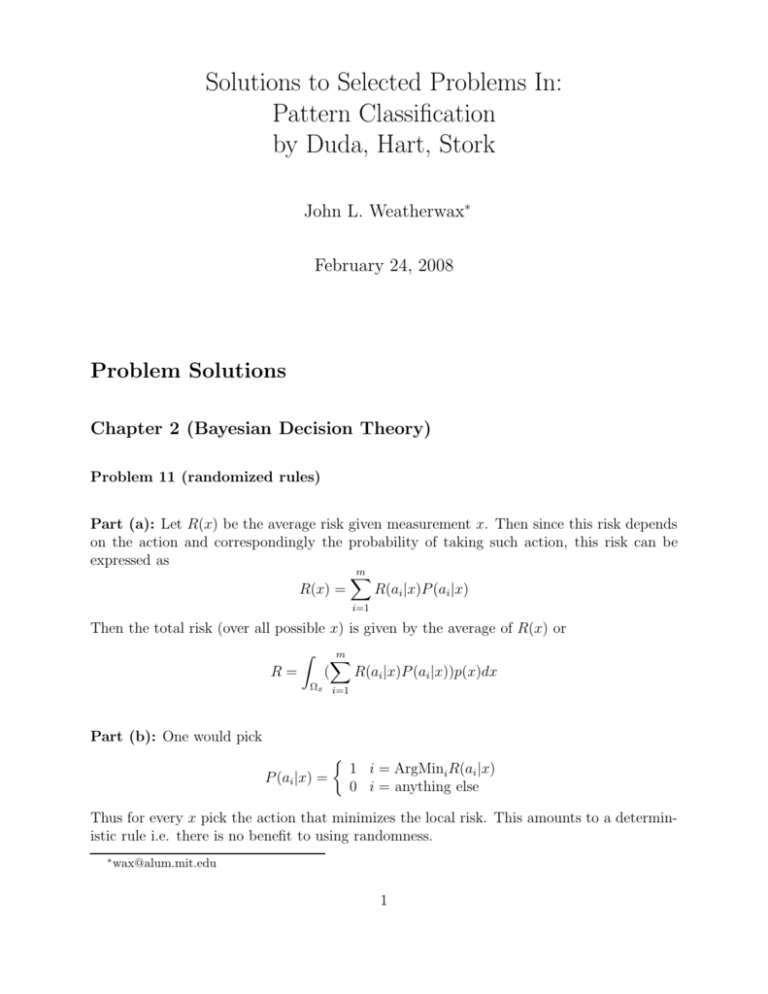

Solutions to Selected Problems In:

Pattern Classification

by Duda, Hart, Stork

John L. Weatherwax∗

February 24, 2008

Problem Solutions

Chapter 2 (Bayesian Decision Theory)

Problem 11 (randomized rules)

Part (a): Let $R(x)$ be the average risk given measurement $x$. Since this risk depends on the action taken and on the probability of taking that action, it can be expressed as

$$R(x) = \sum_{i=1}^{m} R(a_i|x) P(a_i|x) .$$

Then the total risk (over all possible $x$) is given by the average of $R(x)$, or

$$R = \int_{\Omega_x} \left( \sum_{i=1}^{m} R(a_i|x) P(a_i|x) \right) p(x)\,dx .$$

Part (b): One would pick

$$P(a_i|x) = \begin{cases} 1 & i = \arg\min_{k} R(a_k|x) \\ 0 & \text{otherwise} \end{cases}$$

Thus for every $x$ we pick the action that minimizes the local risk. This amounts to a deterministic rule, i.e. there is no benefit to using randomness.

∗ wax@alum.mit.edu

Part (c): Yes. When the deterministic decision rule in use is suboptimal, randomizing some decisions might further improve performance, although how this would be done is difficult to say in general.

Problem 13

Let

$$\lambda(\alpha_i|\omega_j) = \begin{cases} 0 & i = j \\ \lambda_r & i = c + 1 \\ \lambda_s & \text{otherwise} \end{cases}$$

Then since the definition of risk is expected loss we have

$$R(\alpha_i|x) = \sum_{j=1}^{c} \lambda(\alpha_i|\omega_j) P(\omega_j|x) , \quad i = 1, 2, \ldots, c \tag{1}$$

$$R(\alpha_{c+1}|x) = \lambda_r \tag{2}$$

Now for $i = 1, 2, \ldots, c$ the risk can be simplified as

$$R(\alpha_i|x) = \lambda_s \sum_{j=1,\, j \ne i}^{c} P(\omega_j|x) = \lambda_s (1 - P(\omega_i|x)) .$$

Our decision function is to choose the action with the smallest risk. This means that we pick the reject option if and only if

$$\lambda_r < \lambda_s (1 - P(\omega_i|x)) \quad \forall\, i = 1, 2, \ldots, c$$

This is equivalent to

$$P(\omega_i|x) < 1 - \frac{\lambda_r}{\lambda_s} \quad \forall\, i = 1, 2, \ldots, c$$

This can be interpreted as follows: if all posterior probabilities are below the threshold $1 - \frac{\lambda_r}{\lambda_s}$ we should reject. Otherwise we pick action $i$ such that

$$\lambda_s (1 - P(\omega_i|x)) < \lambda_s (1 - P(\omega_j|x)) \quad \forall\, j = 1, 2, \ldots, c,\; j \ne i$$

This is equivalent to

$$P(\omega_i|x) > P(\omega_j|x) \quad \forall\, j \ne i$$

This can be interpreted as selecting the class with the largest posterior probability. In summary we should reject if and only if

$$P(\omega_i|x) < 1 - \frac{\lambda_r}{\lambda_s} \quad \forall\, i = 1, 2, \ldots, c \tag{3}$$

Note that if $\lambda_r = 0$ there is no loss for rejecting (equivalent benefit/reward for correctly classifying) and we always reject. If $\lambda_r > \lambda_s$, the loss from rejecting is greater than the loss from misclassifying and we should never reject. This can be seen from the above since $\frac{\lambda_r}{\lambda_s} > 1$ so $1 - \frac{\lambda_r}{\lambda_s} < 0$, so equation 3 will never be satisfied.
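The decision rule derived above can be sketched in a few lines of Python. This is only an illustration: the function name `decide_with_reject` and the convention of returning `-1` for the reject action are assumptions made here, not part of the original solution.

```python
from typing import Sequence


def decide_with_reject(posteriors: Sequence[float],
                       lambda_r: float,
                       lambda_s: float) -> int:
    """Return the index of the chosen class, or -1 for the reject action.

    posteriors[i] plays the role of P(omega_i | x); lambda_r and lambda_s
    are the rejection and substitution losses (lambda_s > 0 is assumed).
    """
    # The class with the largest posterior has the smallest risk
    # lambda_s * (1 - P(omega_i | x)) among the c classification actions.
    best = max(range(len(posteriors)), key=lambda i: posteriors[i])
    # Reject when every posterior (hence the largest one) is below
    # the threshold 1 - lambda_r / lambda_s.
    if posteriors[best] < 1.0 - lambda_r / lambda_s:
        return -1
    return best


print(decide_with_reject([0.5, 0.3, 0.2], lambda_r=0.2, lambda_s=1.0))
print(decide_with_reject([0.9, 0.05, 0.05], lambda_r=0.2, lambda_s=1.0))
```

With $\lambda_r > \lambda_s$ the threshold is negative, so the reject branch is never taken, in line with the remark above.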

Problem 31 (the probability of error)

Part (a): We can assume without loss of generality that $\mu_1 < \mu_2$. If this is not the case initially, change the labels of class one and class two so that it is. We begin by recalling the probability of error $P_e$

$$\begin{aligned} P_e &= P(\hat{H} = H_1|H_2) P(H_2) + P(\hat{H} = H_2|H_1) P(H_1) \\ &= \left( \int_{-\infty}^{\xi} p(x|H_2)\,dx \right) P(H_2) + \left( \int_{\xi}^{\infty} p(x|H_1)\,dx \right) P(H_1) . \end{aligned}$$

Here $\xi$ is the decision boundary between the two classes, i.e. we classify $x$ as class $H_1$ when $x < \xi$ and as class $H_2$ when $x > \xi$. Here $P(H_i)$ are the priors for each of the two classes. We begin by finding the Bayes decision boundary $\xi$ under the minimum error criterion. From the discussion in the book, the decision boundary is given by a likelihood ratio test. That is, we decide class $H_2$ if

$$\frac{p(x|H_2)}{p(x|H_1)} \ge \frac{P(H_1)}{P(H_2)} \, \frac{C_{21} - C_{11}}{C_{12} - C_{22}} ,$$

and classify the point as a member of $H_1$ otherwise. The decision boundary $\xi$ is the point at which this inequality is an equality. If we use a minimum probability of error criterion the costs $C_{ij}$ are given by $C_{ij} = 1 - \delta_{ij}$, and the decision boundary reduces when $P(H_1) = P(H_2) = \frac{1}{2}$ to

$$p(\xi|H_1) = p(\xi|H_2) .$$

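If each conditional distribution is Gaussian, $p(x|H_i) = N(\mu_i, \sigma_i^2)$, then the above expression becomes

$$\frac{1}{\sqrt{2\pi}\sigma_1} \exp\left\{ -\frac{1}{2} \frac{(\xi - \mu_1)^2}{\sigma_1^2} \right\} = \frac{1}{\sqrt{2\pi}\sigma_2} \exp\left\{ -\frac{1}{2} \frac{(\xi - \mu_2)^2}{\sigma_2^2} \right\} .$$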

If we assume (for simplicity) that $\sigma_1 = \sigma_2 = \sigma$ the above expression simplifies to $(\xi - \mu_1)^2 = (\xi - \mu_2)^2$, or on taking the square root of both sides we get $\xi - \mu_1 = \pm(\xi - \mu_2)$. If we take the plus sign in this equation we get the contradiction that $\mu_1 = \mu_2$. Taking the minus sign and solving for $\xi$ we find our decision threshold given by

$$\xi = \frac{1}{2} (\mu_1 + \mu_2) .$$

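Again since we have uniform priors ($P(H_1) = P(H_2) = \frac{1}{2}$), we have an error probability given by

$$2 P_e = \int_{-\infty}^{\xi} \frac{1}{\sqrt{2\pi}\sigma} \exp\left\{ -\frac{1}{2} \frac{(x - \mu_2)^2}{\sigma^2} \right\} dx + \int_{\xi}^{\infty} \frac{1}{\sqrt{2\pi}\sigma} \exp\left\{ -\frac{1}{2} \frac{(x - \mu_1)^2}{\sigma^2} \right\} dx ,$$

or

$$2\sqrt{2\pi}\sigma P_e = \int_{-\infty}^{\xi} \exp\left\{ -\frac{1}{2} \frac{(x - \mu_2)^2}{\sigma^2} \right\} dx + \int_{\xi}^{\infty} \exp\left\{ -\frac{1}{2} \frac{(x - \mu_1)^2}{\sigma^2} \right\} dx .$$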

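To evaluate the first integral use the substitution $v = \frac{x - \mu_2}{\sqrt{2}\sigma}$ so $dv = \frac{dx}{\sqrt{2}\sigma}$, and in the second use $v = \frac{x - \mu_1}{\sqrt{2}\sigma}$ so $dv = \frac{dx}{\sqrt{2}\sigma}$. Since $\xi = \frac{1}{2}(\mu_1 + \mu_2)$, this then gives

$$2\sqrt{2\pi}\sigma P_e = \sigma\sqrt{2} \int_{-\infty}^{\frac{\mu_1 - \mu_2}{2\sqrt{2}\sigma}} e^{-v^2} dv + \sigma\sqrt{2} \int_{-\frac{\mu_1 - \mu_2}{2\sqrt{2}\sigma}}^{\infty} e^{-v^2} dv ,$$

or

$$P_e = \frac{1}{2\sqrt{\pi}} \int_{-\infty}^{\frac{\mu_1 - \mu_2}{2\sqrt{2}\sigma}} e^{-v^2} dv + \frac{1}{2\sqrt{\pi}} \int_{-\frac{\mu_1 - \mu_2}{2\sqrt{2}\sigma}}^{\infty} e^{-v^2} dv .$$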

Now since $e^{-v^2}$ is an even function, we can make the substitution $u = -v$ and transform the second integral into an integral that is the same as the first. Doing so we find that

$$P_e = \frac{1}{\sqrt{\pi}} \int_{-\infty}^{\frac{\mu_1 - \mu_2}{2\sqrt{2}\sigma}} e^{-v^2} dv .$$

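Doing the same transformation we can convert the first integral into the second and we have the combined representation for $P_e$ of

$$P_e = \frac{1}{\sqrt{\pi}} \int_{-\infty}^{\frac{\mu_1 - \mu_2}{2\sqrt{2}\sigma}} e^{-v^2} dv = \frac{1}{\sqrt{\pi}} \int_{-\frac{\mu_1 - \mu_2}{2\sqrt{2}\sigma}}^{\infty} e^{-v^2} dv .$$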

Since we have that $\mu_1 < \mu_2$, we see that the lower limit on the second integral is positive. In the case when $\mu_1 > \mu_2$ we would switch the names of the two classes and still arrive at the above. In the general case our probability of error is given by

$$P_e = \frac{1}{\sqrt{\pi}} \int_{\frac{a}{\sqrt{2}}}^{\infty} e^{-v^2} dv ,$$

with $a$ defined as $a = \frac{|\mu_1 - \mu_2|}{2\sigma}$. To match exactly the book let $v = \frac{u}{\sqrt{2}}$ so that $dv = \frac{du}{\sqrt{2}}$ and the above integral becomes

$$P_e = \frac{1}{\sqrt{2\pi}} \int_{a}^{\infty} e^{-u^2/2} du .$$

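In terms of the error function (which is defined as $\mathrm{erf}(x) = \frac{2}{\sqrt{\pi}} \int_0^x e^{-t^2} dt$), we have that

$$\begin{aligned} P_e &= \frac{1}{2} \left[ \frac{2}{\sqrt{\pi}} \int_{\frac{a}{\sqrt{2}}}^{\infty} e^{-v^2} dv \right] = \frac{1}{2} \left[ \frac{2}{\sqrt{\pi}} \int_{0}^{\infty} e^{-v^2} dv - \frac{2}{\sqrt{\pi}} \int_{0}^{\frac{a}{\sqrt{2}}} e^{-v^2} dv \right] \\ &= \frac{1}{2} \left[ 1 - \frac{2}{\sqrt{\pi}} \int_{0}^{\frac{a}{\sqrt{2}}} e^{-v^2} dv \right] \\ &= \frac{1}{2} - \frac{1}{2} \mathrm{erf}\left( \frac{|\mu_1 - \mu_2|}{2\sqrt{2}\sigma} \right) . \end{aligned}$$

Since the error function is programmed into many mathematical libraries this form is easily evaluated.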
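As an illustration of how easily the closed form is evaluated, here is a minimal Python sketch using the standard library's `math.erf`. The function name and the example values ($\mu_1 = 0$, $\mu_2 = 2$, $\sigma = 1$) are chosen here for illustration only.

```python
import math


def bayes_error_equal_variance(mu1: float, mu2: float, sigma: float) -> float:
    """P_e = 1/2 - 1/2 erf(|mu1 - mu2| / (2 sqrt(2) sigma)).

    Assumes two Gaussian classes with equal priors and a common sigma.
    """
    a = abs(mu1 - mu2) / (2.0 * sigma)
    return 0.5 - 0.5 * math.erf(a / math.sqrt(2.0))


# Example values (illustrative): class means two standard deviations apart.
print(bayes_error_equal_variance(0.0, 2.0, 1.0))
```

When the two means coincide the function returns 1/2, the error rate of guessing under equal priors.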

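Part (b): From the given inequality

$$P_e = \frac{1}{\sqrt{2\pi}} \int_{a}^{\infty} e^{-t^2/2} dt \le \frac{1}{\sqrt{2\pi}\, a} e^{-a^2/2} ,$$

and the definition of $a$ as the lower limit of the integration in Part (a) above we have that

$$P_e \le \frac{1}{\sqrt{2\pi}} \, \frac{1}{\frac{|\mu_1 - \mu_2|}{2\sigma}} \, e^{-\frac{(\mu_1 - \mu_2)^2}{2(4\sigma^2)}} = \frac{2\sigma}{\sqrt{2\pi}\,|\mu_1 - \mu_2|} \exp\left\{ -\frac{1}{8} \frac{(\mu_1 - \mu_2)^2}{\sigma^2} \right\} \to 0 ,$$

as $\frac{|\mu_1 - \mu_2|}{\sigma} \to +\infty$. This can happen if $\mu_1$ and $\mu_2$ get far apart or $\sigma$ shrinks to zero. In each case the classification problem gets easier.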
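A quick numerical check of the inequality used in Part (b) can be sketched as follows. The function names are illustrative, and the exact tail is evaluated with the standard library's `math.erfc`.

```python
import math


def gaussian_tail(a: float) -> float:
    """Exact tail (1/sqrt(2 pi)) * integral from a to infinity of exp(-t^2/2) dt."""
    return 0.5 * math.erfc(a / math.sqrt(2.0))


def tail_upper_bound(a: float) -> float:
    """Upper bound exp(-a^2/2) / (sqrt(2 pi) a), valid for a > 0."""
    return math.exp(-0.5 * a * a) / (math.sqrt(2.0 * math.pi) * a)


# Illustrative values of a = |mu1 - mu2| / (2 sigma).
for a in (0.5, 1.0, 2.0, 4.0):
    print(a, gaussian_tail(a), tail_upper_bound(a))
```

Both columns shrink rapidly as $a$ grows, which is the behaviour the limit above describes.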

Problem 32 (probability of error in higher dimensions)

As in Problem 31 the classification decision boundary is now the set of points $x$ that satisfy

$$||x - \mu_1||^2 = ||x - \mu_2||^2 .$$

Expanding each side of this expression we find

$$||x||^2 - 2 x^t \mu_1 + ||\mu_1||^2 = ||x||^2 - 2 x^t \mu_2 + ||\mu_2||^2 .$$

Canceling the common $||x||^2$ from each side and grouping the terms linear in $x$ we have that

$$x^t (\mu_1 - \mu_2) = \frac{1}{2} \left( ||\mu_1||^2 - ||\mu_2||^2 \right) ,$$

which is the equation for a hyperplane.

Problem 34 (error bounds on non-Gaussian data)

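Part (a): Since the Bhattacharyya bound is a specialization of the Chernoff bound, we begin by considering the Chernoff bound. Specifically, using the bound $\min(a, b) \le a^{\beta} b^{1-\beta}$, for $a > 0$, $b > 0$, and $0 \le \beta \le 1$, one can show that

$$P(\text{error}) \le P(\omega_1)^{\beta} P(\omega_2)^{1-\beta} e^{-k(\beta)} \quad \text{for } 0 \le \beta \le 1 .$$

Here the expression $k(\beta)$ is given by

$$k(\beta) = \frac{\beta(1 - \beta)}{2} (\mu_2 - \mu_1)^t \left[ \beta\Sigma_1 + (1 - \beta)\Sigma_2 \right]^{-1} (\mu_2 - \mu_1) + \frac{1}{2} \ln\left( \frac{|\beta\Sigma_1 + (1 - \beta)\Sigma_2|}{|\Sigma_1|^{\beta} |\Sigma_2|^{1-\beta}} \right) .$$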

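When we desire to compute the Bhattacharyya bound we assign $\beta = 1/2$. For a two class scalar problem with symmetric means and equal variances, that is where $\mu_1 = -\mu$, $\mu_2 = +\mu$, and $\sigma_1 = \sigma_2 = \sigma$, the expression for $k(\beta)$ above becomes

$$\begin{aligned} k(\beta) &= \frac{\beta(1 - \beta)}{2} (2\mu) \left[ \beta\sigma^2 + (1 - \beta)\sigma^2 \right]^{-1} (2\mu) + \frac{1}{2} \ln\left( \frac{|\beta\sigma^2 + (1 - \beta)\sigma^2|}{\sigma^{2\beta} \sigma^{2(1-\beta)}} \right) \\ &= \frac{2\beta(1 - \beta)\mu^2}{\sigma^2} . \end{aligned}$$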

Since the above expression is valid for all $\beta$ in the range $0 \le \beta \le 1$, we can obtain the tightest possible bound by computing the maximum of the expression $k(\beta)$ (since the dominant $\beta$-dependent factor in the bound is $e^{-k(\beta)}$). To evaluate this maximum of $k(\beta)$ we take the derivative with respect to $\beta$ and set that result equal to zero, obtaining

$$\frac{d}{d\beta} \left( \frac{2\beta(1 - \beta)\mu^2}{\sigma^2} \right) = 0$$

which gives

$$1 - 2\beta = 0$$

or $\beta = 1/2$. Thus in this case the Chernoff bound equals the Bhattacharyya bound. When $\beta = 1/2$ we have the following bound on our error probability

$$P(\text{error}) \le \sqrt{P(\omega_1) P(\omega_2)} \, e^{-\frac{\mu^2}{2\sigma^2}} .$$

To further simplify things, if we assume that our variance is such that $\sigma^2 = \mu^2$, and that our priors over class are taken to be uniform ($P(\omega_1) = P(\omega_2) = 1/2$), the above becomes

$$P(\text{error}) \le \frac{1}{2} e^{-1/2} \approx 0.3033 .$$
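The numbers above can be reproduced with a short Python sketch that evaluates the scalar Chernoff bound on a grid of $\beta$ values. The grid resolution and the function names are assumptions made for this illustration.

```python
import math


def k_beta(beta: float, mu: float, sigma: float) -> float:
    """k(beta) = 2 beta (1 - beta) mu^2 / sigma^2 for means -mu, +mu and equal sigma."""
    return 2.0 * beta * (1.0 - beta) * mu ** 2 / sigma ** 2


def chernoff_bound(beta: float, mu: float, sigma: float, p1: float = 0.5) -> float:
    """P(w1)^beta * P(w2)^(1 - beta) * exp(-k(beta))."""
    return p1 ** beta * (1.0 - p1) ** (1.0 - beta) * math.exp(-k_beta(beta, mu, sigma))


# Grid search over beta in [0, 1] with sigma^2 = mu^2 and uniform priors.
betas = [i / 100 for i in range(101)]
best_beta = min(betas, key=lambda b: chernoff_bound(b, 1.0, 1.0))
print(best_beta, chernoff_bound(best_beta, 1.0, 1.0))
```

With uniform priors the prior factor is constant in $\beta$, so the grid minimum falls at $\beta = 1/2$, matching the derivative calculation.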

Chapter 3 (Maximum Likelihood and Bayesian Estimation)

Problem 34

In the problem statement we are told that to operate on data of "size" $n$ requires $f(n)$ numeric calculations. Assuming that each calculation requires $10^{-9}$ seconds of time to compute, we can process a data structure of size $n$ in $10^{-9} f(n)$ seconds. If we want our results finished by a time $T$ then the largest data structure that we can process must satisfy

$$10^{-9} f(n) \le T \quad \text{or} \quad n \le f^{-1}(10^{9} T) .$$

Converting the ranges of times into seconds we find that

$$T = \{1 \text{ sec}, 1 \text{ hour}, 1 \text{ day}, 1 \text{ year}\} = \{1 \text{ sec}, 3600 \text{ sec}, 86400 \text{ sec}, 31536000 \text{ sec}\} .$$

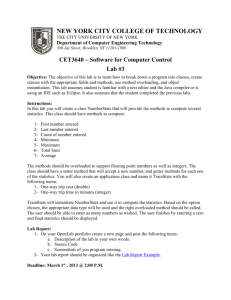

Using a very naive algorithm we can compute the largest $n$ such that $f(n) \le 10^{9} T$ by simply incrementing $n$ repeatedly. This is done in the Matlab script prob 34 chap 3.m and the results are displayed in Table XXX.
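The Matlab script is not reproduced here. As a rough Python sketch of the same naive idea, the code below increments $n$ until $f(n)$ exceeds the operation budget $10^{9} T$. The three growth functions ($n^3$, $2^n$, $n!$) are illustrative assumptions, picked because they grow fast enough for the naive loop to finish quickly. They are not taken from the text above.

```python
from math import factorial


def largest_n(f, budget_ops: int) -> int:
    """Largest integer n >= 0 with f(n) <= budget_ops, found by incrementing n.

    Assumes f is increasing on the positive integers.
    """
    n = 0
    while f(n + 1) <= budget_ops:
        n += 1
    return n


OPS_PER_SECOND = 10 ** 9  # one calculation takes 1e-9 seconds
times_sec = {"1 sec": 1, "1 hour": 3600, "1 day": 86400, "1 year": 31536000}

# Hypothetical example complexities (assumptions for this sketch).
growth = {"n^3": lambda n: n ** 3, "2^n": lambda n: 2 ** n, "n!": factorial}

for name, f in growth.items():
    row = {label: largest_n(f, OPS_PER_SECOND * t) for label, t in times_sec.items()}
    print(name, row)
```

For slowly growing $f$ (for example $f(n) = n$) this loop would need on the order of $10^{9} T$ iterations, so inverting $f$ directly or using a bisection search would be the more practical choice.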

Problem 35 (recursive v.s. direct calculation of means and covariances)

Part (a): To compute $\hat{\mu}_n$ we have to add $n$ $d$-dimensional vectors, resulting in $nd$ computations. We then have to divide each component by the scalar $n$, resulting in another $n$ computations. Thus in total we have

$$nd + n = (1 + d)n$$

calculations.

To compute the sample covariance matrix, $C_n$, we have to first compute the differences $x_k - \hat{\mu}_n$, requiring $d$ subtractions for each vector $x_k$. Since we must do this for each of the $n$ vectors $x_k$ we have a total of $nd$ calculations required to compute all of the $x_k - \hat{\mu}_n$ difference vectors. We then have to compute the outer products $(x_k - \hat{\mu}_n)(x_k - \hat{\mu}_n)'$, which require $d^2$ calculations to produce each (in a naive implementation). Since we have $n$ such outer products to compute, computing them all requires $nd^2$ operations. We next have to add all of these $n$ outer products together, resulting in another set of $(n - 1)d^2$ operations. Finally dividing by the scalar $n - 1$ requires another $d^2$ operations. Thus in total we have

$$nd + nd^2 + (n - 1)d^2 + d^2 = 2nd^2 + nd$$

calculations.

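Part (b): We can derive recursive formulas for $\hat{\mu}_n$ and $C_n$ in the following way. First for $\hat{\mu}_n$ we note that

$$\begin{aligned} \hat{\mu}_{n+1} &= \frac{1}{n+1} \sum_{k=1}^{n+1} x_k = \frac{x_{n+1}}{n+1} + \frac{n}{n+1} \left( \frac{1}{n} \sum_{k=1}^{n} x_k \right) \\ &= \frac{1}{n+1} x_{n+1} + \frac{n}{n+1} \hat{\mu}_n . \end{aligned}$$

Writing $\frac{n}{n+1}$ as $\frac{n+1-1}{n+1} = 1 - \frac{1}{n+1}$ the above becomes

$$\begin{aligned} \hat{\mu}_{n+1} &= \frac{1}{n+1} x_{n+1} + \hat{\mu}_n - \frac{1}{n+1} \hat{\mu}_n \\ &= \hat{\mu}_n + \frac{1}{n+1} (x_{n+1} - \hat{\mu}_n) , \end{aligned}$$

as expected.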

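To derive the recursive update formula for the covariance matrix $C_n$ we begin by recalling the definition of $C_{n+1}$ of

$$C_{n+1} = \frac{1}{n} \sum_{k=1}^{n+1} (x_k - \hat{\mu}_{n+1})(x_k - \hat{\mu}_{n+1})' .$$

Now introducing the recursive definition of the mean $\hat{\mu}_{n+1}$ as computed above we have that

$$\begin{aligned} C_{n+1} &= \frac{1}{n} \sum_{k=1}^{n+1} \left( x_k - \hat{\mu}_n - \frac{1}{n+1}(x_{n+1} - \hat{\mu}_n) \right) \left( x_k - \hat{\mu}_n - \frac{1}{n+1}(x_{n+1} - \hat{\mu}_n) \right)' \\ &= \frac{1}{n} \sum_{k=1}^{n+1} (x_k - \hat{\mu}_n)(x_k - \hat{\mu}_n)' - \frac{1}{n(n+1)} \sum_{k=1}^{n+1} (x_k - \hat{\mu}_n)(x_{n+1} - \hat{\mu}_n)' \\ &\quad - \frac{1}{n(n+1)} \sum_{k=1}^{n+1} (x_{n+1} - \hat{\mu}_n)(x_k - \hat{\mu}_n)' + \frac{1}{n(n+1)^2} \sum_{k=1}^{n+1} (x_{n+1} - \hat{\mu}_n)(x_{n+1} - \hat{\mu}_n)' . \end{aligned}$$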

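Since $\hat{\mu}_n = \frac{1}{n} \sum_{k=1}^{n} x_k$ we see that

$$\hat{\mu}_n - \frac{1}{n} \sum_{k=1}^{n} x_k = 0 \quad \text{or} \quad \frac{1}{n} \sum_{k=1}^{n} (\hat{\mu}_n - x_k) = 0 .$$

Using this fact the second and third terms in the above can be greatly simplified. For example we have that

$$\sum_{k=1}^{n+1} (x_k - \hat{\mu}_n) = \sum_{k=1}^{n} (x_k - \hat{\mu}_n) + (x_{n+1} - \hat{\mu}_n) = x_{n+1} - \hat{\mu}_n .$$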

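Using this identity and the fact that the fourth term has a summand that does not depend on $k$ we have $C_{n+1}$ given by

$$\begin{aligned} C_{n+1} &= \frac{n-1}{n} \left( \frac{1}{n-1} \sum_{k=1}^{n} (x_k - \hat{\mu}_n)(x_k - \hat{\mu}_n)' \right) + \frac{1}{n} (x_{n+1} - \hat{\mu}_n)(x_{n+1} - \hat{\mu}_n)' \\ &\quad - \frac{2}{n(n+1)} (x_{n+1} - \hat{\mu}_n)(x_{n+1} - \hat{\mu}_n)' + \frac{1}{n(n+1)} (x_{n+1} - \hat{\mu}_n)(x_{n+1} - \hat{\mu}_n)' \\ &= \left( 1 - \frac{1}{n} \right) C_n + \left( \frac{1}{n} - \frac{1}{n(n+1)} \right) (x_{n+1} - \hat{\mu}_n)(x_{n+1} - \hat{\mu}_n)' \\ &= C_n - \frac{1}{n} C_n + \frac{1}{n} \left( \frac{n+1-1}{n+1} \right) (x_{n+1} - \hat{\mu}_n)(x_{n+1} - \hat{\mu}_n)' \\ &= \frac{n-1}{n} C_n + \frac{1}{n+1} (x_{n+1} - \hat{\mu}_n)(x_{n+1} - \hat{\mu}_n)' , \end{aligned}$$

which is the result in the book.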
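As a sanity check, the two recursions can be compared against the direct formulas in pure Python. This is a minimal sketch: the function names are illustrative, vectors are plain lists, and the covariance after a single sample is initialised to zeros, which is harmless because the factor $\frac{n-1}{n}$ vanishes at $n = 1$.

```python
def direct_mean_cov(xs):
    """Sample mean and covariance (with 1/(n-1) normalisation) computed directly."""
    n, d = len(xs), len(xs[0])
    mean = [sum(x[i] for x in xs) / n for i in range(d)]
    cov = [[sum((x[i] - mean[i]) * (x[j] - mean[j]) for x in xs) / (n - 1)
            for j in range(d)] for i in range(d)]
    return mean, cov


def update_mean_cov(mean, cov, n, x):
    """Fold sample x (the (n+1)-th) into the mean and covariance of n samples."""
    d = len(x)
    diff = [x[i] - mean[i] for i in range(d)]          # x_{n+1} - mu_n
    new_mean = [mean[i] + diff[i] / (n + 1) for i in range(d)]
    new_cov = [[(n - 1) / n * cov[i][j] + diff[i] * diff[j] / (n + 1)
                for j in range(d)] for i in range(d)]
    return new_mean, new_cov


def recursive_mean_cov(xs):
    """Run the recursion over all samples, starting from the first one."""
    d = len(xs[0])
    mean = list(xs[0])
    cov = [[0.0] * d for _ in range(d)]
    for n, x in enumerate(xs[1:], start=1):
        mean, cov = update_mean_cov(mean, cov, n, x)
    return mean, cov


data = [[1.0, 2.0], [2.0, 0.0], [4.0, 5.0], [3.0, 3.0]]  # illustrative data
print(direct_mean_cov(data))
print(recursive_mean_cov(data))
```

Each call to `update_mean_cov` touches only $O(d^2)$ numbers regardless of $n$, which is the saving that the recursive form offers.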

Part (c): To compute $\hat{\mu}_n$ using the recurrence formulation we need $d$ subtractions to compute $x_n - \hat{\mu}_{n-1}$, and the scalar division requires another $d$ operations. Finally, addition of $\hat{\mu}_{n-1}$ requires another $d$ operations, giving in total $3d$ operations. This is to be compared with the $(d + 1)n$ operations in the non-recursive case.

To compute $C_n$ recursively we need $d$ computations for the difference $x_n - \hat{\mu}_{n-1}$, then $d^2$ operations to compute the outer product $(x_n - \hat{\mu}_{n-1})(x_n - \hat{\mu}_{n-1})'$, $d^2$ operations to scale this outer product by $\frac{1}{n}$, another $d^2$ operations to multiply $C_{n-1}$ by $\frac{n-2}{n-1}$, and finally $d^2$ operations to add this product to the other one. Thus in total we have

$$d + d^2 + d^2 + d^2 + d^2 = 4d^2 + d .$$

This is to be compared with $2nd^2 + nd$ in the non-recursive case. For large $n$ the non-recursive calculations are much more computationally intensive.

Chapter 6 (Multilayer Neural Networks)

Problem 39 (derivatives of matrix inner products)

Part (a): We present a slightly different method to prove this here. We desire the gradient (or derivative) of the function

$$\phi \equiv x^T K x .$$

The $i$-th component of this derivative is given by

$$\frac{\partial \phi}{\partial x_i} = \frac{\partial}{\partial x_i} x^T K x = e_i^T K x + x^T K e_i$$

where $e_i$ is the $i$-th elementary basis vector for $R^n$, i.e. it has a 1 in the $i$-th position and zeros everywhere else. Now since $x^T K e_i$ is a scalar it equals its own transpose,

$$x^T K e_i = (x^T K e_i)^T = e_i^T K^T x ,$$

and the above becomes

$$\frac{\partial \phi}{\partial x_i} = e_i^T K x + e_i^T K^T x = e_i^T (K + K^T) x .$$

Since multiplying by $e_i^T$ on the left selects the $i$-th row from the expression to its right we see that the full gradient expression is given by

$$\nabla \phi = (K + K^T) x ,$$

as requested in the text. Note that this expression can also be proved easily by writing each term in component notation as suggested in the text.

Part (b): If $K$ is symmetric then since $K^T = K$ the above expression simplifies to

$$\nabla \phi = 2Kx ,$$

as claimed in the book.
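The gradient formula can be checked numerically with central differences. The following pure-Python sketch uses an arbitrary non-symmetric $2 \times 2$ matrix. The matrix, the point $x$, and the step size `h` are illustrative assumptions.

```python
def quad_form(K, x):
    """phi(x) = x^T K x for a square matrix K given as a list of rows."""
    n = len(x)
    return sum(x[i] * K[i][j] * x[j] for i in range(n) for j in range(n))


def grad_formula(K, x):
    """Gradient (K + K^T) x from the derivation above."""
    n = len(x)
    return [sum((K[i][j] + K[j][i]) * x[j] for j in range(n)) for i in range(n)]


def grad_numeric(K, x, h=1e-6):
    """Central-difference approximation of the gradient of quad_form."""
    grad = []
    for i in range(len(x)):
        xp, xm = list(x), list(x)
        xp[i] += h
        xm[i] -= h
        grad.append((quad_form(K, xp) - quad_form(K, xm)) / (2.0 * h))
    return grad


K = [[1.0, 2.0], [3.0, 4.0]]   # deliberately not symmetric
x = [1.0, -1.0]
print(grad_formula(K, x))
print(grad_numeric(K, x))
```

For a symmetric `K` the same check reproduces $2Kx$, as in Part (b).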

Chapter 9 (Algorithm-Independent Machine Learning)

Problem 9 (sums of binomial coefficients)

Part (a): We first recall the binomial theorem which is

$$(x + y)^n = \sum_{k=0}^{n} \binom{n}{k} x^k y^{n-k} .$$

If we let $x = 1$ and $y = 1$ then $x + y = 2$ and the sum above becomes

$$2^n = \sum_{k=0}^{n} \binom{n}{k} ,$$

which is the stated identity. As an aside, note also that if we let $x = -1$ and $y = 1$ then $x + y = 0$ and we obtain another interesting identity involving the binomial coefficients

$$0 = \sum_{k=0}^{n} \binom{n}{k} (-1)^k .$$
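Both identities are easy to confirm for small $n$ with the standard library's `math.comb` (available in Python 3.8 and later). The helper names are illustrative. Note that the alternating identity requires $n \ge 1$, since for $n = 0$ the sum is 1.

```python
from math import comb


def row_sum(n: int) -> int:
    """Sum of the binomial coefficients C(n, k) for k = 0..n."""
    return sum(comb(n, k) for k in range(n + 1))


def alternating_sum(n: int) -> int:
    """Sum of (-1)^k C(n, k) for k = 0..n."""
    return sum((-1) ** k * comb(n, k) for k in range(n + 1))


for n in range(1, 6):
    print(n, row_sum(n), 2 ** n, alternating_sum(n))
```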

Problem 23 (the average of the leave one out means)

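We define the leave one out mean $\mu_{(i)}$ as

$$\mu_{(i)} = \frac{1}{n-1} \sum_{j \ne i} x_j .$$

This can obviously be computed for every $i$. Now we want to consider the mean of the leave one out means. To do so first notice that $\mu_{(i)}$ can be written as

$$\begin{aligned} \mu_{(i)} &= \frac{1}{n-1} \sum_{j \ne i} x_j \\ &= \frac{1}{n-1} \left( \sum_{j=1}^{n} x_j - x_i \right) \\ &= \frac{1}{n-1} \left( n \left( \frac{1}{n} \sum_{j=1}^{n} x_j \right) - x_i \right) \\ &= \frac{n}{n-1} \hat{\mu} - \frac{x_i}{n-1} . \end{aligned}$$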

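With this we see that the mean of all of the leave one out means is given by

$$\begin{aligned} \frac{1}{n} \sum_{i=1}^{n} \mu_{(i)} &= \frac{1}{n} \sum_{i=1}^{n} \left( \frac{n}{n-1} \hat{\mu} - \frac{x_i}{n-1} \right) \\ &= \frac{n}{n-1} \hat{\mu} - \frac{1}{n-1} \left( \frac{1}{n} \sum_{i=1}^{n} x_i \right) \\ &= \frac{n}{n-1} \hat{\mu} - \frac{\hat{\mu}}{n-1} = \hat{\mu} , \end{aligned}$$

as expected.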
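A small numerical illustration of this result, using scalar data for brevity (the data values and function name are illustrative):

```python
def leave_one_out_means(xs):
    """Return the list of leave-one-out means mu_(i) = (sum_j x_j - x_i) / (n - 1)."""
    n = len(xs)
    total = sum(xs)
    return [(total - x) / (n - 1) for x in xs]


xs = [2.0, 4.0, 9.0, 1.0]
loo = leave_one_out_means(xs)
full_mean = sum(xs) / len(xs)
mean_of_loo = sum(loo) / len(loo)
print(loo, full_mean, mean_of_loo)
```

The individual leave-one-out means differ from one another, yet their average coincides with the ordinary sample mean.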

Problem 40 (maximum likelihood estimation with a binomial random variable)

Since $k$ has a binomial distribution with parameters $(n', p)$ we have that

$$P(k) = \binom{n'}{k} p^k (1 - p)^{n'-k} .$$

Then the $p$ that maximizes this expression is found by taking the derivative of the above (with respect to $p$), setting the resulting expression equal to zero, and solving for $p$. We find that this derivative is given by

$$\frac{d}{dp} P(k) = \binom{n'}{k} k p^{k-1} (1 - p)^{n'-k} + \binom{n'}{k} p^k (1 - p)^{n'-k-1} (n' - k)(-1) .$$

Setting this equal to zero and solving for $p$, we find that $p = \frac{k}{n'}$, the empirical counting estimate of the probability of success, as we were asked to show.
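The closed-form estimate can be cross-checked with a brute-force grid search over $p$. This is a sketch only: the grid size and the example counts ($k = 3$ successes in $n' = 10$ trials) are assumptions made for illustration.

```python
from math import comb


def binom_pmf(k: int, n: int, p: float) -> float:
    """P(k) = C(n, k) p^k (1 - p)^(n - k)."""
    return comb(n, k) * p ** k * (1.0 - p) ** (n - k)


def mle_grid(k: int, n: int, steps: int = 1000) -> float:
    """Value of p on a uniform grid over [0, 1] that maximises the likelihood."""
    grid = [i / steps for i in range(steps + 1)]
    return max(grid, key=lambda p: binom_pmf(k, n, p))


k, n_trials = 3, 10
print(mle_grid(k, n_trials), k / n_trials)
```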