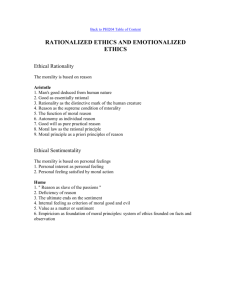

Moral Rationalization and the Integration of Situational Factors and
