Deep Learning in NLP


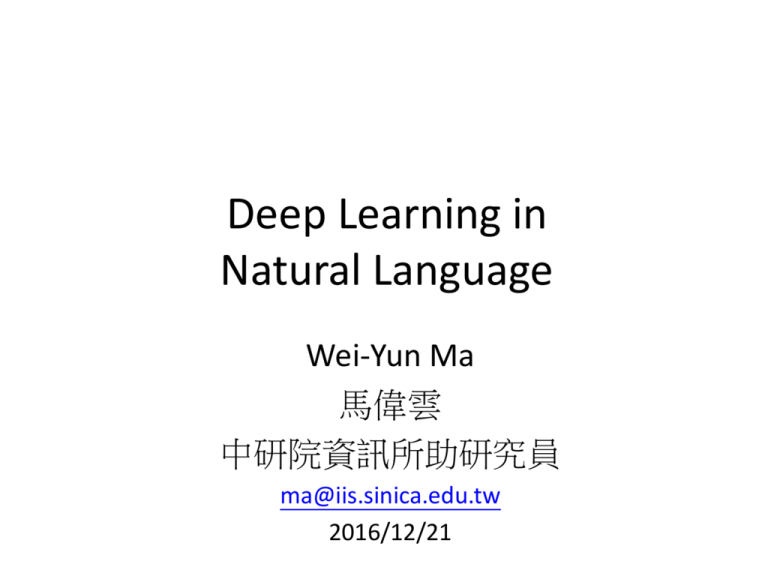

Deep Learning in Natural Language Processing
Wei-Yun Ma (馬偉雲), Assistant Research Fellow, Institute of Information Science, Academia Sinica
ma@iis.sinica.edu.tw
2016/12/21

Outline
• Introduction to applications of DL on NLP
• Word representations
• Named entity recognition using a window-based NN
• Language model using a recurrent NN
• Machine translation using LSTM
• Syntactic parsing using a recursive NN
• Sentiment analysis using a recursive NN
• Sentence classification using a convolutional NN
• Information retrieval using DSSM
• Knowledge base completion using knowledge base embedding
• Concluding remarks

Word Representations
• Dictionary-based word representation (discrete word representation): each word is a separate symbol, typically encoded as a 1-of-N vector.
• Are there other ways to represent a word?
(Adapted from the slides "Deep Learning for NLP (without Magic)" by Socher and Manning.)

Continuous Word Representations
• A word is represented as a dense vector, e.g. 蝴蝶 ("butterfly") = [0.234, 0.283, −0.435, 0.485, −0.934, −0.384, 0.234, 0.548, −0.834, 0.437, 0.483]
• A word embedding captures the meaning of a word and projects it into a semantic vector space.
(Picture adapted from "Deep Learning for NLP (without Magic)" by Socher and Manning.)

Why are Continuous Word Representations useful?
• They are trainable features: they can be trained directly through supervised training on labeled data.
• Alternatively, they can be pre-trained through unsupervised training on raw data and then further trained through supervised training on labeled data.
(Adapted from the NAACL 2015 tutorial by Wen-tau Yih, Xiaodong He and Jianfeng Gao.)
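The difference between the discrete and the continuous representations can be seen with cosine similarity. Below is a minimal sketch under stated assumptions: the three-word vocabulary and the 3-dimensional dense vectors are made up for illustration and are not trained embeddings. With 1-of-N vectors any two different words have similarity 0, while dense vectors can place related words such as 蝴蝶 (butterfly) and 鳳蝶 (swallowtail butterfly) close together.

```python
import math

def cosine(u, v):
    """Cosine similarity between two equal-length, non-zero vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)

# Hypothetical toy vocabulary: butterfly, swallowtail butterfly, factory.
vocab = ["蝴蝶", "鳳蝶", "工廠"]

# Discrete (dictionary-based) representation: one 1-of-N vector per word.
one_hot = {w: [1.0 if j == i else 0.0 for j in range(len(vocab))]
           for i, w in enumerate(vocab)}

# Continuous representation: made-up dense vectors, for illustration only.
dense = {
    "蝴蝶": [0.9, 0.1, 0.3],
    "鳳蝶": [0.8, 0.2, 0.35],
    "工廠": [-0.2, 0.9, -0.5],
}

for a, b in [("蝴蝶", "鳳蝶"), ("蝴蝶", "工廠")]:
    print(a, b,
          "one-hot:", round(cosine(one_hot[a], one_hot[b]), 3),
          "dense:", round(cosine(dense[a], dense[b]), 3))
```

In practice the dense vectors would come from training (for example with word2vec) rather than being written by hand.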
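Continuous Word Representations and Latent Semantic Analysis
(Slides adapted from the NAACL 2015 tutorial by Wen-tau Yih, Xiaodong He and Jianfeng Gao.)

Word2vec
Word2vec has two architectures (Mikolov et al., Workshop at ICLR 2013):
• Continuous Bag-of-Words (CBOW): predicts the current word from its surrounding context words.
• Skip-gram: predicts the surrounding context words from the current word.
Example sentence: 彩色 的 蝴蝶 翩翩 起舞 ("the colorful butterfly dances gracefully"). For the word 蝴蝶, CBOW uses context words such as 的 and 翩翩 to predict 蝴蝶, while Skip-gram uses 蝴蝶 to predict each of those context words.

Review: Softmax
• A softmax layer is used as the output layer:
$$y_i = \frac{e^{z_i}}{\sum_{j=1}^{3} e^{z_j}}$$
• Example: for $z_1 = 3$, $z_2 = 1$, $z_3 = -3$, we get $e^{z_1} \approx 20$, $e^{z_2} \approx 2.7$, $e^{z_3} \approx 0.05$, so $y_1 \approx 0.88$, $y_2 \approx 0.12$, $y_3 \approx 0$.
• The outputs behave like probabilities: $0 < y_i < 1$ and $\sum_i y_i = 1$.
(Adapted from slides of Hung-yi Lee.)

Skip-gram using Softmax
• The objective of the Skip-gram model is to maximize the average log probability
$$\frac{1}{T}\sum_{t=1}^{T}\sum_{-c \le j \le c,\ j \ne 0} \log p(w_{t+j} \mid w_t)$$
where $c$ is the size of the training context.
• The basic Skip-gram formulation defines $p(w_{t+j} \mid w_t)$ using the softmax function:
$$p(w_O \mid w_I) = \frac{\exp\left({v'_{w_O}}^{\top} v_{w_I}\right)}{\sum_{w=1}^{W} \exp\left({v'_{w}}^{\top} v_{w_I}\right)}$$
where $v_w$ and $v'_w$ are the input and output vector representations of $w$, and $W$ is the vocabulary size (Mikolov et al., NIPS 2013).
• This probability has to be computed during training, and its denominator sums over the entire vocabulary, so every step needs a huge amount of computation.

Skip-gram using Negative Sampling
• Negative sampling (NEG) is defined by the objective
$$\log \sigma\left({v'_{w_O}}^{\top} v_{w_I}\right) + \sum_{i=1}^{k} \mathbb{E}_{w_i \sim P_n(w)}\left[\log \sigma\left(-{v'_{w_i}}^{\top} v_{w_I}\right)\right]$$
where $k$ is the number of negative samples and $P_n(w)$ is the noise distribution they are drawn from.
• $\sigma$ is the sigmoid function: $\sigma(x) = \frac{1}{1 + e^{-x}}$.
• This objective replaces every $\log p(w_O \mid w_I)$ term during training. Each term needs only $k + 1$ dot products instead of a sum over the whole vocabulary, so it requires far less computation (Mikolov et al., NIPS 2013).
(See also Mikolov et al., Workshop at ICLR 2013.)

Named Entity Recognition using Window-based NN
• NER labels each word with a tag. Example:
I/O live/O in/O New/B-LOC York/I-LOC last/O year/O
• Tag set: O, B-PER, I-PER, B-LOC, I-LOC, B-ORG, I-ORG (B = beginning of an entity, I = inside an entity, O = outside any entity).
• The neural network tags one word at a time, taking as input the word together with the context words around it (a fixed-size window).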
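The window-based tagger described above can be sketched as follows. This is a minimal, untrained illustration under assumptions that are not from the slides: two context words on each side, 8-dimensional random embeddings, one tanh hidden layer without biases, and a softmax over the seven BIO tags. The class name `WindowTagger` and the `<PAD>` token are hypothetical. With random weights the predicted tags are meaningless; the point is the data flow from a word window to a tag distribution.

```python
import math
import random

TAGS = ["O", "B-PER", "I-PER", "B-LOC", "I-LOC", "B-ORG", "I-ORG"]
PAD = "<PAD>"  # hypothetical padding token for windows at sentence edges

def windows(tokens, half):
    """For each position, return the word plus `half` context words on each side."""
    padded = [PAD] * half + tokens + [PAD] * half
    return [padded[i:i + 2 * half + 1] for i in range(len(tokens))]

def softmax(z):
    m = max(z)
    exps = [math.exp(v - m) for v in z]
    total = sum(exps)
    return [e / total for e in exps]

class WindowTagger:
    """Hypothetical untrained tagger: concatenated window embeddings
    -> tanh hidden layer -> softmax over TAGS (biases omitted)."""

    def __init__(self, vocab, half=2, dim=8, hidden=16, seed=0):
        rng = random.Random(seed)
        self.half = half
        self.emb = {w: [rng.gauss(0, 0.1) for _ in range(dim)] for w in list(vocab) + [PAD]}
        n_in = (2 * half + 1) * dim
        self.W1 = [[rng.gauss(0, 0.1) for _ in range(n_in)] for _ in range(hidden)]
        self.W2 = [[rng.gauss(0, 0.1) for _ in range(hidden)] for _ in TAGS]

    def predict_proba(self, window):
        x = [v for w in window for v in self.emb[w]]  # concatenate the window's embeddings
        h = [math.tanh(sum(a * b for a, b in zip(row, x))) for row in self.W1]
        return softmax([sum(a * b for a, b in zip(row, h)) for row in self.W2])

sentence = ["I", "live", "in", "New", "York", "last", "year"]
tagger = WindowTagger(sentence)
for word, win in zip(sentence, windows(sentence, tagger.half)):
    probs = tagger.predict_proba(win)
    print(word, win, TAGS[probs.index(max(probs))])
```

Training would adjust the embeddings and both weight matrices, for example with a cross-entropy loss against gold tags such as New/B-LOC.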
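The softmax example in the review above ($z = 3, 1, -3$) can be checked numerically. This sketch subtracts the largest logit before exponentiating, a common numerical-stability trick that is not on the slide; it does not change the result.

```python
import math

def softmax(z):
    """y_i = exp(z_i) / sum_j exp(z_j), computed stably by shifting by max(z)."""
    m = max(z)
    exps = [math.exp(v - m) for v in z]
    total = sum(exps)
    return [e / total for e in exps]

y = softmax([3.0, 1.0, -3.0])
print([round(p, 2) for p in y])  # [0.88, 0.12, 0.0]
print(round(sum(y), 10))         # 1.0
```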
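To see why negative sampling is cheaper than the full softmax, here is a minimal sketch that computes one NEG term and the full-softmax $\log p(w_O \mid w_I)$ for a single (input, output) word pair. Assumptions not in the slides: random vectors instead of trained ones, hypothetical sizes (vocabulary 2,000, dimension 50, $k = 5$), and negatives drawn uniformly; Mikolov et al. (NIPS 2013) instead draw them from the noise distribution $P_n(w)$, the unigram distribution raised to the 3/4 power. The two numbers are different objectives and are not expected to match; what matters is how many dot products each one needs.

```python
import math
import random

def dot(u, v):
    return sum(a * b for a, b in zip(u, v))

def log_sigmoid(x):
    """log(1 / (1 + e^-x)), written to avoid overflow for large |x|."""
    return -math.log1p(math.exp(-x)) if x >= 0 else x - math.log1p(math.exp(x))

def neg_objective(v_in, v_out_pos, v_out_negs):
    """NEG term: log σ(v'_O · v_I) + Σ_i log σ(-v'_i · v_I); needs k + 1 dot products."""
    total = log_sigmoid(dot(v_out_pos, v_in))
    for v_neg in v_out_negs:
        total += log_sigmoid(-dot(v_neg, v_in))
    return total

def softmax_log_prob(v_in, out_vectors, target):
    """log p(w_O | w_I) under the full softmax; needs one dot product per vocabulary word."""
    scores = [dot(v, v_in) for v in out_vectors]
    m = max(scores)
    log_z = m + math.log(sum(math.exp(s - m) for s in scores))
    return scores[target] - log_z

rng = random.Random(0)
vocab_size, dim, k = 2000, 50, 5  # hypothetical sizes
v_in = [rng.gauss(0, 0.1) for _ in range(dim)]  # v_{w_I}
out_vectors = [[rng.gauss(0, 0.1) for _ in range(dim)] for _ in range(vocab_size)]  # v'_w
target = 42  # index of w_O (arbitrary)
# Uniform negatives for simplicity (word2vec uses P_n(w) instead).
negatives = rng.sample([i for i in range(vocab_size) if i != target], k)

neg = neg_objective(v_in, out_vectors[target], [out_vectors[i] for i in negatives])
full = softmax_log_prob(v_in, out_vectors, target)
print(f"NEG objective: {neg:.4f} ({k + 1} dot products)")
print(f"softmax log p: {full:.4f} ({vocab_size} dot products)")
```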
Language Model using Recurrent NN
• Review: recurrent NN. At each step the network reads an input $x_i$ and produces an output $y_i$; the input weights Wi, the hidden-to-hidden weights Wh and the output weights Wo are shared across steps. The same network is used again and again, so output $y_i$ depends on $x_1, x_2, \ldots, x_i$.
(Adapted from slides of Hung-yi Lee.)
• Language model: estimates the probability of a given word sequence.
• Problems of the traditional N-gram LM: it has no memory beyond the previous N − 1 words, and it needs smoothing.
Training (trigram example):
$$P(\text{彩色 的 蝴蝶}) = P(\text{彩色})\,P(\text{的} \mid \text{彩色})\,P(\text{蝴蝶} \mid \text{彩色 的})$$
$$P(\text{蝴蝶} \mid \text{彩色 的}) = \frac{\mathrm{count}(\text{彩色 的 蝴蝶})}{\mathrm{count}(\text{彩色 的})} = \frac{3}{789}$$
Testing:
$$P(\text{彩色 的 鳳蝶}) = P(\text{彩色})\,P(\text{的} \mid \text{彩色})\,P(\text{鳳蝶} \mid \text{彩色 的})$$
$$P(\text{鳳蝶} \mid \text{彩色 的}) = \frac{\mathrm{count}(\text{彩色 的 鳳蝶})}{\mathrm{count}(\text{彩色 的})} = \frac{0}{789}$$
Because 彩色 的 鳳蝶 ("colorful swallowtail butterfly") never occurs in the training data, the whole sequence gets probability 0, which is why smoothing is needed.
• A recurrent NN has memory, and it provides smoothing naturally.
• Training: the inputs are the 1-of-N encodings of "START", 彩色, 的 and 蝴蝶, and at each step the network outputs the probability of the next word: P(next word is 彩色), P(next word is 的), P(next word is 蝴蝶) and P(next word is "END").
• Testing: the inputs are the 1-of-N encodings of "START", 彩色, 的 and 鳳蝶, and the network outputs P(next word is 彩色), P(next word is 的), P(next word is 鳳蝶) and P(next word is "END").
(Adapted from slides of Hung-yi Lee.)

Machine Translation using LSTM
• Review: LSTM. An LSTM unit is a special neuron with 4 inputs and 1 output. Besides the input to its memory cell, it receives three signals from other parts of the network that control its input gate, forget gate and output gate.
(Adapted from slides of Hung-yi Lee.)
• Sequence-to-sequence translation (Sutskever et al., NIPS 2014): an LSTM reads the source-language sentence, and an LSTM followed by a softmax layer generates the target-language sentence. v is the fixed-dimensional representation of the input sequence, given by the last hidden state of the LSTM that reads the source.
• Model analysis (Sutskever et al., NIPS 2014).
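The count-based estimate and its zero-probability problem can be reproduced on a toy corpus. The three sentences below are hypothetical (they are not the data behind the 3/789 figure), and the estimate is the unsmoothed ratio count(w1 w2 w3) / count(w1 w2) used in the trigram example above.

```python
from collections import Counter
from fractions import Fraction

def ngram_counts(sentences):
    """Count bigrams and trigrams in whitespace-tokenized sentences."""
    bigrams, trigrams = Counter(), Counter()
    for s in sentences:
        w = s.split()
        bigrams.update(zip(w, w[1:]))
        trigrams.update(zip(w, w[1:], w[2:]))
    return bigrams, trigrams

def trigram_prob(bigrams, trigrams, w1, w2, w3):
    """Unsmoothed estimate P(w3 | w1 w2) = count(w1 w2 w3) / count(w1 w2)."""
    history = bigrams[(w1, w2)]
    if history == 0:
        return Fraction(0)  # unseen history: the ratio is undefined; 0 is returned here
    return Fraction(trigrams[(w1, w2, w3)], history)

# Hypothetical toy training corpus.
corpus = ["彩色 的 蝴蝶 翩翩 起舞", "彩色 的 花", "彩色 的 蝴蝶"]
bigrams, trigrams = ngram_counts(corpus)
print(trigram_prob(bigrams, trigrams, "彩色", "的", "蝴蝶"))  # 2/3
print(trigram_prob(bigrams, trigrams, "彩色", "的", "鳳蝶"))  # 0 (unseen trigram)
```

A smoothing method (add-one, for instance) would give the unseen trigram a small non-zero probability; the slides' point is that a recurrent NN language model gets this effect without a separate smoothing step.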
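The LSTM unit and the encoder side of the translation model can be sketched together. This is a minimal sketch under assumptions not in the slides: random untrained weights, no bias terms, and the standard gate equations (input gate $i$, forget gate $f$, output gate $o$, candidate cell input $g$; $c \leftarrow f \odot c + i \odot g$, $h = o \odot \tanh(c)$). The names `LSTMCell` and `encode` are hypothetical. It illustrates two points from the slides: the same cell is reused at every step, and the last hidden state gives a fixed-dimensional vector v whatever the input length.

```python
import math
import random

def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))

def matvec(W, v):
    return [sum(a * b for a, b in zip(row, v)) for row in W]

class LSTMCell:
    """Minimal LSTM cell with hypothetical random weights and no biases.
    Its four inputs: the candidate cell input g and the signals that drive
    the input (i), forget (f) and output (o) gates."""

    def __init__(self, n_in, n_hidden, seed=0):
        rng = random.Random(seed)
        def mat(rows, cols):
            return [[rng.gauss(0, 0.3) for _ in range(cols)] for _ in range(rows)]
        # One (W, U) pair per signal: W acts on the input x, U on the previous h.
        self.params = {k: (mat(n_hidden, n_in), mat(n_hidden, n_hidden)) for k in "ifog"}
        self.n_hidden = n_hidden

    def step(self, x, h, c):
        def pre(k):
            W, U = self.params[k]
            return [a + b for a, b in zip(matvec(W, x), matvec(U, h))]
        i = [sigmoid(z) for z in pre("i")]    # input gate
        f = [sigmoid(z) for z in pre("f")]    # forget gate
        o = [sigmoid(z) for z in pre("o")]    # output gate
        g = [math.tanh(z) for z in pre("g")]  # candidate input to the memory cell
        c_new = [fj * cj + ij * gj for fj, cj, ij, gj in zip(f, c, i, g)]
        h_new = [oj * math.tanh(cj) for oj, cj in zip(o, c_new)]
        return h_new, c_new

def encode(cell, xs):
    """Apply the same cell at every step; return the last hidden state (the vector v)."""
    h = [0.0] * cell.n_hidden
    c = [0.0] * cell.n_hidden
    for x in xs:
        h, c = cell.step(x, h, c)
    return h

cell = LSTMCell(n_in=3, n_hidden=4)
short = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0]]  # hypothetical 1-of-N inputs
longer = short + [[0.0, 0.0, 1.0]] * 5
print(len(encode(cell, short)), len(encode(cell, longer)))  # 4 4
```

In the full model of Sutskever et al., a second LSTM starts from v and a softmax layer predicts target-language words one at a time; that decoder is omitted here.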
Syntactic Parsing using Recursive NN
• What is parsing? Short answer: structure prediction.
(Adapted from Socher's deep learning course.)

Sentiment Analysis using Recursive NN
• What is sentiment analysis? Deciding whether a text expresses a negative or a positive opinion.
• Most methods start with a bag of words plus linguistic features, processing or lexicons, but such methods have difficulty telling apart the negative and positive example cases.
(Adapted from Socher's deep learning course.)
• Sentiment is classified into 5 classes (−−, −, 0, +, ++), and the class is decided by a softmax layer (Socher et al., ICML 2011).

Sentence Classification using Convolutional NN
(Adapted from Socher's deep learning course; model from Kim, EMNLP 2014.)
• Model comparison (adapted from "Deep Learning for NLP (without Magic)" by Socher and Manning).

Information Retrieval using DSSM
• A word string (a document or a query) is represented as a bag-of-words vector, and a deep network maps it to a compact semantic vector (figure source: http://www.cs.toronto.edu/~hinton/science.pdf).
• How can this mapping be learned? There is no target output to train against.
• Use click-through data: for query q1, document d1 was clicked (+) and d2 was not (−); for query q2, document d3 was not clicked (−) and d4 was clicked (+).
• Training: the vector of query q1 should be close to that of the clicked document d1 and far apart from that of the non-clicked document d2; likewise, the vector of q2 should be close to d4 and far apart from d3.
(Adapted from slides of Hung-yi Lee.)
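The click-through training idea above can be written as a loss. This minimal sketch makes several assumptions that are not in the slides: a tiny hypothetical English vocabulary, a single random tanh layer shared by queries and documents, and a loss of $-\log P(d^+ \mid q)$ where $P$ is a softmax over cosine similarities scaled by a factor `gamma` (10.0 here, an arbitrary choice). Minimizing this loss pulls q1 toward the clicked d1 and pushes it away from the non-clicked d2.

```python
import math
import random

def cosine(u, v):
    dot = sum(a * b for a, b in zip(u, v))
    return dot / (math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v)))

def bag_of_words(text, vocab):
    """Word counts over a fixed vocabulary; words outside it are ignored."""
    words = text.split()
    return [float(words.count(w)) for w in vocab]

def semantic_vector(bow, W):
    """Hypothetical one-layer network: bag-of-words -> tanh semantic vector."""
    return [math.tanh(sum(a * b for a, b in zip(row, bow))) for row in W]

def click_loss(q, d_pos, d_negs, gamma=10.0):
    """-log P(d+ | q), with P a softmax over gamma * cosine(q, d)
    taken over the clicked document and the non-clicked ones."""
    scores = [gamma * cosine(q, d) for d in [d_pos] + d_negs]
    m = max(scores)
    log_z = m + math.log(sum(math.exp(s - m) for s in scores))
    return log_z - scores[0]

vocab = ["deep", "learning", "nlp", "cooking", "recipe"]  # hypothetical
rng = random.Random(0)
W = [[rng.gauss(0, 0.5) for _ in vocab] for _ in range(3)]  # untrained, 3-d semantic space

def embed(text):
    return semantic_vector(bag_of_words(text, vocab), W)

q1 = embed("deep learning nlp")
d1 = embed("deep learning")   # clicked for q1 (+)
d2 = embed("cooking recipe")  # not clicked for q1 (-)
print(round(click_loss(q1, d1, [d2]), 4))
```

Training would update W by gradient descent on this loss over many click-through pairs; at retrieval time, documents would be ranked by `cosine(embed(new_query), embed(doc))`.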
DSSM vs. Typical DNN
• In a typical DNN, the output for an input is trained to match a reference (target). In DSSM, the query q and a clicked document d+ are each mapped to a vector, and the two vectors are trained to be close.
(Adapted from slides of Hung-yi Lee.)
• How to do retrieval? A new query q′ is mapped to a vector, and the documents whose vectors are closest to it (e.g., d1, d2) are retrieved.
(Adapted from slides of Hung-yi Lee.)

Knowledge Base Completion using Knowledge Base Embedding
The slides in this part are adapted from the NAACL 2015 tutorial by Wen-tau Yih, Xiaodong He and Jianfeng Gao:
• Knowledge base applications
• Reasoning with a knowledge base
• Knowledge base embedding
• Knowledge base representation: tensor
• Tensor decomposition objective
• Measuring the degree of a relationship

Concluding Remarks
• Pre-training through unsupervised training on raw data, followed by supervised training on labeled data.
• The physical meaning of each model is crucial.
• Novel applications are everywhere.
• Collaboration between traditional NLP (and its resources) and DNN-based NLP (and its resources).

My Current Work: Lexical Knowledge Base Embedding
• E-HowNet (http://ehownet.iis.sinica.edu.tw/index.php)
• Example: 工廠 ("factory") is defined in E-HowNet in terms of 場所 ("place") with the relations domain, location and telic, involving the concepts 製造 ("manufacture") and 工 ("industry"), and is mapped to a dense vector such as [0.234, 0.283, −0.435, 0.485, −0.934, −0.384, 0.234, 0.548, −0.834, 0.437, 0.483].

My Current Work: Dependency-based Chinese Word Embedding
[Figure: CKIP Chinese Parser analysis of the sentence 大學 兼任 助理 納保 爭議 未 歇 ("The dispute over insurance enrollment for part-time university assistants has not subsided"), showing dependency relations such as property, theme, negation, apposition and range, head markers (Head[S], Head[VP], Head[NP]) and part-of-speech tags (Nac, Dc, Ncb, VG2, Nab, VA12, Nb).]

Demo
• http://dep2.ckip.cc

Reference Tutorials
• "Deep Learning for NLP (without Magic)" by Socher and Manning
• NAACL 2015 tutorial by Wen-tau Yih, Xiaodong He and Jianfeng Gao
• UFLDL Tutorial
• Socher's deep learning course, 2015
• Hung-yi Lee's deep learning course, 2015

Thank you for your attention!