The Hendrick Hudson Lincoln-Douglas Philosophical Handbook

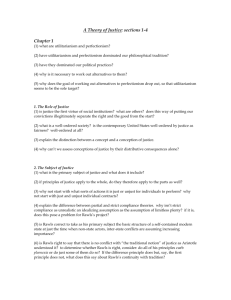

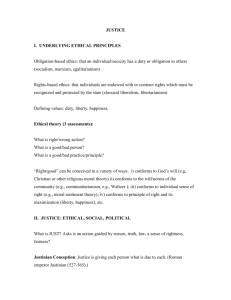
