Assessment Report, English 1A


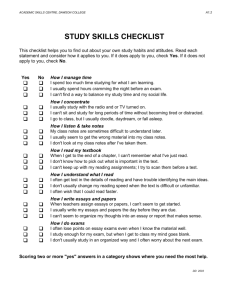

Follow-Up Report on English 1A Assessment for General Education Outcomes: Spring 2012 [1]

Background and Methodology

During Spring, 2011, the English discipline at Norco College undertook a comprehensive assessment project intended to evaluate the degree to which students in English 1A—the transfer-level composition course—demonstrated competency in three main areas of general education: written expression, critical thinking, and information competency. English 1A is the only course required of all Norco College students as part of their GE program, so we thought that using the course as an assessment proxy for the program itself made good sense. A detailed report on that project, including methodology and results, may be found at http://www.norcocollege.edu/employees/faculty/Documents/OutcomesAssessment/Report%20on%20English%201A%20Assessment%20for%20General%20Education%20Outcomes.pdf.

Of the four competencies assessed (written expression was broken into two subcomponents, the first having to do with rhetorical control, the second with grammatical and stylistic effectiveness), students did reasonably well with written expression but had significant difficulty with both critical thinking and information competency. The discipline resolved to conduct another assessment of English 1A in Spring, 2012 after using the results generated by the 2011 assessment to try to improve teaching and learning in the course.

After carefully studying and discussing the 2011 results, the discipline concluded that a number of students failed to demonstrate critical thinking or information competency skills because the assignments they were given seemed not to invite either skill. Some of the sample essays we read were not in any way source-dependent (even if their topics suggested that sources might have been employed to advantage); they contained no citations or Works Cited.
Many of the other essays were well-written, source-dependent reports: students demonstrated an ability to gather sources, organize material, and write with fluency and intelligibility, but they did not marshal evidence to support a claim, or examine competing explanations for a phenomenon, or try to solve a complex problem—characteristics generally associated with critical thinking. (To be clear, we notified English 1A instructors at various points during the semester that we would be conducting an assessment of these skills at the end of the term and asked them to contribute sample essays in response to assignments that required students to demonstrate those skills.)

The discipline resolved to try to bring more consistency and rigor to the course (taught by a mixture of full-time and part-time instructors) over the 2011-2012 academic year, through a variety of approaches, including

• Holding a half-day workshop in September, 2011 at which results of the assessment were presented and discussed, and instructors broke into groups by courses taught to review best practices, including sample assignments.
• Providing instructors in all courses in the composition sequence with a handbook including FAQs on the course, sample syllabi, and sample assignments. (The English 1A FAQs are included as Appendix B to this report.)
• Appointing a full-time faculty member as a course lead person for each course in the composition sequence, someone who would monitor book orders and syllabi, discuss assignments with instructors, and be a resource person when instructors had questions.

[1] This report has been prepared by Arend Flick, Professor of English and outcomes assessment coordinator at Norco College.

On June 8, 2012, 13 instructors (eight full-time and five part-time) met for five hours to read, evaluate, and discuss 69 sample essays gathered from sections of English 1A taught during that spring semester.
We employed the same methods we did last year to ensure a random, representative sampling of essays. As in the previous year, sample essays were provided from every section. We had fewer sample essays to read in 2012 because there were three fewer sections of 1A offered in 2012 than in 2011. Once again, we employed an analytic rubric (Appendix A) with four-point scales for each of the four criteria. Each essay was scored by two readers independently of each other, with a third reader brought in to resolve discrepancies (differences of two or more points on a particular criterion) and 2/3 splits. The two numbers were then added together to generate a composite score for each criterion. A score of six or higher therefore denotes that the essay showed adequate or strong evidence of the skill.

Results

Scores for the reading were as follows (N = 69):

| Score | WE 1 | WE 2 | Critical Thinking | Info Competency |
|---|---|---|---|---|
| 2 | 0 | 1 | 2 | 4 |
| 3 | 2 | 0 | 3 | 3 |
| 4 | 11 | 10 | 24 | 12 |
| 6 | 40 | 43 | 34 | 31 |
| 7 | 10 | 12 | 2 | 12 |
| 8 | 6 | 3 | 4 | 7 |

The results, expressed as percentages (2011 results included for comparison purposes):

| | % not demonstrating competency (2011) | % not demonstrating competency (2012) | % demonstrating competency (2011) | % demonstrating competency (2012) |
|---|---|---|---|---|
| WE 1 | 32.9 | 18.8 | 67.1 | 81.2 |
| WE 2 | 22.4 | 15.9 | 78.6 | 84.1 |
| Critical Thinking | 47 | 42 | 53 | 58 |
| Information Competency | 49.4 | 27.5 | 50.6 | 72.5 |

Discrepancy rates

In order to ensure that readers were sufficiently normed, we spent nearly two hours before the reading itself discussing each criterion, looking at anchor papers, and reading and discussing sample papers.
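The scoring rule described above (two independent ratings on a four-point scale, a third reader for discrepancies and 2/3 splits, and a composite of six or higher counting as competent) can be checked with a little arithmetic. The following is only a minimal sketch in Python, not part of the original assessment procedure: the function and variable names are hypothetical, and the counts are simply the 2012 score distribution reported in the Results section.

```python
# Illustrative sketch only; names are hypothetical, not from the report.
# Counts are the 2012 composite-score distribution reported above (N = 69).
# A composite of 5 never occurs because 2/3 splits go to a third reader.
SCORES_2012 = {
    "WE 1": {2: 0, 3: 2, 4: 11, 6: 40, 7: 10, 8: 6},
    "WE 2": {2: 1, 3: 0, 4: 10, 6: 43, 7: 12, 8: 3},
    "Critical Thinking": {2: 2, 3: 3, 4: 24, 6: 34, 7: 2, 8: 4},
    "Info Competency": {2: 4, 3: 3, 4: 12, 6: 31, 7: 12, 8: 7},
}

COMPETENCY_THRESHOLD = 6  # composite of six or higher = competency shown


def needs_third_reader(first: int, second: int) -> bool:
    """Flag a pair of 1-4 ratings that the report sends to a third reader."""
    is_discrepancy = abs(first - second) >= 2  # two or more points apart
    is_split = {first, second} == {2, 3}       # straddles the pass line
    return is_discrepancy or is_split


def percent_competent(distribution: dict[int, int]) -> float:
    """Share of essays at or above the threshold, as a percentage."""
    total = sum(distribution.values())
    passing = sum(count for score, count in distribution.items()
                  if score >= COMPETENCY_THRESHOLD)
    return round(100 * passing / total, 1)


if __name__ == "__main__":
    for criterion, distribution in SCORES_2012.items():
        print(f"{criterion}: {percent_competent(distribution)}% competent")
```

Run against the reported counts, the sketch gives the same 2012 percentages as the table (for example, 40 of 69 essays, or 58%, for critical thinking). How the third reader's score enters the composite is not spelled out in the report, so that step is deliberately left out.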
The discrepancy rates for the 2012 reading are as follows (with 2011 rates given for comparison):

2011 discrepancy chart (N = 85)

| | Written Expression 1 | Written Expression 2 | Critical Thinking | Info Competency |
|---|---|---|---|---|
| Discrepancies | 8 | 4 | 10 | 8 |
| % Discrepant | 8.4 | 4.7 | 11.7 | 9.4 |
| 2/3 splits | 13 | 13 | 13 | 14 |
| % 2/3 splits | 15.3 | 15.3 | 15.3 | 16.5 |

2012 discrepancy chart (N = 69)

| | Written Expression 1 | Written Expression 2 | Critical Thinking | Info Competency |
|---|---|---|---|---|
| Discrepancies | 3 | 4 | 4 | 2 |
| % Discrepant | 4.3 | 5.8 | 5.8 | 2.9 |
| 2/3 splits | 10 | 12 | 16 | 9 |
| % 2/3 splits | 14.5 | 17.4 | 23.2 | 13.0 |

Interpretation of Data

• Discrepancies were, once again, kept to an acceptably low level; readers were basically normed. In percentage terms, there were fewer actual discrepancies in three of the four assessment categories than there were in 2011. We also generated fewer 2/3 splits, percentage-wise, in two of the four criteria. With critical thinking, there were significantly more 2/3 splits this year than last, but significantly fewer major discrepancies—an indication that we were better normed this year. (Six of the 16 splits were resolved as 2 for CT, 10 as 3.) A closer look at the essays that generated 2/3 splits for CT and WE 2 suggests that these were indeed often borderline essays, with marginal evidence of those specific skills present but not sufficiently clear evidence in the minds of many readers. Ideally, we would continue to work to refine our rubrics and spend even more time with sample papers before the reading itself begins.
• We have some cause for celebration over the fact that in two of the four areas we assessed, scores went up considerably from 2011 to 2012. With information competency, we improved by 21.9 percentage points, and with written expression I [2], we improved by 14.1 points. We can reasonably conclude that some or all of the various interventions we employed this past year to ensure greater consistency in the way we teach the course have paid off.
In a third area, written expression II, we improved by only 5.5 points, but we were already reasonably strong in that area. Both of the WE areas are now above 80% in rate of success, a reasonable benchmark. We need to make 80% our goal for information competency too.

• The great disappointment of the reading is that we did not improve our critical thinking scores significantly, going from 53% demonstrating competency in 2011 to 58% in 2012.

[2] WE (Written Expression) 1 refers to organization and coherence; WE 2 refers to stylistic and grammatical control.

Why Does Critical Thinking Remain a Significant Problem?

Several explanations for our continued weakness in ensuring that students demonstrate CT skills are worth considering.

Confusion about what CT actually is

One possibility, suggested at the reading itself and in informal email exchanges afterwards, is that instructors may still be in doubt about what exactly critical thinking is. The term is, in fact, notoriously fuzzy—likely to mean different things to different people in much the same way such popular concepts in higher education as “academic freedom,” “engagement,” and even “assessment” itself seem at times to be slippery in their referents. An effort made in 2007 by the Riverside Community College Assessment Committee to assess CT across the district lends some credence to this theory. We asked instructors in a number of different courses across the district to provide us with sample student work demonstrating critical thinking, which we evaluated against a rubric. What struck the readers most forcefully was that a number of the assignments given by those same instructors simply didn’t ask their students for anything that could be construed as higher order thinking skills. A music instructor, for example, provided short essays in which students simply reported on having attended a concert. The reports were entirely factual, without a scintilla of analysis or argumentation.
Other paper assignments purported to call for “analysis” but rewarded students for merely summarizing the views of particular theorists in a given field. This was distressingly typical of many of the assignments we evaluated—even more discouraging, they were provided by instructors who were told that we were looking for student work in response to assignments that called for critical thinking.

In the case of English instructors, however, there is some reason to doubt this explanation as the source of our poor CT success rates. Note, once again, that our discrepancy rates were very low—instructors at least can fall into consensus about CT at norming sessions. Moreover, there is a rich history in the standard composition handbooks and works of theory about the teaching of Freshman English about what constitutes appropriate assignments. Most sample essays in these texts are ones that state and support a thesis with evidence. Most acknowledge opposing arguments and refute (or, when necessary, concede) counter-claims. Less mainstream assignments (e.g., ones that review possible causes of or solutions for a problem, ones that synthesize best current thinking on an issue without necessarily taking a position) are also common enough as models in these texts that it’s hard to imagine that the cause of our own problem is simply lack of understanding of or consensus about what it is we are looking for. That said, it still seems prudent to do all we can to define CT more clearly as a discipline (and perhaps as a college), and to remind ourselves that California ed code requires (perhaps somewhat dubiously) that all college courses teach CT.

Deficiencies in student preparation or performance

If instructors understand what sorts of assignments can generate effective critical thinking and if they prepare students adequately to perform well on those assignments, deficiencies in student performance must logically be attributed to the students themselves.
We’ll consider in a moment whether our assumptions about the role of instructors are warranted; for now, though, it’s worth considering whether our low success rate in student demonstration of CT skills is primarily the fault of the students. Reviewing the quantitative data and the essays themselves, it’s hard to conclude that students are the main problem—even if, as several instructors have pointed out, they seem increasingly disengaged. One of the things we would expect to see, if student performance were the main problem, is a lot of students failing English 1A. That hasn’t been happening. We don’t yet have grades for Spring, 2012, but in Spring, 2011, over 90% of the students whose essays we sampled passed the course, most with an A or a B. It may well be that these students possess critical thinking skills their essays don’t demonstrate, but that would seem to say something about the assignments they’ve been asked to address, not their own skill level.

Of the 29 students in 2012 who didn’t demonstrate CT skills, five failed only CT and demonstrated competency in the other three criteria; two failed all four criteria. The other 22 failed either one or two other criteria, with information competency (15 of the 29) being the most common. Most of the 29 essays that failed for CT demonstrated competency in WE 1 or WE 2, or both. Most of the failing CT essays were well enough written in terms of rhetorical and stylistic control.

Assignment deficiencies

One of the most challenging responsibilities of a college English instructor—or really ANY college instructor where essay writing is required—is crafting good writing assignments. No matter how long we’ve taught a particular course, it seems as if any new assignment needs to go through a kind of vetting process before we can feel reasonably confident it works. Experiments are useful, even necessary—but they rarely work as well as we hope they will.
A fault line in almost any course, it seems, is the quality of the writing assignments given—quality judged in terms of its capacity to allow strong students to demonstrate what they’ve learned and to prevent weak students from getting credit for what they haven’t. Carefully reviewing the 29 essays that failed for CT, one is struck by the fact that assignment problems seem to account for the failure of a majority of them. Specifically,

• Seven of the 29 were short reports on topics like bacon, energy drinks, and daylight savings time. Most of these essays were what John Bean [3] calls “all about” papers—they were not thesis driven. None was consistently argumentative or in any way analytical. In addition, all had been written relatively early in the semester (despite the instruction that end-of-term assignments be used) and had instructor marginal comments that did not suggest the writers had misunderstood the assignments. A few of the seven did contain vestiges of argument (one student wrote on why students drop out of school; another tried to argue that too much exercise is bad). In these two instances, readers gave them passing scores for written expression but found the essays radically under-sourced. Students had simply not provided enough evidence to support claims—and the claims themselves were murky.
• Six of the 29 were addressed to a question that clearly befuddled almost all students attempting it (no student was successful in demonstrating CT with this assignment): is it essential to the long term health of the U.S. economy that the country focus on increasing manufacturing jobs? It seems likely that this topic was simply too complex for first-year college students—without specialized training in economics or knowledge of the complex historical reasons for job outsourcing and the loss of U.S. manufacturing jobs over the past 50 years—to engage successfully.
• Six of the 29 addressed, as one title put it, the “pros and cons of illegal immigration”—unsuccessfully. These essays took up a very broad question in papers of generally five or so pages in length—a reminder that the fewer the pages we expect students to write, the narrower the topic ought to be. There are obviously topics related to illegal immigration that can be made to work in an English 1A class, and it’s possible that these papers were all unsuccessful less because of the assignment than because of the way the assignment was taught. But since all these students had trouble defining a debatable issue (no one is exactly “for” illegal immigration), in addition to writing on a topic of vast scope in few pages, it seems likely that the assignment itself played a role in their failure. [4]
• Four of the 29 were on the role of technology (or in some cases a subset of technology like “media”) in our lives today. All read more like reports than arguments (or analysis of arguments). All took up a topic even vaster than illegal immigration. The one successful essay for CT apparently written in response to this assignment had a narrower focus and a clearer rhetorical position, arguing that technology has generally been more harmful to than helpful for education—while conceding that it has also done some good.

[3] John Bean, Engaging Ideas: The Professor’s Guide to Integrating Writing, Critical Thinking, and Active Learning in the Classroom. Second Edition, 2011. Jossey-Bass.

These four assignments accounted for 23 of the 29 essays that failed for critical thinking. The assignments that succeeded tended to be diverse: on the value of mail-order condoms, for revising the legal drinking age, against teenage students having a job. These were not always exciting, original, or immensely thoughtful essays, but they all demonstrated the writers’ capacity to marshal relevant evidence in support of reasonable and narrow claims.
Seeing how well 40 of the 69 sample writers performed this necessary skill makes one wonder why it was so difficult for instructors to elicit it from a greater number of their students. Perhaps at least we can take solace from the possibility that some of the 29 essays that failed for CT were written by students who possess significant CT skills; they were just not able to demonstrate them on this particular assignment.

Using Assessment Results for Improvement

We had hoped our 2012 assessment of English 1A would demonstrate significant levels of improvement in all areas we evaluated. But we were most desirous of, and hopeful for, improvement in critical thinking skills. We spent a great deal of time and energy convincing fellow instructors—some full-time faculty—that certain kinds of assignments they had worked hard to develop and which they knew to be effective in teaching, say, organizational and information skills, were simply not appropriate for late 1A papers that need to demand argumentation or analysis as a central purpose. We were heartened that so many of our colleagues demonstrated a receptivity and open-mindedness to these criticisms that is itself a mark of strong critical thinking skills. And so we are disappointed that our efforts—while fruitful with some of the skills we tested for—have not yet paid off in significant gains in critical thinking.

What to do, then? We are already experimenting this summer and fall with a common assignment in English 1A that will be read collectively with a common rubric. It may be necessary to consider greater standardization (or at least oversight) of English 1A assignments across the curriculum. We might possibly allow instructors to develop some of their own writing prompts while making the final one—to which all other assignments point—common, or at least common in some of its elements. We may also do more to educate ourselves, to conduct workshops, to have our course leaders meet with the course faculty.
(There is clearly an argument here for more full-time English faculty. We can only expect so much from associate faculty who also teach at other colleges and can’t be paid for the meetings we ask them to attend at Norco College.) But in the end, if we want to see higher levels of performance in areas like critical thinking and information competency, we may have to consider a greater degree of oversight and supervision where assignments are concerned—and perhaps even standardization of elements of them. It would not be absolutely necessary to develop a common research paper assignment; perhaps a more rigorous template or shell for such an assignment—specifying general purpose, audience, length, format, etc.—would suffice. (It would also make assessment of sample essays easier.) If there are better alternatives than this approach, it would be helpful for those who have them to make them known.

[4] The instructor who gave this assignment identified himself and indicated that he did not intend students to write about the pros and cons of illegal immigration. Rather, having asked students to read several articles on illegal immigration, he asked students 1) whether the alleged problems connected with immigration are “grounded in fact”; 2) what solutions have been proposed publicly; and 3) what they saw as the “fairest, most just, or practical solutions to those problems we can determine ARE grounded in fact.” Students generally seemed unable to synthesize material from their reading to produce a coherent response to these questions, however.

We might also consider a diagnostic test for beginning English 1B students that would act in part as a check on 1A instructors to ensure that they are properly preparing their students for what lies ahead. Students in 1B could be given a writing prompt that asks them to demonstrate their ability to read and respond to a single text (or perhaps two)—with correct citation of that text also required.
These essays would have to count for something toward the 1B grade, but they could also, when sampled and read against a rubric, be a method of assessing 1A. We might even want to collect student ID numbers to see how students coming from Norco College 1A courses did versus those who entered the course in some other way.

Finally, as a way of determining more reliably whether English 1B does lead to increased critical thinking skills (even if those skills aren’t always manifested in English 1B papers), we might consider administering a nationally normed test like the Collegiate Learning Assessment (CLA) to a sample group of early 1B and late 1B students. Comparing scores between the two groups might permit us to conclude that English 1B does lead to increases in CT ability—or not. The problems with standardized tests like the CLA, however (cost, difficulty in ensuring students do their best work on it, concerns about whether the CT it tests is the CT we teach), may outweigh the benefits.

Appendix A:

Appendix B

FAQs: Teaching English 1A at Norco College

I’ve read the course outline of record and taught similar courses elsewhere. What do you expect me to emphasize when I teach the course at Norco?

At Norco College (and in fact, throughout the Riverside Community College District), English 1A is defined as a course in college-level reading, critical thinking, information competency, and academic writing, intended primarily to help students become proficient in the kinds of skills they are likely to need for success in other classes. Each of the four components of the class—reading, thinking, information competency, and writing—is equally important; none should be shortchanged. To help students acquire these skills,

1. assign challenging expository or argumentative prose to students (avoid imaginative literature—we treat that in 1B) and teach students how to read it.
2. emphasize argumentative and analytical writing—not descriptive or narrative writing. Personal or report writing, such as students often practice in high school, should be avoided for the most part.
3. ask students to engage and explain texts (and the ideas contained therein) in their essays.
4. make sure a central concern of the class is instruction in how to locate, evaluate, and use sources. Students MUST also leave 1A proficient in the use of MLA format to cite sources.

How many essays should I assign?

The Course Outline of Record (COR) specifies the number of words students should write (obviously a rough guideline!) but not the number of essays. Some instructors assign as many as six to eight essays over the course of the semester, others as few as three or four. We know that writing improves with frequent feedback, so consider the following as guidelines.

1. Students should be submitting a draft or a revision of an essay at least every other week in a standard 15-week class. (Short, often ungraded, pieces of writing—ideally ones that provide the seeds for the essays themselves—should be assigned more often.) A first essay should be due in draft no later than the second week of classes and a graded essay should be returned to students no later than the fourth week.
2. Sequence the essay assignments in a logical way, perhaps asking students to engage a single text in the first essay, compare two texts in a second essay, and consider an argument or test a hypothesis about three or more texts in a third essay.
3. Consider requiring students to submit multiple drafts of essays. The first you comment on extensively without giving a grade; the second you grade with minimal commentary. Each draft counts toward the number of words students are expected to produce. Putting final grades on first drafts just about guarantees a lot of low grades—unless you ask too little of students or grade them too leniently.

Can you give me some ideas about effective writing assignments?

Consider these as possible models:

1. Ask students to explain how Martin Luther King distinguishes between just and unjust laws in “Letter from Birmingham Jail,” then identify a contemporary law that they believe to be unjust (whether by King’s definition or their own) and explain why. Or ask them to explain what Crèvecœur means by “this new man, this American” and consider to what extent they believe such a definition fits many Americans today.
2. Ask students to explain a concept or argument in a text and then apply, agree with, disagree with, etc. the argument or concept.
3. Ask what writer X would think of a concept or argument expressed by writer Y.
4. Comparative assignments, particularly if you’ve carefully explained to students the purpose of comparison and how comparisons can be structured, can be very effective.
5. Many 1A instructors like to do a unit on the principles of argumentation, including logical fallacies, that leads naturally to an essay in which students pick a particularly fallacious article or website to analyze. (You may want to assign particular articles or sites.)
6. The research paper poses special problems, but many of us assign source-dependent papers that emerge from shared readings (with students adding sources they’ve individually found and perhaps taking the paper itself in entirely unique directions). Issues that are narrower and closer to student interests (e.g., “should California require all students in public colleges and universities to perform a certain number of community service hours each year?”) work better than broader and more remote issues about which students can only gain a position of false authority after a few weeks of research.

Must I assign a traditional 10–15-page research paper in the class?

That’s up to you. Some instructors do, but many don’t. It’s possible to make all, or nearly all, papers for the course “source dependent,” in which case the final assignment might be fewer than ten pages and employ fewer than ten sources.
What seems important to all of us is that you

1. ask students to work with sources throughout the semester (not just in the last paper) and that at least one of your assignments asks students to compose something longer than a three- or four-page paper.
2. keep your topics narrow enough to make a depth of analysis part of the expectation for the assignment. We’ve all seen (and cringed over) essay assignments in the WRC that, for example, ask students to compare and contrast American involvement in World War I and World War II.

You speak of “teaching” information competency. What do you mean by that?

We’ve found that asking students to read a textbook chapter on research and MLA format, and then expecting them to produce effective source-dependent papers as a result, simply doesn’t work. To help students acquire skills in information competency,

1. use active learning strategies to teach students how to formulate researchable questions, ones that they can become experts on in the weeks you allot for the assignment.
2. show students how to locate sources and how to distinguish biased or otherwise inadequate sources from effective ones. (Stress quality of support over quantity.)
3. make sure you teach MLA format in some detail, including the proper way to use quotation, summary, and paraphrase. (Group work exercises can be especially effective with this teaching.)
4. schedule library visits and orientations.

English 1B instructors frequently complain that they have to teach what they expect students to have learned in 1A. Try not to send students to 1B without these skills.

You also speak of teaching critical thinking. What does that mean for the teaching of 1A?

Critical thinking is higher order thinking: analysis, synthesis, and argument, as opposed to simple rote memorization, description, narration. The line between critical and non-critical thinking is not always clear, but you are probably asking your students to think critically when you

1. ask them to write argumentatively (most writing in 1A should be thesis driven) or examine the effectiveness of the arguments of others.
2. ask them to consider issues or problems to which there is not a single correct solution—exploring multiple hypotheses or explanations for a phenomenon is a hallmark of critical thinking.
3. avoid assignments that call primarily for description, narration, reporting facts, etc.

A great resource for teaching writing AND critical thinking is John Bean’s Engaging Ideas: The Professor’s Guide to Integrating Writing, Critical Thinking, and Active Learning in the Classroom, the second edition of which is being published by Jossey-Bass in 2011.

A certain amount of what we MUST teach in 1A, of course, does not particularly invite critical thinking, and that’s fine. When students are learning the nuts and bolts of MLA format, they are doing important work, but they are not engaged in higher order thinking. That might begin at the moment they consider WHY it’s useful to employ a series of citation conventions in a particular essay. Look for opportunities to engage your students in reflective, metacognitive, and analytical thinking as much as possible.

What should I be asking students to read in English 1A? How much should they be expected to read?

As noted earlier, you want to make sure you emphasize challenging, college-level texts, and you should avoid imaginative literature. Some other guidelines:

1. Assign approximately six to ten essays, and perhaps one book-length work. That should give you time to discuss these works thoroughly.
2. Consider assigning an anthology or reader/rhetoric. Since many contain upwards of 50 – 75 essays, it’s also possible to use public-domain texts from the Internet or do judicious amounts of photocopying and avoid an anthology altogether. Some instructors bundle essays into course packs—check with publishers or our bookstore about that option. Many instructors favor classical texts, as found in such anthologies as A World of Ideas; others like to focus on more contemporary material.
3. Consider choosing readings that focus on a particular theme—or not. There are obvious advantages with this decision, but many students grow restless with what can seem a monotonous preoccupation with a single theme all semester—particularly if the theme didn’t interest them much in the first place.
4. Choose readings that reflect ethnic, historical, and gender diversity. We want the course to map to our “global awareness” general education learning outcome, so ordinarily your reading list should include works by women, people of color, etc.
5. Emphasize depth over breadth in readings. Composition theorist (and historian, novelist, and writing program director) Richard Marius argues that college composition courses should assign no more than 75-100 pages of reading during the term, which allows for each text to be worked over carefully and fully. In other words, if you assign a text as rich as “Allegory of the Cave” or “Letter from Birmingham Jail,” give over at least a few days (rather than a few minutes) to discussion of it. Your students know how to skim. Your job is to teach them how to read—and to ponder deeply what they’ve read.

How can I have students use the WRC effectively in 1A?

The central principle when making WRC assignments is this: such assignments must involve activities that can ONLY be done under instructor supervision in the WRC—students aren’t supposed to be using the Center simply to work on the computers. Ask students to write in the WRC under time constraint, practicing this important skill, with the requirement that they seek advice on improving the paper from the instructor on duty. Ask students to use the instructor or a tutor to check and help with particular writing issues on a week by week basis: MLA format one week, topic sentences another, and so on.
What mistakes do many instructors make in teaching 1A?

Based on our assessment of sample 1A papers in spring, 2011, along with a lot of anecdotal evidence (some derived from classroom visitations during the improvement of instruction process), some of the most common are

1. Not asking students to write papers that invite critical thinking. A paper in the form of a report, with a title like “Mothers Against Drunk Driving” or “The Democratic Party,” should set off alarm bells. Tweaking such an assignment a bit so that the title becomes “Has Mothers Against Drunk Driving Been Effective in Reducing DUI Rates on the Nation’s Roads? The Evidence For and Against” is far more likely to produce an essay consistent with the COR expectations.
2. Not teaching students how to quote effectively, how to cite sources correctly, how to compose a proper Works Cited page. As noted elsewhere, you need to give over weeks of your class to this, with instruction, drills, practice sessions (send students to the board!), examples, etc.
3. Asking students to read too many texts too superficially. Or failing to assign enough texts and failing to ask students to write about them in their papers. Or choosing only simplistic, journalistic texts for students to engage.
4. Giving students passing grades for the course when they haven’t mastered the major learning outcomes of the course.

Is there anything else you want me to keep in mind about the course that you haven’t mentioned?

1. Regrettably, plagiarism can sometimes be a problem. Try to develop assignments that are difficult, if not impossible, to plagiarize. Talk to your students about what is, and isn’t, plagiarism, and make sure they know your policy on dealing with it. If necessary, submit essays electronically to our “turnitin” account.
2. Don’t be afraid of giving your students a rigorous, demanding course. English 1A at Norco College is supposed to be the same course as English 1A at UCLA or Berkeley. A great deal of evidence suggests students are more engaged in difficult courses than they are in easy ones, and that they CAN meet our expectations even when those expectations are high. Even so, a number of your students are likely to be underprepared for the course. Do what you can to help them succeed—and direct them to the appropriate resources the college has to offer.
3. For advice on any and all aspects of teaching the course, consult the course lead person, Sheryl Tschetter, at Sheryl.Tschetter@norcocollege.edu.