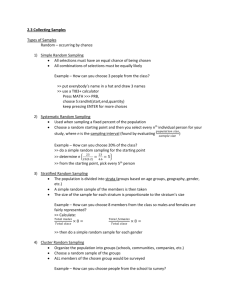

Guidance on sampling methods for audit authorities
