Reservoir Simulation: Keeping Pace with Oilfield Complexity


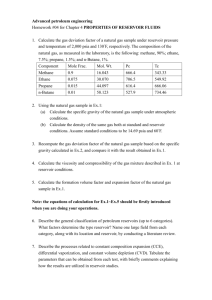

David A. Edwards, Dayal Gunasekera, Jonathan Morris, Gareth Shaw, Kevin Shaw, Dominic Walsh
Abingdon, England

Paul A. Fjerstad, Jitendra Kikani
Chevron Energy Technology Company
Houston, Texas, USA

Jessica Franco
Total SA
Luanda, Angola

Viet Hoang
Chevron Energy Technology Company
San Ramon, California, USA

Lisette Quettier
Total SA
Pau, France

Geologic complexity and the high cost of resource development continue to push reservoir simulation technology. Next-generation simulators employ multimillion-cell models with unstructured grids to handle geologies with high permeability contrasts. Through the use of more-realistic models, these new simulators will aid in increasing ultimate recovery from both new and existing fields.

Oilfield Review Winter 2011/2012: 23, no. 4.
Copyright © 2012 Schlumberger.
ECLIPSE and INTERSECT are marks of Schlumberger. Intel®, Intel386™, Intel486™, Itanium® and Pentium® are registered trademarks of Intel Corporation. Linux® is a registered trademark of Linus Torvalds. Windows® is a registered trademark of Microsoft Corporation.

Interest in simulators is not new; people have long used them to model complex activities. The history of simulation can be divided into three periods: precomputer, formative and expansion.1 The first recorded simulation of the precomputer era (1777 to 1945) was Buffon's needle experiment of 1777, in which needles were dropped onto a ruled surface to estimate the value of π.2 In the formative period (1945 to 1970), people used the first electronic computers to solve military problems, which ranged from artillery firing solutions to the development of the hydrogen bomb. The expansion period (1970 to the present) is distinguished by a profusion of simulation applications.
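Buffon's experiment is itself a small Monte Carlo simulation. The sketch below is an illustration, not something from the article: it reproduces the experiment under the short-needle assumption (needle length L no greater than line spacing d), for which the probability that a needle crosses a line is 2L/(πd). The function name estimate_pi_buffon and its parameters are hypothetical. A computer version must sample the needle angle using math.pi, a known circularity that a physical drop does not have.

```python
import math
import random


def estimate_pi_buffon(n_needles: int, needle_len: float = 1.0,
                       spacing: float = 2.0, seed: int = 0) -> float:
    """Estimate pi with a Monte Carlo version of Buffon's needle experiment.

    Assumes the short-needle case (needle_len <= spacing), for which the
    probability that a dropped needle crosses a line is 2*L / (pi*d).
    """
    if needle_len > spacing:
        raise ValueError("this formula assumes needle_len <= spacing")
    rng = random.Random(seed)  # seeded so the run is reproducible
    crossings = 0
    for _ in range(n_needles):
        # Distance from the needle's midpoint to the nearest line (0 to d/2)
        # and the acute angle between the needle and the lines (0 to pi/2).
        midpoint_dist = rng.uniform(0.0, spacing / 2.0)
        angle = rng.uniform(0.0, math.pi / 2.0)
        # The needle crosses a line when its half-length projection reaches it.
        if midpoint_dist <= (needle_len / 2.0) * math.sin(angle):
            crossings += 1
    if crossings == 0:
        raise ValueError("no crossings observed; drop more needles")
    # Invert P = 2L / (pi d), with P estimated as crossings / n_needles.
    return 2.0 * needle_len * n_needles / (spacing * crossings)


print(estimate_pi_buffon(200_000))
```

With 200,000 needles the estimate typically lands within a few hundredths of π; the statistical error shrinks roughly as one over the square root of the number of needles.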
These applications range from games to disaster preparedness and simulation of artificial life forms.3 Industry and government interest in computer simulation is increasing in areas that are computationally difficult, potentially dangerous or expensive, and oilfield simulations fit all of these criteria. Oil and gas simulations model activities that extend from deep within the reservoir to process plants on the surface and ultimately include a final economic evaluation (below).

Numerous factors are pushing production simulation planning to deliver accurate results in the shortest possible time. These include remote locations, geologic complexity, complex well trajectories, enhanced recovery schemes, heavy-oil recovery and unconventional gas. Today, operators must make investment decisions quickly and can no longer base field development decisions solely on data from early well performance. Operators now want accurate simulation of the field from discovery through secondary recovery to final abandonment. Nowhere do these factors come into sharper focus than in the reservoir.

> Production simulation. A reservoir engineer takes static and dynamic data (bottom right) and develops input for a reservoir simulator (bottom left). The reservoir simulator, whose primary task is to analyze flow through porous media, calculates production profiles as a function of time for the wells in the reservoir. These data are passed to a production engineer to develop well models and a surface network simulator (top left). A facilities engineer uses the production and composition data to build a process plant model with the help of a process simulator (top right). Finally, data from all the simulators are passed to an economic simulator (right).
This article describes the tools and processes involved in reservoir simulation and discusses how a next-generation simulator is helping operators in Australia, Canada and Kazakhstan.

Visualizing the Reservoir

The earliest reservoir simulators date from the 1930s and were physical models in which the interaction of sand, oil and water could be viewed directly, often in vessels with clear sides.4 Operators turned to these physical simulators when reservoir behavior during waterfloods surprised them. Scientists also used electrical simulators that relied on the analogy between the flow of electrical current and the flow of reservoir fluids. In the early 1950s, although physical simulators were still in use, researchers began to think about how a reservoir might be described analytically.

Understanding what happens in a reservoir during production is similar in some respects to diagnosing a disease. Results from various laboratory tests are available, but the disease process cannot be viewed directly, so physicians must deduce what is happening from those results. Reservoir engineers are in a similar position: they cannot view the subject of their interest and must rely on data to tell them what is happening deep below the Earth's surface. Production and other data are used to build analytical models that describe flow and other reservoir characteristics.

1. Goldsman D, Nance RE and Wilson JR: "A Brief History of Simulation," in Rossetti MD, Hill RR, Johansson B, Dunkin A and Ingalls RG (eds): Proceedings of the 2009 Winter Simulation Conference. Austin, Texas, USA (December 13–16, 2009): 310–313.
2. Buffon's needle experiment is one of the oldest known problems in geometric probability. Needles are dropped on a sheet of paper ruled with parallel lines, and the probability of a needle crossing one of the lines is calculated. This probability is related directly to the value of π. For more information: Weisstein EW: "Buffon's Needle Problem," Wolfram MathWorld, http://mathworld.wolfram.com/BuffonsNeedleProblem.html (accessed July 25, 2011).
3. Freddolino PL, Arkhipov AS, Larson SB, McPherson A and Schulten K: "Molecular Dynamics of the Complete Satellite Tobacco Mosaic Virus," Structure 14, no. 3 (March 2006): 437–449.
4. Peaceman DW: "A Personal Retrospection of Reservoir Simulation," in Proceedings of the First and Second International Forum on Reservoir Simulation. Alpbach, Austria (September 12–16, 1988 and September 4–8, 1989): 12–23. Adamson G, Crick M, Gane B, Gurpinar O, Hardiman J and Ponting D: "Simulation Throughout the Life of a Reservoir," Oilfield Review 8, no. 2 (Summer 1996): 16–27.

In a reservoir model, the equations that describe fluid behavior arise from fundamental principles that have been understood for more than a hundred years: conservation of mass, fluid dynamics and thermodynamic equilibrium between phases.5 Applied to a reservoir, these principles yield partial differential equations that are numerous, coupled and nonlinear. Early analytical derivations of reservoir flow behavior were restricted to simple models, whereas current formulations capture a far more complex picture (below).6 Although formulating the reservoir equations has always been straightforward, the equations cannot, in general, be solved exactly; instead they are discretized and solved numerically, typically with finite-difference methods.7 In reservoir simulation, there is therefore a trade-off between model complexity and the ability to converge to a solution.
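To make the finite-difference idea concrete, here is a minimal sketch for the simplest case discussed in this article, 1D flow of a compressible fluid, ∂²p/∂x² = (φμc/k) ∂p/∂t, rewritten as ∂p/∂t = η ∂²p/∂x² with hydraulic diffusivity η = k/(φμc). It uses an explicit forward-time, centered-space scheme, which is much simpler than the implicit formulations production simulators generally use. The function name, the constant-pressure well at x = 0 (treated as a ghost cell) and the no-flow far boundary are illustrative assumptions. The stability check shows one concrete form of the complexity-versus-convergence trade-off: the explicit scheme blows up unless r = ηΔt/Δx² ≤ 1/2.

```python
from typing import List


def simulate_1d_pressure(n_cells: int, length: float, eta: float, dt: float,
                         n_steps: int, p_init: float, p_well: float) -> List[float]:
    """Explicit finite-difference solution of dp/dt = eta * d2p/dx2.

    eta = k / (phi * mu * c) is the hydraulic diffusivity. The well at x = 0 is
    modeled as a ghost cell held at p_well; the far end (x = length) is a
    no-flow boundary. Any consistent unit system may be used.
    """
    dx = length / n_cells
    r = eta * dt / dx**2  # dimensionless step size
    if r > 0.5:
        # The forward-time, centered-space scheme is unstable for r > 1/2.
        raise ValueError(f"unstable time step: r = {r:.3f} > 0.5")
    p = [p_init] * n_cells
    for _ in range(n_steps):
        new_p = p[:]
        for i in range(n_cells):
            left = p_well if i == 0 else p[i - 1]
            right = p[i] if i == n_cells - 1 else p[i + 1]  # mirror => zero flux
            # Centered second difference approximates d2p/dx2 * dx**2.
            new_p[i] = p[i] + r * (left - 2.0 * p[i] + right)
        p = new_p
    return p


# Dimensionless example: drawdown spreading from a well held at p = 0.
profile = simulate_1d_pressure(n_cells=50, length=1.0, eta=1.0, dt=1e-4,
                               n_steps=2000, p_init=1.0, p_well=0.0)
print(profile[0], profile[-1])
```

Halving Δx forces a four-times smaller time step for the same r, which is one reason large models are instead solved implicitly, with Newton-Raphson iterations of the kind described later in this article.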
Advances in computing capability have been essential to reservoir simulation, especially when complex models and large numbers of cells are involved (see Computing capability and reservoir simulation figure).8 Over the past decades, hardware advances have produced a steady progression in simulation capabilities.9 Between the early 1950s and 1970, reservoir simulators progressed from two dimensions and simple geometry to three dimensions, realistic geometry and a black oil fluid model.10 In the 1970s, researchers introduced compositional models and placed a heavy emphasis on enhanced oil recovery. In the 1980s, simulator development emphasized complex well management and fractured reservoirs; during the 1990s, graphical user interfaces brought enhanced ease of use. Near the end of the 20th century, reservoir simulators added features such as local grid refinement and the ability to handle complex geometry, as well as integration with surface facilities. Now, simulators can handle complex reservoirs while offering integrated full-field management. These models, known as next-generation simulators, take advantage of several recently developed technologies, including parallel computing.

> Reservoir simulation evolution. One of the first attempts to describe reservoir flow analytically occurred in the early 1950s. Researchers developed a partial differential equation for 1D flow of a compressible fluid in a reservoir (1951, top):

$$\frac{\partial^2 p}{\partial x^2} = \frac{\phi \mu c}{k}\,\frac{\partial p}{\partial t}$$

This equation is derived from Darcy's law for flow in porous media plus the law of conservation of mass; it describes pressure p as a function of time t and position x, with porosity φ, viscosity μ, compressibility c and permeability k. (For details: McCarty DG and Peaceman DW: "Application of Large Computers to Reservoir Engineering Problems," paper SPE 844, presented at a Joint Meeting of University of Texas and Texas A&M Student Chapters of AIME, Austin, Texas, February 14–15, 1957.) Recent models (2011, bottom) consider 3D flow of n components in a complex reservoir that is divided into a large number of grid cells. Darcy's law and conservation of mass, plus thermodynamic equilibrium of components between phases, govern the equations that describe flow into and out of these cells. For component c in a cell of volume V over a time step Δt, the residual is

$$R_c = \frac{V}{\Delta t}\,\delta\!\left(\phi \sum_{p=1}^{N_{p,c}} \rho_p S_p \chi_{cp} + W\right) + q_c - \sum_{k \in \text{Faces}} T_k \sum_{p=1}^{N_{p,c}} \frac{\rho_p k_{rp} \chi_{cp}}{\mu_p}\left(\Delta p - \Delta P_{c,p} - \rho_p g\,\Delta h\right)$$

where δ denotes the change over the time step, T_k is the transmissibility of cell face k, and ρ_p, S_p, k_rp and μ_p are the density, saturation, relative permeability and viscosity of phase p. In addition to flow rates, the models describe other variables including pressure, temperature and phase saturation. (For details: Cao et al, reference 6.)

Parallel Computing: Divide and Conquer

One of the hallmarks of current reservoir simulators is the use of parallel computing systems. Parallel computing operates on the principle that large problems like reservoir simulation can be broken down into smaller ones that are then solved concurrently, or in parallel. The shift from serial to parallel processing is a direct result of the drive for improved computational performance. In the 1980s and 1990s, computer hardware designers relied on increases in microprocessor clock speed to improve performance. This technique, called frequency scaling, was the dominant source of processor performance gains for personal computers until about 2004.11 Frequency scaling came to an end because of the rising power consumption needed to reach higher frequencies, and hardware designers for personal computers turned instead to multicore processors, one form of parallel computing.

The thinking that would eventually lead to parallel processing for reservoir simulators, however, began around 1990. In an early experiment, oilfield researchers demonstrated that an Intel computer with 16 processors could efficiently handle an oil-water simulation model.12 Since then, parallel computer systems have become increasingly common in reservoir simulation.
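Parallel speedup is capped by whatever part of the work stays serial, a limit this section states later as Amdahl's law using a 10-hour example (9 hours parallel, 1 hour serial). The minimal sketch below computes run time, speedup and efficiency for that example. The linear communication term comm_hours_per_proc is a made-up assumption, added only to show how overhead that grows with processor count produces an optimal processor count; all function names are hypothetical.

```python
def run_time(n_procs: int, serial_hours: float = 1.0, parallel_hours: float = 9.0,
             comm_hours_per_proc: float = 0.0) -> float:
    """Wall-clock time on n_procs processors.

    Amdahl's law: the serial part is unchanged and the parallel part divides
    evenly. comm_hours_per_proc adds a hypothetical communication cost that
    grows linearly with each extra processor (an illustrative assumption).
    """
    if n_procs < 1:
        raise ValueError("need at least one processor")
    return (serial_hours + parallel_hours / n_procs
            + comm_hours_per_proc * (n_procs - 1))


def speedup(t_one: float, t_n: float) -> float:
    """Time on one processor divided by time on n processors."""
    return t_one / t_n


def efficiency(t_one: float, t_n: float, n_procs: int) -> float:
    """Speedup divided by the number of processors (1.0 is ideal)."""
    return speedup(t_one, t_n) / n_procs


def best_processor_count(max_procs: int, **kwargs: float) -> int:
    """Processor count in 1..max_procs that minimizes run_time."""
    return min(range(1, max_procs + 1), key=lambda n: run_time(n, **kwargs))


t1 = run_time(1)  # 10 hours on one processor
t9 = run_time(9)  # 1 h serial + 9 h / 9 = 2 hours
print(speedup(t1, t9), efficiency(t1, t9, 9))
print(run_time(1_000_000))  # approaches, but never beats, the 1-hour serial floor
print(best_processor_count(64, comm_hours_per_proc=0.05))
```

With comm_hours_per_proc=0.05, the run time bottoms out at 13 processors, and adding more makes the run slower. Real communication costs depend on the domain decomposition, network bandwidth and congestion, so in practice the optimum is found by benchmarking rather than from a formula like this one.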
As prices for computing equipment have decreased, it has become standard practice to operate parallel computing systems as clusters of individual machines connected by a network. These machines, operating in parallel, act as a single entity. The goal in parallel computing has always been to solve large problems more quickly by running n times faster on n processors.13 For a host of reasons, this ideal is rarely achieved.

To understand the limitations of parallel networks, it is instructive to visualize a typical system used by a modern reservoir simulator. Such a system might have several stand-alone computers networked through a hub and a switch to a controller computer and a network server.14 As each computer works on its portion of the reservoir, messages are passed between the computers, to the controller computer and over the network to other systems. In parallel terminology, the individual processors are the parallel portion of the system, while the work of the controllers is the serial portion.15 Communication overhead is the primary reason that ideal parallel performance can be approached but never fully realized.

All computing systems, even parallel ones, have limitations. The maximum improvement that a parallel system can deliver is expressed by Amdahl's law.16 Consider a simulation that requires 10 hours on a single processor, of which 9 hours are amenable to parallel processing and 1 hour is serial in nature. Amdahl's law states that no matter how many processors are assigned to the parallel part of the calculation, the execution time cannot fall below one hour, so the speedup can never exceed a factor of 10. Because of the overhead of serial communications, there is often an optimal number of processors for a given reservoir simulation problem. Although data management and housekeeping are the primary causes of departure from the ideal, there are others, including load imbalance between processors, limited bandwidth, and congestion and delays within various parts of the system. Reservoir simulation problems destined for parallel solution must use software and hardware designed specifically for parallel operation.

> Computing capability and reservoir simulation. (Number of transistors on a microprocessor and number of reservoir cells employed, plotted against year from 1970 to 2010; labeled processors range from the Intel 8086 to the Intel Itanium 2.) During the past four decades, computing capability and reservoir simulation have evolved along similar paths. From the 1970s until 2004, microprocessors followed Moore's law, which states that the number of transistors on a microprocessor (red circles) doubles about every two years. Reservoir simulation paralleled this growth in computing capability with growth in the number of grid cells (blue bars) that could be accommodated. In the last decade, computer architecture has focused on parallel processing rather than simple increases in transistor count or frequency. Similarly, reservoir simulation has moved toward parallel solution of the reservoir equations.

5. Brown G: "Darcy's Law Basics and More," http://bioen.okstate.edu/Darcy/LaLoi/basics.htm (accessed September 13, 2011). Smith JM and Van Ness HC: Introduction to Chemical Engineering Thermodynamics, 7th Edition. New York City: McGraw Hill Company, 2005.
6. Cao H, Crumpton PI and Schrader ML: "Efficient General Formulation Approach for Modeling Complex Physics," paper SPE 119165, presented at the SPE Reservoir Simulation Symposium, The Woodlands, Texas, February 2–4, 2009.
7. Finite-difference equations are used to approximate solutions of differential equations. The method approximates a derivative by using small, incremental steps from a base value.
8. Intel Corporation: "Moore's Law: Raising the Bar," Santa Clara, California, USA: Intel Corporation (2005), ftp://download.intel.com/museum/Moores_law/Printed_Materials/Moores_Law_Backgrounder.pdf (accessed October 17, 2011). Fjerstad PA, Sikandar AS, Cao H, Liu J and Da Sie W: "Next Generation Parallel Computing for Large-Scale Reservoir Simulation," paper SPE 97358, presented at the SPE International Improved Oil Recovery Conference in Asia Pacific, Kuala Lumpur, December 5–6, 2005.
9. Watts JW: "Reservoir Simulation: Past, Present, and Future," paper SPE 38441, presented at the SPE Reservoir Simulation Symposium, Dallas, June 8–11, 1997.
10. In the black oil fluid model, composition does not change as fluids are produced. For more information see: Fevang Ø, Singh K and Whitson CH: "Guidelines for Choosing Compositional and Black-Oil Models for Volatile Oil and Gas-Condensate Reservoirs," paper SPE 63087, presented at the SPE Annual Technical Conference and Exhibition, Dallas, October 1–4, 2000.
11. Scaling, or scalability, is the ability of a system or process to handle greater or growing amounts of work without difficulty. For more information: Shalom N: "The Scalability Revolution: From Dead End to Open Road," GigaSpaces (February 2007), http://www.gigaspaces.com/files/main/Presentations/ByCustomers/white_papers/FromDeadEndToOpenRoad.pdf (accessed August 23, 2011). Flynn LJ: "Intel Halts Development of 2 New Microprocessors," The New York Times (May 8, 2004), http://www.nytimes.com/2004/05/08/business/intel-halts-development-of-2-new-microprocessors.html (accessed September 13, 2011).
12. Wheeler JA and Smith RA: "Reservoir Simulation on a Hypercube," SPE Reservoir Engineering 5, no. 4 (November 1, 1990): 544–548.
13. Speedup, a common measure of parallel computing effectiveness, is defined as the time taken on one processor divided by the time taken on n processors. Parallel effectiveness can also be stated in terms of efficiency, the speedup divided by the number of processors.
14. Baker M: "Cluster Computer White Paper," Portsmouth, England: University of Portsmouth (December 28, 2000), http://arxiv.org/ftp/cs/00040004014.pdf (accessed July 16, 2011). Each of these computers in the parallel configuration may have either single-core or multicore microprocessors. Each individual core is termed a parallel processor and can act as an independent part of the system.
15. The serial portion is often called data management and housekeeping.
16. Barney B: "Introduction to Parallel Computing," https://computing.llnl.gov/tutorials/parallel_comp/ (accessed September 13, 2011).
17. Christie MA and Blunt MJ: "Tenth SPE Comparative Solution Project: A Comparison of Upscaling Techniques," paper SPE 66599, presented at the SPE Reservoir Simulation Symposium, Houston, February 11–14, 2001.
18. Pettersen Ø: "Basics of Reservoir Simulation with the Eclipse Reservoir Simulator," Bergen, Norway: University of Bergen, Department of Mathematics, Lecture Notes (2006), http://www.scribd.com/doc/36455888/Basics-of-Reservoir-Simulation (accessed September 13, 2011).

The Next Generation

Since 2000, petroleum engineers have been able to choose from a number of reservoir simulators. Simulators were numerous enough that the SPE supported frequent projects to compare them.17 Although the simulators differ from one another, their structures share common roots in serial computing and reliance on simple grids. An example of this type of reservoir simulator is the ECLIPSE simulator.18 The ECLIPSE simulator has been a benchmark for 25 years and has been continually updated to handle a variety of reservoir features. Like microprocessors, however, reservoir simulators have reached a point at which the familiar tools of the past may not be appropriate for some current field development challenges.

Scientists have developed new reservoir tools, the next-generation simulators, to broaden the technology available for handling the greater complexity now present in the oil field. These simulators take advantage of several new technologies, including parallel computing, advanced gridding techniques, modern software engineering and high-performance computing hardware. The choice between the next-generation simulators and older ones is determined by field complexity and business needs. Next-generation tools should be considered if the reservoir requires a high cell count to capture complex geologic features, has extensive local grid refinements or has a high permeability contrast.

In addition to handling fields of greater complexity, the next-generation simulator gives the operator an important advantage: speed. Many reservoir simulations involve difficult calculations that can take hours or days to complete with older tools. The next-generation simulators can reduce calculation times on complex reservoirs by an order of magnitude or more. This allows operators to make timely field development decisions with confidence, maximizing value and reducing risk. Shorter runs allow more runs, which in turn give operators a better understanding of the reservoir and of the impact of geologic uncertainties. Shorter run times also allow the simulator to be used more dynamically: it can evaluate development scenarios and optimize designs as new data and information become available.

One of the next-generation tools available now, the INTERSECT reservoir simulator, is the result of a collaborative effort between Schlumberger and Chevron that was initiated in late 2000.19 Total, which also collaborated on the project from 2004 to 2011, assisted researchers in developing the thermal capabilities of the software. Following the research phase and a subsequent development phase, Schlumberger released the INTERSECT simulator in late 2009. The system integrates several new technologies in one package: a new well model, advanced gridding, a scalable parallel computing foundation, an efficient linear solver and effective field management. To fully understand this simulator, it is instructive to examine each of these parts, starting with the new model for wells.

Multisegment Well Model

The INTERSECT simulator uses a new multisegment well model to describe fluid flow in the wellbore.20 Wells have become more complex through the years, and the models that describe them must reflect their actual design and handle a variety of situations and equipment, including multilateral wells, inflow control devices, horizontal sections, deviated wells and annular flow. Older, conventional well models treated the well as a mixing tank with a uniform fluid composition, so they reflected only the total inflow to the well. The multisegment model removes this approximation, allowing each branch to produce a different mix of fluids.

This well model provides a detailed description of wellbore fluid conditions by discretizing the well into a number of 1D segments. Each segment consists of a segment node and a segment pipe and may have zero, one or more connections with the reservoir grid cells (see Multisegment well model figure). A segment's node is positioned at the end farthest from the wellhead, and its pipe represents the flow path from that node to the node of the next segment toward the wellhead. The number of segments defined for a given well is limited only by the complexity of the well being modeled. Segment nodes can be positioned at intermediate points along the wellbore where tubing geometry or inclination angle changes, and additional segments can be defined to represent valves or inflow control devices. The optimal number of segments for a given well is a compromise between speed and accuracy in the numerical simulation.

> Multisegment well model. For each segment node in a wellbore, including nodes at branch junctions and nodes at well connections with grid cells, the new well model calculates the total flow in (ΣF_IN) and total flow out (ΣF_OUT), including any flow between the wellbore and the connected grid cell in the reservoir. Assuming a three-phase black oil simulation, each well segment has three mass conservation equations and a pressure drop equation. During the simulation, the well equations are solved along with the other reservoir equations to give pressure, flow rates and composition in each segment.
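The node mass balance at the heart of the multisegment model can be sketched as follows. This is a simplified illustration under stated assumptions, not the INTERSECT implementation: each segment names its parent (the next segment toward the wellhead), per-phase reservoir inflows are given rather than computed from the grid, and the per-segment pressure-drop equation and the coupled solve are omitted. Segment and pipe_flows are hypothetical names, and the rates are made-up numbers in any consistent unit.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Optional

PHASES = ("oil", "water", "gas")


@dataclass
class Segment:
    """One well segment: a node plus the pipe leading toward the wellhead."""
    name: str
    parent: Optional[str]  # next segment toward the wellhead; None at the top
    inflow: Dict[str, float] = field(default_factory=dict)  # phase -> rate from grid cells


def pipe_flows(segments: List[Segment]) -> Dict[str, Dict[str, float]]:
    """Per-phase flow in each segment pipe from a node mass balance.

    Flow out of a node (through its pipe) equals its own reservoir inflow
    plus the flow arriving from every segment that drains into it.
    """
    by_name = {s.name: s for s in segments}
    children: Dict[str, List[str]] = {s.name: [] for s in segments}
    for s in segments:
        if s.parent is not None:
            children[s.parent].append(s.name)

    flows: Dict[str, Dict[str, float]] = {}

    def flow_out(name: str) -> Dict[str, float]:
        if name not in flows:
            total = {p: by_name[name].inflow.get(p, 0.0) for p in PHASES}
            for child in children[name]:
                for phase, rate in flow_out(child).items():
                    total[phase] += rate
            flows[name] = total
        return flows[name]

    for s in segments:
        flow_out(s.name)
    return flows


# A main bore (S1 at the top, S3 at the toe) with one lateral, L1, joining at S2.
well = [
    Segment("S1", None),
    Segment("S2", "S1", {"gas": 5.0}),
    Segment("S3", "S2", {"oil": 100.0, "water": 10.0}),
    Segment("L1", "S2", {"oil": 20.0, "water": 80.0}),  # a watered-out lateral
]
flows = pipe_flows(well)
print(flows["S1"])  # {'oil': 120.0, 'water': 90.0, 'gas': 5.0}
print(flows["L1"]["water"] / sum(flows["L1"].values()))  # lateral water cut
```

Because each segment keeps its own per-phase totals, the watered-out lateral (water cut 0.8) remains distinguishable from the main bore, which is exactly the information a single mixing-tank well model loses.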
An advantage of the multisegment model is its flexibility in handling a variety of well configurations, including laterals and extended-reach wells. The model also handles different types of inflow control devices, packers and annular flow. The new multisegment well model is, however, only the beginning of the story for the INTERSECT simulator and others like it. The next step splits the reservoir into smaller areas, called domains.

19. DeBaun D, Byer T, Childs P, Chen J, Saaf F, Wells M, Liu J, Cao H, Pianelo L, Tilakraj V, Crumpton P, Walsh D, Yardumian H, Zorzynski R, Lim K-T, Schrader M, Zapata V, Nolen J and Tchelepi H: "An Extensible Architecture for Next Generation Scalable Parallel Reservoir Simulation," paper SPE 93274, presented at the SPE Reservoir Simulation Symposium, Houston, January 31–February 2, 2005. For another example of a next-generation simulator: Dogru AH, Fung LSK, Middya U, Al-Shaalan TM, Pita JA, HemanthKumar K, Su HJ, Tan JCT, Hoy H, Dreiman WT, Hahn WA, Al-Harbi R, Al-Youbi A, Al-Zamel NM, Mezghani M and Al-Mani T: "A Next-Generation Parallel Reservoir Simulator for Giant Reservoirs," paper SPE 119272, presented at the SPE Reservoir Simulation Symposium, The Woodlands, Texas, February 2–4, 2009.
20. Youngs B, Neylon K and Holmes J: "Multisegment Well Modeling Optimizes Inflow Control Devices," World Oil 231, no. 5 (May 1, 2010): 37–42. Holmes JA, Byer T, Edwards DA, Stone TW, Pallister I, Shaw G and Walsh D: "A Unified Wellbore Model for Reservoir Simulation," paper SPE 134928, presented at the SPE Annual Technical Conference and Exhibition, Florence, Italy, September 19–22, 2010.
21. DeBaun et al, reference 19.
22. Weisstein EW: "Traveling Salesman Problem," Wolfram MathWorld, http://mathworld.wolfram.com/TravelingSalesmanProblem.html (accessed October 12, 2011).
23. Karypis G, Schloegel K and Kumar V: "ParMETIS: Parallel Graph Partitioning and Sparse Matrix Ordering Library," http://mpc.uci.edu/ParMetis/manual.pdf (accessed July 7, 2011). Karypis G and Kumar V: "Parallel Multilevel k-way Partitioning Scheme for Irregular Graphs," SIAM Review 41, no. 2 (June 1999): 278–300.
24. Fjerstad et al, reference 8.
25. Hesjedal A: "Introduction to the Gullfaks Field," http://www.ipt.ntnu.no/~tpg5200/intro/gullfaks_introduksjon.html (accessed September 26, 2011).

Domains and a Parallel, Scalable Solver

The calculation of flow within the reservoir is the most difficult part of the simulation, even for simulators running on parallel computing hardware. Because the number of reservoir cells is many times larger than the number of processors available, it is natural to parallelize this calculation by dividing the reservoir grid into areas called domains and assigning each domain to a separate processor. Partitioning a structured Cartesian grid into segments that contain equal numbers of cells while minimizing their surface area can be straightforward; partitioning realistic unstructured grids is more difficult (see Reservoir grids figure).

Realistic grids are needed to model the heterogeneous nature of a reservoir with complex faults and horizons. The grids must also have sufficient detail to delineate irregularities such as water fronts, gas breakthroughs, thermal fronts and coning near wells; these irregularities are usually captured with local grid refinements. Partitioning unstructured grids with such complex features and numerous local refinements is challenging, so next-generation simulators use dedicated graph-partitioning algorithms.21 The objective of partitioning the unstructured grid is to divide it into a number of domains that represent equal computational loads on each of the parallel processors while limiting the communication across domain boundaries. Calculating the optimal partitioning for unstructured grids is difficult, and the solution is far from intuitive.
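As a rough illustration of load-balanced partitioning, the sketch below implements recursive coordinate bisection (RCB): it repeatedly cuts the cell set along its longer coordinate axis at the point that splits the summed cell weights in proportion to the number of domains on each side. RCB is a deliberately simpler stand-in for the multilevel graph partitioner (ParMETIS) that the INTERSECT simulator uses; it balances load but only indirectly limits the number of cut connections, which the edge_cut helper counts as a proxy for interprocessor communication. Cell, bisect_domains and edge_cut are hypothetical names, and cell weights are assumed to be known estimates of computational load.

```python
from typing import Dict, List, NamedTuple, Tuple

CellId = Tuple[int, int]


class Cell(NamedTuple):
    cid: CellId
    x: float
    y: float
    weight: float  # estimated computational load of the cell


def bisect_domains(cells: List[Cell], n_domains: int) -> List[List[Cell]]:
    """Recursive coordinate bisection into n_domains load-balanced domains."""
    if n_domains < 1 or len(cells) < n_domains:
        raise ValueError("need at least one cell per domain")
    if n_domains == 1:
        return [cells]
    xs = [c.x for c in cells]
    ys = [c.y for c in cells]
    use_x = (max(xs) - min(xs)) >= (max(ys) - min(ys))  # cut the longer extent
    ordered = sorted(cells, key=(lambda c: c.x) if use_x else (lambda c: c.y))

    left_domains = n_domains // 2
    target = sum(c.weight for c in ordered) * left_domains / n_domains
    # Choose the split index whose cumulative weight is closest to the target.
    best_split, best_gap, running = 1, float("inf"), 0.0
    for i in range(1, len(ordered)):
        running += ordered[i - 1].weight
        gap = abs(running - target)
        if gap < best_gap:
            best_split, best_gap = i, gap
    # Keep at least one cell for every domain on each side.
    best_split = max(left_domains,
                     min(best_split, len(ordered) - (n_domains - left_domains)))
    return (bisect_domains(ordered[:best_split], left_domains)
            + bisect_domains(ordered[best_split:], n_domains - left_domains))


def edge_cut(domains: List[List[Cell]], connections: List[Tuple[CellId, CellId]]) -> int:
    """Number of cell-to-cell connections that cross a domain boundary."""
    owner: Dict[CellId, int] = {c.cid: d for d, dom in enumerate(domains) for c in dom}
    return sum(1 for a, b in connections if owner[a] != owner[b])


# Uniform 8 x 8 grid with four-neighbor connections, split across 4 processors.
grid = [Cell((i, j), float(i), float(j), 1.0) for i in range(8) for j in range(8)]
links = ([((i, j), (i + 1, j)) for i in range(7) for j in range(8)]
         + [((i, j), (i, j + 1)) for i in range(8) for j in range(7)])
domains = bisect_domains(grid, 4)
print([sum(c.weight for c in d) for d in domains], edge_cut(domains, links))
```

On the uniform 8 × 8 grid each of the four domains receives 16 cells and 16 of the 112 connections are cut. Refined cells near a well would simply carry larger weights, pulling the cuts toward them.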
Reservoir partitioning is similar to the "traveling salesman problem" in combinatorial mathematics, which seeks the shortest route that visits each of a set of cities exactly once.22 Unlike the traveling salesman, who is concerned only with minimizing his time in transit, partitioning of the reservoir must be guided by the physics of the problem. To this end, the INTERSECT simulator employs the ParMETIS graph-partitioning library.23

The advantages of partitioning a complex reservoir grid to balance the parallel workload become obvious when considering simulation of the Gullfaks field in the Norwegian sector of the North Sea.24 Gullfaks, discovered in 1979 and operated by Statoil, is a complex offshore field with 106 wells producing about 30,000 m³/d [189,000 bbl/d] of oil.25 The field is highly faulted, with deviated and horizontal wells crossing the faults. An INTERSECT simulation of this field developed several domain splits so that different numbers of parallel processors could be evaluated for load balancing (see Gullfaks domain decomposition figure). When compared with an ECLIPSE simulation of Gullfaks using eight processors, the INTERSECT approach decreased computational time by more than a factor of five. Runs with higher numbers of processors showed similar improvements and confirmed the scalability of the simulation.

Proper domain partitioning is only part of the next-generation simulation story.

> Reservoir grids. Reservoir simulators may lay out the grid in either a structured pattern (upper left) or an unstructured pattern (lower right). Structured grids have hexahedral (six-faced) cells laid out in a uniform, repeatable order. Unstructured grids consist of polyhedral cells having any number of faces and may have no discernible ordering. Both grid types partition the reservoir space without gaps or overlaps. Structured grids with many local grid refinements around wells are usually treated as unstructured. Similarly, when a large number of faults are present in a structured grid, it becomes unstructured as a result of the nonneighbor connections created.

> Gullfaks domain decomposition. The highly faulted nature of the Gullfaks field and the number and complexity of its wells result in complicated reservoir communication and drainage patterns. The simulator takes these factors into account and develops a complex, unstructured grid in preparation for partitioning (left, Gullfaks Field Unstructured Grid). Fine black lines define individual cell boundaries; vertical lines (magenta) represent wells. Colors denote oil saturation from high (red) to low (blue). This unstructured grid is split into eight domains by a partitioning algorithm for an eight-processor simulation (right, Gullfaks Field Domain Split). In the partitioned reservoir, colors denote the individual domains. Only seven colors appear in the figure because one domain is on the underside of the reservoir and cannot be seen from this angle. The primary criterion for the domain partition is an equal computational load on each of the parallel processors.

> Matrix structure. A matrix of the linearized reservoir simulation equations is typically sparse and asymmetrical (left, rows and columns 0 to about 10,000). The unmarked spaces represent matrix positions with no entry, while each dot represents the derivative of one equation with respect to one variable (right, enlargement of rows and columns 2,550 to 2,590). The nine points inside the red square (right) represent the mass conservation equations for the gas, water and oil phases. The off-diagonal points (left) represent equations for connections between cells and their nearest neighbors in adjacent layers, as well as other reservoir connections. Points near the vertical and horizontal axes (left) represent the well equations.

> Numerical solution. The complete set of fundamental reservoir equations can be written in finite-difference form (top). For component i, with mole fractions x_i, y_i and w_i in the oil, gas and water phases:

$$\frac{V}{\Delta t}\,\Delta\!\left[\phi\left(\rho_o S_o x_i + \rho_g S_g y_i + \rho_w S_w w_i\right)\right] = -\left(q_o + q_g + q_w\right) + \Delta_{xyz}\!\left[T\,\frac{k_{ro}\,\rho_o x_i}{\mu_o}\left(\Delta p - \Delta P_{cgo} - \gamma_o \Delta Z\right)\right] + \Delta_{xyz}\!\left[T\,\frac{k_{rg}\,\rho_g y_i}{\mu_g}\left(\Delta p - \gamma_g \Delta Z\right)\right] + \Delta_{xyz}\!\left[T\,\frac{k_{rw}\,\rho_w w_i}{\mu_w}\left(\Delta p - \Delta P_{cgo} - \Delta P_{cwo} - \gamma_w \Delta Z\right)\right]$$

These equations describe how the values of the dependent variables in each grid cell (pressure, temperature, saturation and mole fractions) change with time. The equations also include a number of property-related terms, including porosity, pore volume, viscosity, density and permeability (see DeBaun et al, reference 19). This large set of equations is solved numerically by the Newton-Raphson method, illustrated in the graph of residual R(x) versus solution variable x (bottom). A residual function R(x), which depends on the dependent variables, is calculated at x0 (dashed black line marks the coordinate position) and at x0 plus a small increment (not shown). This allows a derivative, or tangent line (black), to be calculated that, when extrapolated, predicts the residual going to zero at x1. Another derivative calculated at x1 predicts the residual going to zero at x2. The procedure is repeated until the residual R(x) falls within a specified tolerance of zero. The red curve connects the points (x_n, R(x_n)), tracing the path of the residual as it changes with each successive iteration.
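The Newton-Raphson iteration described in the Numerical solution caption can be sketched for a single unknown. This is a minimal illustration, assuming a scalar residual R(x); a simulator applies the same idea to millions of coupled unknowns, with a Jacobian matrix and a linear solver in place of the scalar slope. As in the figure, the slope comes from evaluating R at x and at x plus a small increment h. The function name newton_fd and the test residual x³ - 2x - 5 are illustrative choices, not taken from the article.

```python
from typing import Callable, Tuple


def newton_fd(residual: Callable[[float], float], x0: float, tol: float = 1e-10,
              h: float = 1e-7, max_iter: int = 50) -> Tuple[float, int]:
    """Newton-Raphson iteration with a finite-difference derivative.

    The slope at each iterate comes from evaluating R at x and at x + h;
    the tangent line is then extrapolated to R = 0 to get the next iterate.
    Returns the converged x and the number of Newton steps taken.
    """
    x = x0
    for iteration in range(max_iter):
        r = residual(x)
        if abs(r) <= tol:  # converged: residual within tolerance of zero
            return x, iteration
        slope = (residual(x + h) - r) / h  # finite-difference derivative
        if slope == 0.0:
            raise ZeroDivisionError("flat tangent; Newton step undefined")
        x -= r / slope  # where the tangent line crosses R = 0
    raise RuntimeError(f"no convergence within {max_iter} iterations")


# A made-up scalar residual standing in for the simulator's residual vector.
root, iterations = newton_fd(lambda x: x**3 - 2.0 * x - 5.0, x0=2.0)
print(root, iterations)
```

Convergence here takes only a handful of iterations because the starting guess is close to the root. Far from a solution, Newton steps can overshoot, which is why practical implementations often damp or limit the updates.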
Once the reservoir cells are split to balance the workload on the parallel processors, the model must numerically solve a large set of reservoir and well equations. These equations for the reservoir and wells form a large, sparsely populated matrix (left). Although the equations generated in the simulator are amenable to parallel computation, they are often difficult to solve. Several factors contribute to this difficulty, including large system sizes, discontinuous anisotropic coefficients, nonsymmetry, coupled wells and unstructured grids. The resultant simulation equations exhibit mixed characteristics: the pressure field equations have long-range coupling and tend to be elliptic, while the saturation or mass balance equations tend to have more local dependency and are hyperbolic.

The INTERSECT simulator uses a computationally efficient solver to achieve scalable solution of these equation systems.26 It is based on preconditioning the equations to make them easier to solve numerically. Preconditioning algebraically decomposes the system into subsystems that are then manipulated based on their particular characteristics to facilitate solution. The resulting reservoir equations are solved numerically by iterative techniques until convergence is reached for the entire system, including wells and surface facilities (left).27 The solver provides significant improvements in scalability and performance compared with conventional simulators. A major advantage of this highly scalable solver is its ability to handle both structured and unstructured grids in a general framework for a variety of field situations (next page, top).

26. Cao H, Tchelepi HA, Wallis J and Yardumian H: “Parallel Scalable Unstructured CPR-Type Linear Solver for Reservoir Simulation,” paper SPE 96809, presented at the SPE Annual Technical Conference and Exhibition, Dallas, October 9–12, 2005.
27. The linear solver consumes a significant share of system resources.
In a typical INTERSECT case, the solver may use 60% of the central processing unit (CPU) time.
28. Güyagüler B, Zapata VJ, Cao H, Stamati HF and Holmes JA: “Near-Well Subdomain Simulations for Accurate Inflow Performance Relationship Calculation to Improve Stability of Reservoir-Network Coupling,” paper SPE 141207, presented at the SPE Reservoir Simulation Symposium, The Woodlands, Texas, February 21–23, 2011.

Field Management Workflow

An improved field management workflow is one of the components of the INTERSECT simulator package. Field management tasks include design of and modifications to surface facilities, sensitivity analyses and economic evaluations. Traditionally, field management tasks have been distributed among separate simulators, including a reservoir simulator, a process facilities simulator and an economic simulator. The isolation of the simulators in the traditional workflow tends to produce suboptimal field management plans.

The field management (FM) module in the INTERSECT simulator addresses the weaknesses of traditional methods with a collection of tools, algorithms, logic and workflows that allow all of the different simulators to be coupled and run in concert. This provides a great deal of flexibility; for example, the module could allow two isolated offshore gas reservoirs to be linked to a single surface processing facility for modeling and evaluation.28 At the top level, the module executes one or more strategies that are the focal point of the whole framework. Strategies, which consist of a list of instructions and an optional balancing action, can encompass a wide variety of scenarios that might affect production.
These strategies may include factors that affect subsurface deliverability, such as reservoir performance, well performance and recovery methods. Other strategies affecting production may include surface capability and economic viability. After the strategy is selected, the FM module employs tools to create a complete topological representation of the field, including wells, completions and inflow control devices. Once the strategy has been set and the field topology is defined, the module uses operating targets and limits to set well balancing actions and potential field topology changes. An important feature of the FM workflow is the ability to control multiple simulators running on different machines and operating systems and in different locations (right).

Chevron and its partners used the INTERSECT simulator in their field development of a major gas project off the coast of Australia. The large capital outlays envisioned for this project required a next-generation simulator that could run cases quickly on large, unstructured grids characterized by highly heterogeneous geology.

[Figure: speedup versus number of processors n (16 to 300) for six fields: a fractured carbonate oil field, a highly faulted supergiant field, an offshore supergiant field, a massive gas condensate field, a large onshore oil field and a highly faulted oil field. A dashed line shows ideal scaling.]
> INTERSECT simulation scalability. This simulation system has been used in a variety of offshore and onshore field scenarios, including large gas condensate fields and fields with significant faulting. Scalability is measured as speedup, the run time on 16 processors divided by the run time on n processors, and is plotted as a function of the number of processors. The diagonal straight line (dashed) represents ideal scaling.

> Multiple reservoir coupling. The field management module can link independent Reservoirs A and B and surface facilities (center) via network links.
In this example, Reservoir A (lower left) is using the ECLIPSE simulator on a Microsoft Windows desktop computer, while Reservoir B (lower right) is using the INTERSECT system on a Linux parallel cluster. The surface network simulator, running on a Microsoft Windows desktop, handles surface facilities for this network (upper right). The FM module (top) that controls all of these simulators may run on a desktop or a local mainframe computer.

[Map: Gorgon and IO/Jansz fields, Barrow Island, the LNG plant, the Gorgon pipeline, a pipeline junction and the existing Dampier to Bunbury natural gas pipeline.]
> Gorgon project, offshore Australia. The Gorgon project includes the Gorgon and IO/Jansz subsea gas fields that lie 150 to 220 km [93 to 137 mi] off the mainland. Gas is moved from the fields by deep underwater pipelines (black) to Barrow Island, about 50 km off the coast. There, the raw gas is stripped to remove CO2 and then either liquefied to LNG for export by tanker or moved to the mainland by pipeline for domestic use. On the mainland, gas from Barrow Island is transported through an existing pipeline (blue) that gathers gas from other producing areas nearby.

Better Decisions: Reduced Uncertainty

The Gorgon project, a joint venture of Chevron, Royal Dutch Shell and ExxonMobil, will produce LNG for export from large fields off the coast of northwest Australia.29 This project will take subsea gas from the Gorgon and IO/Jansz fields and move it by underwater pipeline to Barrow Island, about 50 km [31 mi] off the coast (above). Chevron, the operator, is building a 15 million–tonUK [15.2 million–metric ton] LNG plant on Barrow Island to prepare the gas for export to customers in Japan and Korea. Engineers at Chevron knew that one of the challenges would be to dispose of the high levels of CO2 separated from the raw gas.30 Chevron will meet this challenge by removing the CO2 at the LNG plant and burying it deep beneath the surface of Barrow Island (below).
Gorgon will be capable of injecting 6.2 million m3/d [220 MMcf/d] of CO2 using nine injection wells spread over three drill centers on Barrow Island.

With billions of dollars of capital and LNG revenues at stake, Chevron and its partners understood from the start that the engineers developing the business case would need to know how much the project would yield and for how long. Extensive reservoir modeling and simulation were the answer to this challenge. Some of Chevron's simulations on an internal serial simulator with fine-grid models of individual Gorgon formations were taking 13 to 17 hours per run. Early in the project, Chevron decided that migration to the INTERSECT simulator would be required for timely project development.

Although some computer models require only a small amount of input data, that cannot be said of reservoir simulators. These simulators employ large datasets and typically rely on purpose-built migration tools to move the data from one simulator to another. For Gorgon, Chevron used internal migration software to transform input from its internal simulator into the corresponding INTERSECT input dataset. These data were used to develop history-matching cases at data centers in Houston and in San Ramon, California, USA.31 The results from these cases showed that both simulators produced equivalent results, although they took very different amounts of CPU time to do it. This process was repeated on high-performance parallel computing clusters at the Chevron operations center in Perth, Western Australia, Australia. As Chevron project teams in Australia began advanced project planning, the INTERSECT simulator reduced simulation times by more than an order of magnitude.
In one Gorgon gas field simulation with 15 wells and 287,000 grid cells, serial run times with the internal simulator were six to eight hours, while the INTERSECT system reduced run times to under 10 minutes with excellent scalability. In addition to this simulation, Chevron has used the INTERSECT simulator on other fields in the Gorgon area, including Wheatstone, IO/Jansz and West Tryal Rocks. Both black-oil and compositional models have been used, with grids ranging from 45,000 to 1.4 million cells. INTERSECT simulation times on these cases using the Perth cluster ranged from 2 to 20 minutes, depending on the case. Next-generation reservoir simulation on geologic-scale models with fast run times has enhanced decision analysis and uncertainty management at Gorgon.

> CO2 disposal. As natural gas is produced from the various reservoirs in the Gorgon project (left), it is fed to a CO2 stripping facility located near the LNG plant (middle). Stripped natural gas (orange) flows to liquefaction and an associated domestic gas plant (not shown), while the extracted CO2 (blue) is compressed and injected into an unused saline aquifer 2.5 km [1.6 mi] beneath the surface for disposal. Conditions in the CO2 storage formation are monitored by seismic surveys and surveillance wells (right).

Reducing Simulation Time

Reduction of reservoir simulation execution time was also a key factor for Total at its Surmont oil sands project in Canada. Surmont, located in the Athabasca oil sands area about 60 km [37 mi] southeast of Fort McMurray, Alberta, Canada, is a joint venture between ConocoPhillips Canada and Total E&P Canada (right).32 The project was initiated in 2007 with a production rate of 4,293 m3/d [27,000 bbl/d] of heavy oil and is expected to reach its full capacity of 16,536 m3/d [104,000 bbl/d] in 2012.
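Several run-time comparisons in this article are speedup ratios of the kind defined in the scalability figure caption: run time on a baseline configuration divided by run time on n processors. The Gorgon example went from six to eight hours serially to under 10 minutes, and the Surmont case discussed next is reported as 10 times faster on 16 processors than in serial. The Python sketch below shows the arithmetic. Parallel efficiency, taken here as measured speedup divided by ideal speedup, is a common convention assumed for illustration rather than a metric the article defines, and the function names are hypothetical.

```python
def speedup(baseline_time, parallel_time):
    """Run time on the baseline configuration divided by run time on n processors."""
    if parallel_time <= 0:
        raise ValueError("parallel_time must be positive")
    return baseline_time / parallel_time


def ideal_speedup(baseline_procs, procs):
    """Perfect linear scaling relative to the baseline processor count."""
    return procs / baseline_procs


def parallel_efficiency(baseline_time, baseline_procs, parallel_time, procs):
    """Measured speedup as a fraction of ideal speedup (1.0 = perfect scaling)."""
    return speedup(baseline_time, parallel_time) / ideal_speedup(baseline_procs, procs)


if __name__ == "__main__":
    # Gorgon: at least 6 h (360 min) serially vs. under 10 min with INTERSECT.
    # Two different simulators, so this lower bound mixes solver and hardware gains.
    print("Gorgon speedup >=", speedup(6 * 60, 10))
    # Surmont: 10 times faster on 16 processors than serial (relative times 10 and 1).
    print("Surmont efficiency:", parallel_efficiency(10.0, 1, 1.0, 16))
    # Scalability figure: ideal speedup at 300 processors relative to 16.
    print("Ideal speedup, 16 -> 300 processors:", ideal_speedup(16, 300))
```

On these figures the Gorgon comparison gives a speedup of at least 36, the Surmont run reaches 62.5% of ideal scaling, and ideal scaling in the scalability plot tops out near 18.75 at 300 processors.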
At Surmont, the highly viscous bitumen in the unconsolidated reservoir is produced by steam-assisted gravity drainage (SAGD). In this process, steam is injected through a horizontal well, and heated bitumen is produced by gravity from a parallel, horizontal producing well below the injector. Typically, one steam chamber is associated with each injector-producer well pair, and a SAGD development consists of several adjacent well pairs.

From a simulation point of view, the individual steam chambers are independent of each other at the start of SAGD operations, so simulations can be performed on individual SAGD pairs. As heating and drainage proceed, this independence between well pairs ceases because of pressure communication, gas channeling and aquifer interactions. Including all well pairs in a typical SAGD development quickly leads to multimillion-cell models that could not be run in a reasonable time frame with commercial thermal simulators.33

Total turned to the INTERSECT new-generation simulator to model the full-field, nine-pair SAGD operation at Surmont. The model describes an oil sands reservoir with an oil viscosity of 1.5 million mPa·s [1.5 million cp] and 1.7 million grid blocks with heterogeneous cell properties.34 The model includes external heat sources and sinks to describe the interaction with over- and underburden material. The producers are controlled by maximum steam rate, maximum liquid rate and minimum bottomhole pressure (BHP). The injectors are controlled by maximum injection rate and maximum BHP.

The INTERSECT system was used to model the first three years of SAGD operations at Surmont on a parallel computer cluster. To test its speed and scalability, the software was run on different parallel hardware configurations ranging from 1 to 32 processors. These tests proved the ability of this application to handle this large heterogeneous model quickly enough to support operational decisions. For example, using 16 processors, the INTERSECT simulator executed the Surmont case in 2.6 hours.35 Parallel scalability is also good: the run was 10 times faster on 16 processors than a serial run. In addition to predicting flow performance from SAGD operations, the system can also give information on profiles of important variables such as pressure and temperature in the steam chambers (below). In preparation for fully deploying the INTERSECT technology at Surmont, Total is confirming these results on the most current version of the simulator.

[Map: Alberta, Canada, showing Fort McMurray, Surmont, the Athabasca oil sands, Edmonton and Calgary.]
> Surmont project. The Surmont oil sands project is located southeast of Fort McMurray, Alberta, Canada, within the greater Athabasca oil sands area. Depending on the topography and the depth of the overburden, oil sands at Athabasca may be produced by surface mining or steam-assisted processes such as SAGD.

[Figure: simulated temperature in the nine Surmont steam chambers; color scale 10 to 234 °C.]
> Steam chambers. The nine steam chambers at Surmont are located at a depth of 300 m [984 ft] near the bottom of the oil sands reservoir. These chambers have a lateral spacing of about 100 m [328 ft] and a length of nearly 1,000 m [3,281 ft]. Each chamber has a pair of wells: one steam injector (magenta) and a parallel producing well (not shown). INTERSECT simulation of a thermal process such as SAGD also yields information on temperature profiles in the steam chambers. At Surmont, the temperature varies from more than 230°C [446°F] (red areas) at the core of the chamber to ambient temperature at the periphery (blue areas). Gaps along the length of the chambers reflect permeability differences in the oil sands. The operator monitors temperature in the steam chambers during production. While the steam chambers are relatively small, the SAGD process is efficient. Once the chamber growth reaches the rock at the top of the reservoir, thermal efficiency drops because of heat transfer to the overburden.

29. Flett M, Beacher G, Brantjes J, Burt A, Dauth C, Koelmeyer F, Lawrence R, Leigh S, McKenna J, Gurton R, Robinson WF and Tankersley T: “Gorgon Project: Subsurface Evaluation of Carbon Dioxide Disposal Under Barrow Island,” paper SPE 116372, presented at the SPE Asia Pacific Oil and Gas Conference and Exhibition, Perth, Western Australia, Australia, October 20–22, 2008.
30. Raw gas from the Gorgon fields has about 14% CO2.
31. To ensure that two reservoir simulators are producing equivalent results, a user may employ a technique called history-matching. Each simulator runs the same case, and the oil or gas production rates, as a function of time, are compared. If they match, the two cases are deemed equivalent. This technique can also be used to calibrate a simulator to a field where long-term production data are available.
32. Handfield TC, Nations T and Noonan SG: “SAGD Gas Lift Completions and Optimization: A Field Case Study at Surmont,” paper SPE/PS/CHOA 117489, presented at the SPE International Thermal Operations and Heavy Oil Symposium, Calgary, October 20–23, 2008.
33. Total initially tried a commercial thermal simulator to model operations at Surmont. Case run times were very long (45 hours), making this approach impractical.
34. The oil is modeled using two pseudocomponents, one light and one heavy.
35. This case used 16 processors: four multicore processors, each with four cores built into the chip. Chevron is also using the INTERSECT system to reduce run time in field-scale models for thermal recovery processes. For more information, see: Lim K-T and Hoang V: “A Next-Generation Reservoir Simulator as an Enabling Tool for Routine Analyses of Heavy Oil and Thermal Recovery Process,” WHOC paper 2009-403, presented at the World Heavy Oil Congress, Puerto La Cruz, Venezuela, November 3–5, 2009.
36. Tankersley T, Narr W, King G, Camerlo R, Zhumagulova A, Skalinski M and Pan Y: “Reservoir Modeling to Characterize Dual Porosity, Tengiz Field, Republic of Kazakhstan,” paper SPE 139836, presented at the SPE Caspian Carbonates Technology Conference, Atyrau, Kazakhstan, November 8–10, 2010.
37. The Tengiz simulation also couples the reservoir and well models to surface separation facilities to maximize plant capacities as part of development planning.
38. Chevron Corporation: “Envisioning Perfect Oil Fields, Growing Future Energy Streams,” Next*, no. 4 (November 2010): 2–3.
39. Afifi AM: “Ghawar: The Anatomy of the World’s Largest Oil Field,” Search and Discovery (January 25, 2005), http://searchanddiscovery.com/documents/2004/afifi01/ (accessed September 29, 2011).
40. Dogru et al, reference 19.
41. Dogru AH, Fung LS, Middya U, Al-Shaalan TM, Byer T, Hoy H, Hahn WA, Al-Zamel N, Pita J, Hemanthkumar K, Mezghani M, Al-Mana A, Tan J, Dreiman W, Fugl A and Al-Baiz A: “New Frontiers in Large Scale Reservoir Simulation,” paper SPE 142297, presented at the SPE Reservoir Simulation Symposium, The Woodlands, Texas, February 21–23, 2011.

Conserving Resources

As operators continue to push into remote areas in search of resources, next-generation simulators will be there to aid in planning and development. A case in point is the new Chevron Tengiz field in the Republic of Kazakhstan, at the shore of the Caspian Sea. Tengiz is a deep, supergiant, naturally fractured carbonate oil and gas field with an oil column of about 1,600 m [5,250 ft] and a production rate of 79,500 m3/d [500,000 bbl/d].36 The Tengiz field is expansive, covering an area of about 440 km2 [170 mi2], and contains an estimated 4.1 billion m3 [26 billion bbl] of oil in place.
The challenge for Chevron in modeling Tengiz was the field's geologic complexity coupled with the need to reinject large quantities of H2S recovered from the production stream. This required combining detailed geologic information with information on the distinctly different flow behavior of fractured and nonfractured areas of the field. To assist in current field management and support future growth, Chevron developed an INTERSECT case that encompassed the 116 producing wells. The model contained 3.7 million grid blocks in an unstructured grid that included more than 12,000 fractures.37 Chevron has experienced improved efficiency using the new simulator at Tengiz; simulations that once took eight days now take eight hours.38 More-realistic geologic input leads to more-accurate production forecasts that allow engineers to make better field development decisions.

In addition to their use in the development of new fields, next-generation simulators may also aid recovery of additional oil and gas from older fields. The world energy markets rely heavily on the giant reservoirs of the Middle East. The largest of these reservoirs, Ghawar, was discovered in 1948 and has been producing for 60 years.39 Ghawar is a large field, measuring 250 km [155 mi] long by 30 km [19 mi] wide. Simulation of a reservoir the size of Ghawar is challenging because of the fine grid size that must be employed to capture the heterogeneities seen in high-resolution seismic data. Finer grids also reduce upscaling errors (next page). To handle reservoirs the size of Ghawar and the other giant fields that it owns, Saudi Aramco has developed a next-generation reservoir simulator.40 In one Ghawar black-oil simulation, the model used more than a billion cells with a 42-m [138-ft] grid and 51 layers with 1.5-m [5-ft] spacing.41 Using a large parallel computing system, this model simulated 60 years of production history in 21 hours.42 The results were compared with an older simulation run using a 250-m [820-ft] grid and a given production plan. The older simulator predicted no oil left behind after secondary recovery; the new simulator revealed oil pockets that could be produced using infill drilling or other methods. This example shows how next-generation simulators may facilitate additional resource recovery.

[Figure: overhead images of the Colosseum in Rome with 50-m and 250-m grid boxes. © 2011 Google-Imagery © 2011 Digital Globe, GeoEye]
> Grid resolution. Areal grid size plays an important role in capturing reservoir heterogeneity and reducing errors caused by upscaling. Overhead photos of the Colosseum in Rome illustrate this concept. If the area of interest is the Colosseum floor (dashed box, upper left), then a 50 m × 50 m [164 ft × 164 ft] grid is appropriate. Choosing a larger 250 m × 250 m [820 ft × 820 ft] grid (dashed box, right) includes driveways, streets, landscaping and other features not associated with the focal point of interest. In the case of the Colosseum, using the larger grid to capture properties associated with the floor would introduce errors.

42. Dogru AH: “Giga-Cell Simulation,” The Saudi Aramco Journal of Technology (Spring 2011): 2–7.
43. Farber D: “Microsoft’s Mundie Outlines the Future of Computing,” CNET News (September 25, 2008), http://news.cnet.com/830113953_3-10050826-80.html (accessed August 4, 2011).
44. Dogru et al, reference 41.
45. Bridger T: “Cloud Computing Can Be Applied for Reservoir Modeling,” Hart Energy E&P (March 1, 2011), http://www.epmag.com/Production-Drilling/CloudComputing-Be-Applied-Reservoir-Modeling_78380 (accessed August 11, 2011).

Although a primary goal of next-generation simulators has been to describe reservoirs more completely through smaller grid cells and reduced upscaling, scientists are also pursuing other technology innovations. Improved user interfaces and new hardware options for reservoir simulation are imminent.
These improved user interfaces embody a concept known as spatial computing. Spatial computing relies on multicore processors, parallel programming and cloud services to produce a virtual world controlled by speech and gestures.43 This concept is being tested for controlling large reservoir simulations with hand gestures and verbal commands rather than with a computer mouse.44 To test this concept, a room is equipped with cameras and sensors connected to large screens on the walls and a visual display on a table. Using hand gestures and speech, engineers manage the simulator input and output. If needed, the system can be used collaboratively over a network with engineers and scientists at other locations. This kind of system has vast possibilities: it tends to mask the complexity of the computing system and allows engineers and scientists to interact freely with the reservoir simulation.

Just as ideas such as spatial computing will enhance the user interface, new hardware utilization concepts that go beyond onsite parallel computing clusters will add to reservoir simulation capability. Clusters of parallel computers are expensive, and the associated infrastructure is complex and difficult to maintain. Some operators are discovering that cloud computing can be used to communicate with multiple clusters in many locations.45 Using this approach, the operator can add system capacity as the situation dictates rather than depending on a fixed set of hardware. This approach allows the user to communicate with the cloud system via a “thin client” such as a laptop or a tablet. Reservoir modeling tools using this technology have already been developed, and more will follow.

New technology for reservoir simulation is emerging on several fronts. Foremost are next-generation reservoir simulators that produce more-accurate simulations on complex fields with reduced execution time.
Other technologies such as spatial and cloud computing are on the near horizon and will allow scientists and engineers to interact more naturally with the simulations and potentially add hardware capability at will. These developments will give operators more-accurate forecasts, and those improved forecasts will lead to better field development decisions. —DA